2.1. Overview

For autonomous vehicles, the trajectories of detected vehicles are not deterministic, as they are affected by the environment, the destination of the driver and driving habits, which increases the difficulty of collision avoidance. However, the dynamic features of the moving vehicle, traffic rules and the road structure around the vehicle provide information that facilitates prediction. For instance, the maximum acceleration of a vehicle is limited, and the trajectory curvature must be smaller than a certain value to ensure stability. The varying driving habits of different drivers may lead to different trajectories; however, some common rules should be followed to ensure comfort and safety, such as following the center line of the lane, which is defined as normal moving in this paper.

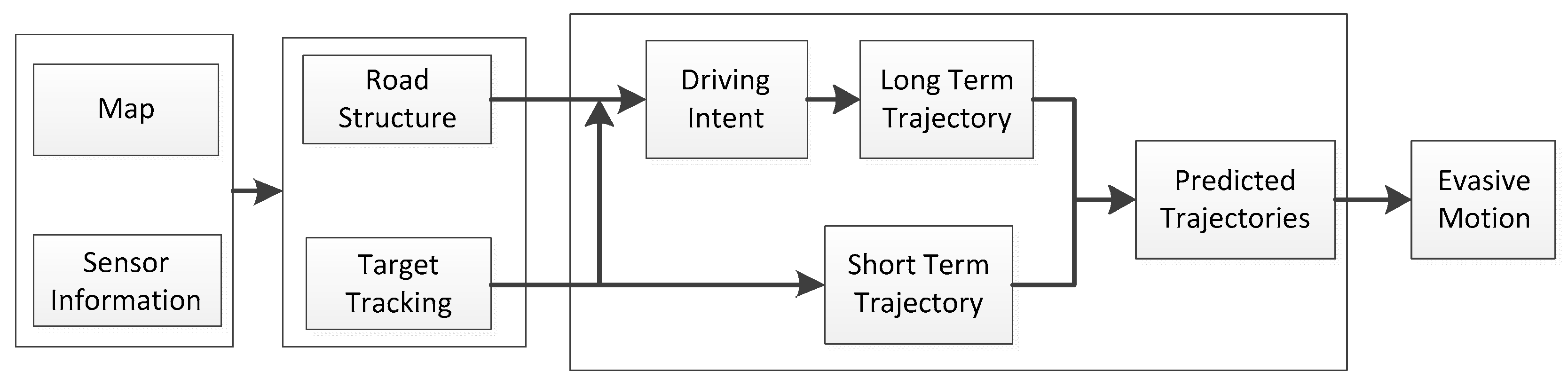

The structure of the proposed IEMMB method is shown in Figure 1. Online sensor information and a priori environmental information are combined to increase the accuracy and robustness of environmental perception, which is crucial to collision avoidance. Then, the road structure and moving vehicles are extracted, and the driving intent of the detected moving vehicles is estimated using this information. In the next step, the long-term trajectory, which considers the driving intent, and the short-term trajectory, which considers the current moving state and the motion model, are combined to predict the real trajectory. With the prediction, potential collisions can be detected, and appropriate evasive maneuvers are generated to avoid collisions in the space or time dimension.

2.2. Intent Estimation

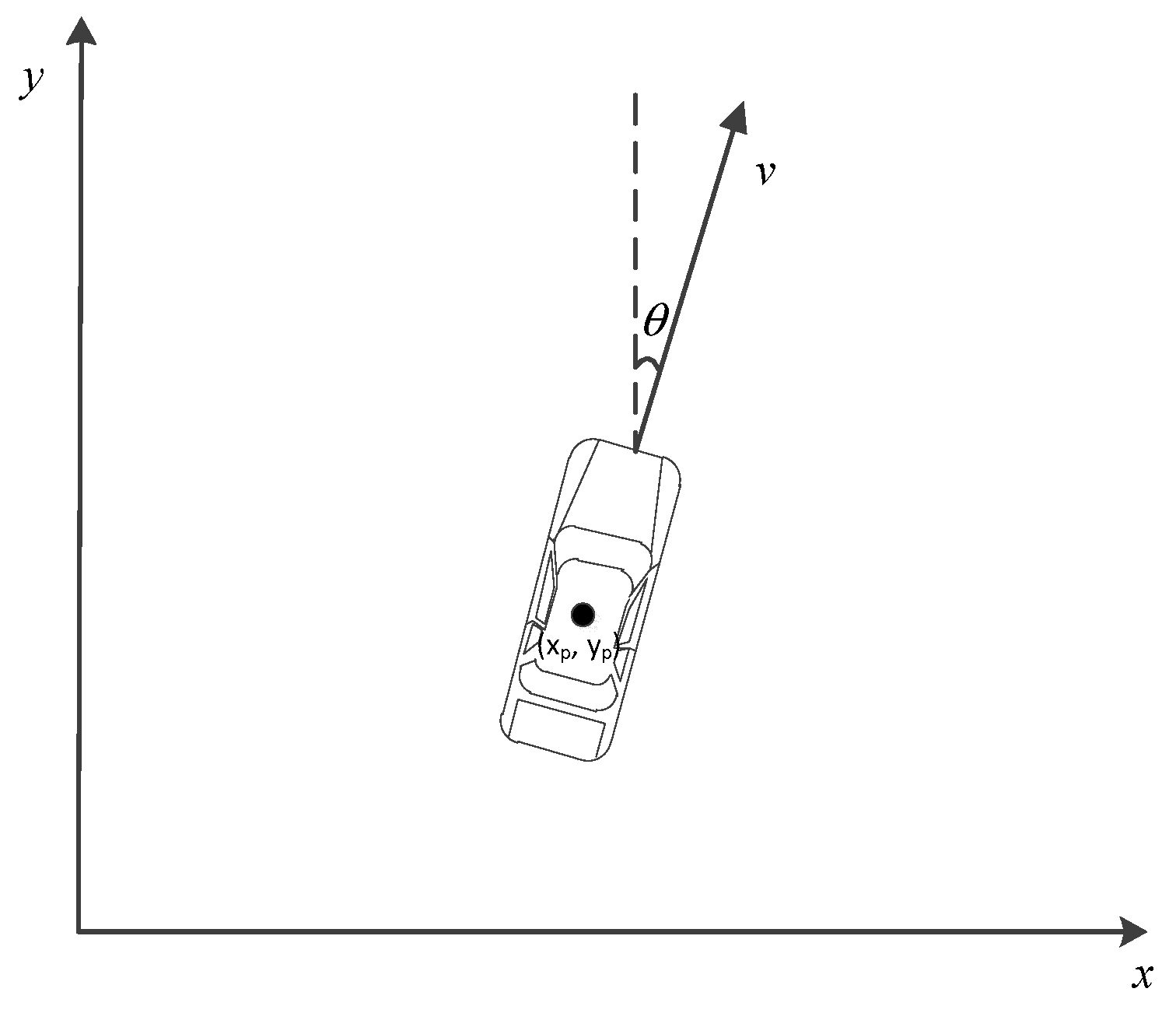

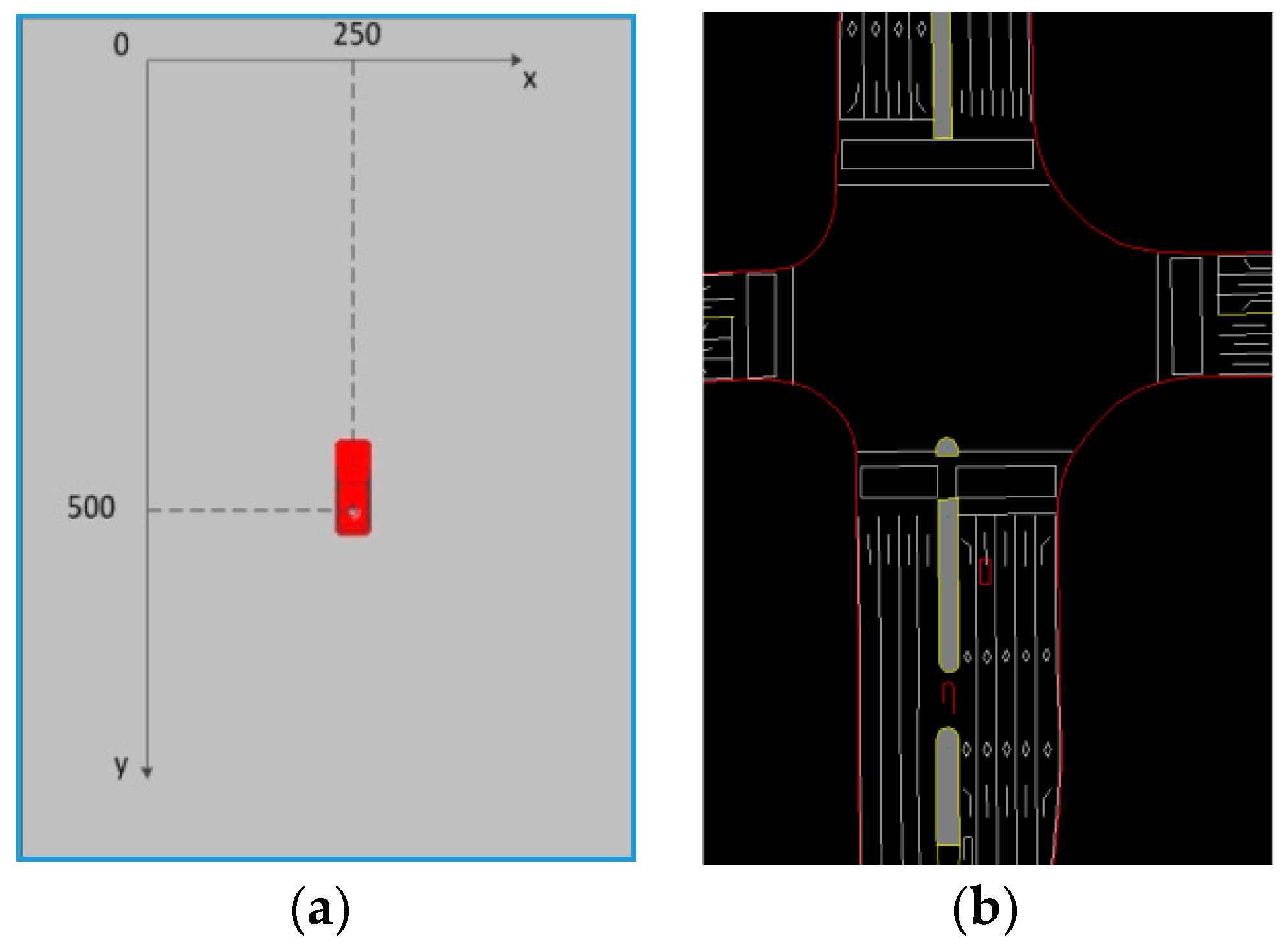

Detected moving vehicles can be extracted from sensor information, and their moving features become accessible. All of the detected vehicles can be modelled with a state vector in the form of Equation (1), as shown in

Figure 2.

where

is the position,

represents the moving direction of the vehicle,

and

are the velocity and acceleration, respectively, and

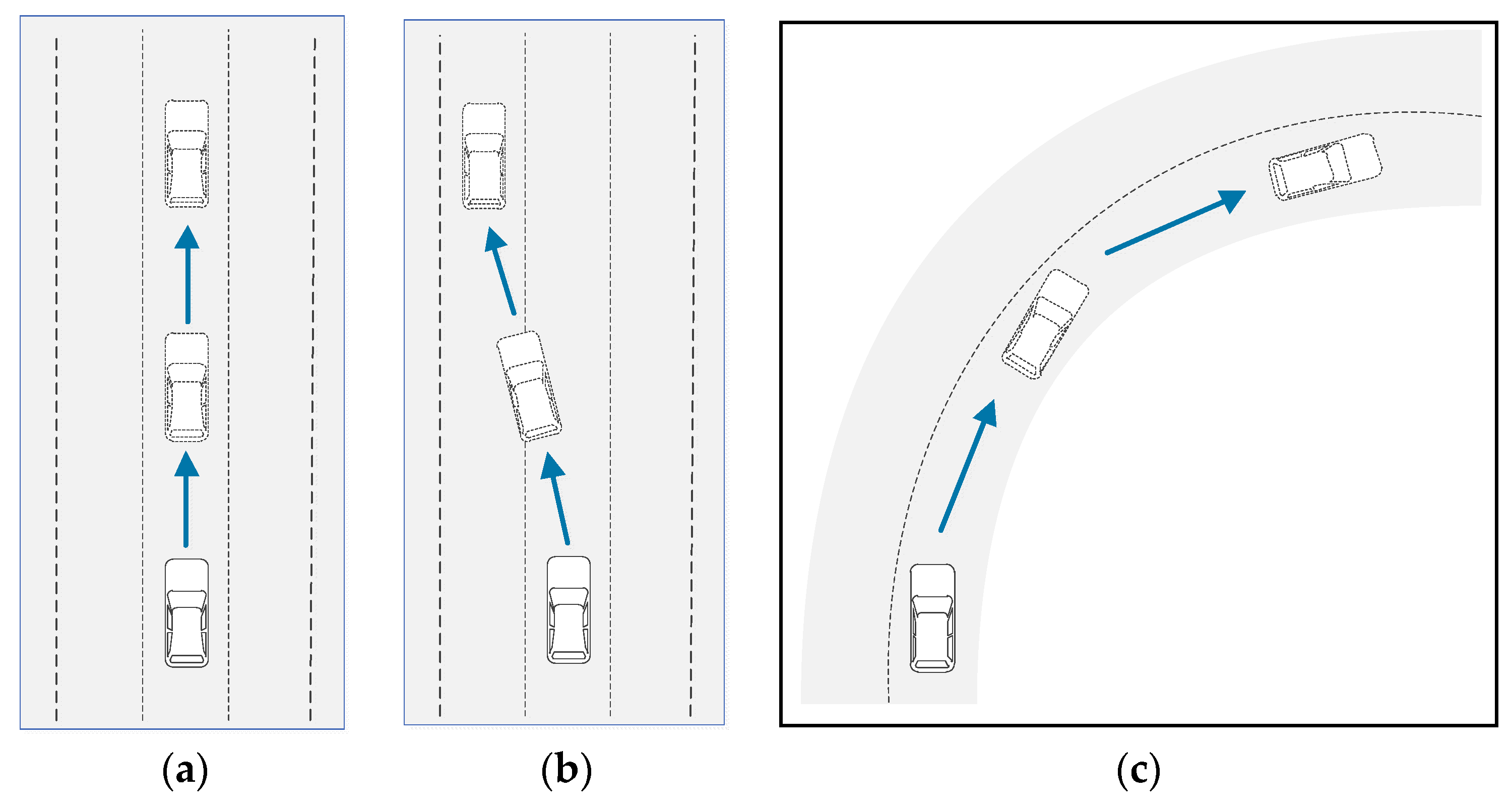

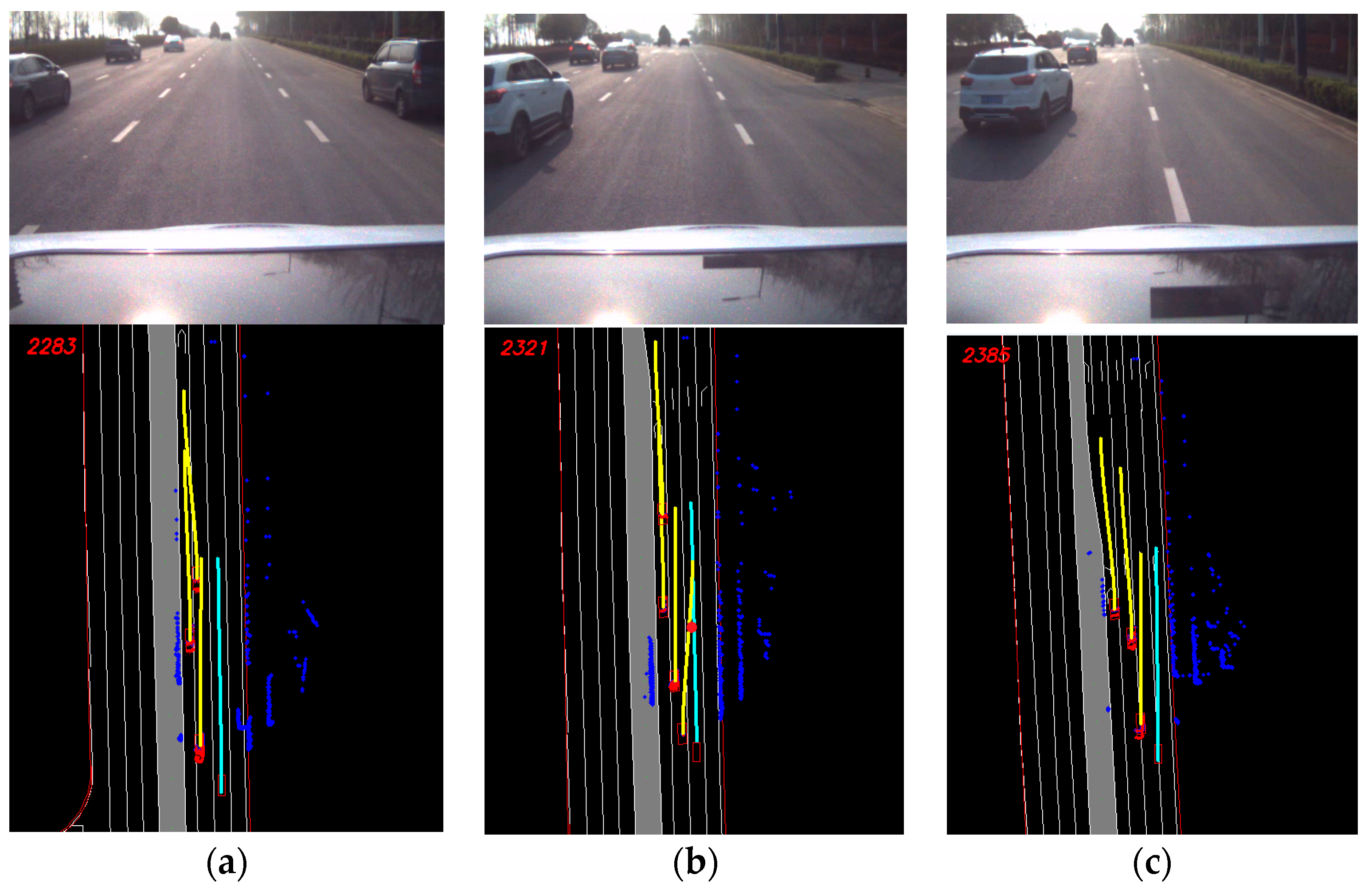

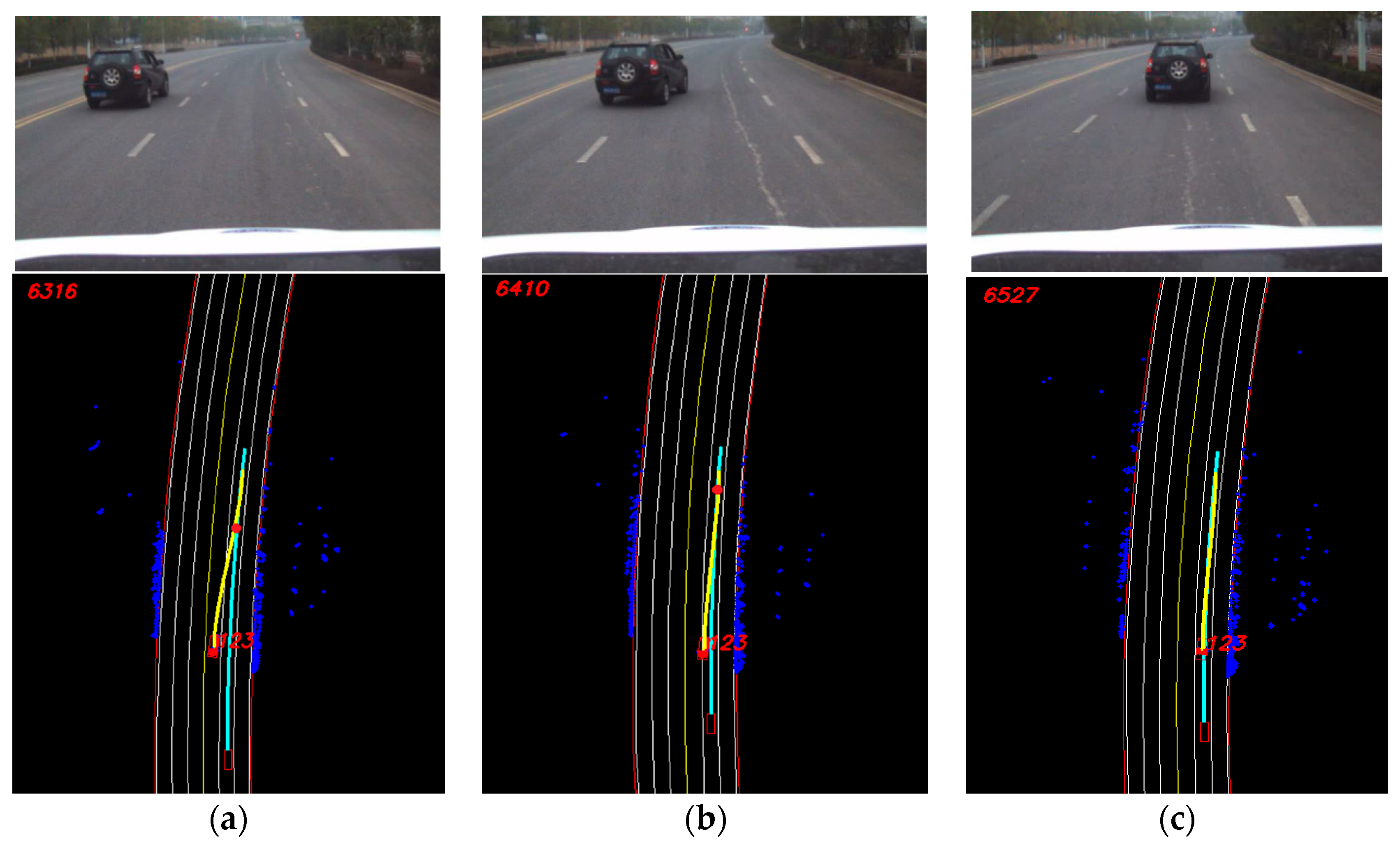

is the yaw rate of the vehicle. For a vehicle moving along a straight lane, as shown in

Figure 3a, the trajectory of the vehicle can be predicted with its moving state, although the trajectory may fail when subject to the conditions in

Figure 3b,c. In

Figure 3b, the vehicle performs a left lane change, and the vehicle in

Figure 3c moves along a winding road. In addition to the moving state, the road structure should be considered when estimating the driving intents of detected vehicles.

By matching the lane marks and road edges with an accurate lane-level map, the accurate position of the vehicle on the map is accessible, as well as the lane number and the road shape. The trajectories of the moving vehicles are strongly influenced by the road shape because drivers tend to follow the center line of a lane, which can be modeled with a quadratic curve, as shown in Equation (2).

The quadratic curve in Equation (2) is parameterized by three coefficients. In urban environments, the trajectory of a vehicle is decided by the driver and can be divided into the following categories.

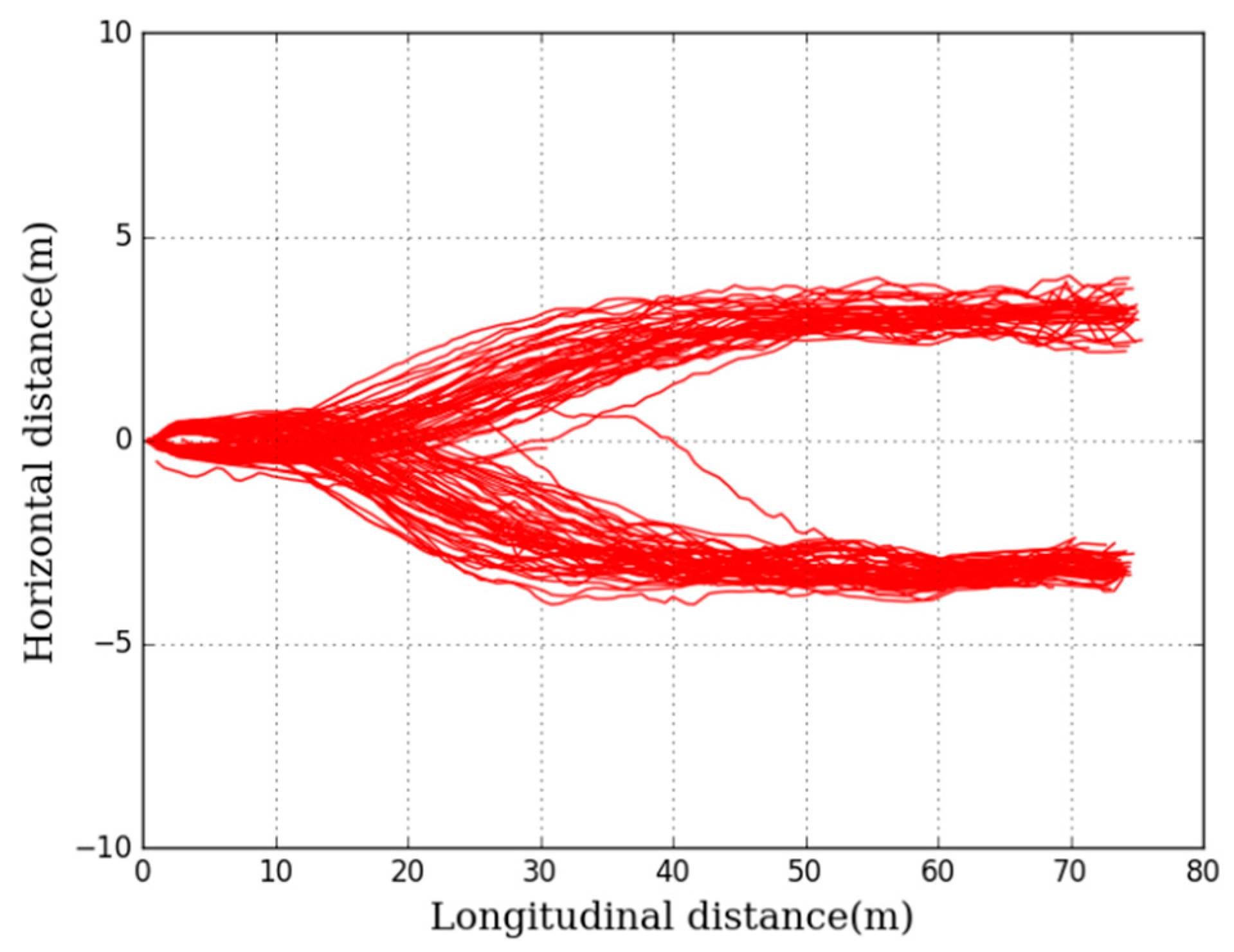

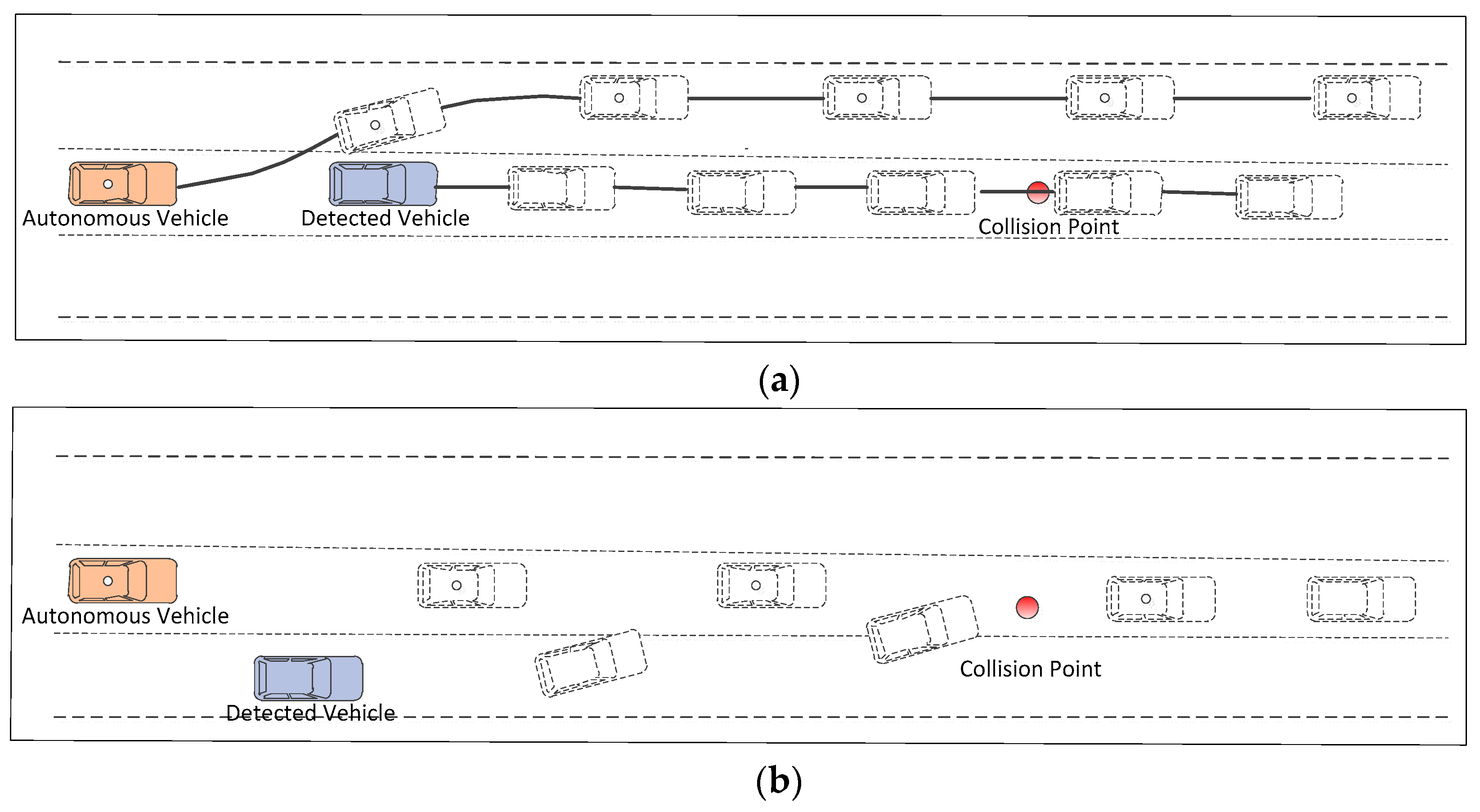

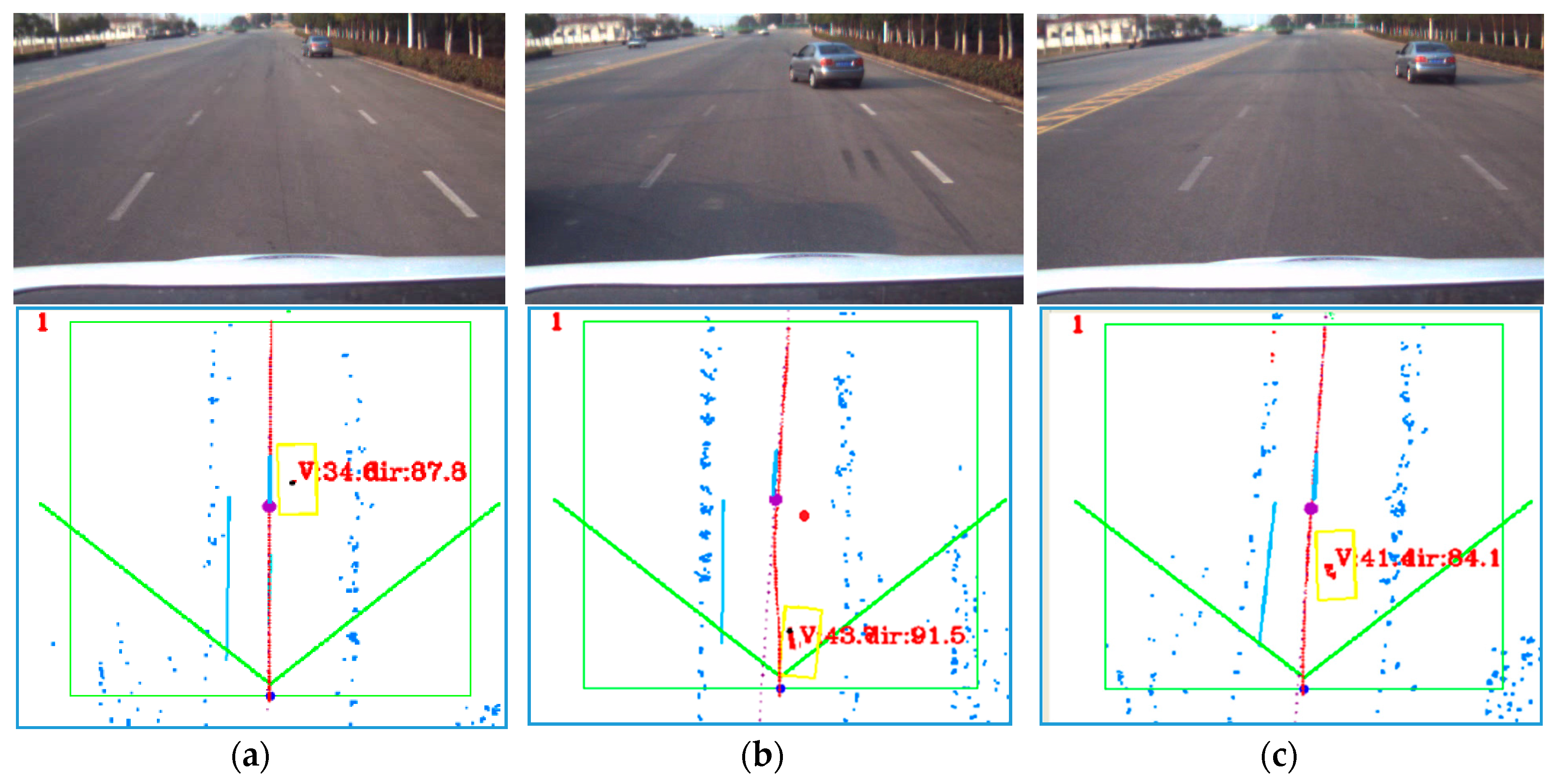

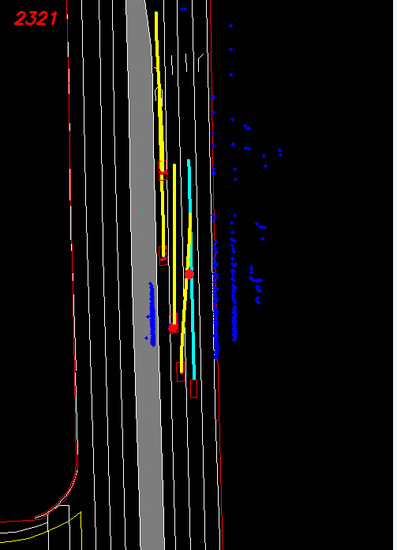

For most turns at intersections, the driving intent can be estimated by identifying the lane being driven on: a driver who wants to turn left will enter the left-turn lane before entering the intersection. Therefore, we mainly discuss normal moving and lane changes in this paper. For a normally moving vehicle, the trajectory is similar to the center line of the lane. In contrast, a vehicle that is changing lanes leaves the center line and enters an adjacent lane. A set of lane change trajectories, obtained from the onboard sensors, is shown in Figure 4. The shapes of the lane change trajectories differ in both the longitudinal and lateral directions, which increases the difficulty of intent estimation. To estimate the intent of the driver, a state vector of the moving vehicle is constructed.

This state vector comprises the distance between the vehicle and the left lane mark, the distance between the vehicle and the right lane mark, the heading direction of the vehicle and the current curvature of its path.

The center line of the lane where the vehicle is moving can also be represented with a state vector:

This vector contains the road direction and the road curvature, and its two lane-mark distances are both set to half of the lane width. These variables can be obtained from the quadratic curve in Equation (2) and road information from the map. The detailed computation is given in Appendix A, and the results are shown in Equations (6) and (7).
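As an illustration of how a direction and a curvature follow from a quadratic center line, the sketch below applies the standard formulas for the tangent angle and curvature of a plane curve. It assumes the center line is written as y = c0 + c1·x + c2·x² in a local Cartesian frame; the coefficient names and the frame are assumptions made for this example and need not match the notation of Equations (2), (6) and (7).

```python
import math


def centerline_point(c0, c1, c2, x):
    """Lateral coordinate of the assumed center line y = c0 + c1*x + c2*x**2."""
    return c0 + c1 * x + c2 * x * x


def centerline_direction_and_curvature(c1, c2, x):
    """Tangent direction (rad) and signed curvature (1/m) of the quadratic at x.

    Standard plane-curve formulas: direction = atan(y'),
    curvature = y'' / (1 + y'**2)**1.5.
    """
    slope = c1 + 2.0 * c2 * x   # first derivative dy/dx
    second = 2.0 * c2           # second derivative d2y/dx2
    direction = math.atan(slope)
    curvature = second / (1.0 + slope * slope) ** 1.5
    return direction, curvature


# Hypothetical coefficients for a gently curving lane.
direction, curvature = centerline_direction_and_curvature(c1=0.02, c2=0.001, x=10.0)
```

For a straight lane (c2 = 0) the curvature is zero and the direction reduces to atan(c1), which is a quick sanity check on the formulas.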

Then, the deviation of the vehicle from the lane center can be presented as follows:

Here, D is the chi-square distance between the vehicle state vector and the lane state vector, and the equation also involves the covariance matrix of the lane state vector and the covariance matrix of the vehicle state vector. The moving process is continuous, whereas the maneuvering space is discrete, so intent recognition is performed by mapping a set of continuous features into the discrete maneuvering space. In [13], the value of D is used to estimate the maneuvering intent of the vehicle: if the value is small, the vehicle is assumed to maintain its lane, whereas it is assumed to be performing a lane change if the value is larger than a fixed threshold. The drawback of this method is that a fixed threshold introduces a high false detection rate. To improve the robustness, a feature vector is constructed as follows:

Here, n is the number of samples used in the intent-estimation process, and the vector also includes the acceleration in the direction of the lane. The feature vector thus captures the relationship between the vehicle and the lane, as well as the moving state of the detected vehicle. For a lane change, the prediction is considered successful if the intention to change lanes is estimated before any part of the vehicle enters the target lane.
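To make the fixed-threshold baseline of [13] concrete, the following sketch computes a variance-normalized squared distance between a vehicle state vector and a lane state vector and compares it with a threshold. It is only an illustration under stated assumptions: both covariance matrices are taken to be diagonal so that only per-component variances are needed, and the function names, example values and threshold are hypothetical rather than taken from the paper.

```python
def chi_square_distance(vehicle_state, lane_state, vehicle_var, lane_var):
    """Squared distance between two state vectors, normalized by summed variances.

    Assumption: diagonal covariances, so each component contributes
    (difference squared) / (vehicle variance + lane variance).
    """
    return sum(
        (v - l) ** 2 / (sv + sl)
        for v, l, sv, sl in zip(vehicle_state, lane_state, vehicle_var, lane_var)
    )


def fixed_threshold_intent(distance, threshold):
    """Baseline rule: a large deviation from the lane center means a lane change."""
    return "lane change" if distance > threshold else "lane keeping"


# Hypothetical states: (distance to left mark, distance to right mark,
# heading, curvature); the lane state uses half the lane width for both distances.
vehicle = [1.20, 2.30, 0.03, 0.001]
lane = [1.75, 1.75, 0.00, 0.000]
d = chi_square_distance(vehicle, lane, [0.05, 0.05, 0.001, 1e-5], [0.01, 0.01, 0.001, 1e-5])
label = fixed_threshold_intent(d, threshold=9.0)
```

Because the label flips as soon as the distance crosses the threshold, a single noisy measurement can change the decision, which is the weakness that motivates the multi-sample feature vector.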

Data in Table 1 are used to illustrate the computation of the feature vector. Because the features have different units (acceleration is measured in m/s² and yaw rate in degree/s), the feature vector is normalized with Z-score standardization, which is introduced in Appendix A. The third row and the fourth row show the means and standard deviations of the features. Two examples, extracted from a right lane change and a normal moving event, are shown in the fourth row and the fifth row. The Z-scores of the samples are shown in the last two rows.
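Z-score standardization can be sketched in a few lines. The example below estimates a mean and a standard deviation per feature from a set of samples and then rescales one sample; the numbers are hypothetical and are not the values of Table 1, and the use of the population standard deviation is an assumption, since the convention is not stated here.

```python
import statistics


def fit_z_score(samples):
    """Per-feature mean and population standard deviation of a list of samples."""
    columns = list(zip(*samples))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]
    return means, stds


def z_score(sample, means, stds):
    """Standardize one sample: subtract the mean and divide by the deviation."""
    return [(x - m) / s for x, m, s in zip(sample, means, stds)]


# Hypothetical samples: (acceleration in m/s^2, yaw rate in degree/s).
training = [[0.2, 1.0], [0.4, 3.0], [0.6, 5.0]]
means, stds = fit_z_score(training)
normalized = z_score([0.6, 1.0], means, stds)
```

After standardization each feature is dimensionless, so acceleration and yaw rate contribute on comparable scales.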

To obtain accurate driving intent, a learning method that utilizes Gaussian mixture models (GMM) [14] is applied. A GMM is a weighted linear combination of basis Gaussian distributions. For a feature vector M of length n, the probability density function is as follows:

where K is the number of Gaussian components, and each component has a mixture weight; the mixture weights sum to one. The GMM can be represented by its set of components, as in Equation (11):

The expectation-maximization algorithm [15] is used to learn the parameters of the GMM, i.e., the mixture weights and the mean and covariance of each component. In this paper, we use a GMM with three components, corresponding to the three maneuvers of interest, i.e., normal moving, left lane change and right lane change.

For each feature vector M, the posterior probability of each GMM component can be obtained, and the best-matched component satisfies the following equation:

The result of this equation is the index of the best-matched component.
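The selection of the best-matched component can be sketched as follows. The example evaluates the posterior probability of each component for one feature vector and returns the index with the largest posterior. It assumes diagonal covariances to stay dependency-free, and the weights, means and variances are hypothetical placeholders for parameters that would in practice come from expectation maximization.

```python
import math

MANEUVERS = ("normal moving", "left lane change", "right lane change")


def log_gaussian_diag(x, mean, var):
    """Log-density of a Gaussian with diagonal covariance (var holds variances)."""
    return -0.5 * sum(
        math.log(2.0 * math.pi * s) + (xi - m) ** 2 / s
        for xi, m, s in zip(x, mean, var)
    )


def posteriors(x, weights, means, variances):
    """Posterior probability of each mixture component given the feature vector x."""
    logs = [
        math.log(w) + log_gaussian_diag(x, m, v)
        for w, m, v in zip(weights, means, variances)
    ]
    peak = max(logs)  # subtract the maximum before exponentiating for stability
    unnormalized = [math.exp(value - peak) for value in logs]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def best_component(x, weights, means, variances):
    """Index of the component with the largest posterior probability."""
    post = posteriors(x, weights, means, variances)
    return max(range(len(post)), key=post.__getitem__)


# Hypothetical two-dimensional parameters for the three maneuvers.
weights = [0.6, 0.2, 0.2]
means = [[0.0, 0.0], [2.0, 1.0], [-2.0, -1.0]]
variances = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]
intent = MANEUVERS[best_component([1.9, 1.1], weights, means, variances)]
```

Working with log-densities avoids numerical underflow when the feature vector is long or lies far from every component.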

To obtain an intent predictor, trajectories of 200 drivers were collected, including trajectories on straight roads and winding roads, and used in the training process. The data used are shown in Table 2.

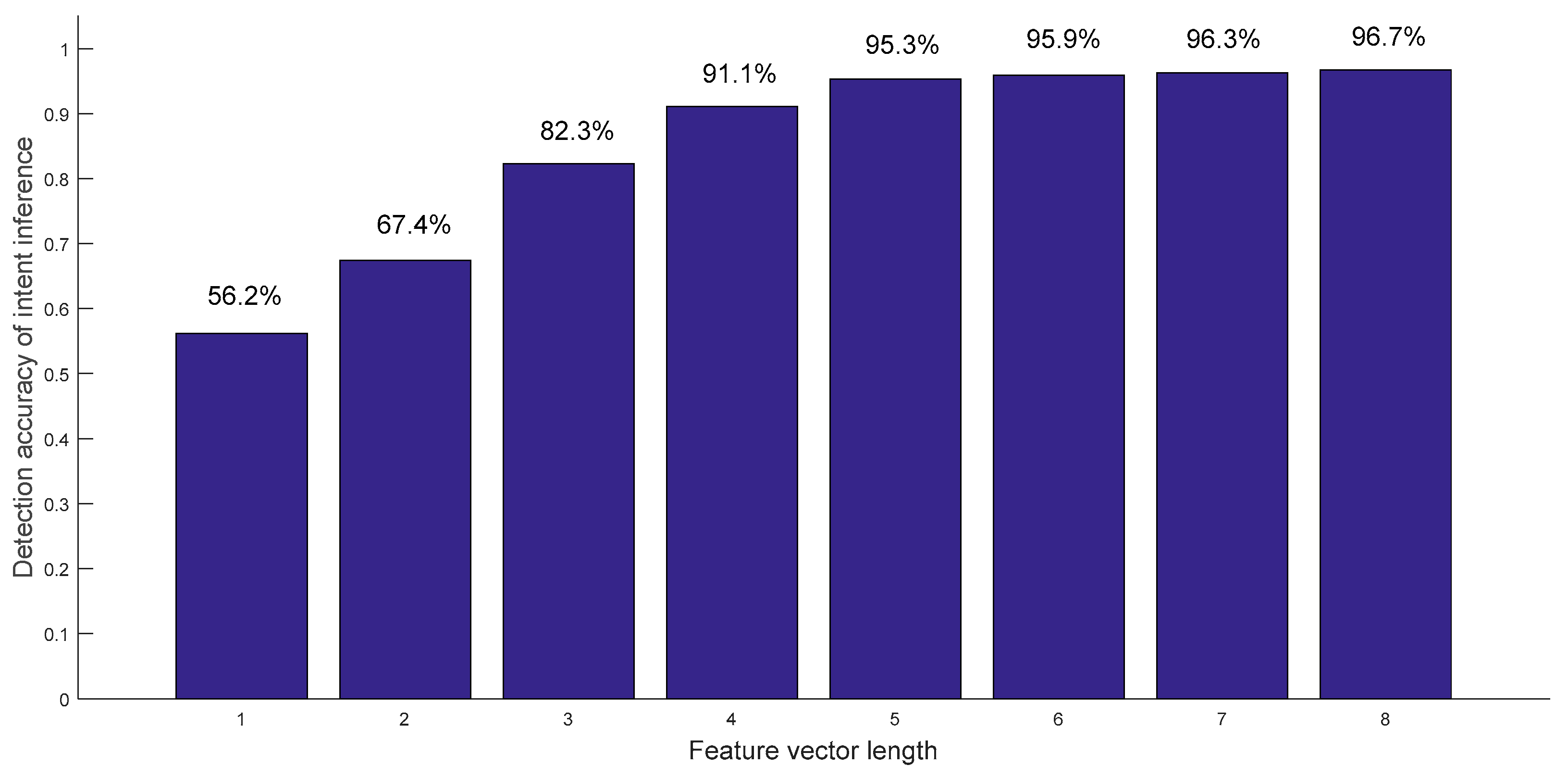

To obtain good results, the length of M, i.e., the value of n, should be chosen carefully. The performance improves with increasing n, although the real-time capability of the system decreases, as shown in Figure 5.

The growth rate of the accuracy decreases as n increases, and little further gain is obtained when n is larger than five. The accuracy reaches 93.5% when n is five. In this paper, the system runs at 25 Hz and n is set to five, which means that the intent-estimation process needs approximately 200 ms (five samples at 40 ms each), which is acceptable.

2.3. Trajectory Prediction with Intent and Motion Model

The maneuver intent can be estimated with the GMM-based method, but it is difficult to predict the real trajectory, as a maneuver can be realized in many ways. With the initial state, the intended state and the maneuvering information, an ideal trajectory can be obtained. The prediction is divided into two steps. First, a set of trajectories is generated to account for the randomness of driving habits. Then, an appropriate trajectory is selected by considering comfort and duration. The trajectories are predicted in the Frenet frame [16] along the lane center line and then converted back to the original Cartesian coordinates. Each trajectory is described by a lateral component and a longitudinal component, and the maneuver is characterized by its initial state and its final state.

Going a step further, the initial state can be represented with Equation (15):

The quantities in Equation (15) are the distance between the initial position of the vehicle and the lane center line, the orientation of the tangent vector of the lane center line, and the normal acceleration of the vehicle. For the final state of the trajectory, the vehicle is assumed to be moving along the lane center line, and the longitudinal acceleration is assumed to be constant during the maneuvering time. The final state can be modeled with the following vector:

If the vehicle is performing a lane change, the lateral displacement in this final state is the lane width; otherwise, it is set to zero. The duration of a maneuver is bounded, and a set of candidate trajectories can be obtained by sampling the duration within this bound. The lateral movement of the vehicle can be modeled with a quintic polynomial [17], as represented in Equation (17):

Combining Equations (16) and (17), the following equation can be obtained:

For the longitudinal movement, a quartic polynomial is used, which can be represented as follows:

The following equation can then be established:

A set of trajectories can then be obtained by solving the previous two equations with different ending times. To choose the most likely trajectory, two criteria are considered: the normal acceleration and the duration of the maneuver. The cost function is given in Equation (21):

where

is a coefficient that is used to measure the effects of comfort and time, which is selected by analyzing collected data. In this paper, the value of

is set as 0.5 to achieve the best result. The selected maneuver trajectory

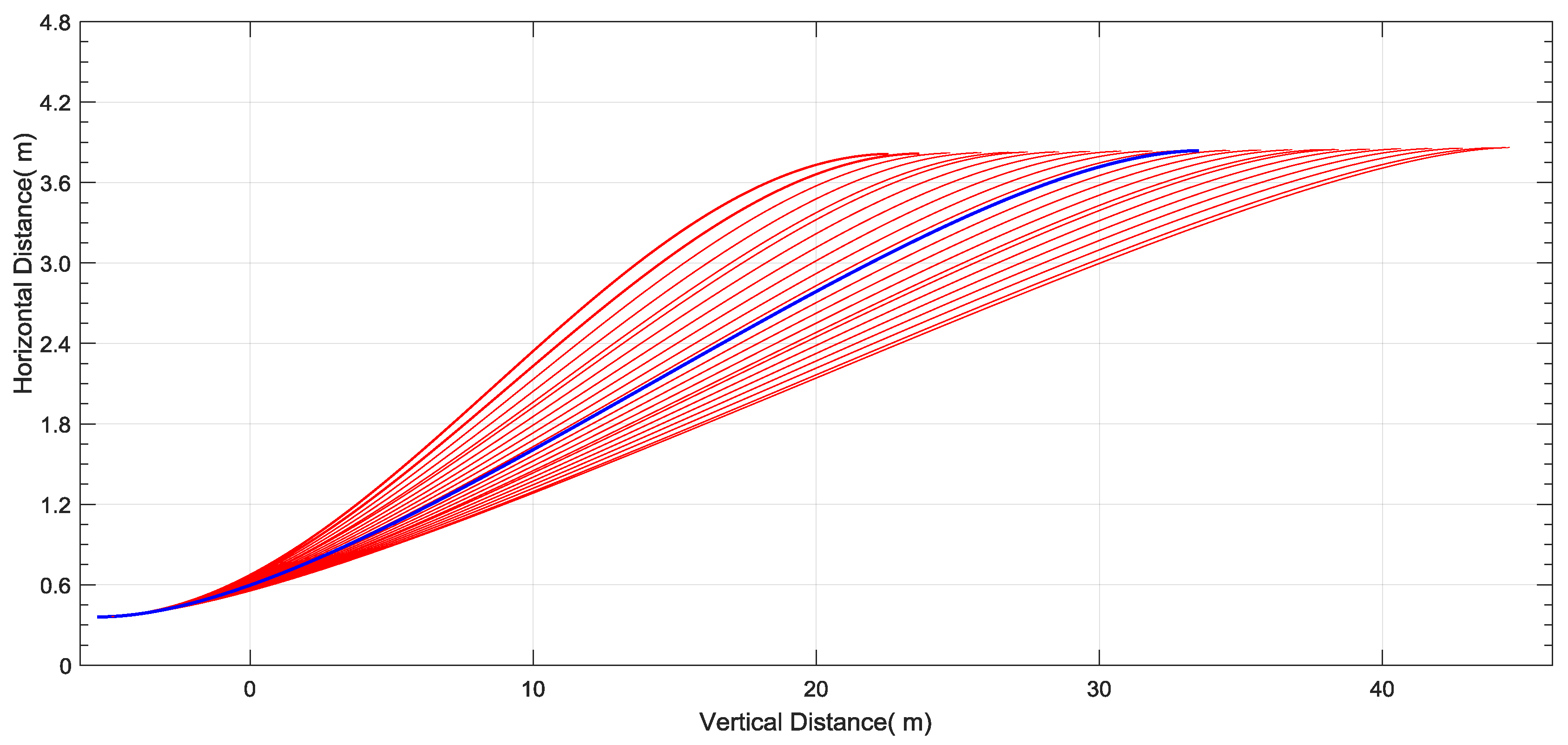

is the trajectory with the smallest cost. All of the generated trajectories are shown in

Figure 6. The selected trajectory is marked with the blue dotted line, while the other trajectories are in red.
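The generate-then-select procedure can be sketched for the lateral component as follows. Each candidate is a quintic polynomial that matches the initial lateral position, velocity and acceleration and reaches the target offset with zero lateral velocity and acceleration after a sampled duration. The cost used here, a weighted sum of the normalized peak lateral acceleration and the normalized duration, is an assumption standing in for Equation (21), whose exact form is not reproduced in this sketch; only the weight 0.5 is taken from the text. The lane width, durations and function names are hypothetical.

```python
def quintic_coefficients(d0, v0, a0, d1, v1, a1, T):
    """Coefficients c0..c5 of d(t) = sum(c_k * t**k) meeting both boundary states."""
    c3 = (20.0 * (d1 - d0) - (8.0 * v1 + 12.0 * v0) * T - (3.0 * a0 - a1) * T**2) / (2.0 * T**3)
    c4 = (30.0 * (d0 - d1) + (14.0 * v1 + 16.0 * v0) * T + (3.0 * a0 - 2.0 * a1) * T**2) / (2.0 * T**4)
    c5 = (12.0 * (d1 - d0) - 6.0 * (v1 + v0) * T - (a0 - a1) * T**2) / (2.0 * T**5)
    return [d0, v0, 0.5 * a0, c3, c4, c5]


def lateral_position(coeffs, t):
    """Lateral offset d(t) of the quintic."""
    return sum(c * t**k for k, c in enumerate(coeffs))


def lateral_acceleration(coeffs, t):
    """Second time derivative of the quintic."""
    _, _, c2, c3, c4, c5 = coeffs
    return 2.0 * c2 + 6.0 * c3 * t + 12.0 * c4 * t**2 + 20.0 * c5 * t**3


def select_trajectory(d0, v0, a0, target, durations, weight=0.5, samples=50):
    """Return (cost, duration, coefficients) of the cheapest candidate.

    Assumed cost: weight * normalized peak |lateral acceleration|
    + (1 - weight) * normalized duration.
    """
    candidates = []
    for T in durations:
        coeffs = quintic_coefficients(d0, v0, a0, target, 0.0, 0.0, T)
        peak = max(abs(lateral_acceleration(coeffs, T * i / samples)) for i in range(samples + 1))
        candidates.append((T, coeffs, peak))
    peak_ref = max(peak for _, _, peak in candidates) or 1.0  # avoid dividing by zero
    t_ref = max(durations)
    scored = [
        (weight * peak / peak_ref + (1.0 - weight) * T / t_ref, T, coeffs)
        for T, coeffs, peak in candidates
    ]
    return min(scored, key=lambda item: item[0])


# Hypothetical lane change: 3.5 m lane width, durations sampled from 2 s to 6 s.
cost, duration, coeffs = select_trajectory(0.0, 0.0, 0.0, 3.5, [2.0, 3.0, 4.0, 5.0, 6.0])
```

Short durations are penalized through their high peak acceleration and long durations through the time term, so the weight controls where the compromise between comfort and duration falls.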

The long-term trajectory prediction is an idealized result that ignores the current moving state, although this state is indispensable for trajectory prediction. To increase the accuracy, the long-term predictions and the short-term predictions should be combined. The short-term prediction is obtained from the current moving state of the vehicle and the constant yaw rate and acceleration motion model [18]. The velocity can be obtained as follows:

This expression depends on the initial acceleration and velocity of the detected vehicle, as well as on its initial yaw rate and moving direction. The short-term trajectory can be obtained by integrating the velocity:

The two integration constants can be obtained by substituting the initial position into the equation.
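A minimal sketch of the constant yaw rate and acceleration model is given below. It assumes the usual formulation in which the speed grows linearly with time and the heading rotates at a constant rate; the closed-form position is the integral of the velocity, with the integration constants fixed by the initial position. The symbol names are chosen for this example and are not taken from the paper's equations.

```python
import math


def cyra_velocity(t, v0, a0, theta0, omega0):
    """Velocity components (vx, vy) at time t under constant acceleration and yaw rate."""
    speed = v0 + a0 * t
    heading = theta0 + omega0 * t
    return speed * math.cos(heading), speed * math.sin(heading)


def cyra_position(t, x0, y0, v0, a0, theta0, omega0, eps=1e-5):
    """Position (x, y) at time t, obtained by integrating cyra_velocity from 0 to t."""
    if abs(omega0) < eps:
        # Nearly straight motion: use the constant-heading limit to avoid dividing by ~0.
        travelled = v0 * t + 0.5 * a0 * t * t
        return x0 + travelled * math.cos(theta0), y0 + travelled * math.sin(theta0)

    def fx(speed, heading):
        # Antiderivative of speed * cos(heading) with respect to time.
        return (speed * omega0 * math.sin(heading) + a0 * math.cos(heading)) / omega0**2

    def fy(speed, heading):
        # Antiderivative of speed * sin(heading) with respect to time.
        return (-speed * omega0 * math.cos(heading) + a0 * math.sin(heading)) / omega0**2

    speed = v0 + a0 * t
    heading = theta0 + omega0 * t
    # Subtracting the antiderivative at t = 0 enforces the initial position.
    return x0 + fx(speed, heading) - fx(v0, theta0), y0 + fy(speed, heading) - fy(v0, theta0)


# Hypothetical state: 10 m/s, 1 m/s^2, heading 0.1 rad, yaw rate 0.2 rad/s.
x, y = cyra_position(2.0, 0.0, 0.0, 10.0, 1.0, 0.1, 0.2)
```

Because the model extrapolates the current yaw rate and acceleration indefinitely, its error tends to grow with the prediction horizon, which is why it is used only for the short-term part of the prediction.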

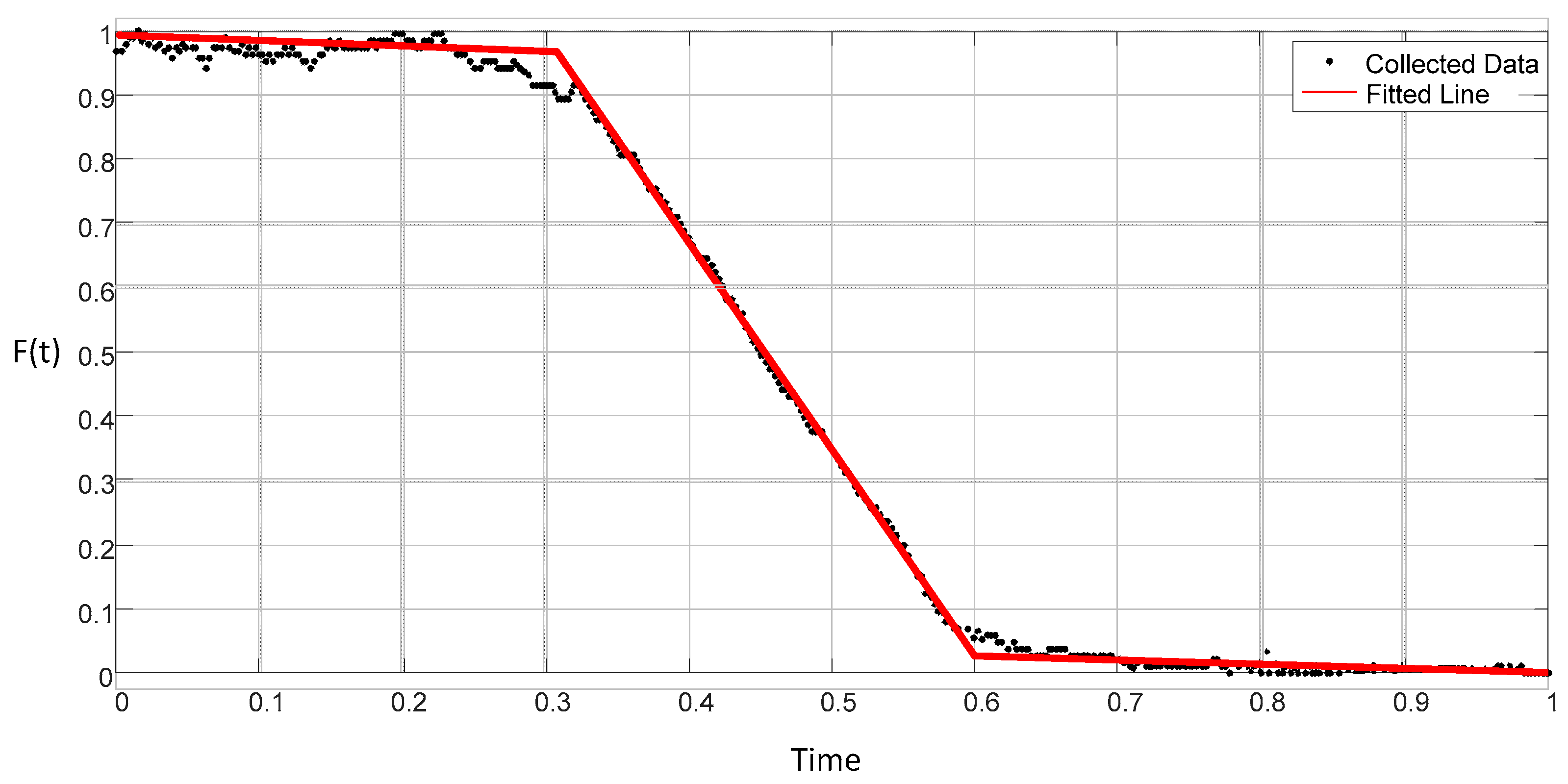

We propose a method that combines the short-term and long-term trajectory predictions by fitting an influence function to collected data. The collected data show that the influence of the ideal trajectory increases with t, whereas the influence of the current moving state decreases, and the trajectory can be obtained with Equation (25):

With this function, combining the two predictions becomes a curve-fitting problem in the parameter t, the elapsed proportion of the maneuvering time, which ranges from zero to one. The influence curve fitted to our collected data is shown as a red line in Figure 7. The curve decreases much more slowly in the first and third segments than in the second segment, which agrees with the practical situation.
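The fusion of the two predictions can be illustrated with a simple weighted combination. The sketch below assumes that the fused point is a convex combination of the short-term and long-term points and uses a cubic smoothstep as a stand-in for the fitted influence curve, because it decreases slowly near t = 0 and t = 1 and quickly in between. Both the combination form and the weight function are assumptions for illustration and are not the fitted curve of Figure 7 or the exact form of Equation (25).

```python
def short_term_weight(t):
    """Assumed influence of the short-term prediction; falls from 1 to 0 over t in [0, 1]."""
    t = min(max(t, 0.0), 1.0)  # clamp the proportion of maneuvering time
    return 1.0 - (3.0 * t * t - 2.0 * t**3)


def fuse(short_point, long_point, t):
    """Blend one short-term point and one long-term point at time proportion t."""
    w = short_term_weight(t)
    return tuple(w * s + (1.0 - w) * l for s, l in zip(short_point, long_point))


# Hypothetical (x, y) predictions halfway through the maneuver.
fused = fuse((12.0, 0.8), (12.4, 1.6), 0.5)
```

At t = 0 the fused point coincides with the short-term prediction and at t = 1 with the long-term prediction, matching the described shift of influence from the current moving state to the ideal trajectory.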

The trajectory prediction error of a typical lane change, which varies with t, is shown in Figure 8. For the two individual predictions, the error of one increases from 0 to more than 5 m, which is too large for collision avoidance, while the error of the other first increases to more than 1 m and then decreases. In contrast, the maximum error of the fused trajectory is approximately 0.8 m, which is acceptable for the collision detection process. The average errors of the two individual predictions and of the fused trajectory are 1.98 m, 0.72 m and 0.41 m, respectively, which indicates that the fused trajectory is more accurate.