1. Introduction

In computer science research, it is important to offer optimal techniques for a variety of problems; nevertheless, for most of these problems it is difficult to formalize a mathematical model to optimize. Soft-computing optimization techniques, such as Evolutionary Computing (EC) [1], Artificial Neural Networks (ANN) [2] and Artificial Immune Systems (AIS) [3,4,5], approach these kinds of problems by offering good approximate solutions in an affordable time. EC algorithms offer an analogy of the competitive process in natural selection applied to multi-agent search for multi-variate problems; in the same way, AIS are based on the adaptive properties of the vertebrate immune system.

The vertebrate immune system has been developed through time by natural selection to overcome many diseases. Although some of these protection mechanisms are inheritable, the immune system is capable of adapting to a new variety of Antigens (AGs) (foreign particles) in order to acquire specific protection [6]. This specific protection is given by Antibodies (ABs) that attach to AGs with a certain affinity in the so-called humoral immunity. ABs are produced by the differentiation of B lymphocytes (B-cells). When the body does not have a specific AB for an AG, the B-cells compete to produce a higher-affinity AB with the help of CD4 T lymphocytes (Th-cells). This competition is the inspiration for the Clonal Selection algorithm [7], which has many variants and improvements [8,9].

When an infection prevails because the innate immune response is not capable of managing it, the adaptive immune response starts a process called clonal expansion of B-cells, looking for a B-cell with high-affinity ABs [6]. The highest affinity of ABs is achieved by a biological process called the Germinal Center reaction. Germinal Centers are temporary, histologically recognizable sites in the secondary lymph nodes, where inactive B-cells enclose active B-cells, Follicular Dendritic Cells (FDC) and Th-cells with the objective of maturing the affinity through a competitive process. For a better understanding of the biological phenomenon, we refer interested readers to [10].

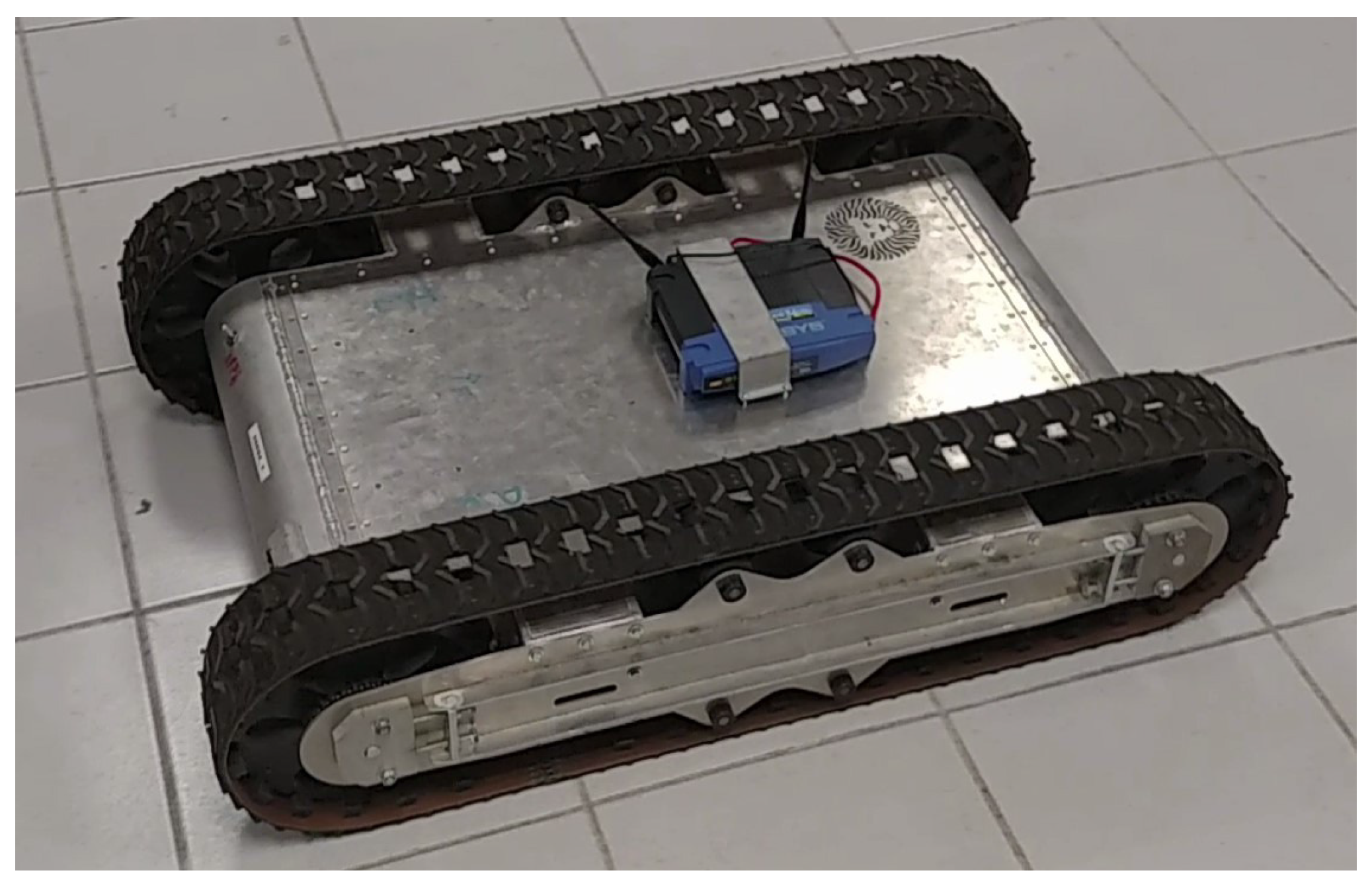

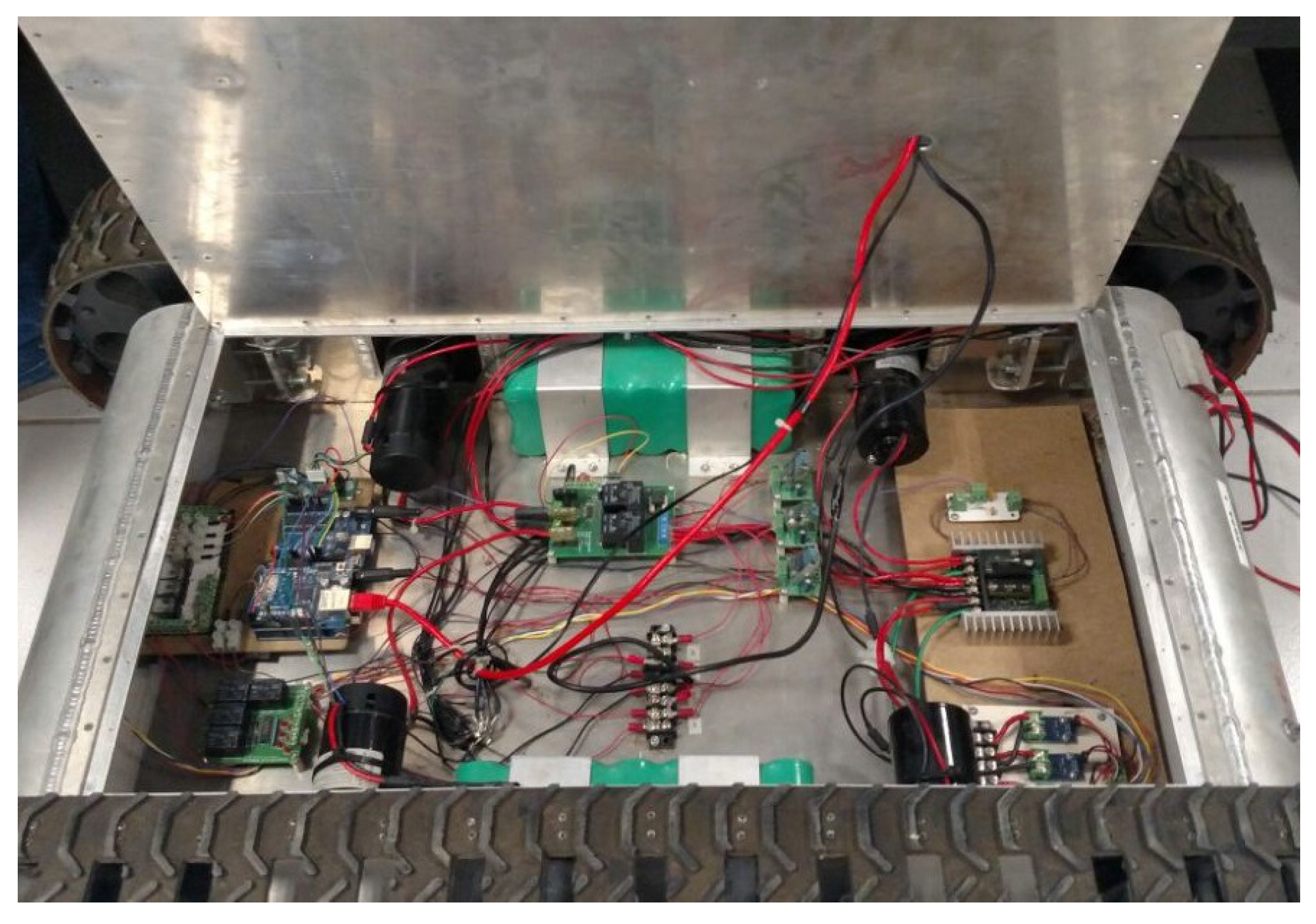

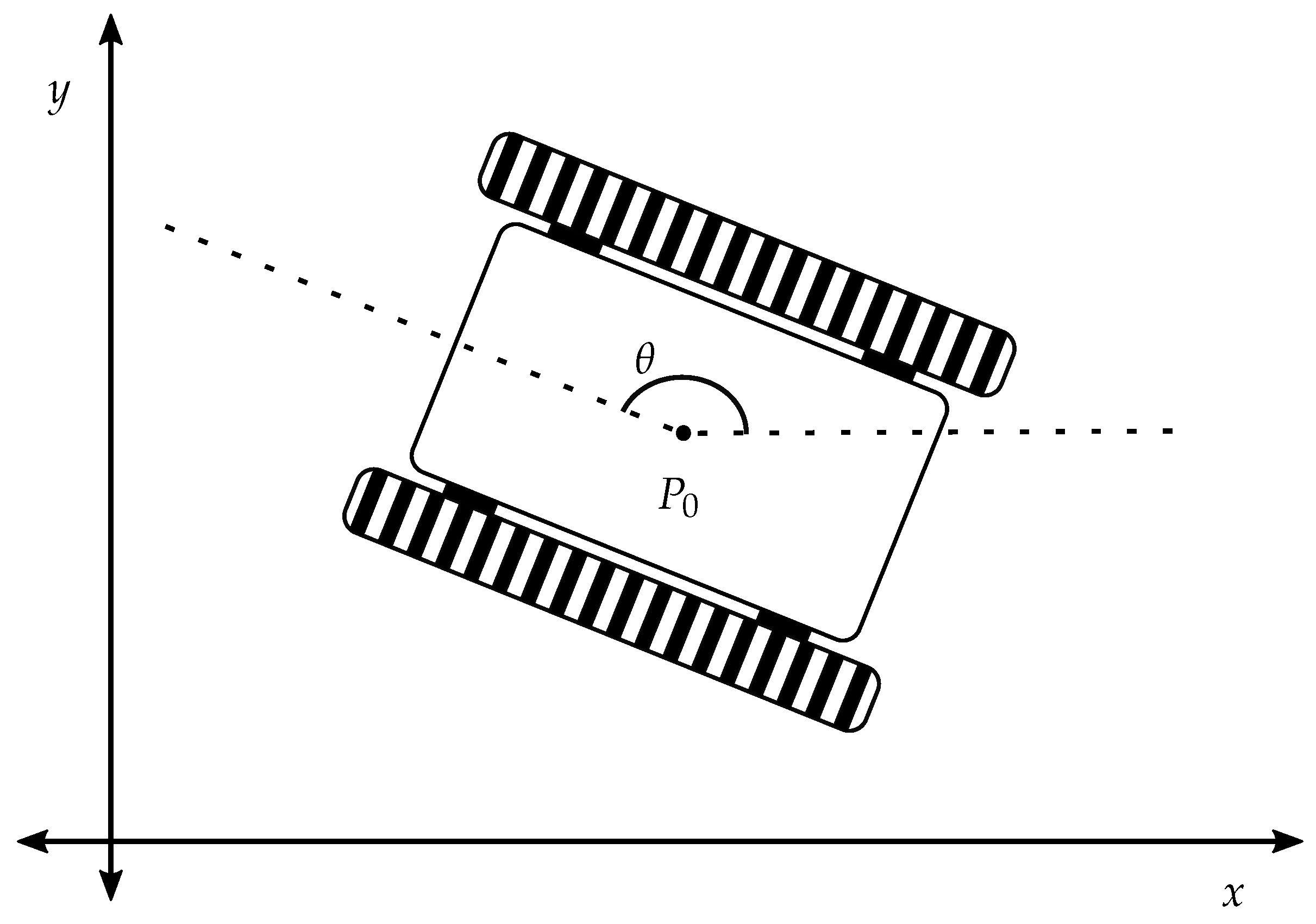

In this paper, we use Germinal Center Optimization (GCO), a new multi-variate optimization algorithm inspired by the germinal center reaction that hybridizes some concepts of EC and AIS, to optimize an inverse optimal controller applied to an all-terrain tracked robot. The principal feature of GCO is that the particle selection for crossover is guided by a competition-based non-uniform distribution, which embeds the idea of temporal leadership, as we explain in Section 2.3.

On the other hand, most modern control techniques require a mathematical model of the system to be controlled. This model can be obtained through system identification, in which the model is built from a set of data collected in practical experiments with the system. Even when system identification does not yield an exact model, satisfactory models can be obtained with reasonable effort. There are a number of system identification techniques, to name a few: neural networks, fuzzy logic, auxiliary model, and hierarchical identification. Among these, system identification using neural networks stands out, especially using recurrent neural networks, which have a dynamic behavior [2,11,12].
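
To illustrate what building a model from experimental data means in its simplest form, the following minimal Python sketch fits a first-order discrete-time model by least squares. The model structure x(k+1) = a x(k) + b u(k), the function names and the data are assumptions chosen for illustration; this is not the neural identifier used in this work.

```python
def simulate(a, b, inputs, x0=0.0):
    """Generate a state record of the hypothetical plant x[k+1] = a*x[k] + b*u[k]."""
    states = [x0]
    for u in inputs:
        states.append(a * states[-1] + b * u)
    return states


def identify_first_order(states, inputs):
    """Least-squares estimate of (a, b) from recorded states and inputs.

    Expects len(states) == len(inputs) + 1.
    """
    # Accumulate the 2x2 normal equations of the regression
    # x[k+1] = a*x[k] + b*u[k].
    sxx = sxu = suu = sxy = suy = 0.0
    for k, u in enumerate(inputs):
        x, y = states[k], states[k + 1]
        sxx += x * x
        sxu += x * u
        suu += u * u
        sxy += x * y
        suy += u * y
    det = sxx * suu - sxu * sxu
    if abs(det) < 1e-12:
        raise ValueError("data are not informative enough to identify a and b")
    a = (sxy * suu - suy * sxu) / det
    b = (suy * sxx - sxy * sxu) / det
    return a, b


# Usage: recover the parameters of a known toy plant from its own record.
inputs = [1.0, 0.0, -1.0, 2.0, 0.5, -0.5, 1.5, 0.0]
states = simulate(0.9, 0.5, inputs)
a_hat, b_hat = identify_first_order(states, inputs)
```

With noise-free data the estimates match the true parameters up to rounding; with measured data they are only approximations, which is consistent with the remark that an exact model is not required.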

Recurrent High Order Neural Networks (RHONNs) are a generalization of the first-order Hopfield network [11,12]. Compared to first-order feedforward neural networks, the presence of recurrent and high-order connections gives the RHONN [11,12,13] strong approximation capabilities, faster convergence, greater storage capacity, higher fault tolerance, robustness against noise, and dynamic behavior. RHONNs also have the following characteristics [11,12,14]:

They allow efficient modeling of complex dynamic systems, even those with time-delays.

They are good candidates for identification, state estimation, and control.

They are easy to implement.

A priori information of the system to be identified can be added to the RHONN model.

On-line or off-line training is possible.
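
As a rough illustration of what high-order connections mean, the sketch below computes the one-step prediction of a single RHONN neuron as a weighted sum of products of sigmoid functions of the states, plus a fixed-gain input term. This form follows the discrete-time RHONN structure commonly found in the literature; the function names, the choice of high-order terms and the numerical values are assumptions for illustration, and the training of the weights is not shown.

```python
import math


def sigmoid(value):
    """Logistic activation applied to each state."""
    return 1.0 / (1.0 + math.exp(-value))


def high_order_terms(state, index_sets):
    """Build the regressor: one product of sigmoids per index tuple.

    A tuple such as (0, 1) couples states 0 and 1; a repeated index
    such as (1, 1) raises the sigmoid of state 1 to the second power.
    """
    terms = []
    for indices in index_sets:
        product = 1.0
        for j in indices:
            product *= sigmoid(state[j])
        terms.append(product)
    return terms


def rhonn_neuron_step(weights, state, index_sets, input_gain=0.0, control=0.0):
    """One-step-ahead prediction of a single state variable."""
    terms = high_order_terms(state, index_sets)
    return sum(w * z for w, z in zip(weights, terms)) + input_gain * control


# Usage with hypothetical values: one first-order and two second-order terms.
prediction = rhonn_neuron_step(
    weights=[1.0, 2.0, 4.0],
    state=[0.0, 0.0],
    index_sets=[(0,), (0, 1), (1, 1)],
    input_gain=0.1,
    control=3.0,
)
```

A priori knowledge of the plant can be encoded in such a structure through the choice of index tuples and of which state drives the input term.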

The goal of inverse optimal control is to determine a control law that forces the system to satisfy some restrictions while minimizing a cost functional. The difference from the optimal control methodology is that inverse optimal control avoids the need to solve the associated Hamilton-Jacobi-Bellman (HJB) equation, which is not an easy task and has not been solved for general nonlinear systems. In the inverse approach, a stabilizing feedback control law, based on a priori knowledge of a Control Lyapunov Function (CLF), is designed first, and then it is established that this control law optimizes a cost functional [15].

The control scheme consisting of a neural identifier and an inverse optimal control technique is named neural inverse optimal control (NIOC). This control scheme has shown good results in the literature for trajectory tracking [15,16,17]. However, the designer has to tune appropriate values for some parameters of the controller, discussed later in this work, and the quality of the controller depends directly on this selection.

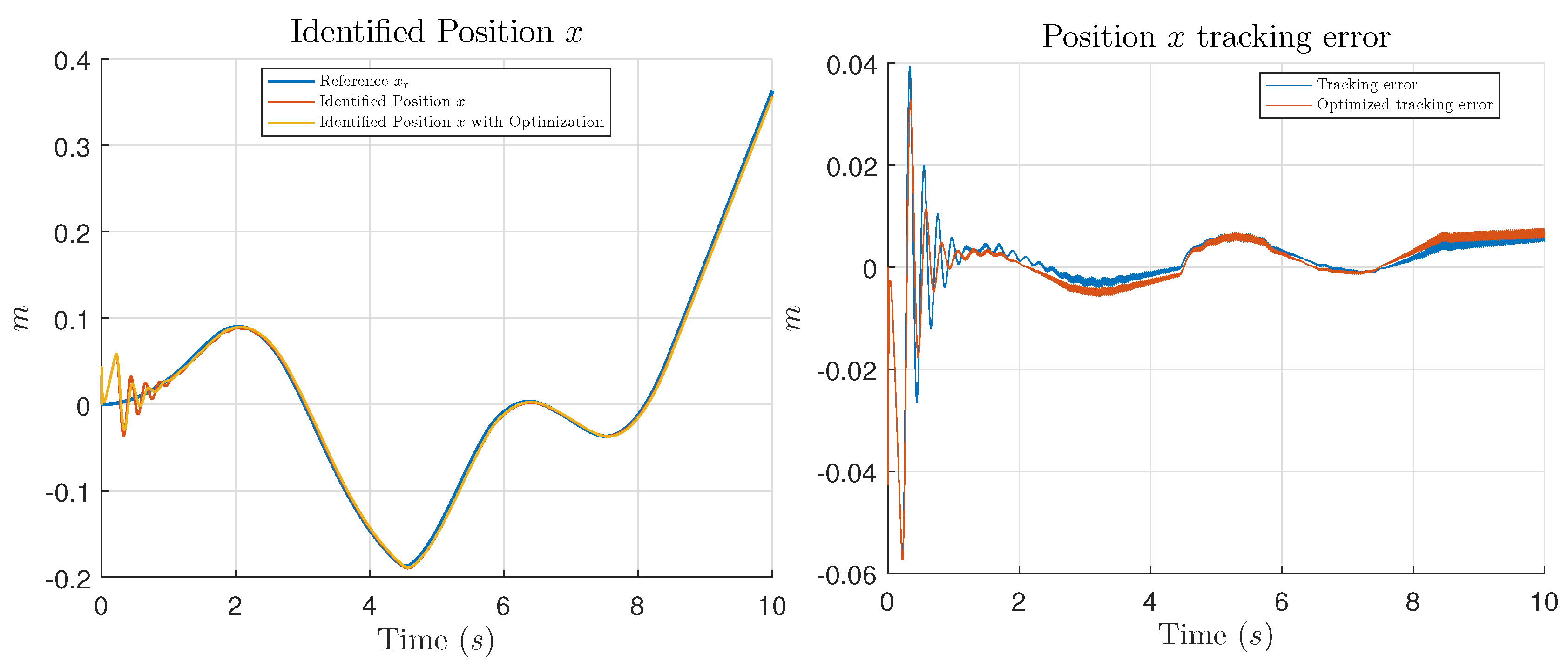

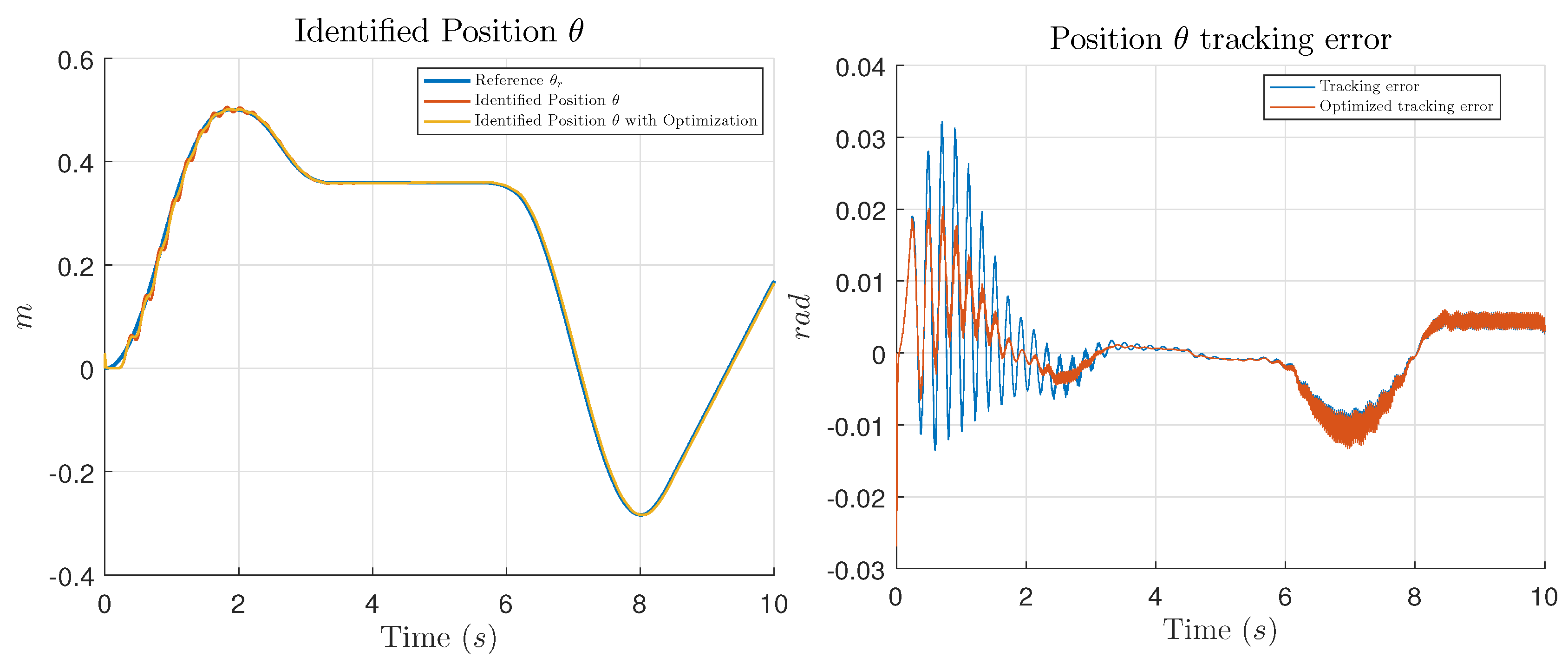

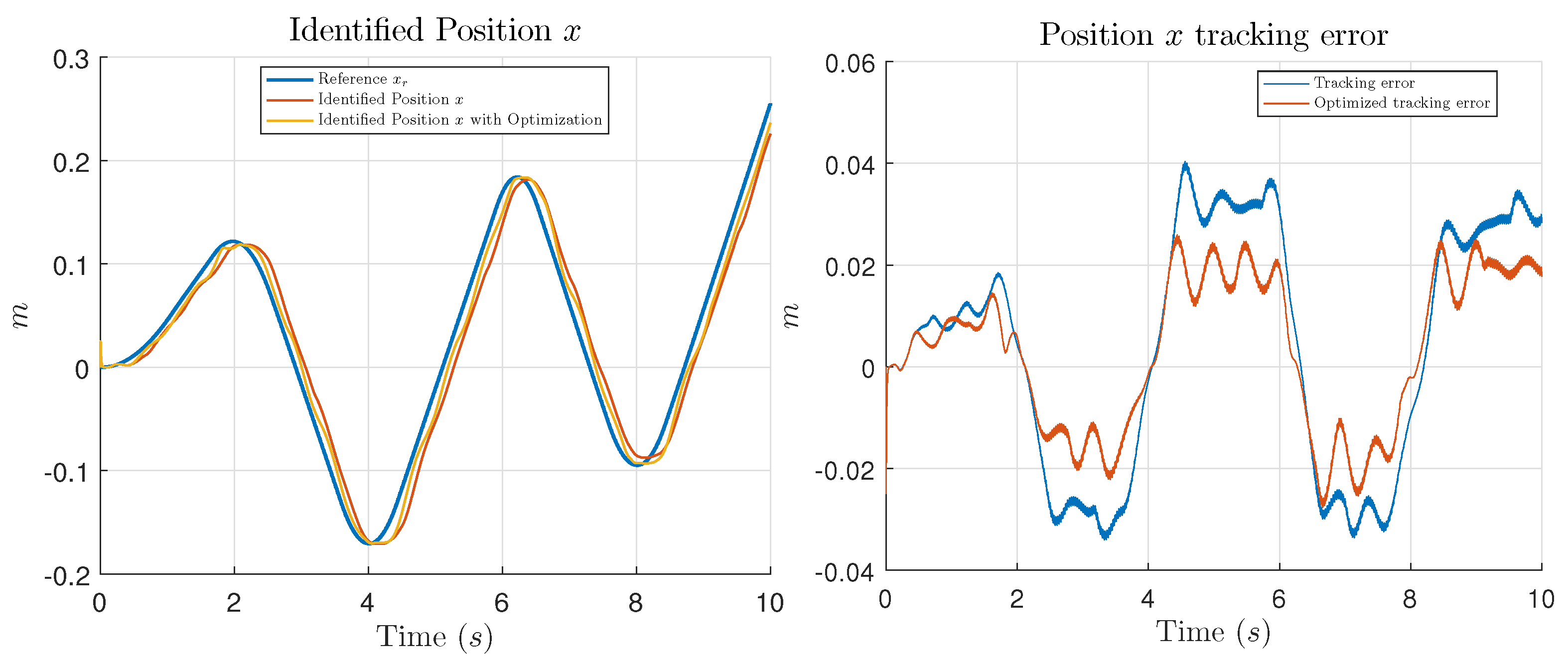

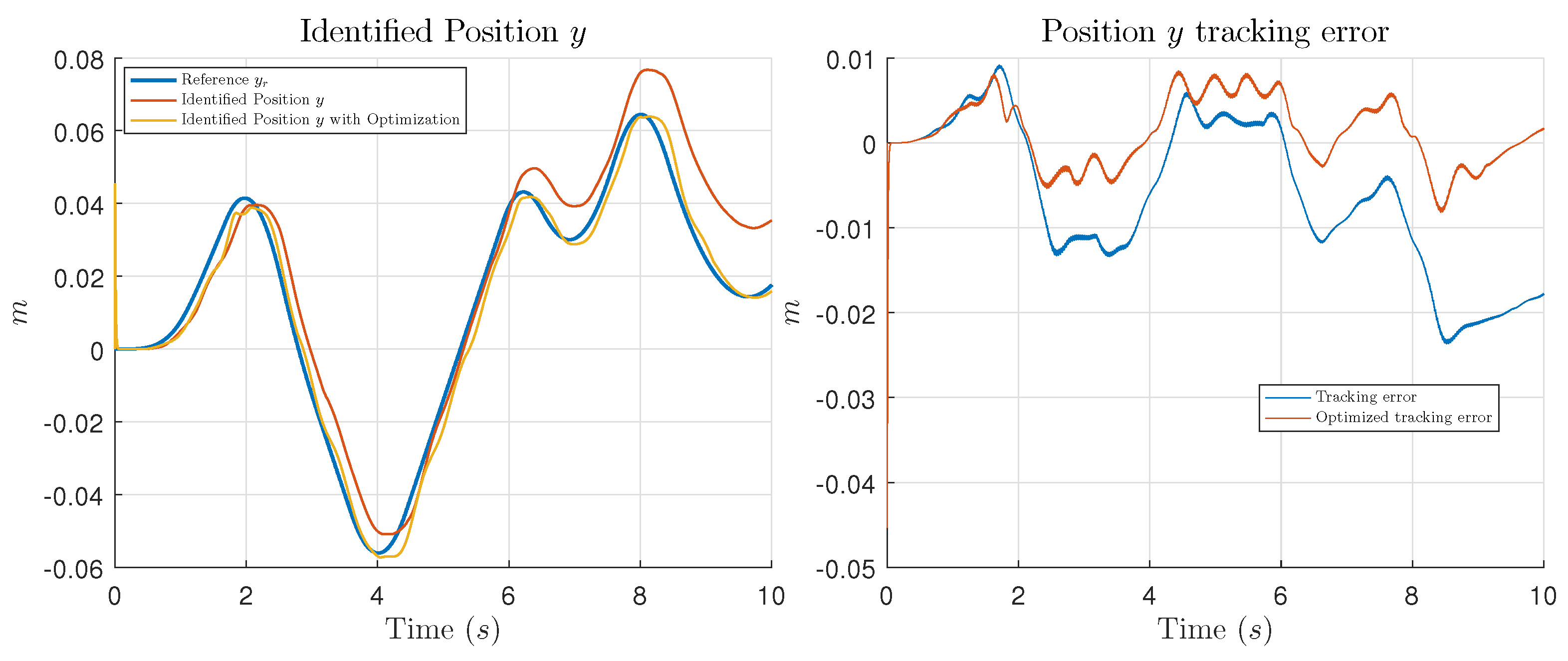

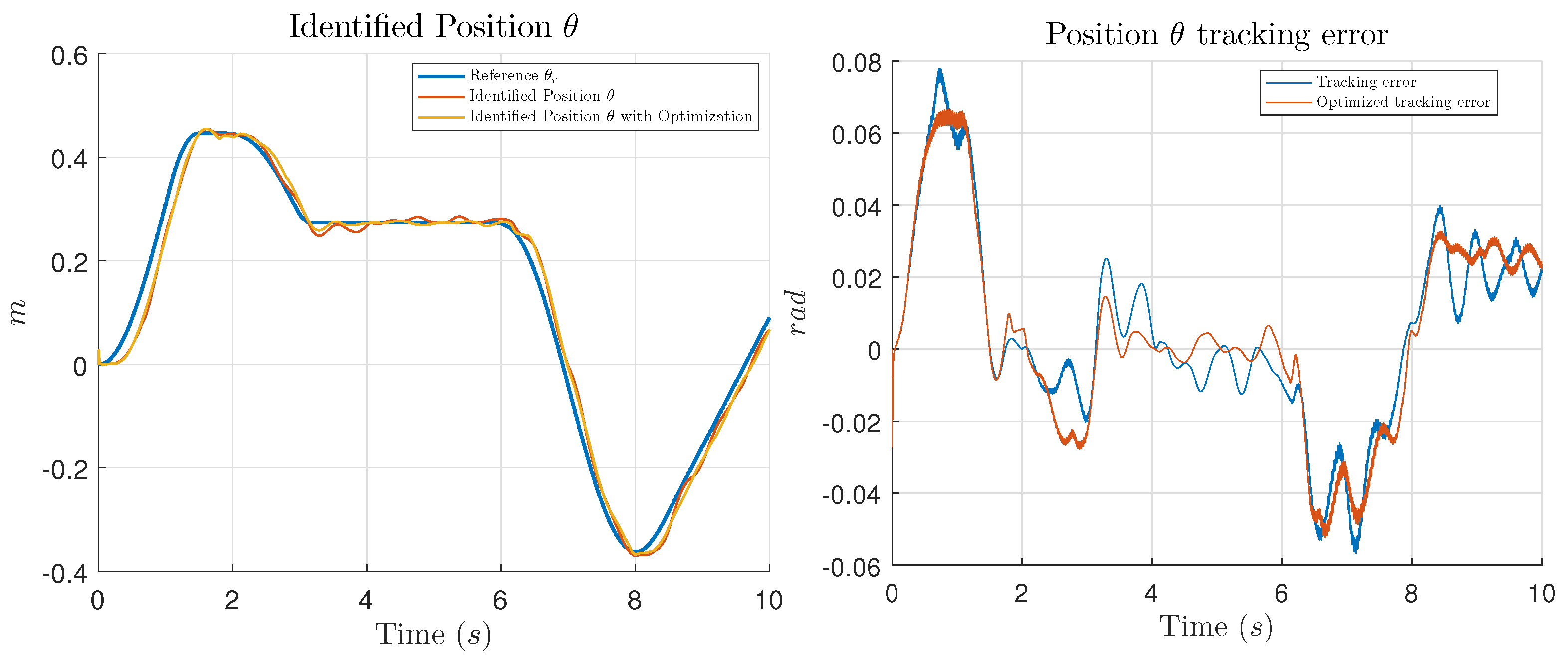

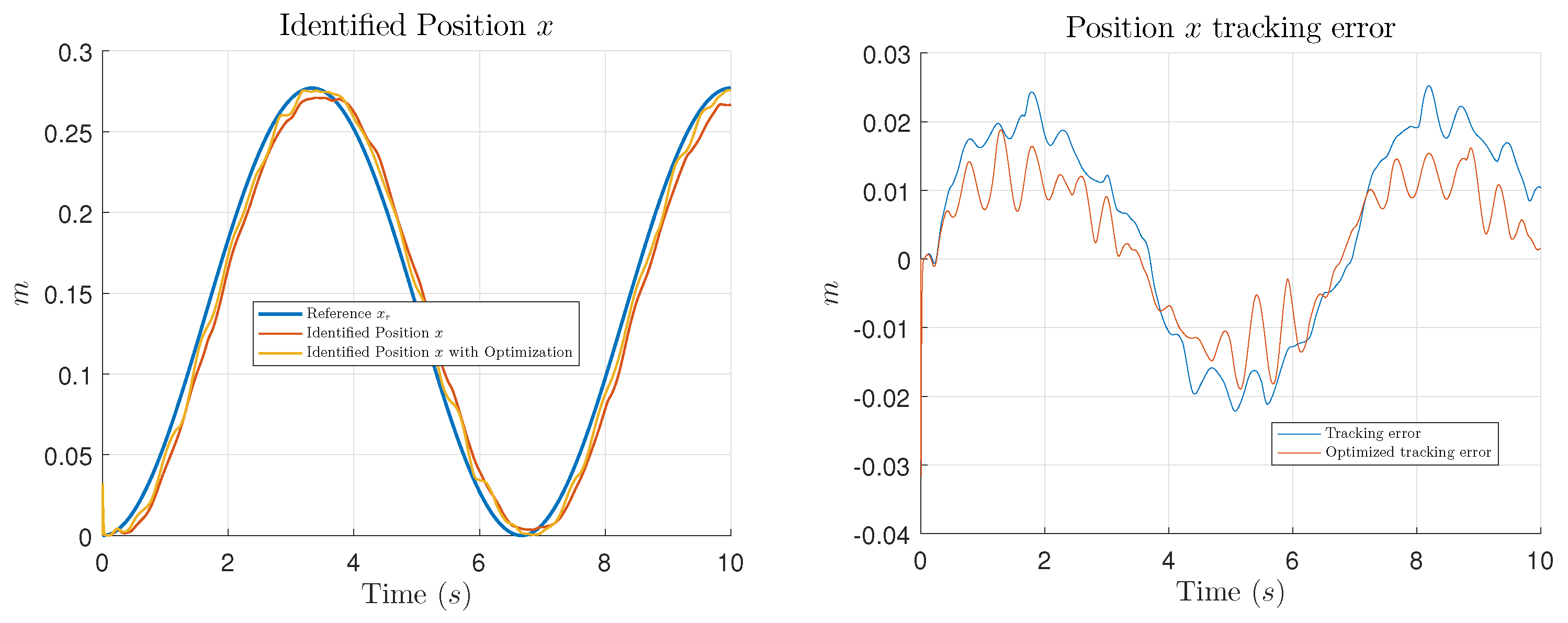

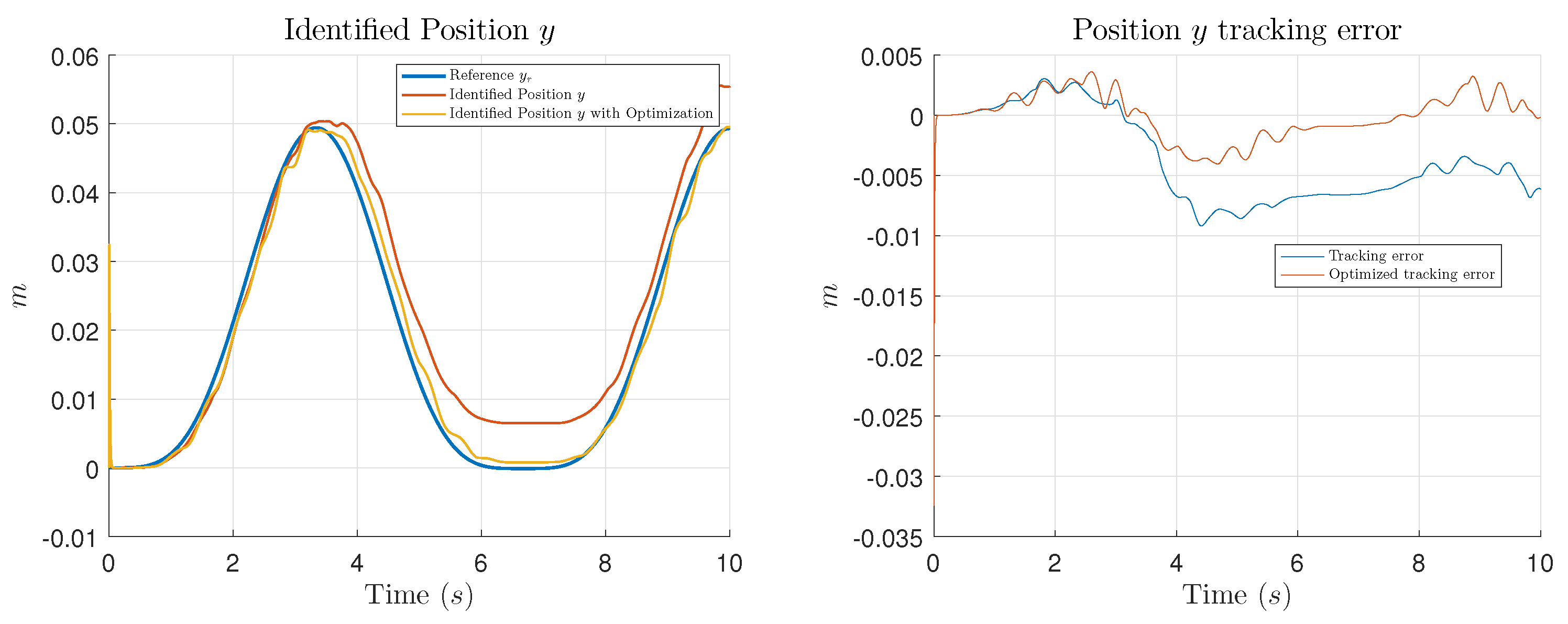

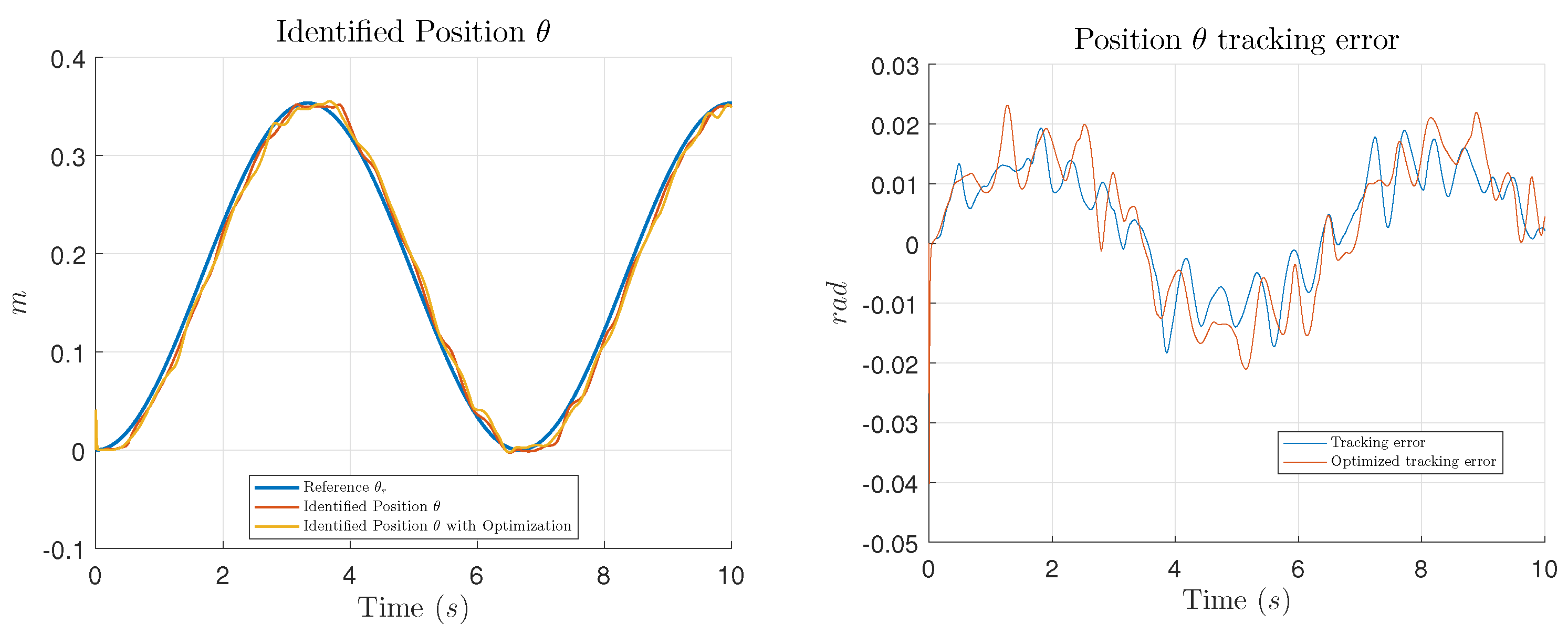

The main contribution of this work is the introduction of an optimization process using GCO to find appropriate values for the controller parameters that minimize the tracking error of the system to be controlled. The performance of the optimization is shown through simulation and experimental tests that compare the trajectory tracking results of the NIOC with parameters selected by the designer against the results with parameters given by the GCO algorithm.
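
The idea of scoring a controller parameter by its tracking error can be sketched as follows. The toy first-order loop, the single gain parameter and the mean squared error criterion are assumptions for illustration only; they stand in for the NIOC scheme, the tracked robot and the cost actually used in this work.

```python
def simulate_toy_loop(gain, reference, x0=0.0):
    """Hypothetical closed loop: the state moves toward the reference by `gain`."""
    state, outputs = x0, []
    for target in reference:
        outputs.append(state)
        state = state + gain * (target - state)
    return outputs


def tracking_cost(reference, outputs):
    """Mean squared tracking error between reference and output."""
    if not reference or len(reference) != len(outputs):
        raise ValueError("reference and outputs must be non-empty and equally long")
    return sum((r - y) ** 2 for r, y in zip(reference, outputs)) / len(reference)


def cost_of_gain(gain, reference):
    """Objective an optimizer would minimize over the controller parameter."""
    return tracking_cost(reference, simulate_toy_loop(gain, reference))


# Usage: compare a hand-picked gain with an alternative candidate.
reference = [1.0] * 20
designer_cost = cost_of_gain(0.5, reference)
candidate_cost = cost_of_gain(1.0, reference)
```

An optimizer only needs `cost_of_gain` as a black box: it proposes parameter values, runs the simulation and keeps the values with the lowest tracking cost.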

This work is organized as follows. In Section 2, the Germinal Center Optimization algorithm is described: Section 2.1 briefly explains the vertebrate adaptive immune system, Section 2.2 details the germinal center reaction, and Section 2.3 presents the computational analogy of the germinal center reaction along with the algorithm description. Section 3 introduces the Neural Inverse Optimal Control (NIOC) scheme for this work, where Section 3.1 presents the RHONN identifier and the extended Kalman filter (EKF) training, and Section 3.1.2 discusses the design of the inverse optimal control law. Section 4 presents comparative simulation (Section 4.2) and experimental (Section 4.3) results for an application of the NIOC to an All-Terrain Tracked Robot, contrasting the selection of the controller parameters using the GCO algorithm with the classic approach, which is left completely to the designer. Conclusions of this work are included in Section 5.

2. Germinal Center Optimization

In this section, we briefly overview the principal processes in the vertebrate immune system and detail the germinal center reaction. After that, we propose the computational analogy for multi-variate optimization, with the corresponding algorithm description.

2.1. Adaptive Immune System

The vertebrate immune system (VIS) is the biological mechanism for protecting the body from AGs. There are two types of immunity: innate and adaptive. Innate immunity is composed of epithelial barriers that prevent the entrance of AGs, phagocytes that swallow AGs, FDCs that capture antigen, and natural killer (NK) lymphocytes that destroy any non-self cell.

Innate immunity is an inheritable protection that has been developed through natural selection, but a new type of AG that gets inside the body could overcome these basic protections; in this case, adaptive immunity takes over. Adaptive immunity is composed of B-cells, whose main functions are to internalize AGs for presentation and to generate ABs; Th-cells, which give a life signal to high-affinity B-cells; and cytotoxic T-cells, which kill the body's own cells that are already infected or cancerous [6].

The affinity of innate immunity is not diverse because it is coded in the germ line; on the other hand, adaptive immunity has a high diversity of affinities because its receptors are produced by somatic recombination and varied by somatic hyper-mutation [6,18].

There are two types of adaptive immune response: humoral immunity and cellular immunity. The first is based on ABs that travel in the bloodstream, attaching to every compatible particle. The B-cells compete for antigen internalization and presentation to the Th-cells, which reward them with a life signal; the B-cells then proliferate by clonal expansion and differentiate into plasma cells, releasing higher-affinity ABs into the bloodstream.

Some AGs infect the host's own cells and hide inside them; these cells are destroyed by the cellular immunity through cytotoxic T-cells. The adaptive immune response has the following features, as shown in [6]:

Specificity: The immune system produces a specific AB for a specific AG

Diversity: The immune system is capable of responding to a great variety of AGs

Memory: Using memory B-cells, the immune system is capable of fighting repeated infections

Clonal expansion: The number of lymphocytes with high affinity for certain AGs increases

Homeostasis: The immune system recovers from an infection by itself

No self-reactivity: The immune system does not attack the host body in the presence of AGs

2.2. Germinal Center

When the body does not have a specific AB for an infection, it starts the process of affinity maturation with the germinal center (GC) reaction. Germinal centers are micro-anatomical regions in the secondary lymph nodes that form in the presence of antigen [10]. The AGs that survive the other immunity mechanisms arrive at the secondary lymph nodes, where they are captured by the FDCs. The FDCs activate nearby B-cells. The active B-cells end up being enclosed by the inactive ones, forming a natural barrier that allows the active cells to proliferate, mutate and be selected.

The B-cells start to proliferate inside the GC and compete for the antigen. This competition polarizes the GC into two distinct zones, the dark zone and the light zone. The dark zone is where B-cells proliferate through clonal expansion and somatic hyper-mutation; this process ensures the diversity of ABs. The light zone, on the other hand, is where the B-cells are selected according to their affinity.

In the light zone, the B-cells must find AG and internalize it, with the final purpose of digesting the AG and exposing its peptides to the Th-cells. The Th-cells give a life signal that allows high-affinity B-cells to live longer and, therefore, to proliferate and mutate with higher success.

In Figure 1, we show a schematic summary of the process. The GC reaction ensures diversity through clonal expansion and somatic hyper-mutation in the dark zone, while the light zone hosts a competitive process that rewards the better-adapted B-cells. The B-cells re-enter the dark zone, making this process an iterative refinement of the affinity [19,20].

Finally, when the GC generates high-affinity B-cells for a certain AG, some B-cells differentiate into plasma cells and release their ABs. A few B-cells become Memory B-cells, which can live a long time and keep information about this particular AG. Thus, the GCs are capable of generating a specific AB for a specific AG and keeping this information in Memory B-cells for future infections [21].

2.3. Algorithm Description

In this section, we explain the GCO algorithm. In Table 1, we present the computational analogy between the germinal center and the optimization problem.

The GC reaction has multiple competitive processes; the GCO algorithm does not try to simulate the GC reaction per se but to use some of its competitive mechanisms. A key factor in the GC reaction is the distinction between the dark zone and the light zone. The dark zone represents the diversification of the solutions, which can be understood as a mutation process. Many algorithms, such as Differential Evolution and Genetic Algorithms [1], already include the idea of mutation, but the dark zone includes not only a mutation process (somatic hyper-mutation) but also clonal expansion. This clonal expansion is guided by the life signal of the B-cell, denoted by

. The B-cells with greater life signal are more likely to clone, increasing the B-cell multiplicity.

In the light zone, B-cells with the best affinity are rewarded and the other cells age (their life signal is lowered). Thus, the affinity-based selection in the light zone changes the probability of cloning or death of a B-cell. In Figure 2, we present the GCO algorithm flowchart, where “For each B-cell” indicates that the process is applied to every candidate solution.

As we are dealing with a population-based algorithm [1], there is a particular interest in how the cells mutate and in which information we use to cross over particles. In the GCO algorithm, we use the distribution of the cell multiplicity, denoted by

, to select three individuals for crossover. It is important to note that initially all the cells have a multiplicity of one, so the individuals are selected uniformly, but this distribution changes through the iterations, shaped by the competitive process. This kind of distribution offers different types of leadership behavior in the collective intelligence; for example, initially the GCO algorithm behaves like Differential Evolution with the DE/rand/1 strategy [1], and when a particle wins for many iterations, the algorithm behaves like Particle Swarm Optimization [1]. However, the leadership in GCO is not only dynamic but also temporal: when a particle mutates to a better solution, the new candidate replaces the current particle and the cell multiplicity is reset to one.
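
The shift from uniform selection to a temporary leader can be made concrete with a small Python sketch. It assumes that the selection probability of a cell is its multiplicity divided by the total multiplicity; the function names and the example counts are hypothetical.

```python
def selection_probabilities(multiplicity):
    """Probability of picking each cell, proportional to its multiplicity."""
    total = sum(multiplicity)
    return [count / total for count in multiplicity]


def reset_on_improvement(multiplicity, index):
    """Return a copy in which the improved cell starts again with one copy."""
    updated = list(multiplicity)
    updated[index] = 1
    return updated


# Initially every cell has multiplicity one: selection is uniform,
# as in the DE/rand/1 strategy.
initial = selection_probabilities([1, 1, 1, 1])   # [0.25, 0.25, 0.25, 0.25]

# After cell 0 has cloned for several iterations it dominates the selection.
leader = selection_probabilities([7, 1, 1, 1])    # [0.7, 0.1, 0.1, 0.1]

# When cell 0 is replaced by a better mutant its multiplicity is reset,
# so its leadership is only temporary.
after_reset = selection_probabilities(reset_on_improvement([7, 1, 1, 1], 0))
```

In this reading, the leader emerges from the competition and loses its advantage as soon as it is replaced, which is what distinguishes temporal leadership from following a fixed global best.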

Thus, the GCO algorithm offers a bio-inspired technique of adaptive leadership in collective intelligence algorithms for multivariate optimization problems. Algorithm 1 gives explicit pseudocode; its notation covers the i-th B-cell, the mutant B-cell M (a new candidate solution), the mutation factor, the cross-ratio, the distribution of cell multiplicity, and the life signal.

| Algorithm 1: GCO algorithm |

![Applsci 08 00031 i001]() |
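
The following Python sketch is one possible reading of the mechanisms described in this section (life-signal-guided cloning, multiplicity-weighted selection of three cells, DE-style mutation with crossover, and reward and aging in the light zone). It is not a transcription of Algorithm 1: the function names, the initial life signal of 70, the aging step of 10, the rule that a cell loses one copy when it fails to clone, and the reset of the life signal after an improvement are all assumptions made for illustration.

```python
import random


def pick_three(multiplicity, rng):
    """Draw three distinct cell indices, weighted by cell multiplicity."""
    weights = list(multiplicity)
    picked = []
    for _ in range(3):
        index = rng.choices(range(len(weights)), weights=weights, k=1)[0]
        picked.append(index)
        weights[index] = 0  # exclude this cell from the remaining draws
    return picked


def gco_minimize(cost, bounds, n_cells=10, iterations=200,
                 factor=0.8, cross_ratio=0.7, seed=0):
    """Minimize `cost` over box `bounds`; returns (best_cell, best_cost)."""
    if n_cells < 3:
        raise ValueError("at least three B-cells are needed for crossover")
    rng = random.Random(seed)
    dim = len(bounds)
    cells = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_cells)]
    costs = [cost(cell) for cell in cells]
    initial_life = 70.0                  # assumed value, on a 0..100 scale
    life = [initial_life] * n_cells
    multiplicity = [1] * n_cells

    for _ in range(iterations):
        # Dark zone: clonal expansion guided by the life signal, then mutation.
        for i in range(n_cells):
            if rng.uniform(0.0, 100.0) < life[i]:
                multiplicity[i] += 1
            elif multiplicity[i] > 1:
                multiplicity[i] -= 1     # assumed: one clone dies
            r1, r2, r3 = pick_three(multiplicity, rng)
            forced = rng.randrange(dim)  # at least one coordinate is mutated
            mutant = list(cells[i])
            for d in range(dim):
                if d == forced or rng.random() < cross_ratio:
                    lo, hi = bounds[d]
                    value = cells[r1][d] + factor * (cells[r2][d] - cells[r3][d])
                    mutant[d] = min(max(value, lo), hi)
            mutant_cost = cost(mutant)
            if mutant_cost < costs[i]:
                # The better candidate replaces the cell; multiplicity resets.
                cells[i], costs[i] = mutant, mutant_cost
                multiplicity[i] = 1
                life[i] = initial_life   # assumed reset of the life signal
        # Light zone: every cell ages; better cells regain more life signal.
        best, worst = min(costs), max(costs)
        for i in range(n_cells):
            life[i] -= 10.0
            if worst > best:
                life[i] += 10.0 * (worst - costs[i]) / (worst - best)
            life[i] = min(max(life[i], 0.0), 100.0)

    best_index = min(range(n_cells), key=costs.__getitem__)
    return cells[best_index], costs[best_index]


# Usage on a toy problem: minimize the sphere function in two dimensions.
best_cell, best_cost = gco_minimize(lambda x: sum(v * v for v in x),
                                    [(-5.0, 5.0), (-5.0, 5.0)])
```

Because a cell is only replaced by a better mutant, the best cost never increases from one iteration to the next; the constants above would need tuning for any real problem.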