A Mixed Property-Based Automatic Shadow Detection Approach for VHR Multispectral Remote Sensing Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Property Analysis

2.1.1. Analysis of Chromaticity and Luminance

- (1)

- (2) Lower intensity, because direct sunlight is obstructed: shadowed areas are illuminated only by the ambient component of the light source, whereas nonshadow regions are illuminated by both the ambient and the diffuse components.

- (3) A larger difference between the hue and intensity components in shadow regions than in nonshadow regions.
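The luminance and chromaticity properties above can be illustrated on single pixels. The sketch below is a hypothetical illustration, not the authors' MPSI index: it assumes reflectances already normalized to [0, 1], converts a pixel to hue and intensity with the textbook RGB-to-HSI formulas (as in Gonzalez and Woods), and computes hue minus intensity as a feature that properties (2) and (3) suggest should be larger in shadow. The function names and the two sample pixels are made up; the shadow pixel is dark and bluish because shadows are lit mainly by blue-rich skylight.

```python
import math


def rgb_to_hue_intensity(r: float, g: float, b: float) -> tuple[float, float]:
    """Return (hue, intensity) of one pixel, both scaled to [0, 1].

    Assumes r, g, b are already normalized to [0, 1] and uses the
    textbook RGB-to-HSI conversion (hue from the arccos formula).
    """
    intensity = (r + g + b) / 3.0
    num = 0.5 * ((r - g) + (r - b))
    # The radicand is never negative mathematically; clamp tiny float errors.
    den = math.sqrt(max(0.0, (r - g) ** 2 + (r - b) * (g - b)))
    if den < 1e-12:
        # Achromatic (gray) pixel: hue is undefined, use 0 by convention.
        return 0.0, intensity
    theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
    hue_deg = theta if b <= g else 360.0 - theta
    return hue_deg / 360.0, intensity


def hue_minus_intensity(r: float, g: float, b: float) -> float:
    """Hypothetical per-pixel feature: expected to be larger in shadow."""
    hue, intensity = rgb_to_hue_intensity(r, g, b)
    return hue - intensity


if __name__ == "__main__":
    # Made-up sample pixels: a dark bluish shadow pixel and a bright sunlit one.
    shadow = (0.10, 0.10, 0.30)
    sunlit = (0.70, 0.60, 0.50)
    for name, px in (("shadow", shadow), ("sunlit", sunlit)):
        hue, inten = rgb_to_hue_intensity(*px)
        print(name, round(hue, 3), round(inten, 3), round(hue_minus_intensity(*px), 3))
```

With these made-up values the shadow pixel has the lower intensity (about 0.17 versus 0.60) and the larger hue-minus-intensity value (0.50 versus about -0.52), in line with properties (2) and (3). Because hue is circular, reddish pixels can land near either 0 or 1, so a practical index would need to account for that wrap-around.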

2.1.2. Analysis of Multispectral Bands

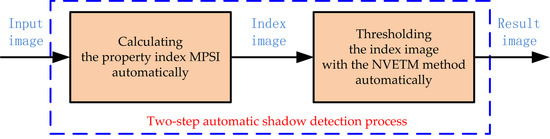

2.2. Proposed Shadow Detection Approach

2.3. Threshold Method

3. Results

4. Discussion

4.1. Subjective Assessment

4.1.1. Assessment of Test Image A

4.1.2. Assessment of Test Image B

4.1.3. Assessment of Test Image C

4.1.4. Comparison of Our Proposal across Test Images A, B, and C

4.2. Objective Assessment

4.2.1. Assessment of Test Image A

4.2.2. Assessment of Test Image B

4.2.3. Assessment of Test Image C

4.2.4. Comparison of Our Proposal across Test Images A, B, and C

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Murthy, K.; Shearn, M.; Smiley, B.D.; Chau, A.H.; Levine, J.; Robinson, M.D. SkySat-1: Very high-resolution imagery from a small satellite. In Proceedings of the Sensors, Systems, and Next-Generation Satellites XVII, Amsterdam, The Netherlands, 22–25 September 2014.

2. Qu, H.S.; Zhang, Y.; Jin, G. Improvement of performance for CMOS area image sensors by TDI algorithm in digital domain. Opt. Precis. Eng. 2010, 18, 1896–1903.

3. Lan, T.J.; Xue, X.C.; Li, J.L.; Han, C.S.; Long, K.H. A high-dynamic-range optical remote sensing imaging method for digital TDI CMOS. Appl. Sci. 2017, 7, 1089.

4. Fauvel, M.; Chanussot, J.; Benediktsson, J.A. A spatial-spectral kernel-based approach for the classification of remote-sensing images. Pattern Recognit. 2012, 45, 381–392.

5. Marcello, J.; Medina, A.; Eugenio, F. Evaluation of spatial and spectral effectiveness of pixel-level fusion techniques. IEEE Geosci. Remote Sens. Lett. 2013, 10, 432–436.

6. Eugenio, F.; Marcello, J.; Martin, J. High-resolution maps of bathymetry and benthic habitats in shallow-water environments using multispectral remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3539–3549.

7. Martin, J.; Eugenio, F.; Marcello, J.; Medina, A. Automatic sun glint removal of multispectral high-resolution WorldView-2 imagery for retrieving coastal shallow water parameters. Remote Sens. 2016, 8, 37.

8. Marcello, J.; Eugenio, F.; Perdomo, U.; Medina, A. Assessment of atmospheric algorithms to retrieve vegetation in natural protected areas using multispectral high resolution imagery. Sensors 2016, 16, 1624.

9. Zhao, J.; Zhong, Y.F.; Shu, H.; Zhang, L.P. High-resolution image classification integrating spectral-spatial-location cues by conditional random fields. IEEE Trans. Image Process. 2016, 25, 4033–4045.

10. Huang, S.Y.; Miao, Y.X.; Yuan, F.; Gnyp, M.L.; Yao, Y.K.; Cao, Q.; Wang, H.Y.; Lenz-Wiedemann, V.I.; Bareth, G. Potential of RapidEye and WorldView-2 satellite data for improving rice nitrogen status monitoring at different growth stages. Remote Sens. 2017, 9, 227.

11. Tsai, V.J. A comparative study on shadow compensation of color aerial images in invariant color models. IEEE Trans. Geosci. Remote Sens. 2006, 44, 1661–1671.

12. Khekade, A.; Bhoyar, K. Shadow detection based on RGB and YIQ color models in color aerial images. In Proceedings of the 1st International Conference on Futuristic Trend in Computational Analysis and Knowledge Management (ABLAZE 2015), Greater Noida, India, 25–27 February 2015.

13. Liu, J.H.; Fang, T.; Li, D.R. Shadow detection in remotely sensed images based on self-adaptive feature selection. IEEE Trans. Geosci. Remote Sens. 2011, 49, 5092–5103.

14. Chen, H.S.; He, H.; Xiao, H.Y.; Huang, J. Shadow detection in high spatial resolution remote sensing images based on spectral features. Opt. Precis. Eng. 2015, 23, 484–490.

15. Kim, D.S.; Arsalan, M.; Park, K.R. Convolutional neural network-based shadow detection in images using visible light camera sensor. Sensors 2018, 18, 960.

16. Schläpfer, D.; Hueni, A.; Richter, R. Cast shadow detection to quantify the aerosol optical thickness for atmospheric correction of high spatial resolution optical imagery. Remote Sens. 2018, 10, 200.

17. Wu, J.; Bauer, M.E. Evaluating the effects of shadow detection on QuickBird image classification and spectroradiometric restoration. Remote Sens. 2013, 5, 4450–4469.

18. Ma, H.J.; Qin, Q.M.; Shen, X.Y. Shadow segmentation and compensation in high resolution satellite images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2008), Boston, MA, USA, 7–11 July 2008.

19. Mostafa, Y.; Abdelhafiz, A. Accurate shadow detection from high-resolution satellite images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 494–498.

20. Besheer, M.; Abdelhafiz, A. Modified invariant color model for shadow detection. Int. J. Remote Sens. 2015, 36, 6214–6223.

21. Arevalo, V.; Gonzalez, J.; Ambrosio, G. Shadow detection in color high-resolution satellite images. Int. J. Remote Sens. 2008, 29, 1945–1963.

22. Kang, X.D.; Huang, Y.F.; Li, S.T.; Lin, H.; Benediktsson, J.A. Extended random walker for shadow detection in very high resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2018, 55, 867–876.

23. Wang, Q.J.; Yan, L.; Yuan, Q.Q.; Ma, Z.L. An automatic shadow detection method for VHR remote sensing orthoimagery. Remote Sens. 2017, 9, 469.

24. Li, J.Y.; Hu, Q.W.; Ai, M.Y. Joint model and observation cues for single-image shadow detection. Remote Sens. 2016, 8, 484.

25. Salvador, E.; Cavallaro, A.; Ebrahimi, T. Cast shadow segmentation using invariant color features. Comput. Vis. Image Understand. 2004, 95, 238–259.

26. Huang, J.J.; Xie, W.X.; Tang, L. Detection of and compensation for shadows in colored urban aerial images. In Proceedings of the 5th World Congress on Intelligent Control and Automation, Hangzhou, China, 15–19 June 2004.

27. Song, H.H.; Huang, B.; Zhang, K.H. Shadow detection and reconstruction in high-resolution satellite images via morphological filtering and example-based learning. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2545–2554.

28. Zhang, H.Y.; Sun, K.M.; Li, W.Z. Object-oriented shadow detection and removal from urban high-resolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6972–6982.

29. Chung, K.L.; Lin, Y.R.; Huang, Y.H. Efficient shadow detection of color aerial images based on successive thresholding scheme. IEEE Trans. Geosci. Remote Sens. 2009, 47, 671–682.

30. Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

31. Sarabandi, P.; Yamazaki, F.; Matsuoka, M.; Kiremidjian, A. Shadow detection and radiometric restoration in satellite high resolution images. In Proceedings of the 2004 IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2004), Anchorage, AK, USA, 20–24 September 2004.

32. Gevers, T.; Smeulders, A.W. Color-based object recognition. Pattern Recognit. 1999, 32, 453–464.

33. Fan, J.L.; Lei, B. A modified valley-emphasis method for automatic thresholding. Pattern Recognit. Lett. 2012, 33, 703–708.

34. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Publishing House of Electronics Industry: Beijing, China, 2010; pp. 58–65. ISBN 978-7-121-10207-3.

35. Phong, B.T. Illumination for computer generated pictures. Commun. ACM 1975, 18, 311–317.

36. Shafer, S.A. Using color to separate reflection components. Color Res. Appl. 1985, 10, 210–218.

37. Gevers, T.; Smeulders, A.W. PicToSeek: Combining color and shape invariant features for image retrieval. IEEE Trans. Image Process. 2000, 9, 102–119.

38. Sun, J.B. Principles and Applications of Remote Sensing, 3rd ed.; Wuhan University Press: Wuhan, China, 2013; pp. 18–21, 220–222. ISBN 978-7-307-10761-8.

39. Janesick, B.J. Dueling Detectors. SPIE Newsroom 2002, 30–33.

40. DG2017_WorldView-3_DS. Available online: https://dg-cms-uploads-production.s3.amazonaws.com/uploads/document/file/95/DG2017_WorldView-3_DS.pdf (accessed on 25 July 2018).

41. Ng, H.F. Automatic thresholding for defect detection. In Proceedings of the Third International Conference on Image and Graphics (ICIG’04), Hong Kong, China, 18–20 December 2004.

42. Story, M.; Congalton, R.G. Accuracy assessment: A user’s perspective. Photogramm. Eng. Remote Sens. 1986, 52, 397–399.

43. Congalton, R.G.; Green, K. Assessing the Accuracy of Remotely Sensed Data: Principles and Practices, 2nd ed.; CRC Press: Boca Raton, FL, USA, 1999; pp. 56–61. ISBN 0-87371-986-7.

| Method | PA (%) | SP (%) | EO (%) | EC (%) | OA (%) |
|---|---|---|---|---|---|
| SRI [11] | 75.40 | 92.98 | 24.60 | 7.02 | 83.28 |
| NSVDI [18] | 94.29 | 81.52 | 5.71 | 18.48 | 88.56 |
| MC3 [20] | 86.28 | 73.06 | 13.72 | 26.94 | 80.35 |
| SI [14] | 62.64 | 97.03 | 37.36 | 2.97 | 78.07 |
| SDI [19] | 96.18 | 86.16 | 3.82 | 13.84 | 91.69 |
| MPSI | 95.78 | 94.77 | 4.22 | 5.23 | 95.33 |

| Method | PA (%) | SP (%) | EO (%) | EC (%) | OA (%) |
|---|---|---|---|---|---|
| SRI [11] | 97.16 | 68.75 | 2.84 | 31.25 | 86.94 |
| NSVDI [18] | 99.94 | 11.15 | 0.06 | 88.85 | 68.01 |
| MC3 [20] | 79.20 | 88.52 | 20.80 | 11.48 | 82.55 |
| SI [14] | 62.08 | 93.70 | 37.92 | 6.30 | 73.45 |
| SDI [19] | 94.99 | 90.21 | 5.01 | 9.79 | 93.27 |
| MPSI | 95.63 | 91.76 | 4.37 | 8.24 | 94.24 |

| Method | PA (%) | SP (%) | EO (%) | EC (%) | OA (%) |
|---|---|---|---|---|---|
| SRI [11] | 98.88 | 28.69 | 1.12 | 71.31 | 75.60 |
| NSVDI [18] | 99.72 | 24.87 | 0.28 | 75.13 | 74.89 |
| MC3 [20] | 84.52 | 76.17 | 15.48 | 23.83 | 81.75 |
| SI [14] | 64.19 | 96.94 | 35.81 | 3.06 | 75.06 |
| SDI [19] | 86.68 | 94.45 | 13.32 | 5.55 | 89.26 |
| MPSI | 97.19 | 92.10 | 2.81 | 7.90 | 95.50 |
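The five accuracy columns can be related through a binary confusion matrix. As a hedged sketch, assuming PA is the producer's accuracy of the shadow class, SP the specificity, and EO and EC their complements (an assumption the tables support, since PA + EO and SP + EC equal 100% in every row), the code below computes all five scores from flat boolean masks. It also uses the identity OA = p * PA + (1 - p) * SP, where p is the fraction of reference pixels labeled shadow, to back-calculate p from a table row. The class and function names are illustrative, not taken from the paper.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ShadowScores:
    pa: float  # producer's accuracy of the shadow class, TP / (TP + FN), in %
    sp: float  # specificity, TN / (TN + FP), in %
    eo: float  # error of omission, FN / (TP + FN) = 100 - PA, in %
    ec: float  # error of commission as the tables imply, FP / (TN + FP) = 100 - SP, in %
    oa: float  # overall accuracy, (TP + TN) / N, in %


def shadow_scores(pred: Sequence[bool], ref: Sequence[bool]) -> ShadowScores:
    """Score a predicted shadow mask against a reference mask (True = shadow)."""
    if len(pred) != len(ref):
        raise ValueError("masks must contain the same number of pixels")
    tp = sum(1 for p, r in zip(pred, ref) if p and r)
    fn = sum(1 for p, r in zip(pred, ref) if r and not p)
    fp = sum(1 for p, r in zip(pred, ref) if p and not r)
    tn = len(ref) - tp - fn - fp  # pixels that are nonshadow in both masks
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("reference mask needs both shadow and nonshadow pixels")
    pa = 100.0 * tp / (tp + fn)
    sp = 100.0 * tn / (tn + fp)
    oa = 100.0 * (tp + tn) / len(ref)
    return ShadowScores(pa=pa, sp=sp, eo=100.0 - pa, ec=100.0 - sp, oa=oa)


def implied_shadow_fraction(pa: float, sp: float, oa: float) -> float:
    """Solve OA = p * PA + (1 - p) * SP for p, the reference shadow fraction."""
    if pa == sp:
        raise ValueError("p is undetermined when PA equals SP")
    return (sp - oa) / (sp - pa)


if __name__ == "__main__":
    pred = [True, True, True, False, False, False, False, True]
    ref = [True, True, False, True, False, False, False, False]
    print(shadow_scores(pred, ref))
    # SRI row of the first table: PA = 75.40, SP = 92.98, OA = 83.28
    print(round(implied_shadow_fraction(75.40, 92.98, 83.28), 3))
```

Back-calculating p from each row of the first table gives roughly 0.55 for every method, which is what one would expect if all methods were scored against the same reference mask; this is an inference from the published numbers under the assumed definitions, not a value reported by the authors.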

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Han, H.; Han, C.; Xue, X.; Hu, C.; Huang, L.; Li, X.; Lan, T.; Wen, M. A Mixed Property-Based Automatic Shadow Detection Approach for VHR Multispectral Remote Sensing Images. Appl. Sci. 2018, 8, 1883. https://doi.org/10.3390/app8101883
