Transfer Learning with Deep Recurrent Neural Networks for Remaining Useful Life Estimation

Abstract

1. Introduction

- (1) We developed a bidirectional LSTM (BLSTM) recurrent neural network model for RUL prediction;

- (2) We proposed, and demonstrated for the first time, that a transfer learning-based prognostic model can improve RUL estimation by making full use of datasets that are different from, but related to, the target dataset;

- (3) We showed that datasets from a single working condition can be used to improve RUL prediction under mixed working conditions, while the opposite is not true. This offers practical guidance for real-world applications in which samples from certain working conditions are hard to obtain.

2. Methods

2.1. The Turbofan Engine RUL Prediction Problem and the C-MAPSS Datasets

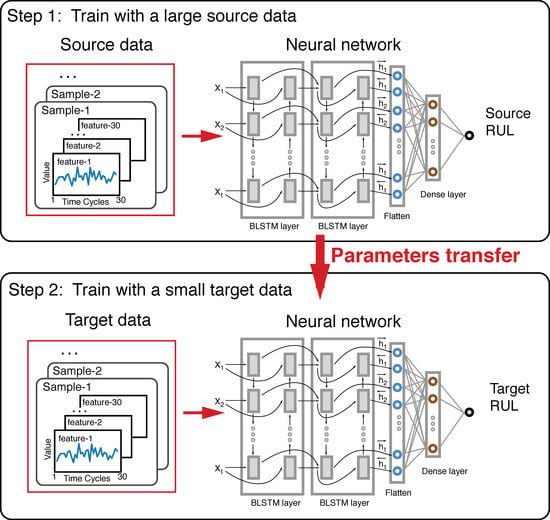

2.2. Transfer Learning for RUL Prediction

2.3. The Transfer Learning Framework for RUL Prediction

2.3.1. The BLSTM Neural Networks

2.3.2. Input Data and Parameter Settings

2.3.3. Evaluation
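The results table reports RMSE and a mean score for each experiment. Below is a minimal sketch of both metrics. It assumes the asymmetric scoring function defined for the PHM08 data challenge, which is commonly used with C-MAPSS (constant 13 for early predictions, 10 for late ones). The `mean_score` helper divides the summed score by the number of test engines. That is a hypothetical reading of the "Mean Score" column, not a definition confirmed by the text.

```python
import math
from typing import Sequence


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root-mean-square error between true and predicted RUL values."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((p - t) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def phm08_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Summed asymmetric score (assumed PHM08 form); lower is better."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for t, p in zip(y_true, y_pred):
        d = p - t  # d > 0: the model predicts failure later than it actually occurs
        # Early predictions (d < 0) use the gentler constant 13, late ones use 10.
        total += math.exp(-d / 13.0) - 1.0 if d < 0 else math.exp(d / 10.0) - 1.0
    return total


def mean_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Hypothetical per-engine average of the score (one reading of "Mean Score")."""
    return phm08_score(y_true, y_pred) / len(y_true)


if __name__ == "__main__":
    y_true = [112.0, 98.0, 69.0]  # illustrative values, not from the paper
    y_pred = [105.0, 103.0, 69.0]
    print(rmse(y_true, y_pred), mean_score(y_true, y_pred))
```

Late predictions are penalized exponentially faster than early ones, so the score favors conservative RUL estimates. RMSE, by contrast, treats both error directions symmetrically.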

2.4. Data Preprocessing

2.4.1. Data Normalization

2.4.2. Operating Conditions
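The dataset table shows that FD002 and FD004 mix six operating conditions, while FD001 and FD003 each have one. Below is a minimal sketch of condition-wise z-score normalization of a single sensor channel. It assumes each cycle has already been labeled with an operating-condition id. The function name and that labeling step are hypothetical, and the text here does not state which normalization scheme the authors used.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, List, Sequence, Tuple


def normalize_per_condition(values: Sequence[float], conditions: Sequence[int]) -> List[float]:
    """Z-score one sensor channel separately within each operating condition."""
    if len(values) != len(conditions):
        raise ValueError("values and conditions must have the same length")
    groups: Dict[int, List[float]] = defaultdict(list)
    for v, c in zip(values, conditions):
        groups[c].append(v)
    # Per-condition (mean, population std) computed from the samples given.
    stats: Dict[int, Tuple[float, float]] = {c: (mean(vs), pstdev(vs)) for c, vs in groups.items()}
    out: List[float] = []
    for v, c in zip(values, conditions):
        mu, sigma = stats[c]
        # A channel that is constant within a condition carries no signal there; map it to 0.
        out.append(0.0 if sigma == 0 else (v - mu) / sigma)
    return out


if __name__ == "__main__":
    # Hypothetical readings from two operating conditions with very different offsets.
    print(normalize_per_condition([10.0, 12.0, 100.0, 104.0], [0, 0, 1, 1]))
```

In practice, the per-condition means and standard deviations would be estimated on training data only and then reused for the test trajectories. For brevity, the sketch computes them on whatever data it is given.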

2.4.3. RUL Target Function
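Below is a minimal sketch of a piecewise-linear RUL target, the form widely attributed to Heimes (2008) for C-MAPSS. The label decreases linearly to zero at failure, but it is clipped at a maximum value during the early, healthy part of a trajectory. The cap of 130 cycles is an assumed, hypothetical default, not a value stated here.

```python
from typing import List


def piecewise_rul(total_cycles: int, max_rul: int = 130) -> List[int]:
    """Piecewise-linear RUL labels for one run-to-failure training trajectory.

    max_rul=130 is a hypothetical cap chosen for illustration.
    """
    if total_cycles <= 0:
        raise ValueError("total_cycles must be positive")
    # Cycle c (1-based) has linear RUL total_cycles - c (0 at the failure cycle);
    # early cycles are clipped to max_rul to model a roughly constant healthy phase.
    return [min(total_cycles - c, max_rul) for c in range(1, total_cycles + 1)]


if __name__ == "__main__":
    labels = piecewise_rul(206)
    print(labels[:3], labels[-3:])
```

Consider a trajectory of 206 cycles, the average FD001 life span in the dataset table. Its first 76 labels are clipped to 130, and the rest fall linearly to 0.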

3. Experiments and Results

4. Discussion

4.1. How Working Conditions Affect Transfer Learning Performance

4.2. Negative Transfer

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Dataset | FD001 | FD002 | FD003 | FD004 |
|---|---|---|---|---|
| Train trajectories | 100 | 260 | 100 | 249 |
| Test trajectories | 100 | 259 | 100 | 248 |
| Maximum life span (cycles) | 362 | 378 | 525 | 543 |
| Average life span (cycles) | 206 | 206 | 247 | 245 |
| Minimum life span (cycles) | 128 | 128 | 145 | 128 |
| Operating conditions | 1 | 6 | 1 | 6 |
| Fault conditions | 1 | 1 | 2 | 2 |

| Label | Transfer (Source → Target) | Operating Conditions | Fault Conditions |
|---|---|---|---|
| E1 | FD001, no transfer | 1 | 1 |
| E2 | FD003→FD001 | 1→1 | 2→1 |
| E3 | FD002→FD001 | 6→1 | 1→1 |
| E4 | FD002, no transfer | 6 | 1 |
| E5 | FD004→FD002 | 6→6 | 2→1 |
| E6 | FD001→FD002 | 1→6 | 1→1 |
| E7 | FD003, no transfer | 1 | 2 |
| E8 | FD001→FD003 | 1→1 | 1→2 |
| E9 | FD004→FD003 | 6→1 | 2→2 |
| E10 | FD004, no transfer | 6 | 2 |
| E11 | FD002→FD004 | 6→6 | 1→2 |
| E12 | FD003→FD004 | 1→6 | 2→2 |
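Each transferred experiment in the table above trains on a source dataset and evaluates on a target dataset. Below is a minimal sketch of the pretrain-then-fine-tune pattern. It assumes parameter transfer: the target model is initialized with source-trained weights and then trained briefly on target data. A toy linear regressor trained by gradient descent on synthetic data stands in for the BLSTM. The data, learning rate, and step counts are all hypothetical.

```python
from typing import List, Tuple

Params = Tuple[float, float]  # (slope w, intercept b) of a toy linear model


def mse(params: Params, xs: List[float], ys: List[float]) -> float:
    """Mean squared error of the linear model w * x + b."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(params: Params, xs: List[float], ys: List[float],
          lr: float = 0.1, steps: int = 5) -> Params:
    """Full-batch gradient descent on the mean squared error."""
    w, b = params
    n = len(xs)
    for _ in range(steps):
        residuals = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n
        grad_b = 2.0 * sum(residuals) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


xs = [0.0, 0.25, 0.5, 0.75, 1.0]
source_ys = [2.0 * x + 1.0 for x in xs]  # synthetic "source" task
target_ys = [2.2 * x + 1.0 for x in xs]  # related synthetic "target" task

# 1) Pretrain on the source task until (nearly) converged.
source_params = train((0.0, 0.0), xs, source_ys, steps=2000)
# 2) Transfer: start the target model from the source weights and fine-tune briefly.
finetuned = train(source_params, xs, target_ys, steps=5)
# 3) Baseline ("no transfer"): same short budget, starting from zero weights.
scratch = train((0.0, 0.0), xs, target_ys, steps=5)

if __name__ == "__main__":
    print("fine-tuned target MSE:", mse(finetuned, xs, target_ys))
    print("from-scratch target MSE:", mse(scratch, xs, target_ys))
```

Both target runs get the same five-step budget. The model started from source weights ends with a lower target MSE than the one started from zero. This is the same kind of effect that the IMP rows of the results table quantify for the real models.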

| Label | Transfer (Source → Target) / IMP | Mean Score 10 | Mean Score 20 | Mean Score 30 | Mean Score 40 | Mean Score 50 | Mean Score 60 | Mean Score 70 | Mean Score 80 | Mean Score 90 | Mean Score 100 | RMSE 10 | RMSE 20 | RMSE 30 | RMSE 40 | RMSE 50 | RMSE 60 | RMSE 70 | RMSE 80 | RMSE 90 | RMSE 100 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| E1 | FD001, no transfer | 21.71 | 10.06 | 12.35 | 4.17 | 3.07 | 2.9 | 2.76 | 2.65 | 2.54 | 2.65 | 26.36 | 22.07 | 21.18 | 16.62 | 15.12 | 15.06 | 14.64 | 14.62 | 14.2 | 14.26 |
| E2 | FD003→FD001 | 3.23 | 3.45 | 2.67 | 2.63 | 2.37 | 2.42 | 2.37 | 2.36 | 2.26 | 2.24 | 15.79 | 16.17 | 14.4 | 14.61 | 13.69 | 14.02 | 14.11 | 14.08 | 13.73 | 13.65 |
| | IMP (%) | 85.12 | 65.7 | 78.38 | 36.9 | 23.01 | 16.41 | 13.97 | 11.15 | 10.9 | 15.65 | 40.09 | 26.7 | 31.99 | 12.12 | 9.41 | 6.88 | 3.63 | 3.66 | 3.31 | 4.22 |
| E3 | FD002→FD001 | 43.36 | 46.51 | 7.2 | 3.84 | 5.39 | 3.52 | 6.55 | 17.38 | 3.82 | 4.3 | 39.89 | 40.89 | 21.05 | 17.15 | 18.94 | 16.45 | 19.78 | 26.73 | 17.24 | 18.3 |
| | IMP (%) | −99.7 | −362 | 41.69 | 7.77 | −75.3 | −21.4 | −137 | −555 | −50.4 | −62.2 | −51.3 | −85.3 | 0.6 | −3.19 | −25.3 | −9.21 | −35.2 | −82.9 | −21.4 | −28.4 |
| E4 | FD002, no transfer | 23.79 | 17.5 | 13.78 | 13.11 | 11.55 | 9.68 | 9.64 | 9.07 | 9.04 | 8.37 | 28.44 | 26.35 | 24.69 | 25.1 | 23.32 | 22.29 | 22.16 | 22.35 | 21.98 | 21.7 |
| E5 | FD004→FD002 | 13.51 | 12.06 | 10.22 | 10.45 | 9.72 | 8.88 | 9.27 | 8.87 | 7.78 | 8.45 | 22.94 | 23.1 | 21.57 | 21.56 | 22.15 | 21.03 | 21.35 | 21.15 | 20.5 | 20.83 |
| | IMP (%) | 43.19 | 31.1 | 25.84 | 20.31 | 15.85 | 8.29 | 3.83 | 2.21 | 13.9 | −1.04 | 19.36 | 12.34 | 12.62 | 14.11 | 5.03 | 5.64 | 3.67 | 5.35 | 6.75 | 4.04 |
| E6 | FD001→FD002 | 11.6 | 11.39 | 8.97 | 8.98 | 7.87 | 7.81 | 8.03 | 8.6 | 7.46 | 7.04 | 24.46 | 23.48 | 22.35 | 21.66 | 21.26 | 20.79 | 21.05 | 21.81 | 20.96 | 20.77 |
| | IMP (%) | 51.24 | 34.88 | 34.87 | 31.55 | 31.84 | 19.31 | 16.72 | 5.16 | 17.41 | 15.87 | 13.99 | 10.89 | 9.45 | 13.7 | 8.85 | 6.72 | 5.03 | 2.43 | 4.63 | 4.28 |
| E7 | FD003, no transfer | 24.47 | 12.48 | 9.4 | 10.25 | 10.91 | 7.33 | 6.26 | 4.41 | 3.28 | 4.79 | 26.52 | 22.99 | 21.09 | 21.14 | 21.92 | 19.23 | 17.65 | 16.33 | 14.71 | 16.33 |
| E8 | FD001→FD003 | 14.24 | 7.65 | 7.72 | 7.08 | 5.45 | 5.06 | 4.06 | 4.41 | 3.69 | 4 | 23.66 | 19.8 | 19.14 | 17.96 | 16.57 | 16.96 | 15.56 | 16.11 | 14.44 | 14.34 |
| | IMP (%) | 41.81 | 38.69 | 17.92 | 30.92 | 50.05 | 30.9 | 35.17 | 0.05 | −12.5 | 16.51 | 10.8 | 13.86 | 9.28 | 15.03 | 24.39 | 11.81 | 11.84 | 1.34 | 1.85 | 12.18 |
| E9 | FD004→FD003 | 41.14 | 36.97 | 12.55 | 19.65 | 8.85 | 18.83 | 8.35 | 8.91 | 8.71 | 9.6 | 36.95 | 35.46 | 24.91 | 29.34 | 23.05 | 28.58 | 22.62 | 22.97 | 22.58 | 24.1 |
| | IMP (%) | −68.1 | −196 | −33.5 | −91.8 | 18.86 | −157 | −33.4 | −102 | −166 | −101 | −39.3 | −54.2 | −18.1 | −38.8 | −5.16 | −48.7 | −28.2 | −40.7 | −53.5 | −47.7 |
| E10 | FD004, no transfer | 38.52 | 31.79 | 24.73 | 22.78 | 25.06 | 18.62 | 21.77 | 18.17 | 16.66 | 18.2 | 33.15 | 30.56 | 28.74 | 28.62 | 28.17 | 27.2 | 27.29 | 26.75 | 26.23 | 25.9 |
| E11 | FD002→FD004 | 27 | 23.3 | 20.98 | 18.22 | 17.59 | 15.59 | 16.26 | 15.12 | 15.73 | 16.16 | 29.21 | 29.14 | 27.2 | 27.25 | 26.86 | 26.22 | 25.75 | 25.64 | 25.55 | 25.44 |
| | IMP (%) | 29.9 | 26.71 | 15.18 | 20.02 | 29.8 | 16.26 | 25.29 | 16.77 | 5.57 | 11.19 | 11.89 | 4.62 | 5.35 | 4.8 | 4.66 | 3.62 | 5.66 | 4.14 | 2.6 | 1.75 |
| E12 | FD003→FD004 | 25.45 | 16.3 | 13.91 | 13.32 | 13.37 | 12.43 | 13.1 | 12.53 | 11.71 | 11.75 | 26.39 | 25.76 | 25.07 | 25.66 | 25.34 | 24.69 | 24.36 | 24.36 | 23.67 | 23.4 |
| | IMP (%) | 33.92 | 48.73 | 43.76 | 41.52 | 46.64 | 33.23 | 39.83 | 31.06 | 29.69 | 35.47 | 20.39 | 15.71 | 12.75 | 10.36 | 10.03 | 9.25 | 10.74 | 8.94 | 9.75 | 9.65 |
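Up to rounding of the reported metrics, the IMP (%) rows match the relative reduction of each lower-is-better metric against the matching no-transfer baseline: IMP = (baseline − transfer) / baseline × 100. Below is a minimal sketch, checked against entries from the table. The helper names are illustrative.

```python
from typing import List, Sequence


def improvement_pct(baseline: float, transfer: float) -> float:
    """IMP (%) of a transfer model over its no-transfer baseline for a lower-is-better metric."""
    if baseline == 0:
        raise ValueError("baseline metric must be non-zero")
    # Positive: transfer reduced the error/score; negative: negative transfer.
    return (baseline - transfer) / baseline * 100.0


def improvement_row(baseline: Sequence[float], transfer: Sequence[float]) -> List[float]:
    """Column-wise IMP (%) for two rows of the results table."""
    return [improvement_pct(b, t) for b, t in zip(baseline, transfer)]


# E1 (FD001, no transfer) vs. E2 (FD003→FD001), mean-score columns from the results table.
e1_score = [21.71, 10.06, 12.35, 4.17, 3.07, 2.9, 2.76, 2.65, 2.54, 2.65]
e2_score = [3.23, 3.45, 2.67, 2.63, 2.37, 2.42, 2.37, 2.36, 2.26, 2.24]

if __name__ == "__main__":
    print([round(v, 2) for v in improvement_row(e1_score, e2_score)])
```

Negative values, such as those in the E3 and E9 rows, mean the transferred model did worse than a model trained on the target data alone.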

| Figure | Label | Transfer (Source → Target) | Operating Conditions | Fault Conditions |
|---|---|---|---|---|
| Figure 9a | E8 | FD001→FD003 | 1→1 | 1→2 |
| | E2 | FD003→FD001 | 1→1 | 2→1 |
| Figure 9b | E11 | FD002→FD004 | 6→6 | 1→2 |
| | E5 | FD004→FD002 | 6→6 | 2→1 |
| Figure 9c | E6 | FD001→FD002 | 1→6 | 1→1 |
| | E3 | FD002→FD001 | 6→1 | 1→1 |
| Figure 9d | E12 | FD003→FD004 | 1→6 | 2→2 |
| | E9 | FD004→FD003 | 6→1 | 2→2 |
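Each figure pairs two transfers in opposite directions. The sketch below is a quick check of the pattern discussed in Sections 4.1 and 4.2. It averages each transfer's ten RMSE IMP values from the results table and groups them by operating-condition direction. The simple averaging is an illustrative choice, not the analysis reported by the authors.

```python
from statistics import mean

# RMSE IMP (%) values copied from the results table (ten columns per experiment),
# keyed by label, with (source, target) operating-condition counts from the experiment table.
RMSE_IMP = {
    "E2": ((1, 1), [40.09, 26.7, 31.99, 12.12, 9.41, 6.88, 3.63, 3.66, 3.31, 4.22]),
    "E3": ((6, 1), [-51.3, -85.3, 0.6, -3.19, -25.3, -9.21, -35.2, -82.9, -21.4, -28.4]),
    "E5": ((6, 6), [19.36, 12.34, 12.62, 14.11, 5.03, 5.64, 3.67, 5.35, 6.75, 4.04]),
    "E6": ((1, 6), [13.99, 10.89, 9.45, 13.7, 8.85, 6.72, 5.03, 2.43, 4.63, 4.28]),
    "E8": ((1, 1), [10.8, 13.86, 9.28, 15.03, 24.39, 11.81, 11.84, 1.34, 1.85, 12.18]),
    "E9": ((6, 1), [-39.3, -54.2, -18.1, -38.8, -5.16, -48.7, -28.2, -40.7, -53.5, -47.7]),
    "E11": ((6, 6), [11.89, 4.62, 5.35, 4.8, 4.66, 3.62, 5.66, 4.14, 2.6, 1.75]),
    "E12": ((1, 6), [20.39, 15.71, 12.75, 10.36, 10.03, 9.25, 10.74, 8.94, 9.75, 9.65]),
}


def mean_imp_by_direction(table):
    """Average each experiment's RMSE IMP, then group experiments by operating-condition direction."""
    groups = {}
    for label, (direction, imps) in table.items():
        groups.setdefault(direction, []).append((label, mean(imps)))
    return groups


def negative_transfers(table):
    """Labels whose average RMSE IMP is below zero (transfer hurt on average)."""
    return sorted(label for label, (_, imps) in table.items() if mean(imps) < 0)


if __name__ == "__main__":
    for (src, tgt), rows in sorted(mean_imp_by_direction(RMSE_IMP).items()):
        for label, avg in rows:
            print(f"{src}->{tgt} {label}: mean RMSE IMP = {avg:.2f}%")
```

Only E3 and E9 have a negative average. These are the two transfers from six operating conditions to one.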

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, A.; Wang, H.; Li, S.; Cui, Y.; Liu, Z.; Yang, G.; Hu, J. Transfer Learning with Deep Recurrent Neural Networks for Remaining Useful Life Estimation. Appl. Sci. 2018, 8, 2416. https://doi.org/10.3390/app8122416
