Artificial Flora (AF) Optimization Algorithm

Abstract


1. Introduction

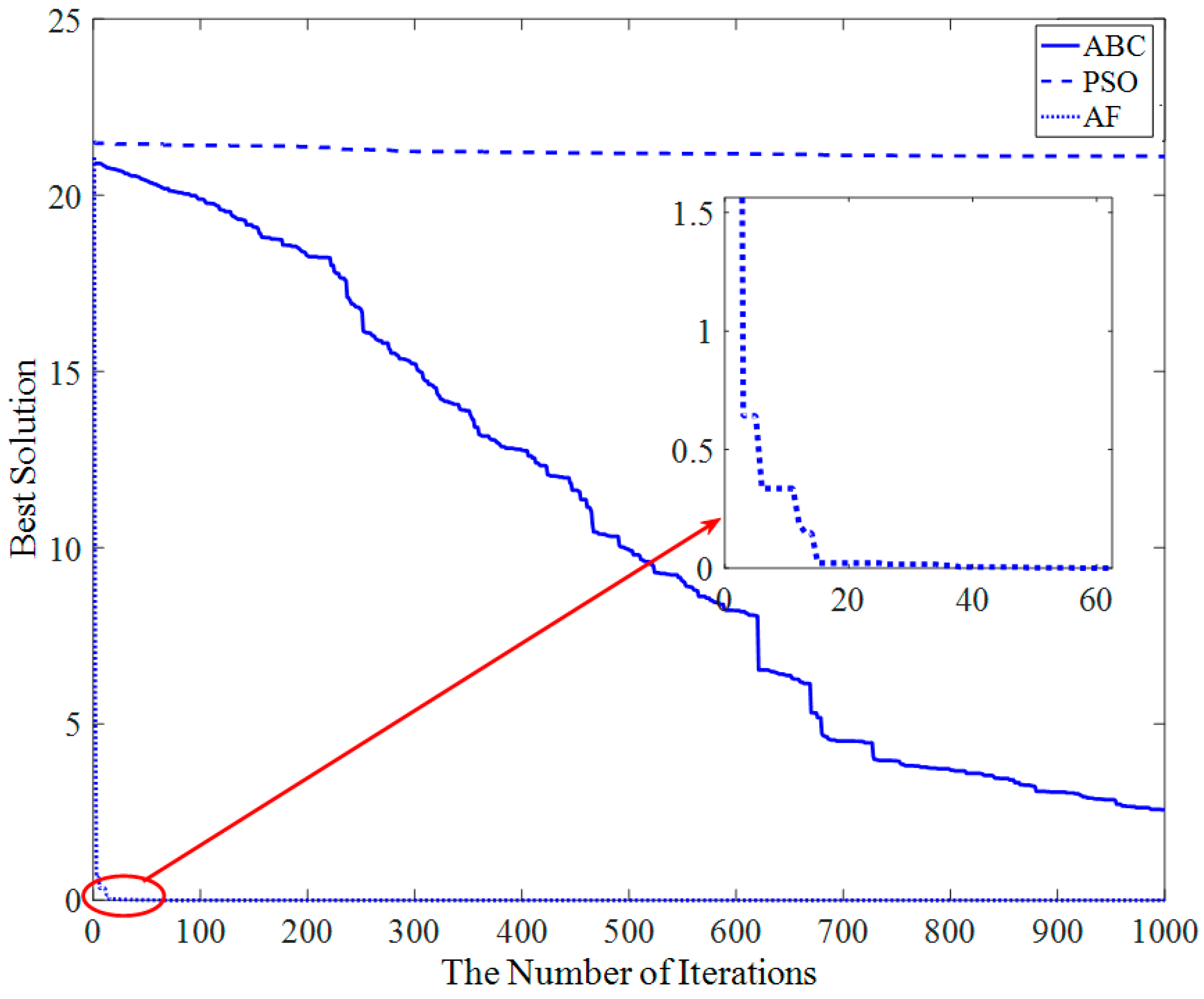

- AF is a multi-parent technique: the movement in AF is related to the plants of the past two generations, so it can balance more updating information. This helps the algorithm avoid running into a local extremum.

- The AF algorithm selects the surviving offspring plants as new original plants in each iteration. It can take the local optimal position as the center and explore the surrounding space, so it can converge to the optimal point rapidly.

- Original plants can spread seeds to any place within their propagation range. This guarantees the local search capability of the algorithm.

The classical optimization problem is an important application of the AF algorithm. Function optimization is a direct way to verify the performance of an intelligent algorithm. In this paper, we successfully apply AF to unconstrained multivariate function optimization problems. We try to apply it to multi-objective, combinatorial, and more complex problems. In addition, many practical problems, such as wireless sensor network optimization and parameter estimation, can be converted to optimization problems, and AF can be used to find a satisfactory solution.

2. Artificial Flora Algorithm

2.1. Biological Fundamentals

2.2. Artificial Flora Algorithm Theory

- Rule 1: Because of a sudden change in the environment or some kind of artificial action, a species may be randomly distributed in a region where no such species exists and then become the most primitive original plant.

- Rule 2: Plants evolve to adapt to the new environment as the environment changes. Therefore, the propagation distance of offspring plants is not completely inherited from the parent plant but rather evolves on the basis of the distance of the previous generation of plants. In addition, in the ideal case, the offspring can only learn from the nearest two generations.

- Rule 3: In the ideal case, when the original plant spreads seeds around autonomously, the range is a circle whose radius is the maximum propagation distance. Offspring plants can be distributed anywhere in the circle (including the circumference).

- Rule 4: Environmental factors such as climate and temperature vary from one position to another, so plants have different probabilities of survival. The probability of survival is related to the fitness of the plant in its position, where fitness refers to how well the plant can adapt to the environment. That is, fitness is the survival probability of a plant in the position. The higher the fitness, the greater the probability of survival. However, inter-specific competition may cause a plant with high fitness to die.

- Rule 5: The farther an offspring is from the original plant, the lower its probability of survival, because the difference between the current environment and the previous environment grows as offspring plants lie farther from the original plant in the same generation.

- Rule 6: When seeds are spread by external means, the spread distance cannot exceed the maximum limit area because of constraints such as the range of animal activity.
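Rule 3 can be illustrated with a short sketch that places offspring inside a circle around an original plant. The function name `spread_in_circle`, the two-dimensional setting, and the uniform density are assumptions made for illustration; the rule itself only requires that offspring stay within the maximum propagation distance.

```python
import math
import random


def spread_in_circle(center, radius, count, rng=random):
    """Sample `count` offspring positions inside a disc around `center`.

    Hypothetical helper: positions are drawn uniformly over the disc area.
    """
    cx, cy = center
    offspring = []
    for _ in range(count):
        # Taking the square root keeps the density uniform over the area;
        # without it, points would cluster near the center.
        r = radius * math.sqrt(rng.random())
        theta = 2.0 * math.pi * rng.random()
        offspring.append((cx + r * math.cos(theta), cy + r * math.sin(theta)))
    return offspring


if __name__ == "__main__":
    for point in spread_in_circle((0.0, 0.0), 2.0, 3, random.Random(42)):
        print(point)
```

Because `rng.random()` returns values in [0, 1), every sampled point lies at a distance strictly below `radius`; the circumference itself has zero probability under a continuous density.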

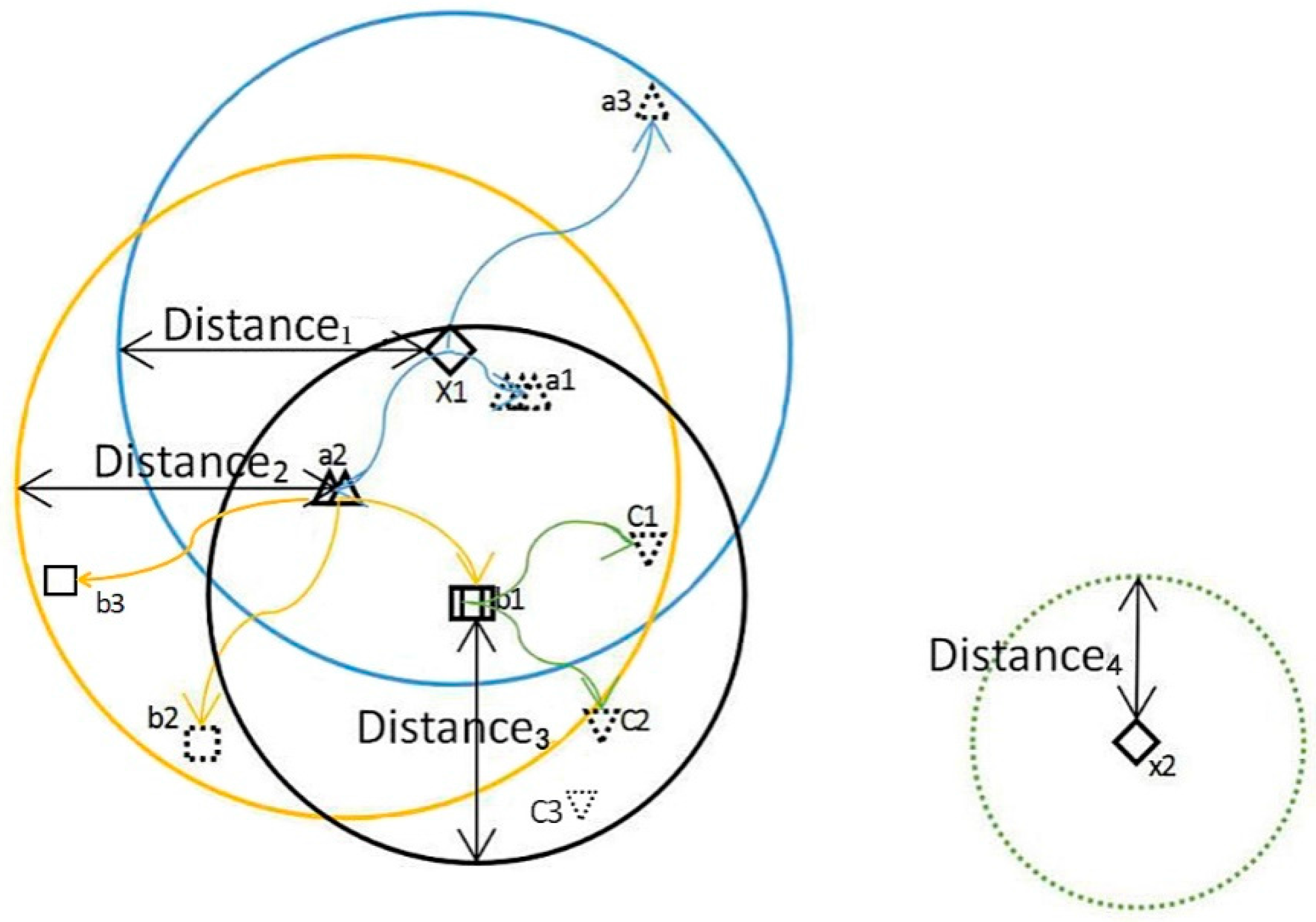

- According to Rule 1, there was no such species in the region; due to sudden environmental changes or some kind of artificial action, original plants were spread over a random location in the region, as ◇(x1) shows in Figure 1.

- The numbers shown for the offspring stand for their fitness. The higher the number, the higher the offspring’s fitness. Figure 1 shows that the closer an offspring is to the original plant, the higher its fitness: fitness(a1) > fitness(a2) > fitness(a3). This matches Rule 5.

- According to Rule 4, only some of the offspring plants survive because their fitness differs. As shown in Figure 1, a solid line indicates a living plant and a dotted line indicates a plant that is not living. Due to competition and other reasons, the offspring a1 with the highest fitness did not survive, but a2, whose fitness is lower than that of a1, is alive and becomes a new original plant.

- Distance2 and Distance3 are the propagation distances of a2 and b1, respectively. According to Rule 2, Distance2 evolves based on Distance1, and Distance3 learns from Distance2 and Distance1. If b1 spreads seeds, the propagation distance of b1’s offspring is based on Distance2 and Distance3.

- Plants are constantly spreading seeds around and causing flora to migrate so that flora can find the best area to live.

- If none of the offspring plants survive, as with (c1, c2, c3) in Figure 1, a new original plant can be randomly generated in the region by allochory.
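The way Distance3 learns from Distance1 and Distance2 can be sketched as a random weighted mix of the two previous distances. The exact update is given by Equation (1), which is not reproduced in this section, so the form below is an assumption; `next_distance` is a hypothetical name, and the coefficients reuse the values c1 = 0.75 and c2 = 1.25 listed for AF in the parameter table.

```python
import random

# Learning coefficients as listed for AF in the parameter table.
C1, C2 = 0.75, 1.25


def next_distance(grandparent_d, parent_d, rng=random):
    """Assumed update: random weighted mix of the two previous distances."""
    return C1 * rng.random() * grandparent_d + C2 * rng.random() * parent_d


if __name__ == "__main__":
    rng = random.Random(7)
    distance1, distance2 = 4.0, 3.0
    distance3 = next_distance(distance1, distance2, rng)
    # b1's offspring would then learn from Distance2 and Distance3.
    distance4 = next_distance(distance2, distance3, rng)
    print(distance3, distance4)
```

Only the two most recent distances are passed in, which mirrors the statement in Rule 2 that offspring learn from the nearest two generations.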

2.2.1. Evolution Behavior

2.2.2. Spreading Behavior

2.2.3. Select Behavior

2.3. The Proposed Algorithm Flow and Complexity Analysis

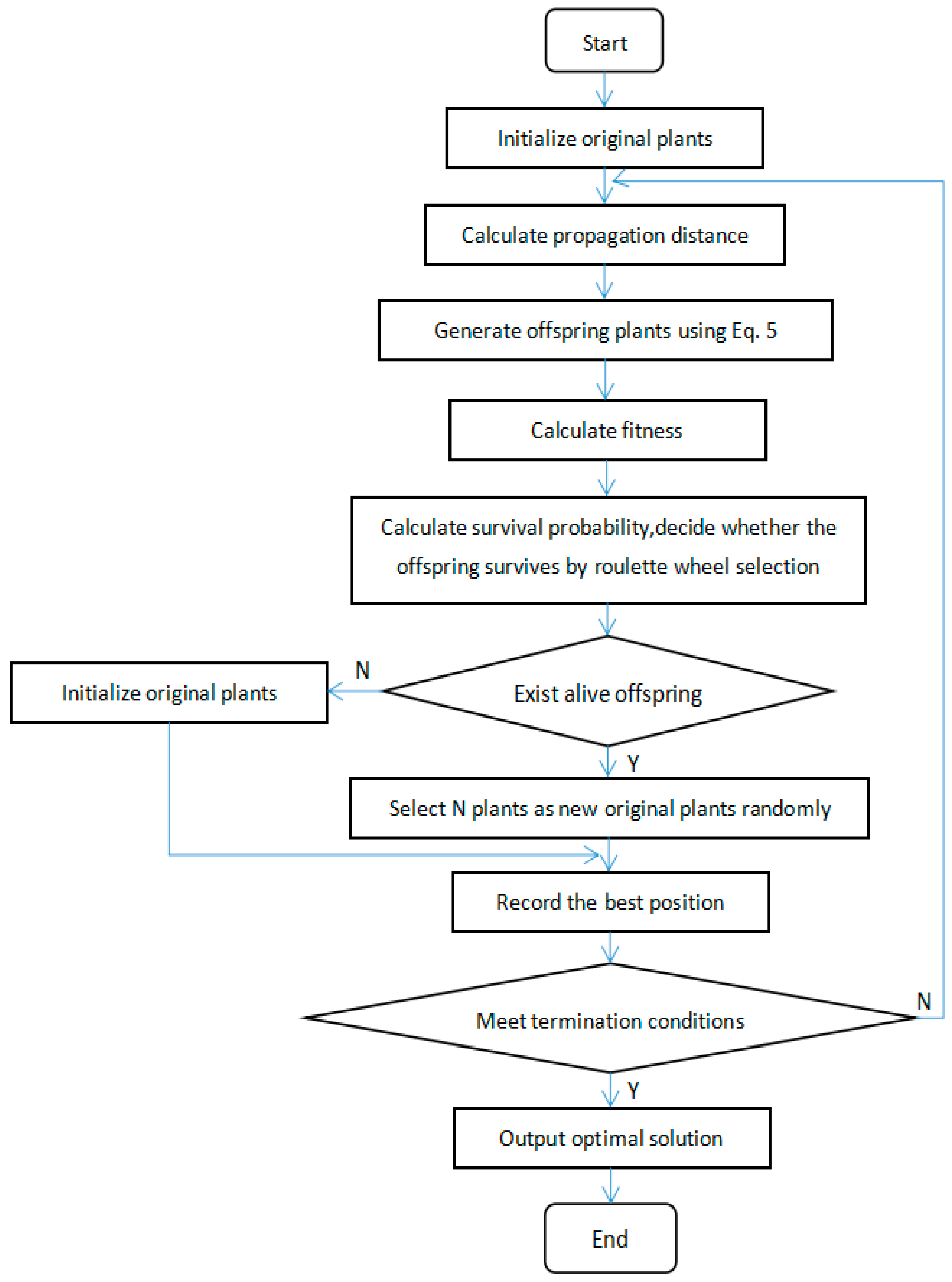

- (1) Initialize according to Equation (4) and generate N original plants;

- (2) Calculate the propagation distance according to Equations (1), (2) and (3);

- (3) Generate offspring plants according to Equation (5) and calculate their fitness;

- (4) Calculate the survival probability of offspring plants according to Equation (6); whether each offspring survives is decided by the roulette wheel selection method;

- (5) If any plants survive, randomly select N of them as new original plants. If no plant survives, generate new original plants according to Equation (4);

- (6) Record the best solution;

- (7) Check whether the termination conditions are met. If so, output the optimal solution; otherwise, go to step (2).
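Steps (4) and (5) can be sketched as follows, assuming the survival probabilities from Equation (6) have already been computed. This is one reading of the roulette step, in which each offspring is compared against its own uniform random draw; the function names `decide_survivors` and `select_new_originals` are hypothetical.

```python
import random


def decide_survivors(offspring, survival_probs, rng=random):
    """Keep each offspring with its own survival probability (step 4)."""
    survivors = []
    for plant, p in zip(offspring, survival_probs):
        if rng.random() < p:  # alive with probability p
            survivors.append(plant)
    return survivors


def select_new_originals(survivors, n, rng=random):
    """Randomly pick up to n survivors as new original plants (step 5).

    Returns None when nothing survived, so the caller can regenerate
    original plants as in the initialization step.
    """
    if not survivors:
        return None
    return rng.sample(survivors, min(n, len(survivors)))


if __name__ == "__main__":
    rng = random.Random(3)
    alive = decide_survivors(["a1", "a2", "a3"], [0.9, 0.6, 0.3], rng)
    print(alive, select_new_originals(alive, 1, rng))
```

Because survival is decided by a random draw, an offspring with a high probability can still die, which is consistent with Rule 4.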

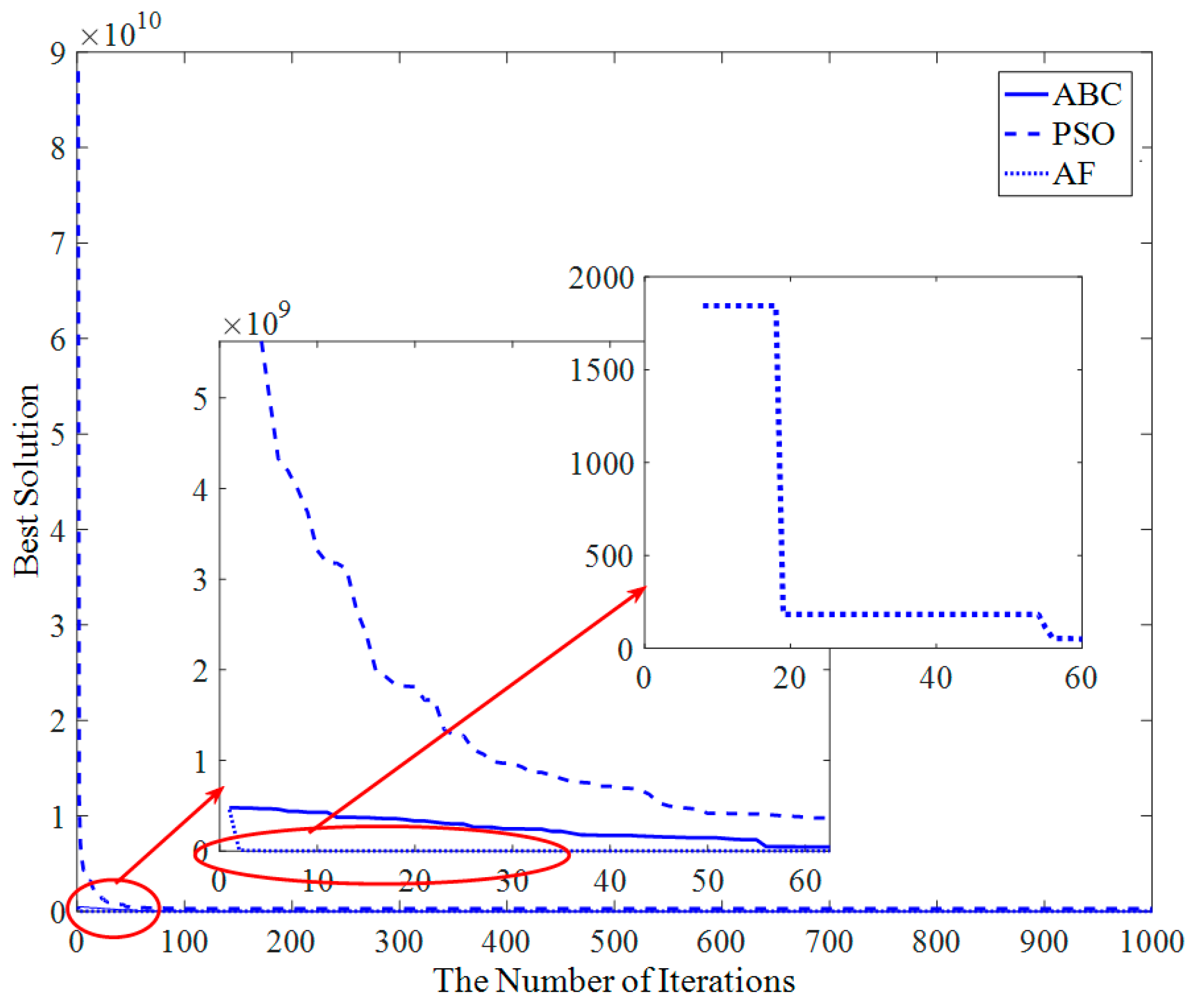

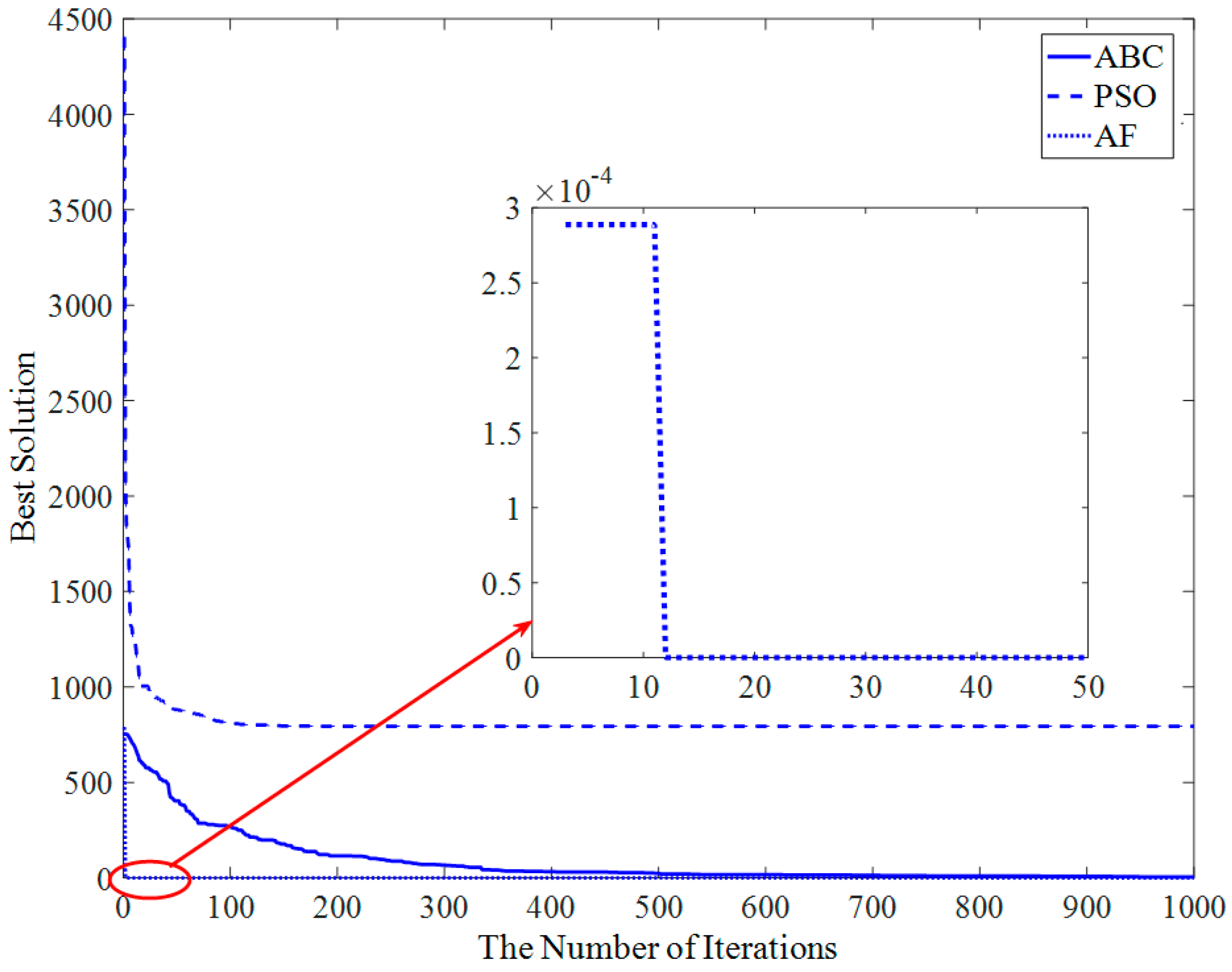

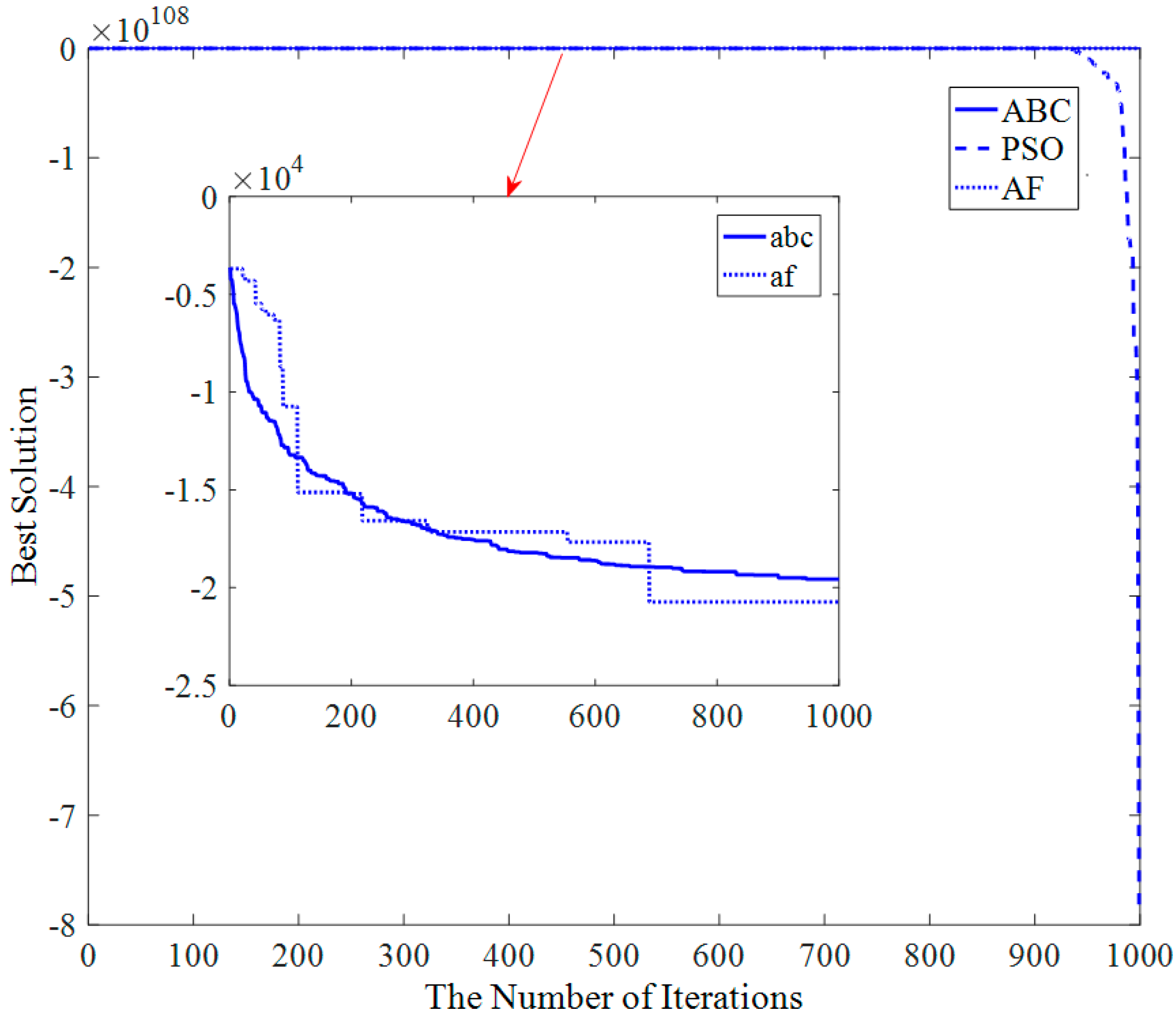

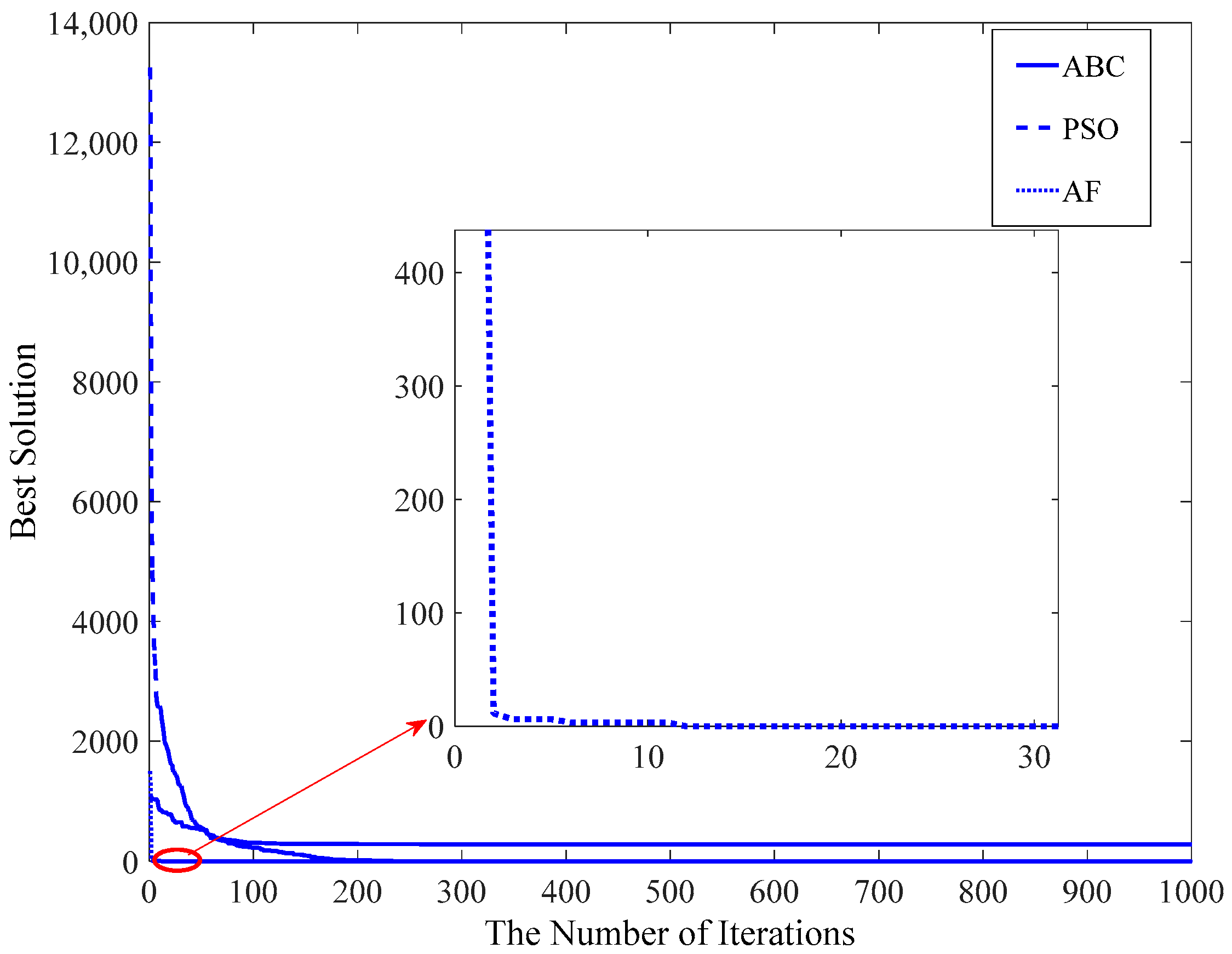

3. Validation and Comparison

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


    Input: times: maximum run time
           M: maximum branching number
           N: number of original plants
           p: survival probability of offspring plants
    t = 0; Initialize the population and define the related parameters
    Evaluate the N individuals’ fitness values, and find the best solution
    While (t < times)
        For i = 1:N*M
            New original plants evolve propagation distance (according to Equations (1), (2) and (3))
            Original plants spread their offspring (according to Equation (5))
            If rand(0,1) < p
                Offspring plant is alive
            Else
                Offspring plant dies
            End if
        End for
        Evaluate new solutions, and randomly select N plants as new original plants
        If a new solution is better than the previous one, the new plant replaces the old one
        Find the current best solution
        t = t + 1
    End while
    Output: optimal solution
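The pseudocode above can be turned into a small runnable sketch for minimization. Equations (1) to (6) are not reproduced in this section, so the distance update, the Gaussian spreading step, the survival probability, and the parameter `q` below are all assumptions chosen for illustration, not the authors' formulas; `artificial_flora` is a hypothetical name.

```python
import random


def sphere(x):
    """Sphere benchmark: sum of squares, minimum 0 at the origin."""
    return sum(v * v for v in x)


def artificial_flora(objective, dim, bound, n=1, m=100, iters=200,
                     c1=0.75, c2=1.25, q=0.9, seed=0):
    """Minimize `objective` over [-bound, bound]^dim (illustrative sketch)."""
    rng = random.Random(seed)

    def random_plant():
        return [rng.uniform(-bound, bound) for _ in range(dim)]

    plants = [random_plant() for _ in range(n)]
    d_grand = [float(bound)] * n   # assumed initial propagation distances
    d_parent = [float(bound)] * n
    best = list(min(plants, key=objective))
    best_val = objective(best)

    for _ in range(iters):
        for i in range(n):
            # Assumed distance evolution from the two previous generations.
            d = c1 * rng.random() * d_grand[i] + c2 * rng.random() * d_parent[i]
            d_grand[i], d_parent[i] = d_parent[i], d

            # Assumed spreading: Gaussian step around the original plant,
            # clipped to the search bounds.
            children = [[max(-bound, min(bound, v + rng.gauss(0.0, d)))
                         for v in plants[i]] for _ in range(m)]
            scores = [objective(c) for c in children]
            lo, hi = min(scores), max(scores)

            # Assumed survival probability: lower scores survive more often,
            # capped at q so that even the best offspring may die.
            survivors = [(s, c) for s, c in zip(scores, children)
                         if rng.random() < q * (hi - s + 1e-12) / (hi - lo + 1e-12)]

            if survivors:
                s, c = rng.choice(survivors)
                if s < objective(plants[i]):  # replace only on improvement
                    plants[i] = c
            else:
                plants[i] = random_plant()    # no survivors: regenerate

            val = objective(plants[i])
            if val < best_val:
                best, best_val = list(plants[i]), val
    return best, best_val


if __name__ == "__main__":
    print(artificial_flora(sphere, dim=5, bound=100.0))
```

The defaults `n=1`, `m=100`, `c1=0.75`, and `c2=1.25` follow the AF row of the parameter table; the iteration count and `q` are arbitrary choices for the sketch.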

| Operation | Time | Time Complexity |
|---|---|---|
| Initialize | N × t1 | O(N) |
| Calculate propagation distance | 2N × t2 | O(N) |
| Update the position | N × t3 | O(N) |
| Calculate fitness | N × M × t4 | O(N·M) |
| Calculate survival probability | N × M × t5 | O(N·M) |
| Decide alive plant using roulette | N × M × t6 | O(N·M) |
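The rows of the table can be combined into an overall cost. The derivation below is a sketch that assumes initialization runs once, every other operation runs once per iteration, each t_k is a constant, and M ≥ 1; the symbol names T_init, T_iter, and T_total are introduced here for illustration, and "times" is the iteration limit from the pseudocode.

```latex
\begin{aligned}
T_{\text{init}}  &= N\,t_1 \\
T_{\text{iter}}  &= 2N\,t_2 + N\,t_3 + N M\,(t_4 + t_5 + t_6) \\
T_{\text{total}} &= T_{\text{init}} + \text{times}\cdot T_{\text{iter}}
                  = O(\text{times}\cdot N \cdot M)
\end{aligned}
```

Under these assumptions the three O(N·M) rows dominate each iteration, so the per-iteration cost grows linearly in both the number of original plants and the branching number.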

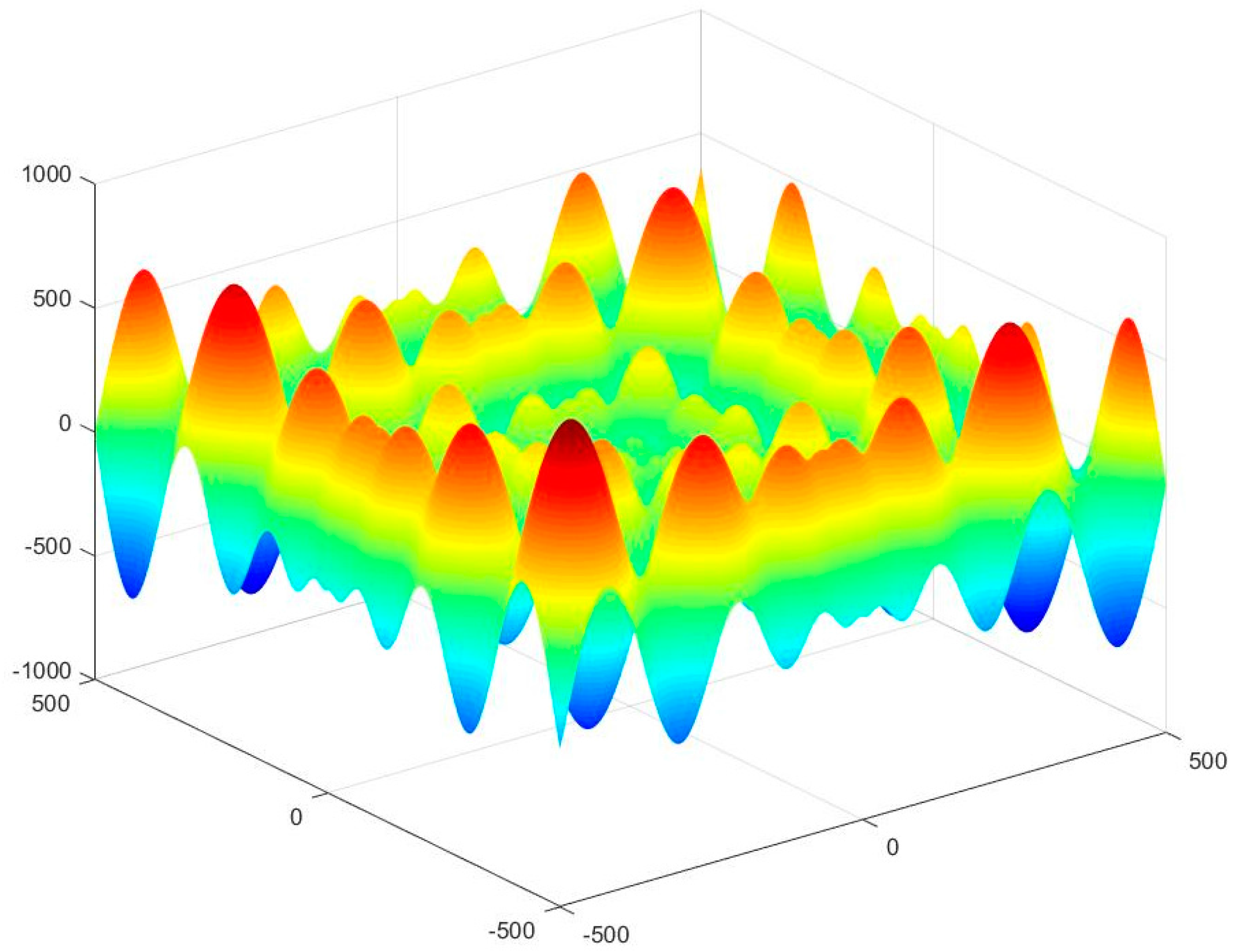

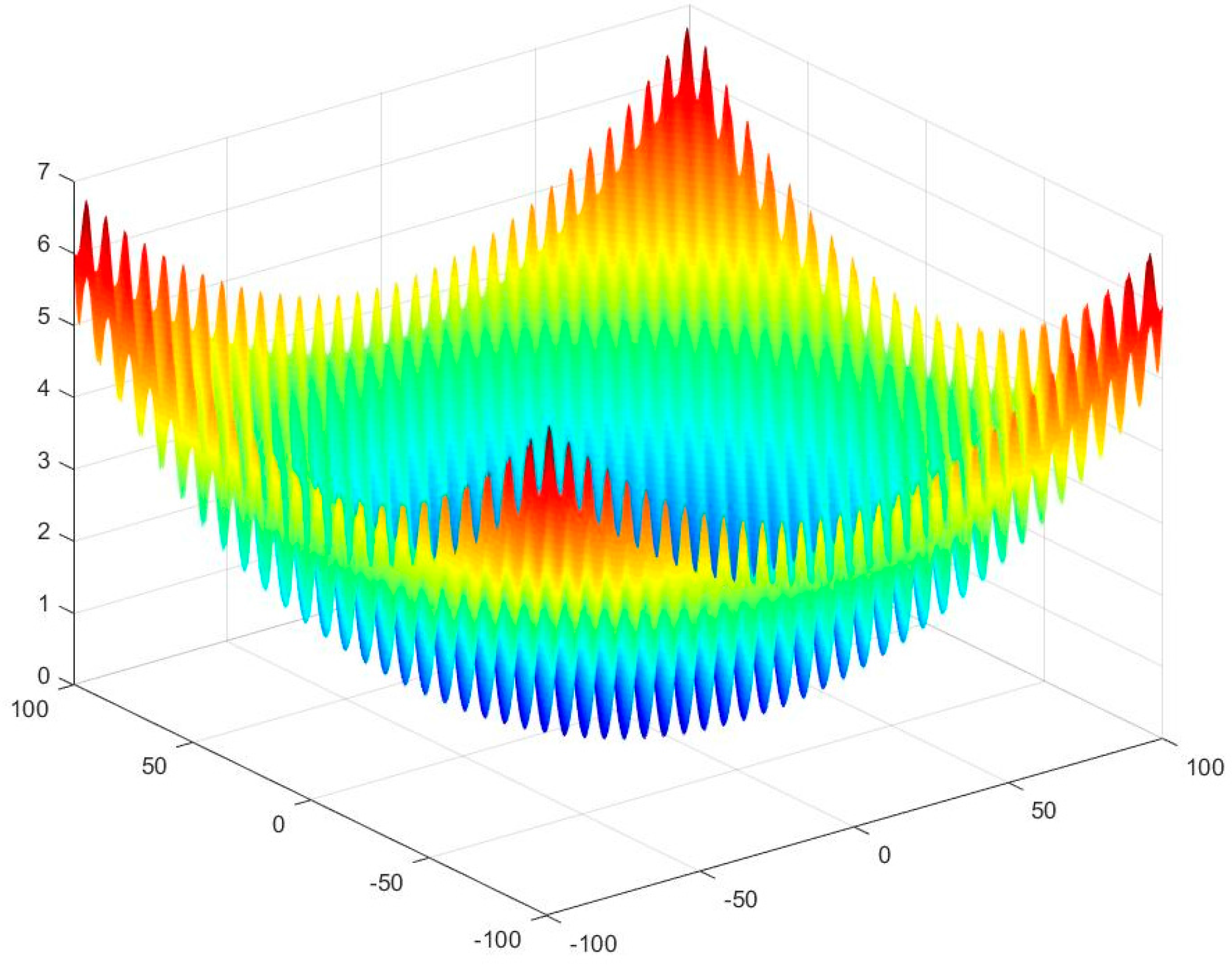

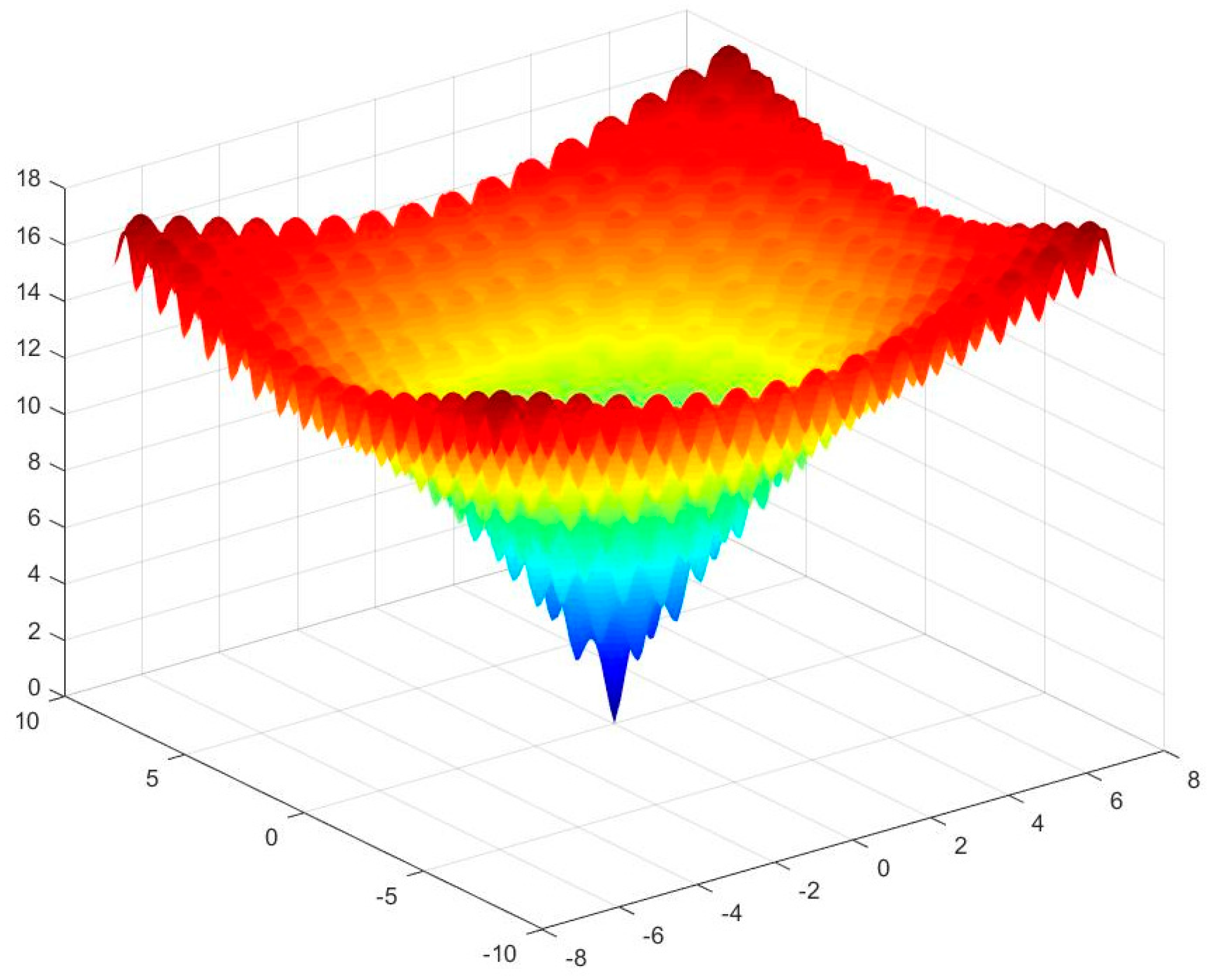

| Functions | Expression formula | Bounds | Optimum Value |

|---|---|---|---|

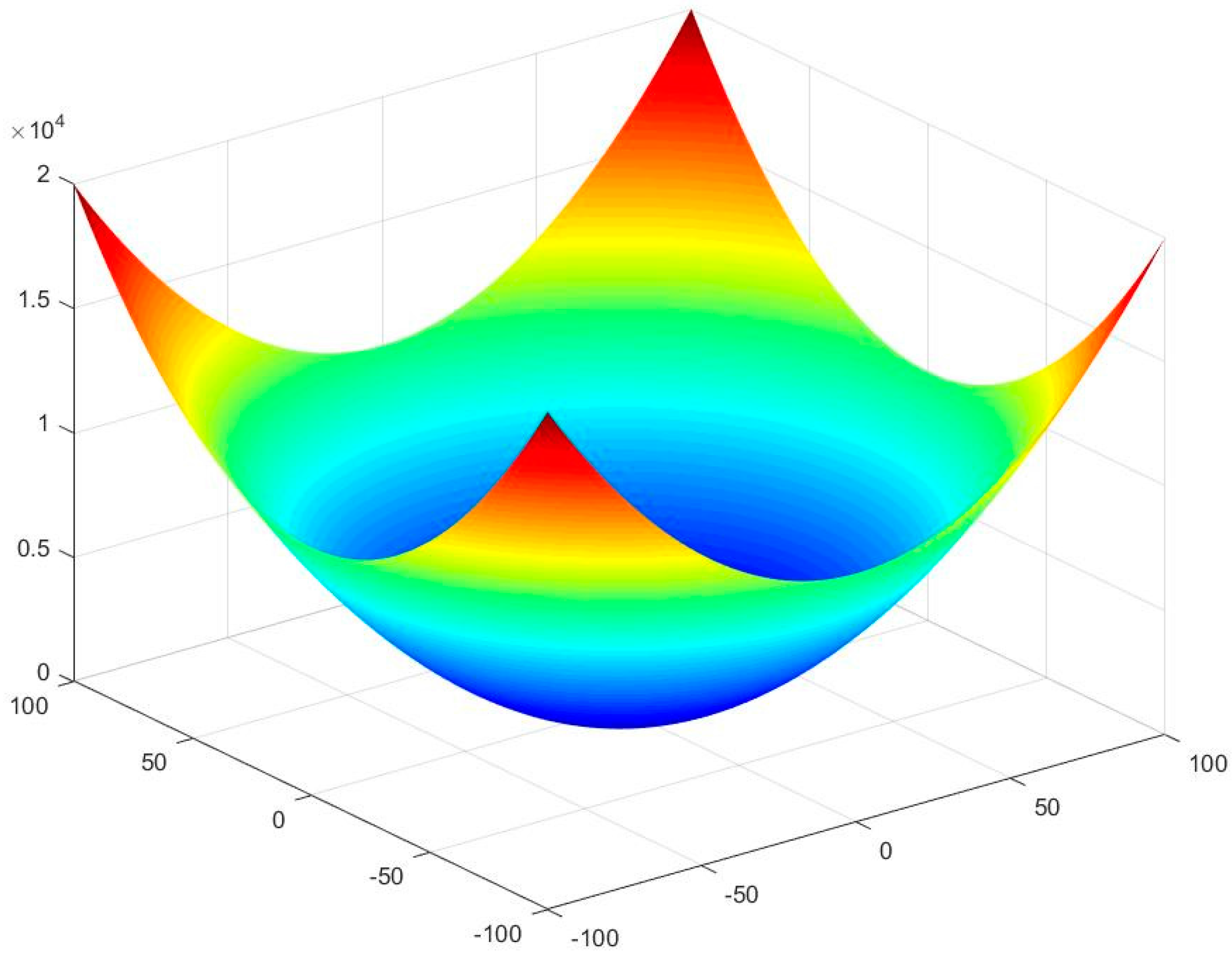

| Sphere | [−100,100] | 0 | |

| Rosenbrock | [−30,30] | 0 | |

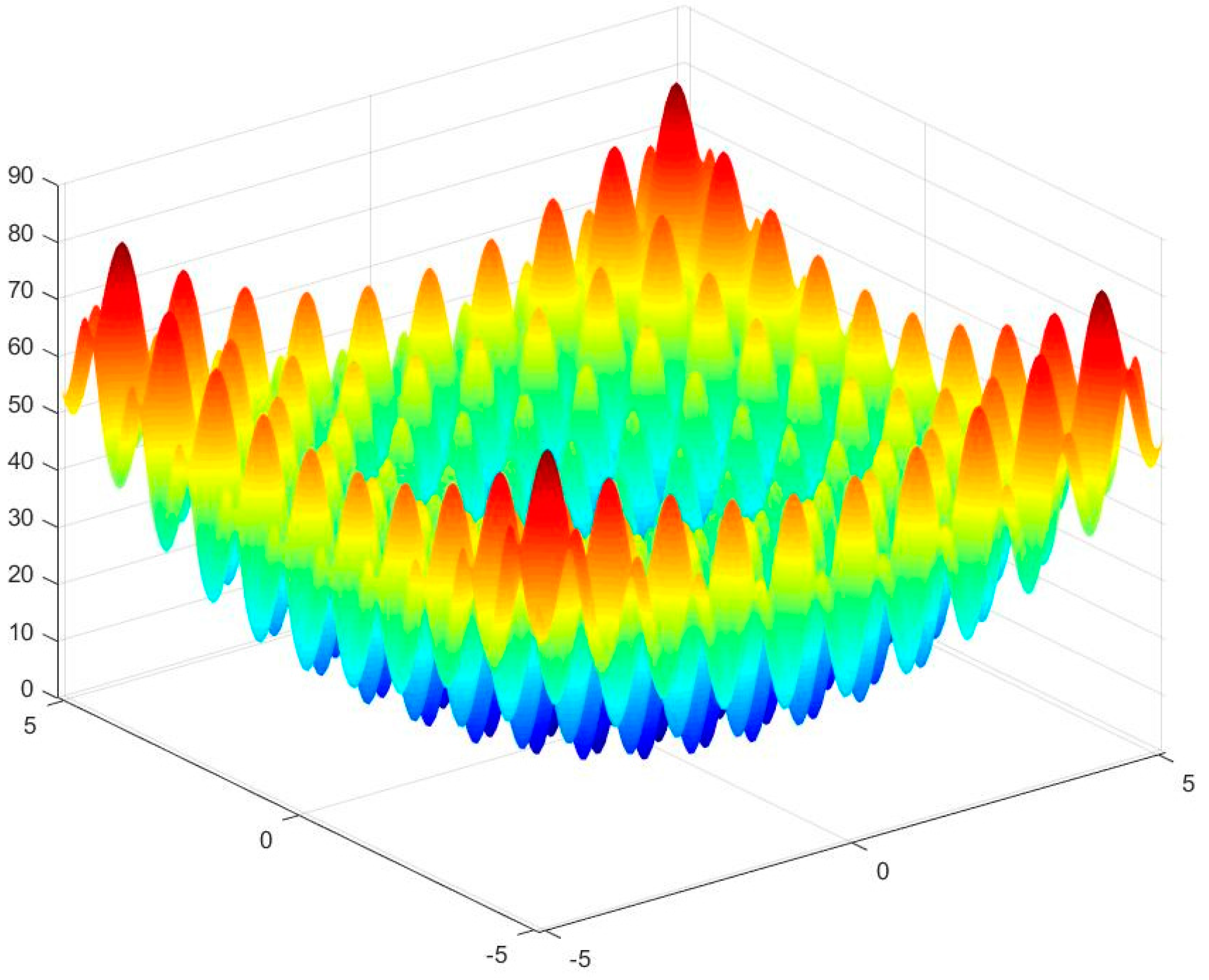

| Rastrigin | [−5.12,5.12] | 0 | |

| Schwefel | [−500,500] | −418.9829 × D | |

| Griewank | [−600,600] | 0 | |

| Ackley | [−32,32] | 0 |
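For experimentation, the six benchmarks in the table can be written as plain Python functions. The sketch below assumes the standard textbook definitions of these functions, which agree with the bounds and optimum values listed in the table; each function takes a list of D coordinates.

```python
import math


def sphere(x):
    return sum(v * v for v in x)


def rosenbrock(x):
    return sum(100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
               for i in range(len(x) - 1))


def rastrigin(x):
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)


def schwefel(x):
    # Form whose minimum is about -418.9829 * D, near x_i = 420.9687.
    return -sum(v * math.sin(math.sqrt(abs(v))) for v in x)


def griewank(x):
    s = sum(v * v for v in x) / 4000.0
    p = math.prod(math.cos(v / math.sqrt(i)) for i, v in enumerate(x, start=1))
    return s - p + 1.0


def ackley(x):
    d = len(x)
    a = -20.0 * math.exp(-0.2 * math.sqrt(sum(v * v for v in x) / d))
    b = -math.exp(sum(math.cos(2.0 * math.pi * v) for v in x) / d)
    return a + b + 20.0 + math.e


if __name__ == "__main__":
    origin = [0.0] * 20
    print(sphere(origin), rastrigin(origin), griewank(origin), ackley(origin))
```

Sphere, Rastrigin, Griewank, and Ackley attain their optimum at the origin, Rosenbrock at the all-ones vector, and this form of Schwefel near 420.9687 in every coordinate.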

| Algorithm | Parameter Values |
|---|---|
| PSO | N = 100, c1 = c2 = 1.4962, w = 0.7298 |
| ABC | N = 200, limit = 1000 |
| AF | N = 1, M = 100, c1 = 0.75, c2 = 1.25 |

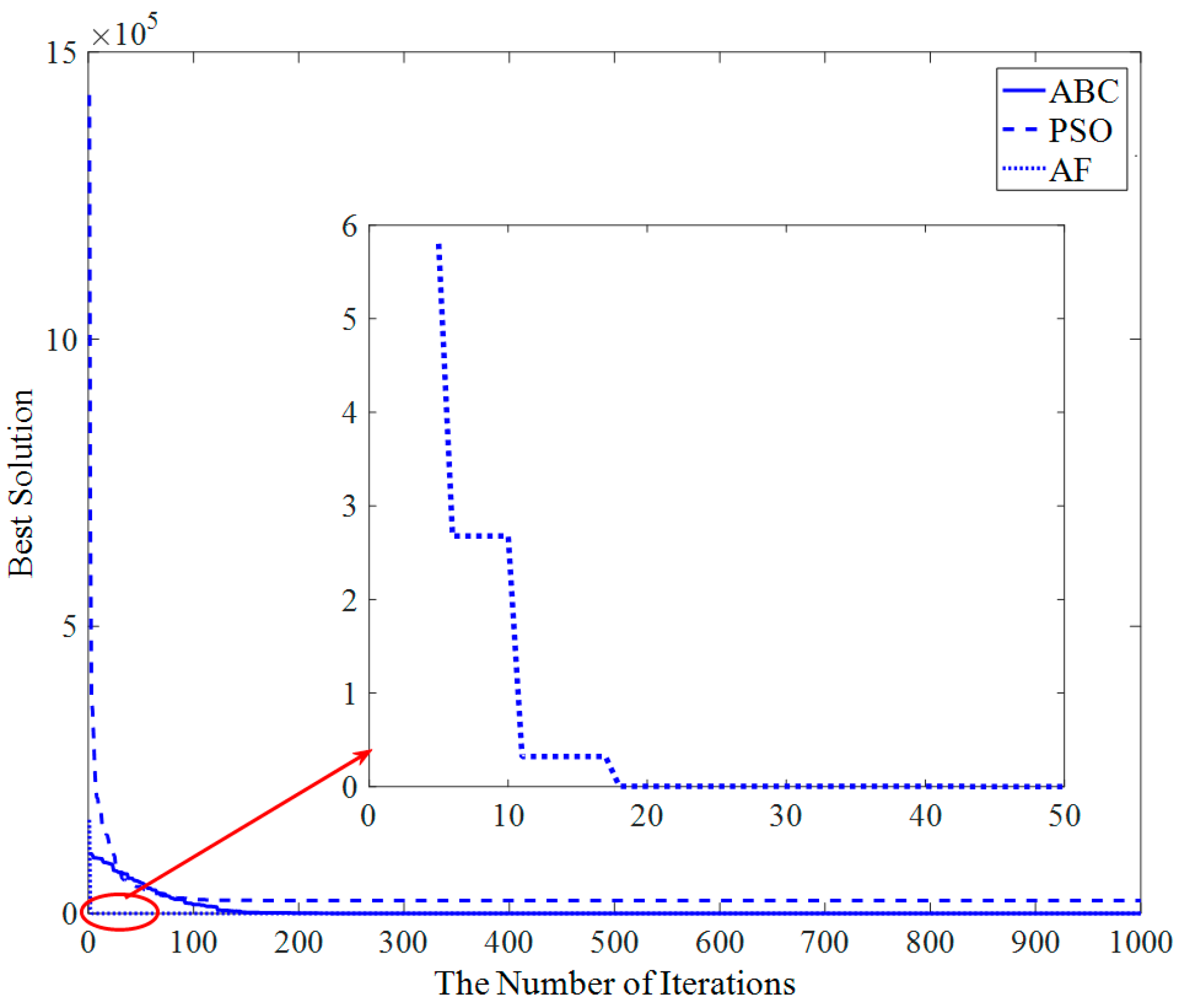

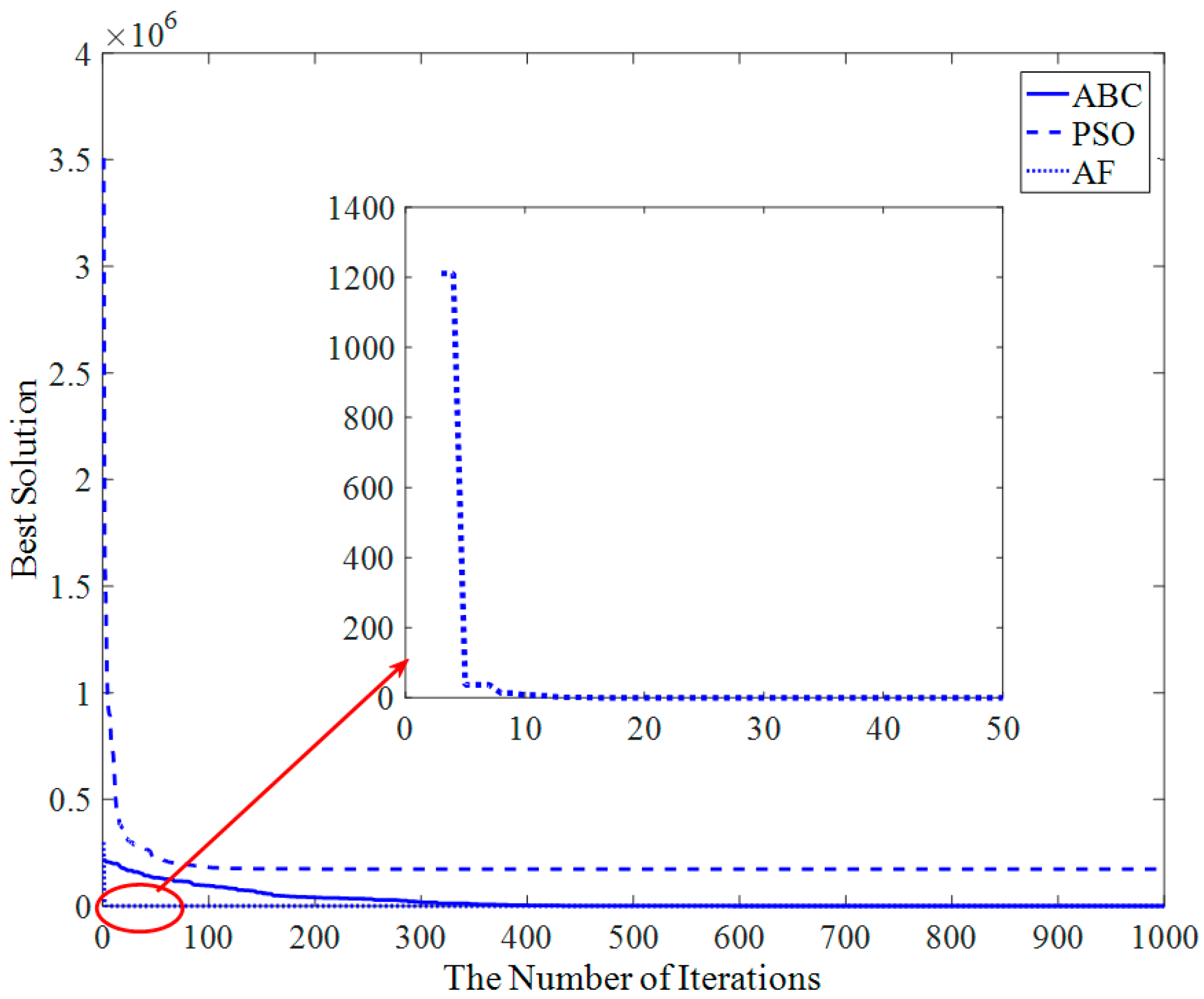

| Functions | Algorithm | Best | Mean | Worst | SD | Runtime |
|---|---|---|---|---|---|---|
| Sphere | PSO | 0.022229639 | 10.36862151 | 110.8350423 | 21.05011998 | 0.407360 |
| | ABC | 2.22518 × 10^−16 | 3.03501 × 10^−16 | 4.3713 × 10^−16 | 5.35969 × 10^−17 | 2.988014 |
| | AF | 0 | 0 | 0 | 0 | 2.536061 |
| Rosenbrock | PSO | 86.00167369 | 19,283.23676 | 222,601.751 | 43,960.73321 | 0.578351 |
| | ABC | 0.004636871 | 0.071185731 | 0.245132443 | 0.065746751 | 3.825228 |
| | AF | 17.93243086 | 18.44894891 | 18.77237391 | 0.238206854 | 4.876399 |
| Rastrigin | PSO | 60.69461471 | 124.3756019 | 261.6735015 | 43.5954195 | 0.588299 |
| | ABC | 0 | 1.7053 × 10^−14 | 5.68434 × 10^−14 | 1.90442 × 10^−14 | 3.388325 |
| | AF | 0 | 0 | 0 | 0 | 2.730699 |
| Schwefel | PSO | −1.082 × 10^105 | −2.0346 × 10^149 | −1.0156 × 10^151 | 1.4362 × 10^150 | 1.480785 |
| | ABC | −8379.657745 | −8379.656033 | −8379.618707 | 0.007303823 | 3.462507 |
| | AF | −7510.128926 | −11,279.67966 | −177,281.186 | 25,371.33579 | 3.144982 |
| Griewank | PSO | 0.127645871 | 0.639982775 | 1.252113282 | 0.31200235 | 1.097885 |
| | ABC | 0 | 7.37654 × 10^−14 | 2.61158 × 10^−12 | 3.70604 × 10^−13 | 6.051243 |
| | AF | 0 | 0 | 0 | 0 | 2.927380 |
| Ackley | PSO | 19.99906463 | 20.04706409 | 20.46501638 | 0.103726728 | 0.949812 |
| | ABC | 2.0338 × 10^−10 | 4.5334 × 10^−10 | 1.02975 × 10^−9 | 1.6605 × 10^−10 | 3.652016 |
| | AF | 8.88 × 10^−16 | 8.88 × 10^−16 | 8.88 × 10^−16 | 0 | 3.023296 |
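The Best, Mean, Worst, and SD columns summarize the final objective values of repeated independent runs. A minimal sketch of that summary is shown below; it assumes minimization (so the best value is the smallest) and a population standard deviation, neither of which is stated in this section, and `summarize_runs` is a hypothetical name.

```python
import statistics


def summarize_runs(final_values):
    """Summarize final objective values from repeated runs (minimization)."""
    return {
        "best": min(final_values),
        "mean": statistics.fmean(final_values),
        "worst": max(final_values),
        # Population SD is an assumption; use statistics.stdev for sample SD.
        "sd": statistics.pstdev(final_values),
    }


if __name__ == "__main__":
    print(summarize_runs([1.0, 3.0]))
```

When every run ends at the same value, as in the rows where Best, Mean, and Worst coincide, the standard deviation is necessarily 0.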

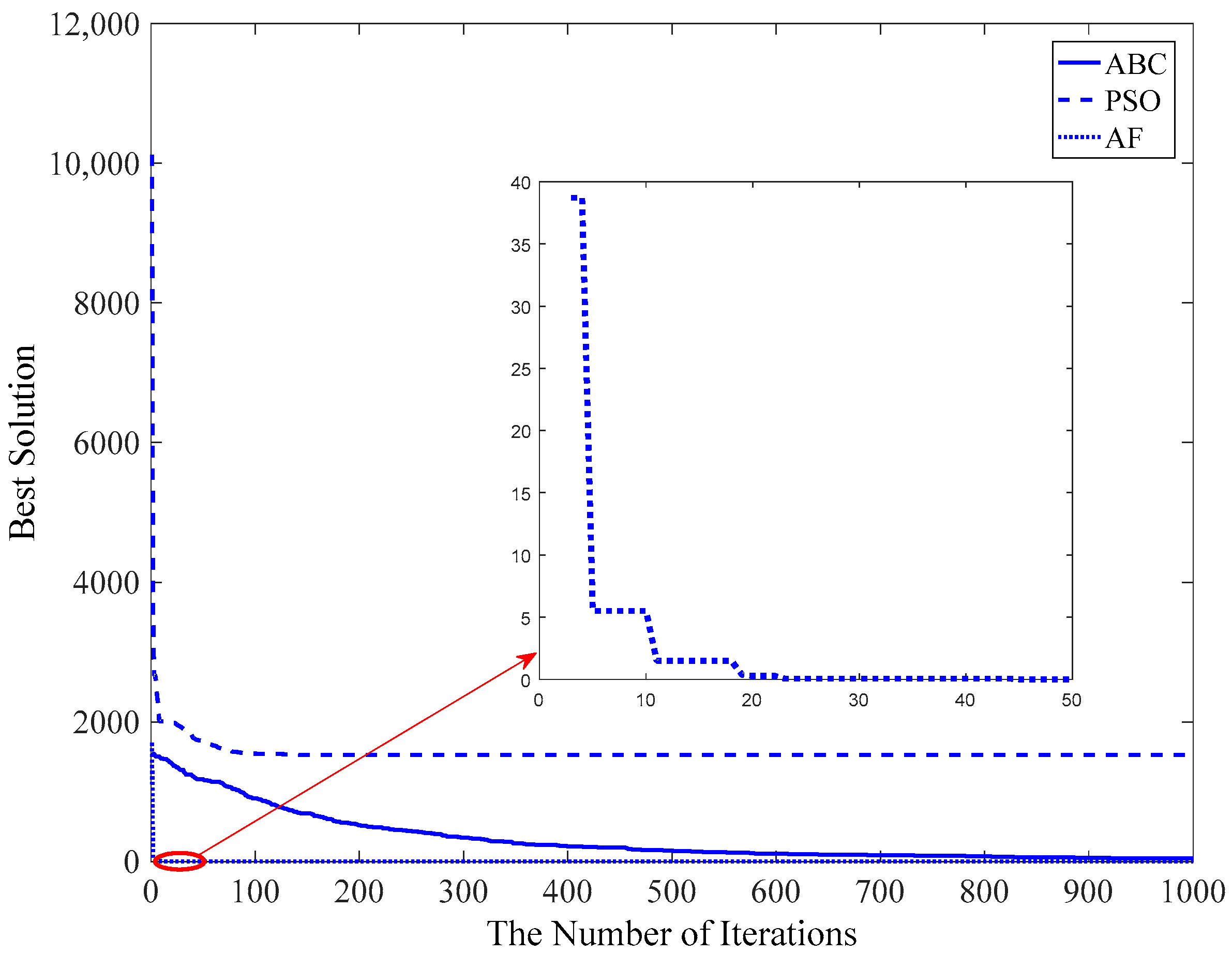

| Functions | Algorithm | Best | Mean | Worst | SD | Runtime |
|---|---|---|---|---|---|---|
| Sphere | PSO | 13,513.53237 | 29,913.32912 | 55,187.50413 | 9279.053897 | 0.515428 |
| | ABC | 6.73535 × 10^−8 | 3.56859 × 10^−7 | 1.17148 × 10^−6 | 2.37263 × 10^−7 | 3.548339 |
| | AF | 0 | 4.22551 × 10^−32 | 2.11276 × 10^−30 | 2.95786 × 10^−31 | 3.476036 |
| Rosenbrock | PSO | 9,137,632.795 | 53,765,803.92 | 313,258,238.9 | 49,387,420.59 | 0.707141 |
| | ABC | 0.409359085 | 13.87909385 | 49.83380808 | 9.973291581 | 4.094523 |
| | AF | 47.95457 | 48.50293 | 48.87977 | 0.246019 | 6.674920 |
| Rastrigin | PSO | 500.5355119 | 671.5528998 | 892.8727757 | 98.8516628 | 1.036802 |
| | ABC | 0.995796171 | 3.850881679 | 7.36921061 | 1.539235109 | 3.661335 |
| | AF | 0 | 0 | 0 | 0 | 3.753900 |
| Schwefel | PSO | −1.6819 × 10^127 | −5.9384 × 10^125 | −2.5216 × 10^5 | 2.7148 × 10^126 | 2.672258 |
| | ABC | −20,111.1655 | −19,720.51324 | −19,318.44458 | 183.7240198 | 3.433517 |
| | AF | −20,680.01223 | −23,796.53666 | −93,734.38905 | 16,356.3483 | 4.053411 |
| Griewank | PSO | 118.8833865 | 283.810608 | 524.5110849 | 101.3692096 | 1.609482 |
| | ABC | 1.63 × 10^−6 | 1.29 × 10^−3 | 3.30 × 10^−2 | 0.005265446 | 6.573567 |
| | AF | 0 | 0 | 0 | 0 | 3.564724 |
| Ackley | PSO | 20.169350 | 20.452818 | 21.151007 | 0.2030427 | 1.499010 |
| | ABC | 0.003030661 | 0.009076649 | 0.033812712 | 0.005609339 | 4.315255 |
| | AF | 8.88 × 10^−16 | 8.88 × 10^−16 | 8.88 × 10^−16 | 0 | 5.463588 |

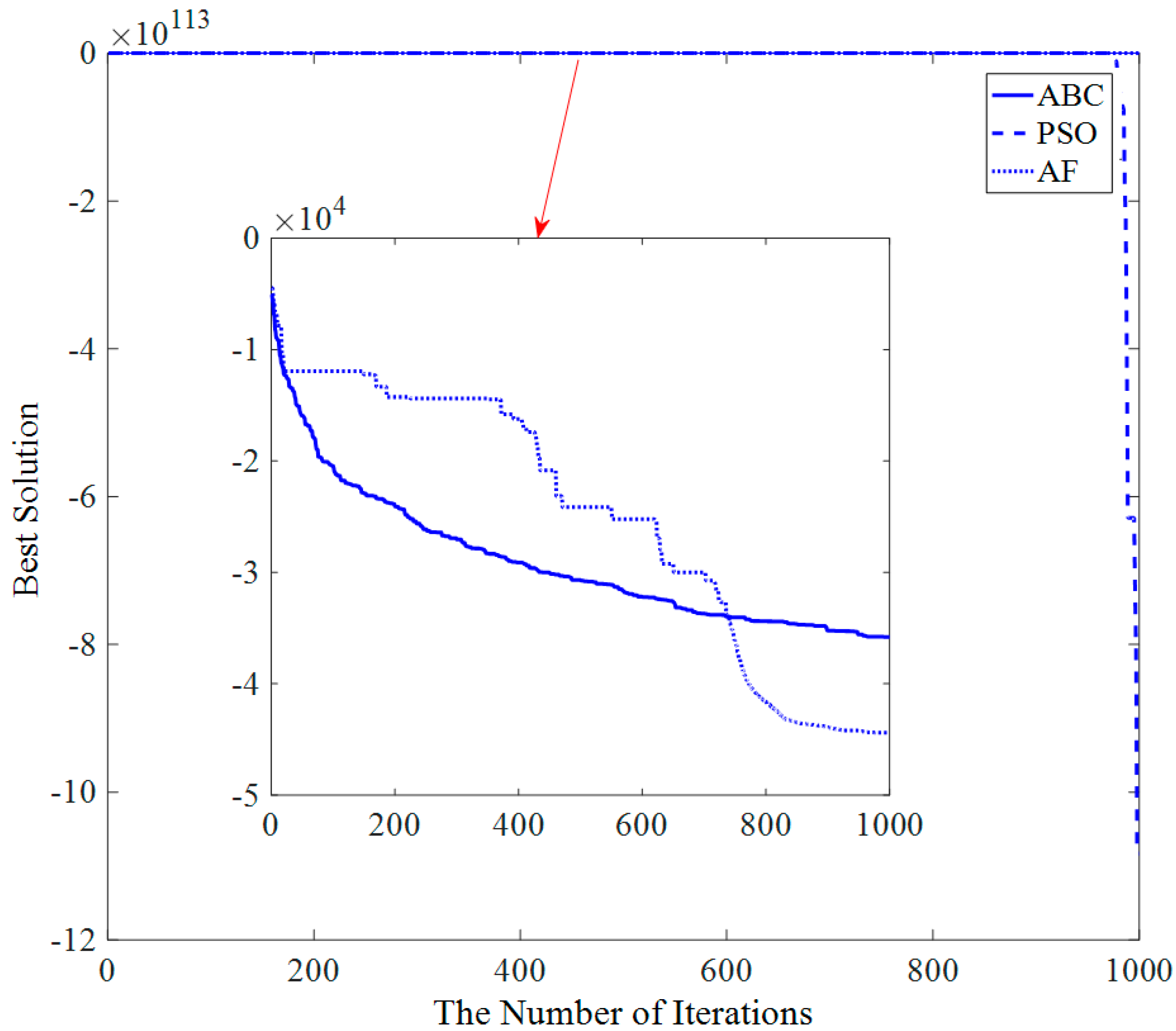

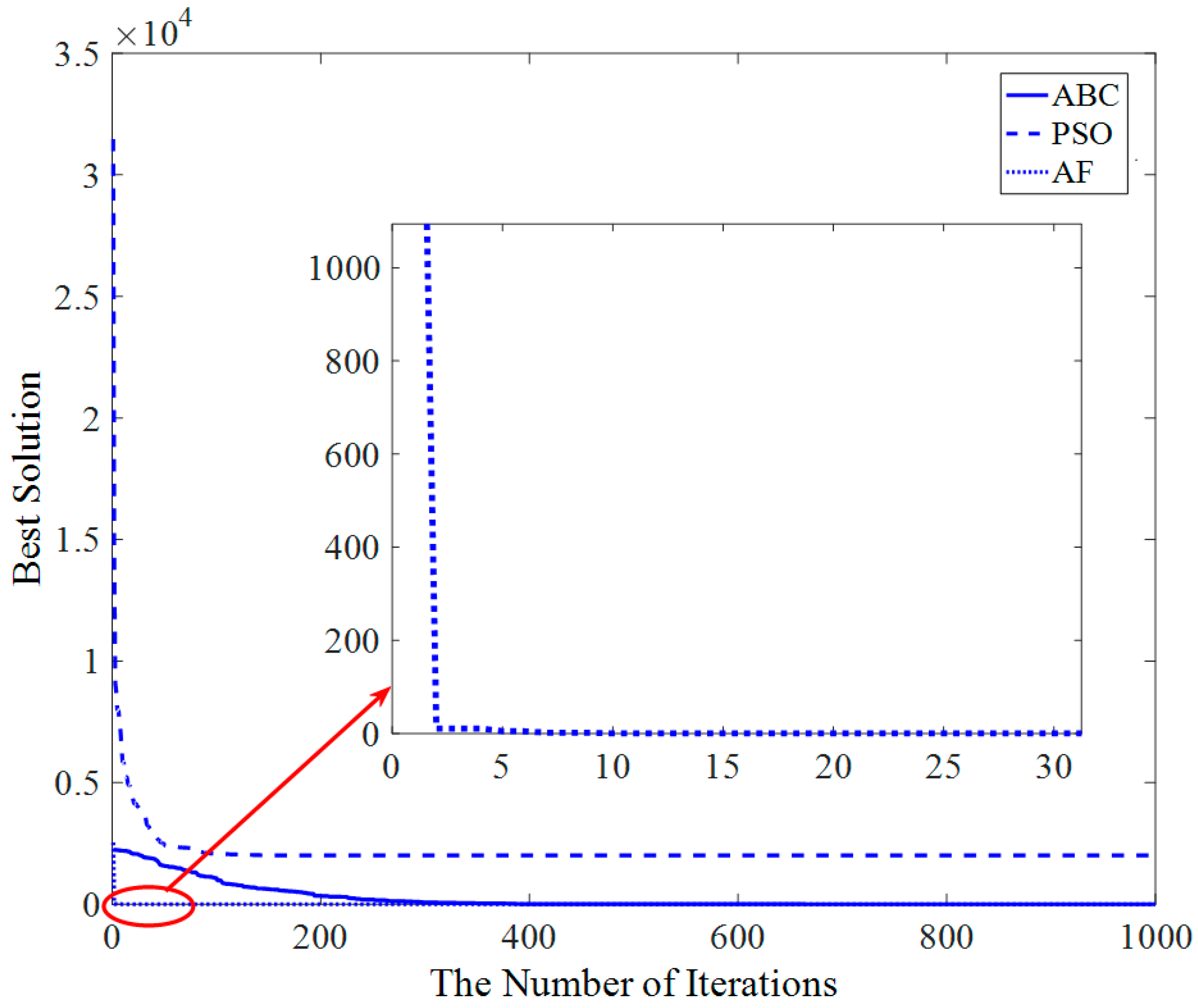

| Functions | Algorithm | Best | Mean | Worst | SD | Runtime |
|---|---|---|---|---|---|---|
| Sphere | PSO | 115,645.5342 | 195,135.2461 | 278,094.825 | 38,558.16575 | 0.711345 |
| | ABC | 0.000594137 | 0.001826666 | 0.004501827 | 0.000839266 | 3.461872 |
| | AF | 0 | 3.13781 × 10^−16 | 1.34675 × 10^−14 | 1.88902 × 10^−15 | 5.147278 |
| Rosenbrock | PSO | 335,003,051.6 | 886,456,293.7 | 2,124,907,403 | 386,634,404.3 | 1.053024 |
| | ABC | 87.31216327 | 482.9875993 | 3159.533172 | 660.8862246 | 4.249927 |
| | AF | 98.16326 | 98.75210 | 98.91893 | 0.143608 | 9.205695 |
| Rastrigin | PSO | 1400.13738 | 1788.428575 | 2237.676158 | 190.5442307 | 1.711449 |
| | ABC | 38.31898075 | 57.90108742 | 71.66147576 | 7.625052886 | 4.177761 |
| | AF | 0 | 3.55271 × 10^−17 | 1.77636 × 10^−15 | 2.4869 × 10^−16 | 5.526152 |
| Schwefel | PSO | −1.8278 × 10^130 | −3.6943 × 10^128 | −9.38464 × 10^85 | 2.5844 × 10^129 | 4.541757 |
| | ABC | −36,633.02634 | −35,865.45846 | −35,018.41908 | 428.4258428 | 3.776740 |
| | AF | −42,305.38762 | −43,259.38057 | −212,423.8294 | 42,713.19955 | 5.638101 |
| Griewank | PSO | 921.4736939 | 1750.684535 | 2954.013327 | 393.6416257 | 2.117832 |
| | ABC | 0.006642796 | 0.104038042 | 0.349646578 | 0.098645373 | 6.995976 |
| | AF | 0 | 1.71 × 10^−11 | 4.96314 × 10^−10 | 7.66992 × 10^−11 | 5.440354 |
| Ackley | PSO | 20.58328517 | 20.83783825 | 21.17117933 | 0.152682198 | 2.011021 |
| | ABC | 2.062017063 | 2.669238251 | 3.277291002 | 0.28375453 | 4.611272 |
| | AF | 8.88 × 10^−16 | 8.88 × 10^−16 | 8.88 × 10^−16 | 0 | 6.812734 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Cheng, L.; Wu, X.-h.; Wang, Y. Artificial Flora (AF) Optimization Algorithm. Appl. Sci. 2018, 8, 329. https://doi.org/10.3390/app8030329
