Field Navigation Using Fuzzy Elevation Maps Built with Local 3D Laser Scans

Abstract

1. Introduction

- A complete field navigation system developed on the onboard computer under the Robot Operating System (ROS) [27] is presented.

- 3D scans are acquired with a continuously rotating 2D laser scanner [28], using a local SLAM scheme while the vehicle is moving.

- The computation of fuzzy elevation maps (FEMs) and fuzzy reliability masks (FRMs) is sped up using least squares fuzzy modeling [29] and multithreaded execution of nodes across the processor cores.
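To make the idea of least squares fuzzy modeling concrete, the following is a minimal sketch rather than the method of [29] or the FEM formulation used on the robot, neither of which is detailed in this section. It assumes a one-dimensional elevation profile, triangular membership functions, and a zero-order Takagi-Sugeno model whose rule consequents are fitted by solving the normal equations. All function and variable names are hypothetical.

```python
"""Illustrative sketch only: zero-order Takagi-Sugeno fuzzy model of a 1D
elevation profile, with rule consequents fitted by linear least squares.
The 1D setting, triangular memberships and all names are assumptions."""


def memberships(x, centers):
    """Triangular membership degrees of x; centers must be sorted. Degrees sum to 1."""
    mu = [0.0] * len(centers)
    if x <= centers[0]:
        mu[0] = 1.0
    elif x >= centers[-1]:
        mu[-1] = 1.0
    else:
        for i in range(len(centers) - 1):
            lo, hi = centers[i], centers[i + 1]
            if lo <= x <= hi:
                t = (x - lo) / (hi - lo)
                mu[i], mu[i + 1] = 1.0 - t, t
                break
    return mu


def solve(a, b):
    """Solve the square system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_fuzzy_elevation(xs, zs, centers):
    """Find consequents c minimizing sum_k (z_k - sum_i mu_i(x_k) * c_i)^2.

    Builds the normal equations (A^T A) c = A^T z, where A[k][i] = mu_i(x_k).
    Every center needs nearby samples, otherwise the system is singular.
    """
    n = len(centers)
    ata = [[0.0] * n for _ in range(n)]
    atz = [0.0] * n
    for x, z in zip(xs, zs):
        mu = memberships(x, centers)
        for i in range(n):
            atz[i] += mu[i] * z
            for j in range(n):
                ata[i][j] += mu[i] * mu[j]
    return solve(ata, atz)


def predict(x, centers, consequents):
    """Model output: membership-weighted sum of the rule consequents."""
    return sum(m * c for m, c in zip(memberships(x, centers), consequents))


# Synthetic example: a constant-slope ramp sampled every 0.25 m (made-up data).
xs = [0.25 * k for k in range(17)]
zs = [0.5 * x + 1.0 for x in xs]
centers = [0.0, 1.0, 2.0, 3.0, 4.0]
consequents = fit_fuzzy_elevation(xs, zs, centers)
print(consequents, predict(2.5, centers, consequents))
```

Because the fit reduces to one small linear system, it needs no iterative training, which is one plausible reason such a scheme suits onboard map computation. A real elevation map would use two input coordinates and a correspondingly larger rule base.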

2. The Mobile Robot Andabata

3. Obtaining Leveled 3D Point Clouds

4. Filtering 3D Point Clouds

5. Building FEMs and FRMs

6. Multithreaded Computation of FEMs and FRMs

7. Field Navigation

7.1. Global Localization

7.2. Direction Selection

7.3. Computing Tread Speeds

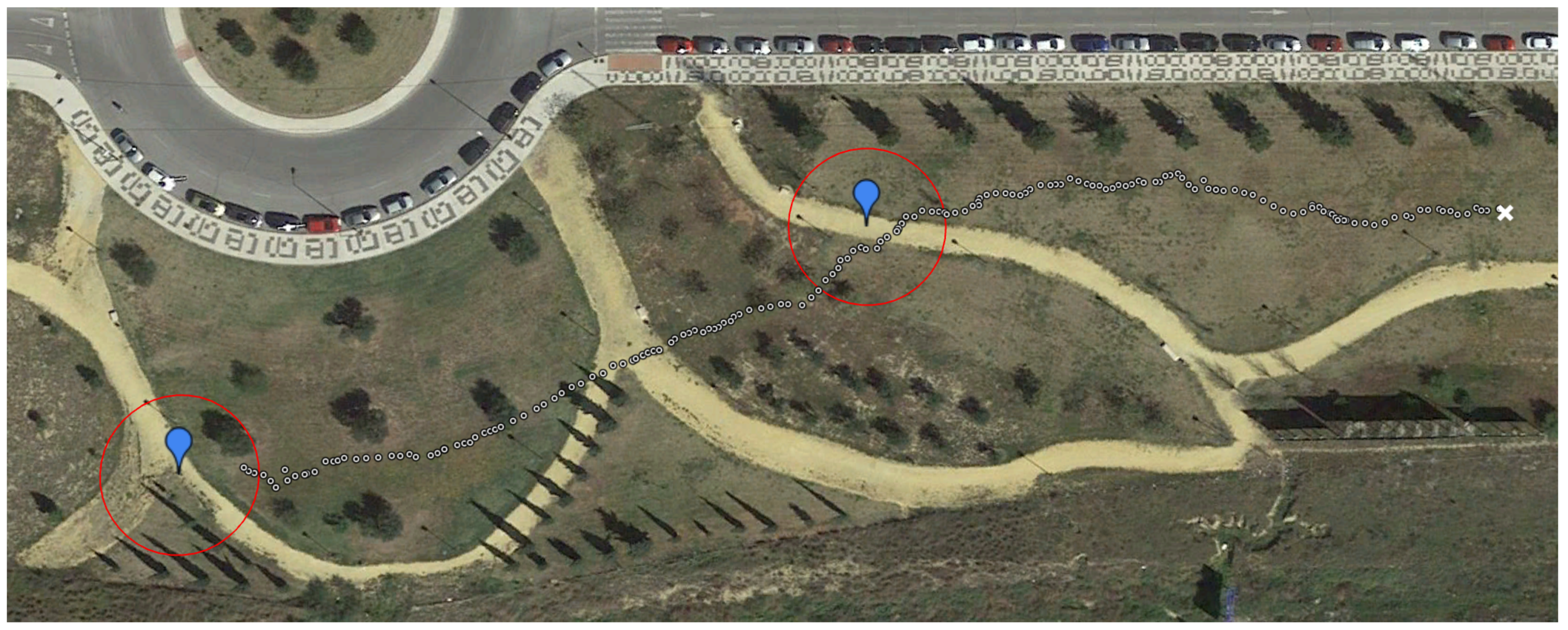

7.4. Experimental Results

8. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Sanfilippo, F.; Azpiazu, J.; Marafioti, G.; Transeth, A.A.; Stavdahl, Ø.; Liljebäck, P. Perception-driven obstacle-aided locomotion for snake robots: the state of the art, challenges and possibilities. Appl. Sci. 2017, 7, 336.

2. Larson, J.; Trivedi, M.; Bruch, M. Off-road terrain traversability analysis and hazard avoidance for UGVs. In Proceedings of the IEEE Intelligent Vehicles Symposium (IV), Baden-Baden, Germany, 6 February 2011; pp. 1–7.

3. Tong, C.H.; Barfoot, T.D.; Dupuis, E. Three-dimensional SLAM for mapping planetary work site environments. J. Field Robot. 2012, 29, 381–412.

4. Sinha, A.; Papadakis, P. Mind the gap: Detection and traversability analysis of terrain gaps using LIDAR for safe robot navigation. Robotica 2013, 31, 1085–1101.

5. Weiss, U.; Biber, P. Plant detection and mapping for agricultural robots using a 3D LIDAR sensor. Robot. Auton. Syst. 2011, 59, 265–273.

6. Chen, L.C.; Hoang, D.C.; Lin, H.I.; Nguyen, T.H. Innovative methodology for multi-view point cloud registration in robotic 3D object scanning and reconstruction. Appl. Sci. 2016, 6, 132.

7. Macher, H.; Landes, T.; Grussenmeyer, P. From Point Clouds to Building Information Models: 3D Semi-Automatic Reconstruction of Indoors of Existing Buildings. Appl. Sci. 2017, 7, 1030.

8. Lalonde, J.F.; Vandapel, N.; Huber, D.F.; Hebert, M. Natural terrain classification using three-dimensional ladar data for ground robot mobility. J. Field Robot. 2006, 23, 839–861.

9. Plaza-Leiva, V.; Gomez-Ruiz, J.A.; Mandow, A.; García-Cerezo, A. Voxel-Based Neighborhood for Spatial Shape Pattern Classification of Lidar Point Clouds with Supervised Learning. Sensors 2017, 17, 594.

10. Rekleitis, I.; Bedwani, J.L.; Dupuis, E.; Lamarche, T.; Allard, P. Autonomous over-the-horizon navigation using LIDAR data. Auton. Robots 2013, 34, 1–18.

11. Schadler, M.; Stückler, J.; Behnke, S. Rough terrain 3D mapping and navigation using a continuously rotating 2D laser scanner. Künstliche Intell. 2014, 28, 93–99.

12. Almqvist, H.; Magnusson, M.; Lilienthal, A. Improving point cloud accuracy obtained from a moving platform for consistent pile attack pose estimation. J. Intell. Robot. Syst. Theory Appl. 2014, 75, 101–128.

13. Zlot, R.; Bosse, M. Efficient large-scale three-dimensional mobile mapping for underground mines. J. Field Robot. 2014, 31, 731–752.

14. Brenneke, C.; Wulf, O.; Wagner, B. Using 3D Laser range data for SLAM in outdoor environments. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 27–31 October 2003; Volume 1, pp. 188–193.

15. Pfaff, P.; Triebel, R.; Burgard, W. An efficient extension to elevation maps for outdoor terrain mapping and loop closing. Int. J. Robot. Res. 2007, 26, 217–230.

16. Mandow, A.; Cantador, T.J.; García-Cerezo, A.; Reina, A.J.; Martínez, J.L.; Morales, J. Fuzzy modeling of natural terrain elevation from a 3D scanner point cloud. In Proceedings of the 7th IEEE International Symposium on Intelligent Signal Processing (WISP), Floriana, Malta, 19–21 September 2011; pp. 171–175.

17. Hou, J.F.; Chang, Y.Z.; Hsu, M.H.; Lee, S.T.; Wu, C.T. Construction of fuzzy map for autonomous mobile robots based on fuzzy confidence model. Math. Probl. Eng. 2014, 2014, 1–8.

18. Martínez, J.L.; Mandow, A.; Reina, A.J.; Cantador, T.J.; Morales, J.; García-Cerezo, A. Navigability analysis of natural terrains with fuzzy elevation maps from ground-based 3D range scans. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Tokyo, Japan, 3–7 November 2013; pp. 1576–1581.

19. Mandow, A.; Cantador, T.J.; Reina, A.J.; Martínez, J.L.; Morales, J.; García-Cerezo, A. Building Fuzzy Elevation Maps from a Ground-Based 3D Laser Scan for Outdoor Mobile Robots. In Advances in Intelligent Systems and Computing; Springer: Basel, Switzerland, 2016; Volume 417, pp. 29–41.

20. Leedy, B.M.; Putney, J.S.; Bauman, C.; Cacciola, S.; Webster, J.M.; Reinholtz, C.F. Virginia Tech’s twin contenders: A comparative study of reactive and deliberative navigation. J. Field Robot. 2006, 23, 709–727.

21. Morales, J.; Martínez, J.; Mandow, A.; García-Cerezo, A.; Pedraza, S. Power Consumption Modeling of Skid-Steer Tracked Mobile Robots on Rigid Terrain. IEEE Trans. Robot. 2009, 25, 1098–1108.

22. Silver, D.; Sofman, B.; Vandapel, N.; Bagnell, J.A.; Stentz, A. Experimental analysis of overhead data processing to support long range navigation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Beijing, China, 9–13 October 2006; pp. 2443–2450.

23. Howard, A.; Seraji, H.; Werger, B. Global and regional path planners for integrated planning and navigation. J. Field Robot. 2005, 22, 767–778.

24. Lacroix, S.; Mallet, A.; Bonnafous, D.; Bauzil, G.; Fleury, S.; Herrb, M.; Chatila, R. Autonomous rover navigation on unknown terrains: Functions and integration. Int. J. Robot. Res. 2002, 21, 917–942.

25. Seraji, H. SmartNav: A rule-free fuzzy approach to rover navigation. J. Field Robot. 2005, 22, 795–808.

26. Yi, Y.; Mengyin, F.; Xin, Y.; Guangming, X.; Gong, J. Autonomous Ground Vehicle Navigation Method in Complex Environment. In Proceedings of the IEEE Intelligent Vehicles Symposium, San Diego, CA, USA, 21–24 June 2010; pp. 1060–1065.

27. Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source robot operating system. In Proceedings of the IEEE International Conference on Robotics and Automation: Workshop on Open Source Software (ICRA), Kobe, Japan, 12–17 May 2009.

28. Martínez, J.L.; Morales, J.; Reina, A.J.; Mandow, A.; Pequeño-Boter, A.; García-Cerezo, A. Construction and calibration of a low-cost 3D laser scanner with 360° field of view for mobile robots. In Proceedings of the IEEE International Conference on Industrial Technology (ICIT), Seville, Spain, 17–19 March 2015; pp. 149–154.

29. García-Cerezo, A.; López-Baldán, M.J.; Mandow, A. An efficient Least Squares Fuzzy Modelling Method for Dynamic Systems. In Proceedings of the IMACS Multiconference on Computational Engineering in Systems applications (CESA), Symposium on Modelling, Analysis and Simulation, Lille, France, 9–12 July 1996; pp. 885–890.

30. Bovbel, P.; Andargie, F. Android-Based Mobile Robotics Platform, Mover-Bot. Available online: https://code.google.com/archive/p/mover-bot/ (accessed on 18 October 2017).

31. Rockey, C.; Furlan, A. ROS Android Sensors Driver. Available online: https://github.com/chadrockey/android_sensors_driver (accessed on 18 October 2017).

32. Mandow, A.; Martínez, J.L.; Morales, J.; Blanco, J.L.; García-Cerezo, A.; González, J. Experimental kinematics for wheeled skid-steer mobile robots. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Diego, CA, USA, 29 October–2 November 2007; pp. 1222–1227.

33. Moore, T.; Stouch, D. A Generalized Extended Kalman Filter Implementation for the Robot Operating System. In Proceedings of the 13th International Conference on Intelligent Autonomous Systems (IAS), Padua, Italy, 15–18 July 2014.

34. Reina, A.J.; Martínez, J.L.; Mandow, A.; Morales, J.; García-Cerezo, A. Collapsible Cubes: Removing Overhangs from 3D Point Clouds to Build Local Navigable Elevation Maps. In Proceedings of the IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Besancon, France, 8–11 July 2014; pp. 1012–1017.

35. Marder-Eppstein, E.; Pradeep, V. ROS Actionlib. Available online: https://github.com/ros/actionlib (accessed on 18 October 2017).

36. Google. Google Earth. Available online: https://www.google.com/earth/ (accessed on 18 October 2017).

| 3D Point Cloud | Map Nodes | Forced Spacing | Map Age (s) | Std. Dev. of Map Age (s) | Map Interval (s) | Std. Dev. of Map Interval (s) |
|---|---|---|---|---|---|---|
| previous | 1 | 0 | 14.20 | 1.31 | 12.56 | 0.48 |
| previous | 2 | 0 | 18.21 | 1.90 | 8.27 | 5.76 |
| previous | 2 | 1 | 15.30 | 1.08 | 7.45 | 1.37 |
| previous | 2 | 2 | 15.73 | 1.62 | 11.37 | 0.50 |
| previous | 3 | 0 | 27.34 | 3.41 | 6.60 | 6.09 |
| previous | 3 | 1 | 20.21 | 0.45 | 7.55 | 0.77 |
| previous | 3 | 2 | 23.95 | 2.11 | 11.13 | 2.30 |
| current | 1 | 0 | 13.81 | 0.68 | 14.91 | 0.98 |
| current | 2 | 0 | 14.83 | 1.79 | 8.36 | 4.50 |
| current | 2 | 1 | 15.21 | 1.33 | 8.13 | 2.20 |
| current | 2 | 2 | 12.06 | 1.58 | 11.70 | 2.40 |
| current | 3 | 0 | 28.44 | 4.16 | 6.88 | 2.65 |
| current | 3 | 1 | 20.89 | 1.24 | 7.70 | 0.98 |
| current | 3 | 2 | 23.18 | 0.72 | 11.35 | 0.59 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Martínez, J.L.; Morán, M.; Morales, J.; Reina, A.J.; Zafra, M. Field Navigation Using Fuzzy Elevation Maps Built with Local 3D Laser Scans. Appl. Sci. 2018, 8, 397. https://doi.org/10.3390/app8030397
