Hierarchical Guided-Image-Filtering for Efficient Stereo Matching

Abstract

Featured Application


1. Introduction

- (1) We propose an aggregation approach that efficiently combines the model parameters of PGIF [25] computed at different resolutions, so that features of the image pair at multiple scales are taken into account;

- (2) Unlike the cost-volume-based multiscale approaches in the current literature, the scheme aggregates filter model parameters, which keeps the computation efficient while achieving superior accuracy;

- (3) The proposed scheme outperforms most state-of-the-art algorithms in disparity accuracy, even without a refinement step.

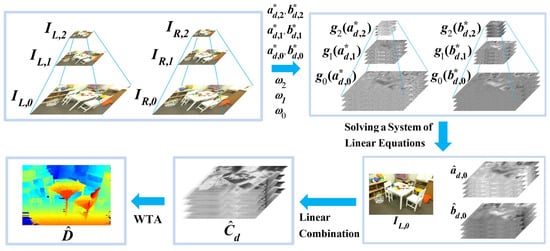

2. Proposed Method

2.1. Cost Aggregation Based on Pervasive Guided-Image-Filtering (PGIF)
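For orientation, the standard gray-scale guided image filter of He et al. [20], which guided-filter cost aggregation methods such as PGIF [25] build on, fits a linear model q = a·I + b in every window of radius r, with a = cov(I, p) / (var(I) + eps) and b = mean(p) - a·mean(I), and then averages a and b over all windows covering each pixel. The Python sketch below applies that standard filter to each disparity slice of a cost volume and selects disparities by winner-take-all. It is a minimal illustration under stated assumptions, not the PGIF formulation of [25]: the gray-scale guide, the helper names (`box_mean`, `guided_filter`, `aggregate_and_select`), and the parameter values are hypothetical.

```python
from typing import List

# Hypothetical helpers: the standard guided image filter of He et al., not PGIF.
Image = List[List[float]]


def box_mean(img: Image, r: int) -> Image:
    """Mean over a (2r+1)x(2r+1) window, clipped at the borders, via an integral image."""
    h, w = len(img), len(img[0])
    # integral[y][x] holds the sum of img over rows < y and columns < x.
    integral = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            integral[y + 1][x + 1] = integral[y][x + 1] + row_sum
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        y0, y1 = max(0, y - r), min(h, y + r + 1)
        for x in range(w):
            x0, x1 = max(0, x - r), min(w, x + r + 1)
            total = (integral[y1][x1] - integral[y0][x1]
                     - integral[y1][x0] + integral[y0][x0])
            out[y][x] = total / ((y1 - y0) * (x1 - x0))
    return out


def multiply(a: Image, b: Image) -> Image:
    """Element-wise product of two equally sized images."""
    return [[u * v for u, v in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]


def guided_filter(guide: Image, p: Image, r: int, eps: float) -> Image:
    """Gray-scale guided image filter: q = mean(a) * I + mean(b)."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(p, r)
    corr_ii = box_mean(multiply(guide, guide), r)
    corr_ip = box_mean(multiply(guide, p), r)
    h, w = len(guide), len(guide[0])
    a = [[0.0] * w for _ in range(h)]
    b = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            var_i = corr_ii[y][x] - mean_i[y][x] ** 2
            cov_ip = corr_ip[y][x] - mean_i[y][x] * mean_p[y][x]
            # Linear model p ~ a * I + b fitted per window; eps regularises a.
            a[y][x] = cov_ip / (var_i + eps)
            b[y][x] = mean_p[y][x] - a[y][x] * mean_i[y][x]
    # Average the coefficients of all windows that cover each pixel.
    mean_a = box_mean(a, r)
    mean_b = box_mean(b, r)
    return [[mean_a[y][x] * guide[y][x] + mean_b[y][x] for x in range(w)]
            for y in range(h)]


def aggregate_and_select(guide: Image, cost_volume: List[Image],
                         r: int, eps: float) -> List[List[int]]:
    """Filter every disparity slice, then keep the lowest-cost disparity per pixel."""
    filtered = [guided_filter(guide, cost_slice, r, eps) for cost_slice in cost_volume]
    h, w = len(guide), len(guide[0])
    return [[min(range(len(filtered)), key=lambda d: filtered[d][y][x]) for x in range(w)]
            for y in range(h)]


if __name__ == "__main__":
    guide = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]
    # Hypothetical 3-slice cost volume in which disparity 2 is cheapest everywhere.
    costs = [[[c] * 4 for _ in range(4)] for c in (0.9, 0.5, 0.1)]
    print(aggregate_and_select(guide, costs, r=1, eps=1e-2))
```

The integral image keeps each box mean independent of the window radius, which is the property that makes guided-filter aggregation attractive for large cost volumes.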

2.2. Stereo Matching Based on Hierarchical Guided-Image-Filtering (HGIF)
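The contributions listed in the Introduction describe HGIF as aggregating PGIF model parameters across resolutions rather than aggregating cost volumes. A hypothetical two-level version of that idea is sketched below in Python: given linear-model coefficient maps (a, b) at full and half resolution, the coarse maps are upsampled by nearest neighbour, blended with the fine maps using a fixed weight, and the filtered slice is formed as q = a·I + b. The blending rule, the fixed `weight`, the nearest-neighbour upsampling, and the helper names are assumptions for illustration, not the weighting used by the authors.

```python
from typing import List

# Hypothetical two-level parameter fusion; not the authors' exact HGIF weighting.
Grid = List[List[float]]


def upsample_nearest(coarse: Grid, h: int, w: int) -> Grid:
    """Nearest-neighbour upsampling of a half-resolution map to h x w."""
    ch, cw = len(coarse), len(coarse[0])
    return [[coarse[min(y // 2, ch - 1)][min(x // 2, cw - 1)] for x in range(w)]
            for y in range(h)]


def fuse_levels(fine: Grid, coarse: Grid, weight: float) -> Grid:
    """Blend a fine-level coefficient map with the upsampled coarse-level map.

    `weight` is a hypothetical fixed share in [0, 1] given to the coarse level.
    """
    h, w = len(fine), len(fine[0])
    up = upsample_nearest(coarse, h, w)
    return [[(1.0 - weight) * fine[y][x] + weight * up[y][x] for x in range(w)]
            for y in range(h)]


def hierarchical_slice(guide: Grid, a_fine: Grid, b_fine: Grid,
                       a_coarse: Grid, b_coarse: Grid, weight: float) -> Grid:
    """Filtered cost slice q = a * I + b built from fused two-level coefficients."""
    a = fuse_levels(a_fine, a_coarse, weight)
    b = fuse_levels(b_fine, b_coarse, weight)
    h, w = len(guide), len(guide[0])
    return [[a[y][x] * guide[y][x] + b[y][x] for x in range(w)] for y in range(h)]


if __name__ == "__main__":
    guide = [[float(x) for x in range(4)] for _ in range(4)]
    ones = [[1.0] * 4 for _ in range(4)]
    zeros = [[0.0] * 4 for _ in range(4)]
    # Hypothetical coarse-level coefficients at half resolution (2 x 2).
    a_coarse = [[0.0, 0.0], [0.0, 0.0]]
    b_coarse = [[1.0, 2.0], [3.0, 4.0]]
    print(hierarchical_slice(guide, ones, zeros, a_coarse, b_coarse, weight=0.5))
```

Because only the (a, b) maps are fused, the extra cost per disparity slice is one blend per coefficient map, rather than filtering and merging a full cost volume at every scale.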

3. Experimental Results

- The fast cost volume filtering scheme of Reference [19], denoted as FCVF;

- The pervasive guided-image-filter scheme of Reference [25], denoted as PGIF;

- The deep self-guided cost aggregation scheme of Reference [8], denoted as DSG;

- The sparse representation over discriminative dictionary scheme of Reference [13], denoted as SRDD;

- The proposed scheme, which implements a hierarchical guided-image-filter, denoted as HGIF.

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- [1] Scharstein, D.; Szeliski, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

- [2] Zbontar, J.; LeCun, Y. Stereo matching by training a convolutional neural network to compare image patches. J. Mach. Learn. Res. 2016, 17, 1–32.

- [3] Nahar, S.; Joshi, M.V. A learned sparseness and IGMRF-based regularization framework for dense disparity estimation using unsupervised feature learning. IPSJ Trans. Comput. Vis. Appl. 2017, 9, 1–15.

- [4] Mayer, N.; Ilg, E.; Hausser, P.; Fischer, P.; Cremers, D.; Dosovitskiy, A.; Brox, T. A large dataset to train convolutional networks for disparity, optical flow, and scene flow estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4040–4048.

- [5] Pang, J.; Sun, W.; Ren, J.S.; Yang, C.; Yan, Q. Cascade residual learning: A two-stage convolutional neural network for stereo matching. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 878–886.

- [6] Kendall, A.; Martirosyan, H.; Dasgupta, S.; Henry, P.; Kennedy, R.; Bachrach, A.; Bry, A. End-to-end learning of geometry and context for deep stereo regression. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 66–75.

- [7] Chang, J.R.; Chen, Y.S. Pyramid stereo matching network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5410–5418.

- [8] Park, I.K. Deep self-guided cost aggregation for stereo matching. Pattern Recognit. Lett. 2018, 112, 168–175.

- [9] Pan, C.; Liu, Y.; Huang, D. Novel belief propagation algorithm for stereo matching with a robust cost computation. IEEE Access 2019, 7, 29699–29708.

- [10] Yao, P.; Zhang, H.; Xue, Y.; Chen, S. As-global-as-possible stereo matching with adaptive smoothness prior. IET Image Process. 2019, 13, 98–107.

- [11] Gupta, R.K.; Cho, S.Y. Stereo correspondence using efficient hierarchical belief propagation. Neural Comput. Appl. 2012, 21, 1585–1592.

- [12] Hu, T.; Qi, B.; Wu, T.; Xu, X.; He, H. Stereo matching using weighted dynamic programming on a single-direction four-connected tree. Comput. Vis. Image Underst. 2012, 116, 908–921.

- [13] Yin, J.; Zhu, H.; Yuan, D.; Xue, T. Sparse representation over discriminative dictionary for stereo matching. Pattern Recognit. 2017, 71, 278–289.

- [14] Yoon, K.J.; Kweon, I.S. Adaptive support-weight approach for correspondence search. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 650–656.

- [15] Yang, Q. Stereo matching using tree filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 834–846.

- [16] Pham, C.C.; Jeon, J.W. Domain transformation-based efficient cost aggregation for local stereo matching. IEEE Trans. Circuits Syst. Video Technol. 2013, 23, 1119–1130.

- [17] Çığla, C.; Alatan, A.A. An efficient recursive edge-aware filter. Signal Process. Image Commun. 2014, 29, 998–1014.

- [18] Yang, Q.; Li, D.; Wang, L.; Zhang, M. Full-image guided filtering for fast stereo matching. IEEE Signal Process. Lett. 2013, 20, 237–240.

- [19] Hosni, A.; Rhemann, C.; Bleyer, M.; Rother, C.; Gelautz, M. Fast cost-volume filtering for visual correspondence and beyond. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 504–511.

- [20] He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- [21] Li, Z.; Zheng, J.; Zhu, Z.; Yao, W.; Wu, S. Weighted guided image filtering. IEEE Trans. Image Process. 2014, 24, 120–129.

- [22] Kou, F.; Chen, W.; Wen, C.; Li, Z. Gradient domain guided image filtering. IEEE Trans. Image Process. 2015, 24, 4528–4539.

- [23] Hong, G.S.; Kim, B.G. A local stereo matching algorithm based on weighted guided-Image-filtering for improving the generation of depth range images. Displays 2017, 49, 80–87.

- [24] Zhu, S.; Yan, L. Local stereo matching algorithm with efficient matching cost and adaptive guided image filter. Vis. Comput. 2017, 33, 1087–1102.

- [25] Zhu, C.; Chang, Y.Z. Efficient stereo matching based on pervasive guided image filtering. Math. Probl. Eng. 2019, 2019, 1–11.

- [26] Hu, W.; Zhang, K.; Sun, L.; Yang, S. Comparisons reducing for local stereo matching using hierarchical structure. In Proceedings of the IEEE International Conference on Multimedia and Expo, San Jose, CA, USA, 15–19 July 2013; pp. 1–6.

- [27] Tang, L.; Garvin, M.K.; Lee, K.; Alward, W.L.M.; Kwon, Y.H.; Abràmoff, M.D. Robust multiscale stereo matching from fundus images with radiometric differences. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2245–2258.

- [28] Li, R.; Ham, B.; Oh, C.; Sohn, K. Disparity search range estimation based on dense stereo matching. In Proceedings of the IEEE Conference on Industrial Electronics and Applications, Melbourne, Australia, 19–21 June 2013; pp. 753–759.

- [29] Zhang, K.; Fang, Y.; Min, D.; Sun, L.; Yang, S.; Yan, S.; Tian, Q. Cross-scale cost aggregation for stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1590–1597.

- [30] Menze, M.; Geiger, A. Object scene flow for autonomous vehicles. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 1–10.

- [31] Scharstein, D.; Hirschmüller, H.; Kitajima, Y.; Krathwohl, G.; Nesic, N.; Wang, X.; Westling, P. High-resolution stereo datasets with subpixel-accurate ground truth. In Proceedings of the German Conference on Pattern Recognition (GCPR 2014), Münster, Germany, 12–15 September 2014; pp. 1–12.

| Image Sets | FCVF | CS-FCVF | PGIF | CS-PGIF | DSG | SRDD | Proposed |
|---|---|---|---|---|---|---|---|
| Adirondack | 8.78 | 7.93 | 6.43 | 6.30 | 8.98 | 6.53 | 5.73 |
| ArtL | 12.22 | 12.20 | 10.90 | 10.78 | 13.79 | 13.17 | 10.85 |
| Jadeplant | 21.81 | 22.85 | 20.03 | 20.43 | 21.22 | 18.98 | 19.67 |
| Motorcycle | 9.87 | 9.58 | 9.01 | 8.88 | 8.66 | 9.02 | 8.45 |
| MotorcycleE | 9.72 | 9.27 | 8.93 | 8.60 | 7.78 | 7.69 | 8.47 |
| Piano | 16.20 | 14.65 | 14.01 | 13.53 | 17.55 | 14.88 | 14.02 |
| PianoL | 33.44 | 33.10 | 30.25 | 30.61 | 31.41 | 26.65 | 29.32 |
| Pipes | 10.45 | 10.87 | 9.96 | 10.49 | 12.38 | 11.67 | 9.85 |
| Playroom | 22.68 | 19.41 | 16.62 | 15.63 | 23.98 | 16.25 | 15.18 |
| Playtable | 41.89 | 20.04 | 39.29 | 20.77 | 36.67 | 18.34 | 16.71 |
| PlaytableP | 13.81 | 12.10 | 13.08 | 11.25 | 19.91 | 10.55 | 10.98 |
| Recycle | 10.52 | 10.75 | 8.38 | 9.16 | 11.44 | 9.18 | 8.28 |
| Shelves | 39.52 | 34.52 | 36.11 | 31.92 | 41.13 | 37.88 | 31.63 |
| Teddy | 7.18 | 6.60 | 6.33 | 5.27 | 8.24 | 6.26 | 5.57 |
| Vintage | 33.22 | 29.73 | 30.05 | 29.83 | 33.53 | 27.30 | 26.97 |
| Weighted Average | 16.47 | 14.82 | 14.66 | 13.53 | 17.06 | 13.69 | 12.94 |
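The weighted averages summarise the table above, but a per-image view shows where each method leads. The short Python script below uses only the values copied from that table and, as the comparison implies, assumes lower values are better; it counts on how many image sets each method attains the lowest value. The names `METHODS`, `ERRORS`, and `best_counts` are illustrative.

```python
from typing import Dict, List

# Column order of the table above; lower values are assumed to be better.
METHODS: List[str] = ["FCVF", "CS-FCVF", "PGIF", "CS-PGIF", "DSG", "SRDD", "Proposed"]

# Per-image values copied from the table above (weighted average row excluded).
ERRORS: Dict[str, List[float]] = {
    "Adirondack": [8.78, 7.93, 6.43, 6.30, 8.98, 6.53, 5.73],
    "ArtL": [12.22, 12.20, 10.90, 10.78, 13.79, 13.17, 10.85],
    "Jadeplant": [21.81, 22.85, 20.03, 20.43, 21.22, 18.98, 19.67],
    "Motorcycle": [9.87, 9.58, 9.01, 8.88, 8.66, 9.02, 8.45],
    "MotorcycleE": [9.72, 9.27, 8.93, 8.60, 7.78, 7.69, 8.47],
    "Piano": [16.20, 14.65, 14.01, 13.53, 17.55, 14.88, 14.02],
    "PianoL": [33.44, 33.10, 30.25, 30.61, 31.41, 26.65, 29.32],
    "Pipes": [10.45, 10.87, 9.96, 10.49, 12.38, 11.67, 9.85],
    "Playroom": [22.68, 19.41, 16.62, 15.63, 23.98, 16.25, 15.18],
    "Playtable": [41.89, 20.04, 39.29, 20.77, 36.67, 18.34, 16.71],
    "PlaytableP": [13.81, 12.10, 13.08, 11.25, 19.91, 10.55, 10.98],
    "Recycle": [10.52, 10.75, 8.38, 9.16, 11.44, 9.18, 8.28],
    "Shelves": [39.52, 34.52, 36.11, 31.92, 41.13, 37.88, 31.63],
    "Teddy": [7.18, 6.60, 6.33, 5.27, 8.24, 6.26, 5.57],
    "Vintage": [33.22, 29.73, 30.05, 29.83, 33.53, 27.30, 26.97],
}


def best_counts(errors: Dict[str, List[float]]) -> Dict[str, int]:
    """Count, per method, the image sets on which it has the lowest value."""
    counts = {method: 0 for method in METHODS}
    for values in errors.values():
        best_index = min(range(len(values)), key=values.__getitem__)
        counts[METHODS[best_index]] += 1
    return counts


if __name__ == "__main__":
    print(best_counts(ERRORS))
```

With these values the script reports 8 image sets for the proposed scheme, 4 for SRDD, 3 for CS-PGIF, and none for the other methods.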

| Image Sets | FCVF | CS-FCVF | PGIF | CS-PGIF | DSG | SRDD | Proposed |
|---|---|---|---|---|---|---|---|
| Adirondack | 10.09 | 9.64 | 8.39 | 8.82 | 15.58 | 8.77 | 7.96 |
| ArtL | 21.90 | 22.02 | 21.64 | 21.23 | 30.79 | 22.86 | 21.17 |
| Jadeplant | 34.51 | 35.87 | 33.45 | 33.89 | 38.02 | 32.47 | 33.00 |
| Motorcycle | 13.50 | 13.34 | 13.23 | 13.18 | 17.52 | 13.54 | 12.62 |
| MotorcycleE | 13.68 | 13.13 | 13.25 | 13.17 | 16.68 | 11.96 | 12.92 |
| Piano | 19.99 | 18.61 | 18.29 | 17.74 | 23.48 | 19.30 | 18.11 |
| PianoL | 36.43 | 36.14 | 33.91 | 34.13 | 36.15 | 30.49 | 33.09 |
| Pipes | 21.45 | 22.01 | 21.52 | 22.10 | 26.28 | 22.88 | 21.49 |
| Playroom | 30.61 | 27.88 | 25.38 | 24.32 | 33.93 | 25.11 | 23.79 |
| Playtable | 44.65 | 24.39 | 42.63 | 25.57 | 42.70 | 23.29 | 21.23 |
| PlaytableP | 17.29 | 15.00 | 17.55 | 15.43 | 27.74 | 14.39 | 14.28 |
| Recycle | 12.30 | 12.58 | 10.50 | 11.59 | 17.50 | 11.51 | 10.33 |
| Shelves | 40.14 | 35.43 | 36.89 | 32.91 | 44.43 | 39.15 | 32.64 |
| Teddy | 12.59 | 12.14 | 11.94 | 10.96 | 17.48 | 11.75 | 11.19 |
| Vintage | 37.18 | 33.86 | 34.01 | 33.67 | 38.47 | 31.51 | 30.94 |
| Weighted Average | 21.74 | 20.26 | 20.49 | 19.47 | 26.31 | 19.54 | 18.71 |
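The "Weighted Average" rows of the two results tables can also be compared as relative reductions, (baseline - proposed) / baseline. The minimal Python sketch below uses only the values reported in those rows and assumes, as the comparison implies, that lower values are better; `relative_reduction` and `reductions` are illustrative helper names.

```python
from typing import Dict, List

METHODS: List[str] = ["FCVF", "CS-FCVF", "PGIF", "CS-PGIF", "DSG", "SRDD", "Proposed"]

# "Weighted Average" rows copied from the two results tables.
WEIGHTED_AVERAGES: Dict[str, List[float]] = {
    "first table": [16.47, 14.82, 14.66, 13.53, 17.06, 13.69, 12.94],
    "second table": [21.74, 20.26, 20.49, 19.47, 26.31, 19.54, 18.71],
}


def relative_reduction(baseline: float, proposed: float) -> float:
    """Fraction by which the proposed value is lower than a baseline value."""
    return (baseline - proposed) / baseline


def reductions(row: List[float]) -> Dict[str, float]:
    """Relative reduction of the last column (Proposed) against every other method."""
    proposed = row[-1]
    return {method: relative_reduction(value, proposed)
            for method, value in zip(METHODS[:-1], row[:-1])}


if __name__ == "__main__":
    for table, row in WEIGHTED_AVERAGES.items():
        for method, value in reductions(row).items():
            print(f"{table}: {100 * value:.1f}% lower than {method}")
```

For example, the proposed weighted average is about 11.7% lower than PGIF in the first table and about 8.7% lower in the second, while the margin over CS-PGIF is about 4.4% and 3.9%, respectively.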

| Methods | Adirondack | Playroom | Playtable | Shelves |
|---|---|---|---|---|
| FCVF | 15 | 16 | 14 | 15 |
| CS-FCVF | 20 | 21 | 18 | 18 |
| PGIF | 23 | 24 | 22 | 22 |
| CS-PGIF | 28 | 29 | 26 | 26 |
| Proposed | 31 | 31 | 29 | 29 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhu, C.; Chang, Y.-Z. Hierarchical Guided-Image-Filtering for Efficient Stereo Matching. Appl. Sci. 2019, 9, 3122. https://doi.org/10.3390/app9153122
