Pitch and Plasticity: Insights from the Pitch Matching of Chords by Musicians with Absolute and Relative Pitch

Abstract

1. Introduction

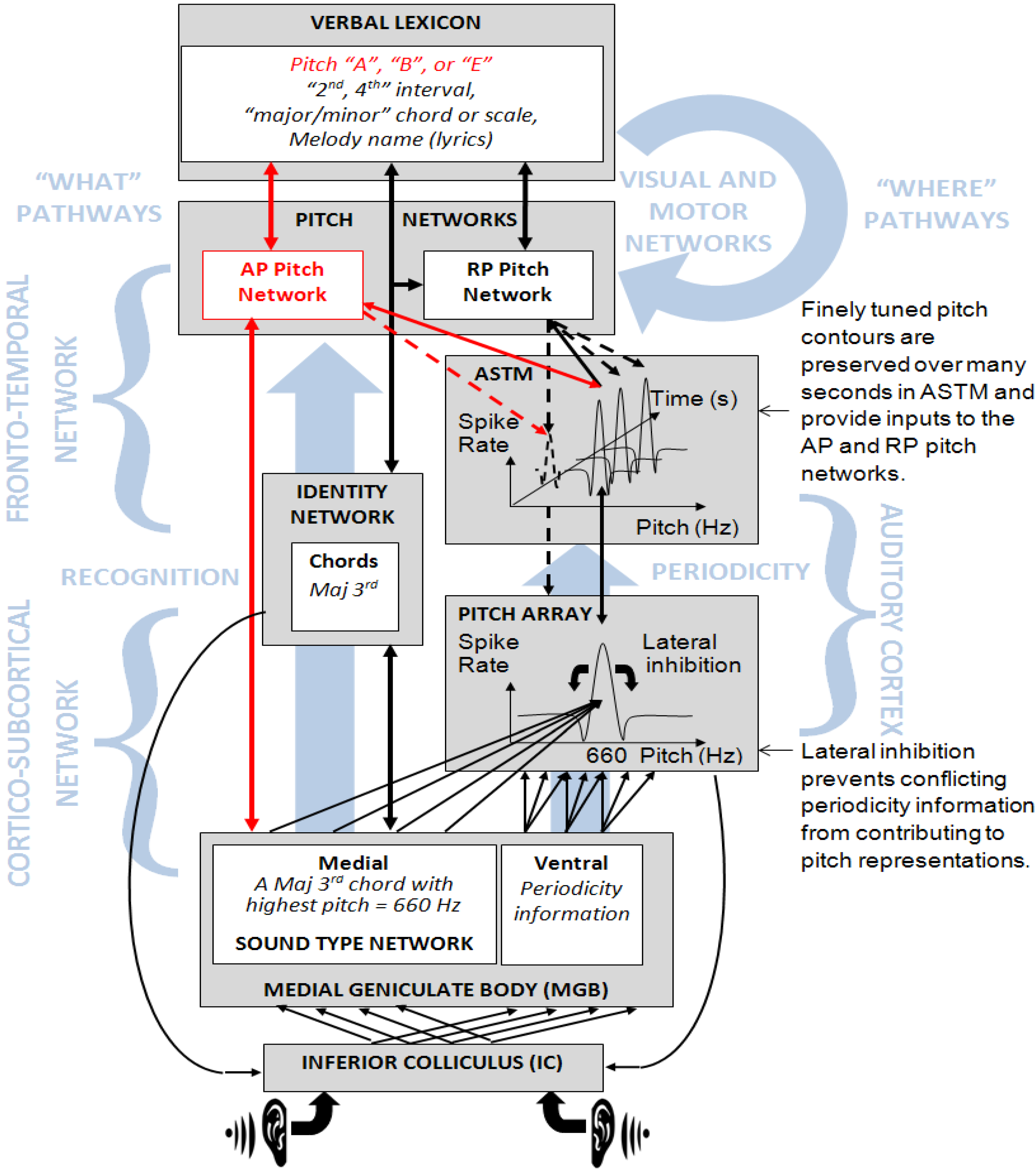

1.1. The Evolution of Pitch Models

1.2. Concurrent Pitch Processing

1.3. Models of Absolute Pitch

1.4. The Present Research

2. Experimental Section

2.1. Participants

| Musician Group | N | Pitch Naming Performance (out of 50) | Mean Years of Music Training (SD) |
|---|---|---|---|
| RP | 12 | 0–7 | 19.04 (4.9) |
| QAP | 12 | 10–35 | 18.0 (9.7) |
| AP | 9 | 40–50 | 21.2 (8.2) |

2.2. Materials

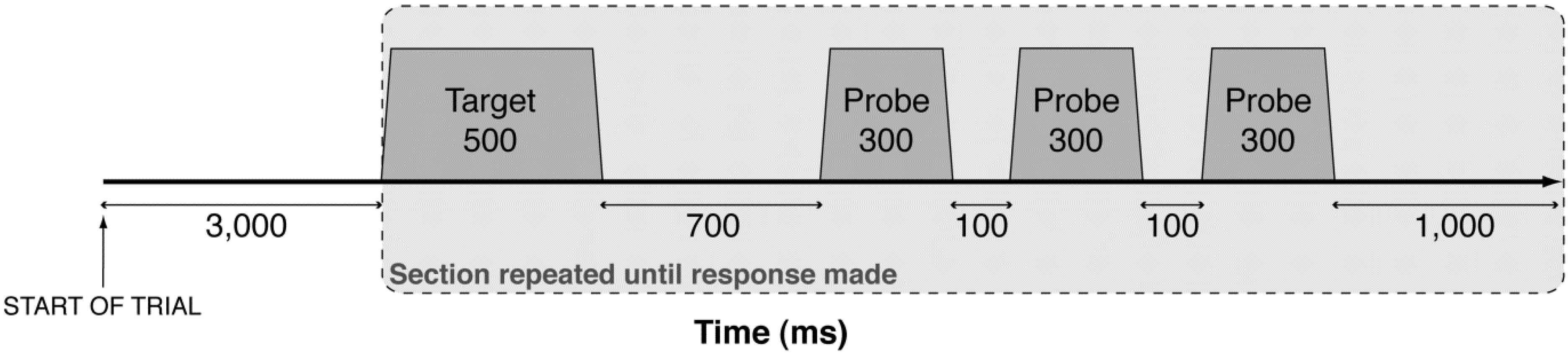

2.3. Procedure

| Interval (Semitones) | Frequency Difference (%) | Chord Name |
|---|---|---|
| 2 | 12.2 | major 2nd |
| 3 | 18.9 | minor 3rd |
| 4 | 26.0 | major 3rd |
| 6 | 41.4 | tritone |
| 7 | 49.8 | perfect 5th |
| 8 | 58.7 | minor 6th |
| 2 and 7 | 12.2 and 49.8 | suspended 2nd triad |
| 3 and 6 | 18.9 and 41.4 | diminished 5th triad |
| 3 and 7 | 18.9 and 49.8 | minor triad |
| 4 and 6 | 26.0 and 41.4 | flattened 5th triad |
| 4 and 7 | 26.0 and 49.8 | major triad |
| 4 and 8 | 26.0 and 58.7 | augmented 5th triad |
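The frequency differences tabulated above are consistent with 12-tone equal temperament, in which each semitone multiplies frequency by 2^(1/12). The following is a minimal sketch of that arithmetic, not the authors' own procedure; the function name `frequency_difference_percent` is hypothetical, and the equal-temperament tuning is an assumption inferred from the tabulated values.

```python
def frequency_difference_percent(semitones: int) -> float:
    """Percentage by which a tone `semitones` above a reference tone
    exceeds the reference frequency.

    Assumes 12-tone equal temperament (an assumption, not stated in the table).
    """
    ratio = 2.0 ** (semitones / 12.0)  # frequency ratio of the interval
    return (ratio - 1.0) * 100.0


# Intervals (in semitones above the lowest tone) listed in the table.
INTERVALS = [2, 3, 4, 6, 7, 8]

for n in INTERVALS:
    print(f"{n} semitones: {frequency_difference_percent(n):.1f}%")
```

For a triad, the two tabulated percentages are the values for each upper tone relative to the lowest tone, so a major triad (4 and 7 semitones) corresponds to roughly 26.0% and 49.8%.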

2.4. Pitch Matching Accuracy and Data Analysis

| Chord | Semitone Intervals | Familiarity Rating Mean (SD) | t | Effect Size (R²) |
|---|---|---|---|---|
| major triad | 4 and 7 | 4.45 (0.79) | 9.89 | 0.25 |
| major 3rd | 4 | 4.32 (0.93) | 4.87 | 0.11 |
| perfect 5th | 7 | 4.21 (0.94) | 3.11 | 0.05 |
| minor 6th | 8 | 4.13 (1.01) | 1.83 | 0.02 |
| minor triad | 3 and 7 | 4.02 (1.07) | 0.38 | 0.00 |
| minor 3rd | 3 | 3.85 (1.05) | −1.97 | 0.02 |
| tritone | 6 | 3.61 (1.17) | −4.64 | 0.10 |
| diminished 5th triad | 3 and 6 | 3.64 (1.11) | −5.55 | 0.09 |
| suspended 2nd triad | 2 and 7 | 3.60 (1.21) | −5.66 | 0.10 |
| augmented 5th triad | 4 and 8 | 3.48 (1.30) | −6.92 | 0.14 |
| major 2nd | 2 | 3.31 (1.39) | −6.96 | 0.20 |
| flattened 5th triad | 4 and 6 | 3.35 (1.28) | −8.74 | 0.21 |

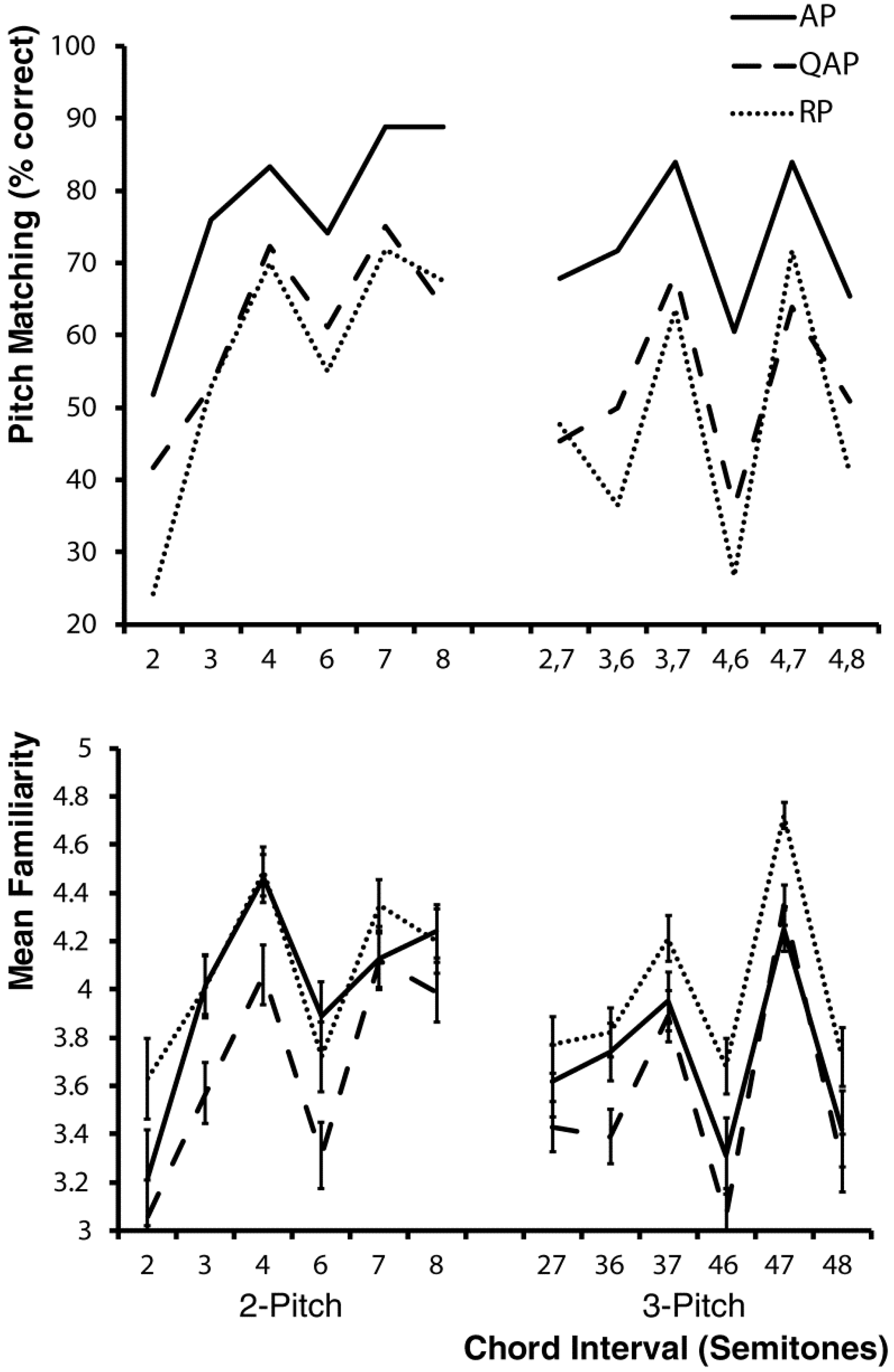

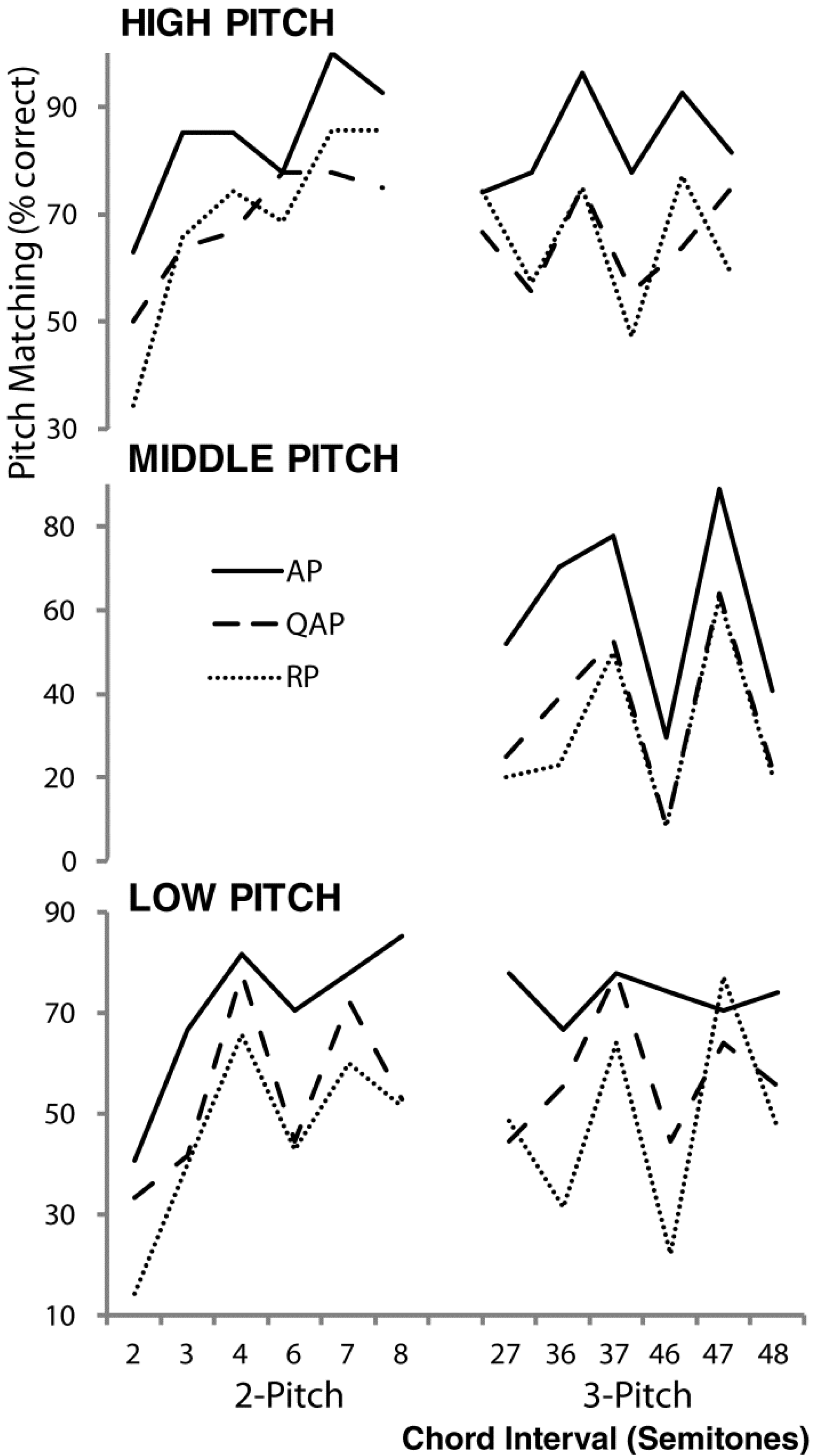

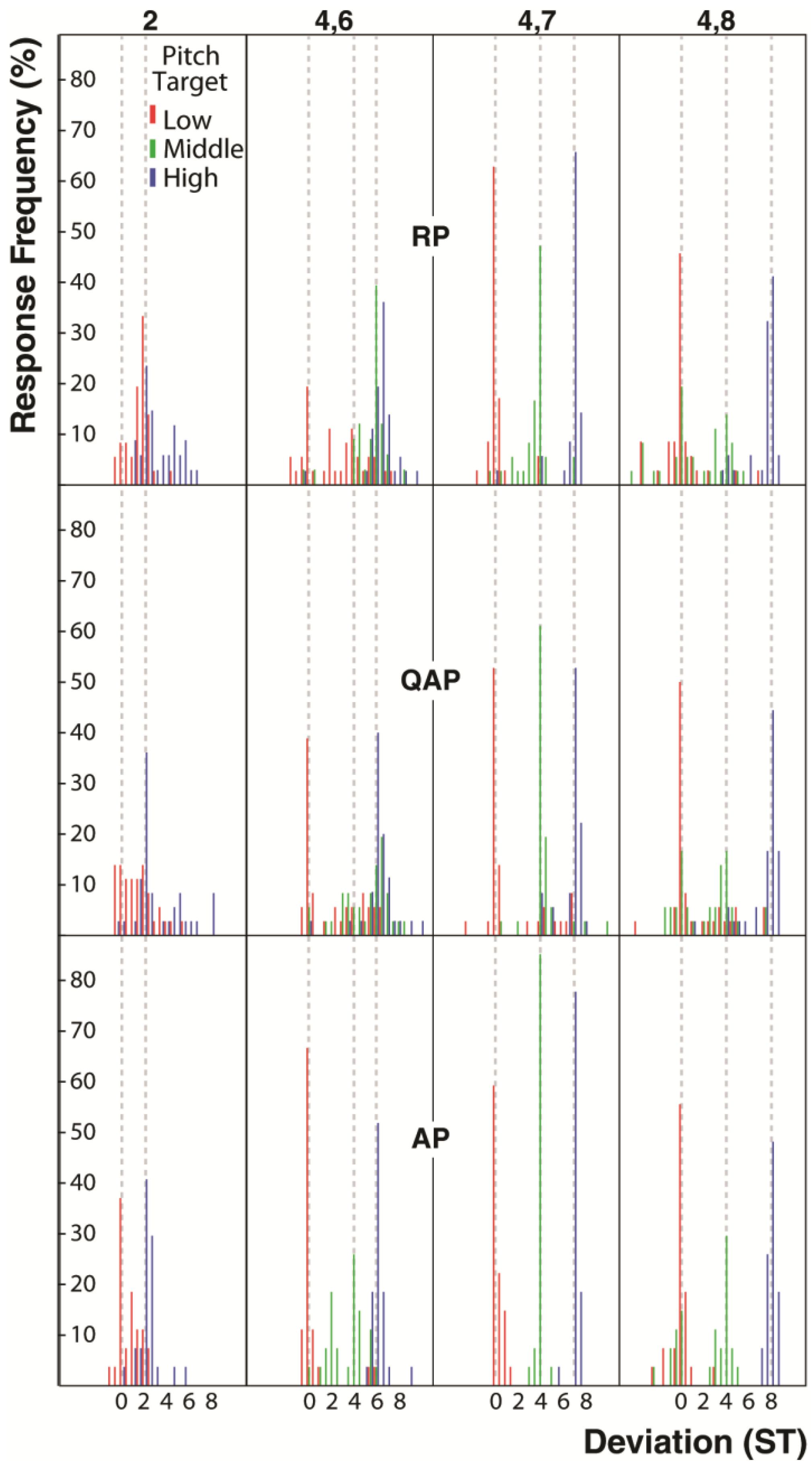

3. Results and Discussion

4. Discussion

Absolute Pitch in the Dual Mechanism Model of Pitch

5. Conclusions

Acknowledgments

Conflicts of Interest

© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

McLachlan, N.M.; Marco, D.J.T.; Wilson, S.J. Pitch and Plasticity: Insights from the Pitch Matching of Chords by Musicians with Absolute and Relative Pitch. Brain Sci. 2013, 3, 1615-1634. https://doi.org/10.3390/brainsci3041615
