Application of Linear Mixed-Effects Models in Human Neuroscience Research: A Comparison with Pearson Correlation in Two Auditory Electrophysiology Studies

Abstract

1. Introduction

2. Study 1

2.1. Statistical Methods

2.2. Results

3. Study 2

3.1. Statistical Methods

3.2. Results

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LME | Linear mixed-effects |
| GLME | Generalized linear mixed-effects |
| EEG | Electroencephalography |
| ERP | Event-related potential |
| SNR | Signal-to-noise ratio |
| N1 | Negative-going ERP response that peaks at approximately 100 ms after auditory stimulus onset |
| P2 | Positive-going ERP response that follows the N1 |
| ANOVA | Analysis of variance |
| nlme | An R package for linear and nonlinear mixed-effects models |
| CV | Syllable structure consisting of a consonant and a vowel (e.g., /ba/) |
| F | F statistic, a value from ANOVA or regression analysis that indicates differences between means |
| β | Vector of fixed-effect slopes in the linear mixed-effects models |
| μV | Microvolt |
| dB | Decibel |
| VIF | Variance inflation factor |
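
For orientation, the symbols β and VIF listed above can be tied to their standard textbook forms. The block below is a minimal sketch in generic Laird-Ware notation. The symbols $y_i$, $X_i$, $Z_i$, $b_i$, $\varepsilon_i$, $D$, $\sigma^2$, and $R_j^2$ are assumptions introduced here for illustration, and the independent-error structure is the simplest case, not necessarily the specification used in the two studies.

```latex
% Generic linear mixed-effects model for subject i (assumed notation):
%   y_i          : vector of responses for subject i
%   X_i, Z_i     : fixed-effect and random-effect design matrices
%   \beta        : vector of fixed-effect slopes (shared across subjects)
%   b_i          : subject-specific random effects
\begin{align}
  y_i &= X_i \beta + Z_i b_i + \varepsilon_i, \\
  b_i &\sim \mathcal{N}(0, D), \qquad
  \varepsilon_i \sim \mathcal{N}(0, \sigma^2 I).
\end{align}

% Variance inflation factor for predictor j, where R_j^2 is the coefficient
% of determination from regressing predictor j on the remaining predictors:
\begin{equation}
  \mathrm{VIF}_j = \frac{1}{1 - R_j^2}.
\end{equation}
```

In this notation, a reported β value is an estimated element of $\beta$, while $b_i$ absorbs subject-specific deviations that a pooled Pearson correlation does not model. A VIF close to 1 indicates that a predictor is nearly uncorrelated with the other predictors.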

References

- Pernet, C.; Wilcox, R.; Rousselet, G. Robust correlation analyses: False positive and power validation using a new open source matlab toolbox. Front. Psychol. 2013, 3, 606. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- McElreath, R. Statistical Rethinking: A Bayesian Course with Examples in R and Stan; Chapman & Hall/CRC Press: Boca Raton, FL, USA, 2016. [Google Scholar]

- Baayen, R.H.; Davidson, D.J.; Bates, D.M. Mixed-effects modeling with crossed random effects for subjects and items. J. Mem. Lang. 2008, 59, 390–412. [Google Scholar] [CrossRef]

- Bagiella, E.; Sloan, R.P.; Heitjan, D.F. Mixed-effects models in psychophysiology. Psychophysiology 2000, 37, 13–20. [Google Scholar] [CrossRef] [PubMed]

- Magezi, D.A. Linear mixed-effects models for within-participant psychology experiments: An introductory tutorial and free, graphical user interface (lmmgui). Front. Psychol. 2015, 6, 1–7. [Google Scholar] [CrossRef] [PubMed]

- Andersson-Roswall, L.; Engman, E.; Samuelsson, H.; Malmgren, K. Cognitive outcome 10 years after temporal lobe epilepsy surgery: A prospective controlled study. Neurology 2010, 74, 1977–1985. [Google Scholar] [CrossRef] [PubMed]

- Ard, M.C.; Raghavan, N.; Edland, S.D. Optimal composite scores for longitudinal clinical trials under the linear mixed effects model. Pharm. Stat. 2015, 14, 418–426. [Google Scholar] [CrossRef] [PubMed]

- Bilgel, M.; Prince, J.L.; Wong, D.F.; Resnick, S.M.; Jedynak, B.M. A multivariate nonlinear mixed effects model for longitudinal image analysis: Application to amyloid imaging. Neuroimage 2016, 134, 658–670. [Google Scholar] [CrossRef] [PubMed]

- Hasenstab, K.; Sugar, C.A.; Telesca, D.; McEvoy, K.; Jeste, S.; Senturk, D. Identifying longitudinal trends within eeg experiments. Biometrics 2015, 71, 1090–1100. [Google Scholar] [CrossRef] [PubMed]

- Maneshi, M.; Moeller, F.; Fahoum, F.; Gotman, J.; Grova, C. Resting-state connectivity of the sustained attention network correlates with disease duration in idiopathic generalized epilepsy. PLoS ONE 2012, 7, e50359. [Google Scholar] [CrossRef] [PubMed]

- Martin, H.R.; Poe, M.D.; Provenzale, J.M.; Kurtzberg, J.; Mendizabal, A.; Escolar, M.L. Neurodevelopmental outcomes of umbilical cord blood stransplantation in metachromatic leukodystrophy. Biol. Blood Marrow Transplant. 2013, 19, 616–624. [Google Scholar] [CrossRef] [PubMed]

- Mistridis, P.; Krumm, S.; Monsch, A.U.; Berres, M.; Taylor, K.I. The 12 years prededing mild cognitive impairment due to Alzheimer’s disease: The temporal emergence of cognitive decline. J. Alzheimers Dis. 2015, 48, 1095–1107. [Google Scholar] [CrossRef] [PubMed]

- Pedapati, E.V.; Gilbert, D.L.; Erickson, C.A.; Horn, P.S.; Shaffer, R.C.; Wink, L.K.; Laue, C.S.; Wu, S.W. Abnormal cortical plasticity in youth with autism spectrum disorder: A transcranial magnetic stimulation case-control pilot study. J. Child Adolesc. Psychopharmacol. 2016, 26, 625–631. [Google Scholar] [CrossRef] [PubMed]

- Cuthbert, J.P.; Pretz, C.R.; Bushnik, T.; Fraser, R.T.; Hart, T.; Kolakowsky-Hayner, S.A.; Malec, J.F.; O’Neil-Pirozzi, T.M.; Sherer, M. Ten-year employment patterns of working age individuals after moderate to severe traumatic brain injury: A national institute on disability and rehabilitation research traumatic brain injury model systems study. Arch. Phys. Med. Rehab. 2015, 96, 2128–2136. [Google Scholar] [CrossRef] [PubMed]

- Agresti, A.; Booth, J.G.; Hobert, J.P.; Caffo, B. Random-effects modeling of categorical response data. Sociol. Methodol. 2000, 30, 27–80. [Google Scholar] [CrossRef]

- Berger, M.P.F.; Tan, F.E.S. Robust designs for linear mixed effects models. J. R. Stat. Soc. Ser. C Appl. Stat. 2004, 53, 569–581. [Google Scholar] [CrossRef]

- Cheung, M.W.L. A model for integrating fixed-, random-, and mixed-effects meta-analyses into structural equation modeling. Psychol. Methods 2008, 13, 182–202. [Google Scholar] [CrossRef] [PubMed]

- Luger, T.M.; Suls, J.; Vander Weg, M.W. How robust is the association between smoking and depression in adults? A meta-analysis using linear mixed-effects models. Addict. Behav. 2014, 39, 1418–1429. [Google Scholar] [CrossRef] [PubMed]

- Parzen, M.; Ghosh, S.; Lipsitz, S.; Sinha, D.; Fitzmaurice, G.M.; Mallick, B.K.; Ibrahim, J.G. A generalized linear mixed model for longitudinal binary data with a marginal logit link function. Ann. Appl. Stat. 2011, 5, 449–467. [Google Scholar] [CrossRef] [PubMed]

- Billings, C.J.; Mcmillan, G.P.; Penman, T.M.; Gille, S.M. Predicting perception in noise using cortical auditory evoked potentials. J. Assoc. Res. Otolaryngol. 2013, 14, 891–903. [Google Scholar] [CrossRef] [PubMed]

- Billings, C.J.; Penman, T.M.; Mcmillan, G.P.; Ellis, E.M. Electrophysiology and perception of speech in noise in older listeners: Effects of hearing impairment and age. Ear Hear. 2015, 36, 710–722. [Google Scholar] [CrossRef] [PubMed]

- Cahana-Amitay, D.; Spiro, A., III; Sayers, J.T.; Oveis, A.C.; Higby, E.; Ojo, E.A.; Duncan, S.; Goral, M.; Hyun, J.; Albert, M.L.; et al. How older adults use cognition in sentence-final word recognition. Neuropsychol. Dev. Cogn. B Aging 2016, 23, 418–444. [Google Scholar] [CrossRef]

- Canault, M.; le Normand, M.T.; Foudil, S.; Loundon, N.; Hung, T.V. Reliability of the language environment analysis system (lena (tm)) in european french. Behav. Res. Methods 2016, 48, 1109–1124. [Google Scholar] [CrossRef] [PubMed]

- Cunnings, I. An overview of mixed-effects statistical models for second language researchers. Second Lang. Res. 2012, 28, 369–382. [Google Scholar] [CrossRef]

- Davidson, D.J.; Martin, A.E. Modeling accuracy as a function of response time with the generalized linear mixed effects model. Acta Psychol. 2013, 144, 83–96. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- De Kegel, A.; Maes, L.; van Waelvelde, H.; Dhooge, I. Examining the impact of cochlear implantation on the early gross motor development of children with a hearing loss. Ear Hear. 2015, 36, e113–e121. [Google Scholar] [CrossRef] [PubMed]

- Evans, J.; Chu, M.N.; Aston, J.A.; Su, C.Y. Linguistic and human effects on F0 in a tonal dialect of Qiang. Phonetica 2010, 61, 82–99. [Google Scholar] [CrossRef] [PubMed]

- Gfeller, K.; Turner, C.; Oleson, J.; Zhang, X.; Gantz, B.; Froman, R.; Olszewski, C. Accuracy of cochlear implant recipients on pitch perception, melody recognition, and speech reception in noise. Ear Hear. 2007, 28, 412–423. [Google Scholar] [CrossRef] [PubMed]

- Haag, N.; Roppelt, A.; Heppt, B. Effects of mathematics items’ language demands for language minority students: Do they differ between grades? Learn. Individ. Differ. 2015, 42, 70–76. [Google Scholar] [CrossRef]

- Hadjipantelis, P.Z.; Aston, J.A.; Muller, H.G.; Evans, J.P. Unifying amplitude and phase analysis: A compositional data approach to functional multivariate mixed-effects modeling of mandarin chinese. J. Am. Stat. Assoc. 2015, 110, 545–559. [Google Scholar] [CrossRef] [PubMed]

- Humes, L.E.; Burk, M.H.; Coughlin, M.P.; Busey, T.A.; Strauser, L.E. Auditory speech recognition and visual text recognition in younger and older adults: Similarities and differences between modalities and the effects of presentation rate. J. Speech Lang. Hear. Res. 2007, 50, 283–303. [Google Scholar] [CrossRef]

- Jouravlev, O.; Lupker, S.J. Predicting stress patterns in an unpredictable stress language: The use of non-lexical sources of evidence for stress assignment in russian. J. Cogn. Psychol. 2015, 27, 944–966. [Google Scholar] [CrossRef]

- Kasisopa, B.; Reilly, R.G.; Luksaneeyanawin, S.; Burnham, D. Child readers’ eye movements in reading thai. Vis. Res. 2016, 123, 8–19. [Google Scholar] [CrossRef] [PubMed]

- Linck, J.A.; Cunnings, I. The utility and application of mixed-effects models in second language research. Lang. Learn. 2015, 65, 185–207. [Google Scholar] [CrossRef]

- Murayama, K.; Sakaki, M.; Yan, V.X.; Smith, G.M. Type 1 error inflation in the traditional by-participant analysis to metamemory accuracy: A generalized mixed-effects model perspective. J. Exp. Psychol. Learn. Mem. Cogn. 2014, 40, 1287–1306. [Google Scholar] [CrossRef] [PubMed]

- Picou, E.M. How hearing loss and age affect emotional responses to nonspeech sounds. J. Speech Lang. Hear. Res. 2016, 59, 1233–1246. [Google Scholar] [CrossRef] [PubMed]

- Poll, G.H.; Miller, C.A.; Mainela-Arnold, E.; Adams, K.D.; Misra, M.; Park, J.S. Effects of children’s working memory capacity and processing speech on their sentence imitation performance. Int. J. Lang. Commun. Disord. 2013, 48, 329–342. [Google Scholar] [CrossRef] [PubMed]

- Quene, H.; van den Bergh, H. Examples of mixed-effects modeling with crossed random effects and with binomal data. J. Mem. Lang. 2008, 59, 413–425. [Google Scholar] [CrossRef]

- Rong, P.; Yunusova, Y.; Wang, J.; Green, J.R. Predicting early bulbar decline in amyotrophic lateral sclerosis: A speech subsystem approach. Behav. Neurol. 2015, 2015, 1–11. [Google Scholar] [CrossRef] [PubMed]

- Stuart, A.; Cobb, K.M. Reliability of measures in transient evoked otoacoustic emissions with contralateral suppression. J. Commun. Disord. 2015, 58, 35–42. [Google Scholar] [CrossRef] [PubMed]

- Van de Velde, M.; Meyer, A.S. Syntactic flexibility and planning scope: The effect of verb bias on advance planning during sentence recall. Front. Psychol. 2014, 5, 1174. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Amsel, B.D. Tracking real-time neural activation of conceptual knowledge using single-trial event-related potentials. Neuropsychologia 2011, 49, 970–983. [Google Scholar] [CrossRef] [PubMed]

- Bornkessel-Schlesewsky, I.; Philipp, M.; Alday, P.M.; Kretzschmar, F.; Grewe, T.; Gumpert, M.; Schumacher, P.B.; Schlesewsky, M. Age-realted changes in predictive capacity versus internal model adaptability: Electrophysiological evidence taht individual differences outweigh effects of age. Front. Aging Neurosci. 2015, 7, 217. [Google Scholar] [CrossRef] [PubMed]

- Bramhall, N.; Ong, B.; Ko, J.; Parker, M. Speech perception ability in noise is correlated with auditory brainstem response wave i amplitude. J. Am. Acad. Audiol. 2015, 26, 509–517. [Google Scholar] [CrossRef] [PubMed]

- Hsu, C.H.; Lee, C.Y.; Marantz, A. Effects of visual complexity and sublexical information in the occipitotemporal cortex in the reading of chinese phonograms: A signle-trial analysis with meg. Brain Lang. 2011, 117, 1–11. [Google Scholar] [CrossRef] [PubMed]

- McEvoy, K.; Hasenstab, K.; Senturk, D.; Sanders, A.; Jeste, S.S. Physiologic artifacts in resting state oscillations in young children: Methodoloical considerations for noisy data. Brain Imaging Behav. 2015, 9, 104–114. [Google Scholar] [CrossRef] [PubMed]

- Payne, B.R.; Lee, C.L.; Federmeier, K.D. Revisiting the incremental effects of context on word processing: Evidence from single-word event-related brain potentials. Psychophysiology 2015, 52, 1465–1469. [Google Scholar] [CrossRef] [PubMed]

- Spinnato, J.; Roubaud, M.C.; Burle, B.; Torresani, B. Detecting single-trial eeg evoked potential using an wavelet domain linear mixed model: Application to error potential sclassification. J. Neural Eng. 2015, 12, 036013. [Google Scholar] [CrossRef] [PubMed]

- Tremblay, A.; Newman, A.J. Modeling nonlinear relationships in erp data using mixed-effects regression with r examples. Psychophysiology 2015, 52, 124–139. [Google Scholar] [CrossRef] [PubMed]

- Visscher, K.M.; Miezin, F.M.; Kelly, J.E.; Buckner, R.L.; Donaldson, D.I.; McAvoy, M.P.; Bhalodia, V.M.; Petersen, S.E. Mixed blocked/event-related designs separate transient and sustained activity in fMRI. Neuroimage 2003, 19, 1694–1708. [Google Scholar] [CrossRef]

- Wang, X.F.; Yang, Q.; Fan, Z.; Sun, C.K.; Yue, G.H. Assessing time-dependent association between scalp EEG and muscle activation: A functional random-effects model approach. J. Neurosci. Methods 2009, 177, 232–240. [Google Scholar] [CrossRef] [PubMed]

- Zenon, A.; Klein, P.A.; Alamia, A.; Boursoit, F.; Wilhelm, E.; Duque, J. Increased reliance on value-based decision processes following motor cortex disruption. Brain Stimul. 2015, 8, 957–964. [Google Scholar] [CrossRef] [PubMed]

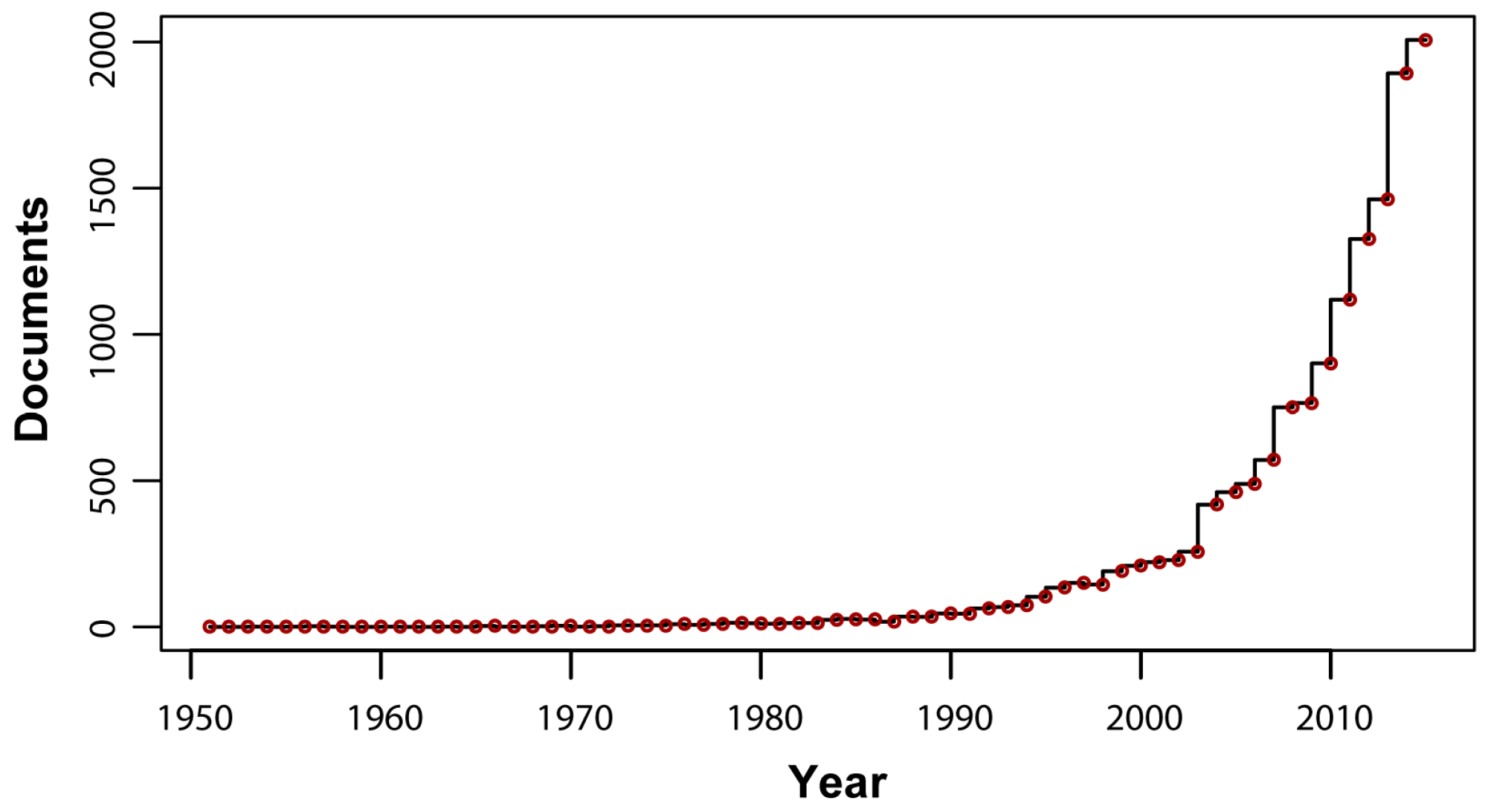

- Scopus. Available online: https://www.scopus.com/home.uri (accessed on 31 December 2016).

- Koerner, T.K.; Zhang, Y. Effects of background noise on inter-trial phase coherence and auditory N1-P2 responses to speech. Hear. Res. 2015, 328, 113–119. [Google Scholar] [CrossRef] [PubMed]

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2014. [Google Scholar]

- Pinheiro, J.; Bates, D.; DebRoy, S.; Sarkar, D.; R Core Team. Nlme: Linear and Nonlinear Mixed Effects Models; R Core Team: Vienna, Austria, 2016. [Google Scholar]

- Koerner, T.K.; Zhang, Y.; Nelson, P.B.; Wang, B.; Zou, H. Neural indices of phonemic discrimination and sentence-level speech intelligibility in quiet and noise: A mismatch negativity study. Hear. Res. 2016, 339, 40–49. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Cheng, B.; Koerner, T.K.; Schlauch, R.S.; Tanaka, K.; Kawakatsu, M.; Nemoto, I.; Imada, T. Perceputal temporal asymmetry associated with distinct on and off responses to time-varying sounds with rising versus falling intensity: A magnetoencephalography study. Brain Sci. 2016, 6, 1–25. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Y.; Koerner, T.; Miller, S.; Grice-Patil, Z.; Svec, A.; Akbari, D.; Tusler, L.; Carney, E. Neural coding of formant-exaggerated speech in the infant brain. Dev. Sci. 2011, 14, 566–581. [Google Scholar] [CrossRef] [PubMed]

| Frequency Band | N1 Latency | N1 Amplitude | P2 Latency | P2 Amplitude |
|---|---|---|---|---|
| Delta | −0.586 ** | −0.780 *** | −0.468 * | 0.666 ** |
| Theta | −0.521 * | −0.765 *** | −0.575 ** | 0.612 ** |
| Alpha | −0.510 * | −0.720 *** | −0.586 ** | 0.599 ** |

| Variable | N1 Latency: F | N1 Latency: β | N1 Amplitude: F | N1 Amplitude: β | P2 Latency: F | P2 Latency: β | P2 Amplitude: F | P2 Amplitude: β |
|---|---|---|---|---|---|---|---|---|
| Intercept | 964.79 *** | - | 155.62 *** | - | 568.62 *** | - | 31.64 *** | - |
| Condition | 106.88 *** | - | 16.58 ** | - | 31.93 *** | - | 4.13 | - |
| Delta | 0.06 | −0.30 | 16.12 ** | −1.05 | 0.46 | 0.48 | 10.72 * | 0.96 |
| Theta | 0.46 | −0.45 | 0.17 | −1.82 | 4.01 | −0.23 | 0.00 | −0.11 |
| Alpha | 12.51 ** | 0.80 | 3.24 | 2.01 | 0.68 | −0.41 | 0.00 | 0.09 |

| Measure | Latency (ms): /bu/ | Latency (ms): /da/ | Amplitude (μV): /bu/ | Amplitude (μV): /da/ | Power (dB): /bu/ | Power (dB): /da/ |
|---|---|---|---|---|---|---|
| Phoneme Detection (%) | −0.53 * | −0.47 * | −0.50 * | −0.17 | 0.41 * | 0.13 |
| Reaction Time (ms) | 0.34 | 0.39 | 0.56 ** | 0.02 | −0.49 * | 0.01 |
| Sentence Recognition (%) | −0.40 * | −0.53 ** | −0.66 ** | −0.07 | 0.59 ** | 0.18 |

| Variable | Percent Correct Phoneme Detection: F | Percent Correct Phoneme Detection: β | Phoneme Detection Reaction Time: F | Phoneme Detection Reaction Time: β | Percent Correct Sentence Recognition (/bu/): F | Percent Correct Sentence Recognition (/bu/): β | Percent Correct Sentence Recognition (/da/): F | Percent Correct Sentence Recognition (/da/): β |
|---|---|---|---|---|---|---|---|---|
| Intercept | 161.51 *** | - | 4199.98 *** | - | 431.41 *** | - | 335.12 *** | - |
| Condition | 131.68 *** | - | 61.92 *** | - | 291.32 *** | - | 247.69 *** | - |
| Stimulus | 114.20 *** | - | 21.05 *** | - | - | - | - | - |
| Latency | 7.86 ** | 0.61 | 0.000 | 0.03 | 1.24 | −0.19 | 0.44 | −0.21 |
| Amplitude | 3.10 | −0.09 | 0.002 | 0.02 | 7.21 * | 0.24 | 0.41 | 0.05 |
| Theta Power | 6.61 * | 0.05 | 0.368 | 0.01 | 0.46 | −0.01 | 1.50 | −0.02 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Koerner, T.K.; Zhang, Y. Application of Linear Mixed-Effects Models in Human Neuroscience Research: A Comparison with Pearson Correlation in Two Auditory Electrophysiology Studies. Brain Sci. 2017, 7, 26. https://doi.org/10.3390/brainsci7030026