Adaptive Weighted Multi-Discriminator CycleGAN for Underwater Image Enhancement

Abstract

1. Introduction

2. Materials and Methods

2.1. Image Acquisition and Analysis

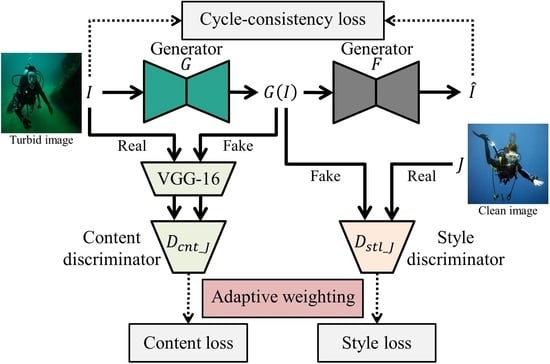

2.2. Architecture of Multi-Discriminator CycleGAN

2.2.1. Style Discriminator

2.2.2. Content Discriminator

2.3. Overall Loss Function

Adaptive Weighted Adversarial Loss

3. Results

3.1. Experimental Settings

3.2. Ablation Study

3.3. Quantitative Evaluation

3.4. Qualitative Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Metric | Input | GW | DCP | CAP | CycleGAN | Ours |
|---|---|---|---|---|---|---|
| UCIQE | 26.08 | 26.83 | 30.44 | 29.71 | 31.73 | 32.00 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Park, J.; Han, D.K.; Ko, H. Adaptive Weighted Multi-Discriminator CycleGAN for Underwater Image Enhancement. J. Mar. Sci. Eng. 2019, 7, 200. https://doi.org/10.3390/jmse7070200
