Forecasting Monthly Electricity Demands: An Application of Neural Networks Trained by Heuristic Algorithms

Abstract

1. Introduction

2. Literature Review

3. Heuristic Algorithms

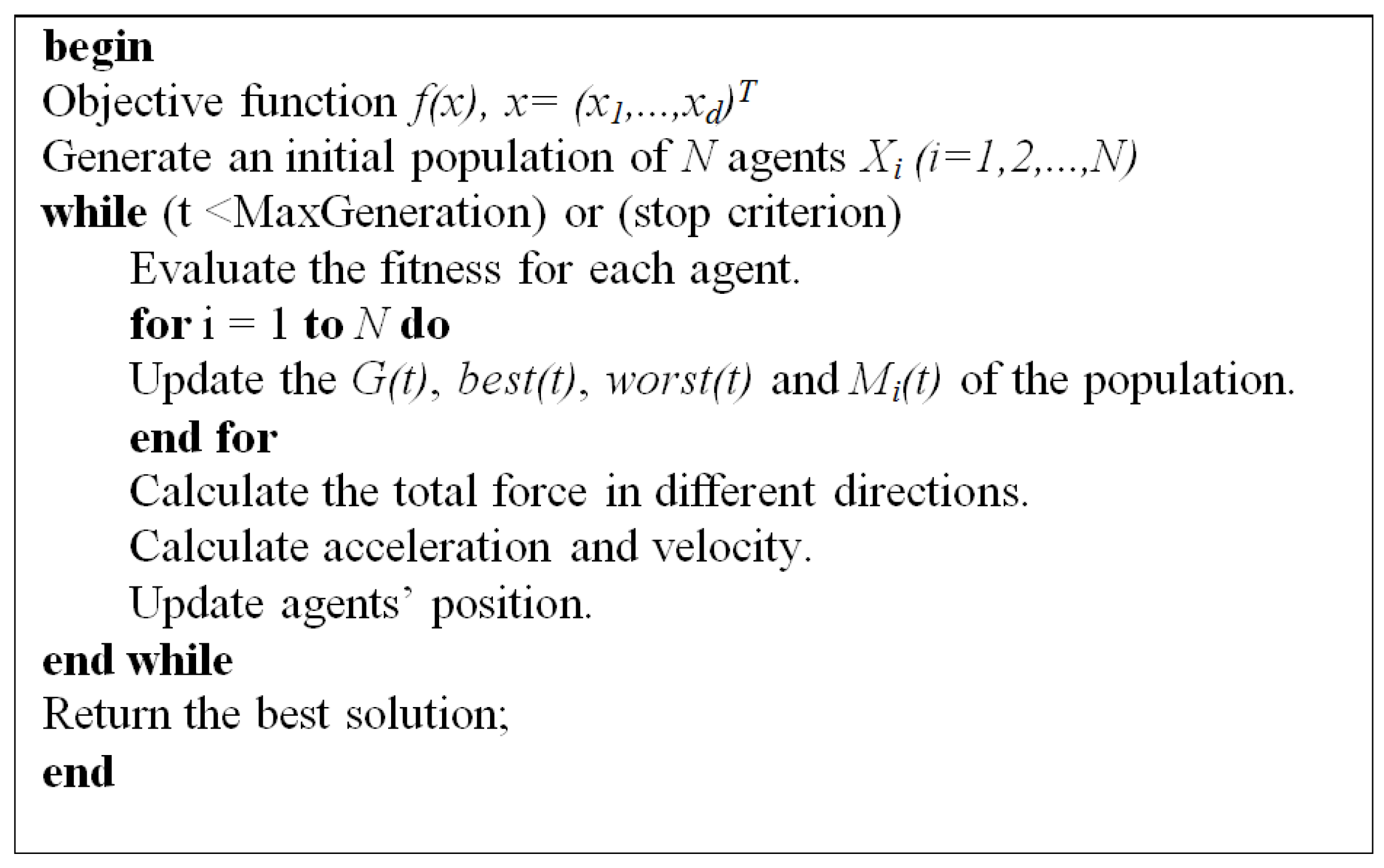

3.1. Gravitational Search Algorithm

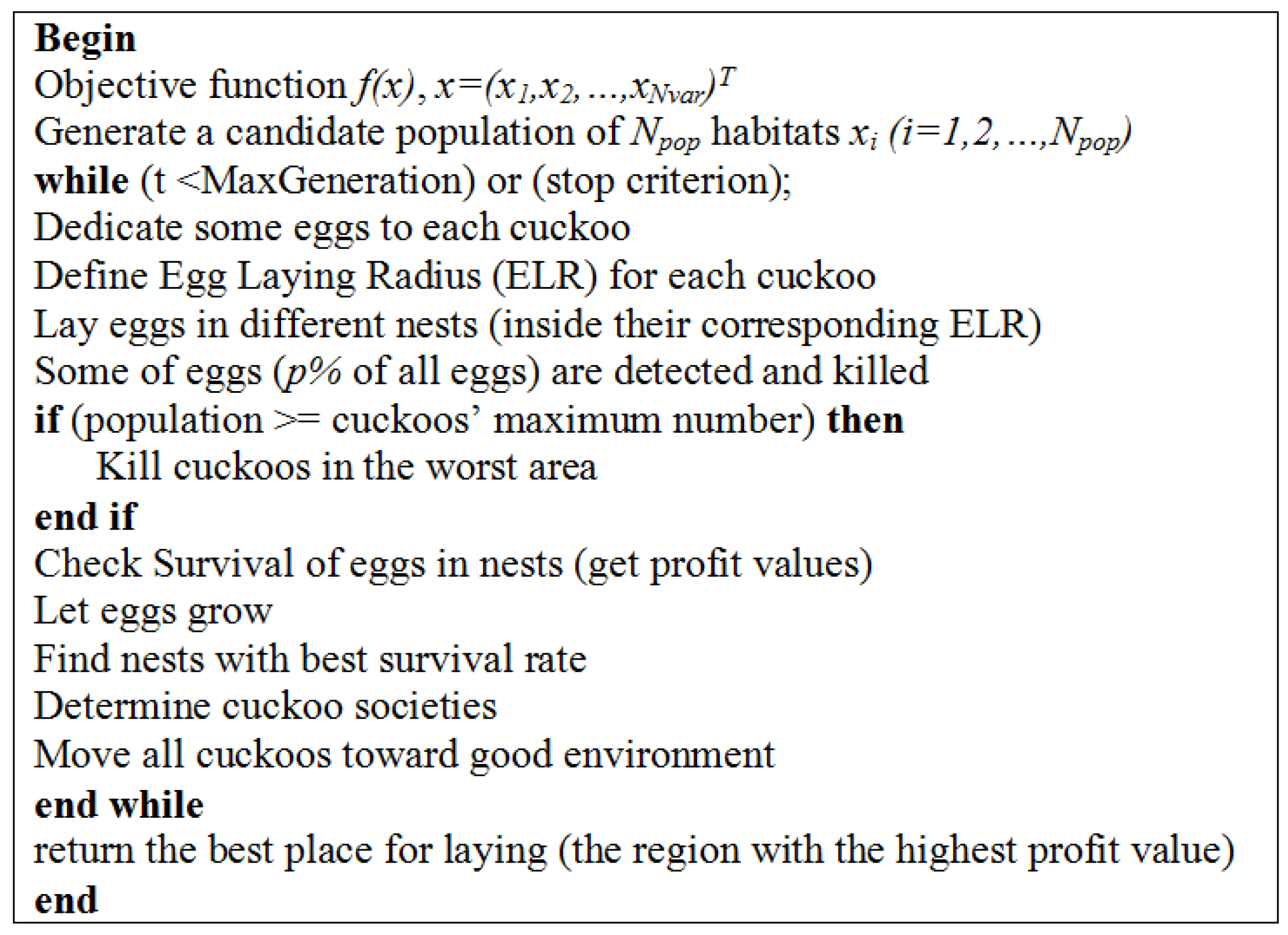
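
The following is a minimal Python sketch of a basic Gravitational Search Algorithm for minimization, written for illustration and not taken from this study. Agents are candidate solutions. Each agent's mass is derived from its fitness, so better agents are heavier and pull lighter ones toward them. The gravitational constant decays as G = G0·exp(−αt/T), and only the k heaviest agents exert force, with k shrinking linearly to 1 over the run. The helper name `gsa_minimize`, the defaults (30 agents, G0 = 100, α = 20), and clipping positions to the search bounds are assumptions. The settings used in this study may differ.

```python
import numpy as np


def gsa_minimize(f, dim, lo, hi, n_agents=30, iters=200, g0=100.0, alpha=20.0, seed=0):
    """Minimize f over the box [lo, hi]^dim with a basic Gravitational Search Algorithm.

    Hypothetical helper for illustration; parameter defaults are assumptions.
    """
    rng = np.random.default_rng(seed)
    X = rng.uniform(lo, hi, size=(n_agents, dim))  # agent positions
    V = np.zeros((n_agents, dim))                  # agent velocities
    eps = np.finfo(float).eps
    best_x, best_f = None, np.inf
    for t in range(iters):
        fit = np.array([f(x) for x in X])
        i_best = int(np.argmin(fit))
        if fit[i_best] < best_f:                   # keep the best-so-far solution
            best_x, best_f = X[i_best].copy(), float(fit[i_best])
        # Masses: best agent gets m = 1, worst gets m = 0, then normalize to sum 1.
        best, worst = fit.min(), fit.max()
        m = np.ones(n_agents) if best == worst else (fit - worst) / (best - worst)
        M = m / m.sum()
        # Gravitational constant decays exponentially over the run.
        G = g0 * np.exp(-alpha * t / iters)
        # Only the k heaviest agents attract; k shrinks linearly from n_agents to 1.
        k = max(1, round(n_agents - (n_agents - 1) * t / iters))
        heavy = np.argsort(M)[::-1][:k]
        A = np.zeros_like(X)
        for i in range(n_agents):
            for j in heavy:
                if j == i:
                    continue
                diff = X[j] - X[i]
                dist = np.linalg.norm(diff)
                # Acceleration a_i = F_i / M_i, so agent i's own mass cancels out.
                A[i] += rng.random(dim) * G * M[j] * diff / (dist + eps)
        V = rng.random((n_agents, dim)) * V + A    # random inertia plus acceleration
        X = np.clip(X + V, lo, hi)                 # assumption: clip to the search box
    return best_x, best_f
```

For example, `gsa_minimize(lambda x: float(np.sum(x ** 2)), 3, -5.0, 5.0)` searches for the minimum of a three-dimensional sphere function. When GSA trains a network, `f` would instead score a flattened weight vector by its training error.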

3.2. Cuckoo Optimization Algorithm
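
Below is a simplified Python sketch of the Cuckoo Optimization Algorithm, for illustration only. Each cuckoo receives a random number of eggs and lays them within an egg-laying radius ELR = α × (eggs of this cuckoo / total eggs) × (upper bound − lower bound). The worst 10% of all eggs are destroyed, and the population is capped at the best `max_pop` habitats. Cuckoos then migrate a random fraction of the way toward a goal habitat. The sketch makes three simplifying assumptions: it pools parents with surviving eggs, it uses the global best habitat as the migration goal instead of the best k-means cluster, and it omits the random deviation angle applied during migration in the original formulation. The helper name `coa_minimize` and all parameter values are hypothetical.

```python
import numpy as np


def coa_minimize(f, dim, lo, hi, n_init=10, max_pop=50, min_eggs=2, max_eggs=8,
                 alpha=1.0, kill_frac=0.1, iters=100, seed=0):
    """Simplified Cuckoo Optimization Algorithm (hypothetical helper, for illustration)."""
    rng = np.random.default_rng(seed)
    pop = rng.uniform(lo, hi, size=(n_init, dim))       # initial habitats
    pop_f = np.array([f(x) for x in pop])
    best_x, best_f = pop[np.argmin(pop_f)].copy(), float(pop_f.min())
    for _ in range(iters):
        # Each cuckoo gets a random number of eggs and an egg-laying radius (ELR)
        # proportional to its share of all eggs.
        n_eggs = rng.integers(min_eggs, max_eggs + 1, size=len(pop))
        elr = alpha * n_eggs / n_eggs.sum() * (hi - lo)
        eggs = []
        for x, k, r in zip(pop, n_eggs, elr):
            direction = rng.normal(size=(k, dim))
            direction /= np.linalg.norm(direction, axis=1, keepdims=True)
            dist = rng.uniform(0.0, r, size=(k, 1))     # random distance within ELR
            eggs.append(np.clip(x + direction * dist, lo, hi))
        eggs = np.vstack(eggs)
        # Host birds detect and destroy the worst kill_frac of all eggs.
        egg_f = np.array([f(e) for e in eggs])
        n_kill = int(kill_frac * len(eggs))
        survivors = eggs[np.argsort(egg_f)[:len(eggs) - n_kill]]
        # Simplification: parents and surviving eggs are pooled; keep the best max_pop.
        pool = np.vstack([pop, survivors])
        pool_f = np.array([f(p) for p in pool])
        keep = np.argsort(pool_f)[:max_pop]
        pop, pop_f = pool[keep], pool_f[keep]
        if pop_f[0] < best_f:
            best_x, best_f = pop[0].copy(), float(pop_f[0])
        # Migration: move a random fraction F of the way toward the goal habitat.
        # Simplification: the goal is the global best, not the best k-means cluster,
        # and the random deviation angle of the original algorithm is omitted.
        F = rng.uniform(0.0, 1.0, size=(len(pop), 1))
        pop = np.clip(pop + F * (best_x - pop), lo, hi)
    return best_x, best_f
```

The ELR shrinks as the population grows, because each cuckoo's share of the total egg count falls. This naturally shifts the search from exploration to local refinement.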

4. Research Design

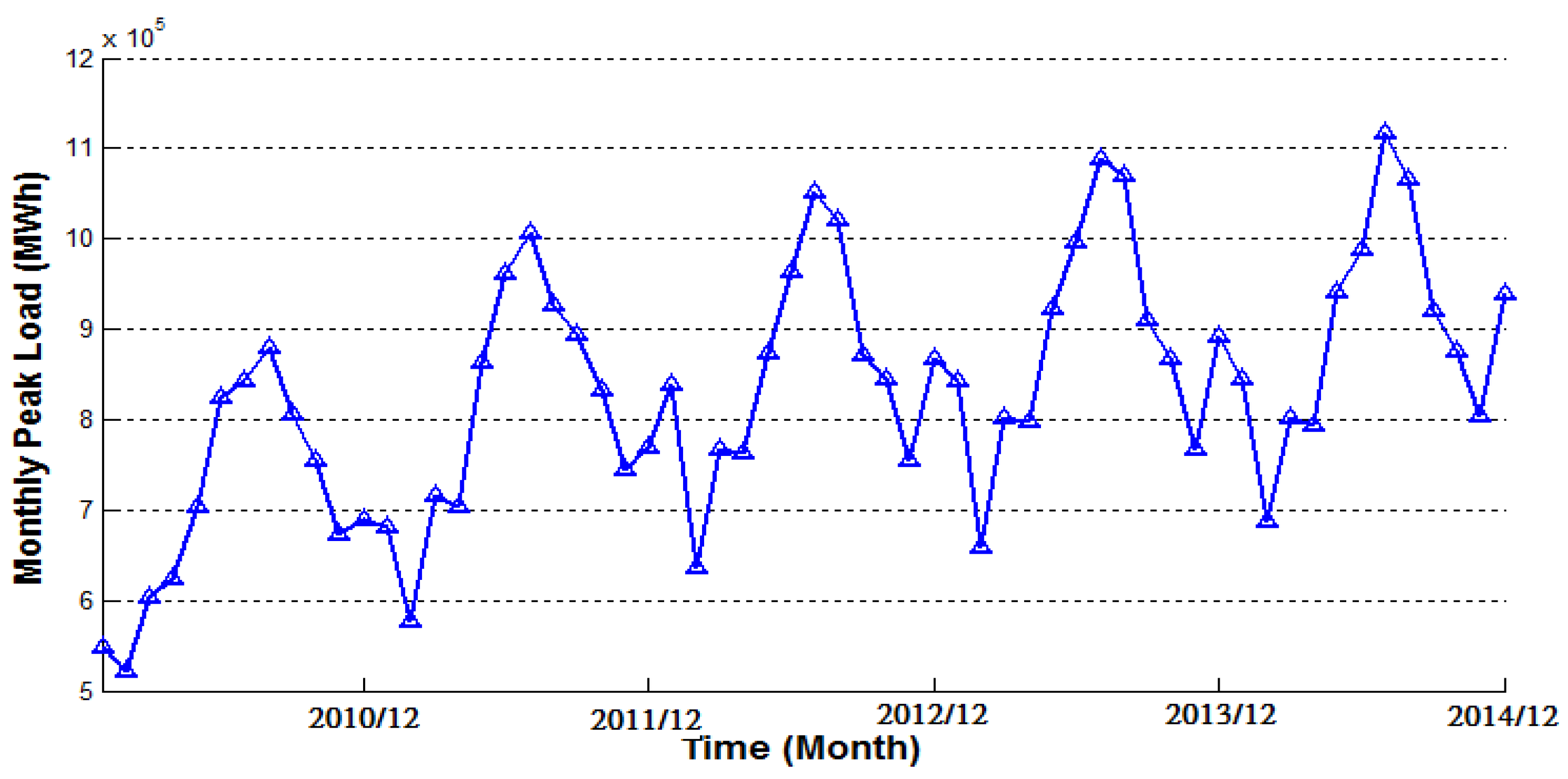

4.1. Historical Data

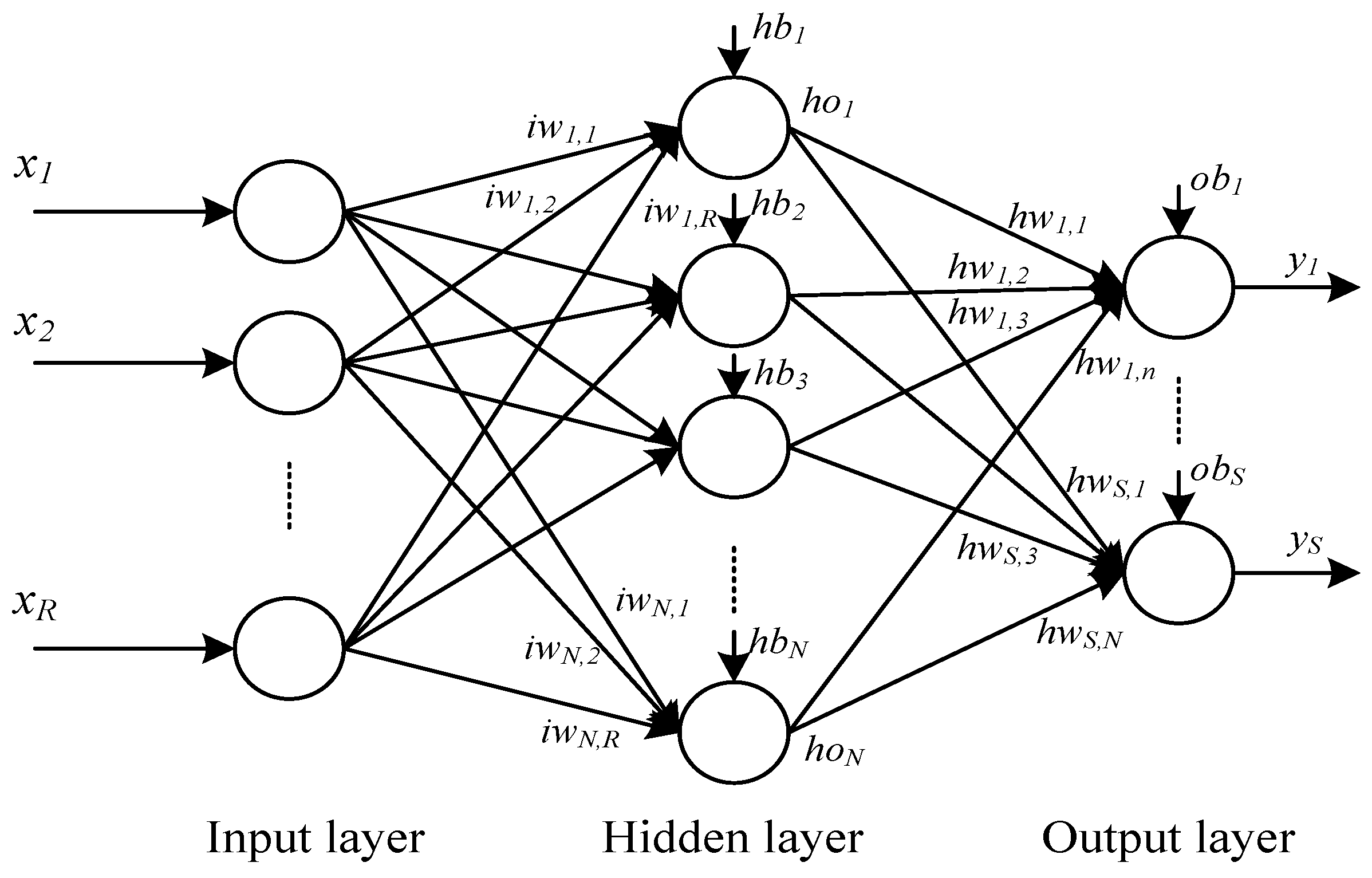

4.2. Structure of the Neural Network

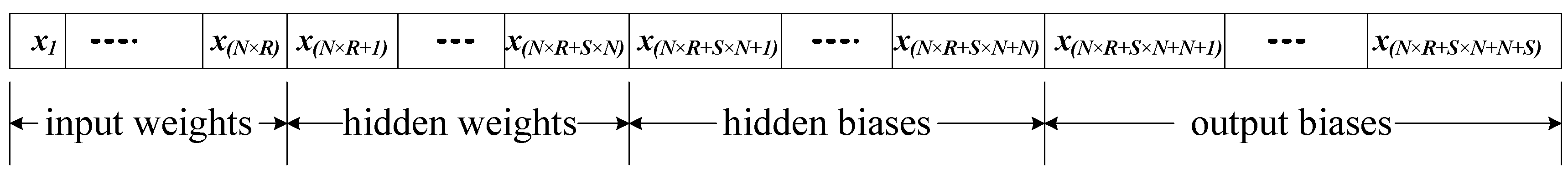

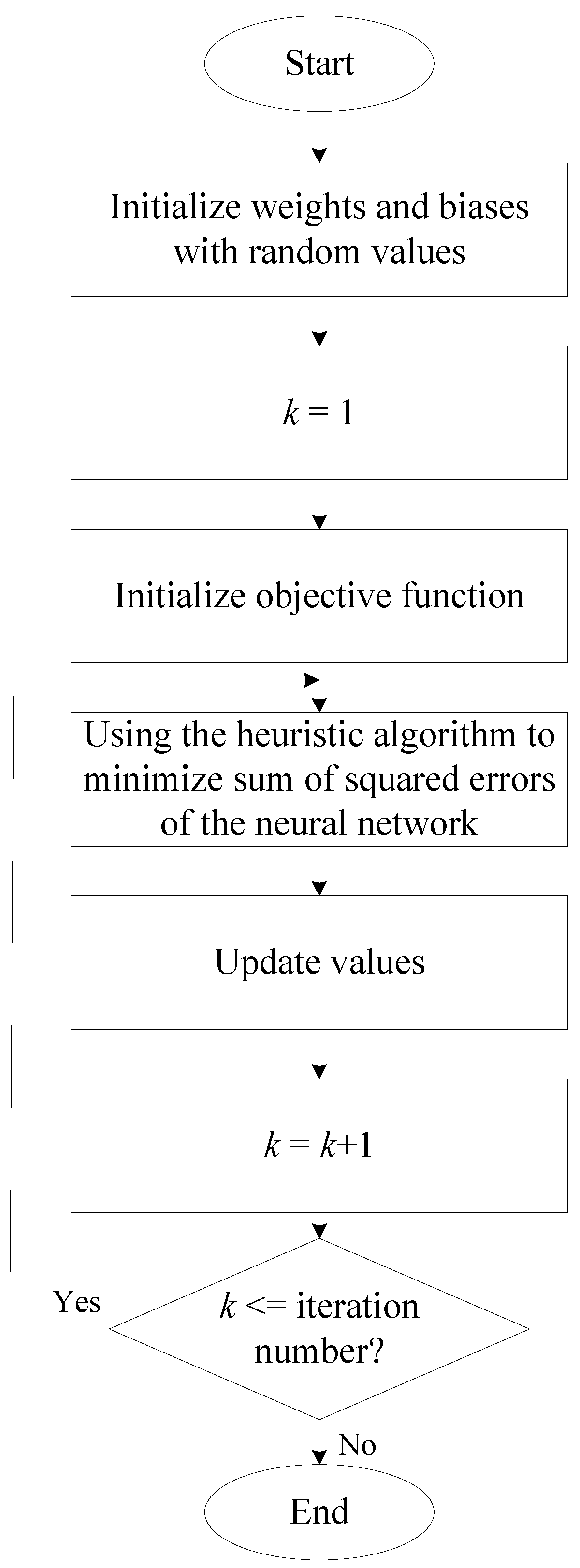

4.3. Training Neural Networks by Heuristic Algorithms
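
In the usual formulation, training a network with a heuristic such as GSA, COA, or DE means treating every weight and bias as one coordinate of a real-valued vector. The heuristic then minimizes a training-error objective over that vector instead of following gradients. A minimal Python sketch of this encoding follows. It assumes a single hidden layer with sigmoid activations, a linear output, and mean squared error as the fitness. The eight inputs correspond to x1 to x8 in the factor table. The hidden-layer size and all function names are hypothetical.

```python
import numpy as np

N_INPUTS = 8   # x1..x8 in the factor table
N_HIDDEN = 5   # hypothetical hidden-layer size, chosen only for illustration


def n_params(n_in=N_INPUTS, n_hid=N_HIDDEN):
    """Length of the flat weight vector: W1, b1, W2, b2."""
    return n_in * n_hid + n_hid + n_hid + 1


def unpack(w, n_in=N_INPUTS, n_hid=N_HIDDEN):
    """Split a flat candidate vector into layer weights and biases."""
    w = np.asarray(w, dtype=float)
    if w.size != n_params(n_in, n_hid):
        raise ValueError("weight vector has the wrong length")
    i = n_in * n_hid
    W1 = w[:i].reshape(n_in, n_hid)
    b1 = w[i:i + n_hid]
    W2 = w[i + n_hid:i + 2 * n_hid]
    b2 = w[-1]
    return W1, b1, W2, b2


def predict(w, X):
    """Forward pass: sigmoid hidden layer, linear output (assumed activations)."""
    W1, b1, W2, b2 = unpack(w, X.shape[1])
    hidden = 1.0 / (1.0 + np.exp(-(X @ W1 + b1)))
    return hidden @ W2 + b2


def fitness(w, X, y):
    """Objective minimized by the heuristic: mean squared error on training data."""
    return float(np.mean((predict(w, X) - y) ** 2))
```

Any population-based optimizer can then search over vectors of length `n_params()`, scoring each candidate with `fitness` on the training data. The candidate with the lowest fitness supplies the trained network's weights.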

4.4. Examining the Performance

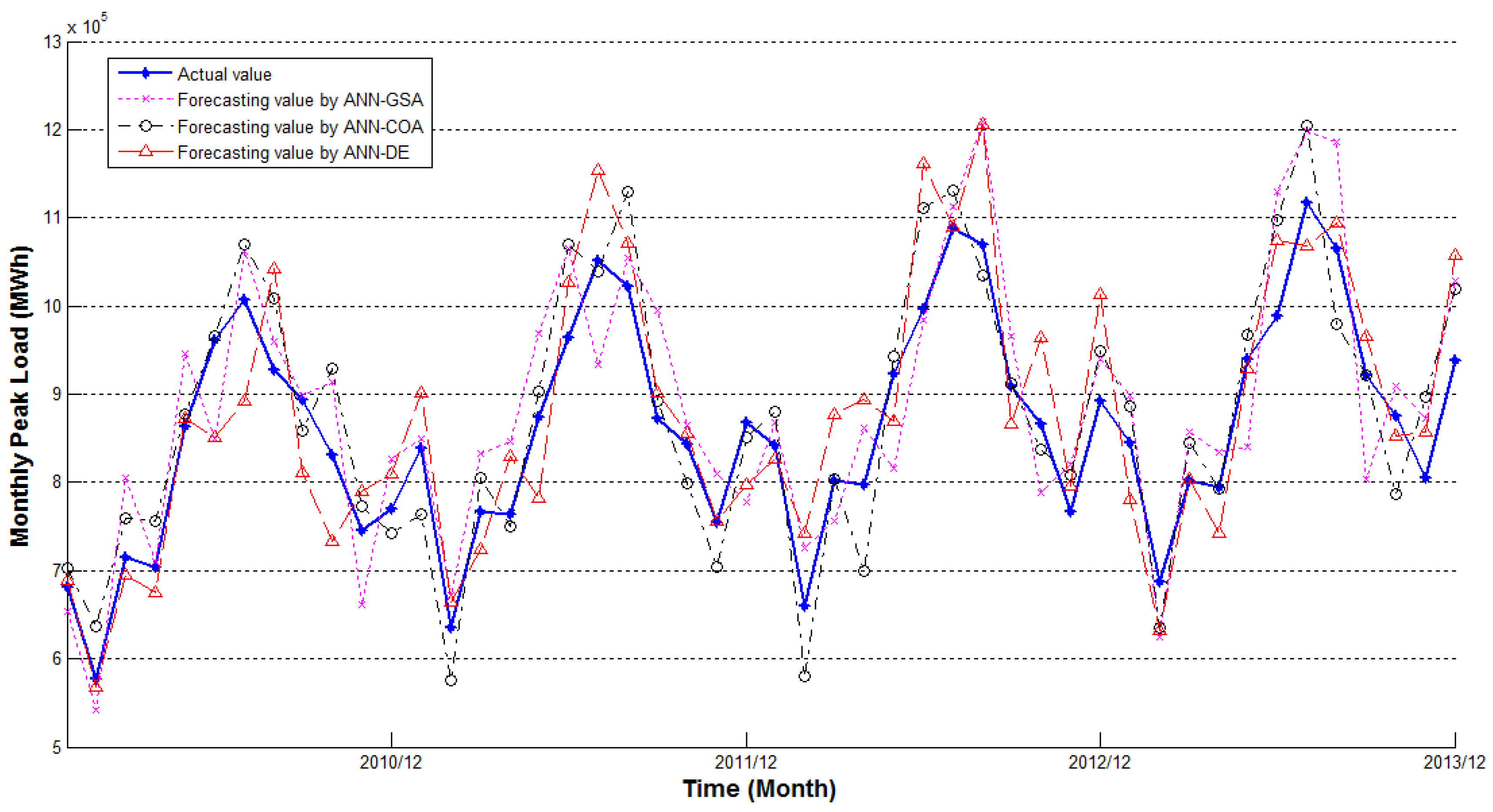

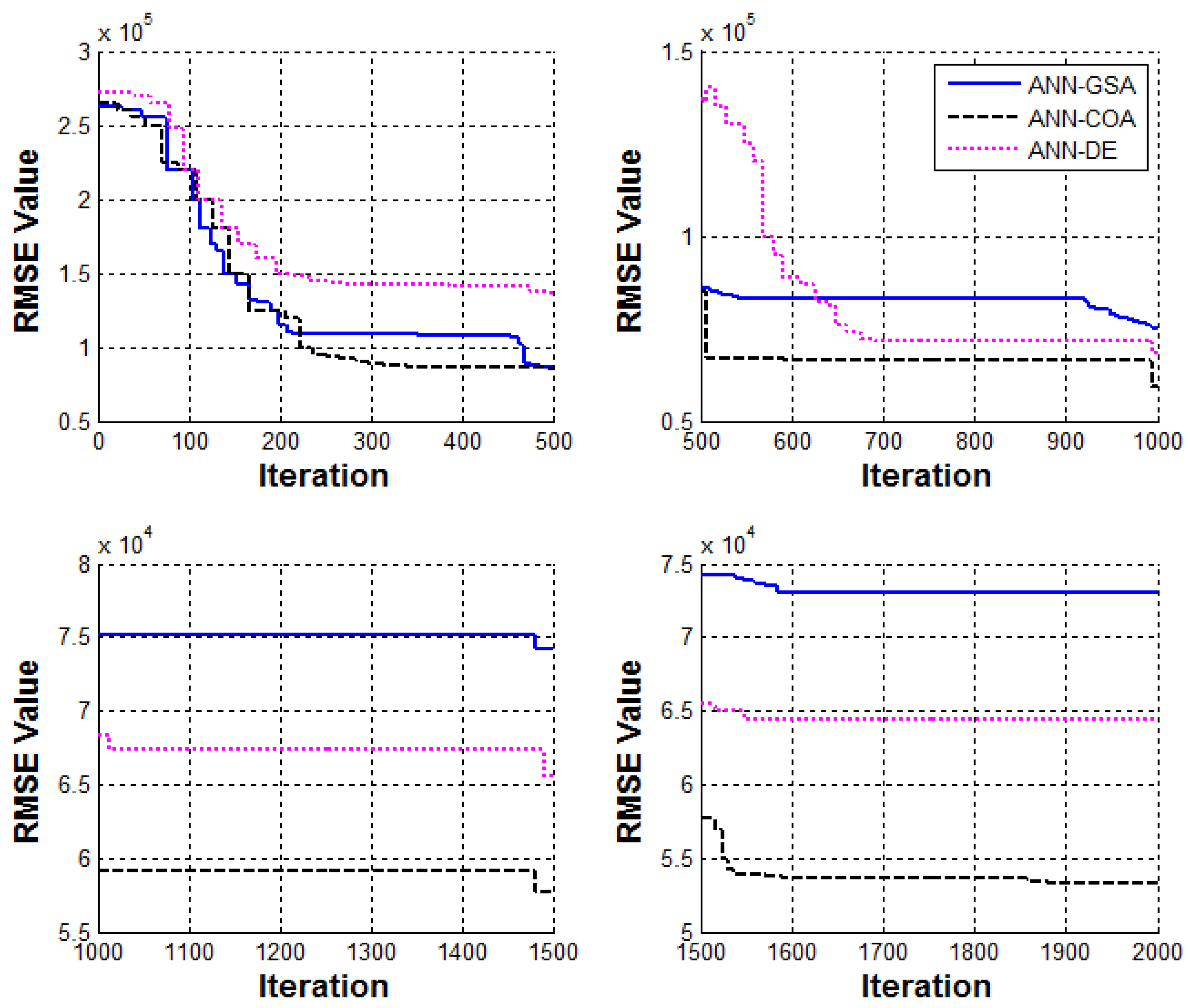
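
The experimental tables compare models by MAPE, RMSE, MAE, and R. A minimal Python sketch of these criteria follows, using the usual definitions. It assumes that MAPE is reported as a fraction, consistent with table values such as 0.0478. It also assumes that RMSE and MAE are in the same units as the demand series, and that R is the Pearson correlation coefficient between actual and forecast values. The function name `forecast_metrics` is hypothetical, and the exact formulas used in this study may differ in detail.

```python
import numpy as np


def forecast_metrics(actual, forecast):
    """MAPE (as a fraction), RMSE, MAE and Pearson R for a forecast (hypothetical helper)."""
    a = np.asarray(actual, dtype=float)
    f = np.asarray(forecast, dtype=float)
    err = a - f
    mape = float(np.mean(np.abs(err / a)))   # assumes no zero actual values
    rmse = float(np.sqrt(np.mean(err ** 2)))
    mae = float(np.mean(np.abs(err)))
    r = float(np.corrcoef(a, f)[0, 1])       # assumption: R is Pearson correlation
    return {"MAPE": mape, "RMSE": rmse, "MAE": mae, "R": r}
```

Squaring gives large errors more weight, so on any set of errors RMSE is at least as large as MAE. The two are equal only when every absolute error has the same size.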

5. Experimental Results and Discussion

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


| Factor | Description |
|---|---|
| x1 | Month index |
| x2 | Average air pressure |
| x3 | Average temperature |
| x4 | Average wind velocity |
| x5 | Rainfall |
| x6 | Rainy time |
| x7 | Average relative humidity |
| x8 | Daylight time |

| No. of Iterations | Model | MAPE | RMSE | MAE | R |
|---|---|---|---|---|---|
| 500 | ANN | 0.1472 | 137,208 | 121,152 | 0.7206 |
| 500 | ANN-GSA | 0.0848 | 86,080 | 73,540 | 0.8415 |
| 500 | ANN-COA | 0.0843 | 85,118 | 73,108 | 0.8709 |
| 500 | ANN-DE | 0.1462 | 136,819 | 119,261 | 0.7608 |
| 1000 | ANN | 0.0826 | 78,652 | 120,242 | 0.7932 |
| 1000 | ANN-GSA | 0.0764 | 75,148 | 66,267 | 0.8779 |
| 1000 | ANN-COA | 0.0577 | 59,073 | 49,238 | 0.9287 |
| 1000 | ANN-DE | 0.0671 | 68,325 | 56,409 | 0.9332 |
| 1500 | ANN | 0.0803 | 75,524 | 93,142 | 0.7988 |
| 1500 | ANN-GSA | 0.0759 | 74,218 | 63,733 | 0.8842 |
| 1500 | ANN-COA | 0.0578 | 57,731 | 49,100 | 0.9372 |
| 1500 | ANN-DE | 0.0633 | 65,529 | 56,937 | 0.9382 |
| 2000 | ANN | 0.0796 | 73,482 | 76,249 | 0.8020 |
| 2000 | ANN-GSA | 0.0731 | 72,980 | 64,267 | 0.8892 |
| 2000 | ANN-COA | 0.0478 | 53,308 | 48,238 | 0.9386 |
| 2000 | ANN-DE | 0.0582 | 64,372 | 55,021 | 0.8921 |

| Method | MAPE | RMSE | MAE | R |
|---|---|---|---|---|
| MLR | 0.1662 | 170,540 | 145,910 | 0.6031 |
| ARIMA (2,1,1) | 0.1603 | 152,070 | 136,260 | 0.7043 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Chen, J.-F.; Lo, S.-K.; Do, Q.H. Forecasting Monthly Electricity Demands: An Application of Neural Networks Trained by Heuristic Algorithms. Information 2017, 8, 31. https://doi.org/10.3390/info8010031