Scene Semantic Recognition Based on Probability Topic Model

Abstract

1. Introduction

2. Related Works

3. Proposed Approach

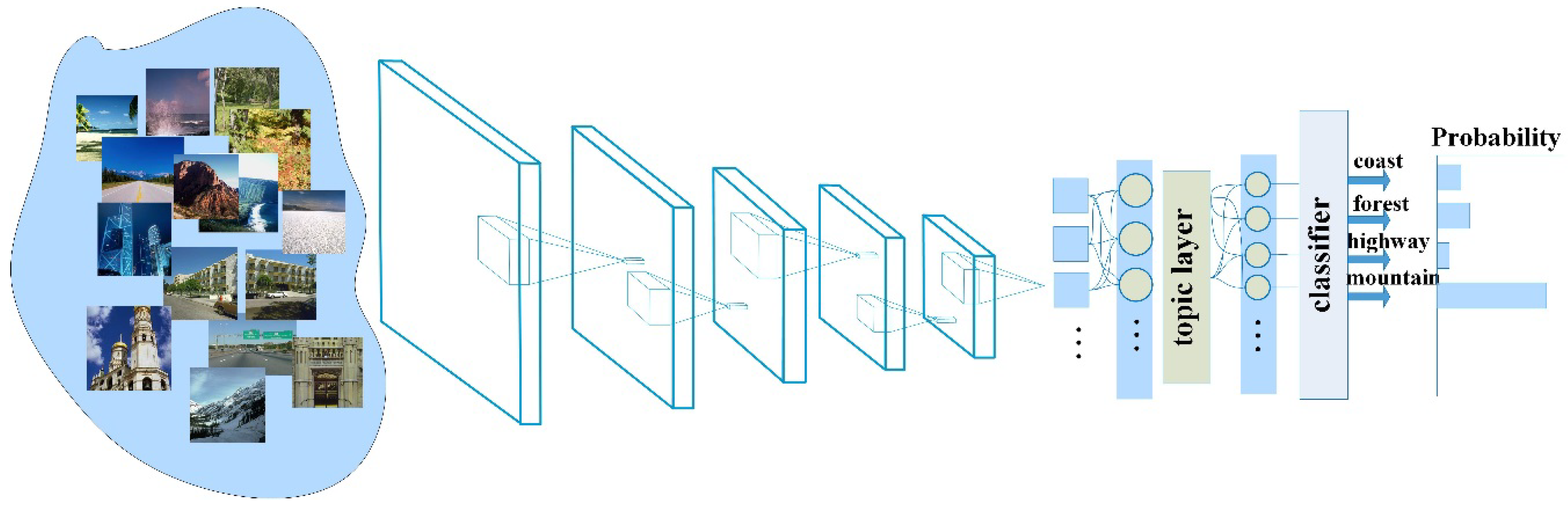

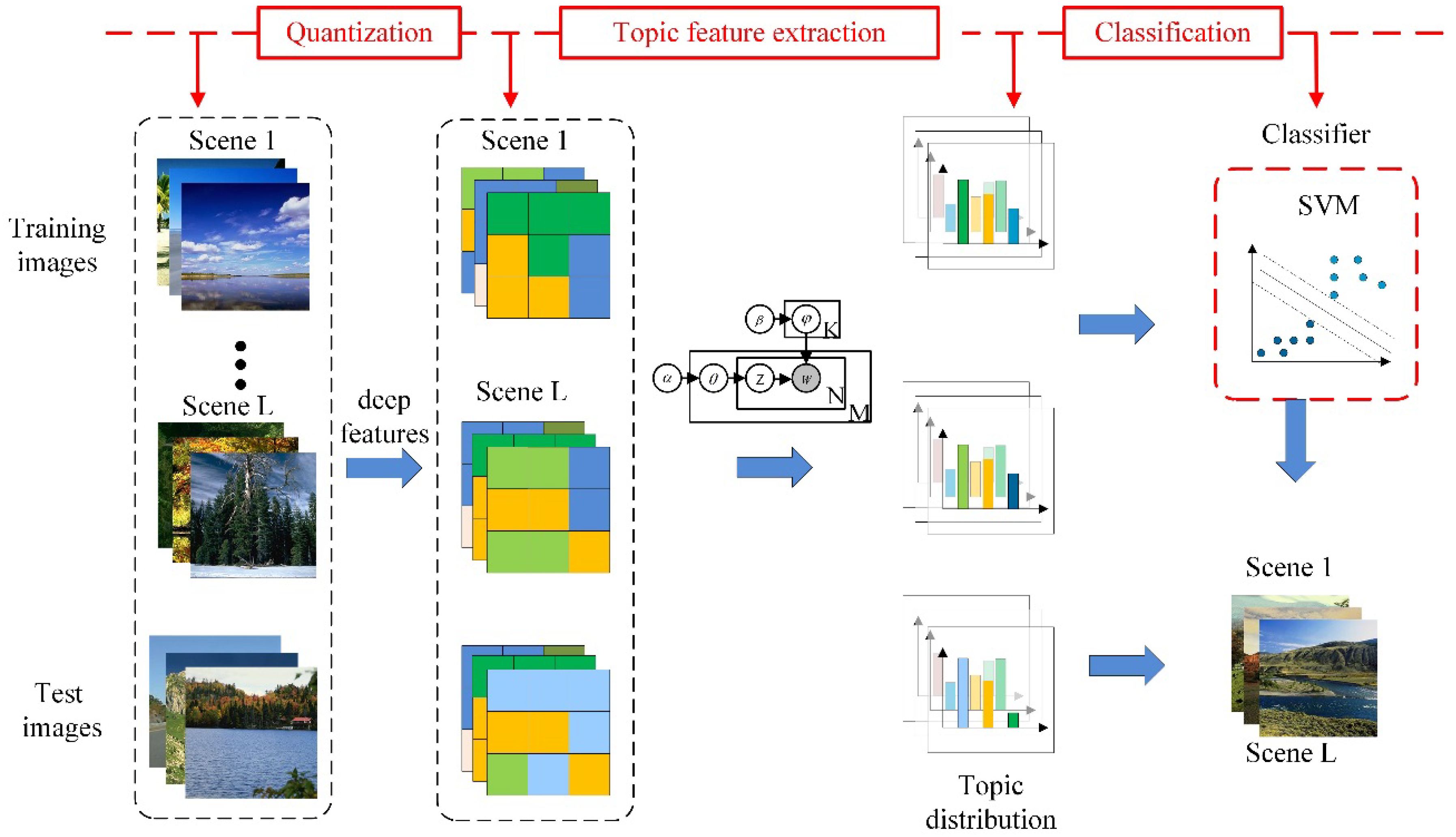

3.1. Overview

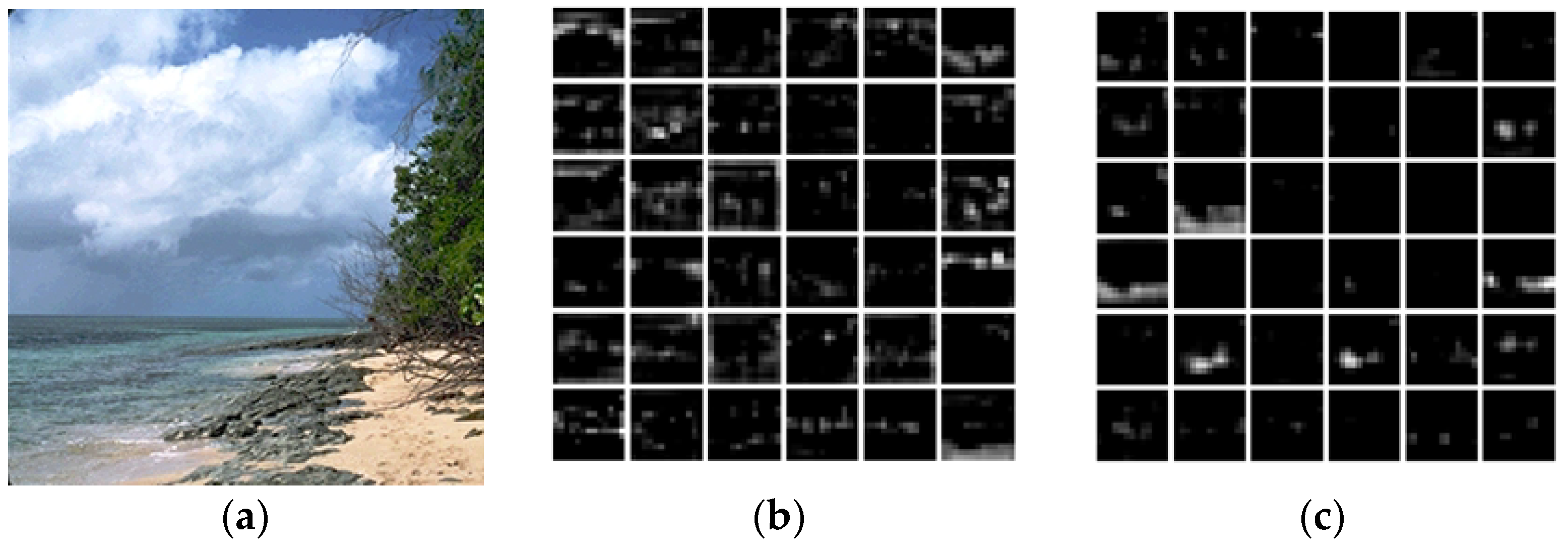

3.2. Deep Feature Extraction and Representation

3.3. Topic Model

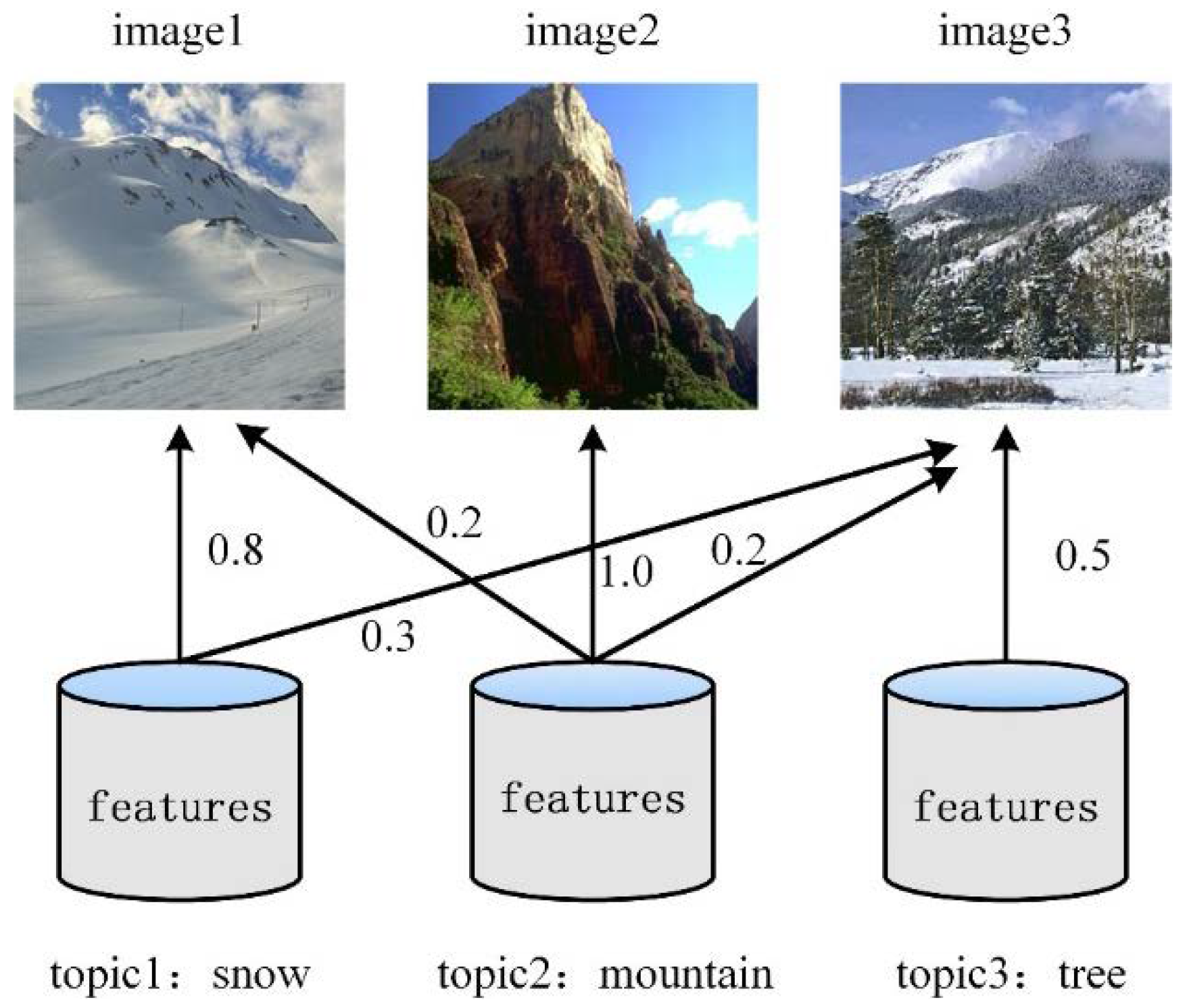

3.3.1. Topic Modeling for Image Representation

3.3.2. Topic Model

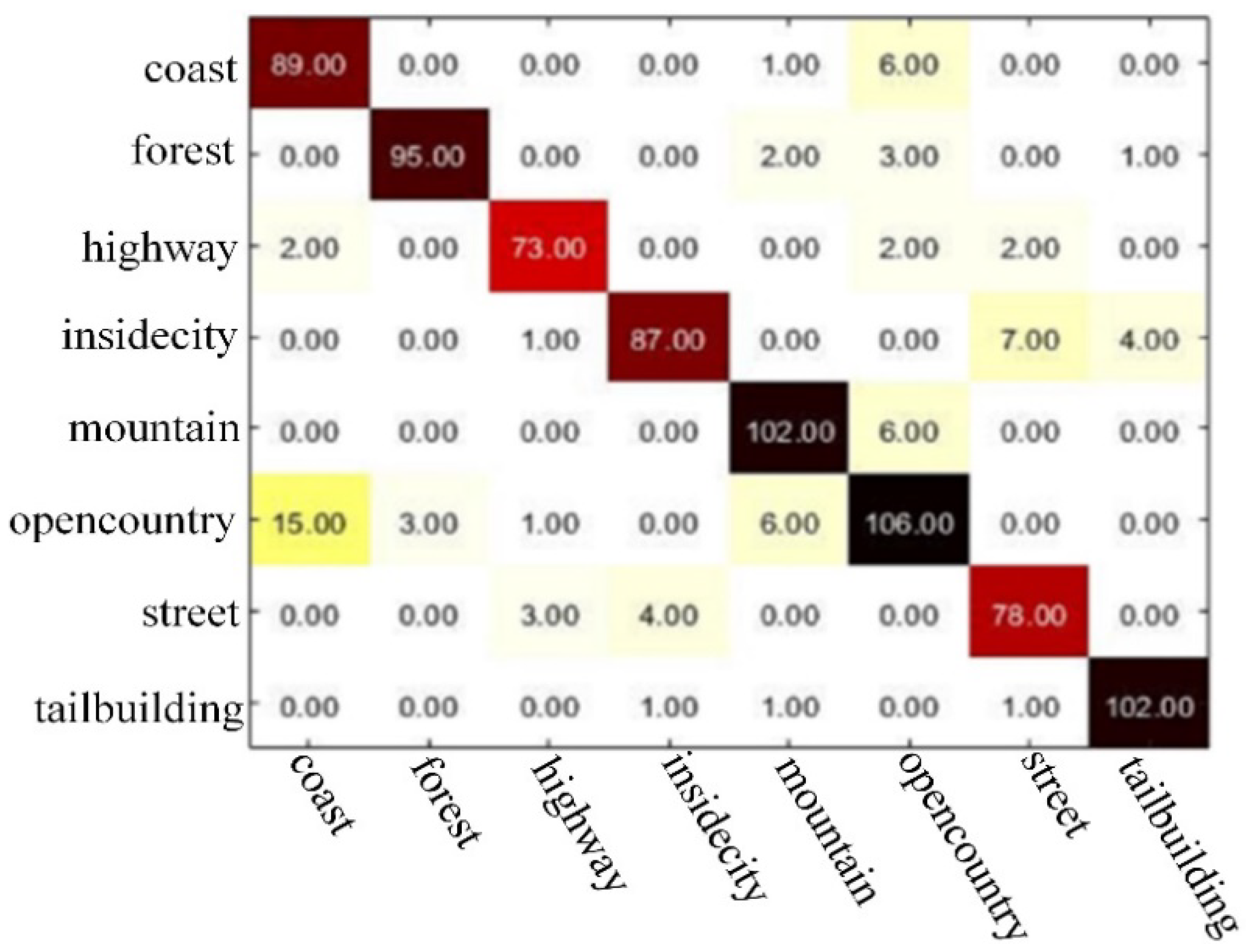

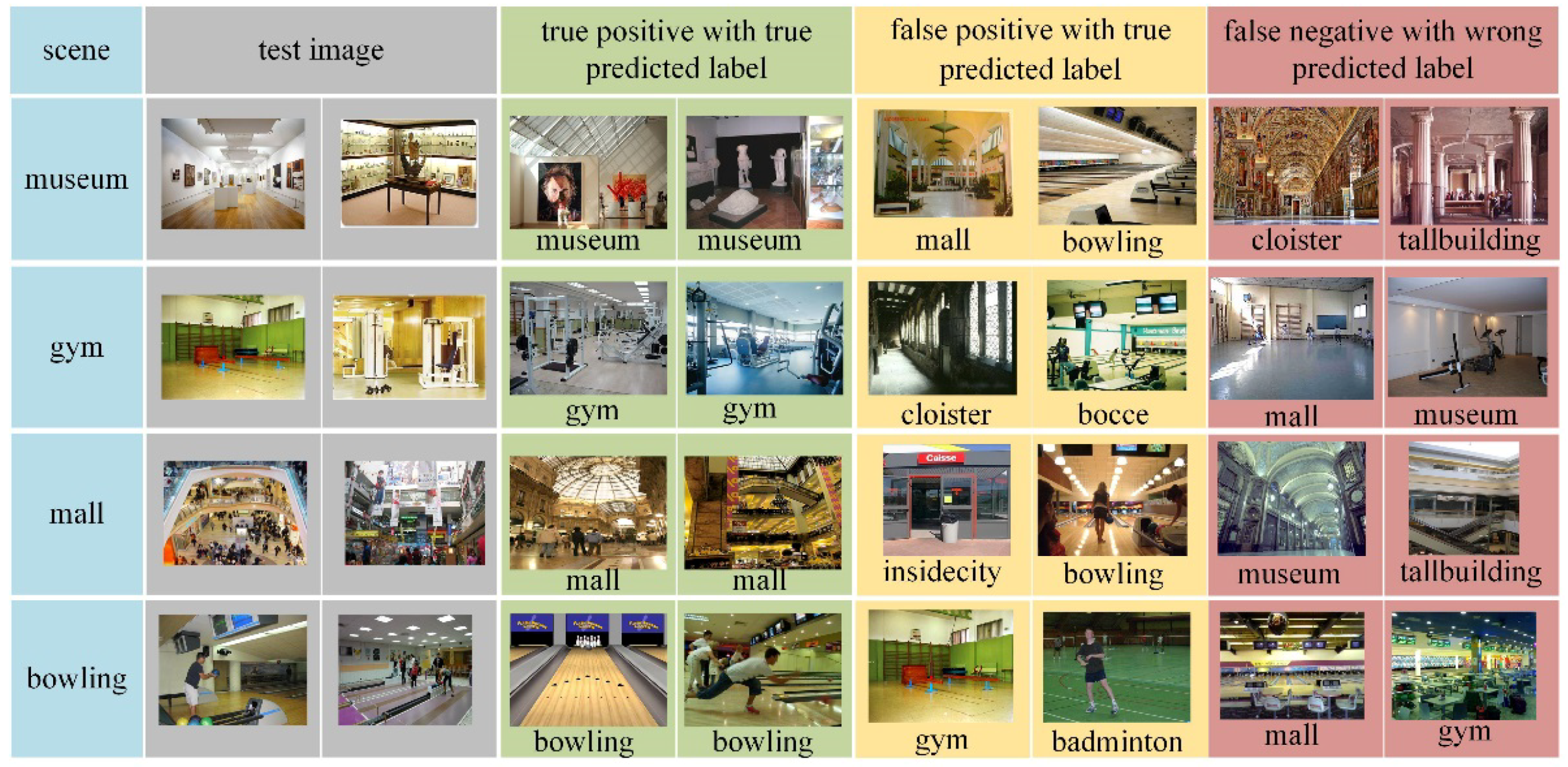

4. Experiments and Results

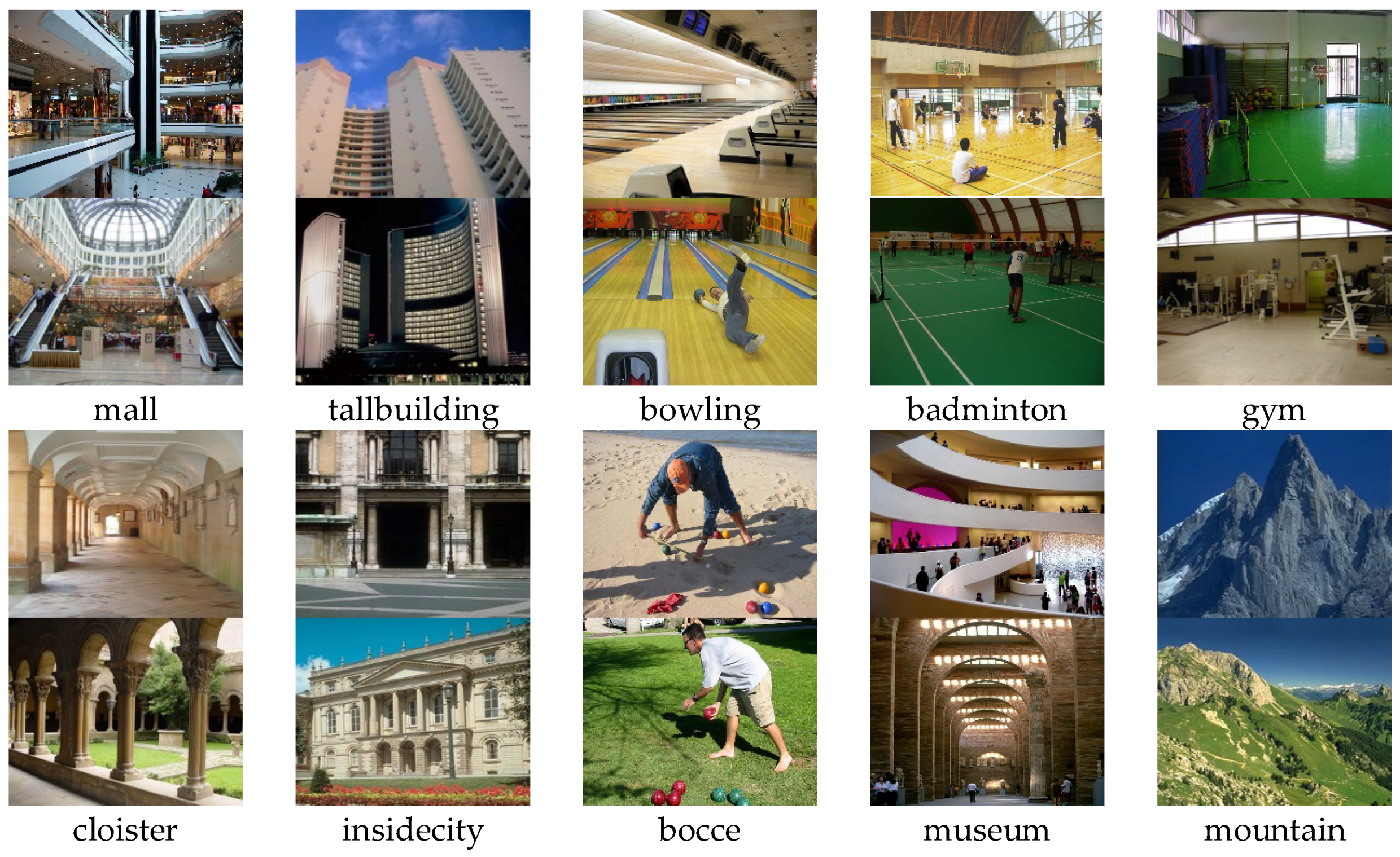

4.1. Description of Datasets

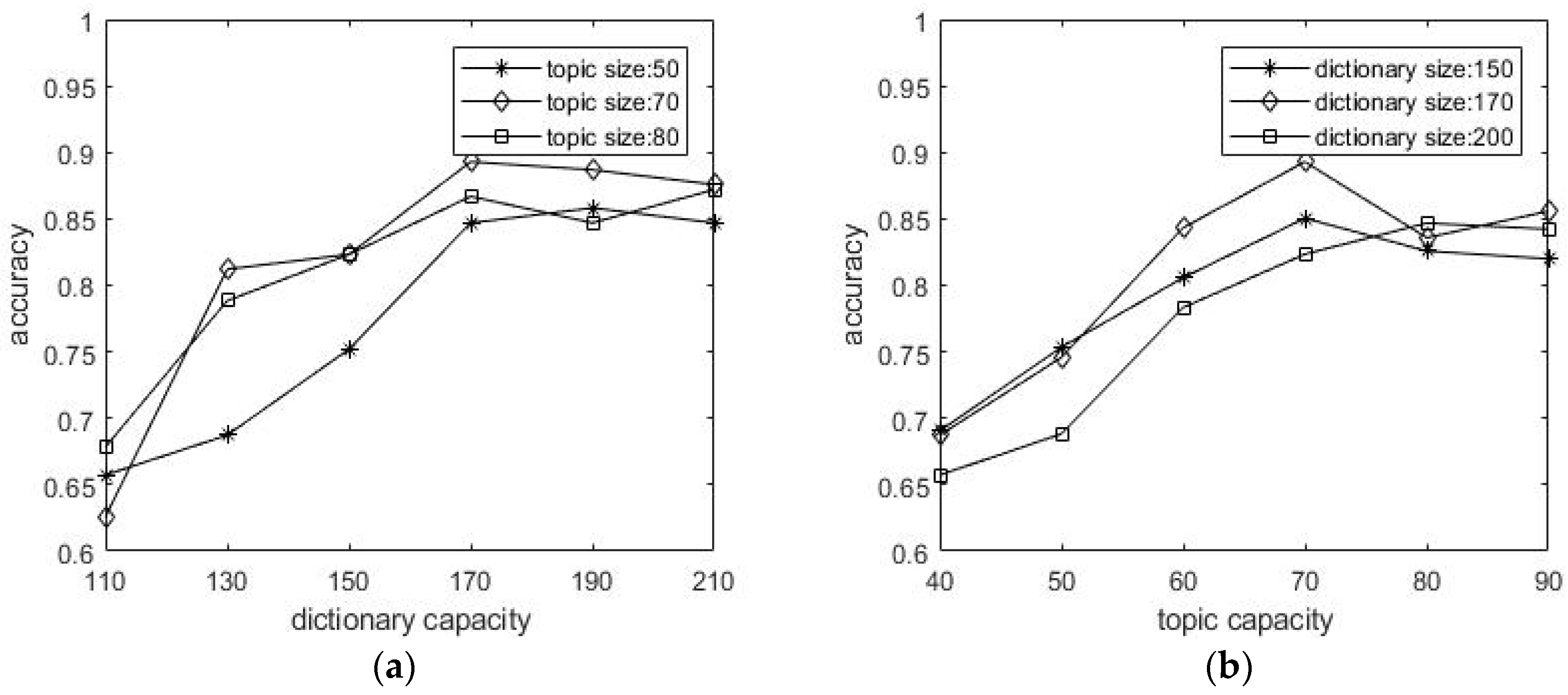

4.2. Parameter Analysis

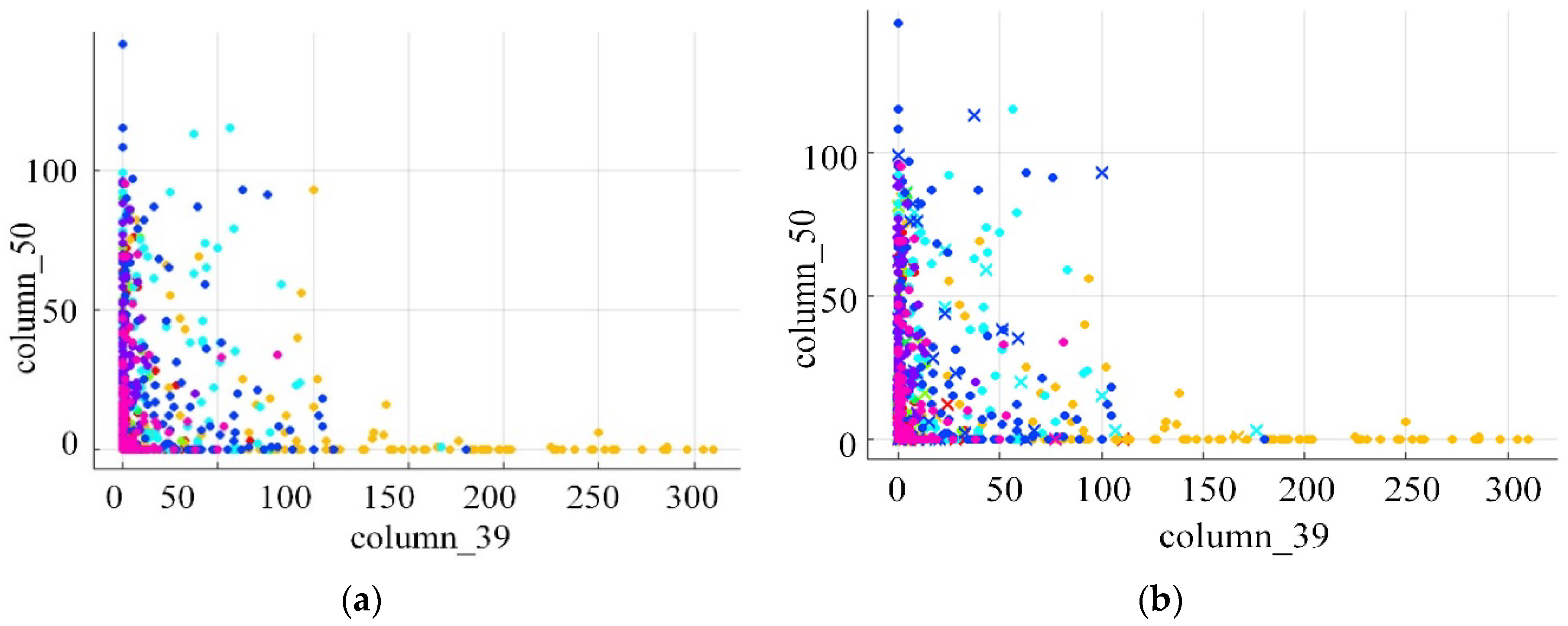

4.3. Results of Different Classifiers

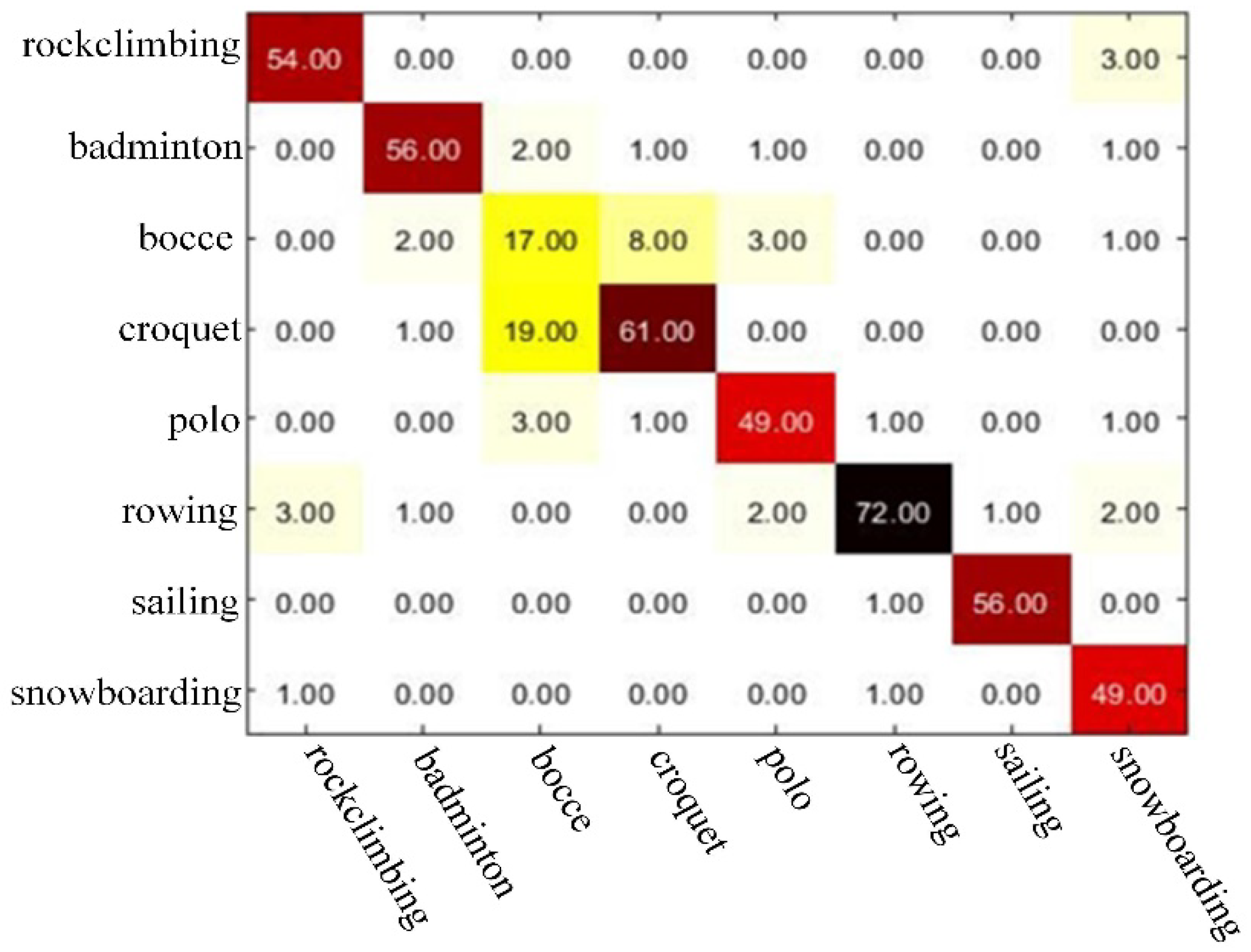

4.4. Results and Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Blei, D.M.; Ng, A.Y.; Jordan, M.I. Latent Dirichlet Allocation. J. Mach. Learn. Res. 2003, 3, 993–1022.

2. Lee, Y.-S.; Lo, R.; Chen, C.-Y.; Lin, P.-C.; Wang, J.-C. News topics categorization using latent Dirichlet allocation and sparse representation classifier. In Proceedings of the 2015 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW), Taipei, Taiwan, 6–8 June 2015; pp. 136–137.

3. Ma, Y.; Jiang, Z.; Zhang, H.; Xie, F.; Zheng, Y.; Shi, H.; Zhao, Y. Breast histopathological image retrieval based on latent dirichlet allocation. IEEE J. Biomed. Health Inform. 2017, 21, 1114–1123.

4. Zhao, B.; Zhong, Y.; Xia, G.-S.; Zhang, L. Dirichlet-derived multiple topic scene classification model for high spatial resolution remote sensing imagery. IEEE Trans. Geosci. Remote Sens. 2016, 54, 2108–2123.

5. Tirunillai, S.; Tellis, G.J. Mining marketing meaning from online chatter: Strategic brand analysis of big data using latent dirichlet allocation. J. Mark. Res. 2014, 51, 463–479.

6. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems (NIPS 2012), Proceedings of The Twenty-Sixth Annual Conference on Neural Information Processing Systems (NIPS), Stateline, NV, USA, 3–8 December 2012; Neural Information Processing Systems Foundation, Inc.: San Diego, CA, USA, 2012; pp. 1097–1105.

7. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

8. Quattoni, A.; Torralba, A. Recognizing indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2009), Miami, FL, USA, 20–25 June 2009; pp. 413–420.

9. Hofmann, T. Probabilistic latent semantic analysis. In Proceedings of the Fifteenth Conference on Uncertainty in Artificial Intelligence, Stockholm, Sweden, 30 July–1 August 1999; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1999; pp. 289–296.

10. Bosch, A.; Zisserman, A.; Muñoz, X. Scene classification via pLSA. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; Springer: Berlin/Heidelberg, Germany, 2006; pp. 517–530.

11. Fei-Fei, L.; Perona, P. A bayesian hierarchical model for learning natural scene categories. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2005), San Diego, CA, USA, 20–25 June 2005; pp. 524–531.

12. Niu, X.-X.; Suen, C.Y. A novel hybrid CNN–SVM classifier for recognizing handwritten digits. Pattern Recognit. 2012, 45, 1318–1325.

13. Zhen, K.; Birla, M.; Crandall, D.; Zhang, B.; Qiu, J. Hybrid supervised-unsupervised image topic visualization with convolutional neural network and LDA. arXiv, 2017; arXiv:1703.05243.

14. Wang, Z.; Wang, Y.; Wang, L.; Qiao, Y. Codebook enhancement of VLAD representation for visual recognition. In Proceedings of the 2016 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Shanghai, China, 20–25 March 2016; pp. 1258–1262.

15. Wang, Z.; Wang, L.; Wang, Y.; Zhang, B.; Qiao, Y. Weakly supervised PatchNets: Describing and aggregating local patches for scene recognition. IEEE Trans. Image Process. 2017, 26, 2028–2041.

16. Wang, L.; Wang, Z.; Qiao, Y.; Van Gool, L. Transferring deep object and scene representations for event recognition in still images. Int. J. Comput. Vis. 2018, 126, 390–409.

17. Li, Z.; Tian, W.; Li, Y.; Kuang, Z.; Liu, Y. A more effective method for image representation: Topic model based on latent dirichlet allocation. In Proceedings of the 2015 14th International Conference on Computer-Aided Design and Computer Graphics (CAD/Graphics), Xi’an, China, 26–28 August 2015; pp. 143–148.

18. Razavian, A.S.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN features off-the-shelf: An astounding baseline for recognition. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Columbus, OH, USA, 23–28 June 2014; pp. 512–519.

19. Qiao, Y.; Wang, L.; Guo, S.; Wang, Z.; Huang, W.; Wang, Y. Good Practice on Deep Scene Classification: From Local Supervision to Knowledge Guided Disambiguation. Available online: https://wangzheallen.github.io/papers/SceneGP.pdf (accessed on 17 April 2018).

20. Arthur, D.; Vassilvitskii, S. K-means++: The advantages of careful seeding. In Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 7–9 January 2007; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2007; pp. 1027–1035.

21. Zhao, B.; Fei-Fei, L.; Xing, E.P. Image segmentation with topic random field. In Proceedings of the European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; Springer: Berlin/Heidelberg, Germany, 2010; pp. 785–798.

22. Li, L.-J.; Li, F. What, where and who? Classifying events by scene and object recognition. In Proceedings of the IEEE 11th International Conference on Computer Vision (ICCV 2007), Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

23. Oliva, A.; Torralba, A. Modeling the shape of the scene: A holistic representation of the spatial envelope. Int. J. Comput. Vis. 2001, 42, 145–175.

24. Zang, M.; Wen, D.; Wang, K.; Liu, T.; Song, W. A novel topic feature for image scene classification. Neurocomputing 2015, 148, 467–476.

25. Zang, M.; Wen, D.; Liu, T.; Zou, H.; Liu, C. A pooled object bank descriptor for image scene classification. Expert Syst. Appl. 2018, 94, 250–264.

26. Jeon, J.; Kim, M. A spatial class LDA model for classification of sports scene images. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 4649–4653.

27. Gao, S.; Tsang, I.W.-H.; Chia, L.-T.; Zhao, P. Local features are not lonely–Laplacian sparse coding for image classification. In Proceedings of the 2010 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Francisco, CA, USA, 13–18 June 2010; pp. 3555–3561.

| Classifier | UIUC Sports | Labelme |
|---|---|---|
| KNN | 82.36% | 85.58% |
| SVM | 87.34% | 91.04% |

| No. | Method | UIUC Sports (%) | Labelme (%) |
|---|---|---|---|
| 1. | Topic Feature [24] | 70.63 | 80.75 |
| 2. | OB [25] | 82.30 | 86.35 |
| 3. | scLDA [26] | 81.60 | -- |
| 4. | LScSPM [27] | 85.31 ± 0.51 | -- |
| 5. | CNN + LDA | 85.58 | 88.43 |
| 6. | Our approach | 87.34 | 91.04 |

| No. | Method | Combined (%) |
|---|---|---|
| 1. | SIFT + LDA | 65.83 |
| 2. | Proposed-SIFT + LDA | 68.21 |
| 3. | CNN + LDA | 81.25 |
| 4. | Fine-tuning VGG16 + LDA | 83.58 |
| 5. | Our approach | 82.93 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Feng, J.; Fu, A. Scene Semantic Recognition Based on Probability Topic Model. Information 2018, 9, 97. https://doi.org/10.3390/info9040097