How Metaphors of Organizational Accidents and Their Graphical Representations Can Guide (or Bias) the Understanding and Analysis of Risks

Abstract

1. Introduction

- How do metaphors influence the comprehension of organizational accidents?

- How can the graphical representation of metaphors shape the observers’ comprehension of accidents?

2. Accident Metaphors

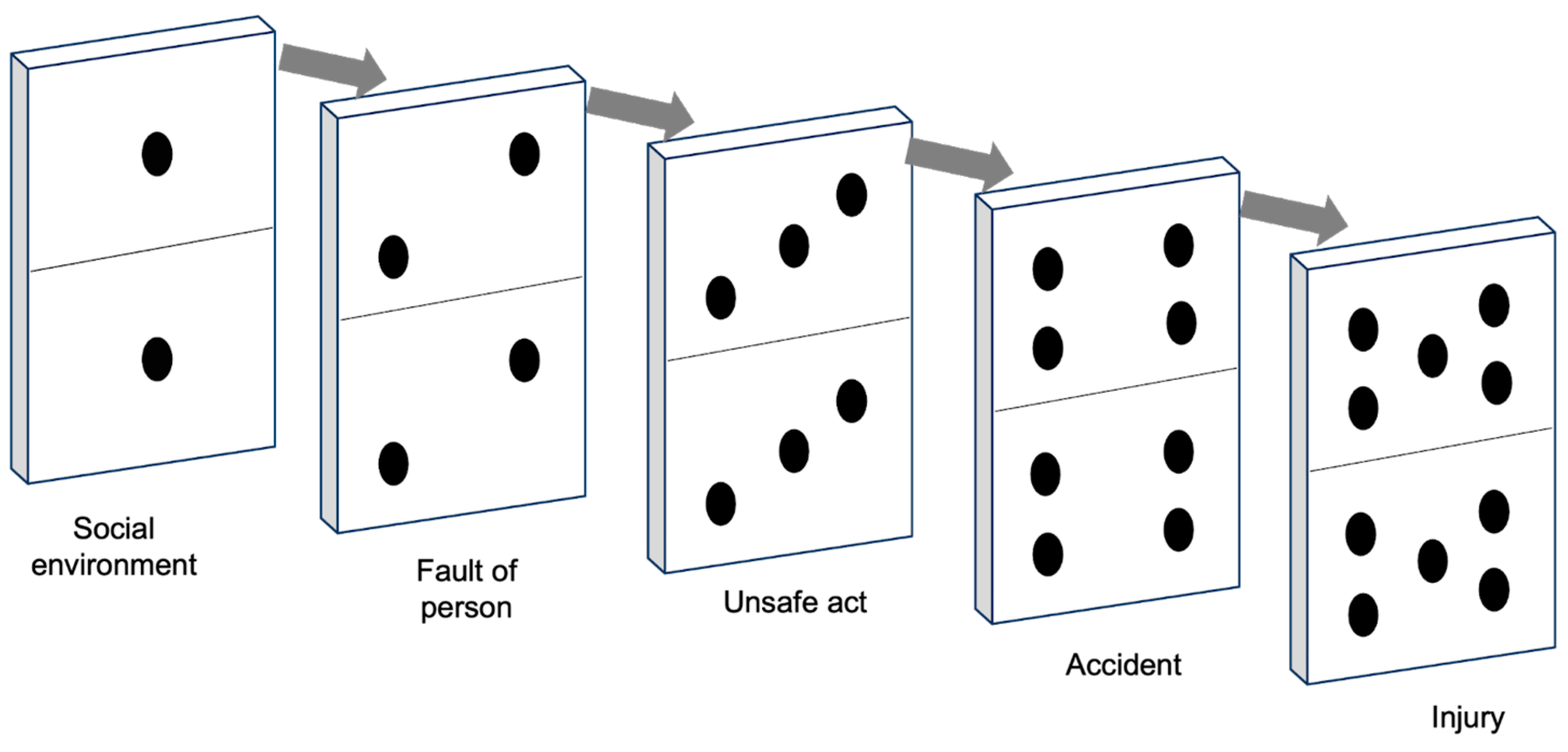

- Simple sequential linear accident models: The basic linear models assume that accidents result from a chain of interconnected events or circumstances that unfold sequentially and in a straightforward manner. Therefore, removing any one of the causes in this linear chain can prevent the accident.

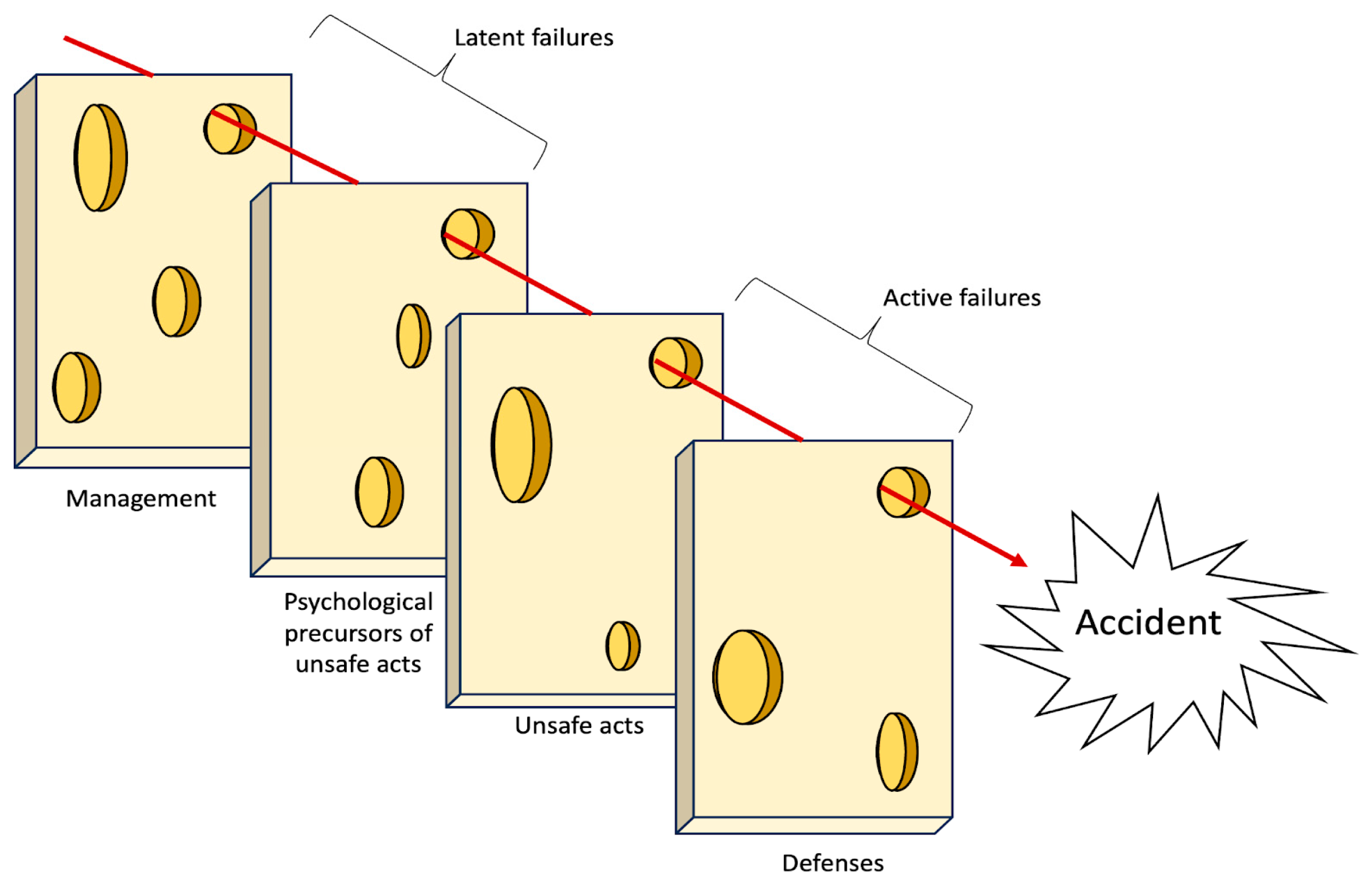

- Complex linear models: These models assume that accidents arise from a combination of hazardous conditions within the system and unsafe actions. These factors align along a linear trajectory: those farther from the accident are associated with organizational or environmental actions, and those closer to it with human interactions. The underlying belief is that accidents can be averted by reinforcing barriers and defenses.

- Complex non-linear models: Their fundamental assumption is that accidents are best understood as the outcome of multiple variables interacting with one another in real-world settings; only by grasping how these factors combine and interact can accidents be genuinely explained and effectively prevented.
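The two linear assumptions above can be contrasted in a minimal Python sketch. It is an illustration under stated assumptions, not a model taken from the article: the event names, barrier names and hole positions are hypothetical, and each barrier is reduced to a set of discrete "holes". In the simple linear view the accident occurs only if every event in the chain is present, so removing any single link prevents it; in the complex linear view a hazard reaches the outcome only if every defensive layer has a hole at the same position, so closing the hole in any one layer stops it.

```python
from dataclasses import dataclass


def chain_accident(chain: list[str], removed: set[str]) -> bool:
    """Simple linear (domino) view: the accident occurs only if no link has been removed."""
    return all(event not in removed for event in chain)


@dataclass(frozen=True)
class Barrier:
    """One defensive layer; `holes` are hypothetical positions where it offers no protection."""
    name: str
    holes: frozenset[int]


def trajectory_passes(barriers: list[Barrier], position: int) -> bool:
    """Complex linear (Swiss cheese) view: the hazard gets through only if every layer has a hole here."""
    return all(position in barrier.holes for barrier in barriers)


if __name__ == "__main__":
    # Hypothetical chain of events, ordered from remote to proximal.
    chain = ["latent condition", "unsafe act", "loss of control"]
    print(chain_accident(chain, removed=set()))           # True
    print(chain_accident(chain, removed={"unsafe act"}))  # False: one link removed

    # Hypothetical layers of defense with holes at different positions.
    barriers = [
        Barrier("design", frozenset({2, 5})),
        Barrier("procedures", frozenset({2, 7})),
        Barrier("supervision", frozenset({2, 3})),
    ]
    print(trajectory_passes(barriers, 2))  # True: the holes line up
    print(trajectory_passes(barriers, 5))  # False: only "design" has a hole at 5
```

Both functions reduce the accident to a conjunction of independent failures. Neither represents how factors interact with one another, which is precisely what the complex non-linear models treat as essential.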

2.1. Linear Models: The Domino Metaphor

How Does the Domino Metaphor Guide (and Bias) the Comprehension of Organizational Accidents?

2.2. Complex Linear Models: The Swiss Cheese Model

2.2.1. How Does the Swiss Cheese Metaphor Guide (and Bias) the Comprehension of Organizational Accidents?

2.2.2. How Can the Graphical Representation of Swiss Cheese Model Shape (and Bias) the Observers’ Comprehension of Accidents?

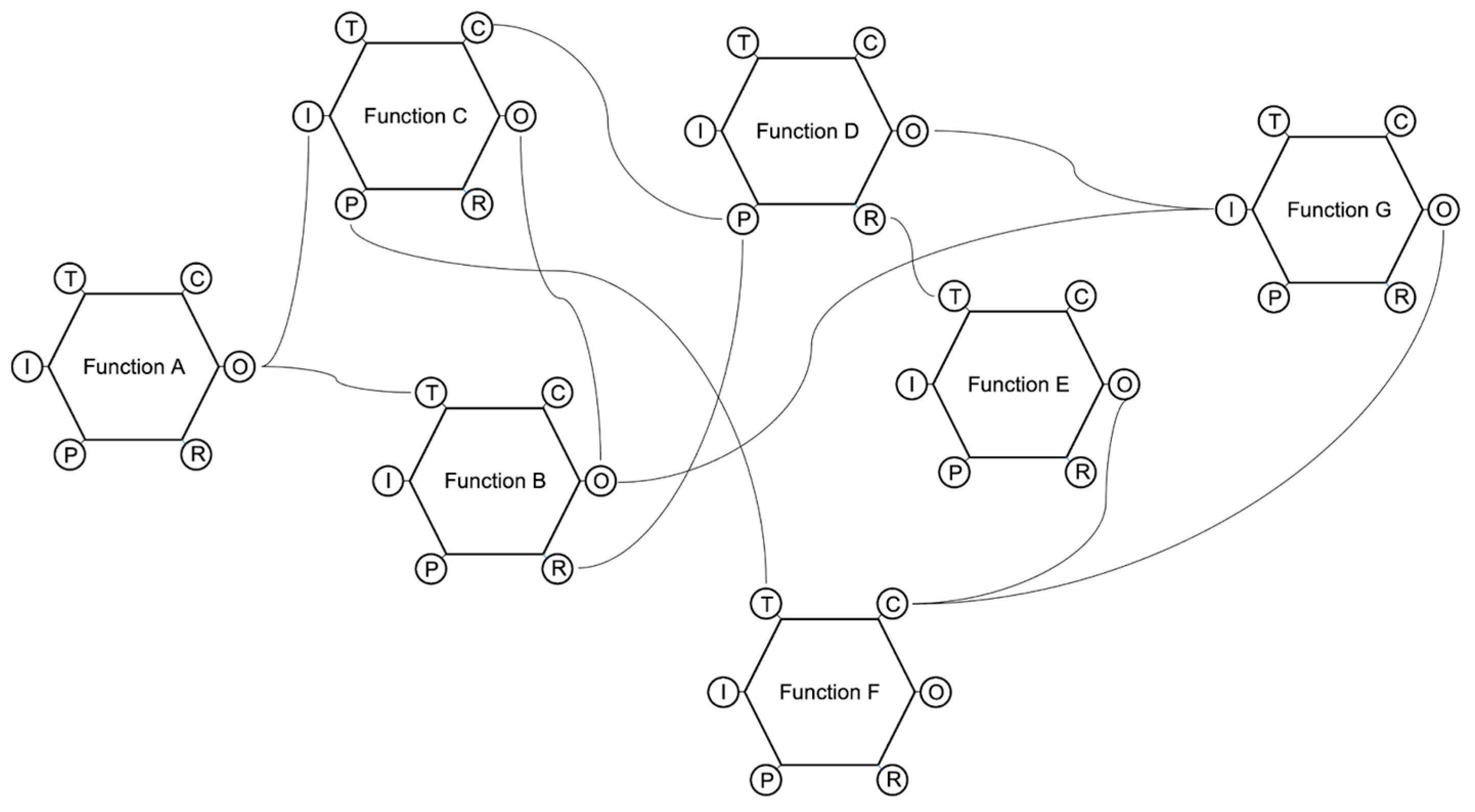

2.3. Complex Non-Linear Models: The Functional Resonance Analysis Method (FRAM)

- (a) Input: the starting point of the function; what the function receives to begin its activity.

- (b) Output: the result of the function.

- (c) Preconditions: conditions that must be in place before the function can be performed.

- (d) Resources: what the function needs to have in order to be executed.

- (e) Time: time constraints that limit the function.

- (f) Control: how the function is monitored and controlled.
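The six aspects can be sketched as a small data structure. This is a minimal illustration, not FRAM software or the authors' method; it assumes that a coupling exists whenever a named output of one function appears in the input, preconditions, resources, time or control of another function, and the medication-related function names and items are hypothetical.

```python
from dataclasses import dataclass, field

# Aspects of a downstream function that an upstream output can feed.
ASPECTS = ("input", "precondition", "resource", "time", "control")


@dataclass
class Function:
    """A FRAM-style function: each aspect holds the names of the items it involves."""
    name: str
    input: set[str] = field(default_factory=set)
    output: set[str] = field(default_factory=set)
    precondition: set[str] = field(default_factory=set)
    resource: set[str] = field(default_factory=set)
    time: set[str] = field(default_factory=set)
    control: set[str] = field(default_factory=set)


def couplings(functions: list[Function]) -> list[tuple[str, str, str, str]]:
    """List (upstream, item, downstream, aspect) for every output reused by another function."""
    links = []
    for upstream in functions:
        for downstream in functions:
            if upstream is downstream:
                continue
            for aspect in ASPECTS:
                for item in sorted(upstream.output & getattr(downstream, aspect)):
                    links.append((upstream.name, item, downstream.name, aspect))
    return links


if __name__ == "__main__":
    # Hypothetical medication-administration functions, for illustration only.
    functions = [
        Function("Identify patient", output={"patient identified"}),
        Function("Prescribe medication", output={"prescription"}),
        Function("Prepare dose", input={"prescription"},
                 resource={"drug stock"}, output={"prepared dose"}),
        Function("Administer dose", input={"prepared dose"},
                 precondition={"patient identified"}, output={"dose given"}),
    ]
    for link in couplings(functions):
        print(link)
```

Because the couplings are derived from the aspects rather than fixed in advance as a single sequential chain, one function (here "Administer dose") can depend on several upstream functions at once, through different aspects.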

How Does the Graphical Representation of the FRAM Metaphor Guide the Comprehension of Organizational Accidents?

3. A Framework for Developing Models of Organizational Accidents

- create the ground for a shared interpretation of accidents through a simplified description of the relevant phenomena;

- provide a tool for framing and communicating safety issues to all levels of an organization;

- enable people to analyze the accident, preventing personal biases and opening the door for effective solutions;

- guide investigations in terms of which data to collect and analyze and how to process them;

- highlight and facilitate the analysis of interactions between factors and conditions behind an accident.

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Feature | Rationale | Model’s Functions |
|---|---|---|
| System structure | Complex systems are often hierarchically organized | The model should be able to: |
| System component relationships | Accidents are emergent properties of the system’s several components | The model should be able to: |
| System behavior | Socio-technical systems dynamically balance production and protection | The model should be able to: |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bracco, F.; Ivaldi, M. How Metaphors of Organizational Accidents and Their Graphical Representations Can Guide (or Bias) the Understanding and Analysis of Risks. J. Intell. 2023, 11, 199. https://doi.org/10.3390/jintelligence11100199


Bracco, Fabrizio, and Martina Ivaldi. 2023. "How Metaphors of Organizational Accidents and Their Graphical Representations Can Guide (or Bias) the Understanding and Analysis of Risks" Journal of Intelligence 11, no. 10: 199. https://doi.org/10.3390/jintelligence11100199