A Log File Analysis on the Validity of Partial Solutions in Figural Matrices Tests

Abstract

1. Introduction

1.1. Reasoning as a Key Component of General Intelligence

1.2. Assessment of Reasoning through Matrices Tests

1.3. Partial Scoring in Matrices Tests

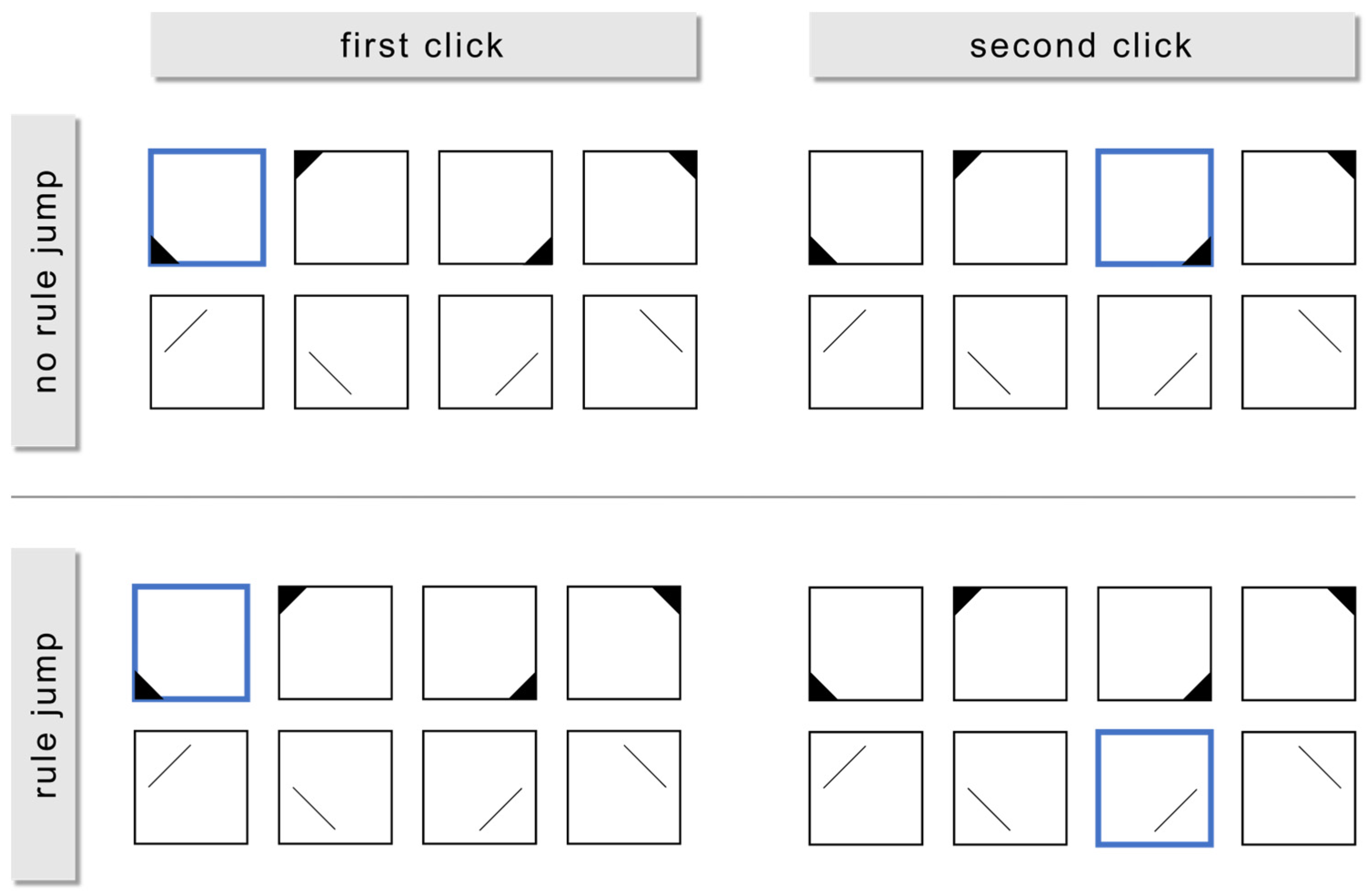

1.4. Structuredness in Item Processing

1.5. External Perspective on Partial Solutions

1.6. Research Goals

2. Study 1

2.1. Method

2.1.1. Sample and Test Procedure

2.1.2. Statistical Analysis

2.2. Results

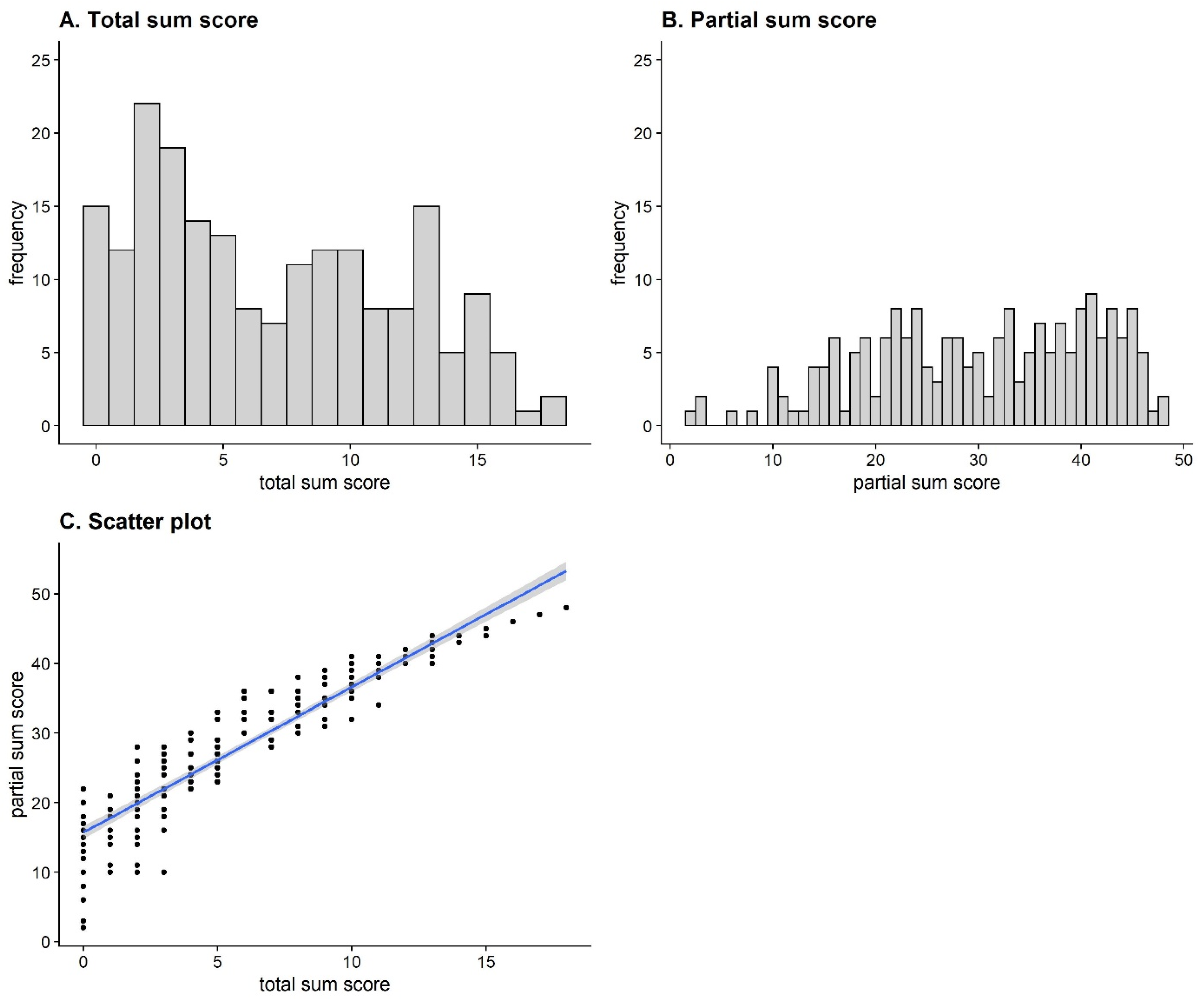

2.2.1. Descriptive Statistics and Internal Consistencies

2.2.2. Relationship between Total and Partial Sum Scores (RQ1)

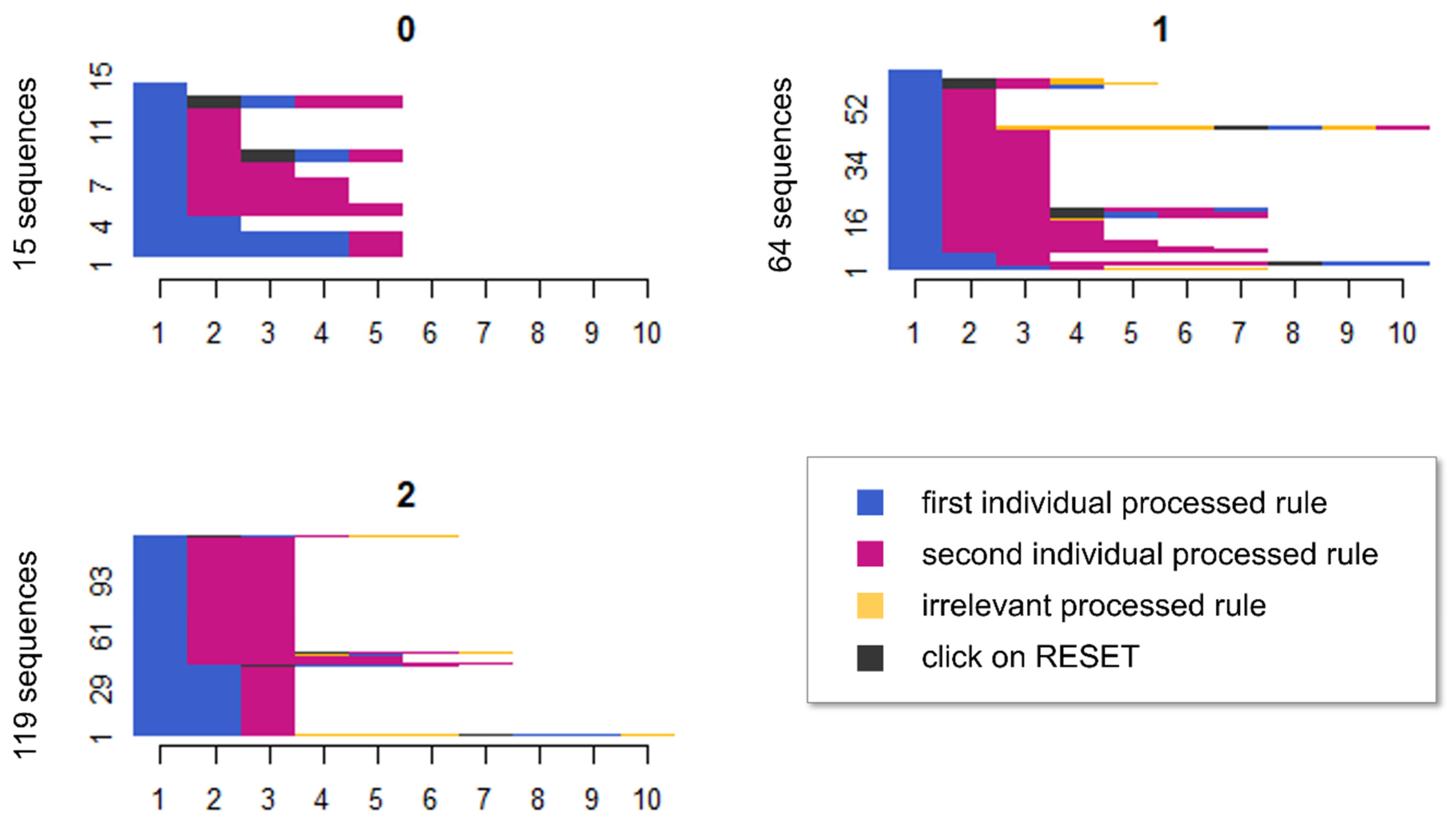

2.2.3. Sequence Analyses (RQ2a)

2.2.4. Rule Jumps (RQ2b)

2.3. Discussion

3. Study 2

3.1. Method

3.1.1. Sample and Test Procedure

3.1.2. Statistical Analysis

3.2. Results

3.2.1. Descriptive Statistics and Internal Consistencies

3.2.2. Relationship between Total and Partial Sum Scores

3.2.3. Practical Value of the Partial Solution Procedure Compared to the Total Solution Procedure

3.3. Discussion

4. General Discussion

4.1. Consolidation of the Studies

4.2. Limitations and Future Prospects

4.3. Applicability of the Partial Solution Procedure to Other Intelligence Components

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Item | NoR | p (total) | α<sub>dropped</sub> (total) | r<sub>i(t−i)</sub> (total) | M (partial) | SD (partial) | α<sub>dropped</sub> (partial) | r<sub>i(t−i)</sub> (partial) |
|---|---|---|---|---|---|---|---|---|
| 1 | 2 | .54 | .89 | .31 | 1.49 | 0.59 | .92 | .37 |
| 2 | 2 | .57 | .89 | .44 | 1.50 | 0.63 | .92 | .46 |
| 3 | 2 | .53 | .89 | .52 | 1.44 | 0.70 | .92 | .62 |
| 4 | 2 | .33 | .89 | .63 | 1.16 | 0.69 | .92 | .66 |
| 5 | 2 | .30 | .88 | .41 | 1.19 | 0.62 | .92 | .44 |
| 6 | 2 | .39 | .89 | .47 | 1.20 | 0.73 | .92 | .54 |
| 7 | 2 | .51 | .89 | .49 | 1.26 | 0.83 | .92 | .56 |
| 8 | 2 | .13 | .89 | .47 | 0.91 | 0.59 | .92 | .48 |
| 9 | 2 | .15 | .89 | .49 | 0.69 | 0.72 | .92 | .54 |
| 10 | 2 | .59 | .89 | .64 | 1.53 | 0.63 | .92 | .64 |
| 11 | 3 | .32 | .88 | .51 | 2.03 | 0.88 | .92 | .58 |
| 12 | 3 | .46 | .89 | .58 | 2.22 | 0.88 | .92 | .63 |
| 13 | 3 | .43 | .88 | .62 | 2.21 | 0.87 | .92 | .69 |
| 14 | 3 | .35 | .88 | .65 | 1.85 | 0.99 | .92 | .74 |
| 15 | 3 | .61 | .88 | .63 | 2.16 | 1.17 | .92 | .75 |
| 16 | 4 | .21 | .89 | .50 | 2.23 | 1.31 | .91 | .81 |
| 17 | 4 | .26 | .88 | .66 | 2.22 | 1.38 | .91 | .81 |
| 18 | 5 | .21 | .89 | .51 | 2.83 | 1.61 | .92 | .79 |

Note. NoR = number of rules; total = total-solution scoring; partial = partial-solution scoring; p = item difficulty (proportion of correct total solutions); α<sub>dropped</sub> = Cronbach's α if the item is dropped; r<sub>i(t−i)</sub> = corrected item-total correlation; M, SD = mean and standard deviation of the partial-solution item scores.
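The columns of the item tables follow classical test theory definitions. As a minimal sketch (not the authors' analysis code), the Python below computes them for a hypothetical toy score matrix: for 0/1 total-solution scores the item mean is the difficulty p, and for partial-solution scores the same function yields M and SD. `TOY_SCORES`, `item_statistics` and the other helper names are illustrative only. Using sample variances (n − 1) is an assumption; it does not affect α, because the denominators cancel in the variance ratio.

```python
from math import sqrt
from statistics import mean, stdev, variance

# Hypothetical toy data: rows = persons, columns = items (1 = solved, 0 = not solved).
TOY_SCORES = [
    [1, 1, 1],
    [1, 1, 0],
    [1, 0, 0],
    [0, 0, 0],
    [1, 1, 1],
]


def column(scores, j):
    """Return the scores of all persons on item j."""
    return [row[j] for row in scores]


def cronbach_alpha(scores):
    """Cronbach's alpha for a persons x items matrix (needs at least two items)."""
    k = len(scores[0])
    item_var_sum = sum(variance(column(scores, j)) for j in range(k))
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def pearson(x, y):
    """Pearson product-moment correlation of two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def item_statistics(scores):
    """Per item: M (= p for 0/1 items), SD, alpha if item dropped, corrected item-total r."""
    k = len(scores[0])
    stats = []
    for j in range(k):
        item = column(scores, j)
        rest = [sum(row) - row[j] for row in scores]  # total score without item j
        reduced = [row[:j] + row[j + 1:] for row in scores]  # matrix without item j
        stats.append({
            "M": mean(item),
            "SD": stdev(item),  # sample SD (n - 1 denominator)
            "alpha_dropped": cronbach_alpha(reduced),
            "r_it": pearson(item, rest),
        })
    return stats


if __name__ == "__main__":
    print(f"alpha = {cronbach_alpha(TOY_SCORES):.3f}")
    for j, s in enumerate(item_statistics(TOY_SCORES), start=1):
        print(j, {name: round(value, 2) for name, value in s.items()})
```

The corrected item-total correlation correlates each item with the sum of the remaining items, so the item's own variance does not inflate the coefficient. Applied to a real persons × items matrix, the same functions would produce columns of the kind shown in the table, although the published values need not have been computed this way.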

| Item | NoR | p (total) | α<sub>dropped</sub> (total) | r<sub>i(t−i)</sub> (total) | M (partial) | SD (partial) | α<sub>dropped</sub> (partial) | r<sub>i(t−i)</sub> (partial) |
|---|---|---|---|---|---|---|---|---|
| 1 | 1 | .82 | .93 | .21 | 0.82 | 0.38 | .95 | .18 |
| 2 | 1 | .89 | .93 | .29 | 0.89 | 0.31 | .95 | .21 |
| 3 | 1 | .42 | .92 | .45 | 0.42 | 0.50 | .95 | .38 |
| 4 | 2 | .75 | .92 | .44 | 1.72 | 0.51 | .95 | .38 |
| 5 | 2 | .64 | .93 | .35 | 1.54 | 0.67 | .95 | .34 |
| 6 | 2 | .56 | .92 | .42 | 0.91 | 0.29 | .95 | .28 |
| 7 | 2 | .89 | .93 | .28 | 1.86 | 0.43 | .95 | .37 |
| 8 | 2 | .72 | .92 | .44 | 1.68 | 0.57 | .95 | .49 |
| 9 | 2 | .74 | .92 | .55 | 1.64 | 0.65 | .95 | .58 |
| 10 | 3 | .49 | .92 | .52 | 2.12 | 1.04 | .95 | .61 |
| 11 | 3 | .53 | .92 | .54 | 2.13 | 1.11 | .95 | .54 |
| 12 | 3 | .64 | .92 | .64 | 2.39 | 0.98 | .95 | .73 |
| 13 | 3 | .70 | .92 | .54 | 2.45 | 0.98 | .95 | .67 |
| 14 | 3 | .75 | .92 | .58 | 2.52 | 0.96 | .95 | .70 |
| 15 | 3 | .73 | .92 | .65 | 2.49 | 0.97 | .95 | .74 |
| 16 | 3 | .50 | .92 | .63 | 2.12 | 1.05 | .95 | .74 |
| 17 | 3 | .76 | .92 | .63 | 2.53 | 0.95 | .95 | .74 |
| 18 | 3 | .59 | .92 | .58 | 2.20 | 1.14 | .95 | .75 |
| 19 | 3 | .62 | .92 | .70 | 2.17 | 1.20 | .94 | .83 |
| 20 | 4 | .49 | .92 | .47 | 2.11 | 1.10 | .95 | .77 |
| 21 | 4 | .46 | .92 | .63 | 2.71 | 1.55 | .94 | .84 |
| 22 | 4 | .64 | .92 | .63 | 2.24 | 1.24 | .94 | .81 |
| 23 | 4 | .56 | .92 | .56 | 2.74 | 1.70 | .94 | .79 |
| 24 | 4 | .43 | .92 | .60 | 2.46 | 1.71 | .94 | .84 |
| 25 | 4 | .59 | .92 | .67 | 1.95 | 1.36 | .94 | .82 |
| 26 | 5 | .22 | .92 | .46 | 2.71 | 2.06 | .94 | .82 |
| 27 | 5 | .45 | .92 | .69 | 2.76 | 2.34 | .95 | .78 |
| 28 | 5 | .47 | .92 | .60 | 2.70 | 2.39 | .94 | .70 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Weber, D.; Krieger, F.; Spinath, F.M.; Greiff, S.; Hissbach, J.; Becker, N. A Log File Analysis on the Validity of Partial Solutions in Figural Matrices Tests. J. Intell. 2023, 11, 37. https://doi.org/10.3390/jintelligence11020037
