Implementing a Gaze Tracking Algorithm for Improving Advanced Driver Assistance Systems

Abstract

1. Introduction

2. Related Research

3. Proposed Eye Tracking Model

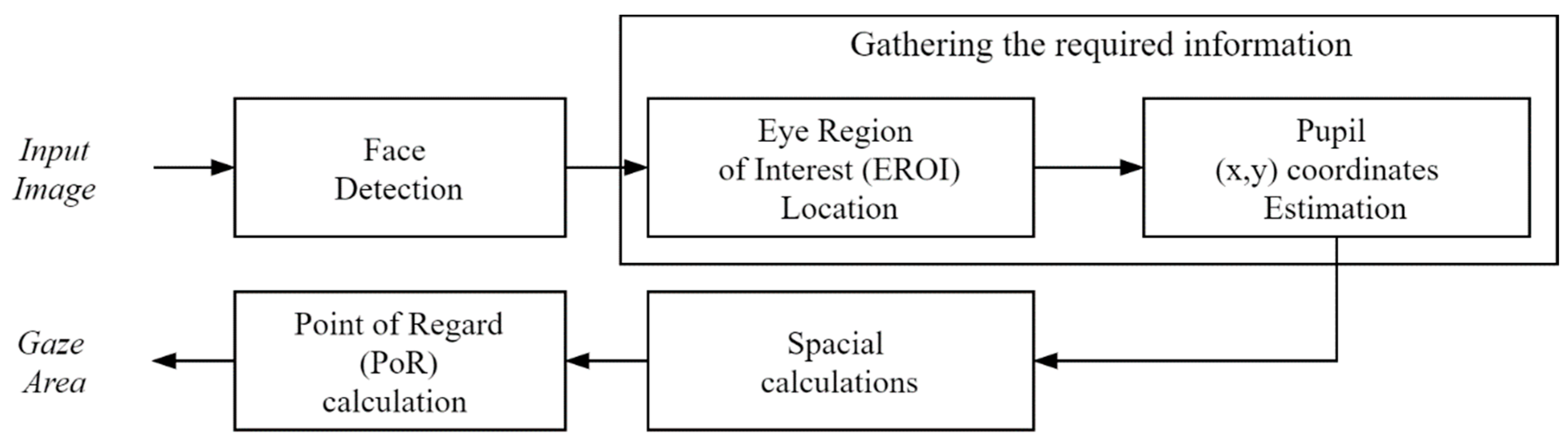

3.1. Gaze Tracking Approach

3.2. Implementation of the Model

- Gathering the required information

- Performing the corresponding spatial calculations

- Calculating the Point of Regard (PoR)

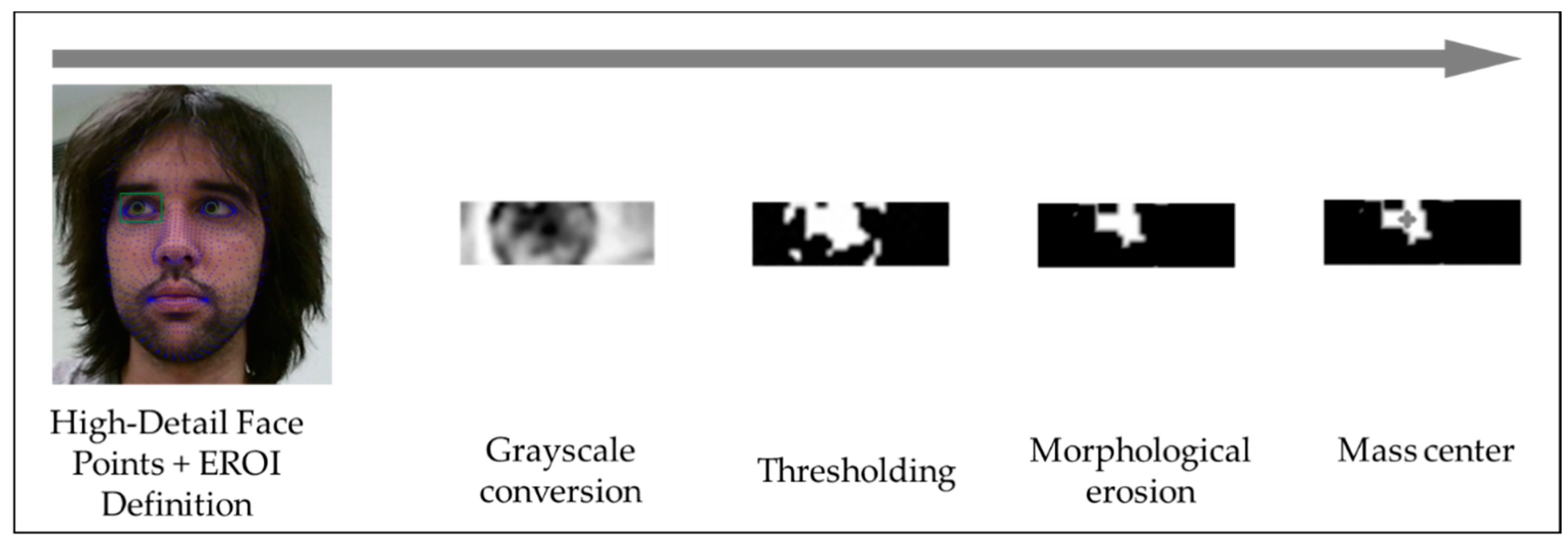

3.2.1. Gathering the Required Information

1. Extraction of the Eye Region of Interest (EROI).
2. Estimation of the Pupil Center Coordinates.
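The two steps above can be illustrated with a minimal NumPy sketch. It assumes that pixel coordinates of the eye landmarks are already available from some face-landmark detector, crops a padded bounding box around them as the EROI, and estimates the pupil center as the centroid of the darkest pixels in that crop. The function names `extract_eroi` and `estimate_pupil_center`, the 20% padding and the dark-pixel quantile are hypothetical choices for illustration, not the authors' method.

```python
import numpy as np


def extract_eroi(gray, eye_landmarks, pad=0.2):
    """Crop a padded bounding box around eye landmarks (hypothetical helper).

    gray: 2-D grayscale image; eye_landmarks: iterable of (x, y) pixel coords.
    Returns the crop and the (x0, y0) offset of its top-left corner.
    """
    pts = np.asarray(eye_landmarks, dtype=float)
    x_min, y_min = pts.min(axis=0)
    x_max, y_max = pts.max(axis=0)
    # Enlarge the box on each side by a fraction of its size (assumed margin).
    dx, dy = pad * (x_max - x_min), pad * (y_max - y_min)
    h, w = gray.shape
    x0 = max(int(np.floor(x_min - dx)), 0)
    y0 = max(int(np.floor(y_min - dy)), 0)
    # +1 makes the far edge inclusive; clip to the image bounds.
    x1 = min(int(np.ceil(x_max + dx)) + 1, w)
    y1 = min(int(np.ceil(y_max + dy)) + 1, h)
    return gray[y0:y1, x0:x1], (x0, y0)


def estimate_pupil_center(eroi, dark_fraction=0.05):
    """Estimate the pupil center as the centroid of the darkest EROI pixels.

    Pixels at or below the dark_fraction intensity quantile (assumed value)
    are treated as pupil. Returns (x, y) in EROI coordinates, or None.
    """
    if eroi.size == 0:
        return None
    threshold = np.quantile(eroi, dark_fraction)
    ys, xs = np.nonzero(eroi <= threshold)
    return float(xs.mean()), float(ys.mean())


# Synthetic example: a dark 11x11 "pupil" centered at (x=65, y=50).
img = np.full((100, 100), 200, dtype=np.uint8)
img[45:56, 60:71] = 20
crop, (x0, y0) = extract_eroi(img, [(50, 40), (80, 40), (50, 60), (80, 60)])
cx, cy = estimate_pupil_center(crop)
print(cx + x0, cy + y0)  # 65.0 50.0
```

On real driver images, eyelashes, eyebrows and shadows are also dark, so a dark-pixel centroid like this would likely need more robust segmentation or filtering before it could serve as a pupil detector.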

3.2.2. Performing the Corresponding Spatial Calculations

3.2.3. Calculating the Point of Regard

- Front. The driver is looking ahead through the car’s windshield; this zone also covers inspecting the rear-view mirror.

- Front-Left. The driver is looking at the left side of the windshield while still looking indirectly ahead through peripheral vision. In this case, the driver focuses their visual attention on dynamic entities in the environment, such as pedestrians and vehicles moving transversely to the vehicle’s trajectory. Therefore, while inspecting this side, the driver is not aware of the other side (right).

- Front-Right. Analogous to the previous case, the driver is looking at the right side of the windshield while still looking indirectly ahead through peripheral vision. Consequently, while inspecting the events that occur in this area, the driver is not aware of the other side (left).

- Left. The driver’s vision is focused on inspecting the left-side rear-view mirror, so the pupil rotates towards the lateral canthus of the eye. The driver is therefore not aware of what is happening ahead of the car or on the right side.

- Right. Conversely, the driver’s vision is focused on inspecting the right-side rear-view mirror, which requires rotating the pupil towards the outer canthus of the eye and may even require turning the head. Therefore, the driver is not aware of what is happening ahead of the car or on the left side.
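The zone descriptions above can be encoded as a small lookup table, which is one way an ADAS alarm module could make use of the recognized zone. The sketch below orders the five zones from left to right and maps each one to the areas the text says the driver is not aware of; the text names no unattended area for Front, so that entry is empty. The names `GazeZone`, `UNATTENDED_AREAS` and `driver_may_miss`, and the three-area vocabulary (`"front"`, `"left"`, `"right"`), are hypothetical and not taken from the authors' implementation.

```python
from enum import IntEnum


class GazeZone(IntEnum):
    """The five gaze zones, ordered from the driver's left to right."""
    LEFT = 0
    FRONT_LEFT = 1
    FRONT = 2
    FRONT_RIGHT = 3
    RIGHT = 4


# Areas the zone descriptions say the driver is not aware of.
# No unattended area is stated for FRONT, so it maps to an empty set.
UNATTENDED_AREAS = {
    GazeZone.LEFT: frozenset({"front", "right"}),
    GazeZone.FRONT_LEFT: frozenset({"right"}),
    GazeZone.FRONT: frozenset(),
    GazeZone.FRONT_RIGHT: frozenset({"left"}),
    GazeZone.RIGHT: frozenset({"front", "left"}),
}


def driver_may_miss(zone: GazeZone, hazard_area: str) -> bool:
    """Return True if a hazard in hazard_area ('front', 'left' or 'right')
    lies in an area the driver is assumed not to be attending."""
    return hazard_area in UNATTENDED_AREAS[zone]


if __name__ == "__main__":
    print(driver_may_miss(GazeZone.LEFT, "right"))  # True
    print(driver_may_miss(GazeZone.FRONT, "left"))  # False
```

Using `IntEnum` keeps the spatial ordering of the zones explicit, which is convenient whenever two zones need to be compared by how far apart they are.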

4. Experimental Design

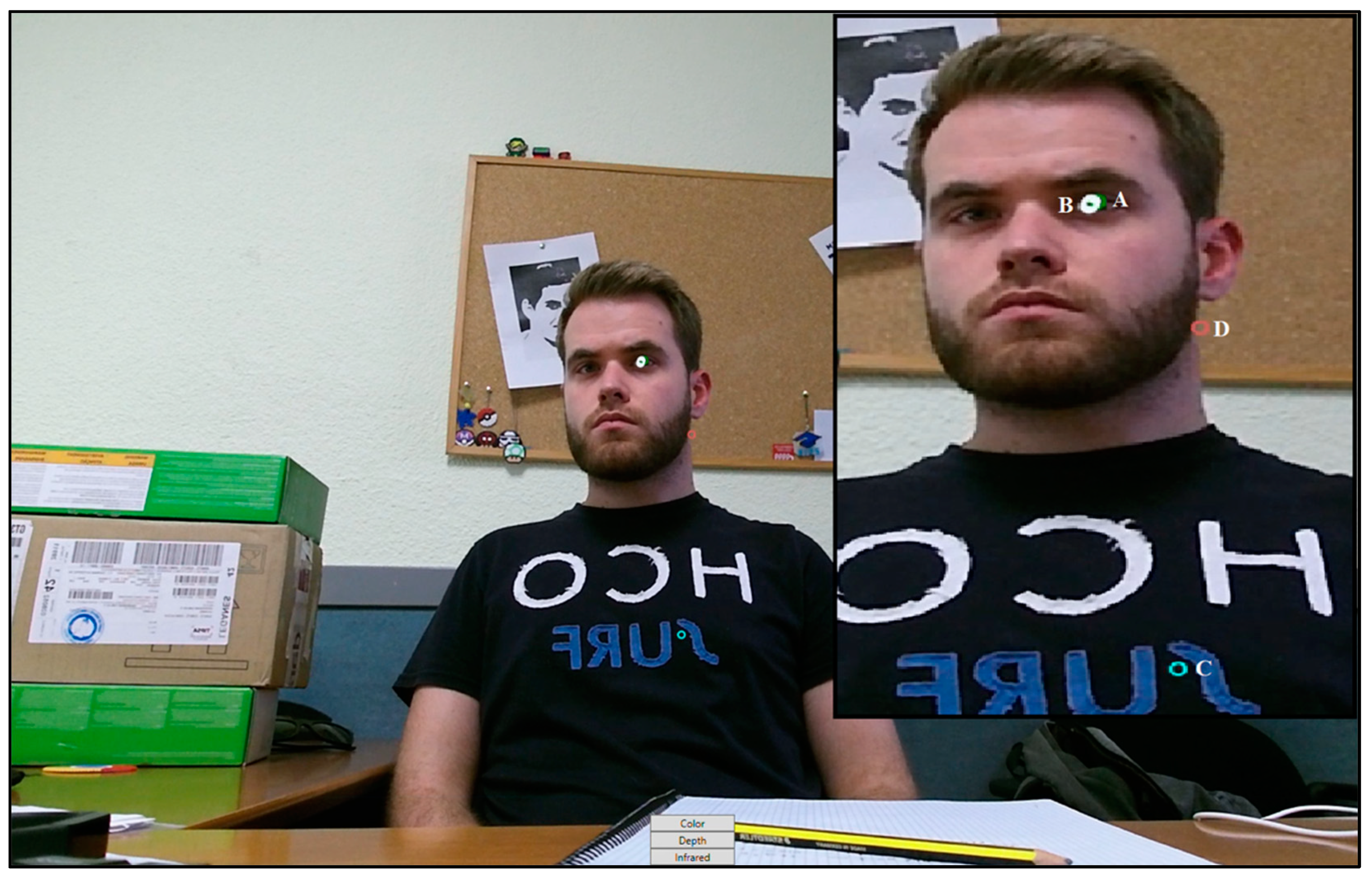

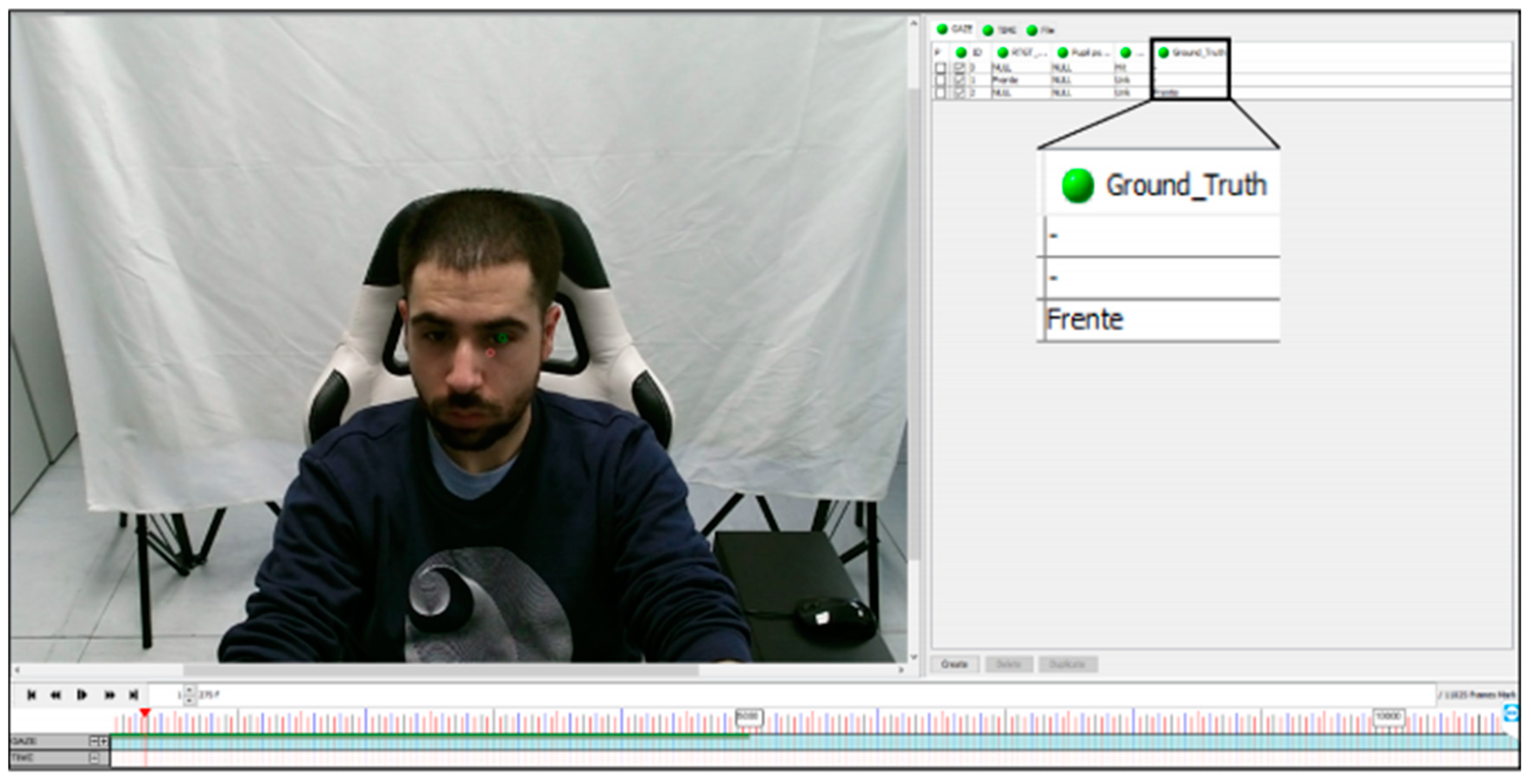

4.1. Data Acquisition in the Set-Up Environment

4.2. Experiment Design in the Driving Simulator Environment

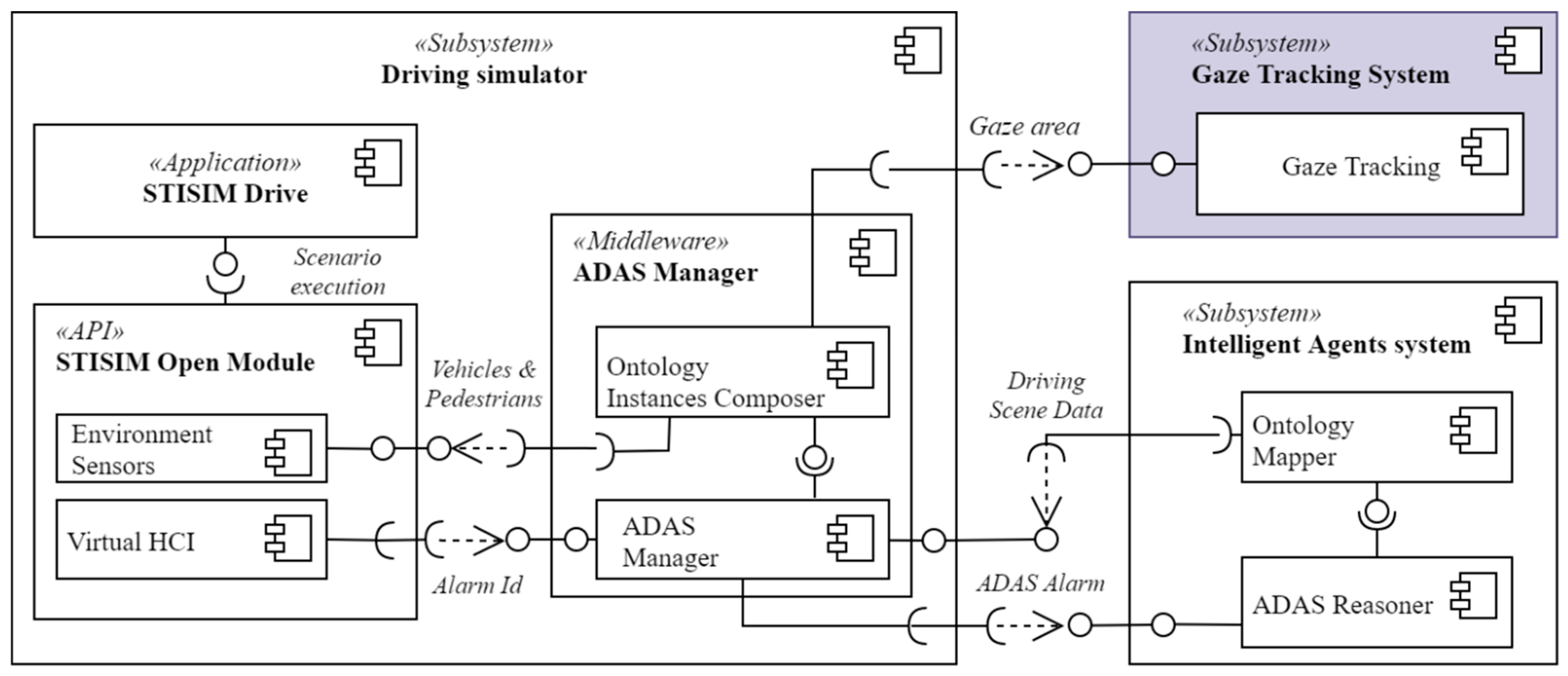

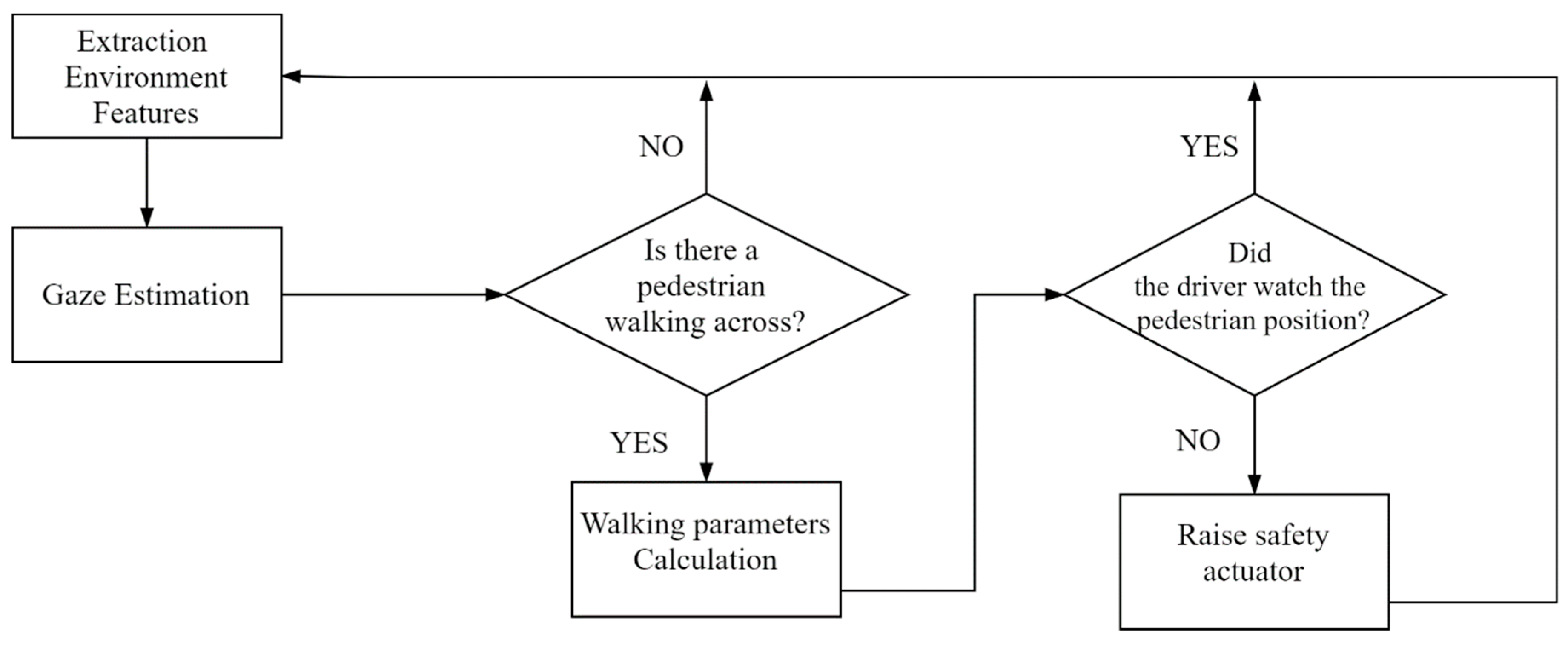

4.2.1. Integration of Gaze Area in the ADAS Based on Data Fusion

4.2.2. Vision System Configuration

4.2.3. Driving Scenario Design

4.3. Experiment Protocol

5. Results

5.1. Set-Up Environment

- Unknown ratio: the fraction of all frames whose gaze zone is not recognized.

- Hit ratio (absolute): the hit ratio computed over all frames, counting unrecognized frames as misses.

- Hit ratio (relative): the hit ratio computed over recognized frames only.

- Mean error: the mean error over recognized frames, where the error of each frame is the distance between its true and recognized classes, as defined in Equation (10).
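The four metrics above can be sketched in Python as follows. Each frame is a pair (true zone index, recognized zone index), with `None` marking an unrecognized frame and zones indexed 0 to 4 from Left to Right. Equation (10) is not reproduced in this text, so the class distance used here, the absolute difference between zone indices, is an assumption. It is consistent with, though not confirmed by, the first confusion matrix reported in this article: there every misclassification falls in an adjacent zone, and the reported mean error (3.63%) equals 100% minus the relative hit ratio (96.37%). The function name `gaze_metrics` is hypothetical.

```python
from typing import Optional, Sequence, Tuple

# (true zone index, recognized zone index or None if unrecognized)
Frame = Tuple[int, Optional[int]]


def gaze_metrics(frames: Sequence[Frame]) -> dict:
    """Compute unknown ratio, absolute/relative hit ratios and mean error.

    Zones are integer indices ordered left to right (0 = Left ... 4 = Right).
    The distance |true - recognized| is an assumed stand-in for Eq. (10).
    """
    if not frames:
        raise ValueError("at least one frame is required")
    total = len(frames)
    recognized = [(t, r) for t, r in frames if r is not None]
    n_rec = len(recognized)
    hits = sum(1 for t, r in recognized if t == r)
    distance_sum = sum(abs(t - r) for t, r in recognized)
    return {
        # Fraction of all frames with no recognized zone.
        "unknown_ratio": (total - n_rec) / total,
        # Hits over all frames; unrecognized frames count as misses.
        "hit_ratio_absolute": hits / total,
        # Hits over recognized frames only.
        "hit_ratio_relative": hits / n_rec if n_rec else 0.0,
        # Mean zone distance over recognized frames.
        "mean_error": distance_sum / n_rec if n_rec else 0.0,
    }


# Toy data: 6 correct Front frames, one Front->Front-Right,
# one Left->Front, and two unrecognized frames.
frames = [(2, 2)] * 6 + [(2, 3), (0, 2), (4, None), (1, None)]
print(gaze_metrics(frames))
```

By construction, the absolute hit ratio equals the relative hit ratio multiplied by (1 − unknown ratio); in the toy data, 0.75 × 0.8 = 0.6. The reported figures agree with this relation to within rounding, for example 96.37% × (1 − 0.0079) ≈ 95.61% against the reported 95.60%.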

5.2. Driving Simulator Environment

6. Discussion

7. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. World Health Organization. Mobile Phone Use: A Growing Problem of Driver Distraction; World Health Organization: Geneva, Switzerland, 2011.

2. World Health Organization. Global Status Report on Road Safety; World Health Organization: Geneva, Switzerland, 2015.

3. Sugiyanto, G.; Santi, M. Road traffic accident cost using human capital method (case study in purbalingga, central java, indonesia). J. Teknol. 2017, 79, 107–116.

4. Braitman, K.A.; Chaudhary, N.K.; McCartt, A.T. Effect of Passenger Presence on Older Drivers’ Risk of Fatal Crash Involvement. Traffic Inj. Prev. 2014, 15, 451–456.

5. Rueda-Domingo, T.; Lardelli-Claret, P.; Luna-Del-Castillo, J.D.D.; Jimenez-Moleon, J.J.; García-Martín, M.; Bueno-Cavanillas, A. The influence of passengers on the risk of the driver causing a car collision in Spain. Accid. Anal. Prev. 2004, 36, 481–489.

6. Bengler, K.; Dietmayer, K.; Farber, B.; Maurer, M.; Stiller, C.; Winner, H. Three Decades of Driver Assistance Systems: Review and Future Perspectives. IEEE Intell. Transp. Syst. Mag. 2014, 6, 6–22.

7. TEXA S.p.A. ADAS Solutions. Maintenance of Advanced Driver Assistance Systems (v6). Treviso. 2017. Available online: https://www.texa.ru/PressArea/Brochure/pdf/pieghevole-adas-en-gb-v6.pdf (accessed on 18 June 2021).

8. Morales-Fernández, J.M.; Díaz-Piedra, C.; Rieiro, H.; Roca-González, J.; Romero, S.; Catena, A.; Fuentes, L.J.; Di Stasi, L.L. Monitoring driver fatigue using a single-channel electroencephalographic device: A validation study by gaze-based, driving performance, and subjective data. Accid. Anal. Prev. 2017, 109, 62–69.

9. Yassine, N.; Barker, S.; Hayatleh, K.; Choubey, B.; Nagulapalli, R. Simulation of driver fatigue monitoring via blink rate detection, using 65 nm CMOS technology. Analog. Integr. Circuits Signal. Process. 2018, 95, 409–414.

10. Vahidi, A.; Eskandarian, A. Research advances in intelligent collision avoidance and adaptive cruise control. IEEE Trans. Intell. Transp. Syst. 2003, 4, 143–153.

11. Lee, J.; Hoffman, J.D.; Hayes, E. Collision warning design to mitigate driver distraction. In Proceedings of the 2004 Conference on Applications, Technologies, Architectures, and Protocols for Computer Communications—SIGCOMM ’04; Association for Computing Machinery: New York, NY, USA, 2004; pp. 65–72.

12. Gutierrez, G.; Iglesias, J.A.; Ordoñez, F.J.; Ledezma, A.; Sanchis, A. Agent-based framework for Advanced Driver Assistance Systems in urban environments. In Proceedings of the 17th International Conference on Information Fusion, Salamanca, Spain, 7–10 July 2014; pp. 1–8.

13. Sipele, O.; Zamora, V.; Ledezma, A.; Sanchis, A. Testing ADAS though simulated driving situations analysis: Environment configuration. In Proceedings of the First Symposium SEGVAUTO-TRIES-CM. Technologies for a Safe, Accessible and Sustainable Mobility; R&D+I in Automotive: RESULTS: Madrid, Spain, 2016; pp. 23–26.

14. Zamora, V.; Sipele, O.; Ledezma, A.; Sanchis, A. Intelligent Agents for Supporting Driving Tasks: An Ontology-based Alarms System. In Proceedings of the 3rd International Conference on Vehicle Technology and Intelligent Transport Systems; Science and Technology Publications: Setúbal, Portugal, 2017; pp. 165–172.

15. Dickmanns, E.D.; Mysliwetz, B.D. Recursive 3-D road and relative ego-state recognition. IEEE Trans. Pattern Anal. Mach. Intell. 1992, 14, 199–213.

16. Viktorová, L.; Šucha, M. Learning about advanced driver assistance systems—The case of ACC and FCW in a sample of Czech drivers. Transp. Res. Part. F Traffic Psychol. Behav. 2019, 65, 576–583.

17. Singh, S. Critical Reasons for Crashes Investigated in the National Motor Vehicle Crash Causation Survey. 2015. Available online: http://www-nrd.nhtsa.dot.gov/Pubs/812115.pdf (accessed on 30 April 2021).

18. Khan, M.Q.; Lee, S. Gaze and Eye Tracking: Techniques and Applications in ADAS. Sensors 2019, 19, 5540.

19. Dong, Y.; Hu, Z.; Uchimura, K.; Murayama, N. Driver Inattention Monitoring System for Intelligent Vehicles: A Review. IEEE Trans. Intell. Transp. Syst. 2011, 12, 596–614.

20. Sigari, M.-H.; Pourshahabi, M.-R.; Soryani, M.; Fathy, M. A Review on Driver Face Monitoring Systems for Fatigue and Distraction Detection. Int. J. Adv. Sci. Technol. 2014, 64, 73–100.

21. Morimoto, C.H.; Mimica, M.R. Eye gaze tracking techniques for interactive applications. Comput. Vis. Image Underst. 2005, 98, 4–24.

22. Jiang, J.; Zhou, X.; Chan, S.; Chen, S. Appearance-Based Gaze Tracking: A Brief Review. In Transactions on Petri Nets and Other Models of Concurrency XV; Springer: Berlin/Heidelberg, Germany, 2019; Volume 11745, pp. 629–640.

23. Wang, Y.; Yuan, G.; Mi, Z.; Peng, J.; Ding, X.; Liang, Z.; Fu, X. Continuous Driver’s Gaze Zone Estimation Using RGB-D Camera. Sensors 2019, 19, 1287.

24. Wang, Y.; Zhao, T.; Ding, X.; Peng, J.; Bian, J.; Fu, X. Learning a gaze estimator with neighbor selection from large-scale synthetic eye images. Knowl.-Based Syst. 2018, 139, 41–49.

25. Zhang, Y.; Bulling, A.; Gellersen, H. Discrimination of gaze directions using low-level eye image features. In Proceedings of the 1st International Workshop on Real World Domain Specific Languages; Association for Computing Machinery: New York, NY, USA, 2011; pp. 9–14.

26. Wang, Y.; Shen, T.; Yuan, G.; Bian, J.; Fu, X. Appearance-based gaze estimation using deep features and random forest regression. Knowl.-Based Syst. 2016, 110, 293–301.

27. Huang, Q.; Veeraraghavan, A.; Sabharwal, A. TabletGaze: Dataset and analysis for unconstrained appearance-based gaze estimation in mobile tablets. Mach. Vis. Appl. 2017, 28, 445–461.

28. Wu, Y.-L.; Yeh, C.-T.; Hung, W.-C.; Tang, C.-Y. Gaze direction estimation using support vector machine with active appearance model. Multimedia Tools Appl. 2014, 70, 2037–2062.

29. Baluja, S.; Pomerleau, D. Non-Intrusive Gaze Tracking Using Artificial Neural Networks. In Proceedings of the Advances in Neural Information Processing Systems 6, 7th NIPS Conference, Denver, Colorado, USA, 1993; Cowan, J.D., Tesauro, G., Alspector, J., Eds.; Morgan Kaufmann, 1993; pp. 753–760. Available online: https://www.aaai.org/Papers/Symposia/Fall/1993/FS-93-04/FS93-04-032.pdf (accessed on 26 April 2021).

30. Vora, S.; Rangesh, A.; Trivedi, M.M. On generalizing driver gaze zone estimation using convolutional neural networks. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 849–854.

31. Palmero, C.; Selva, J.; Bagheri, M.A.; Escalera, S. Recurrent CNN for 3D Gaze Estimation using Appearance and Shape Cues. arXiv 2018. Available online: http://arxiv.org/abs/1805.03064 (accessed on 24 May 2021).

32. Gu, S.; Wang, L.; He, L.; He, X.; Wang, J. Gaze Estimation via a Differential Eyes’ Appearances Network with a Reference Grid. Engineering 2021.

33. Cazzato, D.; Leo, M.; Distante, C.; Voos, H. When I Look into Your Eyes: A Survey on Computer Vision Contributions for Human Gaze Estimation and Tracking. Sensors 2020, 20, 3739.

34. Sesma, L.S. Gaze Estimation: A Mathematical Challenge; Public University of Navarre: Navarre, Spain, 2017.

35. Chen, J.; Tong, Y.; Gray, W.; Ji, Q. A robust 3D eye gaze tracking system using noise reduction. In Proceedings of the 2008 Symposium on Eye Tracking Research & Applications—ETRA ’08; ACM: New York, NY, USA, 2008; pp. 189–196.

36. Guestrin, E.D.; Eizenman, M. General Theory of Remote Gaze Estimation Using the Pupil Center and Corneal Reflections. IEEE Trans. Biomed. Eng. 2006, 53, 1124–1133.

37. Lee, E.C.; Park, K.R. A robust eye gaze tracking method based on a virtual eyeball model. Mach. Vis. Appl. 2009, 20, 319–337.

38. Wang, K.; Su, H.; Ji, Q. Neuro-Inspired Eye Tracking With Eye Movement Dynamics. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; Volume 2019, pp. 9823–9832.

39. Brousseau, B.; Rose, J.; Eizenman, M. Accurate Model-Based Point of Gaze Estimation on Mobile Devices. Vision 2018, 2, 35.

40. iMotions ASL Eye Tracking Glasses. 2018. Available online: https://imotions.com/hardware/argus-science-eye-tracking-glasses/ (accessed on 1 May 2021).

41. Improving Your Research with Eye Tracking Since 2001 | Tobii Pro. Available online: https://www.tobiipro.com/ (accessed on 1 May 2021).

42. Wang, K.; Ji, Q. Real time eye gaze tracking with Kinect. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 2752–2757.

43. Zhou, X.; Cai, H.; Li, Y.; Liu, H. Two-eye model-based gaze estimation from a Kinect sensor. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1646–1653.

44. Al-Naser, M.; Siddiqui, S.A.; Ohashi, H.; Ahmed, S.; Katsuyki, N.; Takuto, S.; Dengel, A. OGaze: Gaze Prediction in Egocentric Videos for Attentional Object Selection. In Proceedings of the 2019 Digital Image Computing: Techniques and Applications (DICTA), Perth, WA, Australia, 2–4 December 2019; pp. 1–8.

45. Fathi, A.; Li, Y.; Rehg, J. Learning to Recognize Daily Actions Using Gaze. In Proceedings of the 12th European Conference on Computer Vision, Florence, Italy, 7–13 October 2012.

46. Zhang, M.; Ma, K.T.; Lim, J.H.; Zhao, Q.; Feng, J. Deep Future Gaze: Gaze Anticipation on Egocentric Videos Using Adversarial Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; Volume 2017, pp. 3539–3548.

47. Liu, G.; Yu, Y.; Mora, K.A.F.; Odobez, J.-M. A Differential Approach for Gaze Estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 1092–1099.

48. Deng, H.; Zhu, W. Monocular Free-Head 3D Gaze Tracking with Deep Learning and Geometry Constraints. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; Volume 2017, pp. 3162–3171.

49. Hagl, M.; Kouabenan, D.R. Safe on the road—Does Advanced Driver-Assistance Systems Use affect Road Risk Perception? Transp. Res. Part. F Traffic Psychol. Behav. 2020, 73, 488–498.

50. Biondi, F.; Strayer, D.L.; Rossi, R.; Gastaldi, M.; Mulatti, C. Advanced driver assistance systems: Using multimodal redundant warnings to enhance road safety. Appl. Ergon. 2017, 58, 238–244.

51. Narote, S.P.; Bhujbal, P.N.; Narote, A.S.; Dhane, D. A review of recent advances in lane detection and departure warning system. Pattern Recognit. 2018, 73, 216–234.

52. Voinea, G.-D.; Postelnicu, C.C.; Duguleana, M.; Mogan, G.-L.; Socianu, R. Driving Performance and Technology Acceptance Evaluation in Real Traffic of a Smartphone-Based Driver Assistance System. Int. J. Environ. Res. Public Health 2020, 17, 7098.

53. Systems Technology Incorporated STISIM Drive: Car Driving Simulator. Available online: https://stisimdrive.com/ (accessed on 1 May 2021).

54. Haugen, I.-B.K. Structural Organisation of Proprioceptors in the Oculomotor System of Mammals. 2002. Available online: https://openarchive.usn.no/usn-xmlui/bitstream/handle/11250/142006/3702haugen.pdf?sequence=1 (accessed on 21 April 2021).

55. Toquero, S.O.; Cristóbal, A.d.S.; Herranz, R.M.; Herráez, V.d.; Zarzuelo, G.R. Relación de los parámetros biométricos en el ojo miope. Gac. Optom. Óptica Oftálmica 2012, 474, 16–22.

56. García, J.P. Estrabismos. Artes Gráficas Toledo. 2008. Available online: http://www.doctorjoseperea.com/libros/estrabismos.html (accessed on 21 April 2021).

57. Emgu CV: OpenCV in .NET (C#, VB, C++ and More). Available online: https://www.emgu.com/wiki/index.php/Main_Page (accessed on 4 May 2021).

58. Ulbrich, S.; Menzel, T.; Reschka, A.; Schuldt, F.; Maurer, M. Defining and Substantiating the Terms Scene, Situation, and Scenario for Automated Driving. In Proceedings of the 2015 IEEE 18th International Conference on Intelligent Transportation Systems, Gran Canaria, Spain, 15–18 September 2015; pp. 982–988.

59. Sipele, O.; Zamora, V.; Ledezma, A.; Sanchis, A. Advanced Driver’s Alarms System through Multi-agent Paradigm. In Proceedings of the 2018 3rd IEEE International Conference on Intelligent Transportation Engineering (ICITE), Singapore, 3–5 September 2018; pp. 269–275.

60. ViPER-GT. Available online: http://viper-toolkit.sourceforge.net/products/gt/ (accessed on 4 May 2021).

61. Ethical Aspects | UC3M. Available online: https://www.uc3m.es/ss/Satellite/LogoHRS4R/en/TextoMixta/1371234170165/Ethical_Aspects (accessed on 1 May 2021).

62. Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. MediaPipe: A Framework for Building Perception Pipelines. arXiv 2019, arXiv:1906.08172.

| Method/Year | Sensor | Frame Rate | Limitations | Domain | Accuracy | Advantages | Disadvantages |
|---|---|---|---|---|---|---|---|
| Our approach | RGBD | 30 fps at 1920 × 1080 | Camera location | Simulated driving environment | 81.84% over inspected areas | Calibration process is not required; 3D data available | Pupil location detection could be improved |
| [42] 2016 | RGBD | 30 fps at 640 × 480 | Person calibration | Screen display | 4.0 degrees | 3D data available | Low resolution limits the operation distance (800 mm) |
| [43] 2017 | RGBD | - | Person calibration | Points on a monitor | 1.99 degrees | 3D data available | Not reproducible; camera limitations (e.g., distance) |
| [44] 2019 | Glasses | 13–18 fps | Person calibration | Industrial scenario | 7.39 (average angular error) | Treats gaze as both a classification and a regression problem; high precision | Not reproducible; intrusive |
| [47] 2019 | RGB | 666 fps | Person calibration | Public datasets | 3–5.82 (average angular error) | Robust to eyelid closing and illumination perturbations | High run-time; not reproducible; 3D data not available |
| [48] 2017 | RGB | 1000 fps | - | Public datasets, screen display | - | Greater usability | Face alignment time must be considered; lower precision; 3D only estimable |

Confusion matrix (%) for the set-up environment. Rows give the true zone and columns the recognized gaze zone; each row sums to 100.

| True Zone | Left | Front-Left | Front | Front-Right | Right |
|---|---|---|---|---|---|
| Left | 100 | 0 | 0 | 0 | 0 |
| Front-Left | 6.91 | 93.09 | 0 | 0 | 0 |
| Front | 0 | 3.08 | 96.57 | 0.35 | 0 |
| Front-Right | 0 | 0 | 4.37 | 93.40 | 2.23 |
| Right | 0 | 0 | 0 | 0.44 | 99.56 |

- Unknown ratio: 0.79%
- Hit ratio (absolute): 95.60%
- Hit ratio (relative): 96.37%
- Mean error: 3.63%

Confusion matrix (%) for the driving simulator environment. Rows give the true zone and columns the recognized gaze zone; each row sums to 100.

| True Zone | Left | Front-Left | Front | Front-Right | Right |
|---|---|---|---|---|---|
| Left | 68.77 | 21.33 | 9.90 | 0 | 0 |
| Front-Left | 2.57 | 51.51 | 44.09 | 1.39 | 0.44 |
| Front | 0 | 2.08 | 93.05 | 4.65 | 0.22 |
| Front-Right | 0 | 2.35 | 31.93 | 64.78 | 0.94 |
| Right | 0 | 0 | 20.61 | 13.74 | 65.65 |

- Unknown ratio: 11.16%
- Hit ratio (absolute): 72.69%
- Hit ratio (relative): 81.84%
- Mean error: 19.14%

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ledezma, A.; Zamora, V.; Sipele, Ó.; Sesmero, M.P.; Sanchis, A. Implementing a Gaze Tracking Algorithm for Improving Advanced Driver Assistance Systems. Electronics 2021, 10, 1480. https://doi.org/10.3390/electronics10121480


Chicago/Turabian style: Ledezma, Agapito, Víctor Zamora, Óscar Sipele, M. Paz Sesmero, and Araceli Sanchis. 2021. "Implementing a Gaze Tracking Algorithm for Improving Advanced Driver Assistance Systems" Electronics 10, no. 12: 1480. https://doi.org/10.3390/electronics10121480