GateRL: Automated Circuit Design Framework of CMOS Logic Gates Using Reinforcement Learning

Abstract

1. Introduction

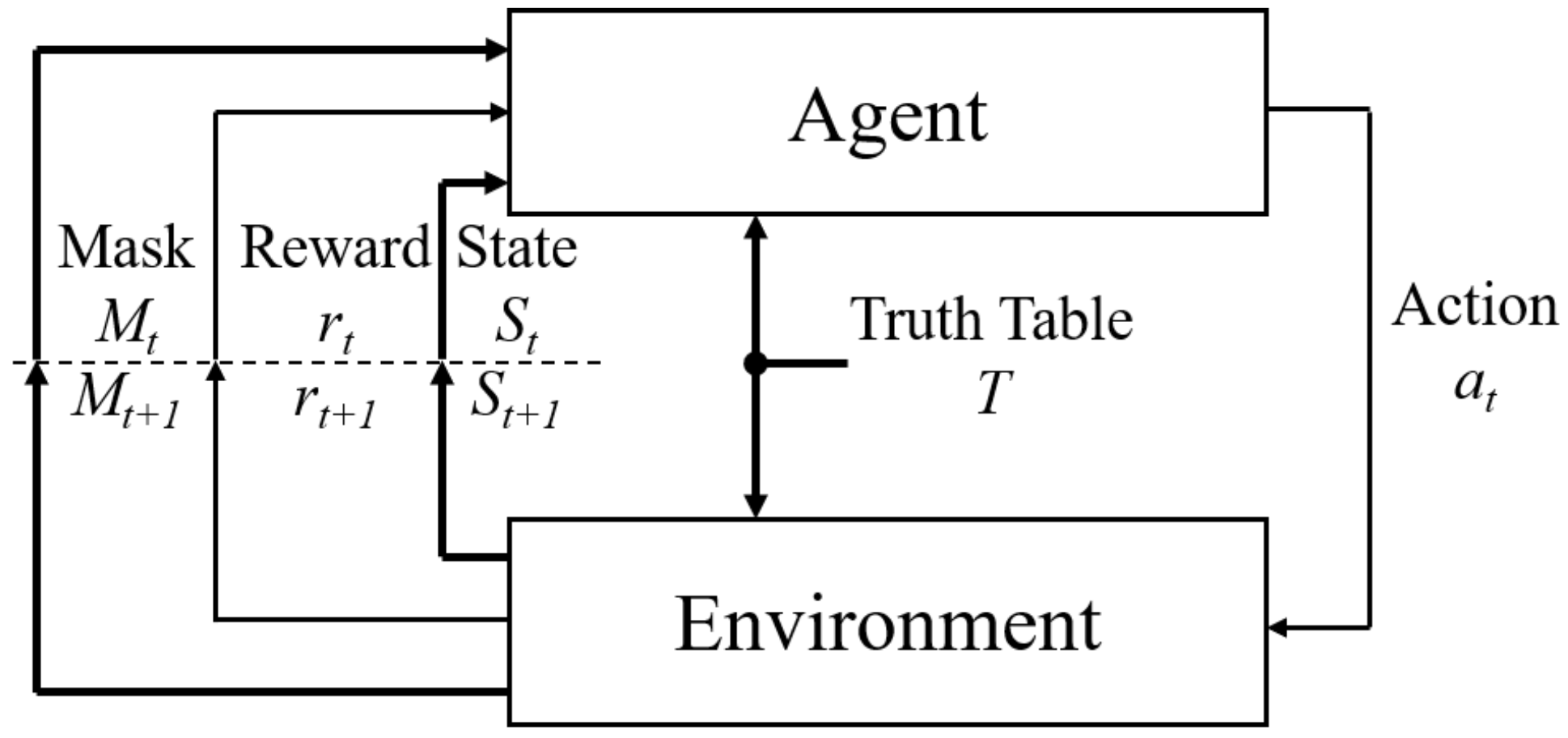

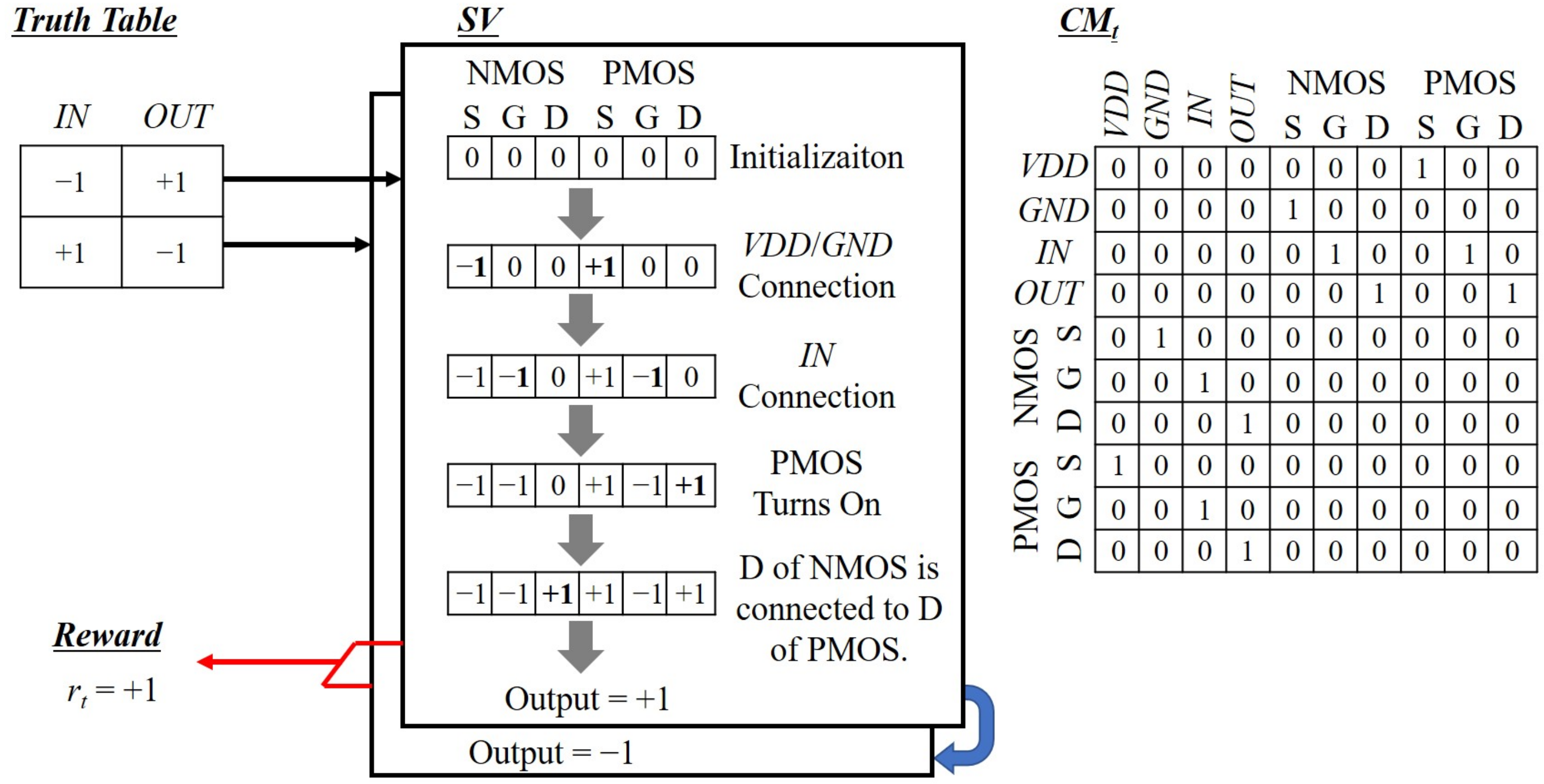

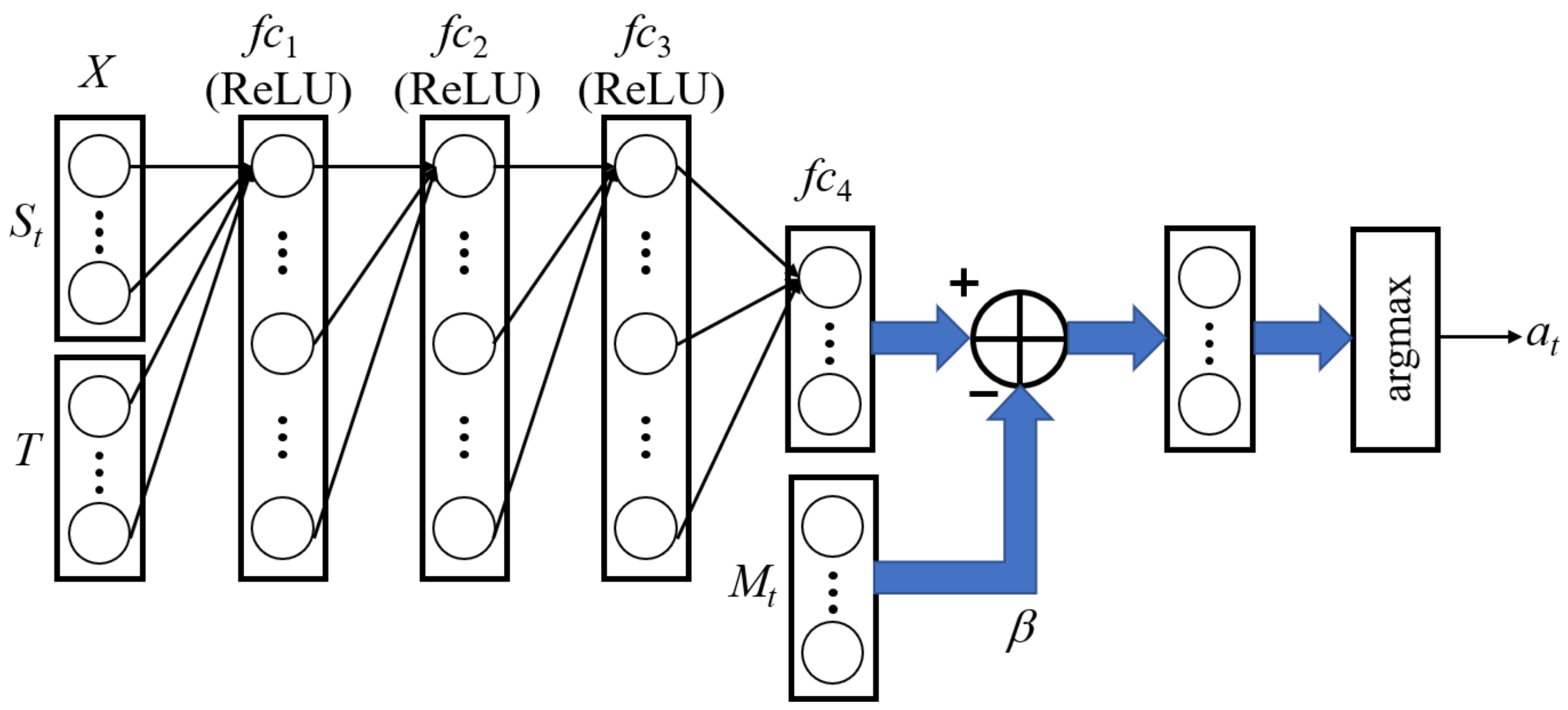

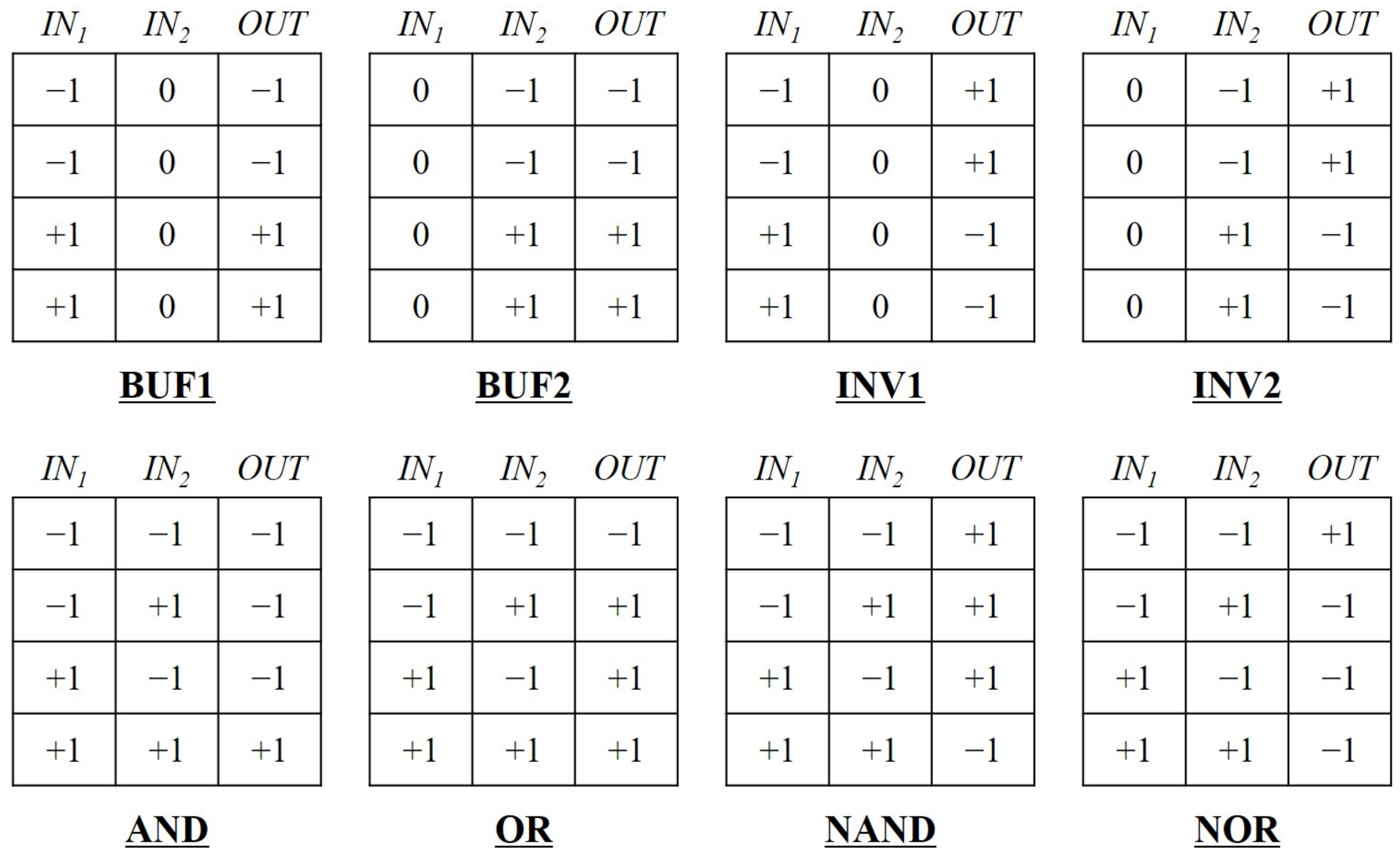

2. Proposed GateRL Architecture

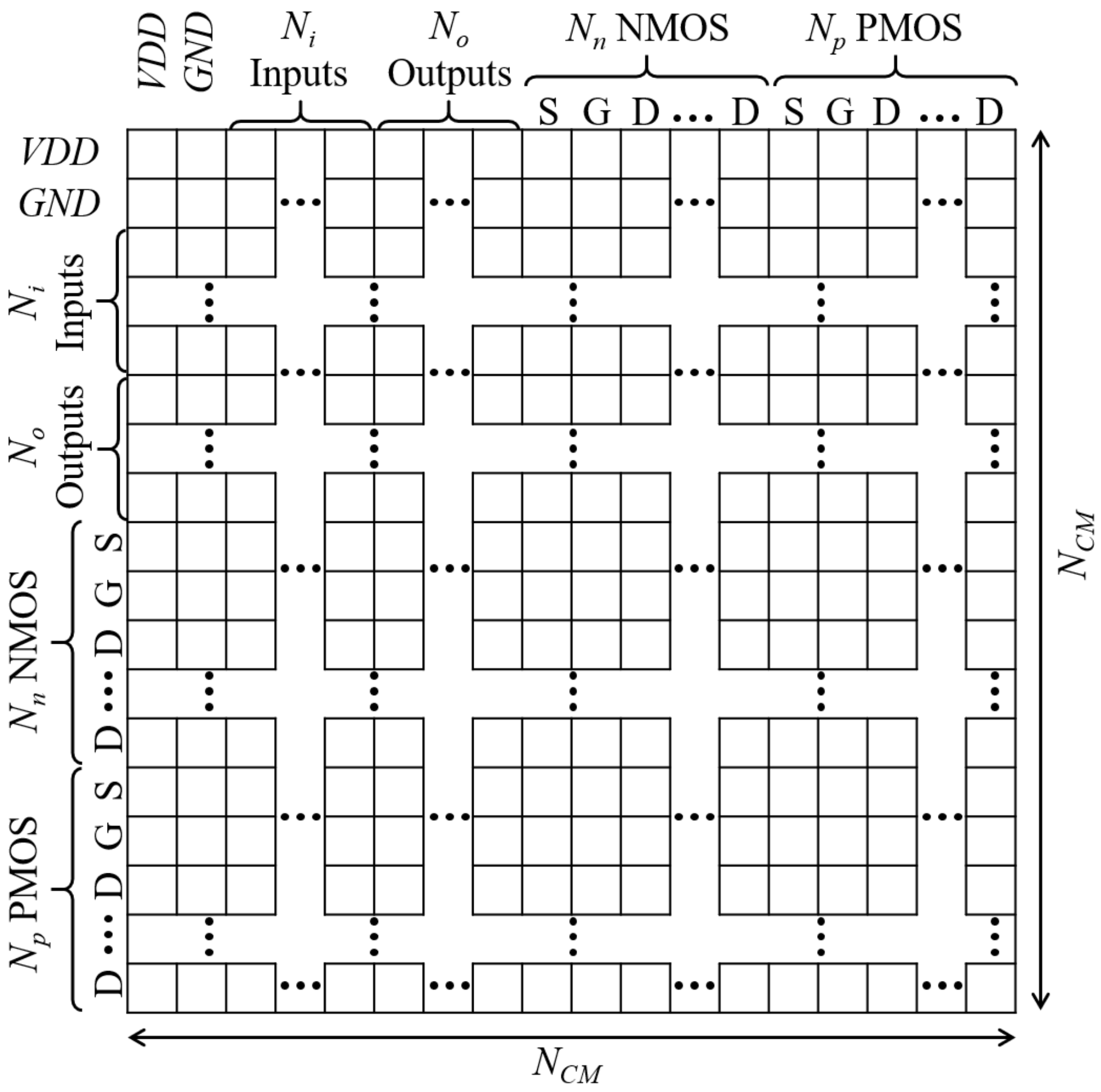

2.1. State

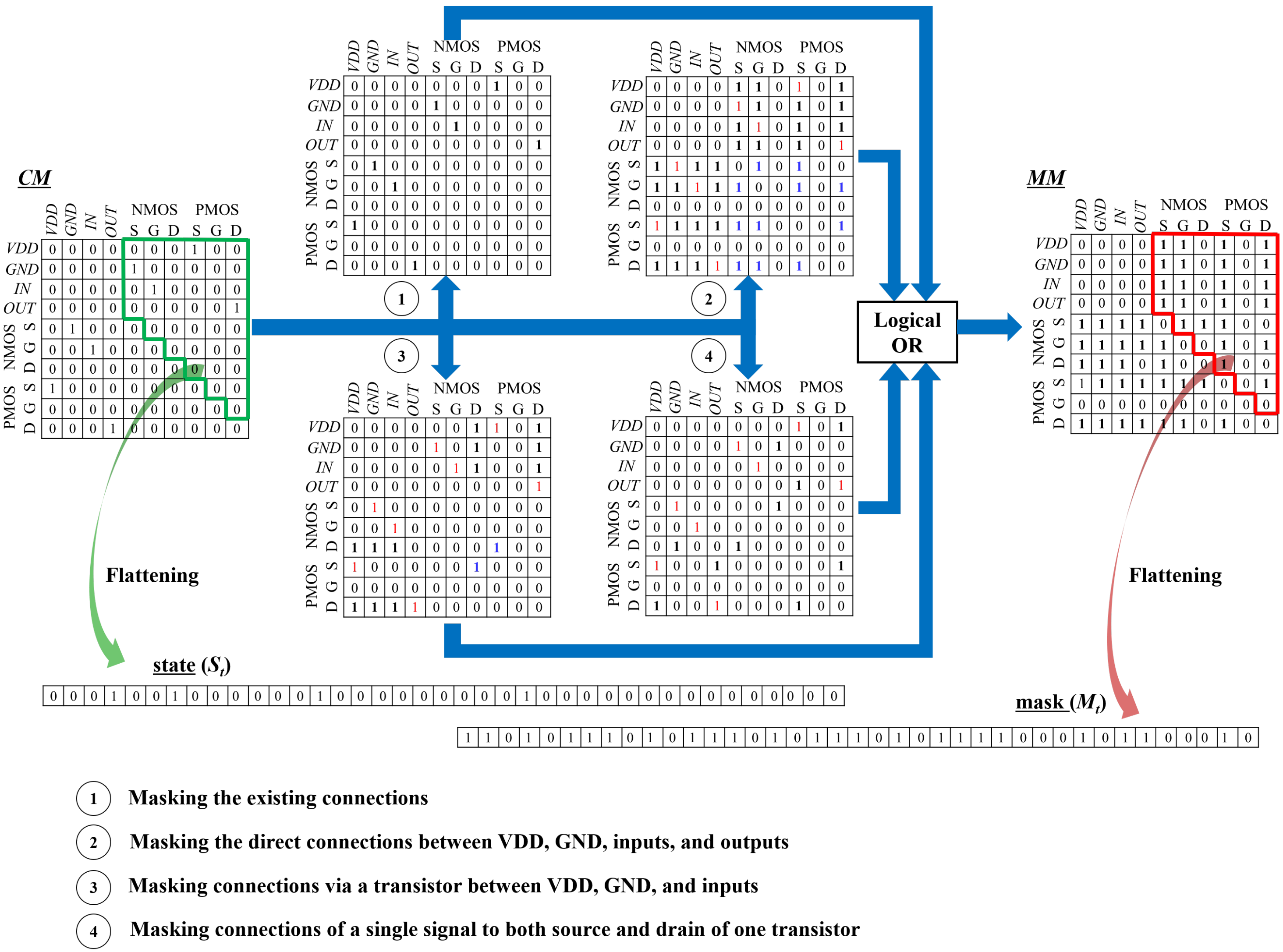

2.2. Mask

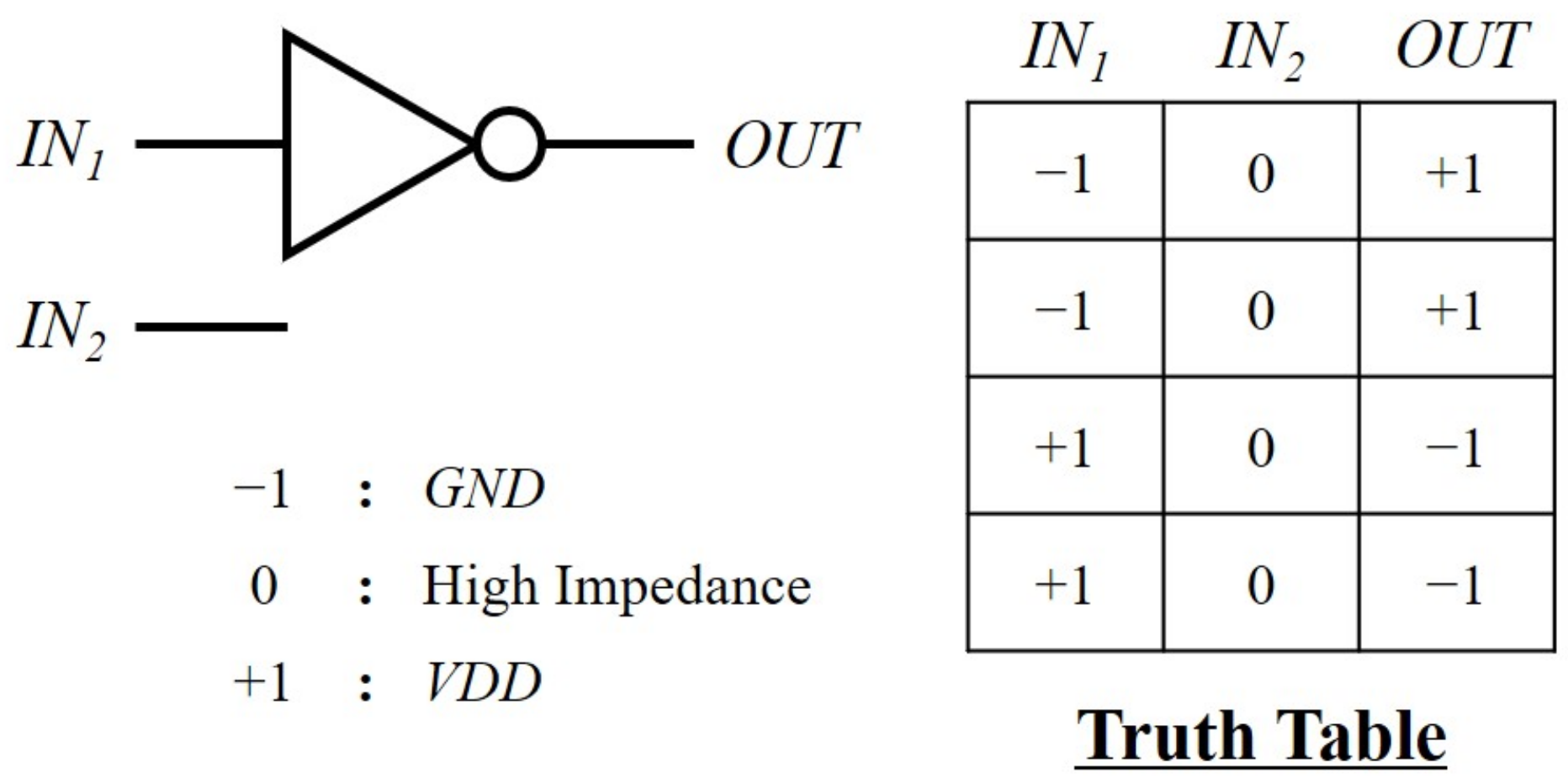

2.3. Reward

2.4. Action

Algorithm 1. DQN
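The algorithm body did not survive extraction. The sketch below is a generic illustration of how an invalid-action mask (Section 2.2) is commonly combined with DQN. It assumes a discrete action space where each action index has a boolean validity flag. Under that assumption, the greedy action is the highest Q-value among valid actions, and exploration samples uniformly from valid actions only. The learning target is y = r + γ · max over valid a' of Q_target(s', a'), with no bootstrapping at a terminal step. The function names, the epsilon-greedy rule, and masking the next-state maximum are assumptions for illustration, not details confirmed for GateRL.

```python
import random
from typing import Sequence


def masked_greedy(q_values: Sequence[float], mask: Sequence[bool]) -> int:
    """Return the index of the highest Q-value among actions marked valid."""
    valid = [i for i, ok in enumerate(mask) if ok]
    if not valid:
        raise ValueError("mask leaves no valid action")
    return max(valid, key=lambda i: q_values[i])


def select_action(q_values: Sequence[float], mask: Sequence[bool],
                  epsilon: float, rng=random) -> int:
    """Epsilon-greedy selection restricted to valid actions (assumed rule)."""
    valid = [i for i, ok in enumerate(mask) if ok]
    if not valid:
        raise ValueError("mask leaves no valid action")
    if rng.random() < epsilon:
        # Explore: sample uniformly, but only among valid actions.
        return rng.choice(valid)
    # Exploit: best valid action according to the current Q estimates.
    return masked_greedy(q_values, mask)


def td_target(reward: float, next_q_values: Sequence[float],
              next_mask: Sequence[bool], gamma: float, done: bool) -> float:
    """DQN target: r if terminal, else r + gamma * max over valid next actions."""
    if done:
        return reward
    best = next_q_values[masked_greedy(next_q_values, next_mask)]
    return reward + gamma * best
```

For example, with Q-values `[0.9, 0.2, 0.5]` and mask `[False, True, True]`, action 0 is never chosen even though it has the largest raw value. The greedy choice is action 2. The same masking in the target keeps the bootstrap from using a value the agent could never act on.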

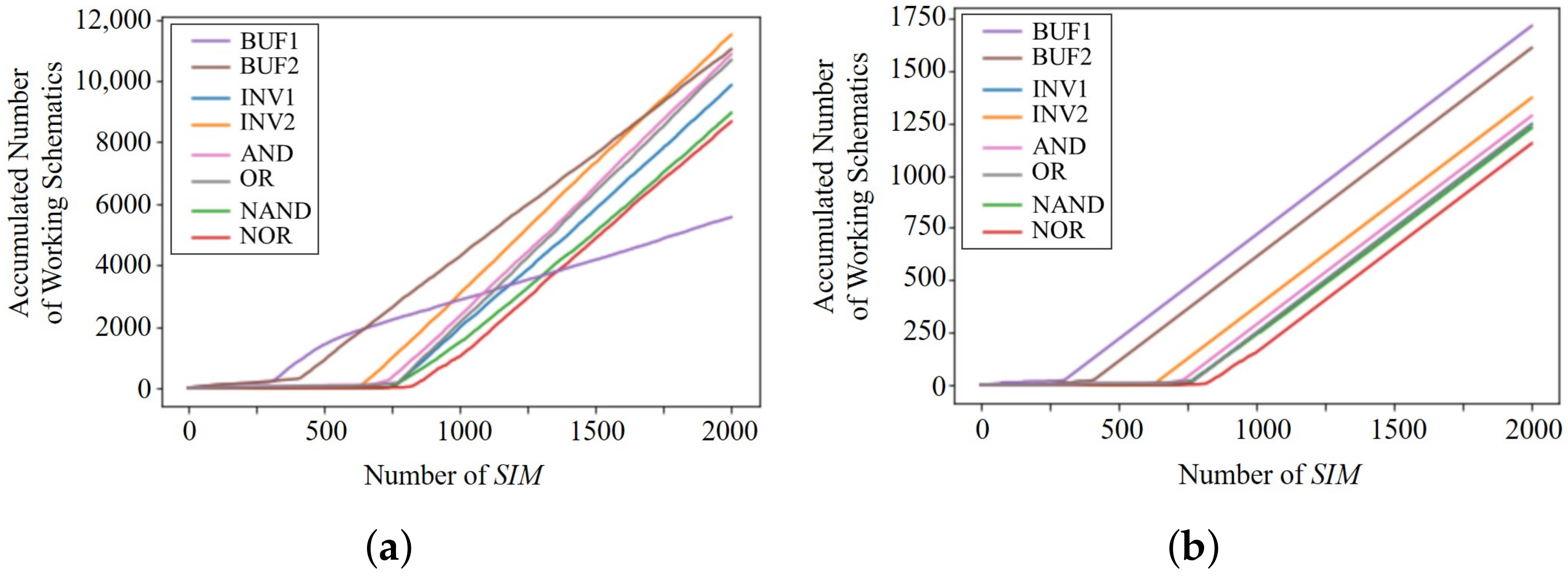

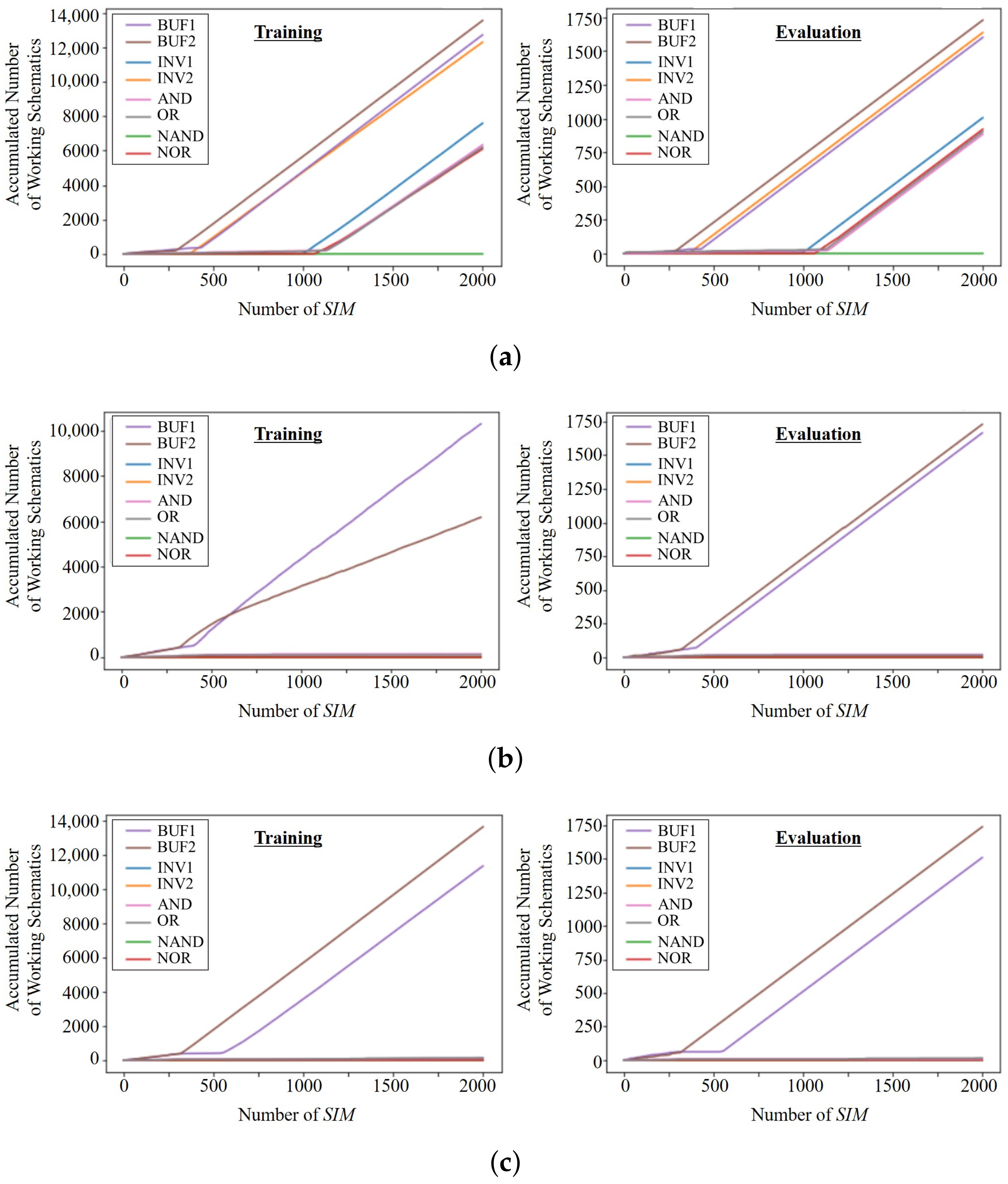

3. Experimental Results

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


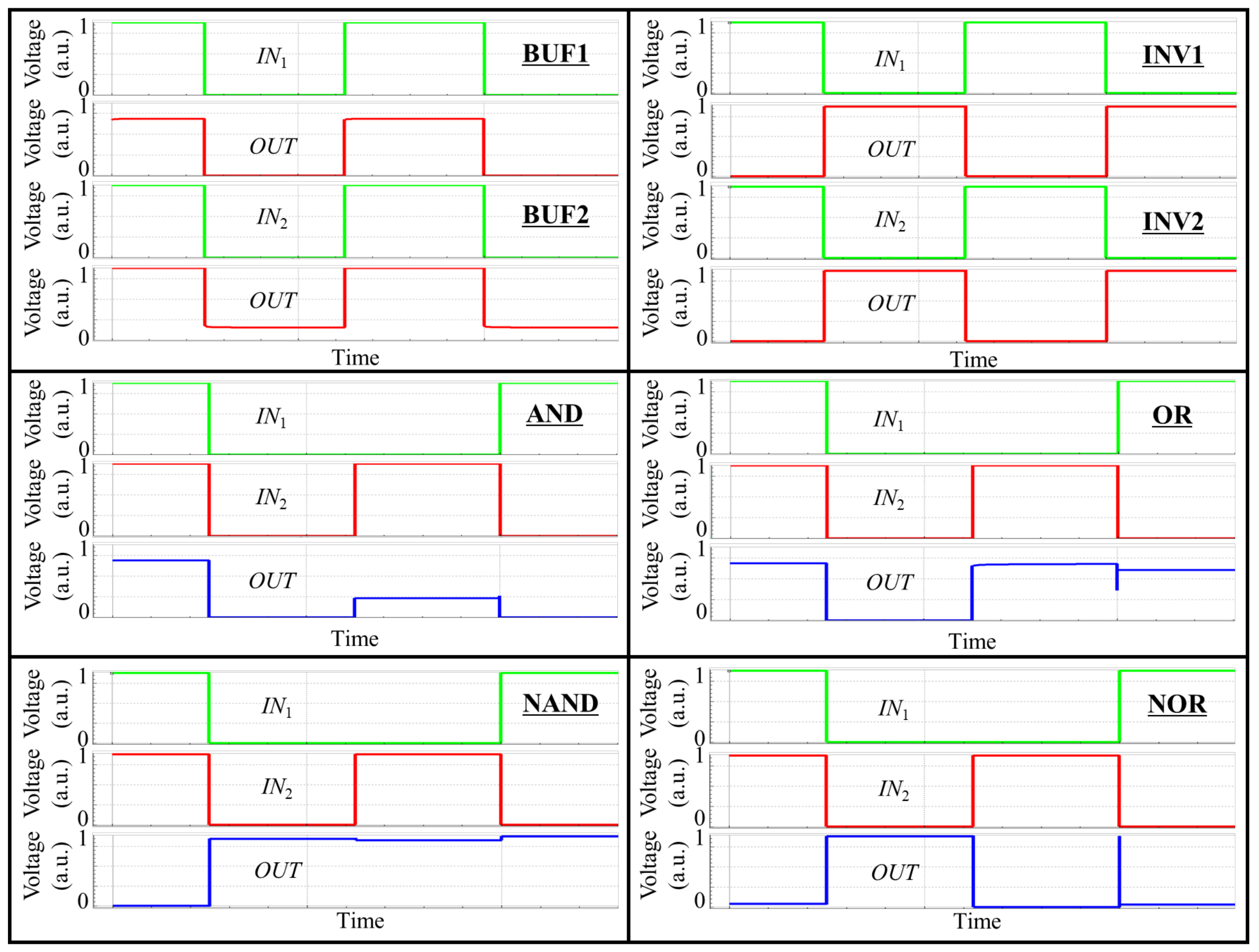

| Target Logic Gate | Components | Episode Length (steps) |
|---|---|---|
| BUF1 | 1 NMOS | 3 |
| BUF2 | 1 PMOS | 3 |
| INV1 | 1 NMOS, 1 PMOS | 6 |
| INV2 | 1 NMOS, 1 PMOS | 6 |
| AND | 1 NMOS, 1 PMOS | 6 |
| OR | 2 NMOS | 6 |
| NAND | 1 NMOS, 2 PMOS | 9 |
| NOR | 2 NMOS, 1 PMOS | 9 |
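In all eight rows of the table, the episode length equals three steps per transistor in the target circuit. One transistor gives 3 steps, two give 6, and three give 9. The short check below transcribes the table and verifies that relationship. It is an observation about the listed data only and does not describe how GateRL splits a transistor placement into individual steps. The names `TABLE` and `transistor_count` are hypothetical.

```python
import re

# (target gate, components, episode length in steps), transcribed from the table.
TABLE = [
    ("BUF1", "1 NMOS", 3),
    ("BUF2", "1 PMOS", 3),
    ("INV1", "1 NMOS, 1 PMOS", 6),
    ("INV2", "1 NMOS, 1 PMOS", 6),
    ("AND", "1 NMOS, 1 PMOS", 6),
    ("OR", "2 NMOS", 6),
    ("NAND", "1 NMOS, 2 PMOS", 9),
    ("NOR", "2 NMOS, 1 PMOS", 9),
]


def transistor_count(components: str) -> int:
    """Sum the transistor counts in a string such as '1 NMOS, 2 PMOS'."""
    return sum(int(n) for n in re.findall(r"(\d+)\s*[NP]MOS", components))


# Every row satisfies: episode length = 3 x number of transistors.
for gate, components, steps in TABLE:
    assert steps == 3 * transistor_count(components), gate
```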


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nam, H.; Kim, Y.-I.; Bae, J.; Lee, J. GateRL: Automated Circuit Design Framework of CMOS Logic Gates Using Reinforcement Learning. Electronics 2021, 10, 1032. https://doi.org/10.3390/electronics10091032

