1. Introduction

In recent years, traffic scene analysis has become a hot topic due to the large investment in autonomous vehicles and advanced driver-assistance systems. Two of its key components are traffic sign detection (TSD) and recognition (TSR). TSD is the process of localizing signs in an input image. In other words, TSD methods generate candidate regions of interest (ROIs) that are likely to contain traffic signs. The detected image regions are then fed to the traffic sign recognizer (or classifier), which tries to identify the exact type of sign. Therefore, the efficiency of sign detection has a large impact on the output of the whole sign recognition process.

TSD is still a challenging problem. Although industrial and academic researchers have achieved remarkable results in TSD, the problem is not yet fully solved. The difficulty comes from complex traffic scenes, including adverse weather conditions, variable illumination, environmental noise, and sign color fading.

The information content of road signs is coded into their visual appearance. They are designed to be unique and to have distinguishable features, such as color and shape. To standardize traffic signs across countries, an international agreement (the Vienna Convention on Road Signs and Signals) was adopted in 1968. The agreement was signed by 52 countries, of which 31 are European [1]. The Vienna Convention classifies road signs into eight categories, such as danger, priority, and mandatory signs.

Typically, signs have a simple shape and an eye-catching color. For example, the most common colors of European traffic signs are red and blue. These features are the basis of several earlier TSD methods [2]. However, color and shape features are unreliable for several reasons. The apparent color changes slightly because of damage accumulated over the years or because of camera motion. In addition, color perception is sensitive to other factors, such as distance from the sign, reflection, time of day, and weather conditions. Such effects make the image segmentation process hard.

To overcome these difficulties, researchers have used different image enhancement techniques to suppress the background and highlight traffic signs in images. In some cases, image enhancement is based on color-channel thresholding in the RGB (red, green, and blue channels) or other color spaces, such as HSI (hue, saturation, and intensity channels) [3]. In this case, the efficiency of image enhancement strongly depends on a set of thresholds defined by the researcher. In other cases, researchers have used color-channel normalization or even machine learning methods for image enhancement. Since there are several choices for image enhancement, a question arises: which of these methods is the most efficient?

In this paper, we focus on image enhancement and propose an improved probability-model-based method. Its efficiency was tested on the traffic sign detection problem. The purpose of this method is to reject uninteresting regions (background) while keeping the original traffic sign shape. Our experimental results show that the proposed image enhancement method improves the performance of an object detector.

3. Related Work

In recent years, a large number of TSD approaches based on machine learning and machine vision have been published. These approaches can be classified according to the region (or object) proposal algorithm they use. Several earlier methods use the sliding-window approach, where a fixed-size “window” slides horizontally and vertically over differently scaled versions of an image. For example, Wang et al. [7] used this region proposal technique twice (coarse and fine filtering) to detect candidate image regions where signs could be located. In the coarse-filtering step, the window size was 20 × 20 pixels, whereas in the fine-filtering step, it was 40 × 40 pixels. Both steps were performed 22 times on downscaled images with a scaling factor of 1.1.

In most sliding-window-based object localization approaches, the authors have not used image enhancement; therefore, features have been extracted from the original image regions. Although the sliding-window approach has high precision if both the window and the step size are relatively small, its time requirement is high because of the large number of image patches that need to be analyzed. As an example, a sliding window with a 1.1 scale factor, 5 pixel step size, and 20 × 20 kernel generates 242,748 image patches on a 1360 × 800 pixel image. Therefore, this is not the best option for real-time traffic sign detection in today’s embedded systems.
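The order of magnitude of this patch count can be checked with a short sketch. It assumes that the image is repeatedly downscaled by the scale factor until the window no longer fits and that sizes are rounded down; the function name and these rounding conventions are assumptions made here, so the result is close to, but not necessarily identical with, the figure quoted above.

```python
def count_sliding_windows(width, height, window=20, step=5, scale=1.1):
    """Count window positions over an image pyramid.

    Assumption of this sketch: each pyramid level is the original image
    downscaled by scale**level, with sizes rounded down (floor).
    """
    total = 0
    level = 0
    while True:
        w = int(width / scale ** level)
        h = int(height / scale ** level)
        if w < window or h < window:
            break  # the window no longer fits into this pyramid level
        positions_x = (w - window) // step + 1
        positions_y = (h - window) // step + 1
        total += positions_x * positions_y
        level += 1
    return total


if __name__ == "__main__":
    # Number of patches for a 1360 x 800 image with the settings from the text.
    print(count_sliding_windows(1360, 800))
```

Even under slightly different rounding rules, the count stays in the range of a few hundred thousand patches per image, which illustrates why exhaustive search is costly on embedded hardware.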

By contrast, many researchers have combined image enhancement methods with shape matching, Maximally Stable Extremal Regions (MSER), Selective Search, EdgeBox, or other object proposal algorithms to extract traffic signs from the image.

Several researchers have applied color-channel thresholding for image enhancement. Berkaya et al. [8] applied different color thresholds for red and blue in the RGB color space. Some researchers converted RGB images into other color spaces because the RGB space is easily affected by lighting conditions. Yakimov [9] used three threshold values in the HSV color space to filter out the red color of traffic signs. Other researchers used thresholding in the HSI rather than the HSV color space [10]. Ellahyani et al. [11] defined different threshold values for the red and blue colors in all channels of the HSI space. In their formulas, $f_h(x, y)$, $f_s(x, y)$, and $f_i(x, y)$ are the hue, saturation, and intensity values, respectively, of a pixel in the HSI color space, whereas $f^*(x, y)$ is the output image.
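The general idea of channel-wise thresholding in the HSI space can be sketched as follows. The threshold values and all names below are hypothetical and chosen only for illustration; they are not the values used in [11]. Hue is assumed to be given in degrees, and saturation and intensity in the range 0 to 1.

```python
# Hypothetical thresholds for illustration only; NOT the values from [11].
RED_HUE_RANGES = [(0.0, 10.0), (350.0, 360.0)]  # red wraps around 0 degrees
BLUE_HUE_RANGES = [(200.0, 260.0)]
MIN_SATURATION = 0.4
MIN_INTENSITY = 0.2


def threshold_pixel(hue, saturation, intensity, hue_ranges):
    """Return 255 if the pixel passes all three channel tests, else 0."""
    in_hue = any(lo <= hue <= hi for lo, hi in hue_ranges)
    keep = in_hue and saturation >= MIN_SATURATION and intensity >= MIN_INTENSITY
    return 255 if keep else 0


def threshold_image(hsi_image, hue_ranges):
    """Apply the pixel test to an image given as rows of (h, s, i) tuples."""
    return [
        [threshold_pixel(h, s, i, hue_ranges) for (h, s, i) in row]
        for row in hsi_image
    ]


if __name__ == "__main__":
    image = [
        [(5.0, 0.8, 0.5), (120.0, 0.8, 0.5)],
        [(355.0, 0.1, 0.5), (230.0, 0.9, 0.6)],
    ]
    print(threshold_image(image, RED_HUE_RANGES))
    print(threshold_image(image, BLUE_HUE_RANGES))
```

The sketch also shows the weakness discussed next: every constant has to be tuned by hand, and a pixel that misses a single threshold is discarded entirely.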

Another group of researchers think that simple thresholding is not effective and fixed threshold values may cause failure in the ROI extraction step. Therefore, they applied different color-channel normalization techniques in order to highlight the (typically red and blue) colors of traffic signs.

The authors of [12,13] tested RGB normalization. In addition to RGB normalization, Salti et al. [12] applied image enhancement based on separate-channel normalization, with and without color stretching. In (3) and (4), $f_r(x, y)$, $f_g(x, y)$, and $f_b(x, y)$ are the red, green, and blue intensities of a pixel.

Contrast stretching was applied to all channels of an RGB image to deal with under- or overexposure. In both cases, the RGB image was transformed into a single-channel image where the suitable channel (that characterizes the sought signal) was enhanced (e.g., blue in the case of mandatory signs). The authors concluded that in the case of red and white signs, color stretching is not necessary and both image enhancement techniques have the same efficiency. However, in the case of mandatory signs, channel normalization brought detection improvement. In their work, the enhanced image was the input for MSER segmentation. MSER can detect stable regions or blobs on gray-valued images wherein the distribution of pixel values has low variance.

The authors of [14,15] followed an approach similar to that of [12], using an RB-channel-normalized image as the input for the MSER segmentation algorithm. Since blue and red are the two dominant background colors of traffic signs, Kurnianggoro et al. [15] kept only the maximum value of those two color channels (5).
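A common form of channel normalization divides each channel by the sum of the three channels, after which the larger of the normalized red and blue values can be kept as a single gray value. The sketch below illustrates this general idea; it is an assumption that this matches the spirit of the cited formulas, and it is not claimed to reproduce (3) to (5) exactly. The function names and the small constant `eps` are introduced here.

```python
def normalize_rgb(r, g, b, eps=1e-9):
    """Divide each channel by the channel sum (chromaticity normalization).

    eps is an assumed small constant that avoids division by zero on
    black pixels.
    """
    total = r + g + b + eps
    return r / total, g / total, b / total


def max_red_blue(r, g, b):
    """Keep the larger of the normalized red and blue responses."""
    norm_r, _, norm_b = normalize_rgb(r, g, b)
    return max(norm_r, norm_b)


if __name__ == "__main__":
    print(max_red_blue(200, 20, 30))    # strongly red pixel
    print(max_red_blue(100, 100, 100))  # gray pixel
```

A saturated red or blue pixel yields a value close to 1, whereas a gray pixel yields about 1/3 regardless of its brightness, which is what makes the normalized image less sensitive to illumination than raw RGB thresholds.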

Some researchers have used more complex approaches for image enhancement, with a shallow machine learning algorithm responsible for pixel categorization. Liang et al. [16] and Wu et al. [17] transformed an original RGB color image to a grayscale image with a Support Vector Machine (SVM). In their approach, positive colors (e.g., red in the danger category) are mapped to high intensities, and negative colors (e.g., all colors except red in the danger category) are mapped to low intensities. They categorized all colors as positive or negative (binary classification). The colors of the traffic signs belong to the positive category, labelled +1, whereas other colors belong to the negative category, labelled −1. In the case of prohibitory and danger signs, the red border pixels are treated as the positive color, whereas in the case of mandatory signs, the blue background pixels are treated as positive pixels. The binary training data generated in this way was used to train an SVM. After that, color pixel mapping was performed by the trained SVM according to (6), where $f_{rgb}(x, y)$ denotes the red, green, and blue intensities and $f^*(x, y)$ is the gray intensity of the pixel at coordinate $(x, y)$.

This image enhancement method helped them in the multiscale template matching process. Our image enhancement algorithm also requires such a training dataset, but its training and decision times are shorter than those of an SVM.

Beyond SVMs, other shallow machine learning methods are also used for pixel classification. For example, Ellahyani and Ansari [18] combined mean-shift (MS) clustering and random forest. MS is used as an image preprocessing step because applying the random forest directly to all pixels would be time-consuming. Yang et al. [19,20] defined a probability model to compute the likelihood that a pixel falls into a particular color category. To construct the model, they manually collected training samples (pixel values) and converted them into the Ohta space. Their probability model is based on the Bayes rule, where the color class distribution is modelled by a Gaussian distribution (7). In (7), $f_{oh}(x, y)$ denotes the normalized components of a pixel (features) in the Ohta space, and $\mu_i$ and $\Sigma_i$ are the mean vector and covariance matrix, respectively, of class $C_i$.
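For reference, a Gaussian class-conditional model of this kind is usually written as the multivariate normal density combined with the Bayes rule. The expressions below are the textbook form and may differ in notation from (7); the feature dimension $d$ and the class prior $P(C_i)$ are symbols assumed here.

```latex
\[
p\bigl(f_{oh}(x,y) \mid C_i\bigr)
  = \frac{1}{(2\pi)^{d/2}\,\lvert\Sigma_i\rvert^{1/2}}
    \exp\!\Bigl(-\tfrac{1}{2}\,
      \bigl(f_{oh}(x,y)-\mu_i\bigr)^{\top}\Sigma_i^{-1}
      \bigl(f_{oh}(x,y)-\mu_i\bigr)\Bigr)
\]
\[
P\bigl(C_i \mid f_{oh}(x,y)\bigr)
  = \frac{p\bigl(f_{oh}(x,y) \mid C_i\bigr)\,P(C_i)}
         {\sum_{j} p\bigl(f_{oh}(x,y) \mid C_j\bigr)\,P(C_j)}
\]
```

Evaluating this density requires a matrix-vector product and an exponential per pixel and class, which is the cost that a discretized lookup-table model avoids.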

Our proposed approach is a similar naïve Bayes model. However, in contrast to [19,20], we discretize all features (color representations) and model their distributions by probability mass functions. In addition, the features are selected according to the Kullback-Leibler (KL) divergence of their distributions between classes.
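As an illustration of this selection criterion, the KL divergence between two discretized feature distributions can be computed directly from their histograms. The sketch below is a minimal version under stated assumptions: the histograms share the same bins, and a small additive constant `eps` (an assumption introduced here) keeps empty bins from producing a division by zero.

```python
import math


def kl_divergence(p_counts, q_counts, eps=1e-9):
    """KL divergence D(P || Q) between two histograms over the same bins.

    Counts are normalized to probability mass functions; eps is an
    assumed smoothing constant for empty bins.
    """
    if len(p_counts) != len(q_counts):
        raise ValueError("histograms must have the same number of bins")
    p_total = sum(p_counts) + eps * len(p_counts)
    q_total = sum(q_counts) + eps * len(q_counts)
    divergence = 0.0
    for p_count, q_count in zip(p_counts, q_counts):
        p = (p_count + eps) / p_total
        q = (q_count + eps) / q_total
        divergence += p * math.log(p / q)
    return divergence


if __name__ == "__main__":
    # Hypothetical 4-bin histograms of one color channel for two classes.
    sign_hist = [1, 2, 10, 40]
    background_hist = [30, 15, 5, 3]
    print(kl_divergence(sign_hist, background_hist))
```

A channel whose sign and background histograms overlap strongly gives a value near zero, whereas a channel that separates the two classes well gives a large value, so ranking channels by this score is one way to pick features.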

A small group of researchers have focused only on specific traffic sign categories. For example, Wang et al. [21] and Gudigar et al. [22] proposed specific image enhancement methods for the detection of traffic signs with a red rim. This condition covers prohibitory and danger signs. The authors of [21] converted RGB images into the HSV color space, where they exploited the color information of neighboring pixels. In the hue and saturation color planes, they determined the red degree of a color ($f_d(x, y)$) with the following calculation:

Thereafter, for each pixel, they took a square window $w_{x,y}$ centered on $f_d(x, y)$ with radius $B_r$ and calculated the normalized red degree ($f_{nd}(x, y)$) with the following formula:

where $\mu(w_{x,y})$ and $\sigma(w_{x,y})$ are the mean and variance of the red degrees of all the pixels in $w_{x,y}$. However, this method works only for a subset of traffic signs. Moreover, the window-wise calculation slows down the image enhancement process. In this article, we propose a generally usable image enhancement method.

4. Materials and Methods

In most images, the color of traffic signs is rather distinct from the background, making it an intuitive feature for traffic sign detection. In order to highlight traffic signs in images, we propose an improved probability model for image enhancement. Its task is to compute the likelihood that a pixel falls into one of the N classes, where one of them is the background.

The model requires a training set wherein image pixels are categorized into $N$ classes. To test the efficiency of the model, 150 training images of the GTSDB and 100 images from the first labelled set of the Swedish Traffic Signs dataset were used as training data. All images were manually annotated with the Labelme software.

Figure 1 shows such an annotated image with three classes ($N = 3$): red-colored traffic sign ($c_1$), blue-colored traffic sign ($c_2$), and background ($c_3$). As can be seen in the image, rectangular regions were selected wherein all pixels belong to one of the three classes.

Before training, all of the collected pixels were converted from the RGB color space into other color representations for use as feature vectors. Formally, a pixel $f_{rgb}(x, y)$ is represented by a vector $f_{x,y}$, where $f_i$ is the $i$-th feature:

Our probability model is a naïve Bayes machine learning algorithm. It calculates the probability that the feature vector $f_{x,y}$ belongs to class $c_i$ with the Bayes rule:

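In the above formula, the probability of a feature vector ($P(f_{x,y})$) does not depend on the class $c_i$, so it is the same for every class at a given pixel. Therefore, $P(f_{x,y})$ can be eliminated when class probabilities are compared. In addition, if we decompose the vector into its elements and treat them as conditionally independent given the class (the naïve Bayes assumption), (11) can be rewritten as: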

In (12), $P(c_i)$ is the prior class probability, whereas $P(f_k | c_i)$ is the class-conditional density. The class-conditional density is approximated by the probability mass function of $f_k$ for $c_i$. $P(c_i)$ can be easily computed from the number of samples in each class, $n(c_i)$:

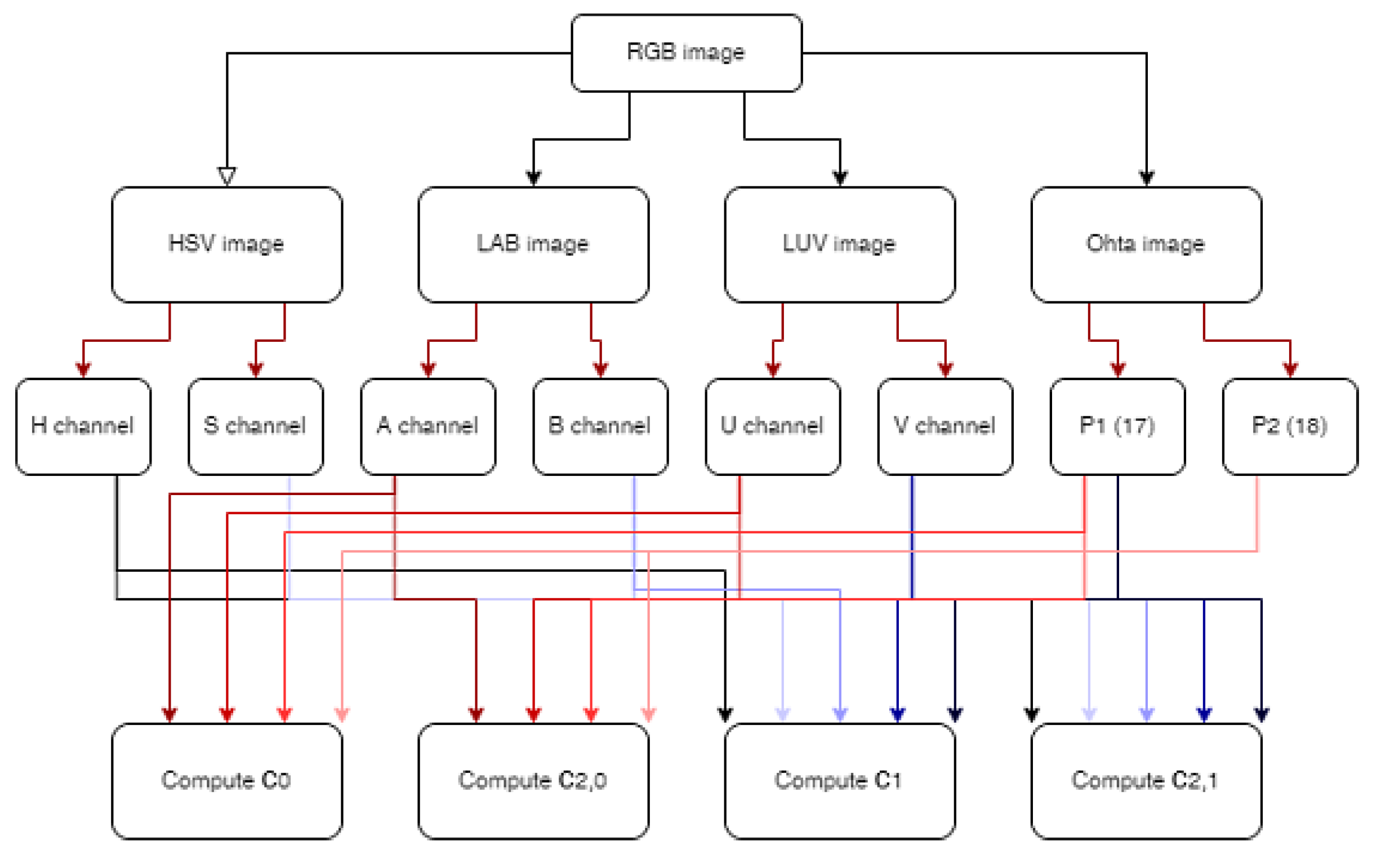
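Written out under the standard naïve Bayes assumptions, the three steps described in this section take the following form. This is a reconstruction from the surrounding text rather than a verbatim copy of (11) to (13); the number of features $K$ is a symbol assumed here, and $N$ is the number of classes.

```latex
\begin{align}
P(c_i \mid f_{x,y}) &= \frac{P(f_{x,y} \mid c_i)\,P(c_i)}{P(f_{x,y})} \\
P(c_i \mid f_{x,y}) &\propto P(c_i)\prod_{k=1}^{K} P(f_k \mid c_i) \\
P(c_i) &= \frac{n(c_i)}{\sum_{j=1}^{N} n(c_j)}
\end{align}
```

Because every $P(f_k \mid c_i)$ is a value read from a precomputed probability mass function, evaluating the product for a pixel reduces to $K$ table lookups and multiplications.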

If different features are used for different classes, this model generates twice as many probability maps (matrices with the same dimensions as the original image) as there are classes other than the background. Since we used different features to separate the red and blue colors from the background (Section 5), we have four probability maps. The class probability maps are denoted by $C_0$, $C_1$, $C_{2,0}$, and $C_{2,1}$, where $C_{2,i}$ is the background probability map that corresponds to class $i$. These maps can be calculated by the simple procedure shown in Algorithm 1. It is important to note that the above equations describe pixel-wise classification, whereas the algorithm uses matrix operations to reduce the execution time.

Algorithm 1: Get class probability map.
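The computation of one class probability map can be illustrated with a minimal sketch. It is not the authors' Algorithm 1: it loops over pixels in plain Python instead of using matrix operations, and the function names, the Laplace smoothing, and the toy data are assumptions introduced here. Each pixel is assumed to be already converted to a tuple of discretized feature values.

```python
def train_pmfs(samples, n_bins):
    """Estimate one probability mass function per feature for a single class.

    samples: list of feature tuples with integer values in [0, n_bins).
    Laplace smoothing (initial count of 1 per bin) is an assumption of
    this sketch; it prevents zero probabilities for unseen values.
    """
    n_features = len(samples[0])
    pmfs = []
    for k in range(n_features):
        counts = [1] * n_bins
        for sample in samples:
            counts[sample[k]] += 1
        total = sum(counts)
        pmfs.append([count / total for count in counts])
    return pmfs


def class_probability_map(features, pmfs, prior):
    """Compute prior * product of P(f_k | c) for every pixel.

    features: image as rows of feature tuples. The result is proportional
    to the class posterior (the evidence term is omitted).
    """
    result = []
    for row in features:
        out_row = []
        for feature_vector in row:
            probability = prior
            for k, value in enumerate(feature_vector):
                probability *= pmfs[k][value]
            out_row.append(probability)
        result.append(out_row)
    return result


if __name__ == "__main__":
    # Toy training pixels with one feature quantized into 4 bins.
    red_samples = [(3,), (3,), (3,), (2,)]
    background_samples = [(0,), (0,), (1,), (0,)]
    red_pmfs = train_pmfs(red_samples, n_bins=4)
    background_pmfs = train_pmfs(background_samples, n_bins=4)
    image = [[(3,), (0,)]]
    print(class_probability_map(image, red_pmfs, prior=0.5))
    print(class_probability_map(image, background_pmfs, prior=0.5))
```

In a practical implementation the inner loops would be replaced by array indexing, where each lookup table is indexed with a whole feature channel at once.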

The elements of each map indicate the probability that the pixel at coordinate $(x, y)$ belongs to class $c_i$. Therefore, we keep only those probability values in $C_0$ and $C_1$ that are higher than the corresponding background probability:

To construct the grayscale output image, we add the normalized forms of $C^*_0$ and $C^*_1$ and rescale the sum with the following formula, where $\varepsilon$ is a very small number that prevents division by zero:
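The background suppression and the construction of the grayscale image can be sketched as follows. The exact normalization and rescaling formulas are not reproduced here, so this sketch assumes division by the map maximum for normalization and a min-max rescaling to the range 0 to 255; the function names and these choices are assumptions.

```python
def suppress_background(class_map, background_map):
    """Keep a class probability only where it exceeds the background one."""
    return [
        [c if c > b else 0.0 for c, b in zip(class_row, background_row)]
        for class_row, background_row in zip(class_map, background_map)
    ]


def normalize(prob_map, eps=1e-12):
    """Scale a map by its maximum (assumed normalization); eps avoids 0/0."""
    peak = max(max(row) for row in prob_map)
    return [[value / (peak + eps) for value in row] for row in prob_map]


def to_grayscale(map_0, map_1, eps=1e-12):
    """Add two normalized maps and rescale the sum to integers in 0..255."""
    summed = [
        [a + b for a, b in zip(row_0, row_1)]
        for row_0, row_1 in zip(normalize(map_0, eps), normalize(map_1, eps))
    ]
    low = min(min(row) for row in summed)
    high = max(max(row) for row in summed)
    return [
        [round(255 * (value - low) / (high - low + eps)) for value in row]
        for row in summed
    ]


if __name__ == "__main__":
    # Toy 1 x 2 maps: red, blue, and their paired background maps.
    red = suppress_background([[0.4, 0.1]], [[0.1, 0.3]])
    blue = suppress_background([[0.05, 0.2]], [[0.2, 0.5]])
    print(to_grayscale(red, blue))
```

Pixels where the background is more probable than both sign colors end up at intensity 0, so the later object proposal step only sees the highlighted sign regions.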

6. Conclusions

In this paper, we proposed a probability-model-based image enhancement method to further improve the precision of traffic sign detection. It is worth highlighting that the presented method can be used not just for traffic sign detection but also for other object detection problems.

In terms of image enhancement, the model requires a training phase wherein the used feature probabilities are calculated on an annotated training dataset. In most cases, it is better to perform the training phase in a general computer environment. If we transfer the precalculated feature probability values to embedded systems, the image enhancement process can also be performed on those devices due to the relatively low computation cost.

Compared to existing machine-learning-based image enhancement methods, the proposed probability model provides faster color image enhancement than SVM- or artificial-neural-network-based approaches and requires less training time. Since features are discretized and modelled by their probability mass functions, the model’s decision speed is higher than that of probability models that use continuous feature distributions.

To find “good” features for the preferred color and background separation, we measured the KL divergence of color-channel distributions between classes. This analysis showed that the channels of the HSV, LAB, and LUV color spaces, as well as the discretized Ohta space components, can be used with different efficiency for the separation of red and blue colors of traffic signs from the background.

To measure the efficiency of the proposed image enhancement method, the Selective Search algorithm was used as object proposal in combination with the IoU metric. Although Selective Search is a relatively slow object proposal algorithm, it has high precision, which is the main reason why we chose it. Our experimental results on the public datasets demonstrate that the presented probability model can improve the precision of object detection and efficiently eliminates background. The average detection accuracy was 98.64% and 99.1% on the GTSDB and Swedish Traffic Signs datasets, respectively.
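For completeness, the IoU (intersection over union) metric between a proposed region and a ground-truth box can be computed as in the sketch below. The box format (x_min, y_min, x_max, y_max) and the function name are assumptions made here; the acceptance threshold used in the evaluation is not part of this sketch.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes.

    Boxes are given as (x_min, y_min, x_max, y_max), an assumed format.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero if the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    intersection = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - intersection
    return intersection / union if union > 0 else 0.0


if __name__ == "__main__":
    print(iou((0, 0, 10, 10), (5, 5, 15, 15)))
```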