1. Introduction

Wafer surface defect detection is a key link in the semiconductor-manufacturing process. It can provide timely feedback of product-quality information, determine causes of defects in accordance with each defect type and location, and correct operations as early as possible to avoid huge losses [

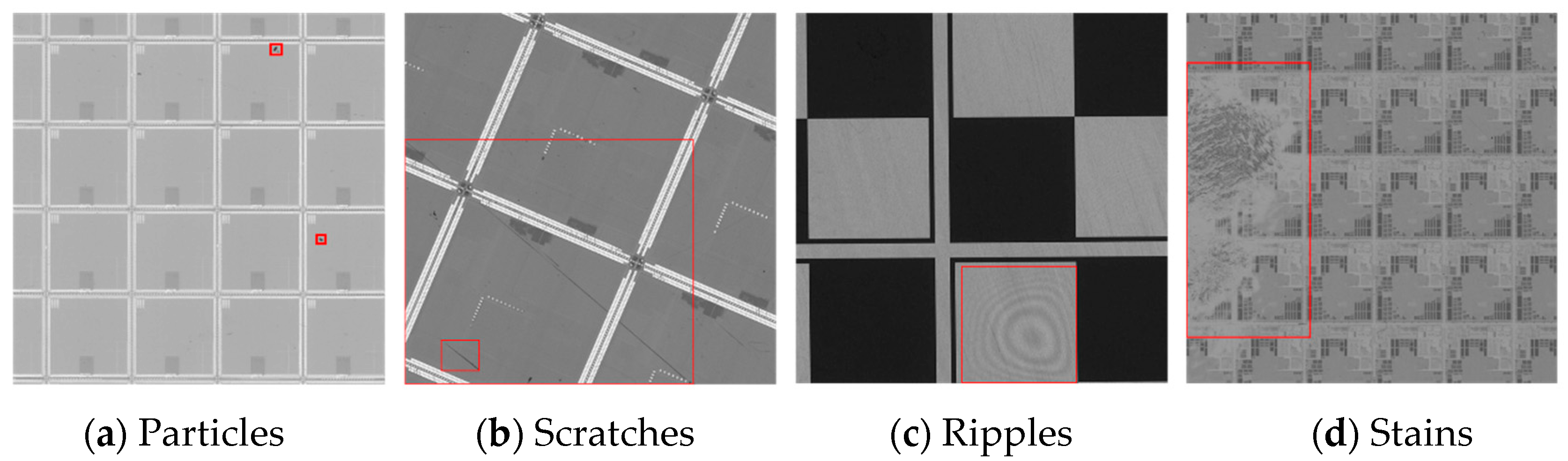

1]. Wafer surface defects can be divided into the following categories: (1) Particles: they may be caused by dust in the air that adheres to the wafer surface or impacts of external sharp objects, and they are mostly round and characterized by small scale; (2) Scratches: most of them are caused by improper instrument operation, and they are linear and exhibit large spans and discontinuity; (3) Ripples: they are mainly caused by film interference due to the coating defects of each layer, and they are characterized by wavy edges and low contrast with the background; (4) Stains: they are irregular in shape and large in area due to the residual dirt of the etching solution. These defect types are shown in

Figure 1. With the rapid development of the semiconductor industry, chip structure is becoming smaller, and the wafer manufacturing process is becoming increasingly complex. Many detection methods are no longer applicable [

2,

3]. Therefore, wafer surface defect detection research is crucial to improvement of industrial production efficiency and meeting the development needs of the industry. How to improve detection accuracy and reduce false and missed detection has become the focus of wafer detection research at this stage.

Early wafer surface defect detection methods were mainly based on image processing technology [4,5,6,7]. In these methods, the difference between a defect-free template image and the image to be tested is computed, each defect area is obtained via threshold segmentation, and the texture and shape features of the defect areas are extracted. Then, the defect areas are classified using manually designed classification criteria. Although detection methods based on image processing can detect most defects, the threshold often needs to be changed or the algorithm needs to be redesigned when the imaging environment or defect type changes.

In recent years, object-detection algorithms [8,9,10] have developed rapidly, and some methods based on deep learning have been used in wafer surface defect detection. Haddad et al. [11] proposed a three-stage wafer surface defect detection method that involves candidate area generation, defect detection, and refinement stages. It can achieve accurate detection, but it is time-consuming and cannot be trained end to end. Kim et al. [12] proposed a detection model based on the conditional generative adversarial network. In this model, PixelGan [13] is used as a discriminator to improve the detection accuracy for small-scale defects on the wafer surface, but its adaptability to changes in the industrial environment is poor. Han et al. [14] presented a wafer surface defect segmentation method based on U-Net [15]. In this method, a region proposal network is used to generate potential defect areas, and a dilated convolution module is introduced to improve the U-Net network structure and enhance the model’s segmentation ability for small-scale defects. However, the model’s detection speed is slow. Yang et al. [16] proposed a quantum-classical hybrid model for wafer defect detection using the quantum deep learning method. However, because quantum computing is still at an early stage of development, model training is difficult.

Although detection methods based on deep learning have achieved some useful results, the following problems still exist: First, particle defects are small, and after multiple convolution and downsampling operations, their semantic information can be seriously lost, which can cause missed detections. Second, scratch defects have large spans and discontinuity, and the model easily outputs predictions for discontinuous scratch fragments, which can result in multiple detections of the same defect. To solve these problems, this study implements improvements on the basis of Faster RCNN [17], which has achieved good results in various fields [18,19,20]. The main contributions of this work are as follows:

(1) We propose a feature enhancement module to extract high-frequency image feature information, improve the feature extraction ability of the shallow network, and address missed detections of small objects. To avoid a heavy computation cost, dynamic convolution [21] is used instead of ordinary convolution to balance detection accuracy and speed.

(2) A predicted box aggregation method is proposed and used to aggregate the predicted boxes of repeated multiple detections and solve the problem of multiple detections. A directed graph structure is applied to describe the dependency between predicted boxes. In the aggregation process, the location information of redundant bounding boxes is used to fine-tune a real predicted bounding box and further improve the positioning accuracy of the model.

The rest of this paper is organized as follows: Section 2 introduces the proposed method, including the general framework and the details of the improved modules. Section 3 describes the dataset and reports the experimental results. Finally, Section 4 summarizes this paper and points out future research directions.

2. Wafer Surface Defect Detection Algorithm

2.1. General Framework

The task of wafer surface defect detection requires high defect-positioning accuracy, so this study proposes an enhanced Faster RCNN model with an improved network structure. The overall framework of the improved network is shown in Figure 2, where the red boxes mark the improved parts. The network structure consists of four modules: backbone, neck, head, and postprocess.

A backbone network is used to extract features from an image. In this study, ResNet50 [22] was selected as the backbone network. ResNet50 has a powerful feature extraction capability and is composed of four residual modules. Each time the feature map passes through a residual module, the scale is reduced to half of the original, and the receptive field is enlarged, so semantic information becomes increasingly abundant. When the input image size is 640 × 640, the feature maps output by the four residual modules are 160 × 160, 80 × 80, 40 × 40, and 20 × 20, which correspond to downsampling strides of 4, 8, 16, and 32, respectively. To improve the detection performance of the model for small objects, this study adds a feature enhancement module to the backbone network to extract high-frequency image features and uses dynamic convolution in the module to improve its feature extraction capability.
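As a quick check of the sizes quoted above, the following sketch divides the input size by each downsampling stride. The function name `feature_map_sizes` is illustrative only and is not taken from the original implementation.

```python
def feature_map_sizes(input_size, strides=(4, 8, 16, 32)):
    """Return the side length of each stage output for a square input."""
    return [input_size // stride for stride in strides]


sizes = feature_map_sizes(640)
print(sizes)  # [160, 80, 40, 20]
```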

The feature pyramid network (FPN) [23], as the neck network, takes the feature maps of the four residual modules as input and uses 1 × 1 convolutions to unify the number of channels. Then, each deep-layer feature map is upsampled and superimposed on the adjacent shallow-layer feature map. The shallow feature map retains many details and loses less small-object information; hence, it is suitable for small-object detection. After multiple convolution and downsampling operations, the deep feature map has a large receptive field and abundant semantic information, which are favorable for detecting large objects.

The head network makes predictions based on the information output by the neck network. The multiple feature maps output by the neck network are sent to the region proposal network (RPN) to generate proposal boxes, and the RoI pooling method is used to extract the corresponding feature maps for prediction. The prediction part of the model flattens the feature maps and uses two fully connected layers for further feature extraction; afterward, it feeds the result to the classification and regression branches for final prediction. The output results include predicted-box width and height, center-point coordinates, confidence level, and the probability of each category.

The role of the postprocess module is to refine the prediction results. All predicted boxes are postprocessed; this includes softmax processing of category results, removal of low-probability and out-of-bounds bounding boxes, and nonmaximum suppression. This study adds a predicted box aggregation method to the postprocess module: it determines the dependency between predicted boxes by constructing a directed graph structure, removes redundant predicted boxes, and uses the location information of the redundant boxes to update the location information of the effective predicted boxes. Thus, the positioning accuracy of the predicted results is effectively improved.

2.2. Feature Enhancement Module

A convolutional neural network usually increases the depth of the model to increase the receptive field. However, small-scale objects have low resolution and occupy a small proportion of pixels, and their semantic information is easily lost after multiple downsampling operations; thus, this information is difficult to propagate to the deep network. The key to detecting small-scale defects lies in the shallow network, but that network has few convolution operations, has weak feature extraction ability, and obtains only limited semantic information, so a defect may be confused with the background in the prediction process. To effectively detect small-scale defects, this study adds a feature enhancement module to the shallow network to improve the shallow network’s feature extraction ability for small objects while avoiding any considerable increase in computing cost.

The feature enhancement module aims to extract high-frequency information from a feature map. High-frequency features, such as the edges and textures of defects, can help in defect detection [24]. The structure of the feature enhancement module is shown in Figure 3. Given an input feature map, $F$, average pooling with a 5 × 5 kernel and a stride of 5 is applied. Then, the pooled feature map is resized to the original size via upsampling to obtain the low-frequency feature description, $F_{\text{low}}$, of feature map $F$. The difference between feature map $F$ and low-frequency feature map $F_{\text{low}}$ gives the high-frequency feature information, $F_{\text{high}}$, of feature map $F$. The mathematical description thereof is as follows:

$$F_{\text{low}} = \mathrm{Upsample}\left(\mathrm{AvgPool}_{5 \times 5}(F)\right), \qquad F_{\text{high}} = F - F_{\text{low}}$$
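The pooling, upsampling, and difference steps described above can be sketched in plain Python for a single-channel feature map. This is a minimal illustration under stated assumptions, not the original implementation: the function names are hypothetical, nearest-neighbour upsampling is assumed because the upsampling mode is not specified, and the map height and width are assumed to be multiples of the kernel size.

```python
def average_pool(fmap, k=5):
    """Average-pool a 2-D map with a k x k kernel and stride k.

    Assumes the height and width of fmap are multiples of k.
    """
    height, width = len(fmap), len(fmap[0])
    pooled = []
    for i in range(0, height, k):
        row = []
        for j in range(0, width, k):
            block = [fmap[y][x] for y in range(i, i + k) for x in range(j, j + k)]
            row.append(sum(block) / len(block))
        pooled.append(row)
    return pooled


def upsample_nearest(fmap, k=5):
    """Nearest-neighbour upsampling by an integer factor k (assumed mode)."""
    return [[fmap[y // k][x // k] for x in range(len(fmap[0]) * k)]
            for y in range(len(fmap) * k)]


def high_frequency(fmap, k=5):
    """Return F_high = F - Upsample(AvgPool(F))."""
    low = upsample_nearest(average_pool(fmap, k), k)
    return [[f - l for f, l in zip(f_row, l_row)]
            for f_row, l_row in zip(fmap, low)]


# A flat 10 x 10 map with one small bright spot, standing in for a particle.
fmap = [[1.0] * 10 for _ in range(10)]
fmap[2][2] = 26.0
high = high_frequency(fmap)
print(high[2][2], high[0][0], high[7][7])  # 24.0 -1.0 0.0
```

In this toy input the flat regions cancel to zero while the isolated spot keeps a large response, which is the behavior the module relies on for small defects.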

To make the network focus on effective defect-feature information and suppress invalid background information, the high-frequency feature map needs to be convolved so that useful information can be extracted from it. Because the high-frequency feature map is large, a dense convolution module would increase the computational overhead considerably. Therefore, this study introduces dynamic convolution to replace ordinary convolution; it reduces the number of ordinary convolution layers required while providing comparable feature extraction capability. The high-frequency feature map, $F_{\text{high}}$, is fed into two ordinary convolutions and one dynamic convolution to extract useful feature information.

Dynamic convolution can adaptively adjust convolution-kernel parameters in accordance with the content of an input image. It is robust to changes in environment and product type in industrial scenes, and it neither increases the depth of the network model nor incurs much computation cost. Dynamic convolution is composed of an attention module and a convolution module. The convolution module has $K$ convolution kernels ($W_k$) and corresponding weights ($\pi_k$). The attention module adopts the squeeze-and-excitation attention mechanism [25], extracts global spatial information through global average pooling, and calculates $K$ attention weights ($\pi_k$) through the fully connected layer and the softmax layer. The $K$ convolution kernels ($W_k$) in the convolution module are multiplied by the corresponding weights ($\pi_k$) and summed to form a new convolution kernel, $W$, and the new convolution kernel is used for the convolution operation. The mathematical expression of the dynamic convolution process is as follows:

$$\pi_k(x) = \mathrm{Attention}(x), \qquad W(x) = \sum_{k=1}^{K} \pi_k(x)\, W_k, \qquad b(x) = \sum_{k=1}^{K} \pi_k(x)\, b_k, \qquad y = g\left(W(x)^{T} x + b(x)\right)$$

where $x$ and $y$ are the input and output, respectively; Attention represents the attention module; $\pi_k$ is the weight calculated by the attention module; $W_k$ and $b_k$ are the parameter and the bias of the $k$th convolution kernel, respectively; $W(x)$ and $b(x)$ are the convolution-kernel parameter and bias after weight aggregation, respectively; and $g$ represents the activation function. Dynamic convolution makes the weight of the convolution kernel depend on the input through the attention mechanism and aggregates multiple convolution kernels in a nonlinear manner, which increases the feature extraction ability of the model at a small cost. Thus, the feature enhancement module can enrich the feature information of shallow feature maps, reduce information loss and data corruption, and help retain the features of small-scale defects.
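The kernel aggregation described above can be illustrated with a deliberately small one-dimensional example. The sketch makes several simplifying assumptions that are not from the original work: a single-channel 1-D input, a single fully connected layer in the attention branch, ReLU as the activation function $g$, and hypothetical function and parameter names throughout.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of numbers."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [v / total for v in exps]


def attention(x, fc_weights, fc_biases):
    """Global average pooling, one fully connected layer, then softmax."""
    pooled = sum(x) / len(x)
    logits = [w * pooled + b for w, b in zip(fc_weights, fc_biases)]
    return softmax(logits)


def dynamic_conv1d(x, kernels, biases, fc_weights, fc_biases):
    """Aggregate K kernels with input-dependent weights, then convolve."""
    pi = attention(x, fc_weights, fc_biases)
    size = len(kernels[0])
    # W(x) = sum_k pi_k * W_k and b(x) = sum_k pi_k * b_k
    w = [sum(p * kernel[t] for p, kernel in zip(pi, kernels)) for t in range(size)]
    b = sum(p * bias for p, bias in zip(pi, biases))
    out = []
    for i in range(len(x) - size + 1):
        value = sum(w[t] * x[i + t] for t in range(size)) + b
        out.append(max(0.0, value))  # ReLU stands in for g (assumption)
    return pi, out


# With zero attention parameters both kernels receive equal weight.
pi, out = dynamic_conv1d(
    x=[1.0, 2.0, 3.0, 4.0],
    kernels=[[1.0, 0.0, -1.0], [1.0, 2.0, 1.0]],
    biases=[0.0, 0.0],
    fc_weights=[0.0, 0.0],
    fc_biases=[0.0, 0.0],
)
print(pi, out)
```

Only one convolution is executed per input regardless of $K$; the extra cost is the small attention branch and the weighted sum of kernels.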

2.3. Predicted Box Aggregation

Scratch defects are characterized by large spans and discontinuity due to image background interference, console failure, and other reasons. The object-detection model detects not only complete scratches but also fragments of discontinuous scratches, which reduces the accuracy of the model and affects the subsequent operation of the industrial production process. This phenomenon is related to the FPN structure of the model. To improve multiscale object-detection ability, object-detection models usually integrate the FPN to extract multiscale features, adopt shallow feature maps to detect small-scale objects, and apply deep feature maps to detect large-scale objects. As shown in Figure 4, feature maps with different depths are extracted and visualized in the Faster RCNN network model.

Figure 4 shows that the receptive field of the deep feature map is large, and it can capture information from a long distance. In addition, the preset anchor-box scale is also large, so complete scratch defects can be easily detected. In a shallow feature map, the receptive field and preset anchor-box scale are both small, so only part of any defect can be detected. In addition, the semantic information of a deep feature map is rich, but its detail information is insufficient, leading to low position accuracy of predicted boxes. Meanwhile, shallow feature maps are rich in detail information, so bounding boxes predicted via a shallow feature map are highly accurate.

To solve the problem of multiple detections caused by discontinuous scratches, this study proposes an aggregation method of predicted boxes. First, a directed graph structure was constructed to represent the dependency between predicted boxes. Second, adjacent nodes were aggregated in accordance with the hierarchical relationship of the graph structure. Lastly, to make full use of the location information of the predicted boxes, the real predicted box was fine-tuned based on the confidence level and relative distance of the redundant boxes in the aggregation process to further improve the positioning accuracy.

2.3.1. Constructing a Directed Graph Structure

To describe the relationship between predicted boxes, all predicted boxes were divided into multiple graph structures in accordance with their categories and their pairwise intersection over union (IoU). IoU is a value that quantifies the degree of overlap between two boxes. For two boxes $b_1$ and $b_2$, the IoU value can be calculated via the following equation:

$$\mathrm{IoU}(b_1, b_2) = \frac{|b_1 \cap b_2|}{|b_1 \cup b_2|}$$

where $|b_1 \cap b_2|$ represents the intersection area of the two boxes and $|b_1 \cup b_2|$ represents the union area of those two boxes.
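For axis-aligned boxes, the IoU defined above reduces to a few comparisons. The sketch below assumes boxes are given as corner coordinates `(x1, y1, x2, y2)`; this representation and the function names are illustrative choices, since the model itself outputs center coordinates with width and height.

```python
def box_area(box):
    """Area of an axis-aligned box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # 1/7, about 0.143
```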

$\beta = \{b_1, b_2, b_3, \ldots\}$ is defined as the set of predicted boxes after nonmaximum suppression processing. It is partitioned by predicted category and arranged in ascending order of predicted-box area to derive $\beta_i = \{b_{i,1}, b_{i,2}, b_{i,3}, \ldots\}$, where $i$ represents the $i$th category. Partitioning by category prevents predicted boxes of different categories from being aggregated. For predicted box $b_{i,j}$, set $\beta_i$ is searched for the predicted box $b_{i,k}$ that has the largest IoU with $b_{i,j}$ among the boxes whose area is bigger than that of $b_{i,j}$. If the IoU value exceeds the set threshold, $T$ (0.15 in this study), a directed edge is connected from $b_{i,j}$ to $b_{i,k}$. As indicated in Figure 5a, the Faster RCNN model outputs four predicted boxes ($\beta = \{A, B, C, D\}$) for a scratch defect, where A is the effective predicted box of the defect and the rest are predicted boxes that cover only part of the defect. In accordance with the construction rules, the directed graph structure in Figure 5b was obtained.

Figure 5b shows that no edge exists between predicted boxes that do not intersect (B has no edge to C or D), so they do not affect each other in the subsequent aggregation process. The traversal order of the directed graph is based on area, and the node with an in-degree of 0 is used as the starting node. From C to D and finally to A, the area of the predicted boxes increases in turn.
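The edge-construction rule can be sketched as follows for the boxes of a single category. The coordinates are invented for illustration and only loosely mimic the A to D example (they are not taken from Figure 5), the corner-coordinate box format is an assumption, and `build_edges` is a hypothetical name.

```python
def area(box):
    """Area of an axis-aligned box given as (x1, y1, x2, y2)."""
    return max(0, box[2] - box[0]) * max(0, box[3] - box[1])


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def build_edges(boxes, threshold=0.15):
    """Map each box to the larger box it overlaps most, if IoU > threshold.

    boxes: dict of name -> (x1, y1, x2, y2), all of the same category.
    Returns a dict child -> parent describing the directed edges.
    """
    edges = {}
    for j, box_j in boxes.items():
        best_name, best_iou = None, 0.0
        for k, box_k in boxes.items():
            if k == j or area(box_k) <= area(box_j):
                continue  # only strictly larger boxes are candidates
            value = iou(box_j, box_k)
            if value > best_iou:
                best_name, best_iou = k, value
        if best_name is not None and best_iou > threshold:
            edges[j] = best_name
    return edges


# Hypothetical boxes: A spans the whole scratch; B, C, D are fragments.
boxes = {
    "A": (0, 0, 100, 20),
    "B": (0, 0, 35, 20),
    "C": (70, 0, 100, 20),
    "D": (50, 0, 100, 20),
}
edges = build_edges(boxes)
print(edges)  # {'B': 'A', 'C': 'D', 'D': 'A'}
```

Because every edge points from a smaller box to a strictly larger one, the resulting graph cannot contain cycles, and the node without an outgoing edge (A here) is the effective predicted box.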

2.3.2. Fine Adjustment of the Predicted Box

In the directed graph, the node with an out-degree of 0 is the real predicted box, and the rest of the nodes are redundant predicted boxes produced by multiple repeated detections. If the redundant predicted boxes were simply removed, the prediction information of the model would not be fully utilized. Redundant predicted boxes are mostly generated via the shallow feature map. The shallow feature map is rich in detail information, and the position of a bounding box predicted by the shallow feature map is usually accurate. Therefore, using the shallow bounding boxes to adjust the deep bounding boxes can increase the positioning accuracy of the bounding boxes and effectively improve prediction-information utilization. Starting from the node with an in-degree of zero in the directed graph structure, the position of the bounding box for each subsequent node is adjusted in turn, and some compensation is made for confidence. Specifically, $b_s$ and $b_e$ are defined as the start node and the end node of a directed edge, and the position information of $b_s$ is used to fine-tune $b_e$. The specific steps are as follows:

(1) Select the coordinate position $(x, y)$ to be adjusted: The coordinate position to be adjusted is determined based on the center positions ($c_s$, $c_e$) of predicted boxes $b_s$ and $b_e$. The corresponding adjustment position is selected based on the position of $c_s$ relative to $c_e$. For example, if $c_s$ lies to the upper left of $c_e$, then the upper-left corner of the predicted box is chosen to be adjusted.

(2) Adjust the coordinates of bounding box $b_e$: Taking the $x$-coordinate as an example, the coordinate adjustment distance is defined as $|x_s - x_e|$. Because both the distance and the confidence of the predicted boxes influence the coordinate adjustment, the adjustment distance is multiplied by a correlation coefficient. If the confidence level of $b_s$ is high, a large adjustment range should be provided; otherwise, the adjustment range should be reduced. Therefore, the square of $b_e$’s confidence level, $score_e^2$, is introduced as the constraint factor. If the boundary distance between $b_s$ and $b_e$ is large, $b_s$ may be a predicted box of the object center position and therefore cannot provide the location information of the boundary, so the adjustment range should be reduced. The result of dividing the boundary distance by the center distance is utilized as the distance constraint and normalized to the range (0, 1) via the tanh function. Euclidean distance is used to calculate the center distance. The specific calculation formula of coordinate adjustment is as follows:

(3) Confidence compensation: A high-confidence predicted box represents highly accurate and reliable location information. After its location has been adjusted, the confidence of $b_e$ should be increased accordingly. This study uses the following formula to compensate for the confidence of $b_e$. In this formula, $\gamma$ represents the degree of compensation (in this study, $\gamma$ is set to 0.25).

(4) Remove the redundant box: Predicted box $b_s$ is removed, and $b_e$ is utilized as a new starting node to adjust the subsequent nodes.

In Algorithm 1, the aggregation method of predicted boxes is summarized.

| Algorithm 1 Predicted Box Aggregation |
| --- |
| Input: Predicted box set $\beta$ |
| 1. Classify $\beta$ into $\beta_i$ by the predicted category |
| 2. For each category $i$ do |
| 3. Construct a directed graph set $G$ from $\beta_i$ |
| 4. For each graph $g$ in $G$ do |
| 5. Select the node with an in-degree of 0 as start node $b_s$ |
| 6. Adjust the subsequent node, $b_e$, using Equation (3) |
| 7. Compensate for the confidence of $b_e$ using Equation (4) |
| 8. Remove $b_s$ and the corresponding box from $\beta$ |
| 9. End for |
| 10. End for |
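The control flow of Algorithm 1 for one category can be sketched as below. This is only an outline under stated assumptions: the coordinate adjustment and confidence compensation are passed in as caller-supplied functions (`adjust` and `compensate`, both hypothetical names) rather than implemented, the graph is given as a child-to-parent dictionary, and the example uses placeholder callbacks that leave the end box unchanged.

```python
def aggregate(boxes, edges, adjust, compensate):
    """Aggregate redundant boxes along the directed edges of one category.

    boxes: dict of name -> box record (any structure the callbacks accept).
    edges: dict child -> parent, pointing from smaller to larger boxes.
    adjust(start, end) and compensate(start, end) each return the updated
    end record; both are stand-ins for the adjustment and compensation steps.
    Returns the surviving boxes and the names removed, in processing order.
    """
    boxes, edges = dict(boxes), dict(edges)
    removed = []
    while edges:
        targets = set(edges.values())
        # Start nodes have an outgoing edge and an in-degree of 0.
        starts = [name for name in edges if name not in targets]
        if not starts:  # guard against a malformed (cyclic) graph
            break
        for start in starts:
            end = edges.pop(start)
            adjusted = adjust(boxes[start], boxes[end])
            boxes[end] = compensate(boxes[start], adjusted)
            del boxes[start]  # the start node is a redundant box
            removed.append(start)
    return boxes, removed


# Placeholder callbacks: keep the end box as it is.
def keep_end(start, end):
    return end


example_boxes = {
    "A": {"box": (0, 0, 100, 20), "score": 0.9},
    "B": {"box": (0, 0, 35, 20), "score": 0.6},
    "C": {"box": (70, 0, 100, 20), "score": 0.5},
    "D": {"box": (50, 0, 100, 20), "score": 0.7},
}
example_edges = {"B": "A", "C": "D", "D": "A"}
kept, removed = aggregate(example_boxes, example_edges, keep_end, keep_end)
print(list(kept), removed)  # ['A'] ['B', 'C', 'D']
```

Processing all in-degree-0 nodes before moving up one level means each box is adjusted by every smaller box that points to it before it is itself used to adjust a larger box.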

4. Conclusions

To solve the problems of missed detections of small-scale defects and multiple detections of discontinuous defects, this study proposes an improved Faster RCNN algorithm for wafer surface defect detection. A feature enhancement module based on high-frequency features and dynamic convolution was employed to improve the feature extraction ability of the shallow network and enrich the semantic information of multiscale feature maps without greatly increasing the computation burden. By adding a predicted box aggregation method to the postprocessing stage, repeated and multidetected predicted boxes were aggregated to generate highly accurate predicted boxes, which improved the detection accuracy of the model. The experimental results showed that the mAP value of the improved algorithm was 8.2% higher than that of the original Faster RCNN algorithm. The detection performance of the proposed algorithm was also better than that of other object-detection models, but its inference speed was relatively slow. Our future work will further optimize the network structure and use a lightweight backbone network to reduce the number of parameters and the computation cost and increase the detection speed while maintaining detection accuracy.