BSTC: A Fake Review Detection Model Based on a Pre-Trained Language Model and Convolutional Neural Network

Abstract

1. Introduction

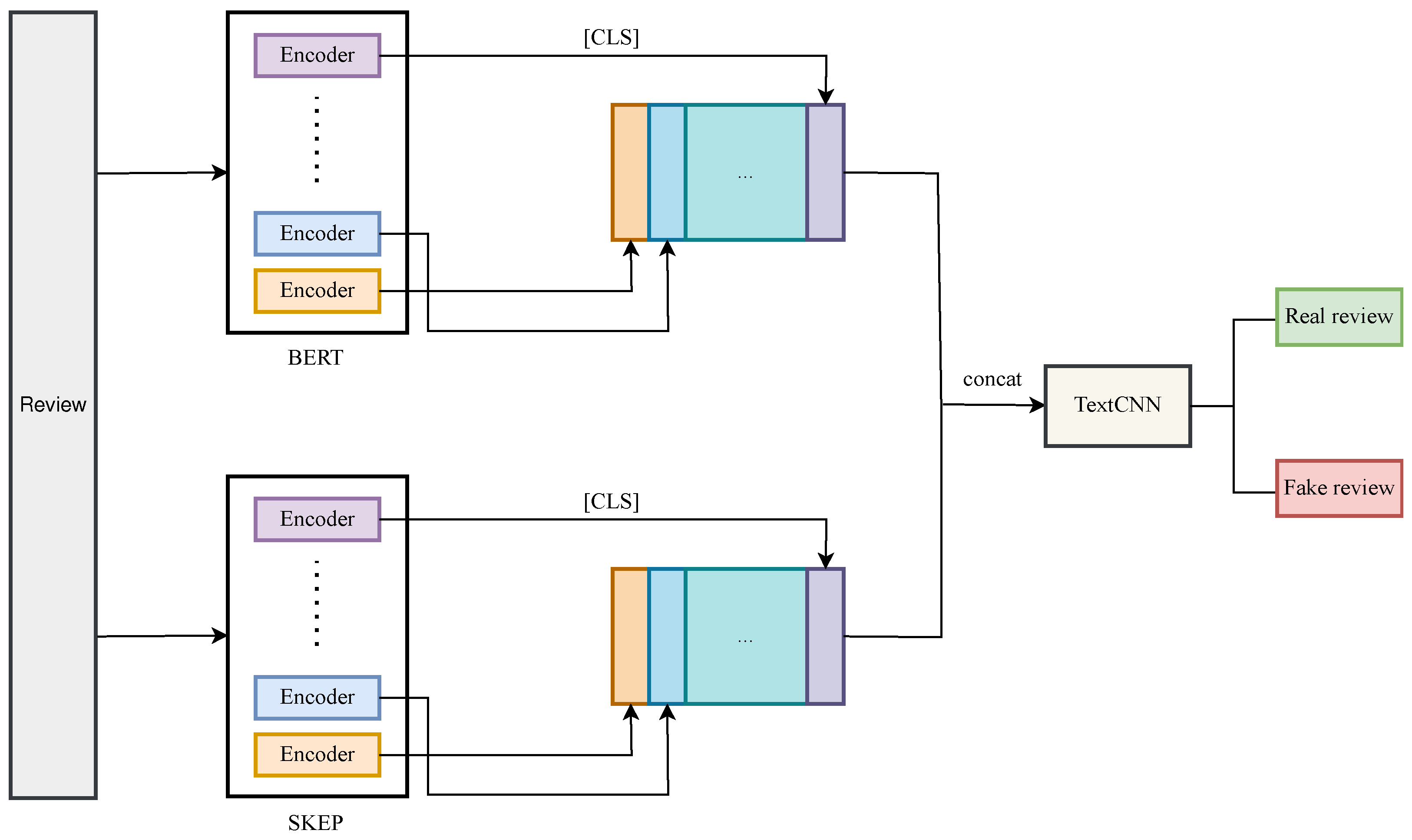

- We propose a new model that combines the strengths of pre-trained language models and convolutional neural networks. The output of the pre-trained model is fed into TextCNN [12], which further extracts local features and key information from reviews to improve fake review detection performance.

- Finally, we conducted a variety of experiments to evaluate BSTC, and the results show that our model outperforms the compared methods.
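The pipeline described above, in which the output of the pre-trained encoder is fed into TextCNN, can be sketched as follows. This is a minimal, framework-free illustration, not the authors' implementation. It follows the general TextCNN recipe of Kim [12] (parallel filters of several widths, ReLU, max-over-time pooling). The filter widths (2, 3, 4), the toy dimensions, the random weights, the logistic output layer, and in particular the choice to fuse the BERT and SKEP outputs by per-token concatenation are all assumptions made for illustration.

```python
import math
import random


def conv_relu_maxpool(seq, kernel, bias):
    """Apply one convolution filter over a sequence of token vectors,
    then ReLU and max-over-time pooling (one scalar per filter)."""
    width = len(kernel)
    best = 0.0  # ReLU outputs are never negative, so 0.0 is a safe floor
    for start in range(len(seq) - width + 1):
        s = bias
        for offset in range(width):
            # dot product of the filter row with the token vector under it
            s += sum(t * w for t, w in zip(seq[start + offset], kernel[offset]))
        best = max(best, max(0.0, s))  # ReLU, then keep the running maximum
    return best


def make_filters(widths, per_width, dim, rng):
    """Randomly initialised (kernel, bias) pairs; a trained model learns these."""
    return [
        ([[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(w)], 0.0)
        for w in widths
        for _ in range(per_width)
    ]


def textcnn_features(seq, filters):
    """Concatenate the pooled activation of every filter into one vector."""
    return [conv_relu_maxpool(seq, kernel, bias) for kernel, bias in filters]


def fake_probability(features, weights, bias):
    """Logistic output layer: map the pooled features to P(review is fake)."""
    z = bias + sum(f * w for f, w in zip(features, weights))
    return 1.0 / (1.0 + math.exp(-z))


rng = random.Random(0)
n_tokens, dim = 12, 4
# Random stand-ins for the per-token outputs of the two pre-trained encoders.
bert_out = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_tokens)]
skep_out = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_tokens)]
# Assumption: fuse the two encoders by concatenating each token's vectors.
fused = [b + s for b, s in zip(bert_out, skep_out)]

filters = make_filters(widths=(2, 3, 4), per_width=2, dim=2 * dim, rng=rng)
features = textcnn_features(fused, filters)
weights = [rng.uniform(-0.5, 0.5) for _ in features]
prob = fake_probability(features, weights, 0.0)
print(len(features), round(prob, 3))
```

With three widths and two filters per width, each review is reduced to a fixed-length vector of six pooled features, regardless of how many tokens it contains; this fixed length is what lets a simple output layer sit on top of variable-length encoder output.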

2. Related Work

3. Proposed Method

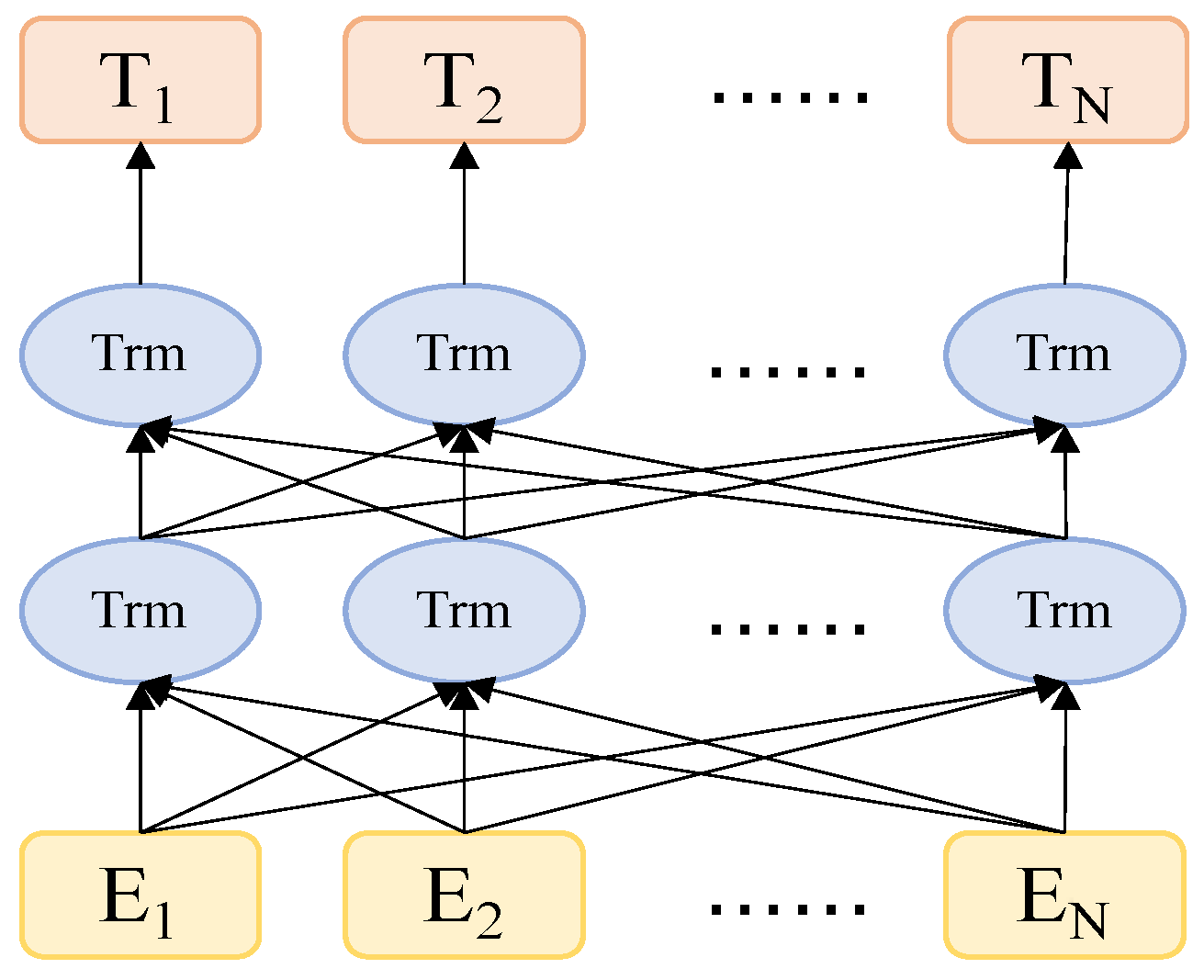

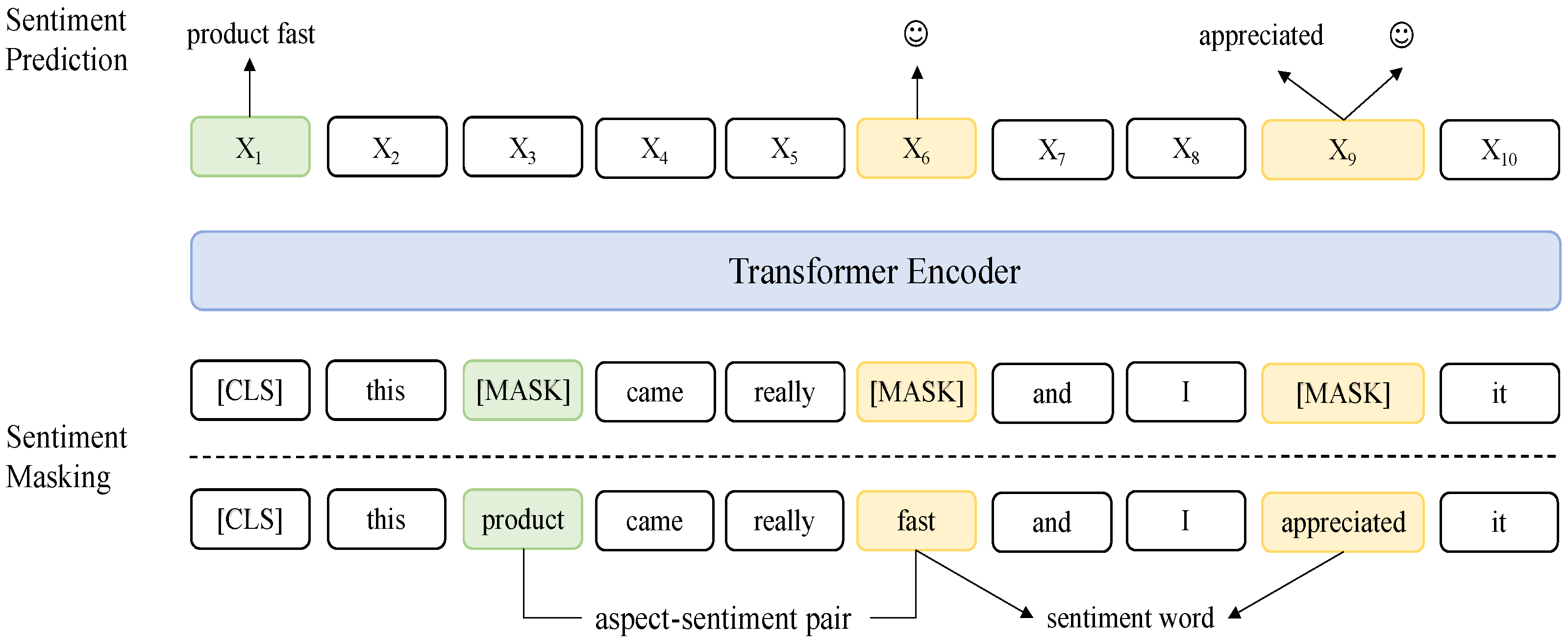

3.1. BERT–SKEP Layer

3.2. TextCNN Layer

4. Experiments and Discussion

4.1. Datasets and Experimental Setup

4.2. Evaluation Metrics

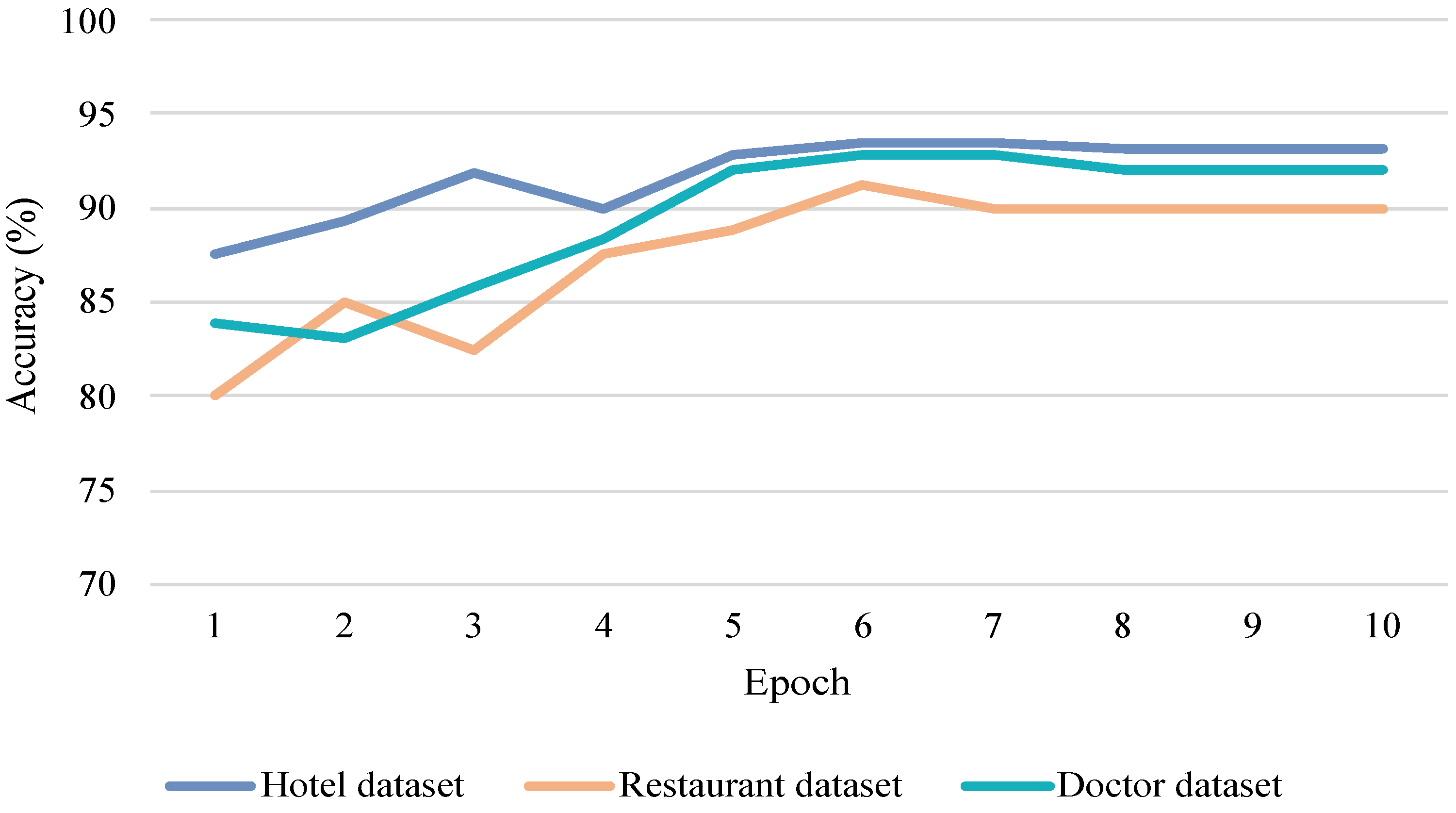
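The result tables report accuracy (Acc), F1, precision (P), and recall (R). The sketch below uses the standard binary definitions, assuming fake reviews are the positive class and a single (not macro-averaged) computation. The exact averaging behind the reported numbers is not stated in this text, and the labels in the example are made up for illustration.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 (in %), treating `positive` as the fake class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p == positive)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != positive and p == positive)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == positive and p != positive)
    tn = len(y_true) - tp - fp - fn
    acc = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {name: round(100 * value, 2)
            for name, value in [("Acc", acc), ("P", precision), ("R", recall), ("F1", f1)]}


# Hypothetical labels: 1 = fake, 0 = real
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0, 0, 0]
m = classification_metrics(y_true, y_pred)
print(m)  # {'Acc': 70.0, 'P': 60.0, 'R': 75.0, 'F1': 66.67}
```

Because F1 is a harmonic mean, it is pulled toward the smaller of P and R, so a model with very high recall but lower precision (as several rows in the result tables show) has an F1 between the two, closer to its precision.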

4.3. Effect of the Training Epochs

4.4. Experimental Results

- SAGE [36] is a generative Bayesian approach that combines topic models with generalized additive models.

- CNN [20] uses 100-dimensional word embeddings pre-trained with a CBOW model.

- The SCNN [19] model consists of two convolutional layers. Sentence convolution composes each sentence through a fixed-length window, and document convolution then transforms the resulting sentence vectors into a document vector.

- SWNN [19] is an improved CNN-based document representation model. It learns text representations at the sentence level and weight vectors at the document level, and combines them into a document representation vector that is then used to classify fake reviews.

- ST-MFLC [37] uses three different models to capture local, temporal, and weighted semantic information from reviews, and then combines their outputs into the final document representation.

- DFFNN [21] is a multilayer perceptron network that can handle complex, sparse text representations. It learns document-level representations from n-grams, word embeddings, and three types of lexicon-based sentiment indicators.

- DSRHA [26] is a two-level hierarchical attention architecture used to detect fake reviews.

- EKI-SM [38] combines the TF-IDF algorithm with an n-gram model to capture high-dimensional sparse features and sentiment features from reviews, and then classifies the reviews with neural networks.

- The BERT [10] model used in this paper is BERT-Large, which contains 24 encoder layers with a hidden dimension of 1024. It is applied to fake review detection by adding an output layer on top of the encoder.
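The core idea attributed to SWNN above, weighting sentences by importance before composing them into a document vector, can be illustrated with a softmax-weighted sum. This is a simplified sketch rather than SWNN's actual formulation in [19]: the hypothetical scoring vector `score_weights`, the dot-product scoring, and the softmax normalization are all assumptions.

```python
import math


def softmax(scores):
    """Normalise raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def document_vector(sentence_vectors, score_weights):
    """Weighted sum of sentence vectors; each sentence's weight comes from a
    dot-product score against the (hypothetical) vector `score_weights`."""
    scores = [sum(x * w for x, w in zip(vec, score_weights)) for vec in sentence_vectors]
    alphas = softmax(scores)
    dim = len(sentence_vectors[0])
    doc = [sum(a * vec[i] for a, vec in zip(alphas, sentence_vectors)) for i in range(dim)]
    return doc, alphas


sentences = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
uniform_doc, uniform_alphas = document_vector(sentences, [0.0, 0.0])
skewed_doc, skewed_alphas = document_vector(sentences, [3.0, 0.0])
print(uniform_doc, skewed_alphas)
```

With a zero scoring vector every sentence gets the same weight, so the document vector reduces to the plain average of the sentence vectors; a non-zero scoring vector shifts weight toward the sentences it scores highly, which is the kind of sentence-importance signal a plain average cannot express.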
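The sparse TF-IDF n-gram features that EKI-SM [38] builds on can be illustrated with a small, dependency-free sketch. The tokenization (lowercasing and whitespace splitting), the unigram-plus-bigram range, and the particular TF-IDF variant (length-normalized term frequency multiplied by log(N/df)) are assumptions; EKI-SM's sentiment features and neural classifier are not shown.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-token phrases in a token list."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def tfidf(docs, n_min=1, n_max=2):
    """Return one sparse {n-gram: weight} dict per document."""
    grams_per_doc = []
    for doc in docs:
        tokens = doc.lower().split()
        grams = []
        for n in range(n_min, n_max + 1):
            grams.extend(ngrams(tokens, n))
        grams_per_doc.append(Counter(grams))
    # Document frequency: in how many documents each n-gram occurs
    df = Counter(g for counts in grams_per_doc for g in counts)
    n_docs = len(docs)
    vectors = []
    for counts in grams_per_doc:
        total = sum(counts.values())
        vectors.append({g: (c / total) * math.log(n_docs / df[g]) for g, c in counts.items()})
    return vectors


reviews = ["great hotel great staff", "terrible hotel"]
vecs = tfidf(reviews)
print(sorted(vecs[0].items()))
```

An n-gram that occurs in every document (here "hotel") receives zero weight, while phrases specific to one review keep a positive weight; this is how TF-IDF suppresses uninformative n-grams in the resulting high-dimensional sparse representation.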

4.5. Ablation Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Jindal, N.; Liu, B. Analyzing and Detecting Review Spam. In Proceedings of the Seventh IEEE International Conference on Data Mining (ICDM 2007), Omaha, NE, USA, 28–31 October 2007; pp. 547–552.

2. Jindal, N.; Liu, B. Review Spam Detection. In Proceedings of the 16th International Conference on World Wide Web, WWW’07, Banff, AB, Canada, 8–12 May 2007; Association for Computing Machinery: New York, NY, USA, 2007; pp. 1189–1190.

3. Jindal, N.; Liu, B. Opinion Spam and Analysis. In Proceedings of the 2008 International Conference on Web Search and Data Mining, WSDM’08, Palo Alto, CA, USA, 11–12 February 2008; Association for Computing Machinery: New York, NY, USA, 2008; pp. 219–230.

4. Ott, M.; Cardie, C.; Hancock, J. Estimating the Prevalence of Deception in Online Review Communities. In Proceedings of the 21st International Conference on World Wide Web, WWW’12, Lyon, France, 16–20 April 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 201–210.

5. Ullrich, S.; Brunner, C.B. Negative online consumer reviews: Effects of different responses. J. Prod. Brand Manag. 2015, 24, 66–77.

6. Zhang, Y.; Jin, R.; Zhou, Z.H. Understanding bag-of-words model: A statistical framework. Int. J. Mach. Learn. Cybern. 2010, 1, 43–52.

7. Sudhakaran, P.; Hariharan, S.; Lu, J. A framework investigating the online user reviews to measure the biasness for sentiment analysis. Asian J. Inf. Technol. 2016, 15, 1890–1898.

8. Wu, Y.; Ngai, E.W.; Wu, P.; Wu, C. Fake online reviews: Literature review, synthesis, and directions for future research. Decis. Support Syst. 2020, 132, 113280.

9. Qiu, X.; Sun, T.; Xu, Y.; Shao, Y.; Dai, N.; Huang, X. Pre-trained models for natural language processing: A survey. Sci. China Technol. Sci. 2020, 63, 1872–1897.

10. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2018, arXiv:1810.04805.

11. Tian, H.; Gao, C.; Xiao, X.; Liu, H.; He, B.; Wu, H.; Wang, H.; Wu, F. SKEP: Sentiment Knowledge Enhanced Pre-training for Sentiment Analysis. arXiv 2020, arXiv:2005.05635.

12. Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014.

13. Rubin, T.N.; Chambers, A.; Smyth, P.; Steyvers, M. Statistical topic models for multi-label document classification. Mach. Learn. 2012, 88, 157–208.

14. Wu, F.; Huberman, B.A. Opinion Formation under Costly Expression. ACM Trans. Intell. Syst. Technol. 2010, 1, 5.

15. Li, F.; Huang, M.; Yang, Y.; Zhu, X. Learning to Identify Review Spam. In Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence, Volume Three, IJCAI’11, Barcelona, Spain, 16–22 July 2011; AAAI Press: Menlo Park, CA, USA, 2011; pp. 2488–2493.

16. Feng, S.; Banerjee, R.; Choi, Y. Syntactic Stylometry for Deception Detection. In Proceedings of the 50th Annual Meeting of the Association for Computational Linguistics: Short Papers, ACL’12, Jeju Island, Republic of Korea, 8–14 July 2012; Association for Computational Linguistics: Stroudsburg, PA, USA, 2012; Volume 2, pp. 171–175.

17. Elmurngi, E.; Gherbi, A. An empirical study on detecting fake reviews using machine learning techniques. In Proceedings of the 2017 Seventh International Conference on Innovative Computing Technology (INTECH), Luton, UK, 16–18 August 2017; pp. 107–114.

18. Harris, C.G. Detecting Deceptive Opinion Spam Using Human Computation. In Proceedings of the AAAI Workshop on Human Computation, Virtual, 6–10 November 2012.

19. Li, L.; Qin, B.; Ren, W.; Liu, T. Document representation and feature combination for deceptive spam review detection. Neurocomputing 2017, 254, 33–41.

20. Ren, Y.; Ji, D. Neural networks for deceptive opinion spam detection: An empirical study. Inf. Sci. 2017, 385–386, 213–224.

21. Hajek, P.; Barushka, A.; Munk, M. Fake consumer review detection using deep neural networks integrating word embeddings and emotion mining. Neural Comput. Appl. 2020, 32, 17259–17274.

22. Lai, S.; Xu, L.; Liu, K.; Zhao, J. Recurrent Convolutional Neural Networks for Text Classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Austin, TX, USA, 25–30 January 2015; Volume 29.

23. Severyn, A.; Moschitti, A. UNITN: Training Deep Convolutional Neural Network for Twitter Sentiment Classification. In Proceedings of the 9th International Workshop on Semantic Evaluation (SemEval 2015), Denver, CO, USA, 4–5 June 2015.

24. Nguyen, T.; Shirai, K. PhraseRNN: Phrase Recursive Neural Network for Aspect-based Sentiment Analysis. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 2509–2514.

25. Shahi, T.; Sitaula, C.; Paudel, N. A Hybrid Feature Extraction Method for Nepali COVID-19-Related Tweets Classification. Comput. Intell. Neurosci. 2022, 2022, 5681574.

26. Liu, Y.; Wang, L.; Shi, T.; Li, J. Detection of spam reviews through a hierarchical attention architecture with N-gram CNN and Bi-LSTM. Inf. Syst. 2022, 103, 101865.

27. Pennington, J.; Socher, R.; Manning, C. GloVe: Global Vectors for Word Representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014.

28. Mikolov, T.; Corrado, G.; Chen, K.; Dean, J. Efficient Estimation of Word Representations in Vector Space. arXiv 2013, arXiv:1301.3781.

29. Zhu, Y.; Zheng, W.; Tang, H. Interactive Dual Attention Network for Text Sentiment Classification. Comput. Intell. Neurosci. 2020, 2020, 8858717.

30. Li, X.; Bing, L.; Zhang, W.; Lam, W. Exploiting BERT for End-to-End Aspect-based Sentiment Analysis. arXiv 2019, arXiv:1910.00883.

31. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

32. Turney, P.D. Thumbs up or Thumbs down? Semantic Orientation Applied to Unsupervised Classification of Reviews. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, ACL’02, Philadelphia, PA, USA, 6–12 July 2002; Association for Computational Linguistics: Stroudsburg, PA, USA, 2002; pp. 417–424.

33. Christlein, V.; Spranger, L.; Seuret, M.; Nicolaou, A.; Král, P.; Maier, A. Deep Generalized Max Pooling. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, Australia, 20–25 September 2019; pp. 1090–1096.

34. Ott, M.; Choi, Y.; Cardie, C.; Hancock, J.T. Finding Deceptive Opinion Spam by Any Stretch of the Imagination. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies, HLT’11, Portland, OR, USA, 19–24 June 2011; Association for Computational Linguistics: Stroudsburg, PA, USA, 2011; Volume 1, pp. 309–319.

35. Ott, M.; Cardie, C.; Hancock, J.T. Negative deceptive opinion spam. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Atlanta, GA, USA, 9–14 June 2013; pp. 497–501.

36. Li, J.; Ott, M.; Cardie, C.; Hovy, E. Towards a General Rule for Identifying Deceptive Opinion Spam. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, Baltimore, MD, USA, 23–24 June 2014; Volume 1, pp. 1566–1576.

37. Cao, N.; Ji, S.; Chiu, D.K.; Gong, M. A deceptive reviews detection model: Separated training of multi-feature learning and classification. Expert Syst. Appl. 2022, 187, 115977.

38. Han, S.; Wang, H.; Li, W.; Zhang, H.; Zhuang, L. Explainable knowledge integrated sequence model for detecting fake online reviews. Appl. Intell. 2023, 53, 6953–6965.

| Method | Description |
|---|---|
| NB [15], K-NN [17], and SVM [18] | These traditional methods rely on manual feature extraction, which requires substantial human effort on large datasets and is prone to feature redundancy, limiting both their scalability and their accuracy. |
| SWNN [19] | SWNN is an improved CNN-based document representation model. To better learn document semantics, SWNN captures the importance of different sentences when composing sentence representations into a document representation. |
| CNN-GRNN [20] | This model uses a CNN to learn sentence-level representations and then integrates them into a document-level representation through a gated recurrent neural network (GRNN). |
| DFFNN [21] | Earlier neural-network-based methods considered only word embeddings and ignored the sentiment of reviews. The DFFNN model proposed by Hajek et al. learns document-level review representations from n-grams, word embeddings, and three lexicon-based emotion indicators. |
| BERT [10] | BERT is a widely used pre-trained language model. It builds dynamic, context-dependent word embeddings that better represent contextual semantics, and it has achieved state-of-the-art results on most NLP tasks. Although BERT learns the general semantics of texts well, it does not explicitly model sentiment information during pre-training, so it may not deliver the best results for sentiment analysis of review texts. |
| BSTC (this study) | BSTC is based on BERT, SKEP, and TextCNN. It considers general semantic information and also captures sentiment features through SKEP. To obtain sentiment-aware semantic representations, SKEP uses unsupervised methods to mine sentiment knowledge automatically. Compared with lexicon-based sentiment analysis methods, SKEP captures sentiment information more comprehensively and accurately. |

| Dataset | No. of Real/Fake Reviews | Polarity | Total |
|---|---|---|---|
| Hotel [34,35] | 800/800 | Positive and negative | 1600 |
| Restaurant [36] | 200/200 | Positive | 400 |
| Doctor [36] | 200/356 | Positive | 556 |

| Model | Hotel Acc | Hotel F1 | Hotel P | Hotel R | Restaurant Acc | Restaurant F1 | Restaurant P | Restaurant R | Doctor Acc | Doctor F1 | Doctor P | Doctor R |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| K-NN [17] | 71.38 | 67.80 | – | – | 72.14 | 69.20 | – | – | 71.13 | 78.60 | – | – |
| NB [15] | 81.25 | 81.70 | – | – | 80.58 | 81.30 | – | – | 81.02 | 85.30 | – | – |
| SAGE [36] | 81.80 | 82.60 | 81.20 | 84.00 | 81.70 | 82.80 | 84.20 | 81.60 | 74.50 | 73.50 | 77.20 | 70.10 |
| SWNN [19] | – | 83.70 | 84.10 | 83.30 | – | 87.60 | 87.00 | 88.20 | – | 82.90 | 85.00 | 81.00 |
| CNN [20] | 84.88 | 85.00 | – | – | 79.61 | 80.30 | – | – | 77.96 | 83.90 | – | – |
| SCNN [19] | 86.44 | 86.30 | – | – | 89.30 | 89.80 | – | – | 87.81 | 90.60 | – | – |
| ST-MFLC [37] | 88.00 | 88.00 | 88.10 | 88.00 | 85.00 | 85.00 | 85.30 | 85.00 | 90.30 | 90.20 | 90.30 | 90.30 |
| DFFNN [21] | 89.56 | 89.60 | – | – | 88.31 | 88.40 | – | – | 86.21 | 89.30 | – | – |
| DSRHA [26] | – | – | – | – | 77.50 | 80.90 | 90.50 | 73.10 | 91.00 | 92.80 | 97.00 | 88.90 |
| EKI-SM [38] | 90.75 | 90.72 | – | – | – | – | – | – | – | – | – | – |
| BERT | 90.94 | 91.29 | 87.86 | 95.00 | 88.75 | 89.16 | 86.05 | 92.50 | 88.39 | 90.91 | 91.55 | 90.28 |
| BSTC | 93.44 | 93.36 | 90.64 | 96.88 | 91.25 | 91.57 | 88.37 | 95.00 | 92.86 | 94.29 | 97.06 | 91.67 |

| Model | Hotel Acc | Hotel F1 | Hotel P | Hotel R | Restaurant Acc | Restaurant F1 | Restaurant P | Restaurant R | Doctor Acc | Doctor F1 | Doctor P | Doctor R |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| BSTC | 93.44 | 93.36 | 90.64 | 96.88 | 91.25 | 91.57 | 88.37 | 95.00 | 92.86 | 94.29 | 97.06 | 91.67 |
| w/o BERT | 92.50 | 92.77 | 89.53 | 96.25 | 91.25 | 91.57 | 88.37 | 95.00 | 91.07 | 93.15 | 91.89 | 94.44 |
| w/o SKEP | 91.87 | 92.17 | 88.95 | 95.63 | 90.00 | 90.24 | 88.10 | 92.50 | 89.29 | 91.43 | 94.12 | 88.89 |
| w/o BERT+TextCNN | 91.87 | 92.07 | 89.88 | 94.37 | 90.00 | 90.24 | 88.10 | 92.50 | 90.18 | 92.41 | 91.78 | 93.06 |
| w/o SKEP+TextCNN | 90.94 | 91.29 | 87.86 | 95.00 | 88.75 | 89.16 | 86.05 | 92.50 | 88.39 | 90.91 | 91.55 | 90.28 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lu, J.; Zhan, X.; Liu, G.; Zhan, X.; Deng, X. BSTC: A Fake Review Detection Model Based on a Pre-Trained Language Model and Convolutional Neural Network. Electronics 2023, 12, 2165. https://doi.org/10.3390/electronics12102165
