Unified Object Detector for Different Modalities Based on Vision Transformers

Abstract

1. Introduction

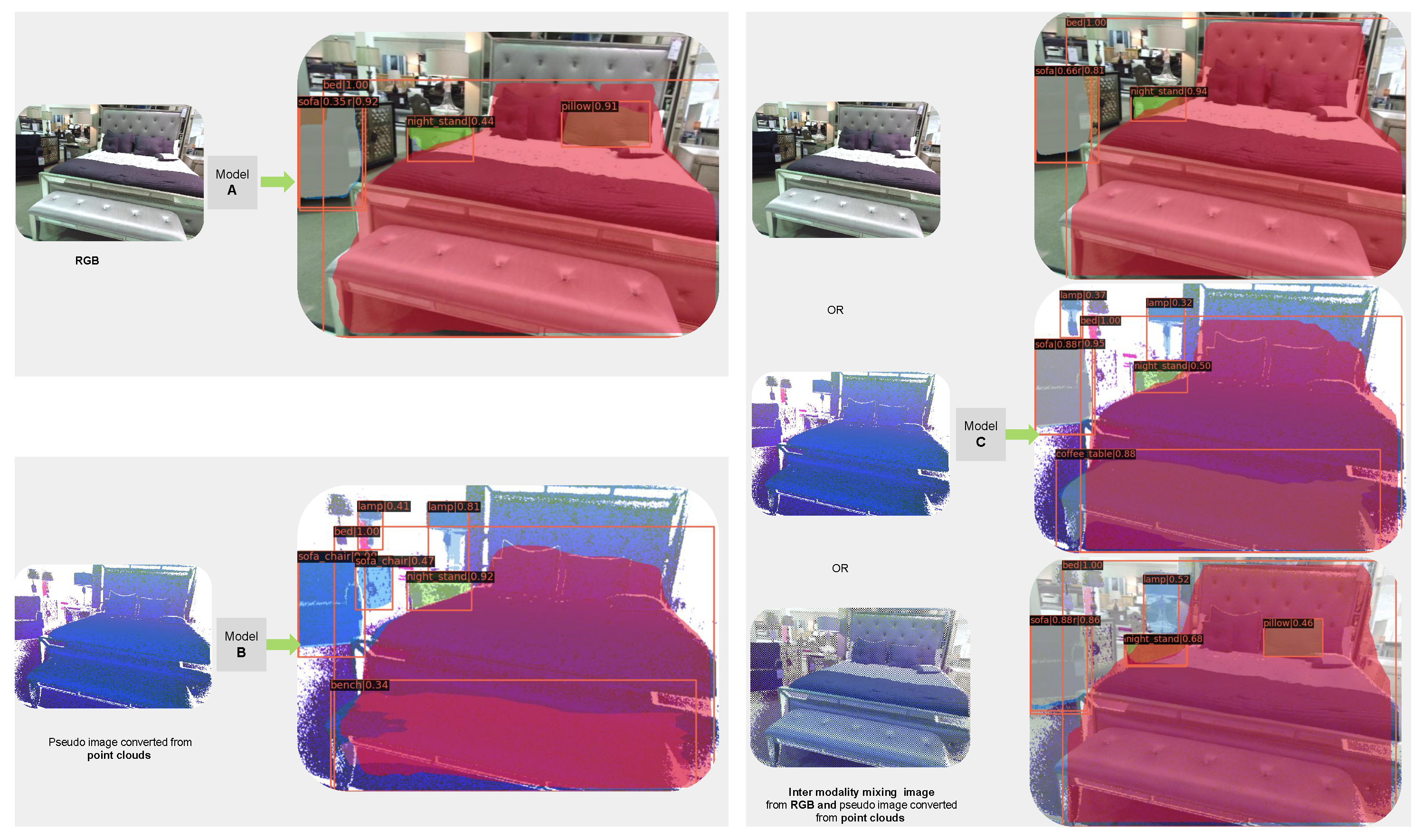

- Can a unified model achieve performance comparable or superior to single-modality models when processing both RGB images and pseudo images converted from point clouds?

- If a unified model that processes both RGB and pseudo images is feasible, can the RGB and pseudo images be further fused to enhance the model’s ability to process both RGB and point cloud data?

- We propose two inter-modality mixing methods that combine data from different modalities into a single input for our unified model.

- We propose a unified model that can process any of the following inputs: RGB images, pseudo images converted from point clouds, or inter-modality mixtures of RGB images and pseudo images converted from point clouds. This unified model achieves performance similar to that of RGB-only and point-cloud-only models. Moreover, when fed inter-modality mixed data, the model achieves clearly better 2D detection performance (for example, 58.1 AP50 on SUNRGBD10 with a Swin-T backbone, compared with 53.9 on RGB images and 56.6 on pseudo images).

- We open-source our code, training/testing logs, and model checkpoints.

2. Related Work

3. Methodology

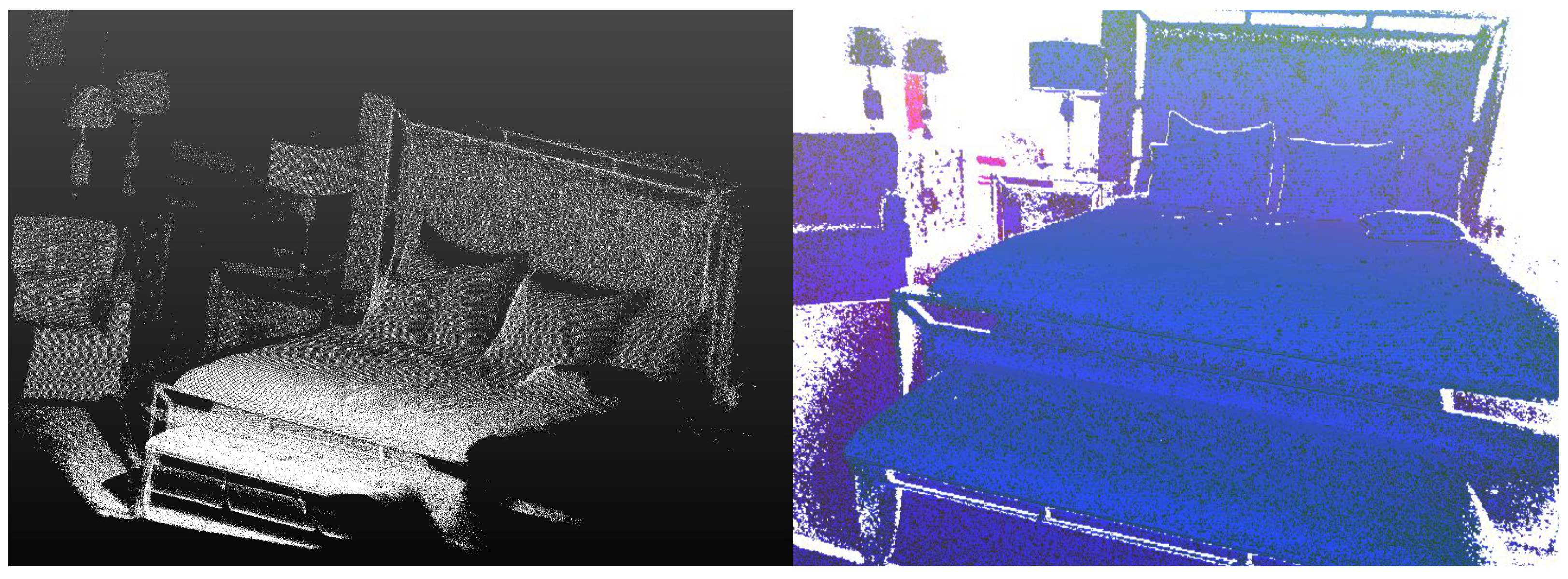

3.1. Convert Point Clouds to Pseudo 2D Images

3.2. Inter-Modality Mixing

- Per Patch Mixing (PPM): divide the whole image into patches of equal size and, for each patch, select one image source either randomly or alternately.

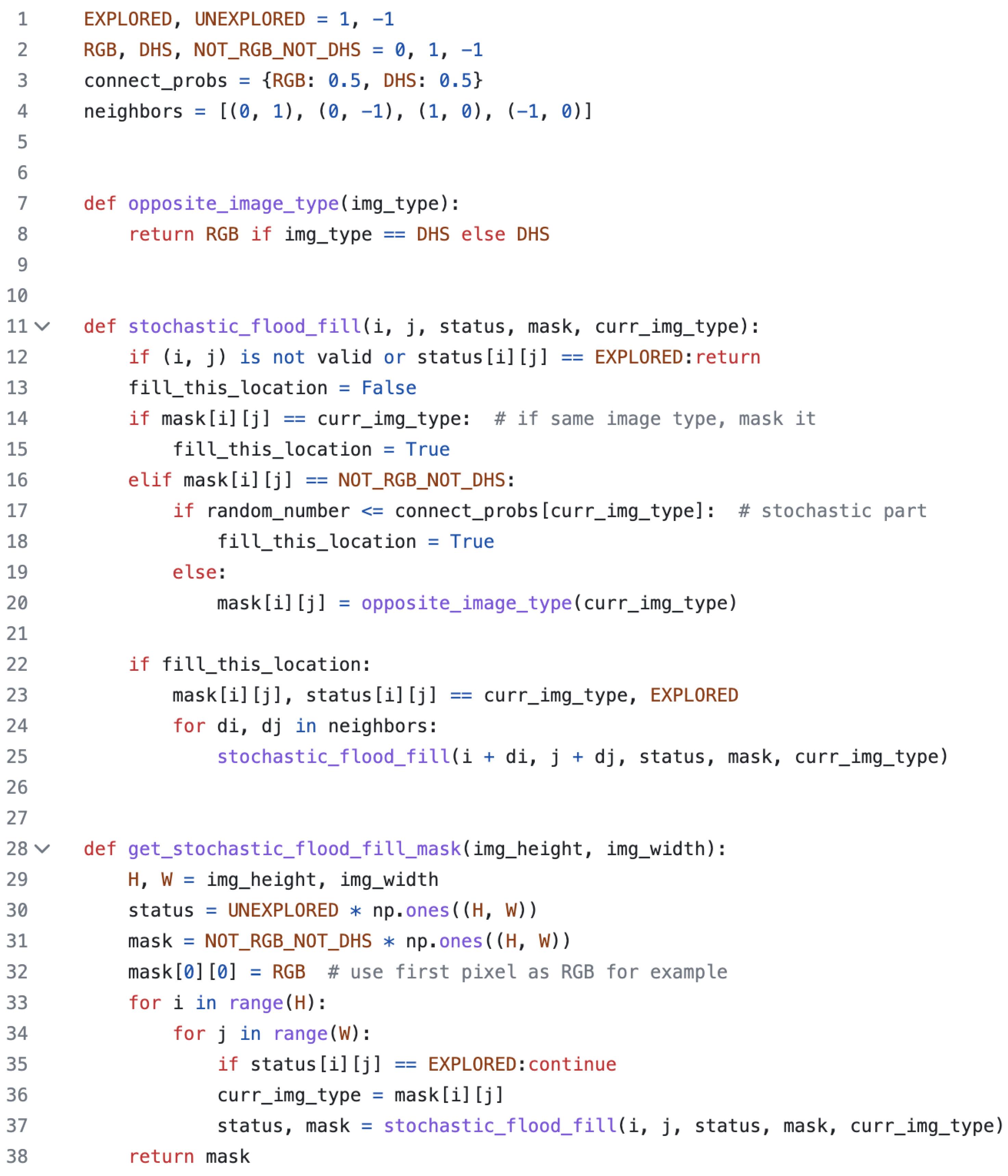

- Stochastic Flood Fill Mixing (SFFM): mix the images from different modalities stochastically, using a flood-fill-based procedure.
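The two mixing methods are described above only at a high level. The following Python/NumPy sketch shows one possible reading of them; it is not the authors' implementation. It assumes that the RGB image and the pseudo image are pixel-aligned arrays of the same `H×W×C` shape, and every name and parameter in it (`per_patch_mix`, `flood_fill_mix`, `patch`, `n_seeds`, `p_grow`) is hypothetical. The flood-fill variant is likewise an assumption: regions grow breadth-first over a grid of cells from random seeds, and each neighboring cell joins a region with probability `p_grow`.

```python
import numpy as np
from collections import deque


def expand_cells(cell_mask, patch, h, w):
    """Upsample a (rows, cols) boolean cell mask to an (h, w) pixel mask."""
    return cell_mask.repeat(patch, axis=0).repeat(patch, axis=1)[:h, :w]


def per_patch_mix(rgb, pseudo, patch=32, mode="random", rng=None):
    """Hypothetical Per Patch Mixing: each patch is copied from exactly one source.

    mode="random"    -> each patch picks the pseudo image with probability 0.5
    mode="alternate" -> sources alternate in a checkerboard pattern
    Returns the mixed image and the pixel mask (True = pseudo-image pixel).
    """
    assert rgb.shape == pseudo.shape, "both modalities must be pixel-aligned"
    h, w = rgb.shape[:2]
    rng = np.random.default_rng() if rng is None else rng
    rows, cols = -(-h // patch), -(-w // patch)  # ceil division keeps border patches
    if mode == "random":
        cells = rng.random((rows, cols)) < 0.5
    elif mode == "alternate":
        r, c = np.indices((rows, cols))
        cells = (r + c) % 2 == 1
    else:
        raise ValueError(f"unknown mode: {mode}")
    mask = expand_cells(cells, patch, h, w)
    # Broadcast the (h, w, 1) mask over the channel axis.
    return np.where(mask[..., None], pseudo, rgb), mask


def stochastic_flood_fill_mask(rows, cols, n_seeds=4, p_grow=0.6, rng=None):
    """Grow regions from random seed cells; a neighbor joins with probability p_grow."""
    rng = np.random.default_rng() if rng is None else rng
    cells = np.zeros((rows, cols), dtype=bool)
    queue = deque()
    for _ in range(n_seeds):
        y, x = int(rng.integers(rows)), int(rng.integers(cols))
        if not cells[y, x]:
            cells[y, x] = True
            queue.append((y, x))
    while queue:  # breadth-first flood fill with a random acceptance test
        y, x = queue.popleft()
        for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols and not cells[ny, nx]:
                if rng.random() < p_grow:
                    cells[ny, nx] = True
                    queue.append((ny, nx))
    return cells


def flood_fill_mix(rgb, pseudo, patch=16, n_seeds=4, p_grow=0.6, rng=None):
    """Take pseudo-image pixels inside the flooded region and RGB pixels elsewhere."""
    assert rgb.shape == pseudo.shape, "both modalities must be pixel-aligned"
    h, w = rgb.shape[:2]
    cells = stochastic_flood_fill_mask(-(-h // patch), -(-w // patch), n_seeds, p_grow, rng)
    mask = expand_cells(cells, patch, h, w)
    return np.where(mask[..., None], pseudo, rgb), mask
```

For example, `per_patch_mix(rgb, pseudo, patch=32, mode="alternate")` returns a checkerboard of RGB and pseudo-image patches together with the boolean mask used to build it. Running the flood fill on a coarse cell grid instead of on individual pixels keeps the pure-Python loop cheap and yields blob-shaped regions whose boundaries follow the cell grid.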

3.3. Two-Dimensional Detection Framework

3.4. Two-Dimensional Detection Backbone Networks

- Swin-T: C = 96, layer numbers = {2, 2, 6, 2}.

- Swin-S: C = 96, layer numbers = {2, 2, 18, 2}.
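Assuming the standard configurations from the Swin Transformer paper [5], the two backbones share the stage widths C, 2C, 4C, and 8C and differ only in the depth of the third stage. The small sketch below encodes this; `SwinConfig` is a hypothetical helper for illustration, not a class from MMDetection or the Swin code base.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SwinConfig:
    name: str
    embed_dim: int  # C, the channel width of the first stage
    depths: tuple   # number of Swin Transformer blocks in each of the four stages

    def stage_dims(self):
        # Patch merging doubles the channel width from one stage to the next.
        return [self.embed_dim * 2 ** i for i in range(len(self.depths))]

    def total_blocks(self):
        return sum(self.depths)


SWIN_T = SwinConfig("Swin-T", 96, (2, 2, 6, 2))
SWIN_S = SwinConfig("Swin-S", 96, (2, 2, 18, 2))

for cfg in (SWIN_T, SWIN_S):
    print(cfg.name, cfg.stage_dims(), cfg.total_blocks())
# Swin-T [96, 192, 384, 768] 12
# Swin-S [96, 192, 384, 768] 24
```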

3.5. SUN RGB-D Dataset Used in This Work

3.6. Pre-Training

3.7. Fine-Tuning

4. Results on the SUN RGB-D Dataset

4.1. Experiments

4.2. Evaluation Metrics

4.3. Evaluation Subgroups

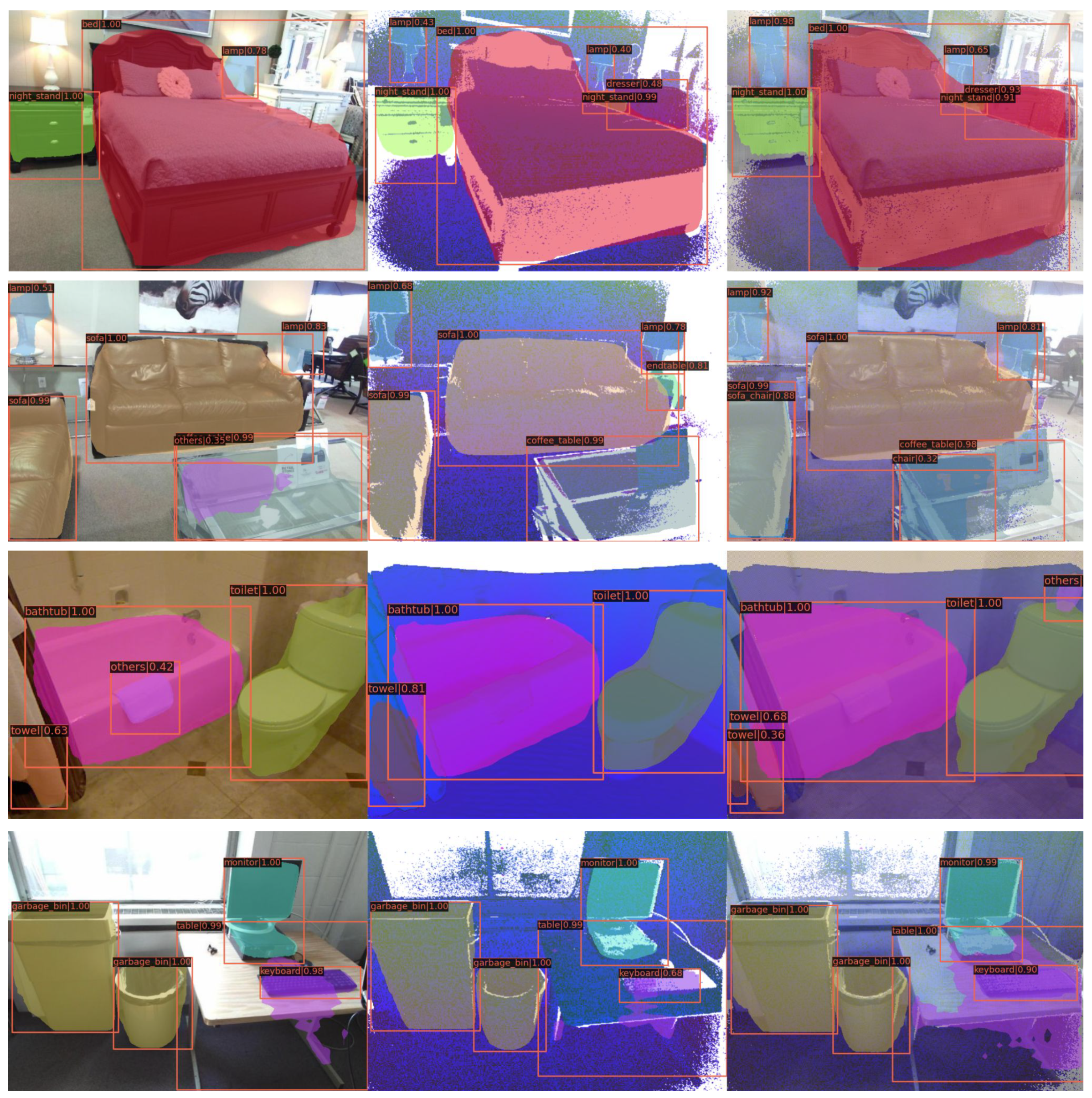

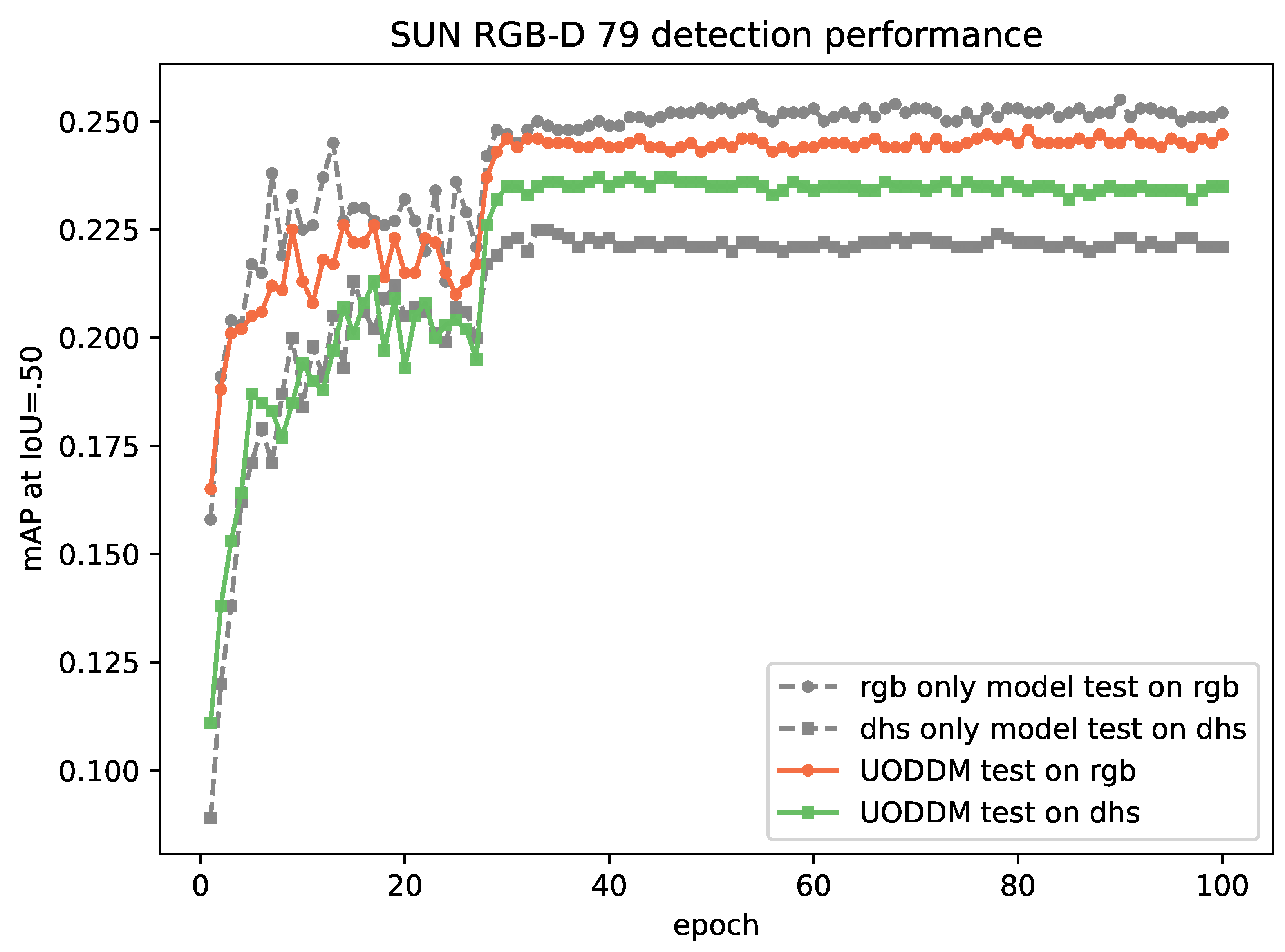

4.4. The Performance of UODDM without Inter-Modality Mixing

4.5. The Performance of UODDM with Inter-Modality Mixing

4.6. Influence of Different Backbone Networks

4.7. Comparison with Other Methods

4.8. More Results Based on Extra Evaluation Metrics

4.9. Number of Parameters and Inference Time

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. More Results

| Method | Test on | Backbone Network | SUNRGBD10 AP | SUNRGBD10 AP50 | SUNRGBD10 AP75 | SUNRGBD16 AP | SUNRGBD16 AP50 | SUNRGBD16 AP75 | SUNRGBD66 AP | SUNRGBD66 AP50 | SUNRGBD66 AP75 | SUNRGBD79 AP | SUNRGBD79 AP50 | SUNRGBD79 AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RGB only (Ours) | RGB | Swin-T | 29.6 | 54.2 | 28.9 | 28.6 | 52.3 | 28.3 | 15.4 | 29.3 | 14.3 | 13.1 | 25.2 | 12.1 | 1.0 | 5.2 | 16.8 |
| UODDM (Ours) | RGB | Swin-T | 30.7 | 53.9 | 30.9 | 29.5 | 52.5 | 29.7 | 15.3 | 28.7 | 14.5 | 13.1 | 24.7 | 12.2 | 0.2 | 4.6 | 16.9 |
| UODDM + CPPM (Ours) | RGB | Swin-T | 30.9 | 54.2 | 30.5 | 29.2 | 51.9 | 28.9 | 14.5 | 27.7 | 13.2 | 12.4 | 23.7 | 11.1 | 0.7 | 4.1 | 15.7 |
| UODDM (Ours) | RGB | Swin-S | 31.8 | 54.7 | 32.3 | 30.0 | 52.5 | 29.6 | 15.1 | 28.2 | 13.7 | 12.9 | 24.4 | 11.6 | 0.4 | 4.2 | 16.1 |
| UODDM + CPPM (Ours) | RGB | Swin-S | 31.3 | 54.6 | 31.3 | 29.9 | 52.7 | 29.6 | 14.6 | 27.5 | 13.5 | 12.4 | 23.6 | 11.4 | 0.7 | 3.9 | 15.5 |
| CrossTrans [3] | DHS | Swin-T | 33.3 | 55.8 | 34.7 | 30.7 | 52.7 | 31.5 | 14.3 | 26.1 | 14.0 | 12.0 | 22.1 | 11.7 | 0.6 | 4.7 | 15.2 |
| UODDM (Ours) | DHS | Swin-T | 34.0 | 56.6 | 34.9 | 31.4 | 53.4 | 31.9 | 15.4 | 27.7 | 14.8 | 13.0 | 23.5 | 12.4 | 0.4 | 4.6 | 16.4 |
| UODDM + CPPM (Ours) | DHS | Swin-T | 33.8 | 55.8 | 35.9 | 31.3 | 52.8 | 32.5 | 14.9 | 26.3 | 14.7 | 12.6 | 22.4 | 12.4 | 0.6 | 4.4 | 16.0 |
| UODDM (Ours) | DHS | Swin-S | 34.8 | 57.6 | 37.0 | 31.7 | 53.7 | 32.4 | 14.8 | 26.6 | 14.2 | 12.5 | 22.6 | 12.0 | 0.7 | 4.2 | 15.7 |
| UODDM + CPPM (Ours) | DHS | Swin-S | 34.1 | 57.4 | 35.8 | 30.9 | 52.5 | 31.8 | 14.1 | 24.8 | 14.0 | 11.9 | 21.1 | 11.8 | 1.1 | 4.5 | 15.1 |
| UODDM + CPPM (Ours) | CPPM images | Swin-T | 34.2 | 58.1 | 35.0 | 32.6 | 55.8 | 33.2 | 16.3 | 29.5 | 15.9 | 13.8 | 25.2 | 13.4 | 0.4 | 5.0 | 17.1 |
| UODDM + CPPM (Ours) | CPPM images | Swin-S | 34.6 | 58.4 | 36.0 | 33.0 | 56.1 | 34.4 | 15.9 | 28.4 | 15.7 | 13.7 | 24.5 | 13.4 | 1.2 | 5.4 | 16.8 |
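Assuming the AP, AP50, AP75, APS, APM, and APL columns follow the usual COCO conventions (as reported by COCO-style evaluators such as MMDetection's), the helpers below illustrate what each column measures. This is a simplified sketch, not the evaluation code behind the table: boxes are `(x1, y1, x2, y2)` tuples, all names are hypothetical, and an object lying exactly on a size boundary is assigned to the larger bucket.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


# AP averages over ten IoU thresholds 0.50, 0.55, ..., 0.95;
# AP50 and AP75 each use a single threshold (0.50 and 0.75).
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def size_bucket(area):
    """Object-size buckets behind APS, APM and APL (area in pixels)."""
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


def coco_style_ap(ap_at_threshold):
    """Average per-threshold AP values given as a dict keyed by IoU threshold."""
    return sum(ap_at_threshold[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)
```

For instance, a detection `(0, 0, 10, 10)` against a ground-truth box `(0, 0, 10, 8)` has an IoU of 0.8, so it counts as a true positive for both AP50 and AP75 but only for eight of the ten thresholds averaged into AP.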

References

1. LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation Applied to Handwritten Zip Code Recognition. Neural Comput. 1989, 1, 541–551.

2. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2017; Volume 30.

3. Shen, X.; Stamos, I. simCrossTrans: A Simple Cross-Modality Transfer Learning for Object Detection with ConvNets or Vision Transformers. arXiv 2022, arXiv:2203.10456.

4. Liu, Z.; Hu, H.; Lin, Y.; Yao, Z.; Xie, Z.; Wei, Y.; Ning, J.; Cao, Y.; Zhang, Z.; Dong, L.; et al. Swin Transformer V2: Scaling Up Capacity and Resolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 12009–12019.

5. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021.

6. Gupta, S.; Girshick, R.B.; Arbeláez, P.A.; Malik, J. Learning Rich Features from RGB-D Images for Object Detection and Segmentation. In Proceedings of the Computer Vision-ECCV 2014-13th European Conference, Zurich, Switzerland, 6–12 September 2014; Fleet, D.J., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Berlin/Heidelberg, Germany, 2014; Volume 8695, pp. 345–360.

7. Stamos, I.; Hadjiliadis, O.; Zhang, H.; Flynn, T. Online Algorithms for Classification of Urban Objects in 3D Point Clouds. In Proceedings of the 2012 Second International Conference on 3D Imaging, Modeling, Processing, Visualization and Transmission, Zurich, Switzerland, 13–15 October 2012; pp. 332–339.

8. Zelener, A.; Stamos, I. CNN-Based Object Segmentation in Urban LIDAR with Missing Points. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 417–425.

9. Shen, X.; Stamos, I. Frustum VoxNet for 3D object detection from RGB-D or Depth images. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Snowmass Village, CO, USA, 1–5 March 2020.

10. Shen, X.; Stamos, I. 3D Object Detection and Instance Segmentation from 3D Range and 2D Color Images. Sensors 2021, 21, 1213.

11. Girshick, R.B.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. arXiv 2013, arXiv:1311.2524.

12. Girshick, R.B. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision, ICCV 2015, Santiago, Chile, 7–13 December 2015; IEEE Computer Society: Washington, DC, USA, 2015; pp. 1440–1448.

13. Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

14. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 22–25 July 2017.

15. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

16. Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. arXiv 2015, arXiv:1506.02640.

17. Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. arXiv 2016, arXiv:1612.08242.

18. Lin, T.; Goyal, P.; Girshick, R.B.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2017, arXiv:1708.02002.

19. Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The PASCAL Visual Object Classes Challenge 2012 (VOC2012) Results. 2012. Available online: http://www.pascal-network.org/challenges/VOC/voc2012/workshop/index.html (accessed on 31 May 2023).

20. Lin, T.; Maire, M.; Belongie, S.J.; Bourdev, L.D.; Girshick, R.B.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. arXiv 2014, arXiv:1405.0312.

21. Shen, X. A survey of Object Classification and Detection based on 2D/3D data. arXiv 2019, arXiv:1905.12683.

22. Huang, Z.; Liu, J.; Li, L.; Zheng, K.; Zha, Z. Modality-Adaptive Mixup and Invariant Decomposition for RGB-Infrared Person Re-Identification. arXiv 2022, arXiv:2203.01735.

23. Ling, Y.; Zhong, Z.; Luo, Z.; Rota, P.; Li, S.; Sebe, N. Class-Aware Modality Mix and Center-Guided Metric Learning for Visible-Thermal Person Re-Identification. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 889–897.

24. Enzweiler, M.; Gavrila, D.M. A Multilevel Mixture-of-Experts Framework for Pedestrian Classification. IEEE Trans. Image Process. 2011, 20, 2967–2979.

25. Barnum, G.; Talukder, S.; Yue, Y. On the Benefits of Early Fusion in Multimodal Representation Learning. arXiv 2020, arXiv:2011.07191.

26. Simonyan, K.; Zisserman, A. Two-Stream Convolutional Networks for Action Recognition in Videos. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N., Weinberger, K., Eds.; Curran Associates, Inc.: Dutchess County, NY, USA, 2014; Volume 27.

27. Wu, D.; Pigou, L.; Kindermans, P.J.; Le, N.D.H.; Shao, L.; Dambre, J.; Odobez, J.M. Deep Dynamic Neural Networks for Multimodal Gesture Segmentation and Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1583–1597.

28. Kahou, S.E.; Pal, C.; Bouthillier, X.; Froumenty, P.; Gülçehre, C.; Memisevic, R.; Vincent, P.; Courville, A.; Bengio, Y.; Ferrari, R.C.; et al. Combining Modality Specific Deep Neural Networks for Emotion Recognition in Video. In Proceedings of the 15th ACM on International Conference on Multimodal Interaction, ICMI ’13, Sydney, Australia, 9–13 December 2013; Association for Computing Machinery: New York, NY, USA, 2013; pp. 543–550.

29. Wu, Z.; Gobichettipalayam, S.; Tamadazte, B.; Allibert, G.; Paudel, D.; Demonceaux, C. Robust RGB-D Fusion for Saliency Detection. In Proceedings of the 2022 International Conference on 3D Vision (3DV), Prague, Czech Republic, 12–16 September 2022; IEEE Computer Society: Los Alamitos, CA, USA, 2022; pp. 403–413.

30. Zhou, Z.; Wu, Z.; Boutteau, R.; Yang, F.; Demonceaux, C.; Ginhac, D. RGB-Event Fusion for Moving Object Detection in Autonomous Driving. arXiv 2023, arXiv:2209.08323.

31. Wu, Z.; Allibert, G.; Meriaudeau, F.; Ma, C.; Demonceaux, C. HiDAnet: RGB-D Salient Object Detection via Hierarchical Depth Awareness. IEEE Trans. Image Process. 2023, 32, 2160–2173.

32. Larsson, G.; Maire, M.; Shakhnarovich, G. FractalNet: Ultra-Deep Neural Networks without Residuals. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

33. Wang, J.; Wei, Z.; Zhang, T.; Zeng, W. Deeply-Fused Nets. arXiv 2016, arXiv:1605.07716.

34. Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-View 3D Object Detection Network for Autonomous Driving. arXiv 2016, arXiv:1611.07759.

35. Li, Y.; Zhang, J.; Cheng, Y.; Huang, K.; Tan, T. Semantics-guided multi-level RGB-D feature fusion for indoor semantic segmentation. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 1262–1266.

36. Wikipedia Contributors. Flood Fill—Wikipedia, The Free Encyclopedia. 2022. Available online: https://en.wikipedia.org/w/index.php?title=Flood_fill&oldid=1087894346 (accessed on 26 June 2022).

37. Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open MMLab Detection Toolbox and Benchmark. arXiv 2019, arXiv:1906.07155.

38. Song, S.; Lichtenberg, S.P.; Xiao, J. SUN RGB-D: A RGB-D Scene Understanding Benchmark Suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015.

39. Song, S.; Xiao, J. Deep Sliding Shapes for Amodal 3D Object Detection in RGB-D Images. arXiv 2015, arXiv:1511.02300.

40. Lahoud, J.; Ghanem, B. 2D-Driven 3D Object Detection in RGB-D Images. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

41. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

42. Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum PointNets for 3D Object Detection From RGB-D Data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.

| Model | Test on | Backbone | SUNRGBD10 (AP50) | SUNRGBD16 (AP50) | SUNRGBD66 (AP50) | SUNRGBD79 (AP50) |
|---|---|---|---|---|---|---|
| RGB only (ours) | RGB | Swin-T | 54.2 | 52.3 | 29.3 | 25.2 |
| UODDM (ours) | RGB | Swin-T | 53.9 | 52.5 | 28.7 | 24.7 |
| DHS only (CrossTrans [3]) | DHS | Swin-T | 55.8 | 52.7 | 26.1 | 22.1 |
| UODDM (ours) | DHS | Swin-T | 56.6 | 53.4 | 27.7 | 23.5 |

| Model | Test on | Backbone | SUNRGBD10 (AP50) | SUNRGBD16 (AP50) | SUNRGBD66 (AP50) | SUNRGBD79 (AP50) |
|---|---|---|---|---|---|---|
| UODDM | RGB | Swin-T | 53.9 | 52.5 | 28.7 | 24.7 |
| UODDM + SFFM | RGB | Swin-T | 24.6 | 17.5 | 19.2 | 20.1 |
| UODDM + CPPM | RGB | Swin-T | 54.2 | 51.9 | 27.7 | 23.7 |
| UODDM + CPPM | RGB | Swin-S | 54.6 | 52.7 | 27.5 | 23.6 |
| UODDM | DHS | Swin-T | 56.6 | 53.4 | 27.7 | 23.5 |
| UODDM + SFFM | DHS | Swin-T | 25.6 | 18.7 | 20.0 | 21.3 |
| UODDM + CPPM | DHS | Swin-T | 55.8 | 52.8 | 26.3 | 22.4 |
| UODDM + CPPM | DHS | Swin-S | 57.4 | 52.5 | 24.8 | 21.1 |
| UODDM + CPPM | CPPM | Swin-T | 58.1 | 55.8 | 29.5 | 25.2 |
| UODDM + CPPM | CPPM | Swin-S | 58.4 | 56.1 | 28.4 | 24.5 |

| Image Source (for Testing) | Methods | Backbone | Bed | Toilet | Night Stand | Bathtub | Chair | Dresser | Sofa | Table | Desk | Bookshelf | SUNRGBD10 (mean) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RGB | 2D driven [40] | VGG-16 | 74.5 | 86.2 | 49.5 | 45.5 | 53.0 | 29.4 | 49.0 | 42.3 | 22.3 | 45.7 | 49.7 |
| | Frustum PointNets [42] | VGG | 56.7 | 43.5 | 37.2 | 81.3 | 64.1 | 33.3 | 57.4 | 49.9 | 77.8 | 67.2 | 56.8 |
| | F-VoxNet [9] | ResNet 101 | 81.0 | 89.5 | 35.1 | 50.0 | 52.4 | 21.9 | 53.1 | 37.7 | 18.3 | 40.4 | 47.9 |
| | RGB-only model (ours) | Swin-T | 83.2 | 93.9 | 51.8 | 54.2 | 60.4 | 23.7 | 51.3 | 46.3 | 22.5 | 54.4 | 54.2 |
| | UODDM (ours) | Swin-T | 83.6 | 87.1 | 53.3 | 58.8 | 62.5 | 22.6 | 54.2 | 46.8 | 22.0 | 48.0 | 53.9 |
| | UODDM + CPPM (ours) | Swin-T | 83.6 | 88.6 | 53.0 | 59.1 | 60.8 | 26.5 | 50.7 | 46.1 | 22.0 | 52.0 | 54.2 |
| Depth/Point Cloud | F-VoxNet [9] | ResNet 101 | 78.7 | 77.6 | 34.2 | 51.9 | 51.8 | 16.5 | 48.5 | 34.9 | 14.2 | 19.2 | 42.8 |
| | CrossTrans [3] | Swin-T | 87.2 | 87.7 | 51.6 | 69.5 | 69.0 | 27.0 | 60.5 | 48.1 | 19.3 | 38.3 | 55.8 |
| | UODDM (ours) | Swin-T | 88.1 | 87.6 | 53.8 | 66.8 | 69.5 | 28.7 | 62.2 | 47.2 | 19.7 | 41.9 | 56.6 |
| | UODDM + CPPM (ours) | Swin-T | 88.0 | 85.6 | 51.8 | 68.3 | 68.6 | 26.9 | 61.6 | 45.5 | 20.2 | 41.7 | 55.8 |
| RGB and Depth/Point Cloud | RGB-D RCNN [6] | VGG | 76.0 | 69.8 | 37.1 | 49.6 | 41.2 | 31.3 | 42.2 | 43.0 | 16.6 | 34.9 | 44.2 |
| | UODDM + CPPM (ours) | Swin-T | 86.5 | 91.0 | 54.4 | 70.2 | 67.2 | 30.3 | 57.5 | 48.7 | 22.8 | 52.7 | 58.1 |

| Image Source (for Testing) | Methods | Backbone | Sofa Chair | Kitchen Counter | Kitchen Cabinet | Garbage Bin | Microwave | Sink | SUNRGBD16 (mean over 16 classes) |
|---|---|---|---|---|---|---|---|---|---|
| RGB | F-VoxNet [9] | ResNet 101 | 47.8 | 22.0 | 29.8 | 52.8 | 39.7 | 31.0 | 43.9 |
| | RGB-only model (ours) | Swin-T | 60.4 | 32.7 | 39.8 | 67.0 | 48.1 | 47.3 | 52.3 |
| | UODDM (ours) | Swin-T | 63.7 | 28.9 | 38.4 | 67.1 | 57.4 | 46.2 | 52.5 |
| | UODDM + CPPM (ours) | Swin-T | 60.6 | 31.2 | 37.8 | 64.7 | 54.3 | 40.1 | 51.9 |
| Depth/Point Cloud | F-VoxNet [9] | ResNet 101 | 48.7 | 19.1 | 18.5 | 30.3 | 22.2 | 30.1 | 37.3 |
| | CrossTrans [3] | Swin-T | 68.1 | 30.7 | 35.5 | 61.2 | 41.9 | 47.7 | 52.7 |
| | UODDM (ours) | Swin-T | 68.5 | 28.5 | 35.8 | 62.8 | 41.9 | 51.5 | 53.4 |
| | UODDM + CPPM (ours) | Swin-T | 67.6 | 29.2 | 33.0 | 61.6 | 47.4 | 47.5 | 52.8 |
| RGB and Depth/Point Cloud | UODDM + CPPM (ours) | Swin-T | 66.6 | 29.2 | 41.2 | 68.6 | 57.9 | 48.0 | 55.8 |
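The last column of each per-class table is consistent with an unweighted mean of per-class AP50 values: SUNRGBD10 averages the ten classes of the first per-class table, and SUNRGBD16 averages those ten plus the six classes of the second. The check below recomputes both means for two rows, with values copied from the tables; it is only a consistency check on the reported numbers, not part of the method.

```python
# Per-class AP50 values copied from the two per-class tables.
RGB_ONLY_10 = [83.2, 93.9, 51.8, 54.2, 60.4, 23.7, 51.3, 46.3, 22.5, 54.4]
RGB_ONLY_EXTRA_6 = [60.4, 32.7, 39.8, 67.0, 48.1, 47.3]
MIXED_10 = [86.5, 91.0, 54.4, 70.2, 67.2, 30.3, 57.5, 48.7, 22.8, 52.7]
MIXED_EXTRA_6 = [66.6, 29.2, 41.2, 68.6, 57.9, 48.0]


def mean(values):
    """Unweighted mean: every class counts equally, regardless of instance count."""
    return sum(values) / len(values)


rows = (
    ("RGB only, Swin-T", RGB_ONLY_10, RGB_ONLY_EXTRA_6),
    ("UODDM + CPPM on CPPM images, Swin-T", MIXED_10, MIXED_EXTRA_6),
)
for name, ten, six in rows:
    print(f"{name}: SUNRGBD10 = {mean(ten):.1f}, SUNRGBD16 = {mean(ten + six):.1f}")
# RGB only, Swin-T: SUNRGBD10 = 54.2, SUNRGBD16 = 52.3
# UODDM + CPPM on CPPM images, Swin-T: SUNRGBD10 = 58.1, SUNRGBD16 = 55.8
```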

| Method | Backbone Network | # Parameters (M) | GFLOPs | Inference Time (ms) | FPS |
|---|---|---|---|---|---|
| F-VoxNet [9] | ResNet-101 | 64 | - | 110 | 9.1 |
| CrossTrans [3] | ResNet-50 | 44 | 472.1 | 70 | 14.3 |
| CrossTrans [3] | Swin-T | 48 | 476.5 | 105 | 9.5 |
| UODDM (ours) | Swin-T | 48 | 476.5 | 105 | 9.5 |
| UODDM (ours) | Swin-S | 69 | 419.7 | 148 | 6.8 |
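The FPS column equals the reciprocal of the per-image inference time, FPS = 1000 / (inference time in ms), rounded to one decimal place. The snippet below reproduces it from the table's values; it assumes the reported times are per-image latencies, which the table does not state explicitly.

```python
# Inference times (ms per image) copied from the table above.
inference_ms = {
    "F-VoxNet, ResNet-101": 110,
    "CrossTrans, ResNet-50": 70,
    "CrossTrans / UODDM, Swin-T": 105,
    "UODDM, Swin-S": 148,
}

for model, ms in inference_ms.items():
    fps = 1000.0 / ms  # frames per second = 1000 ms divided by the latency of one frame
    print(f"{model}: {fps:.1f} FPS")
# F-VoxNet, ResNet-101: 9.1 FPS
# CrossTrans, ResNet-50: 14.3 FPS
# CrossTrans / UODDM, Swin-T: 9.5 FPS
# UODDM, Swin-S: 6.8 FPS
```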

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shen, X.; Stamos, I. Unified Object Detector for Different Modalities Based on Vision Transformers. Electronics 2023, 12, 2571. https://doi.org/10.3390/electronics12122571
