SP-YOLO-Lite: A Lightweight Violation Detection Algorithm Based on SP Attention Mechanism

Abstract

1. Introduction

- Designing a lightweight backbone network based on the Lightweight Convolutional Block (LC-Block). The network is built by directly stacking LC-Blocks, which are based on depthwise separable convolutions, so it keeps a single path from input to output. This lowers network fragmentation and avoids additional overheads such as kernel launches. Compared with backbone networks built from conventional convolutional modules in general-purpose object detection algorithms, the proposed network significantly reduces computation and parameter count while greatly improving inference efficiency.

- Proposing a novel attention mechanism, the Segmentation-and-Product (SP) attention mechanism. It addresses a characteristic of violation detection tasks: the detection targets are concentrated in local regions. The mechanism uses a segmentation operation to divide the input image into regions and applies attention to each region separately. This captures the local features of the image and thereby improves the accuracy of the detection model. Compared with existing attention mechanisms, it focuses more on local spatial feature information, making it better suited to violation detection tasks.

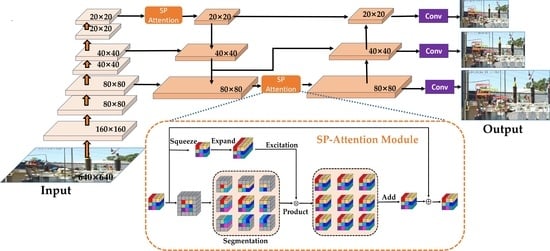

- Proposing a Neck network that is both lightweight and feature-rich. In this network, we use depthwise separable convolution to replace complex CSP modules and conventional convolutional modules as the upsampling operator, significantly reducing computation and parameter count. We also insert SP attention modules into the network to strengthen the model's ability to extract meaningful features from the targets, and we optimize the channel configuration of each layer in the Neck network to reduce the memory access cost. Compared with the feature fusion networks used in general-purpose object detection algorithms, this Neck network achieves a better balance between accuracy, speed, and complexity.

- Collecting and processing monitoring images from different power operation scenes to construct the Security Monitor for Power Construction (SMPC) dataset, which covers multiple detection targets such as safety belts, fences, and seines and is suitable for violation detection tasks.
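To make the saving from depthwise separable convolutions in the first contribution concrete, the sketch below compares weight counts for a standard convolution and its depthwise separable counterpart. It uses the standard textbook formulas and ignores biases and normalization layers; the layer shape (3 × 3 kernel, 128 input and output channels) is an illustrative assumption, not a configuration taken from the paper.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in      # one k x k filter per input channel
    pointwise = c_in * c_out      # 1 x 1 conv that mixes channels
    return depthwise + pointwise


# Hypothetical layer shape chosen only for illustration.
k, c_in, c_out = 3, 128, 128
std = standard_conv_params(k, c_in, c_out)
dws = depthwise_separable_params(k, c_in, c_out)
ratio = dws / std  # equals 1 / c_out + 1 / k**2

print(f"standard: {std}, depthwise separable: {dws}, ratio: {ratio:.3f}")
```

For this shape the separable form needs roughly 12% of the weights of the standard convolution. At a fixed output resolution the multiply-accumulate counts shrink by the same ratio.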

2. Related Work

2.1. Violation Behavior Detection

2.2. Lightweight Object Detection Algorithms

2.3. Attention Mechanism

3. SP-YOLO-Lite Network Model

3.1. Lightweight Backbone Network Based on LC-Block

3.2. Segmentation-and-Product Attention Mechanism

- (1) Segmentation: The input tensor is passed through the bottom branch. In the bottom branch, the input tensor is segmented into 2 feature maps using a sliding window of size . Each feature map has a size of .

- (2) Squeeze, Expand, and Excitation: The input tensor is passed through the top branch. In the top branch, the channel number of the input tensor is first compressed to 1/ through a 1 × 1 convolution and then expanded to 2. Finally, the attention filter is generated through the excitation operation.

- (3) Product: The output of the top branch (the attention filter) and the output of the bottom branch (the segmented feature maps) are multiplied element-wise. As shown in Figure 4, the product operation between the attention filter and the segmented feature maps can be equivalently expressed as follows. First, the attention filter is sequentially unfolded along the channel dimension, and the segmented feature maps are divided into groups according to the channel order. Then, the attention filter is multiplied element-wise with each group of the segmented feature maps, that is, the elements at the same position are multiplied. Finally, the weighted feature maps are output. In this way, the attention filter acts as a filter on the feature maps that represent different regions.

- (4) Add: The resulting feature maps of size 2 × generated by the product operation are added for normalization, and finally added to the input feature map to obtain the output feature map with the same shape as the input.
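The four steps above can be illustrated with a heavily simplified sketch. It is not the authors' implementation: several symbols are missing from the text here, so the grid size `s`, the weight matrices `w_squeeze` and `w_expand`, and the function name are all hypothetical. The sketch also assumes that the top branch starts with global average pooling and produces one scalar weight per region, and that the weighted regions are put back in place before the residual addition. The paper's attention filter and its normalization step may differ.

```python
import math


def sp_attention_sketch(x, s, w_squeeze, w_expand):
    """Region-wise attention on a feature map x[h][w][c] given as nested lists.

    s         -- regions per side (s * s regions in total); assumed symbol
    w_squeeze -- hidden x c weights standing in for the compressing 1 x 1 conv
    w_expand  -- (s * s) x hidden weights standing in for the expanding conv
    """
    h, w, c = len(x), len(x[0]), len(x[0][0])
    if h % s or w % s or len(w_expand) != s * s:
        raise ValueError("feature map and weights must match an s x s grid")
    rh, rw = h // s, w // s  # height and width of one region

    # Top branch (assumed): global average pool, squeeze, expand, sigmoid.
    pooled = [sum(x[i][j][k] for i in range(h) for j in range(w)) / (h * w)
              for k in range(c)]
    hidden = [max(0.0, sum(p * wt for p, wt in zip(pooled, row)))
              for row in w_squeeze]
    logits = [sum(hv * wt for hv, wt in zip(hidden, row)) for row in w_expand]
    weights = [1.0 / (1.0 + math.exp(-z)) for z in logits]

    # Bottom branch, product, and add: pixel (i, j) lies in region
    # (i // rh, j // rw); scale it by that region's weight and add the input.
    return [[[x[i][j][k] * (1.0 + weights[(i // rh) * s + (j // rw)])
              for k in range(c)]
             for j in range(w)]
            for i in range(h)]


# Tiny example: a 4 x 4 x 2 map of ones split into a 2 x 2 grid of regions.
feature_map = [[[1.0, 1.0] for _ in range(4)] for _ in range(4)]
out = sp_attention_sketch(
    feature_map, s=2,
    w_squeeze=[[0.5, 0.5]],                  # 2 channels -> 1 hidden unit
    w_expand=[[0.0], [0.0], [0.0], [100.0]]  # strongly favor the last region
)
print(out[0][0][0], out[3][3][0])
```

In this toy run the bottom-right region is amplified more than the others, while the output keeps the input's shape, as step (4) requires.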

3.3. Lightweight Neck Network

4. Experimental Results

4.1. Experimental Setting

4.2. Experimental Data

4.2.1. VOC Dataset

4.2.2. Security Monitor for Power Construction Dataset

4.3. Evaluation Indicators

4.3.1. Evaluation Metrics for Accuracy

4.3.2. Evaluation Metrics for Lightweight

4.4. SP Attention Module Experimental Analysis

4.5. Ablation Experiments

4.6. Comparison Experiments

4.7. Visualization Results

4.8. Robustness Study

4.9. Algorithm Deployment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Module | Output Feature Map Size (W × H × C) | Number of Modules | Parameters [K × K, Stride] | Utilization of SE Attention Module |
|---|---|---|---|---|
| Conv + BN + H-Swish | 320 × 320 × 32 | 1 | [3 × 3, 2] | - |
| LC-Block 1 | 160 × 160 × 64 | 1 | [3 × 3, 2] | False |
| LC-Block 2 | 160 × 160 × 64 | 1 | [3 × 3, 1] | False |
| LC-Block 3 | 80 × 80 × 128 | 1 | [3 × 3, 2] | False |
| LC-Block 4 | 80 × 80 × 128 | 3 | [3 × 3, 1] | False |
| LC-Block 5 | 40 × 40 × 256 | 1 | [3 × 3, 2] | False |
| LC-Block 6 | 40 × 40 × 256 | 5 | [5 × 5, 1] | False |
| LC-Block 7 | 20 × 20 × 512 | 1 | [5 × 5, 2] | True |
| LC-Block 8 | 20 × 20 × 512 | 3 | [5 × 5, 1] | True |
| Conv + H-Swish + Dropout | 20 × 20 × 512 | 1 | [1 × 1, 1] | - |
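The spatial sizes in the backbone table above follow directly from the stride column. The short sketch below traces them, assuming a 640 × 640 input (inferred from the 320 × 320 output of the first stride-2 layer, not stated in the table) and assuming padding that lets each stride-2 layer exactly halve the resolution. The `stages` list and the helper name are hypothetical.

```python
# (module name, stride, output channels), transcribed from the table above.
stages = [
    ("Conv + BN + H-Swish", 2, 32),
    ("LC-Block 1", 2, 64),
    ("LC-Block 2", 1, 64),
    ("LC-Block 3", 2, 128),
    ("LC-Block 4", 1, 128),
    ("LC-Block 5", 2, 256),
    ("LC-Block 6", 1, 256),
    ("LC-Block 7", 2, 512),
    ("LC-Block 8", 1, 512),
    ("Conv + H-Swish + Dropout", 1, 512),
]


def trace_feature_sizes(input_size, stages):
    """Return (name, spatial size, channels) after each stage."""
    size = input_size
    rows = []
    for name, stride, channels in stages:
        size //= stride  # a stride-2 stage halves width and height
        rows.append((name, size, channels))
    return rows


traced = trace_feature_sizes(640, stages)  # 640 is an assumed input size
for name, size, channels in traced:
    print(f"{name:26s} {size} x {size} x {channels}")
```

With these assumptions the backbone downsamples by a factor of 32 overall, from 640 to 20.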

| Model | mAP @0.5 (%) | mAP @0.5:0.95 (%) | FLOPs (× 10^9) | Parameters (Byte × 10^6) | FPS (t/n) |
|---|---|---|---|---|---|
| SP-YOLO-lite (without attention) | 77.1 | 52.1 | 4.8 | 2.32 | 46.5 |
| +SP Attention (18) | 78.2 | 54.1 | 4.9 | 2.39 | 47.4 |
| +SP Attention (26) | 78.1 | 54.1 | 5.0 | 2.33 | 47.8 |
| +SP Attention (18,26) | 78.4 | 54.3 | 5.0 | 2.40 | 43.5 |
| +SE Attention (18,26) | 75.1 | 48.4 | 4.8 | 2.36 | 45.2 |
| +ECA Attention (18,26) | 75.1 | 48.6 | 4.8 | 2.32 | 41.0 |
| +CBAM Attention (18,26) | 75.0 | 48.4 | 4.9 | 2.36 | 37.2 |

| Model | mAP @0.5 (%) | mAP @0.5:0.95 (%) | FLOPs (× 10^9) | Parameters (Byte × 10^6) | FPS (t/n) |
|---|---|---|---|---|---|
| SP-YOLO-lite (without attention) | 82.5 | 55.2 | 4.7 | 2.30 | 40.3 |
| +SP Attention (18) | 83.3 | 55.2 | 4.8 | 2.37 | 36.5 |
| +SP Attention (26) | 83.6 | 55.1 | 4.9 | 2.32 | 30.8 |
| +SP Attention (18,26) | 84.8 | 57.6 | 5.0 | 2.39 | 39.4 |
| +SE Attention (18,26) | 82.8 | 55.3 | 4.8 | 2.34 | 37.6 |
| +ECA Attention (18,26) | 83.3 | 56.3 | 4.7 | 2.30 | 37.3 |
| +CBAM Attention (18,26) | 82.8 | 54.6 | 4.8 | 2.34 | 39.8 |

| Model | mAP @0.5 (%) | mAP @0.5:0.95 (%) | FLOPs (× 10^9) | Parameters (Byte × 10^6) | FPS (t/n) |
|---|---|---|---|---|---|
| YOLO V5s | 81.5 | 59.6 | 15.9 | 7.06 | 49.3 |
| +Lite-Backbone | 79.3 | 57.0 | 9.5 | 5.03 | 37.5 |
| +Lite-Neck | 75.5 | 50.0 | 11.5 | 4.45 | 43.7 |
| +Lite-Backbone + Lite-Neck | 78.4 | 54.3 | 5.0 | 2.40 | 43.5 |
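The trade-off in the ablation table directly above can be summarized as relative reductions. The sketch below takes the first and last rows of that table and computes the FLOPs and parameter savings of the full lightweight model against the YOLO V5s baseline, along with the mAP @0.5 cost. The variable names are illustrative.

```python
# Values transcribed from the first and last rows of the table above.
baseline = {"map50": 81.5, "flops_g": 15.9, "params_m": 7.06}  # YOLO V5s
lite = {"map50": 78.4, "flops_g": 5.0, "params_m": 2.40}       # +Lite-Backbone + Lite-Neck


def relative_reduction(before: float, after: float) -> float:
    """Fraction by which `after` is smaller than `before`."""
    return (before - after) / before


flops_saving = relative_reduction(baseline["flops_g"], lite["flops_g"])
params_saving = relative_reduction(baseline["params_m"], lite["params_m"])
map_drop = baseline["map50"] - lite["map50"]

print(f"FLOPs reduced by {flops_saving:.1%}")
print(f"Parameters reduced by {params_saving:.1%}")
print(f"mAP @0.5 lower by {map_drop:.1f} points")
```

On these figures the combined lightweight backbone and neck remove roughly two thirds of both FLOPs and parameters for a loss of about 3 points of mAP @0.5.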

| Model | mAP @0.5 (%) | mAP @0.5:0.95 (%) | FLOPs (× 10^9) | Parameters (Byte × 10^6) | FPS (t/n) |
|---|---|---|---|---|---|
| YOLO V5s | 87.6 | 65.9 | 15.8 | 7.03 | 44.1 |
| +Lite-Backbone | 86.3 | 62.4 | 9.5 | 4.99 | 50.0 |
| +Lite-Neck | 77.3 | 45.3 | 11.4 | 4.43 | 38.9 |
| +Lite-Backbone + Lite-Neck | 84.8 | 57.6 | 5.0 | 2.39 | 39.4 |

| Model | mAP @0.5 (%) | mAP @0.5:0.95 (%) | Size (MB) | FLOPs (× 10^9) | Parameters (Byte × 10^6) | FPS (t/n) |
|---|---|---|---|---|---|---|
| YOLOv5-Lite-e | 69.4 | 42.9 | 1.8 | 2.7 | 0.73 | 41.2 |
| YOLOv5-Lite-s | 74.9 | 49.6 | 3.3 | 3.8 | 1.57 | 32.6 |
| YOLOv5n | 75.7 | 51.7 | 3.9 | 4.2 | 1.79 | 33.9 |
| SP-YOLOv5-Lite (Ours) | 78.4 | 54.3 | 5.1 | 5.0 | 2.40 | 43.5 |
| YOLOv5-Lite-c | 79.1 | 56.3 | 9.1 | 8.9 | 4.43 | 48.5 |
| YOLOv5s | 81.5 | 59.6 | 13.8 | 15.9 | 7.06 | 49.3 |
| YOLOv5-Lite-g | 81.7 | 60.9 | 11.3 | 15.9 | 5.51 | 45.5 |
| YOLOv5m | 84.4 | 65.7 | 40.3 | 48.1 | 20.93 | 47.6 |
| YOLOv5l | 86.6 | 68.8 | 88.7 | 108.0 | 46.21 | 26.2 |
| YOLOv5x | 87.5 | 70.5 | 165.3 | 204.2 | 86.30 | 21.6 |

| Model | mAP @0.5 (%) | mAP @0.5:0.95 (%) | Size (MB) | FLOPs (× 10^9) | Parameters (Byte × 10^6) | FPS (t/n) |
|---|---|---|---|---|---|---|
| YOLOv5-Lite-e | 82.0 | 50.5 | 1.8 | 2.7 | 0.72 | 45.0 |
| YOLOv5-Lite-s | 82.1 | 56.1 | 3.5 | 3.7 | 1.55 | 42.6 |
| YOLOv5n | 83.1 | 56.5 | 3.9 | 4.2 | 1.77 | 47.2 |
| SP-YOLOv5-Lite (Ours) | 84.8 | 57.6 | 4.9 | 5.0 | 2.39 | 39.4 |
| YOLOv5-Lite-c | 87.3 | 65.2 | 9.2 | 8.7 | 4.39 | 36.6 |
| YOLOv5s | 87.6 | 65.9 | 14.4 | 15.8 | 7.03 | 44.1 |
| YOLOv5-Lite-g | 87.5 | 66.7 | 11.4 | 15.8 | 5.48 | 33.6 |
| YOLOv5m | 87.7 | 70.3 | 42.2 | 47.9 | 20.88 | 28.4 |
| YOLOv5l | 88.0 | 73.3 | 92.9 | 107.7 | 46.14 | 23.8 |
| YOLOv5x | 88.1 | 73.9 | 173.1 | 203.9 | 86.21 | 19.6 |

| Name | Configuration Information |
|---|---|
| GPU | NVIDIA Ampere Architecture GPU |
| Maximum GPU Frequency | 930 MHz |
| CPU | Octa-core Arm® Cortex®-A78AE 64-bit CPU |
| Maximum CPU Frequency | 2.2 GHz |
| Memory | 32 GB |
| Storage | 64 GB |
| Power | 15 W–40 W |

| Weight Quantization Precision | mAP @0.5 (%) | mAP @0.5:0.95 (%) | FPS (t/n) | Power (W) | Efficiency (FPS/W) |
|---|---|---|---|---|---|
| FP32 | 79.80 | 52.30 | 55.25 | 20.112 | 2.747 |
| FP16 | 79.70 | 52.40 | 60.61 | 18.992 | 3.191 |
| INT8 | 70.00 | 40.80 | 57.14 | 17.885 | 3.195 |
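The Efficiency column in the deployment table above is the frame rate divided by the power draw. The sketch below recomputes it from the FPS and Power columns as a consistency check. The dictionary layout and names are illustrative.

```python
# (FPS, power in watts) per weight precision, transcribed from the table above.
measurements = {
    "FP32": (55.25, 20.112),
    "FP16": (60.61, 18.992),
    "INT8": (57.14, 17.885),
}

# Efficiency is frames per second delivered per watt consumed.
efficiency = {name: fps / watts for name, (fps, watts) in measurements.items()}

for name, value in efficiency.items():
    print(f"{name}: {value:.3f} FPS/W")
```

The recomputed values agree with the table to three decimals. FP16 and INT8 are nearly tied on efficiency, but the table shows INT8 giving up almost 10 points of mAP @0.5 relative to FP16.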


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, Z.; Wu, J.; Su, L.; Xie, Y.; Li, T.; Huang, X. SP-YOLO-Lite: A Lightweight Violation Detection Algorithm Based on SP Attention Mechanism. Electronics 2023, 12, 3176. https://doi.org/10.3390/electronics12143176