Optimal Feature Selection through Search-Based Optimizer in Cross Project

Abstract

1. Introduction

2. Literature Review

2.1. Gap Analysis

2.2. Research Questions

- RQ1: What is the impact of feature selection for multi-class classification, compared with binary classification, on cross-project defect prediction, as measured by the F1-measure?

- Null Hypothesis: Feature selection for multi-class classification, compared with binary classification, has no impact on cross-project defect prediction, as measured by the F1-measure.

- Alternate Hypothesis: Feature selection for multi-class classification, compared with binary classification, has an impact on cross-project defect prediction, as measured by the F1-measure.

- RQ2: What is the impact of the ANN filter, compared with the KNN filter, on cross-project defect prediction, as measured by the F1-measure?

- Null Hypothesis: The ANN filter, compared with the KNN filter, has no impact on cross-project defect prediction, as measured by the F1-measure.

- Alternate Hypothesis: The ANN filter, compared with the KNN filter, has an impact on cross-project defect prediction, as measured by the F1-measure.

- RQ3: What is the impact of the search-based optimizer, i.e., random forest ensemble compared with genetic algorithm, on cross-project defect prediction, as measured by the F1-measure?

- Null Hypothesis: The search-based optimizer, i.e., random forest ensemble compared with genetic algorithm, has no impact on cross-project defect prediction, as measured by the F1-measure.

- Alternate Hypothesis: The search-based optimizer, i.e., random forest ensemble compared with genetic algorithm, has an impact on cross-project defect prediction, as measured by the F1-measure.

- RQ4: What is the impact of our classifier, compared with the Naïve Bayes classifier, on cross-project defect prediction, as measured by the F1-measure?

- Null Hypothesis: Our classifier, compared with the Naïve Bayes classifier, has no impact on cross-project defect prediction, as measured by the F1-measure.

- Alternate Hypothesis: Our classifier, compared with the Naïve Bayes classifier, has an impact on cross-project defect prediction, as measured by the F1-measure.
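
Every research question above is evaluated through the F1-measure, for both the binary and the multi-class setting. The sketch below shows one common way to compute it. The macro averaging used for the multi-class case is an assumption (the averaging scheme is not stated here), and the function names `f1_binary` and `f1_macro` are hypothetical.

```python
def f1_binary(y_true, y_pred, positive=1):
    """F1 for one class: harmonic mean of precision and recall."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # no true positives: precision or recall is zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def f1_macro(y_true, y_pred):
    """Multi-class F1 as the unweighted mean of per-class F1 (assumed scheme)."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(f1_binary(y_true, y_pred, positive=c) for c in labels) / len(labels)


# Illustrative labels only, not data from the study.
y_true = [1, 1, 0, 0]
y_pred = [1, 0, 0, 0]
binary_score = f1_binary(y_true, y_pred)  # F1 of the defective class
macro_score = f1_macro(y_true, y_pred)    # mean F1 over classes 0 and 1
```

With macro averaging each class counts equally, so a rare defect class weighs as much as the majority class. A weighted average would change the multi-class numbers.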

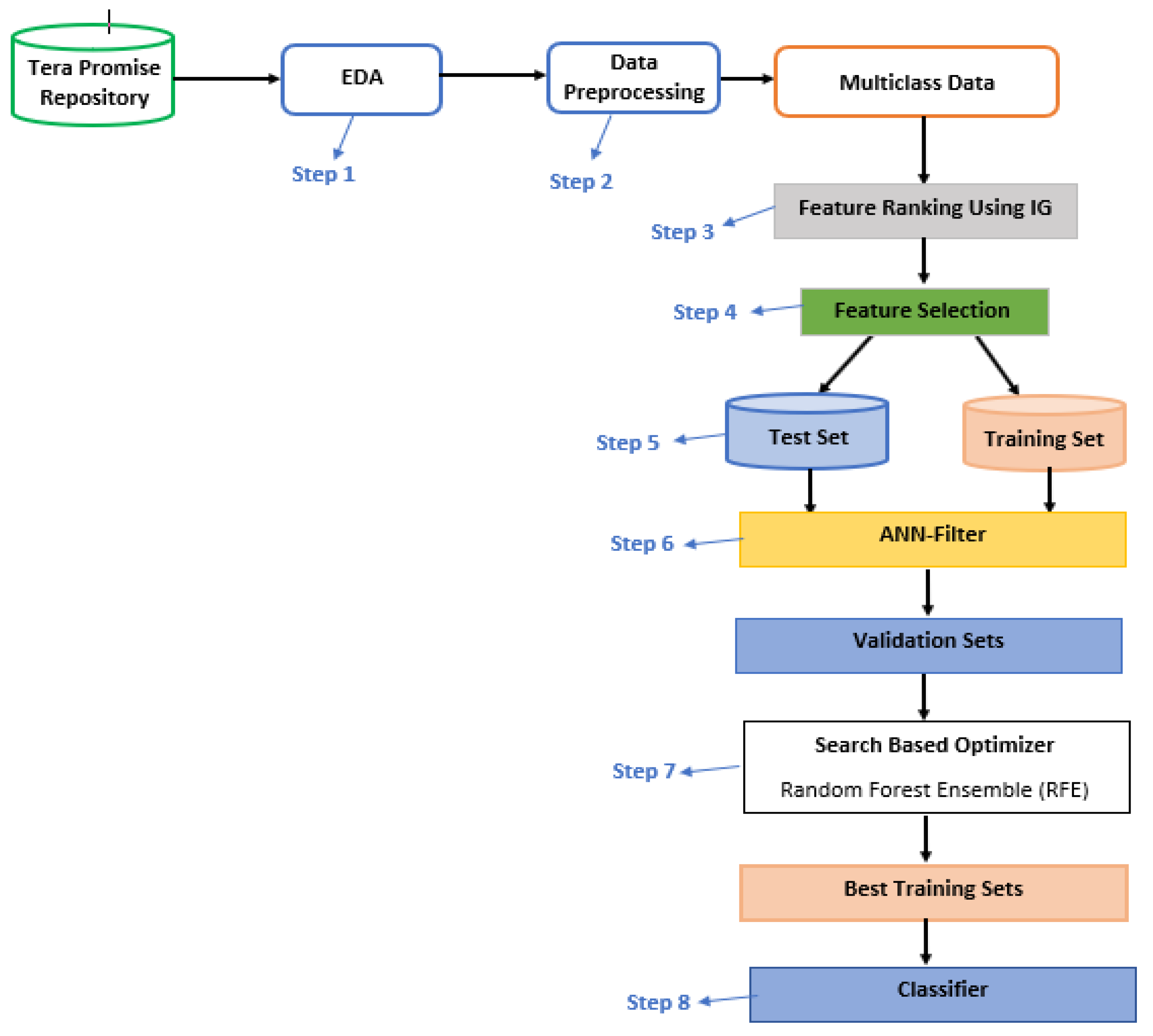

3. Research Methodology

3.1. Data Collection

3.2. Research Method

3.3. Research Design

- RQ1: What is the impact of feature selection for multi-class classification, compared with binary classification, on cross-project defect prediction, as measured by the F1-measure? For this question, the design is one factor with one treatment (1F1T), i.e., the KNN filter.

- RQ2: What is the impact of the ANN filter, compared with the KNN filter, on cross-project defect prediction, as measured by the F1-measure? For this question, the design is one factor with two treatments (1F2T), i.e., the KNN filter and the ANN filter.

- RQ3: What is the impact of the search-based optimizer, i.e., random forest ensemble compared with genetic algorithm, on cross-project defect prediction, as measured by the F1-measure? For this question, the design is one factor with two treatments (1F2T), i.e., random forest ensemble and genetic algorithm.

- RQ4: What is the impact of our classifier, compared with the Naïve Bayes classifier, on cross-project defect prediction, as measured by the F1-measure? For this question, the design is one factor with two treatments (1F2T), i.e., our classifier and the Naïve Bayes classifier.

4. Proposed Methodology

4.1. Promise Repository

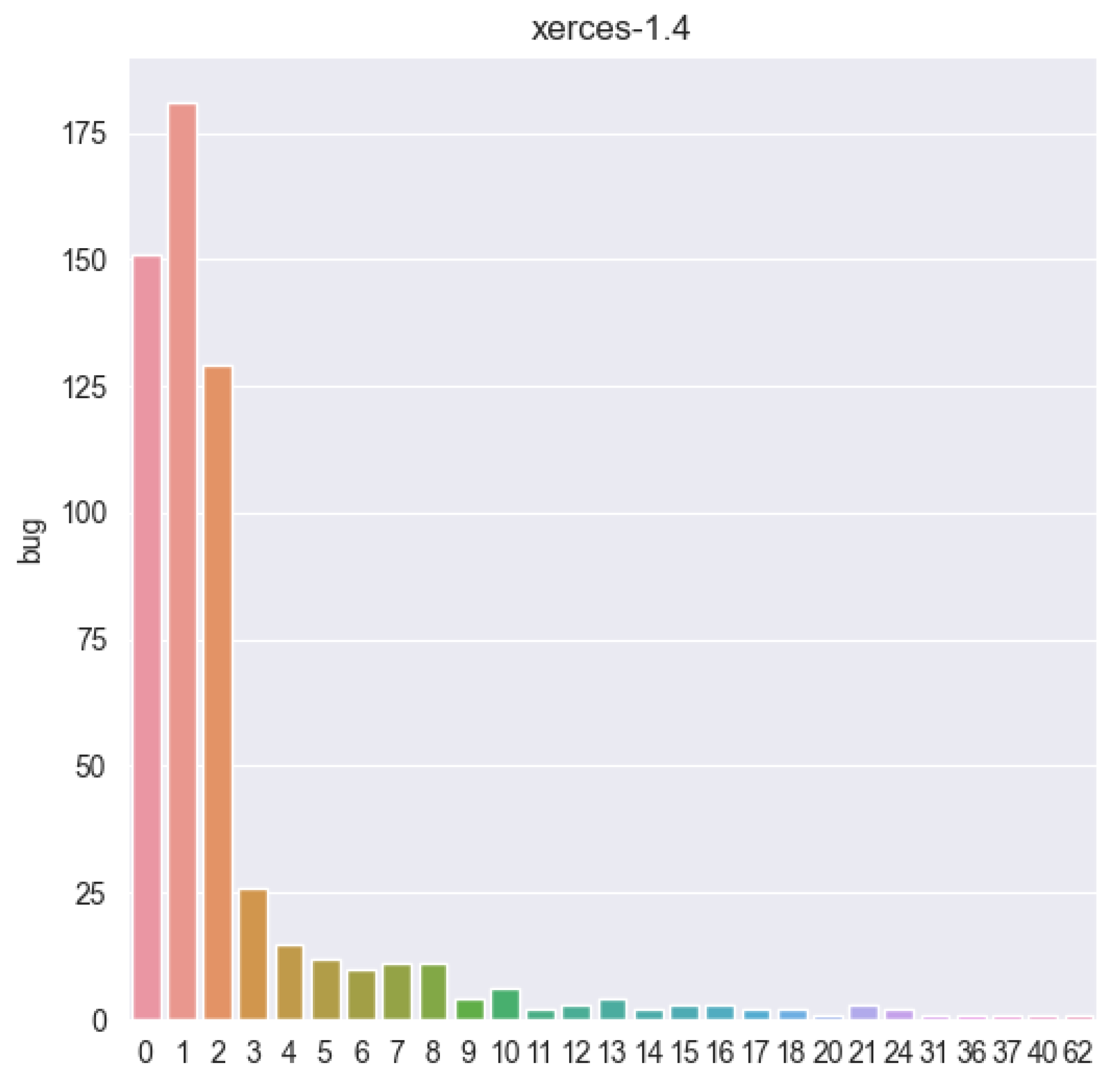

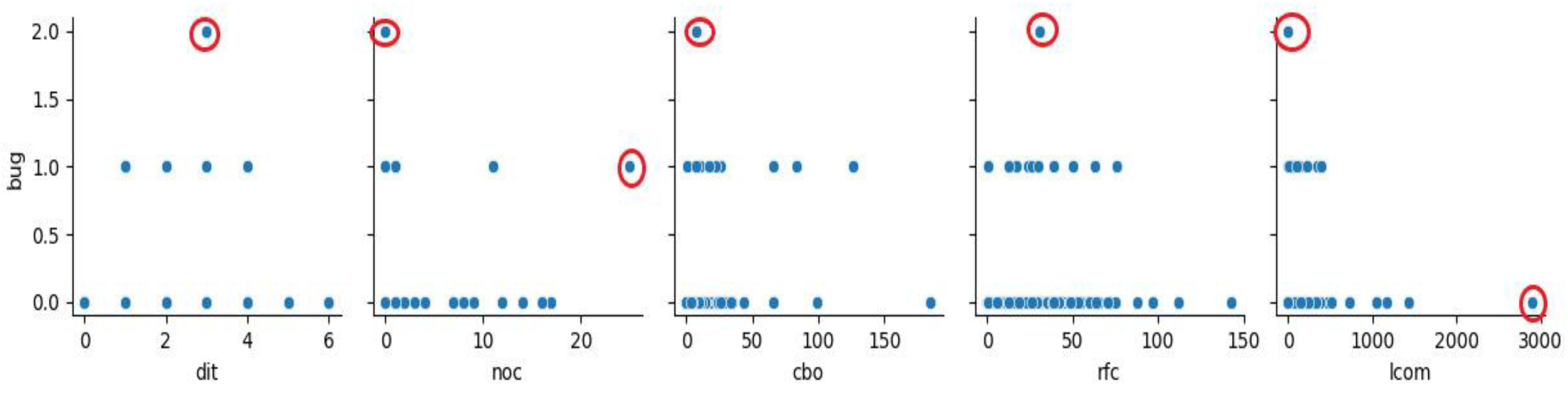

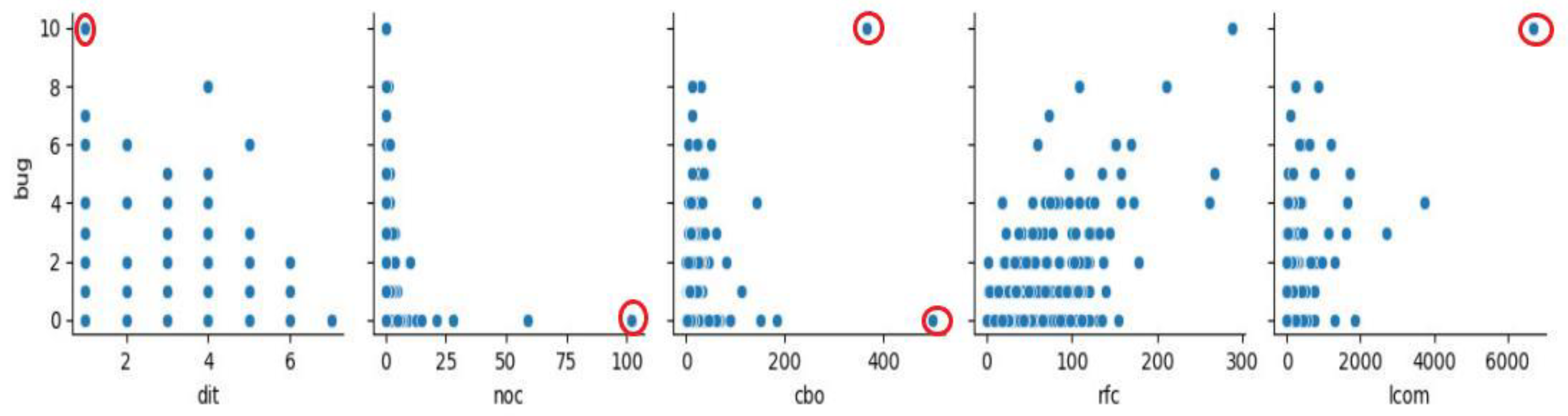

4.2. Exploratory Data Analysis

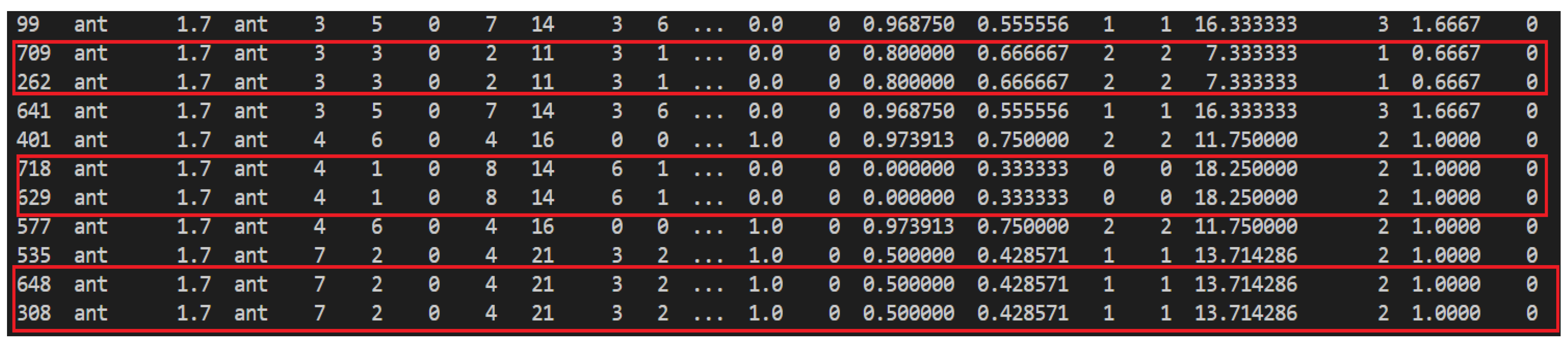

4.3. Data Preprocessing

4.4. Feature Ranking

4.5. Feature Selection

4.6. Search Based Optimizer

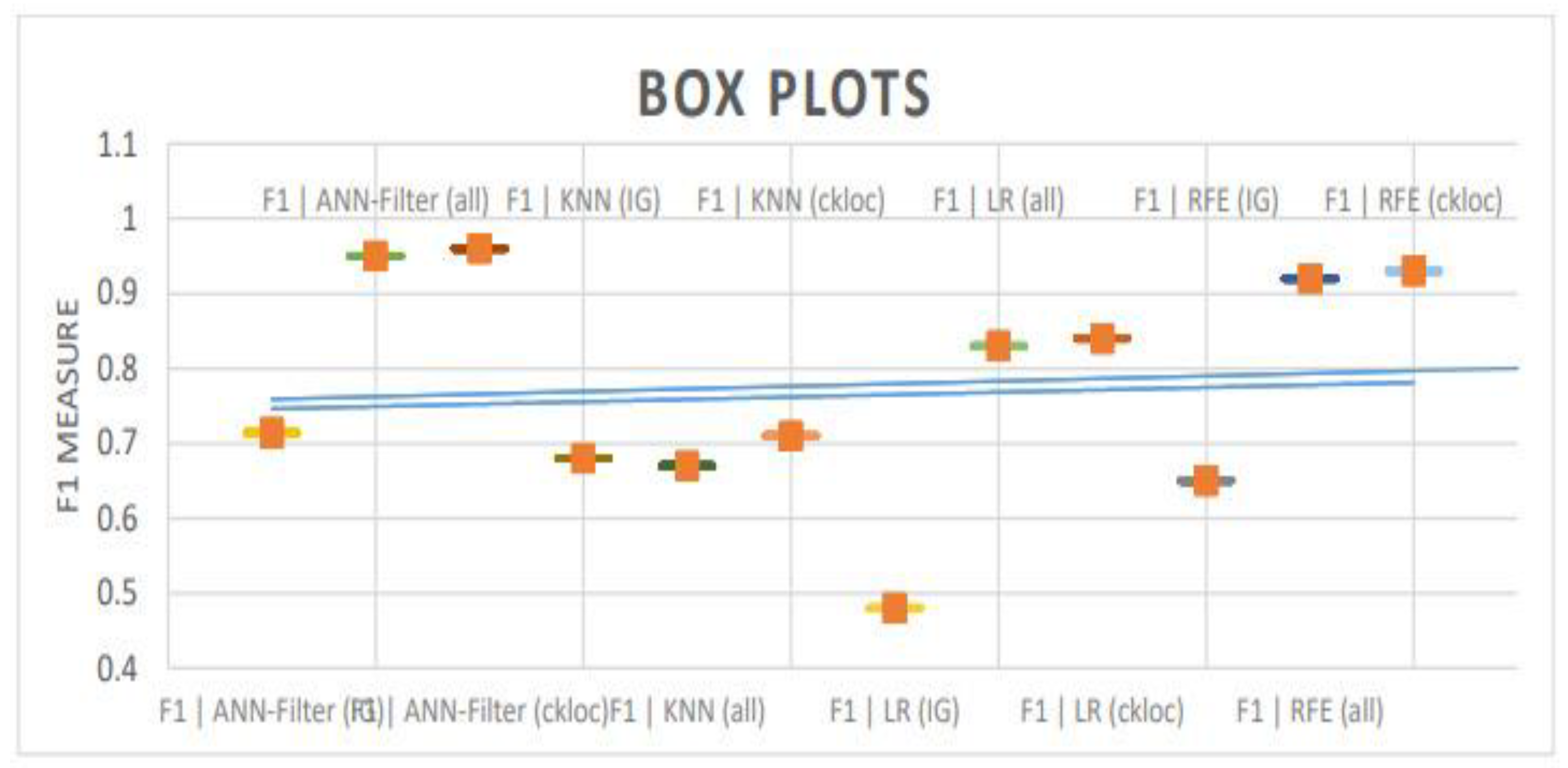

5. Research Validation

5.1. Cohen’s D

5.2. Glass’s Delta and Hedges’ G

5.3. Wilcoxon Test

5.4. Analysis of Validation Test

6. Threats to Validity

6.1. Construct Validity

6.2. External Validity

6.3. Conclusion Validity

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| SR # | Abbreviation | Attribute | Description |

|---|---|---|---|

| 1 | WMC | Weighted Methods per class | The number of methods used in a given class |

| 2 | DIT | Depth of Inheritance Tree | The maximum distance from a given class to the root of an inheritance tree |

| 3 | NOC | Number of Children | The number of children of a given class in an inheritance tree |

| 4 | CBO | Coupling between Object Classes | The number of classes that are coupled to a given class |

| 5 | RFC | Response for a Class | The number of distinct methods invoked by code in a given class |

| 6 | LCOM | Lack of Cohesion in Methods | The number of method pairs in a class that do not share access to any class attributes |

| 7 | CA | Afferent Coupling | The number of classes that depend upon a given class |

| 8 | CE | Efferent Coupling | The number of classes that a given class depends upon |

| 9 | NPM | Number of Public Methods | The number of public methods in a given class |

| 10 | LCOM3 | Normalized Version of LCOM | Another type of LCOM metric, proposed by Henderson-Sellers |

| 11 | LOC | Lines of Code | The number of lines of code in a given class |

| 12 | DAM | Data Access Metric | The ratio of the number of private/protected attributes to the total number of attributes in a given class |

| 13 | MOA | Measure of Aggregation | The number of attributes in a given class which are of user-defined types |

| 14 | MFA | Measure of Functional Abstraction | The number of methods inherited by a given class divided by the total number of methods that can be accessed by the member methods of the given class |

| 15 | CAM | Cohesion among Methods | The ratio of the sum of the number of different parameter types of every method in a given class to the product of the number of methods in the given class and the number of different method parameter types in the whole class |

| 16 | IC | Inheritance Coupling | The number of parent classes that a given class is coupled to |

| 17 | CBM | Coupling Between Methods | The total number of new or overwritten methods that all inherited methods in a given class are coupled to |

| 18 | AMC | Average Method Complexity | The average size of methods in a given class |

| 19 | MAX_CC | Maximum Values of Methods in the same Class | The maximum McCabe’s cyclomatic complexity (CC) score of methods in a given class |

| 20 | AVG_CC | Mean Values of Methods in the same class | The arithmetic mean of the McCabe’s cyclomatic complexity (CC) scores of methods in a given class |

| SR # | Datasets | Info Gain Features |

|---|---|---|

| 1 | Ant-1.3 | rfc, ca, lcom3, cam, avg_cc |

| 2 | Ant-1.4 | ce, loc, moa |

| 3 | Ant-1.5 | wmc, cbo, rfc, ce, cbm |

| 4 | Ant-1.6 | rfc, lcom3, cam, cbm, amc, avg_cc |

| 5 | Ant-1.7 | cbo, ce, loc, cbm, amc, avg_cc |

| 6 | Camel-1.0 | cbo, lcom, ca, npm, mfa |

| 7 | Camel-1.2 | ca, ce, npm, mfa, cbm |

| 8 | Camel-1.4 | rfc, ce, lcom3, loc, mfa, avg_cc |

| 9 | Camel-1.6 | dit, lcom, ca, ce, amc, max_cc |

| 10 | Ivy-1.1 | lcom, ca, amc, max_cc |

| 11 | Ivy-1.4 | ca, ce, lcom3, loc, cam |

| 12 | Ivy-2.0 | cbo, lcom, npm, ic, max_cc |

| 13 | Jedit-3.5 | cbo, rfc, ca, ce, moa, max_cc |

| 14 | Jedit-4.0 | lcom, ca, lcom3, loc, moa, cam |

| 15 | Jedit-4.1 | rfc, loc, amc, avg_cc |

| 16 | Jedit-4.2 | rfc, npm, dam, mfa |

| 17 | Jedit-4.3 | ca, ce, npm, loc, moa |

| 18 | Log4j-1.0 | lcom, npm, loc, moa |

| 19 | Log4j-1.1 | wmc, cbo, loc, mfa |

| 20 | Log4j-1.2 | rfc, ca, npm, mfa, ic, cbm, amc |

| 21 | Lucene-2.0 | noc, rfc, moa, mfa, cam, amc |

| 22 | Lucene-2.2 | ca, npm, lcom3, loc, moa, amc |

| 23 | Lucene-2.4 | rfc, ca, ce, lcom3, dam, amc |

| 24 | Poi-1.5 | cbo, rfc, lcom3, loc, cam |

| 25 | Poi-2.0 | wmc, cbo, lcom, loc, mfa, amc, max_cc |

| 26 | Poi-2.5 | loc, dam, cam, cbm, amc |

| 27 | Poi-3.0 | cbo, ce, lcom3, cbm, amc, avg_cc |

| 28 | Synapse-1.0 | dit, rfc, lcom3, mfa, cam |

| 29 | Synapse-1.1 | cbo, rfc, ca, npm, mfa, avg_cc |

| 30 | Synapse-1.2 | rfc, lcom, loc, cbm, amc, avg_cc |

| 31 | Velocity-1.4 | dit, ce, cam, amc, max_cc |

| 32 | Velocity-1.5 | noc, lcom, loc, mfa, cam |

| 33 | Velocity-1.6 | cbo, lcom3, mfa, cam, amc, avg_cc |

| 34 | Xalan-2.4 | cbo, rfc, ca, loc, amc |

| 35 | Xalan-2.5 | lcom, loc, cam, cbm |

| 36 | Xalan-2.6 | rfc, loc, mfa, amc, max_cc |

| 37 | Xalan-2.7 | rfc, loc, mfa, amc, max_cc |

| 38 | Xerces-1.2 | noc, cbo, rfc, npm, moa, cam |

| 39 | Xerces-1.3 | cbo, ca, loc, dam, moa |

| 40 | Xerces-1.4 | cbo, ca, ce, loc, mfa, avg_cc |

| 41 | Xerces-init | wmc, cbo, loc, dam, moa, amc, avg_cc |
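
The table above lists the features retained by information gain (IG) for each dataset. As a rough illustration of how such a ranking can be produced, the sketch below scores each numeric metric by the entropy reduction it gives over the class label. The equal-width binning, the number of bins, and the function names are assumptions made for this example and are not taken from the study.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((c / total) * math.log2(c / total) for c in Counter(labels).values())


def information_gain(feature_values, labels, bins=2):
    """IG of a numeric feature after equal-width binning (assumed discretization)."""
    lo, hi = min(feature_values), max(feature_values)
    width = (hi - lo) / bins or 1.0  # constant feature: put everything in one bin
    binned = [min(int((v - lo) / width), bins - 1) for v in feature_values]
    groups = {}
    for b, y in zip(binned, labels):
        groups.setdefault(b, []).append(y)
    conditional = sum(len(g) / len(labels) * entropy(g) for g in groups.values())
    return entropy(labels) - conditional


def rank_features(rows, labels, names):
    """Return feature names sorted from highest to lowest information gain."""
    scores = {
        name: information_gain([row[i] for row in rows], labels)
        for i, name in enumerate(names)
    }
    return sorted(scores, key=scores.get, reverse=True)


# Illustrative rows only (columns: loc, noc), not data from the PROMISE datasets.
rows = [(10, 1), (20, 2), (200, 1), (300, 2)]
labels = [0, 0, 1, 1]
ranking = rank_features(rows, labels, ["loc", "noc"])
```

In this toy input `loc` separates the two classes perfectly while `noc` carries no information, so `loc` ranks first. A cutoff on the score or on the rank would then give a per-dataset subset like the ones listed in the table.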

| SR # | Datasets | Multi-Class, ANN (Artificial Neural Network) Filter: ALL | Multi-Class, ANN Filter: CKLOC | Multi-Class, ANN Filter: IG | Binary-Class, KNN (K-Nearest Neighbor) Filter: ALL | Binary-Class, KNN Filter: CKLOC | Binary-Class, KNN Filter: IG |

|---|---|---|---|---|---|---|---|

| 1 | Ant-1.3 | 0.88 | 0.89 | 0.87 | 0.37 | 0.44 | 0.48 |

| 2 | Ant-1.4 | 0.66 | 0.68 | 0.70 | 0.21 | 0.18 | 0.27 |

| 3 | Ant-1.5 | 0.87 | 0.85 | 0.86 | 0.31 | 0.44 | 0.33 |

| 4 | Ant-1.6 | 0.67 | 0.71 | 0.67 | 0.41 | 0.42 | 0.45 |

| 5 | Ant-1.7 | 0.75 | 0.76 | 0.75 | 0.49 | 0.42 | 0.48 |

| 6 | Camel-1.0 | 0.74 | 0.66 | 0.94 | 0.18 | 0.23 | 0.12 |

| 7 | Camel-1.2 | 0.59 | 0.58 | 0.58 | 0.27 | 0.23 | 0.24 |

| 8 | Camel-1.4 | 0.81 | 0.83 | 0.82 | 0.28 | 0.23 | 0.26 |

| 9 | Camel-1.6 | 0.79 | 0.78 | 0.79 | 0.21 | 0.21 | 0.22 |

| 10 | Ivy-1.1 | 0.60 | 0.65 | 0.43 | 0.37 | 0.22 | 0.22 |

| 11 | Ivy-1.4 | 0.89 | 0.88 | 0.88 | 0.31 | 0.12 | 0.18 |

| 12 | Ivy-2.0 | 0.91 | 0.88 | 0.88 | 0.36 | 0.43 | 0.41 |

| 13 | Jedit-3.5 | 0.78 | 0.75 | 0.68 | 0.33 | 0.22 | 0.30 |

| 14 | Jedit-4.0 | 0.79 | 0.80 | 0.79 | 0.42 | 0.30 | 0.36 |

| 15 | Jedit-4.1 | 0.83 | 0.87 | 0.81 | 0.49 | 0.41 | 0.40 |

| 16 | Jedit-4.2 | 0.89 | 0.89 | 0.87 | 0.44 | 0.37 | 0.42 |

| 17 | Jedit-4.3 | 0.67 | 0.72 | 0.97 | 0.09 | 0.16 | 0.15 |

| 18 | Log4j-1.0 | 0.59 | 0.60 | 0.67 | 0.51 | 0.39 | 0.34 |

| 19 | Log4j-1.1 | 0.55 | 0.58 | 0.55 | 0.57 | 0.50 | 0.46 |

| 20 | Log4j-1.2 | 0.35 | 0.43 | 0.42 | 0.21 | 0.13 | 0.16 |

| 21 | Lucene-2.0 | 0.50 | 0.46 | 0.51 | 0.44 | 0.32 | 0.38 |

| 22 | Lucene-2.2 | 0.38 | 0.36 | 0.36 | 0.28 | 0.22 | 0.23 |

| 23 | Lucene-2.4 | 0.29 | 0.37 | 0.38 | 0.35 | 0.21 | 0.25 |

| 24 | Poi-1.5 | 0.75 | 0.78 | 0.68 | 0.31 | 0.21 | 0.21 |

| 25 | Poi-2.0 | 0.85 | 0.84 | 0.85 | 0.26 | 0.19 | 0.23 |

| 26 | Poi-2.5 | 0.65 | 0.64 | 0.53 | 0.23 | 0.16 | 0.17 |

| 27 | Poi-3.0 | 0.66 | 0.63 | 0.64 | 0.26 | 0.18 | 0.19 |

| 28 | Synapse-1.0 | 0.78 | 0.79 | 0.84 | 0.42 | 0.31 | 0.41 |

| 29 | Synapse-1.1 | 0.63 | 0.64 | 0.63 | 0.46 | 0.31 | 0.44 |

| 30 | Synapse-1.2 | 0.57 | 0.55 | 0.57 | 0.56 | 0.31 | 0.43 |

| 31 | Velocity-1.4 | 0.49 | 0.46 | 0.47 | 0.18 | 0.08 | 0.13 |

| 32 | Velocity-1.5 | 0.54 | 0.51 | 0.51 | 0.22 | 0.11 | 0.18 |

| 33 | Velocity-1.6 | 0.60 | 0.63 | 0.66 | 0.29 | 0.20 | 0.31 |

| 34 | Xalan-2.4 | 0.85 | 0.85 | 0.84 | 0.39 | 0.31 | 0.34 |

| 35 | Xalan-2.5 | 0.61 | 0.56 | 0.58 | 0.37 | 0.30 | 0.30 |

| 36 | Xalan-2.6 | 0.67 | 0.62 | 0.61 | 0.51 | 0.40 | 0.41 |

| 37 | Xalan-2.7 | 0.82 | 0.81 | 0.81 | 0.40 | 0.24 | 0.25 |

| 38 | Xerces-1.2 | 0.80 | 0.82 | 0.79 | 0.24 | 0.17 | 0.20 |

| 39 | Xerces-1.3 | 0.92 | 0.93 | 0.91 | 0.33 | 0.29 | 0.28 |

| 40 | Xerces-1.4 | 0.74 | 0.73 | 0.68 | 0.31 | 0.18 | 0.19 |

| 41 | Xerces-init | 0.55 | 0.56 | 0.55 | 0.31 | 0.25 | 0.27 |

| | Mean | 0.689268 | 0.691098 | 0.69122 | 0.344341 | 0.273024 | 0.29778 |

| | Median | 0.67 | 0.71 | 0.68 | 0.331 | 0.239 | 0.277 |

| SR # | Datasets | Multi-Class, ANN (Artificial Neural Network) Filter: ALL | Multi-Class, ANN Filter: CKLOC | Multi-Class, ANN Filter: IG | Multi-Class, KNN (K-Nearest Neighbor) Filter: ALL | Multi-Class, KNN Filter: CKLOC | Multi-Class, KNN Filter: IG |

|---|---|---|---|---|---|---|---|

| 1 | Ant-1.3 | 0.95 | 0.57 | 0.50 | 0.88 | 0.89 | 0.87 |

| 2 | Ant-1.4 | 0.94 | 0.85 | 0.33 | 0.66 | 0.68 | 0.70 |

| 3 | Ant-1.5 | 0.93 | 0.90 | 0.75 | 0.87 | 0.85 | 0.86 |

| 4 | Ant-1.6 | 0.92 | 0.94 | 0.72 | 0.67 | 0.71 | 0.67 |

| 5 | Ant-1.7 | 0.99 | 0.97 | 0.92 | 0.75 | 0.76 | 0.75 |

| 6 | Camel-1.0 | 0.93 | 0.96 | 0.85 | 0.74 | 0.66 | 0.94 |

| 7 | Camel-1.2 | 0.97 | 0.92 | 0.53 | 0.59 | 0.58 | 0.58 |

| 8 | Camel-1.4 | 0.97 | 0.99 | 0.68 | 0.81 | 0.83 | 0.82 |

| 9 | Camel-1.6 | 0.97 | 0.98 | 0.83 | 0.79 | 0.78 | 0.79 |

| 10 | Ivy-1.1 | 0.90 | 0.83 | 0.71 | 0.60 | 0.65 | 0.43 |

| 11 | Ivy-1.4 | 0.93 | 0.95 | 0.92 | 0.89 | 0.88 | 0.88 |

| 12 | Ivy-2.0 | 0.97 | 0.98 | 0.92 | 0.91 | 0.88 | 0.88 |

| 13 | Jedit-3.5 | 0.88 | 0.96 | 0.49 | 0.78 | 0.75 | 0.68 |

| 14 | Jedit-4.0 | 0.86 | 0.91 | 0.88 | 0.79 | 0.80 | 0.79 |

| 15 | Jedit-4.1 | 0.90 | 0.96 | 0.90 | 0.83 | 0.87 | 0.81 |

| 16 | Jedit-4.2 | 0.96 | 0.96 | 0.57 | 0.89 | 0.89 | 0.87 |

| 17 | Jedit-4.3 | 0.97 | 0.98 | 0.87 | 0.67 | 0.72 | 0.97 |

| 18 | Log4j-1.0 | 0.92 | 0.96 | 0.62 | 0.59 | 0.60 | 0.67 |

| 19 | Log4j-1.1 | 0.90 | 0.95 | 0.57 | 0.55 | 0.58 | 0.55 |

| 20 | Log4j-1.2 | 0.85 | 0.95 | 0.33 | 0.35 | 0.43 | 0.42 |

| 21 | Lucene-2.0 | 0.92 | 0.90 | 0.18 | 0.50 | 0.46 | 0.51 |

| 22 | Lucene-2.2 | 0.93 | 0.96 | 0.50 | 0.38 | 0.36 | 0.36 |

| 23 | Lucene-2.4 | 0.96 | 0.98 | 0.59 | 0.29 | 0.37 | 0.38 |

| 24 | Poi-1.5 | 0.96 | 0.96 | 0.23 | 0.75 | 0.78 | 0.68 |

| 25 | Poi-2.0 | 0.97 | 0.96 | 0.85 | 0.85 | 0.84 | 0.85 |

| 26 | Poi-2.5 | 0.95 | 0.95 | 0.31 | 0.65 | 0.64 | 0.53 |

| 27 | Poi-3.0 | 0.91 | 0.93 | 0.95 | 0.66 | 0.63 | 0.64 |

| 28 | Synapse-1.0 | 0.89 | 0.96 | 0.97 | 0.78 | 0.79 | 0.84 |

| 29 | Synapse-1.1 | 0.90 | 0.95 | 0.53 | 0.63 | 0.64 | 0.63 |

| 30 | Synapse-1.2 | 0.98 | 0.98 | 0.54 | 0.57 | 0.55 | 0.57 |

| 31 | Velocity-1.4 | 0.97 | 0.96 | 0.71 | 0.49 | 0.46 | 0.47 |

| 32 | Velocity-1.5 | 0.96 | 0.96 | 0.33 | 0.54 | 0.51 | 0.50 |

| 33 | Velocity-1.6 | 0.97 | 0.87 | 0.50 | 0.60 | 0.63 | 0.66 |

| 34 | Xalan-2.4 | 0.93 | 0.98 | 0.85 | 0.85 | 0.85 | 0.84 |

| 35 | Xalan-2.5 | 0.98 | 0.99 | 0.88 | 0.61 | 0.56 | 0.58 |

| 36 | Xalan-2.6 | 0.97 | 0.98 | 0.90 | 0.67 | 0.62 | 0.61 |

| 37 | Xalan-2.7 | 0.98 | 0.98 | 0.80 | 0.82 | 0.81 | 0.81 |

| 38 | Xerces-1.2 | 0.98 | 0.89 | 0.80 | 0.80 | 0.82 | 0.79 |

| 39 | Xerces-1.3 | 0.94 | 0.97 | 0.89 | 0.92 | 0.93 | 0.91 |

| 40 | Xerces-1.4 | 0.99 | 0.97 | 0.94 | 0.74 | 0.73 | 0.68 |

| 41 | Xerces-init | 0.87 | 0.94 | 0.58 | 0.55 | 0.56 | 0.55 |

| | Mean | 0.943732 | 0.942707 | 0.678537 | 0.689268 | 0.691098 | 0.69122 |

| | Median | 0.95 | 0.96 | 0.714 | 0.67 | 0.71 | 0.68 |

| SR # | Datasets | Random Forest Ensemble (RFE): ALL | RFE: CKLOC | RFE: IG | Genetic Algorithm (GA): ALL | GA: CKLOC | GA: IG |

|---|---|---|---|---|---|---|---|

| 1 | Ant-1.3 | 0.88 | 0.80 | 0.54 | 0.38 | 0.43 | 0.41 |

| 2 | Ant-1.4 | 0.88 | 0.78 | 0.59 | 0.44 | 0.39 | 0.41 |

| 3 | Ant-1.5 | 0.97 | 0.60 | 0.72 | 0.31 | 0.35 | 0.35 |

| 4 | Ant-1.6 | 0.92 | 0.86 | 0.69 | 0.50 | 0.52 | 0.55 |

| 5 | Ant-1.7 | 0.96 | 0.90 | 0.90 | 0.45 | 0.48 | 0.50 |

| 6 | Camel-1.0 | 0.96 | 0.93 | 0.58 | 0.20 | 0.19 | 0.20 |

| 7 | Camel-1.2 | 0.96 | 0.90 | 0.78 | 0.52 | 0.58 | 0.51 |

| 8 | Camel-1.4 | 0.91 | 0.93 | 0.57 | 0.39 | 0.41 | 0.38 |

| 9 | Camel-1.6 | 0.95 | 0.95 | 0.52 | 0.40 | 0.44 | 0.38 |

| 10 | Ivy-1.1 | 0.84 | 0.60 | 0.84 | 0.66 | 0.70 | 0.60 |

| 11 | Ivy-1.4 | 0.94 | 0.93 | 0.78 | 0.24 | 0.27 | 0.27 |

| 12 | Ivy-2.0 | 0.95 | 0.93 | 0.53 | 0.36 | 0.31 | 0.41 |

| 13 | Jedit-3.5 | 0.87 | 0.90 | 0.18 | 0.56 | 0.61 | 0.60 |

| 14 | Jedit-4.0 | 0.89 | 0.88 | 0.65 | 0.46 | 0.50 | 0.51 |

| 15 | Jedit-4.1 | 0.89 | 0.83 | 0.53 | 0.52 | 0.52 | 0.56 |

| 16 | Jedit-4.2 | 0.92 | 0.85 | 0.73 | 0.38 | 0.36 | 0.43 |

| 17 | Jedit-4.3 | 0.89 | 0.88 | 0.84 | 0.11 | 0.09 | 0.12 |

| 18 | Log4j-1.0 | 0.85 | 0.84 | 0.85 | 0.49 | 0.56 | 0.51 |

| 19 | Log4j-1.1 | 0.90 | 0.95 | 0.85 | 0.58 | 0.63 | 0.61 |

| 20 | Log4j-1.2 | 0.67 | 0.93 | 0.48 | 0.74 | 0.69 | 0.79 |

| 21 | Lucene-2.0 | 0.91 | 0.97 | 0.29 | 0.63 | 0.65 | 0.61 |

| 22 | Lucene-2.2 | 0.96 | 0.98 | 0.69 | 0.64 | 0.68 | 0.61 |

| 23 | Lucene-2.4 | 0.96 | 0.97 | 0.76 | 0.69 | 0.71 | 0.66 |

| 24 | Poi-1.5 | 0.88 | 0.88 | 0.40 | 0.68 | 0.70 | 0.74 |

| 25 | Poi-2.0 | 0.93 | 0.97 | 0.80 | 0.28 | 0.31 | 0.32 |

| 26 | Poi-2.5 | 0.99 | 0.98 | 0.55 | 0.76 | 0.76 | 0.80 |

| 27 | Poi-3.0 | 0.98 | 0.98 | 0.90 | 0.76 | 0.80 | 0.79 |

| 28 | Synapse-1.0 | 0.95 | 0.91 | 0.97 | 0.29 | 0.33 | 0.34 |

| 29 | Synapse-1.1 | 0.94 | 0.96 | 0.60 | 0.46 | 0.52 | 0.51 |

| 30 | Synapse-1.2 | 0.97 | 0.98 | 0.58 | 0.55 | 0.57 | 0.57 |

| 31 | Velocity-1.4 | 0.92 | 0.91 | 0.75 | 0.57 | 0.64 | 0.72 |

| 32 | Velocity-1.5 | 0.95 | 0.94 | 0.64 | 0.63 | 0.60 | 0.71 |

| 33 | Velocity-1.6 | 0.90 | 0.94 | 0.27 | 0.51 | 0.53 | 0.56 |

| 34 | Xalan-2.4 | 0.91 | 0.95 | 0.84 | 0.39 | 0.38 | 0.40 |

| 35 | Xalan-2.5 | 0.92 | 0.92 | 0.20 | 0.57 | 0.59 | 0.58 |

| 36 | Xalan-2.6 | 0.89 | 0.92 | 0.31 | 0.52 | 0.58 | 0.59 |

| 37 | Xalan-2.7 | 0.93 | 0.95 | 0.31 | 0.81 | 0.84 | 0.78 |

| 38 | Xerces-1.2 | 0.92 | 0.94 | 0.77 | 0.24 | 0.27 | 0.28 |

| 39 | Xerces-1.3 | 0.97 | 0.97 | 0.88 | 0.42 | 0.35 | 0.40 |

| 40 | Xerces-1.4 | 0.98 | 0.93 | 0.84 | 0.66 | 0.65 | 0.71 |

| 41 | Xerces-init | 0.89 | 0.89 | 0.52 | 0.40 | 0.43 | 0.51 |

| | Mean | 0.918293 | 0.902780 | 0.634634 | 0.495878 | 0.515 | 0.524073 |

| | Median | 0.92 | 0.93 | 0.65 | 0.507 | 0.529 | 0.519 |

| SR # | Datasets | Our Classifier: ALL | Our Classifier: CKLOC | Our Classifier: IG | Benchmark Classifier (NB): ALL | NB: CKLOC | NB: IG |

|---|---|---|---|---|---|---|---|

| 1 | Ant-1.3 | 0.70 | 0.60 | 0.51 | 0.29 | 0.25 | 0.38 |

| 2 | Ant-1.4 | 0.66 | 0.71 | 0.13 | 0.19 | 0.13 | 0.20 |

| 3 | Ant-1.5 | 0.80 | 0.66 | 0.31 | 0.34 | 0.41 | 0.42 |

| 4 | Ant-1.6 | 0.78 | 0.75 | 0.48 | 0.42 | 0.43 | 0.46 |

| 5 | Ant-1.7 | 0.91 | 0.90 | 0.66 | 0.47 | 0.45 | 0.52 |

| 6 | Camel-1.0 | 0.83 | 0.88 | 0.56 | 0.34 | 0.34 | 0.19 |

| 7 | Camel-1.2 | 0.88 | 0.79 | 0.57 | 0.25 | 0.25 | 0.24 |

| 8 | Camel-1.4 | 0.88 | 0.86 | 0.55 | 0.21 | 0.22 | 0.26 |

| 9 | Camel-1.6 | 0.92 | 0.87 | 0.46 | 0.20 | 0.26 | 0.23 |

| 10 | Ivy-1.1 | 0.65 | 0.52 | 0.65 | 0.39 | 0.35 | 0.34 |

| 11 | Ivy-1.4 | 0.74 | 0.75 | 0.48 | 0.30 | 0.28 | 0.30 |

| 12 | Ivy-2.0 | 0.81 | 0.85 | 0.52 | 0.39 | 0.39 | 0.42 |

| 13 | Jedit-3.5 | 0.84 | 0.80 | 0.20 | 0.50 | 0.39 | 0.45 |

| 14 | Jedit-4.0 | 0.71 | 0.76 | 0.38 | 0.46 | 0.47 | 0.51 |

| 15 | Jedit-4.1 | 0.78 | 0.84 | 0.41 | 0.60 | 0.53 | 0.57 |

| 16 | Jedit-4.2 | 0.76 | 0.83 | 0.38 | 0.48 | 0.47 | 0.48 |

| 17 | Jedit-4.3 | 0.75 | 0.80 | 0.49 | 0.14 | 0.17 | 0.16 |

| 18 | Log4j-1.0 | 0.84 | 0.79 | 0.43 | 0.38 | 0.29 | 0.24 |

| 19 | Log4j-1.1 | 0.79 | 0.78 | 0.34 | 0.33 | 0.16 | 0.28 |

| 20 | Log4j-1.2 | 0.60 | 0.84 | 0.39 | 0.25 | 0.19 | 0.19 |

| 21 | Lucene-2.0 | 0.69 | 0.97 | 0.14 | 0.27 | 0.27 | 0.33 |

| 22 | Lucene-2.2 | 0.86 | 0.88 | 0.39 | 0.29 | 0.23 | 0.23 |

| 23 | Lucene-2.4 | 0.94 | 0.97 | 0.78 | 0.37 | 0.34 | 0.31 |

| 24 | Poi-1.5 | 0.88 | 0.88 | 0.25 | 0.38 | 0.30 | 0.33 |

| 25 | Poi-2.0 | 0.88 | 0.97 | 0.28 | 0.23 | 0.21 | 0.25 |

| 26 | Poi-2.5 | 0.91 | 0.93 | 0.48 | 0.35 | 0.26 | 0.34 |

| 27 | Poi-3.0 | 0.92 | 0.94 | 0.79 | 0.36 | 0.30 | 0.39 |

| 28 | Synapse-1.0 | 0.65 | 0.75 | 0.85 | 0.33 | 0.27 | 0.33 |

| 29 | Synapse-1.1 | 0.82 | 0.87 | 0.35 | 0.38 | 0.29 | 0.30 |

| 30 | Synapse-1.2 | 0.96 | 0.99 | 0.47 | 0.45 | 0.32 | 0.33 |

| 31 | Velocity-1.4 | 0.79 | 0.80 | 0.48 | 0.18 | 0.17 | 0.21 |

| 32 | Velocity-1.5 | 0.92 | 0.93 | 0.63 | 0.26 | 0.20 | 0.30 |

| 33 | Velocity-1.6 | 0.77 | 0.88 | 0.66 | 0.32 | 0.32 | 0.34 |

| 34 | Xalan-2.4 | 0.81 | 0.88 | 0.83 | 0.38 | 0.32 | 0.40 |

| 35 | Xalan-2.5 | 0.90 | 0.92 | 0.09 | 0.41 | 0.33 | 0.34 |

| 36 | Xalan-2.6 | 0.86 | 0.88 | 0.16 | 0.50 | 0.44 | 0.44 |

| 37 | Xalan-2.7 | 0.87 | 0.95 | 0.17 | 0.51 | 0.38 | 0.38 |

| 38 | Xerces-1.2 | 0.88 | 0.80 | 0.63 | 0.24 | 0.20 | 0.24 |

| 39 | Xerces-1.3 | 0.83 | 0.82 | 0.74 | 0.33 | 0.33 | 0.29 |

| 40 | Xerces-1.4 | 0.88 | 0.80 | 0.82 | 0.37 | 0.30 | 0.30 |

| 41 | Xerces-init | 0.79 | 0.70 | 0.23 | 0.35 | 0.37 | 0.36 |

| | Mean | 0.81561 | 0.83146341 | 0.466341 | 0.350659 | 0.31204878 | 0.334976 |

| | Median | 0.83 | 0.84 | 0.48 | 0.35 | 0.303 | 0.331 |

| Algorithm | Cohen’s d | Glass’s Delta | Hedges’ g |

|---|---|---|---|

| KNN (all) | 2.537 | 2.195 | 2.537 |

| KNN (ckloc) | 3.180 | 2.725 | 3.180 |

| KNN (IG) | 2.846 | 2.391 | 2.846 |

| ANN filter (all) | 2.232 | 7.037 | 2.232 |

| ANN filter (ckloc) | 2.111 | 3.605 | 2.111 |

| ANN filter (IG) | 0.064 | 0.056 | 0.064 |

| RFE (all) | 3.432 | 7.751 | 3.432 |

| RFE (ckloc) | 2.868 | 4.567 | 2.868 |

| RFE (IG) | 0.586 | 0.531 | 0.586 |

| Classifier (all) | 4.874 | 5.254 | 4.874 |

| Classifier (ckloc) | 5.302 | 5.148 | 5.302 |

| Classifier (IG) | 0.811 | 0.634 | 0.811 |
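
The three effect sizes in the table above all divide a difference in means by a standard deviation; they differ in which standard deviation is used and whether a small-sample correction is applied. The sketch below uses the textbook definitions. Which group serves as the control for Glass's delta, and which Hedges' g variant the study used, are assumptions here, and the function names are hypothetical.

```python
import math
from statistics import mean, stdev


def cohens_d(a, b):
    """Mean difference divided by the pooled sample standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * stdev(a) ** 2 + (nb - 1) * stdev(b) ** 2) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)


def glass_delta(treatment, control):
    """Mean difference divided by the control group's standard deviation only."""
    return (mean(treatment) - mean(control)) / stdev(control)


def hedges_g(a, b):
    """Cohen's d multiplied by the common small-sample correction factor."""
    correction = 1 - 3 / (4 * (len(a) + len(b)) - 9)
    return cohens_d(a, b) * correction


# Illustrative scores only, not values from the result tables.
group_a = [2.0, 4.0, 6.0]
group_b = [1.0, 2.0, 3.0]
d = cohens_d(group_a, group_b)
delta = glass_delta(group_a, group_b)
g = hedges_g(group_a, group_b)
```

With 41 datasets per group the correction factor in `hedges_g` is about 0.99, so this form would give a g slightly below d. The table reports identical values for Cohen's d and Hedges' g, which suggests the source may have used a variant without this correction.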

| Algorithm | p-Value (Wilcoxon Test) |

|---|---|

| KNN vs. ANN filter-all | 0.00364 |

| KNN vs. ANN filter-ckloc | 0.00217 |

| RFE vs. GA-all | 0.00261 |

| RFE vs. GA-ckloc | 0.00564 |

| RFE vs. GA-IG | 0.00013 |

| Classifier vs. NB-ckloc | 0.00024 |

| Classifier vs. NB-IG | 0.00128 |
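
The p-values above compare two treatments on the same datasets, which fits a paired test. The sketch below implements a two-sided Wilcoxon signed-rank test with the normal approximation, in plain Python. It assumes the signed-rank (paired) variant, drops zero differences, and applies no tie or continuity correction, so it is not expected to reproduce the reported p-values. The function name is hypothetical.

```python
import math


def wilcoxon_signed_rank(x, y):
    """Return (W, two-sided p) for paired samples via the normal approximation."""
    # Round to avoid spurious float noise when comparing differences.
    diffs = [round(a - b, 6) for a, b in zip(x, y)]
    diffs = [d for d in diffs if d != 0]  # zero differences are discarded
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)

    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mu) / sigma
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return w, p_value


# First five RFE (ALL) and GA (ALL) F1 scores from the results table, for illustration.
rfe_all = [0.88, 0.88, 0.97, 0.92, 0.96]
ga_all = [0.38, 0.44, 0.31, 0.50, 0.45]
w_stat, p_value = wilcoxon_signed_rank(rfe_all, ga_all)
```

The normal approximation is coarse for five pairs; it is more reasonable for the full set of 41 datasets, and an exact or tie-corrected implementation from a statistics library would be preferable in practice.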


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Faiz, R.b.; Shaheen, S.; Sharaf, M.; Rauf, H.T. Optimal Feature Selection through Search-Based Optimizer in Cross Project. Electronics 2023, 12, 514. https://doi.org/10.3390/electronics12030514
