Development of Evaluation Criteria for Robotic Process Automation (RPA) Solution Selection

Abstract

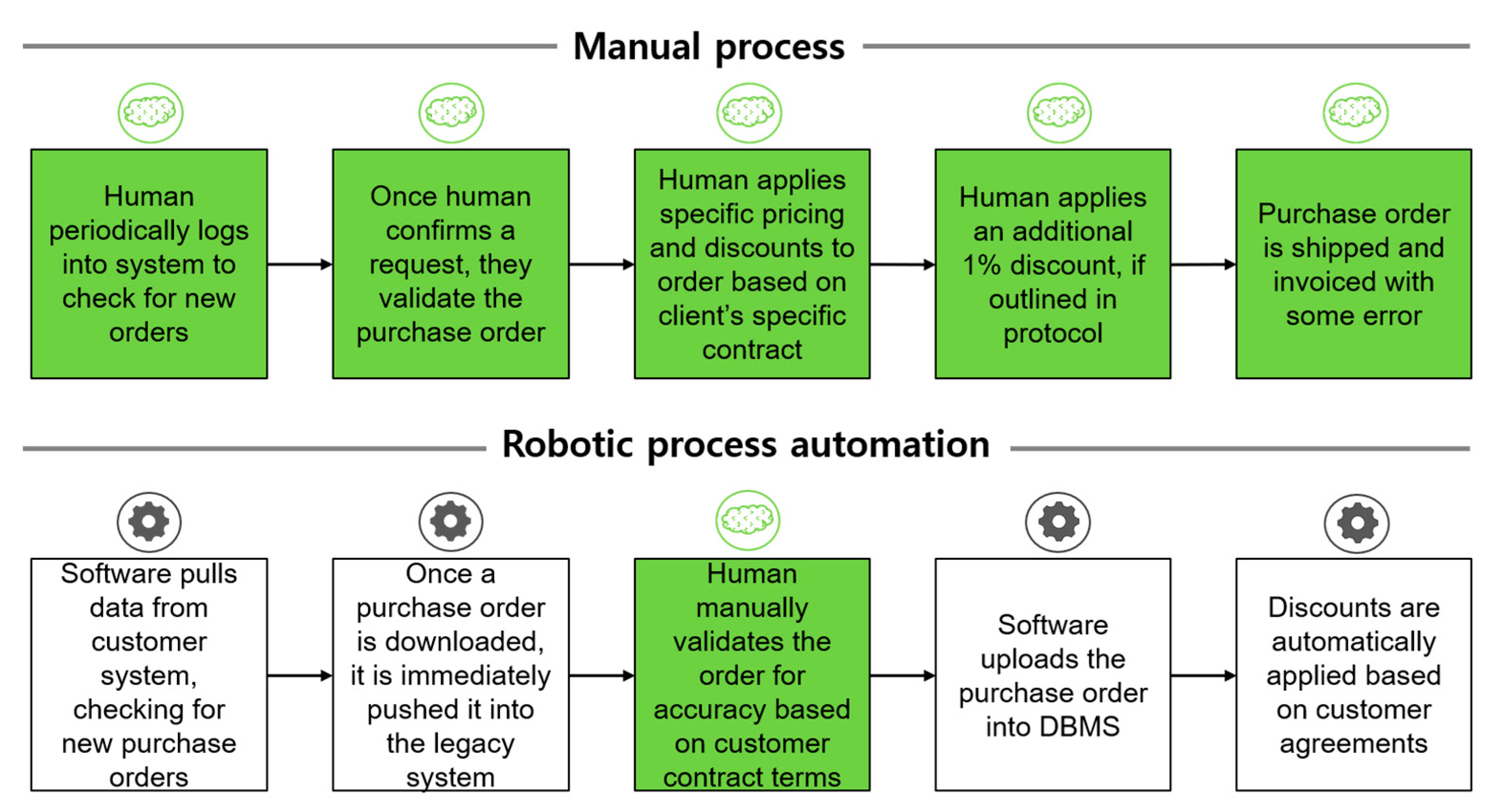

1. Introduction

2. Preliminary Research

2.1. Screen Scraping

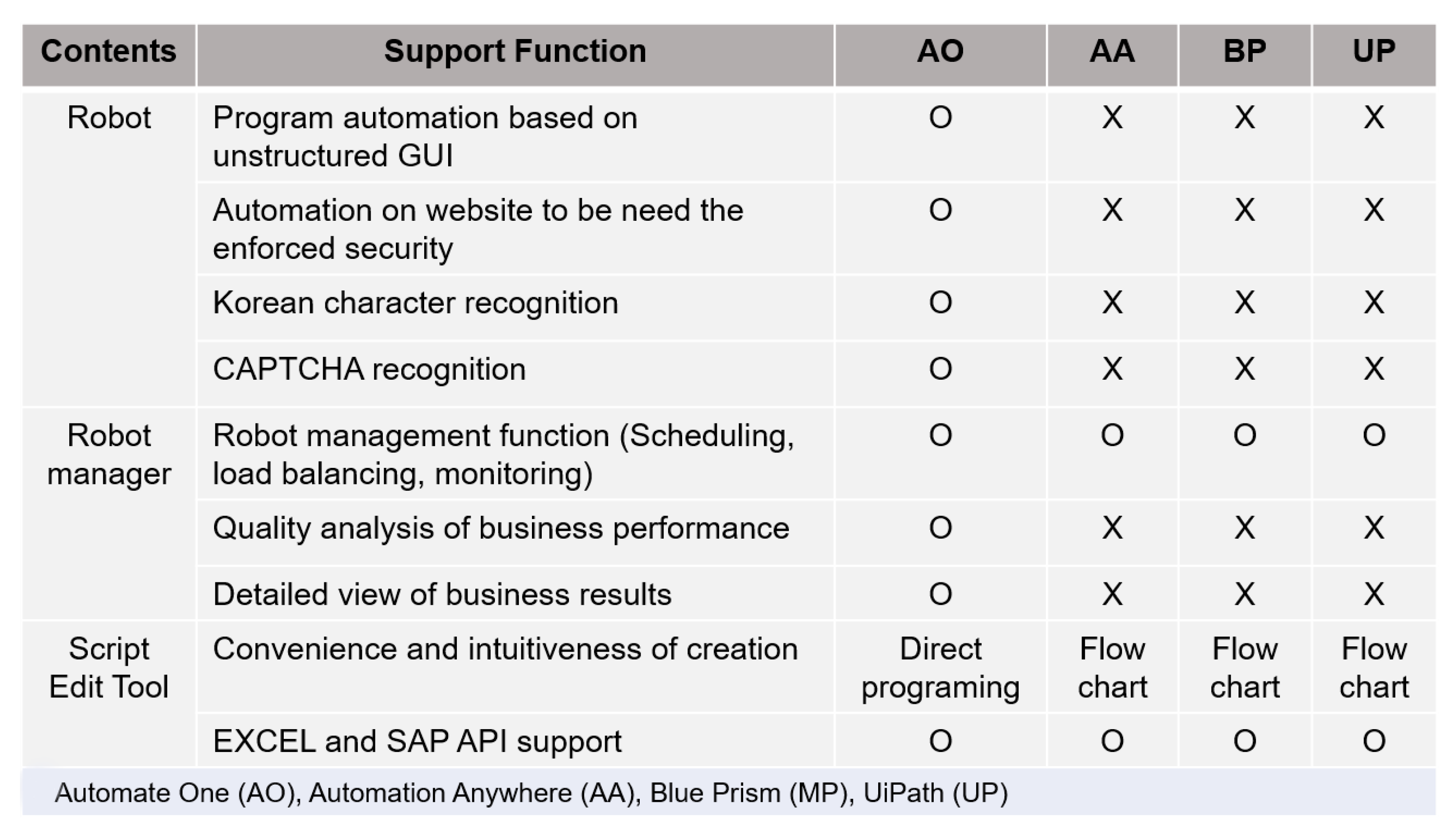

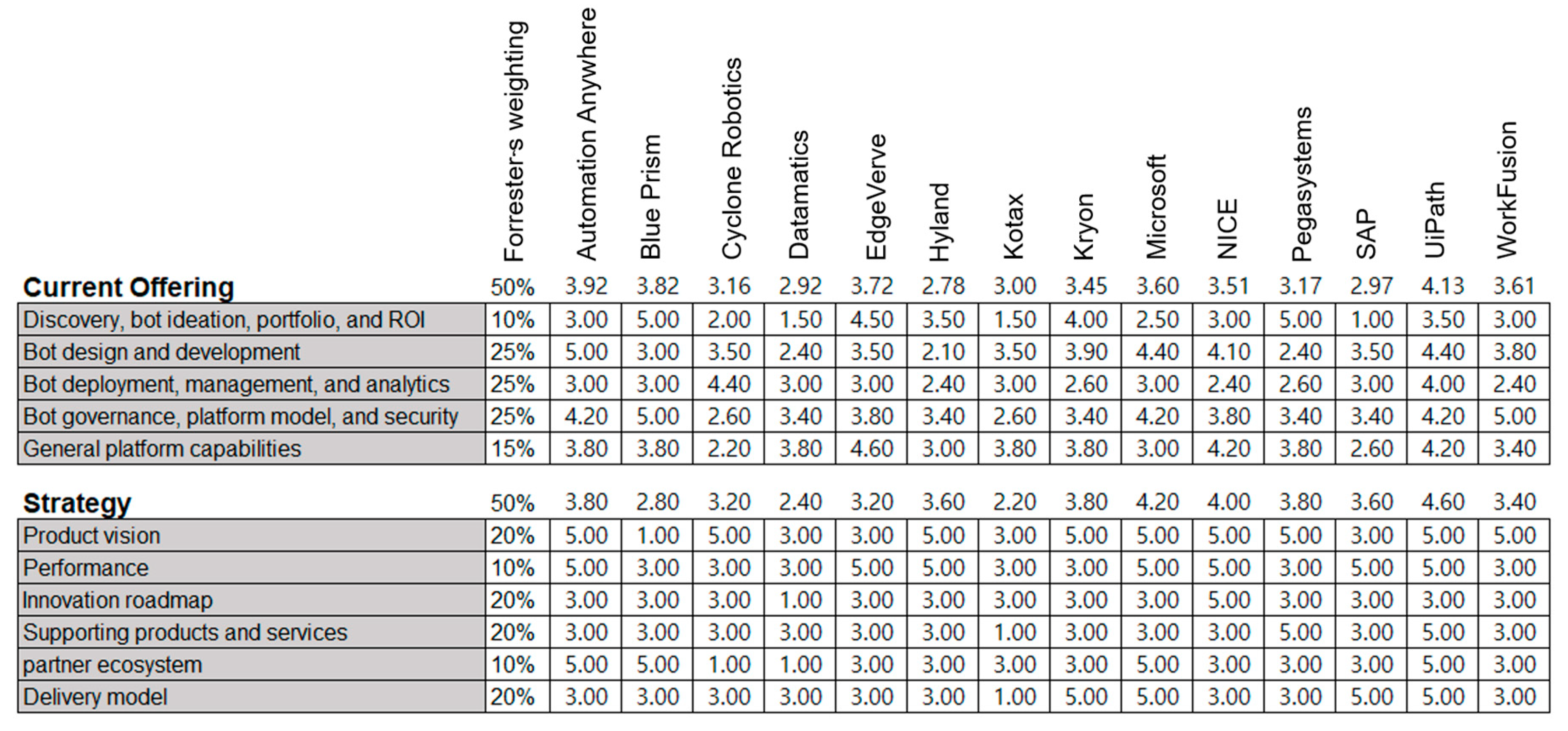

2.2. Comparative Studies on RPA Solutions

2.3. Studies on RPA Solution Evaluation Elements

2.4. Business Structural Optimisation Studies on Improving RPA Operational Efficiency

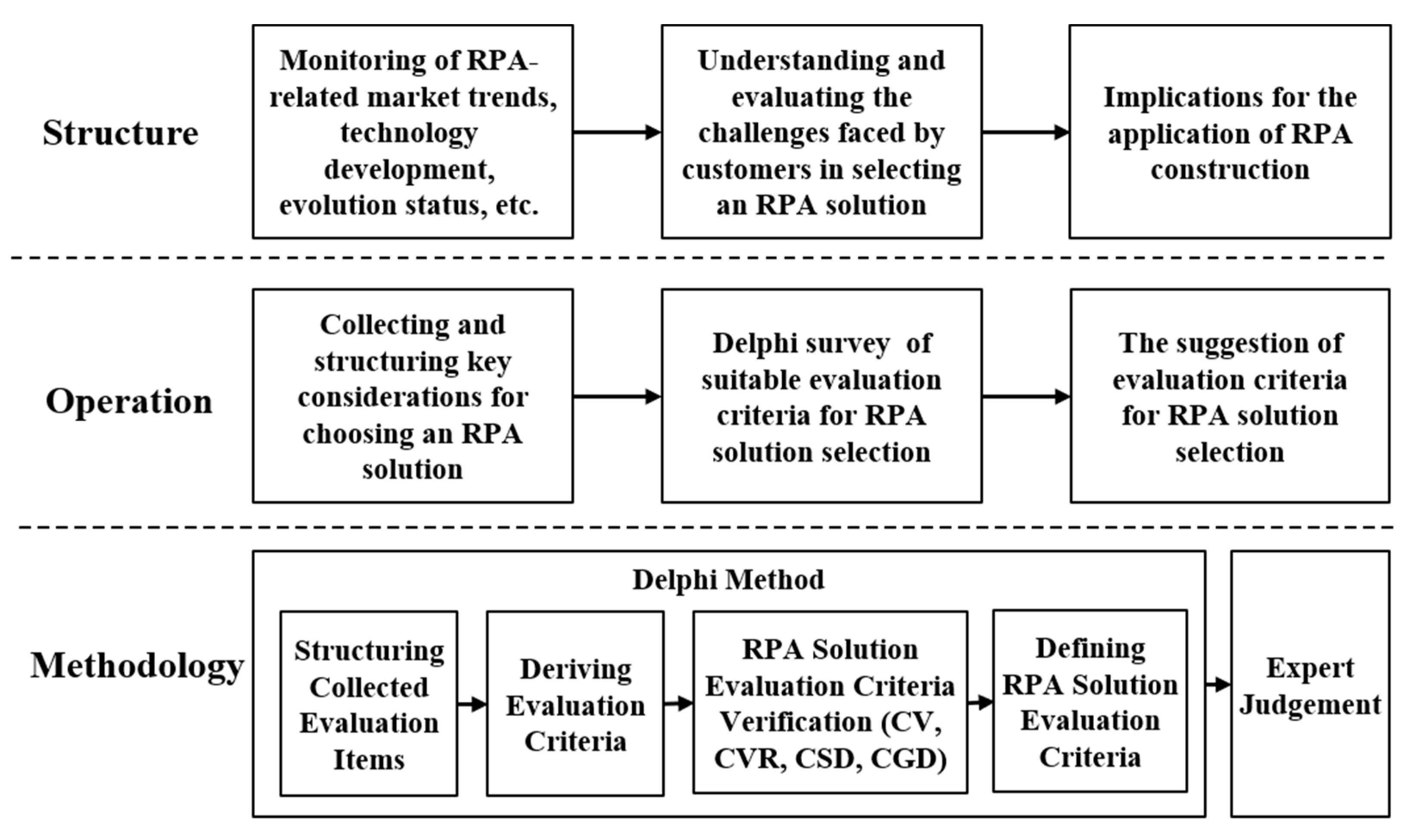

3. Research Procedure and Methodology

3.1. Research Procedure

- (1) Stability measured using the coefficient of variation (CV)

- (2) Content validity ratio (CVR)

- (3) Consensus degree (CSD) and convergence degree (CGD)

- (4) CA
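The statistics in steps (1) to (3) can be computed directly from a panel's ratings. The sketch below uses standard Delphi definitions that agree with the values in the rating tables: CV = SD / mean, Lawshe's CVR = (n_e − N/2)/(N/2), CSD = 1 − (Q3 − Q1)/Mdn and CGD = (Q3 − Q1)/2. Several details are assumptions made only for illustration: the 7-point scale, the rule that a rating of 5 or higher counts as "essential" (`ESSENTIAL_MIN`), the use of the sample standard deviation, and the inclusive quartile method. The ratings are hypothetical.

```python
from statistics import mean, median, quantiles, stdev

# Assumption (hypothetical): 7-point scale, ratings >= 5 count as "essential".
ESSENTIAL_MIN = 5


def coefficient_of_variation(ratings):
    """CV = SD / mean, using the sample standard deviation."""
    return stdev(ratings) / mean(ratings)


def content_validity_ratio(n_essential, n_panel):
    """Lawshe's CVR = (n_e - N/2) / (N/2); ranges from -1 to +1."""
    half = n_panel / 2
    return (n_essential - half) / half


def consensus_and_convergence(ratings):
    """Return (CSD, CGD): CSD = 1 - (Q3 - Q1) / Mdn, CGD = (Q3 - Q1) / 2."""
    q1, _, q3 = quantiles(ratings, n=4, method="inclusive")
    iqr = q3 - q1
    return 1 - iqr / median(ratings), iqr / 2


def delphi_item_stats(ratings):
    """Summarise one evaluation item with the columns used in the rating tables."""
    n_essential = sum(1 for r in ratings if r >= ESSENTIAL_MIN)
    csd, cgd = consensus_and_convergence(ratings)
    return {
        "mean": mean(ratings),
        "sd": stdev(ratings),
        "cv": coefficient_of_variation(ratings),
        "cvr": content_validity_ratio(n_essential, len(ratings)),
        "csd": csd,
        "cgd": cgd,
    }


if __name__ == "__main__":
    # Hypothetical ratings from an 11-member panel for a single item.
    ratings = [7, 6, 6, 6, 7, 4, 6, 7, 6, 5, 6]
    stats = delphi_item_stats(ratings)
    print({name: round(value, 2) for name, value in stats.items()})
```

For these hypothetical ratings, 10 of the 11 panellists give 5 or higher, so the CVR is (10 − 5.5)/5.5 ≈ 0.82; the interquartile range is 0.5 around a median of 6, giving CSD ≈ 0.92 and CGD = 0.25.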

3.2. Research Methodology

4. Developing Evaluation Criteria for RPA Solution Selection

4.1. Structuring Selected Evaluation Items

4.2. Deriving Evaluation Criteria for RPA Solution Selection

4.3. RPA Solution Evaluation Criteria Verification

4.4. Final Validated RPA Solution Evaluation Criteria

4.5. Implications

4.6. Potential Threats to the Validity of this Study

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Madakam, S.; Holmukhe, R.M.; Kumar, J.D.K. The future digital work force: Robotic process automation (RPA). JISTEM-J. Inf. Syst. Technol. Manag. 2019, 16, 1–17.

2. Kim, K.B. A study of convergence technology in robotic process automation for task automation. J. Converg. Inf. Technol. (JCIT) 2019, 9, 8–13.

3. Dossier: The Choice of Leading Companies RPA, How to Choose It Well, and Use It Well. IBM Korea. Available online: https://www.ibm.com/downloads/cas/RRX5GWY1 (accessed on 6 April 2020).

4. Ribeiro, J.; Lima, R.; Eckhardt, T.; Paiva, S. Robotic process automation and artificial intelligence in industry 4.0: A literature review. Procedia Comput. Sci. 2021, 181, 51–58.

5. van der Aalst, W.M.P.; Bichler, M.; Heinzl, A. Robotic process automation. Bus. Inf. Syst. Eng. 2018, 60, 269–272.

6. Aguirre, S.; Rodriguez, A. Automation of a business process using robotic process automation (RPA): A case study. Commun. Comput. Inf. Sci. 2017, 742, 65–71.

7. Pramod, D. Robotic process automation for industry: Adoption status, benefits, challenges and research agenda. Benchmarking Int. J. 2021, 29, 1562–1586.

8. Kang, S.H.; Lee, H.S.; Ryu, H.S. The Catalysts for Digital Transformation, Low·No-Code and RPA; Issue Report IS-117; Software Policy & Research Institute. Available online: www.spri.kr (accessed on 29 June 2021).

9. IEEE. Available online: http://standards.ieee.org/standard/2755-2017.html (accessed on 2 April 2021).

10. Hyun, Y.; Lee, D.; Chae, U.; Ko, J.; Lee, J. Improvement of business productivity by applying robotic process automation. Appl. Sci. 2021, 11, 10656.

11. Sarilo-Kankaanranta, H.; Frank, L. The Continued Innovation-Decision Process: A Case Study of Continued Adoption of Robotic Process Automation. In Proceedings of the European, Mediterranean, and Middle Eastern Conference on Information Systems, Virtual Event, 8–9 December 2021; Springer: Cham, Switzerland, 2022; pp. 737–755.

12. Wewerka, J.; Reichert, M. Robotic Process Automation: A Systematic Literature Review and Assessment Framework [Technical report]. arXiv 2020, arXiv:2012.11951.

13. Marciniak, P.; Stanisławski, R. Internal determinants in the field of RPA technology implementation on the example of selected companies in the context of Industry 4.0 Assumptions. Information 2021, 12, 222.

14. Hyen, Y.G.; Lee, J.Y. Trends analysis and future direction of business process automation, RPA (robotic process automation) in the times of convergence. J. Digit. Converg. 2018, 16, 313–327.

15. Asatiani, A.; Penttinen, E. Turning robotic process automation into commercial success: Case OpusCapita. J. Inf. Technol. Teach. Cases 2016, 6, 67–74.

16. George, A.; Ali, M.; Papakostas, N. Utilising robotic process automation technologies for streamlining the additive manufacturing design workflow. CIRP Ann. 2021, 70, 119–122.

17. Lee, T.-L.; Chuang, M.-C. Foresight for public policy of solar energy industry in Taiwan: An application of Delphi method and Q methodology. In Proceedings of the PICMET’12: Technology Management for Emerging Technologies, Vancouver, BC, Canada, 29 July–2 August 2012; IEEE Publications: New York, NY, USA, 2012.

18. Yoon, S.; Roh, J.; Lee, J. Innovation resistance, satisfaction and performance: Case of robotic process automation. J. Digit. Converg. 2021, 19, 129–138.

19. Gartner. Top 10 Trends in PaaS and Platform Innovation. Available online: https://discover.opscompass.com/en/top-10-trends-in-paas-and-platform-innovation-2020 (accessed on 10 October 2021).

20. McKinsey. The State of AI in 2020. 2020. Available online: https://www.stateof.ai/ (accessed on 10 October 2021).

21. Schatsky, D.; Muraskin, C.; Iyengar, K. Robotic Process Automation: A Path to the Cognitive Enterprise; Deloitte N Y Consult: New York, NY, USA, 2016.

22. Skulmoski, G.J.; Hartman, F.T.; Krahn, J. The Delphi Method for Graduate Research. J. Inf. Technol. Educ. Res. 2007, 6, 1.

23. van Oostenrijk, A. Screen Scraping Web Services; Radboud University of Nijmegen, Department of Computer Science: Nijmegen, The Netherlands, 2004.

24. Schaffrik, B. The Forrester Wave: Robotic Process Automation, Q1 2021. Published by Forrester Research. Available online: start.uipath.com/rs/995-XLT-886/images/161538_print_DE.PDF (accessed on 10 October 2021).

25. U.S. Federal RPA Community of Practice. RPA Program Playbook. 2020. Available online: https://www.fedscoop.com/rpa-cop-first-playbook/ (accessed on 3 September 2021).

26. Etnews, J. RPA Introduction Guide Seminar. Available online: https://m.etnews.com/20190226000165?obj=Tzo4OiJzdGRDbGFzcyI6Mjp7czo3OiJyZWZlcmVyIjtOO3M6NzoiZm9yd2FyZCI7czoxMzoid2ViIHRvIG1vYmlsZSI7fQ%3D%3D (accessed on 10 February 2021).

27. Séguin, S.; Tremblay, H.; Benkalaï, I.; Perron-Chouinard, D.; Lebeuf, X. Minimizing the number of robots required for a robotic process automation (RPA) problem. Procedia Comput. Sci. 2021, 192, 2689–2698.

28. Agostinelli, S.; Marrella, A.; Mecella, M. Research challenges for intelligent robotic process automation. In Proceedings of the International Conference on Business Process Management, Vienna, Austria, 1–6 September 2019; Springer: Cham, Switzerland, 2019; pp. 12–18.

29. Choi, D.; R’bigui, H.; Cho, C. Candidate digital tasks selection methodology for automation with robotic process automation. Sustainability 2021, 13, 8980.

30. Atencio, E.; Komarizadehasl, S.; Lozano-Galant, J.A.; Aguilera, M. Using RPA for performance monitoring of dynamic SHM applications. Buildings 2022, 12, 1140.

31. Kim, S.H. Development of satisfaction evaluation items for degree-linked high skills Meister courses using the Delphi method. J. Inst. Internet Broadcast. Commun. 2020, 20, 163–173.

32. Mitchell, V.-W.; McGoldrick, P.J. The Role of Geodemographics in Segmenting and Targeting Consumer Markets. Eur. J. Mark. 1994, 28, 54–72.

33. Na, Y.-S.; Kim, H.-B. A study of developing educational training program for flight attendants using the Delphi technique. J. Tour. Sci. Soc. Korea 2011, 35, 465–488.

34. Khorramshahgol, R.; Moustakis, V.S. Delphic hierarchy process (DHP): A methodology for priority setting derived from the Delphi method and analytical hierarchy process. Eur. J. Oper. Res. 1988, 37, 347–354.

35. Ayre, C.; Scally, A.J. Critical values for Lawshe’s content validity ratio: Revisiting the original methods of calculation. Meas. Eval. Couns. Dev. 2014, 47, 79–86.

36. Lawshe, C.H. A quantitative approach to content validity. Pers. Psychol. 1975, 28, 563–575.

37. Delbecq, A.L.; Van de Ven, A.H.; Gustafson, D.H. Group Techniques for Program Planning: A Guide to Nominal Group and Delphi Processes; Scott, Foresman and Company: Glenview, IL, USA, 1975.

38. Murry, J.W., Jr.; Hammons, J.O. Delphi: A versatile methodology for conducting qualitative research. Rev. Higher Educ. 1995, 18, 423–436.

39. Völker, M.; Weske, M. Conceptualizing bots in robotic process automation. In Proceedings of the International Conference on Conceptual Modeling, Virtual, 18–21 October 2021; Springer: Cham, Switzerland, 2021; pp. 3–13.

40. Banta, V.C. Application of RPA Solutions near ERP Systems in the Business Environment Related to the Production Area. A Case Study. Ann. Univ. Craiova Econ. Sci. Ser. 2020, 1, 17–24.

41. Banta, V.C. The Current Opportunities Offered by AI and RPA near to the ERP Solution: Proposed Economic Models and Processes, Inside Production Area. A Case Study. Ann. Constantin Brancusi Univ. Targu-Jiu Econ. Ser. 2022, 1, 159–164.

42. Banta, V.C. The Impact of the Implementation of AI and RPA Type Solutions in the Area Related to Forecast and Sequencing in the Production Area Using SAP. A Case Study. Ann. Univ. Craiova Econ. Sci. Ser. 2020, 2, 121–126.

43. Banta, V.C.; Turcan, C.D.; Babeanu, A. The Impact of the Audit Activity, Using AI, RPA and ML in the Activity of Creating the Delivery List and the Production Plan in Case of a Production Range. A Case Study. Ann. Univ. Craiova Econ. Sci. Ser. 2022, 1, 98–104.

44. Hsiung, H.H.; Wang, J.L. Research on the Introduction of a Robotic Process Automation (RPA) System in Small Accounting Firms in Taiwan. Economies 2022, 10, 200.

45. E-Fatima, K.; Khandan, R.; Hosseinian-Far, A.; Sarwar, D.; Ahmed, H.F. Adoption and Influence of Robotic Process Automation in Beef Supply Chains. Logistics 2022, 6, 48.

46. Sobczak, A.; Ziora, L. The use of robotic process automation (RPA) as an element of smart city implementation: A case study of electricity billing document management at Bydgoszcz City Hall. Energies 2021, 14, 5191.

47. Jaiwani, M.; Gopalkrishnan, S. Adoption of RPA and AI to Enhance the Productivity of Employees and Overall Efficiency of Indian Private Banks: An Inquiry. In Proceedings of the 2022 International Seminar on Application for Technology of Information and Communication (iSemantic), Semarang, Indonesia, 17–18 September 2022; IEEE: New York, NY, USA, 2022; pp. 191–197.

48. Agostinelli, S.; Lupia, M.; Marrella, A.; Mecella, M. Reactive synthesis of software robots in RPA from user interface logs. Comput. Ind. 2022, 142, 103721.

49. Vijai, C.; Suriyalakshmi, S.M.; Elayaraja, M. The Future of Robotic Process Automation (RPA) in the Banking Sector for Better Customer Experience. Shanlax Int. J. Commer. 2020, 8, 61–65.

50. Vinoth, S. Artificial intelligence and transformation to the digital age in Indian banking industry: A case study. Artif. Intell. 2022, 13, 689–695.

| Dimensional Classification | Evaluation Factors |
|---|---|
| Process | Workflow management |
| | Process recording and reproduction |
| | Self-study capability |
| | Usability |
| | Process engineering and evaluation |
| Automation | Visual creation tool |
| | Instruction library |
| | Full/partial automation capability |
| | Component sharing |
| | Test/debug control method |
| | Usability |
| Management and Operation | Centralised deployment, management, and scheduling |
| | Licensing structure |
| | Scalability, availability, and performance management |
| | Exception management |
| | Dashboard capability |
| | Business and operational analysis capability |

| Classification | Major Consideration Items | Details |
|---|---|---|
| Introduction stage | Cost | Model deployment, licensing, and operating costs |
| | Usability | System interaction and integration |
| | Technical aspect | OS/hardware requirements, and RPA deployment and operational capabilities |
| | Vendor support | Support capabilities, education, customer service, contracts, etc. |
| Technical architecture establishment | Vendor experience | Pre-evaluated market awareness, existing performance in the same field, customer cases, terms and conditions, and considerations |
| | Product function | Applies mutatis mutandis [25] |
| | Security | Legal compliance, account and personal identification management, risk/security assessment, authentication, data encryption/protection, process tracking |
| | Architecture | Review hardware/software requirements and virtual server design, multi-tenancy, on-premise/cloud, permissions, availability/disaster recovery capabilities, network capacity/performance management capabilities |
| Technological policy | Security policy | Develop risk management strategies, authority management strategies, and code control strategies after evaluating the risks involved in the scope of implementation |
| | Account/personal identification management | Service/network and system/application-level access management required |
| | Privacy protection | Understand system/application lines, capabilities/functionality, and user reviews, and interact with related systems to determine whether current security policies or use cases violate privacy policies depending on the type of data the system handles |
| Process | Technological compatibility [25] | Consistency of RPA program usage and objectives; appropriateness of RPA service distribution/operation model; RPA program technical policy/architecture consistency |
| | Strategic compatibility [25] | Corporate and institutional missions and objectives; leadership priorities, strategies, and initiatives; calculations corresponding to departmental and corporate objectives |
| | Effect [25] | Compliance/audit functions |
| Operation and management | Operation | Operational management, change management, automation interruption response, code sharing, automation scheduling, etc. |
| | Standardised asset management | Licence management, technical policy updating, RPA lifecycle management |

| Ref. | Kang et al. [8] | U.S. [25] | Kim [2] | Ribeiro et al. [4] | Lu et al. [24] | Etnews [26] |
|---|---|---|---|---|---|---|
| Number of key criteria | 20 | 17 | 9 | 13 | 10 | 18 |

| I. Category | No. | II. Evaluation Items | III. Evaluation Criteria |
|---|---|---|---|
| 1. Introduction strategy | 1 | Economic validity | Price [26], cost elements (deployment, licensing, and operating expenses) [8], ROI [24], investment efficiency [26] |
| | 2 | Supplier maintenance support | Reference customer case holder [8,26], solution provider capability [26], product vision [24], dealer market awareness [8], existing results in the same field [8], terms and conditions [8], operability [26], serviceability [26], product and service support [24], support capabilities, education and customer service [8] |
| | 3 | Technological compatibility | Hardware/software requirements [8], development convenience [26], technical elements (OS requirements and technology, and capabilities required for RPA deployment and operation) [8], technical compatibility [26], performance [24], system interaction and integration [8] |
| | 4 | Security policies | Personal information protection (consideration of personal information protection policies according to system/application lines, capabilities/functionality and user reviews, and interactive system data types) [8], account/personal identification management [8] |
| | 5 | Real-time decision making support | Classification [4], cognition [4], information extraction [4], optimisation [4] |
| | 6 | Strategic compatibility | Portfolio [24], revolutionary roadmap [24], risk management strategies, corporate and institutional missions and objectives [8], and leadership priorities, strategies, and initiatives according to risk analysis evaluation [8] |
| | 7 | Process | Iterative and regular process identification/discovery [8], bot idea [24], delivery model [24], company business characteristics and business areas [26], calculations matching departmental and company-wide objectives [8] |
| 2. Functionality | 8 | Robot management and operations | Bot platform model, security [24], availability [25], quality analysis of business performance (a graph of business performance is provided) [2], management and analysis [24], dashboard capability [25], licensing structure [25], robot management functions [2], business performance management [25], exception management |
| | 9 | Analysis/categorising/predicting | Artificial neural network (ANN) [4], natural language processing (NLP) [4], decision tree [4], recommendation system [4], computer vision cognition [4], text mining [4], statistical technique [4], fuzzy logic [4], fuzzy matching [4] |
| | 10 | Automation | Excel and SAP (ERP solution) API support [2], instruction library [25], security enhancement site response [26], security character recognition [26], information security [26], bot development [24], bot design and development [24], atypical GUI infrastructure program automation (X-Internet, ActiveX, Flash) [2], task performance ability in standardised GUI environment [26], Hangul character recognition ability [26], Hangul character recognition [2] |
| | 11 | Process | Technology (RPA service distribution and operational model competency) [8], technology (RPA program technology policy/architecture compatibility) [8], usability [25], ease of deriving architectural requirements [26], application functions [26], workflow [25], self-learning capabilities [25], process recording and reproduction [25], process engineering and evaluation [25] |
| 3. Technical Architecture | 12 | Security | Compliance with legal systems such as personal information protection [8], account and personal identification management [8], data encryption/protection [8], application security [8], risk/security evaluation [8], authentication [8], process traceability [8] |
| | 13 | Architecture | On-premise/cloud [8], virtualisation server design [8], availability/disaster recovery capabilities [8], permissions [8], network capacity [8], multi-tenancy [8], performance management capabilities review [8] |
| 4. Operational management | 14 | Operation | Change management [2], operation management [2], automation scheduling [8], automation interruption accident response [8] |
| | 15 | Standardised asset management | Code sharing method [8], technical policy update, RPA lifecycle management [8], licence management [8] |

RPA Construction (Supplier Group): columns ⓐ to ⓒ; RPA Operations (Client Group): columns ⓓ to ⓖ.

| Experts | ⓐ | ⓑ ¹ | ⓒ | ⓓ ¹ | ⓔ | ⓕ | ⓖ |
|---|---|---|---|---|---|---|---|
| 1 | 20 | AO, AA, BR | 5 | - | - | - | - |
| 2 | 5 | BR | 3 | BR | 10 | 3 | Finance, manufacturing, MIS, IT |
| 3 | - | - | - | AO | 1 | 3 | Public |
| 4 | - | - | - | AA | 20 | 4 | MIS, manufacturing, logistics, R&D |
| 5 | 10 | BR | 3 | - | - | - | - |
| 6 | 10 | AA, BP, UP, AO | 5 | - | - | - | - |
| 7 | 7 | AA, AW | 4 | AA, AW | 250 | 3 | FCM, HR, SCM, CRM, MFG |
| 8 | 10 | AO | 3 | - | - | - | - |
| 9 | 20 | UP | 3 | - | - | - | - |
| 10 | 15 | AO | 4 | - | - | - | - |
| 11 | 13 | UP | 4 | UP | 20 | 3 | Aviation, production, pharmaceutical, retail |
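The expert table above lists 11 panellists, so N = 11. With Lawshe's formula, CVR = (n_e − N/2)/(N/2) can then take only 12 discrete values, which explains why the rating tables report only 1.00, 0.82, 0.64, 0.45, 0.27, 0.09 and their negatives. The sketch below enumerates those values and also computes a critical CVR with a one-tailed exact binomial test (each expert saying "essential" with probability 0.5), in the manner of Ayre and Scally's recalculation. The significance level α = 0.05 is an assumption; the source does not state which critical value was applied.

```python
from math import comb


def cvr(n_essential, n_panel):
    """Lawshe's CVR = (n_e - N/2) / (N/2)."""
    half = n_panel / 2
    return (n_essential - half) / half


def critical_cvr(n_panel, alpha=0.05):
    """Smallest CVR that is significant under a one-tailed exact binomial test.

    Assumes each expert rates an item "essential" with probability 0.5 under the
    null hypothesis. Returns None if even unanimous agreement is not significant.
    """
    total = 2 ** n_panel
    for n_essential in range(n_panel + 1):
        # P(X >= n_essential) for X ~ Binomial(n_panel, 0.5)
        tail = sum(comb(n_panel, k) for k in range(n_essential, n_panel + 1)) / total
        if tail < alpha:
            return cvr(n_essential, n_panel)
    return None


if __name__ == "__main__":
    n = 11  # panel size taken from the expert table
    print([round(cvr(k, n), 2) for k in range(n + 1)])
    print(round(critical_cvr(n), 3))
```

For N = 11, nine "essential" votes give a tail probability of 67/2048 ≈ 0.033 while eight give about 0.113, so the sketch returns a critical CVR of (9 − 5.5)/5.5 ≈ 0.636. Under this assumption, items reported at CVR 0.64 pass and items at 0.45 or below fail.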

| Level | No. | Mean | SD | CV | CVR | CSD | CGD | ⓐ | ⓑ | ⓒ | ⓓ | Selection |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| I. | 1 | 6.27 | 0.75 | 0.12 | 1.00 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 2 | 6.09 | 0.51 | 0.08 | 0.82 | 1.00 | 0.00 | 0 | 0 | 0 | 0 | O |
| | 3 | 5.45 | 0.78 | 0.14 | 0.64 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 4 | 5.82 | 1.03 | 0.18 | 0.64 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
| II. | 1 | 5.73 | 1.66 | 0.29 | 0.64 | 0.75 | 0.75 | 0 | 0 | 0 | 1 | X |
| | 2 | 5.18 | 1.75 | 0.34 | 0.45 | 0.40 | 1.50 | 0 | 1 | 1 | 1 | X |
| | 3 | 4.91 | 1.50 | 0.31 | 0.45 | 0.75 | 0.75 | 0 | 1 | 0 | 1 | X |
| | 4 | 4.45 | 1.62 | 0.36 | 0.09 | 0.60 | 1.00 | 0 | 1 | 1 | 1 | X |
| | 5 | 2.64 | 1.30 | 0.49 | −0.82 | 0.67 | 0.50 | 0 | 1 | 1 | 0 | X |
| | 6 | 3.64 | 1.61 | 0.44 | −0.45 | 0.63 | 0.75 | 0 | 1 | 1 | 1 | X |
| | 7 | 4.18 | 1.80 | 0.43 | −0.27 | 0.25 | 1.50 | 0 | 1 | 1 | 1 | X |
| | 8 | 4.73 | 1.54 | 0.33 | 0.27 | 0.60 | 1.00 | 0 | 1 | 1 | 1 | X |
| | 9 | 4.09 | 1.93 | 0.47 | 0.27 | 0.50 | 1.25 | 0 | 1 | 1 | 1 | X |
| | 10 | 4.82 | 1.90 | 0.39 | 0.45 | 0.60 | 1.00 | 0 | 1 | 1 | 1 | X |
| | 11 | 3.73 | 1.48 | 0.40 | −0.27 | 0.38 | 1.25 | 0 | 1 | 1 | 1 | X |
| | 12 | 4.73 | 1.66 | 0.35 | 0.09 | 0.60 | 1.00 | 0 | 1 | 1 | 1 | X |
| | 13 | 5.64 | 0.88 | 0.16 | 0.82 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 14 | 6.00 | 1.04 | 0.17 | 0.82 | 0.67 | 1.00 | 0 | 0 | 1 | 1 | X |
| | 15 | 4.73 | 1.54 | 0.33 | 0.45 | 0.80 | 0.50 | 0 | 1 | 0 | 0 | X |
| III. | 1 | 5.55 | 1.78 | 0.32 | 0.64 | 0.67 | 1.00 | 0 | 0 | 1 | 1 | X |
| | 2 | 5.64 | 0.98 | 0.17 | 0.82 | 0.70 | 0.75 | 0 | 0 | 1 | 1 | X |
| | 3 | 5.09 | 1.24 | 0.24 | 0.45 | 0.75 | 0.75 | 0 | 1 | 0 | 1 | X |
| | 4 | 4.82 | 1.53 | 0.32 | 0.45 | 0.70 | 0.75 | 0 | 1 | 1 | 1 | X |
| | 5 | 2.73 | 0.96 | 0.35 | −1.00 | 0.83 | 0.25 | 0 | 1 | 0 | 0 | X |
| | 6 | 4.55 | 1.44 | 0.32 | −0.09 | 0.25 | 1.50 | 0 | 1 | 1 | 1 | X |
| | 7 | 4.18 | 1.53 | 0.37 | −0.27 | 0.38 | 1.25 | 0 | 1 | 1 | 1 | X |
| | 8 | 5.09 | 0.79 | 0.16 | 0.45 | 0.70 | 0.75 | 0 | 1 | 1 | 1 | X |
| | 9 | 3.45 | 1.88 | 0.54 | −0.27 | 0.13 | 1.75 | 0 | 1 | 1 | 1 | X |
| | 10 | 5.82 | 0.94 | 0.16 | 0.82 | 0.75 | 0.75 | 0 | 0 | 0 | 1 | X |
| | 11 | 4.64 | 1.15 | 0.25 | −0.09 | 0.50 | 1.00 | 0 | 1 | 1 | 1 | X |
| | 12 | 5.00 | 1.60 | 0.32 | 0.64 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 13 | 5.00 | 1.54 | 0.31 | 0.45 | 0.70 | 0.75 | 0 | 1 | 1 | 1 | X |
| | 14 | 5.91 | 1.16 | 0.20 | 0.64 | 0.67 | 1.00 | 0 | 0 | 1 | 1 | X |
| | 15 | 4.64 | 1.49 | 0.32 | 0.09 | 0.70 | 0.75 | 0 | 1 | 1 | 1 | X |
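The source does not define columns ⓐ to ⓓ. One reading that fits the table is that each column flags a failed threshold on CV, CVR, CSD and CGD respectively, and that an item is selected (O) only when no flag is raised. The sketch below encodes that reading with hypothetical thresholds: CV ≥ 0.8, CVR below 0.636 (the one-tailed exact binomial critical value for N = 11 at α = 0.05), CSD below 0.75 and CGD above 0.5. These constants are assumptions, not the authors' stated criteria.

```python
from dataclasses import dataclass

# Hypothetical thresholds; the source does not state the criteria behind ⓐ to ⓓ.
CV_MAX = 0.8      # ⓐ: flag when CV >= 0.8
CVR_MIN = 0.636   # ⓑ: flag when CVR is below the assumed critical value for N = 11
CSD_MIN = 0.75    # ⓒ: flag when consensus degree < 0.75
CGD_MAX = 0.5     # ⓓ: flag when convergence degree > 0.5


@dataclass(frozen=True)
class ItemStats:
    cv: float
    cvr: float
    csd: float
    cgd: float


def failure_flags(s: ItemStats) -> tuple:
    """Return the (ⓐ, ⓑ, ⓒ, ⓓ) flags as 0/1, where 1 means the criterion failed."""
    return (
        int(s.cv >= CV_MAX),
        int(s.cvr < CVR_MIN),
        int(s.csd < CSD_MIN),
        int(s.cgd > CGD_MAX),
    )


def selection(s: ItemStats) -> str:
    """'O' if the item passes every criterion, otherwise 'X'."""
    return "O" if not any(failure_flags(s)) else "X"


if __name__ == "__main__":
    # (level.no, CV, CVR, CSD, CGD) copied from the table above.
    rows = [
        ("I.1", 0.12, 1.00, 0.83, 0.50),
        ("II.2", 0.34, 0.45, 0.40, 1.50),
        ("II.5", 0.49, -0.82, 0.67, 0.50),
        ("III.10", 0.16, 0.82, 0.75, 0.75),
    ]
    for label, *values in rows:
        stats = ItemStats(*values)
        print(label, failure_flags(stats), selection(stats))
```

With these assumed thresholds, the flags for the sample rows match the ⓐ to ⓓ columns and the O/X selection reported in the table. The CV threshold is only weakly constrained by the data, since no item in the table is flagged under ⓐ even at CV = 0.54.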

| I. Categories | No. | II. Evaluation Items | III. Evaluation Criteria |
|---|---|---|---|
| 1. Customer deployment strategy N2 | 1 | Economic validity | Expenses (solution, introduction and construction, licence, operations) [8,26] N2, investment value (ROI [24], EVA, TCO, EVS, TEI, BSC, etc.) N1 |
| | 2 | Capabilities of solution suppliers N2 | Reference customer case holder [8,26], solution provider capability [26], product vision [24], dealer market awareness [8], existing results in the same field [8], terms and conditions [8], product and service support [24], support capabilities, education and customer service [8], partner ecosystem [24] |
| | 3 | Technology policy conformity N2 | Purpose of application of RPA introduction N4, hardware/software requirements [8], technical elements (OS/hardware requirements and technical capabilities required for RPA deployment and operation) [8], technical compatibility [26], performance [24], system interaction and integration [8], RPA program application consistency [8], scalability N1, relatedness to other technologies N1, portfolio [24] N4, revolutionary roadmap [24] N4, risk management strategy based on risk analysis evaluation [8,26] N4, mission and objectives of companies and agencies [8] N4, leadership priority and strategy, initiative [8] N4, licensing structure [25] N4 |
| | 4 | Security policy conformity | Personal information protection (considering personal information protection policies based on system/application lines, capabilities, functions and user reviews, interactive system data types) [8], account/personal identification management [8] N3, establishment of code control strategies [8] |
| | 7 | Methodology N2 | Repeated and regular process identification/standardisation [8] (modify benchmark names to reflect primary comments), bot ideas [24] N3, business performance processing procedures [24] N2, characteristics and operations of the company [26] N3, calculations consistent with departmental and enterprise unit objectives [8], process engineering and evaluation [25] N4 |
| 2. Development and operability N2 | 8 | Robot management and operability N2 | Bot platform model and security [24], availability [25], business performance N3, quality analysis (provided with quality transition graphs) [2], management and analysis [24], dashboard capabilities [25], robot management functions (scheduling, load balancing, monitoring) [2], business and operational analysis functions [25], business performance details [2] N3, performance management [25], exception management [25], centralised deployment management, scheduling [25], operability [26] N4, maintainability [26] N4, self-study capability [25] N4, derive architectural requirements N3, tenancy [8] N4 |
| | 10 | Ease of development for automated processes N2 | Excel and SAP (ERP solution) API support [2], command library [25], security enhancement site response [26], security character recognition [2], security [26], bot development [24], bot design and development [24], atypical GUI-based program automation (X-Internet, ActiveX, Flash) [2], performance ability under standardised GUI environment [26], usability [25], visual creation tools [25], full/partial automation capabilities [25], website automation essential security enhancements (HOMETAX, GOV24, Court, e-car) [2], convenient and intuitive creation (direct programming, flowchart, etc.) [2], component sharing [25], test/debug control methods [25], character recognition ability to handle local language specificities without exception [2,26] N2, OCR N1, development convenience [26] N4, RPA program service distribution/operation model conformity [8] N4, application functions [26] N4, workflow [25] N4, process recording and reproduction [25] N4 |
| | 16 | Collaboration and expansion of AI technology [4] N2 | Classification, cognition, information extraction, optimisation, ANN, natural language processing (NLP), decision tree, recommendation system, computer vision cognition, text mining, statistical technique, fuzzy logic, fuzzy matching N4, process mining N1, scalability [25] N4 |
| 3. Technical architecture | 12 | Security [8] | Compliance with legal systems such as personal information protection, account and personal identification management, data encryption/protection, application security, risk/security evaluation, authentication, process traceability |
| | 13 | Architecture [8] | On-premise/cloud, virtualisation support using VM/content technology N2, availability/disaster recovery capabilities, permissions, network capacity, performance management capabilities review, RPA program technical policy/architecture consistency N4, availability of duplex configuration N1, collaboration structure with customer’s internal system N1 |
| 4. Operation management system N2 | 14 | Operation policy [8] N2 | Change management, operational management, automation scheduling, automation interruption accident response, bot management, and operational data visualisation policy N1 |
| | 15 | Asset management for information assets [8] N2 | Code sharing method, technical policy update, RPA lifecycle management, licence management, standardised operating models N1 |

| Level | No. | Mean | SD | CV | CVR | CSD | CGD | ⓐ | ⓑ | ⓒ | ⓓ | Selection |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| I. | 2 | 6.18 | 0.72 | 0.12 | 1.00 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 3 | 5.55 | 0.99 | 0.18 | 0.64 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 4 | 5.55 | 1.08 | 0.19 | 0.64 | 0.70 | 0.75 | 0 | 0 | 1 | 1 | X |
| II. | 1 | 5.91 | 1.08 | 0.18 | 0.82 | 0.67 | 1.00 | 0 | 0 | 1 | 1 | X |
| | 2 | 5.82 | 1.11 | 0.19 | 0.82 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 3 | 5.27 | 0.75 | 0.14 | 0.64 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 4 | 5.09 | 0.90 | 0.18 | 0.27 | 0.60 | 1.00 | 0 | 1 | 1 | 1 | X |
| | 7 | 4.64 | 1.30 | 0.28 | 0.09 | 0.60 | 1.00 | 0 | 1 | 1 | 1 | X |
| | 8 | 5.55 | 0.89 | 0.16 | 0.82 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 10 | 5.27 | 1.21 | 0.23 | 0.45 | 0.70 | 0.75 | 0 | 1 | 1 | 1 | X |
| | 16 | 5.91 | 1.08 | 0.18 | 0.64 | 0.75 | 0.75 | 0 | 0 | 0 | 1 | X |
| | 12 | 5.55 | 1.08 | 0.19 | 0.45 | 0.75 | 0.75 | 0 | 1 | 0 | 1 | X |
| | 13 | 5.45 | 0.89 | 0.16 | 0.64 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 14 | 5.64 | 0.98 | 0.17 | 0.82 | 0.70 | 0.75 | 0 | 0 | 1 | 1 | X |
| | 15 | 5.09 | 1.16 | 0.23 | 0.27 | 0.60 | 1.00 | 0 | 1 | 1 | 1 | X |
| III. | 1 | 6.36 | 0.77 | 0.12 | 1.00 | 0.86 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 2 | 6.00 | 0.85 | 0.14 | 1.00 | 0.67 | 1.00 | 0 | 0 | 1 | 1 | X |
| | 3 | 5.36 | 0.77 | 0.14 | 0.64 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 4 | 5.00 | 0.95 | 0.19 | 0.27 | 0.70 | 0.75 | 0 | 1 | 1 | 1 | X |
| | 7 | 4.55 | 1.30 | 0.29 | −0.27 | 0.75 | 0.50 | 0 | 1 | 0 | 0 | X |
| | 8 | 5.36 | 0.64 | 0.12 | 0.82 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 10 | 5.36 | 0.77 | 0.14 | 0.82 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 16 | 5.45 | 1.23 | 0.23 | 0.45 | 0.75 | 0.75 | 0 | 1 | 0 | 1 | X |
| | 12 | 5.73 | 1.14 | 0.20 | 0.64 | 0.67 | 1.00 | 0 | 0 | 1 | 1 | X |
| | 13 | 5.36 | 0.88 | 0.16 | 0.64 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
| | 14 | 5.82 | 1.03 | 0.18 | 0.82 | 0.67 | 1.00 | 0 | 0 | 1 | 1 | X |
| | 15 | 4.91 | 1.44 | 0.29 | 0.09 | 0.60 | 1.00 | 0 | 1 | 1 | 1 | X |

| Type | Results of Changes in Evaluation Criteria by Evaluation Item (format: evaluation item No. from II: evaluation criteria elements from III) |
|---|---|
| Added | 4: Consistency with customer security architecture; 7: Automated process development and evaluation methodology; 13: Review cloud architecture implementation standards (customer versus supplier); 14: Customer feedback management, script management, logging/upgrading/migration policies; 15: Company-wide common module standardisation and product repository (output/result storage) management methods |
| Modified | 4: Possibility of integration with information access authorisation, issue management, and code control systems; 8: Robot operation status aggregation function; 12: Application security (authentication, authorisation, encryption, logging, security testing, etc.) |
| Deleted | 2: Solution provider capabilities, terms, and conditions; 3: RPA introduction objectives, technical conformity, consistency of RPA program application, risk management strategy through risk analysis assessment, corporate and organisational mission and objectives, leadership priorities and strategies, initiative; 13: RPA program technology policy/architecture conformity; 15: Technological policy update; 16: Classification, cognition, information extraction, optimisation |
| Moved | 14: Operability; 16: Character recognition ability regardless of language specificities, OCR, scalability, relatedness to other technologies |

| Level | No. | Mean | SD | CV | CVR | CSD | CGD | ⓐ | ⓑ | ⓒ | ⓓ | Selection |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| I. | 4 | 5.73 | 0.62 | 0.11 | 1.00 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
| II. | 1 | 6.00 | 0.74 | 0.12 | 1.00 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
|  | 4 | 5.27 | 0.75 | 0.14 | 0.82 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
|  | 7 | 4.91 | 1.00 | 0.20 | −0.09 | 0.60 | 1.00 | 0 | 1 | 1 | 1 | X |
|  | 10 | 5.55 | 0.89 | 0.16 | 1.00 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
|  | 16 | 5.91 | 0.67 | 0.11 | 1.00 | 0.92 | 0.25 | 0 | 0 | 0 | 0 | O |
|  | 12 | 5.55 | 0.78 | 0.14 | 1.00 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
|  | 14 | 5.55 | 0.78 | 0.14 | 0.82 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
|  | 15 | 5.27 | 0.86 | 0.16 | 0.45 | 0.80 | 0.50 | 0 | 1 | 0 | 0 | X |
| III. | 2 | 6.09 | 0.67 | 0.11 | 1.00 | 0.92 | 0.25 | 0 | 0 | 0 | 0 | O |
|  | 4 | 5.36 | 0.88 | 0.16 | 0.64 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
|  | 7 | 4.45 | 0.99 | 0.22 | −0.09 | 0.75 | 0.50 | 0 | 1 | 0 | 0 | X |
|  | 16 | 5.55 | 0.78 | 0.14 | 0.82 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
|  | 12 | 5.64 | 0.77 | 0.14 | 1.00 | 0.83 | 0.50 | 0 | 0 | 0 | 0 | O |
|  | 14 | 5.45 | 0.78 | 0.14 | 0.82 | 0.80 | 0.50 | 0 | 0 | 0 | 0 | O |
|  | 15 | 4.55 | 0.78 | 0.17 | −0.27 | 0.75 | 0.50 | 0 | 1 | 0 | 0 | X |
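The columns of this table follow the usual Delphi screening formulas, and the reported values are consistent with them for a panel of 11 experts: CV = SD / Mean; CVR = (n_e - N/2) / (N/2), where n_e is the number of panellists judging the item essential; CSD = 1 - (Q3 - Q1) / Mdn; and CGD = (Q3 - Q1) / 2. For example, CVR = 0.82 corresponds to 10 of 11 panellists (4.5 / 5.5), and CSD = 0.83 with CGD = 0.50 corresponds to a median of 6 with an interquartile range of 1. The Python sketch below computes these statistics and a selection decision. It rests on assumptions this section does not state: ⓐ to ⓓ are taken to flag failures of the CV, CVR, CSD and CGD checks respectively; the cut-offs (CV ≤ 0.5, CVR ≥ 0.59, CSD ≥ 0.75, CGD ≤ 0.5) are hypothetical values chosen to agree with the flags shown; SD is the population standard deviation; quartiles use the default method of Python's `statistics.quantiles`; and the ratings in the usage example are invented. Results on real data may therefore differ from the study's figures.

```python
"""Minimal sketch of Delphi item screening (Mean, SD, CV, CVR, CSD, CGD).

Assumptions (not stated in the source section): flags a-d correspond to the
CV, CVR, CSD and CGD checks; the cut-offs below are hypothetical values that
agree with the flags in the table; SD is the population standard deviation.
"""
from dataclasses import dataclass
from statistics import fmean, median, pstdev, quantiles

# Hypothetical cut-offs (consistent with the table's flags, not quoted from it).
CV_MAX, CVR_MIN, CSD_MIN, CGD_MAX = 0.5, 0.59, 0.75, 0.5


@dataclass
class Screening:
    mean: float
    sd: float
    cv: float
    cvr: float
    csd: float
    cgd: float
    flags: tuple    # (a, b, c, d); 1 means the corresponding check failed
    selected: bool  # True corresponds to "O", False to "X"


def screen(ratings, essential_votes):
    """Screen one item from Likert ratings and per-panellist 'essential' votes."""
    n = len(ratings)
    m = fmean(ratings)
    sd = pstdev(ratings)
    cv = sd / m                           # coefficient of variation (stability)
    n_e = sum(essential_votes)            # panellists judging the item essential
    cvr = (n_e - n / 2) / (n / 2)         # Lawshe's content validity ratio
    q1, _, q3 = quantiles(ratings, n=4)   # default 'exclusive' method
    mdn = median(ratings)
    csd = 1 - (q3 - q1) / mdn             # consensus degree
    cgd = (q3 - q1) / 2                   # convergence degree
    flags = (
        int(cv > CV_MAX),
        int(cvr < CVR_MIN),
        int(csd < CSD_MIN),
        int(cgd > CGD_MAX),
    )
    # An item is kept only when no check fails, as in the table.
    return Screening(m, sd, cv, cvr, csd, cgd, flags, not any(flags))


if __name__ == "__main__":
    # Hypothetical ratings from 11 panellists on an assumed 7-point scale.
    item = screen([3, 4, 4, 5, 5, 5, 5, 6, 6, 7, 7], [True] * 5 + [False] * 6)
    print(item.flags, "O" if item.selected else "X")  # (0, 1, 1, 1) X
```

Taking the "essential" votes as a separate input, rather than deriving them from the ratings, follows Lawshe's original design, in which panellists classify each item as essential or not independently of any rating scale.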

| Type | Changes in Evaluation Criteria by Evaluation Item (Format: II. Evaluation Item No.: III. Evaluation Criteria Elements) |
|---|---|
| Added | 16: AI/ML level of optimisation for cognitive automation; 10: Customer’s existing business performance procedures (manual, automation), steps/tools required to automate from RPA suppliers; 14: Operational model, operational product, operational standard policy, operational rules, logging/dashboard management |
| Modified | 12: Security management; 16: OCR (printed), OCR (written); 14: Script code shape and change management, operational data policy |
| Deleted | 10: Process recording and reproduction [25]; 16: ANN [4], NLP [4], decision tree [4], recommendation system [4], computer vision cognition [4], text mining [4], statistical technique [4], fuzzy logic [4], fuzzy matching [4]; 14: Operability [26] |
| Moved | 14: Code sharing [8], RPA lifecycle management [8], licence management [8], common module standardisation and product repository (output and result storage) management, upgrading/migration |
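The change tables record four kinds of revision between Delphi rounds. As a hypothetical illustration only, these can be modelled as operations on a mapping from evaluation-item number to its list of criteria. The representation is an assumption: the tables give only the new wording for Modified entries and no destination for Moved entries, so the sketch takes the old wording and the destination item as explicit inputs. The destination item 15 in the usage example is invented.

```python
"""Hypothetical sketch: applying one round's change log to evaluation criteria.

Criteria are stored as {item_no: [criterion, ...]}. The change-tuple format and
the explicit old-wording / destination fields are assumptions for illustration.
"""


def apply_changes(criteria, changes):
    """Return a new mapping after applying (kind, item, text, extra) changes.

    kind is "Added", "Deleted", "Modified" (extra = new wording) or
    "Moved" (extra = destination item number). The input is not mutated.
    """
    result = {item: list(texts) for item, texts in criteria.items()}
    for kind, item, text, extra in changes:
        if kind == "Added":
            result.setdefault(item, []).append(text)
        elif kind == "Deleted":
            result[item].remove(text)
        elif kind == "Modified":
            texts = result[item]
            texts[texts.index(text)] = extra  # replace wording in place
        elif kind == "Moved":
            result[item].remove(text)
            result.setdefault(extra, []).append(text)
        else:
            raise ValueError(f"unknown change type: {kind!r}")
    return result


if __name__ == "__main__":
    # Item-14 entries as listed in the table; destination item 15 is hypothetical.
    before = {14: ["Operability", "Code sharing"]}
    after = apply_changes(before, [
        ("Deleted", 14, "Operability", None),
        ("Moved", 14, "Code sharing", 15),
        ("Added", 14, "Operational model", None),
    ])
    print(after)  # {14: ['Operational model'], 15: ['Code sharing']}
```

A list keeps the criteria in the order they were proposed; a set would make membership tests cheaper but would lose that order.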

| I. Category | No. | II. Evaluation Items | III. Evaluation Criteria |
|---|---|---|---|
| 1. Customer deployment strategy | 1 | Economic validity | Expense (solution, introduction and construction, licence, operation expenses) [8,26], investment value (ROI, EVA, TCO, EVS, TEI, BSC, etc.) [24] |
|  | 2 | Capabilities of solution suppliers | Companies with reference client case [8,26], product vision [24], awareness of manufacturer’s market [8], existing performance in the same field [8], product and service support capabilities, education and customer service [8], partner ecosystem [24] |
|  | 3 | Technology policy conformity | Hardware/software requirement [8], technological elements (technology and ability for fulfilling OS/hardware requirements and RPA deployment and operations) [8], performance [24], system interaction and integration [8], portfolio [24], innovation roadmap [24], automation process development and evaluation methodology |
|  | 4 | Security policy conformity | Personal information protection (system/application line, capability and user review, information access and issue management strategies, interactive data types) [8], account/personal identification management (service/network and system/application-level access management) [8], consistency with customer security architecture |
| 2. Development and operability | 8 | Robot management and operability | Bot platform model and security [24], availability [25], quality analysis (quality transition graph provided) [2], management and analysis [24], dashboard capability [25], robot management functions (scheduling, load balancing, monitoring) [2], robot operation status aggregation function [25], performance management [25], exception management [25], centralised deployment management, scheduling [25], maintainability [26], self-learning capability [25], multi-tenancy [8] |
|  | 10 | Automation process development and convenience | Excel and SAP (ERP solution) API support [2], command library [25], security enhancement site response [26], security character recognition [2], security [26], bot development [24], bot design and development [24], atypical GUI-based program automation (X-Internet, ActiveX, Flash) [2], performance ability under standardised GUI environment [26], usability [25], visual creation tools [25], full/partial automation capabilities [25], website automation essential security enhancements (HOMETAX, GOV24, Court, e-car) [2], convenient and intuitive creation (direct programming, flowchart, etc.) [2], component sharing [25], test/debug control methods [25], development convenience [26], RPA program service distribution/operation model conformity [8], application functions [26], workflow [25], process recording and reproduction [25], customer’s existing business performance procedures (manual, automation), steps/tools required to automate from RPA suppliers |
|  | 16 | Collaboration and expansion of AI technology | AI/ML optimisation level, process mining and scalability for cognitive automation [25], OCR (printed), OCR (written), relatedness to other technologies |
| 3. Technical Architecture | 12 | Security management | Compliance with legal systems such as personal information protection [8], account and personal identification management [8], data encryption/protection [8], application security [8], risk/security evaluation [8], authentication [8], process traceability [8] |
|  | 13 | Architecture | On-premise/cloud [8], virtualisation support using VM/container technology, availability/disaster recovery capabilities [8], permission [8], network capacity [8], performance management capabilities [8], dual configuration availability, collaboration structure with customer internal systems, cloud architecture deployment standards (customer versus supplier) |
| 4. Operation and management systems | 14 | Automation process operation systems | Script code shape and change management, operational management, automation scheduling [8], automation interruption accident response [8], bot management and operational data policy, supplier-customer technical support system, operational model, operational product policy, operational standard |
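The final table defines a three-level hierarchy: category, evaluation item and evaluation criteria. One way to use it in a selection exercise is to encode it as data and record which criteria each candidate solution satisfies. The sketch below is hypothetical: it encodes only the "3. Technical Architecture" category (citation markers omitted), the candidate assessment is invented, and the unweighted coverage ratio is a placeholder, since this section does not prescribe a scoring or weighting scheme.

```python
"""Hypothetical sketch: encoding part of the final criteria hierarchy and
computing an unweighted per-item coverage ratio for one candidate solution."""
from dataclasses import dataclass


@dataclass(frozen=True)
class EvaluationItem:
    number: int
    name: str
    criteria: tuple  # criterion descriptions (strings)


# Category "3. Technical Architecture" from the final table.
TECHNICAL_ARCHITECTURE = (
    EvaluationItem(12, "Security management", (
        "Compliance with legal systems such as personal information protection",
        "Account and personal identification management",
        "Data encryption/protection",
        "Application security",
        "Risk/security evaluation",
        "Authentication",
        "Process traceability",
    )),
    EvaluationItem(13, "Architecture", (
        "On-premise/cloud",
        "Virtualisation support using VM/container technology",
        "Availability/disaster recovery capabilities",
        "Permission",
        "Network capacity",
        "Performance management capabilities",
        "Dual configuration availability",
        "Collaboration structure with customer internal systems",
        "Cloud architecture deployment standards (customer versus supplier)",
    )),
)


def coverage(items, satisfied):
    """Return {item number: share of its criteria found in `satisfied`}."""
    return {
        item.number: sum(c in satisfied for c in item.criteria) / len(item.criteria)
        for item in items
    }


if __name__ == "__main__":
    # Hypothetical assessment of one candidate RPA solution.
    candidate = {"Authentication", "Data encryption/protection", "On-premise/cloud"}
    for number, share in coverage(TECHNICAL_ARCHITECTURE, candidate).items():
        print(f"Item {number}: {share:.0%}")
```

Matching on exact criterion strings keeps the sketch short; a practical checklist would more likely assign stable identifiers to criteria so that rewording one does not break the match.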

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, S.-H. Development of Evaluation Criteria for Robotic Process Automation (RPA) Solution Selection. Electronics 2023, 12, 986. https://doi.org/10.3390/electronics12040986

Kim S-H. Development of Evaluation Criteria for Robotic Process Automation (RPA) Solution Selection. Electronics. 2023; 12(4):986. https://doi.org/10.3390/electronics12040986

Chicago/Turabian Style: Kim, Seung-Hee. 2023. "Development of Evaluation Criteria for Robotic Process Automation (RPA) Solution Selection" Electronics 12, no. 4: 986. https://doi.org/10.3390/electronics12040986