2D Camera-Based Air-Writing Recognition Using Hand Pose Estimation and Hybrid Deep Learning Model

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

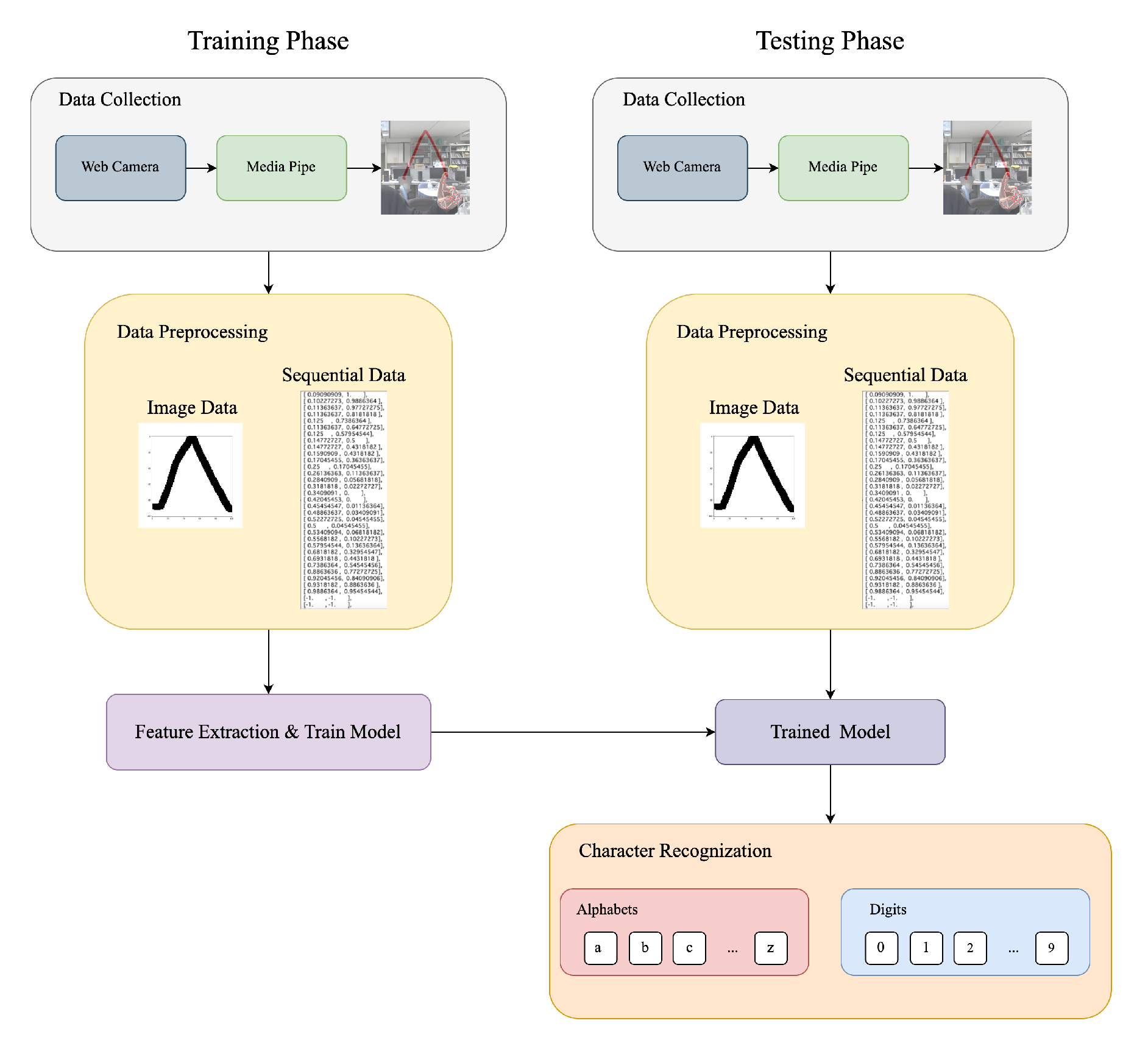

3.1. Proposed Air-Writing System

3.2. Dataset

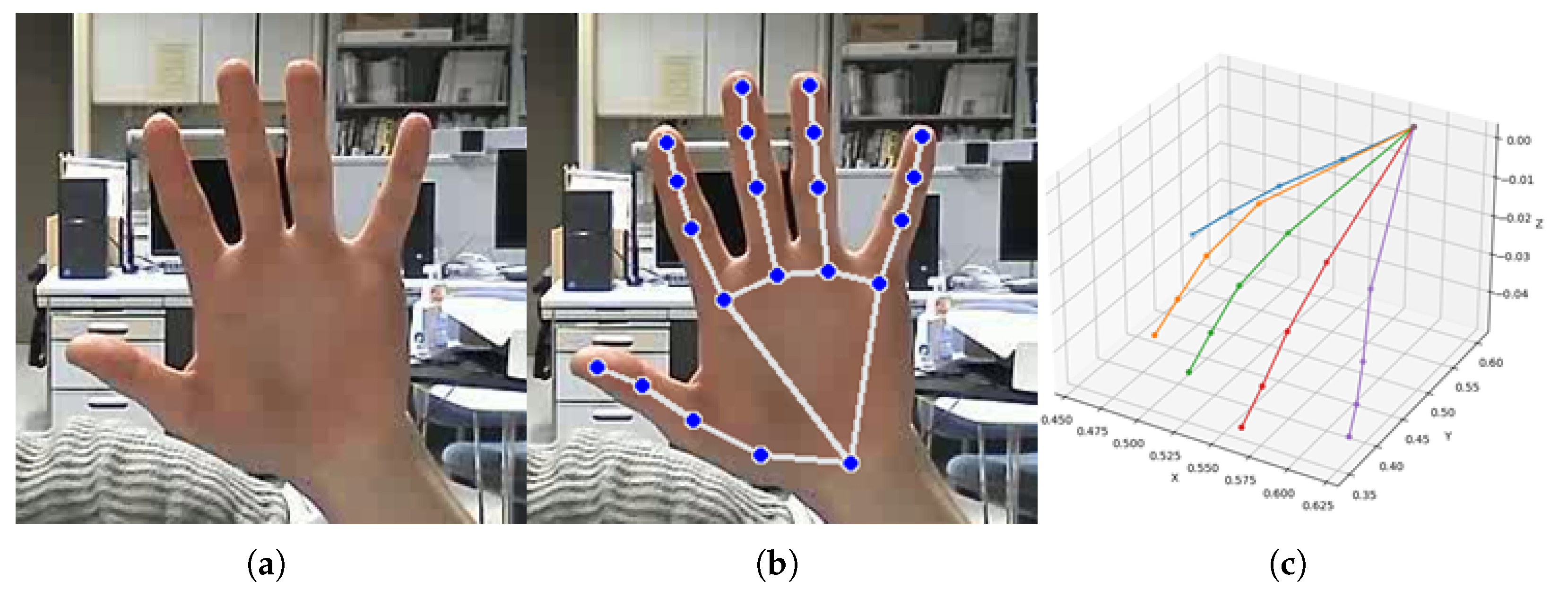

3.2.1. Hand Pose Estimation

3.2.2. Data Collection Procedure

3.2.3. Graffiti Characters Used for Character Recognition

3.2.4. Dataset Formation

3.3. Dataset Pre-Processing

3.3.1. Min-Max Normalization
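
A minimal sketch of min-max normalization, assuming each air-written sample is a list of (x, y) fingertip coordinates returned by the hand pose estimator. Each axis is rescaled to [0, 1] as x' = (x - x_min) / (x_max - x_min). The helper name `min_max_normalize` is hypothetical, and this is a generic illustration rather than the authors' implementation.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def min_max_normalize(points: List[Point]) -> List[Point]:
    """Rescale x and y independently into [0, 1] (hypothetical helper)."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    x_min, x_max = min(xs), max(xs)
    y_min, y_max = min(ys), max(ys)
    # Guard against a zero range, e.g. a stroke with no horizontal extent.
    x_range = (x_max - x_min) or 1.0
    y_range = (y_max - y_min) or 1.0
    return [((x - x_min) / x_range, (y - y_min) / y_range) for x, y in points]


if __name__ == "__main__":
    trajectory = [(120.0, 40.0), (160.0, 80.0), (200.0, 40.0)]
    print(min_max_normalize(trajectory))
    # [(0.0, 0.0), (0.5, 1.0), (1.0, 0.0)]
```

Scaling the two axes independently stretches narrow characters such as "1" or "I"; dividing both axes by the larger of the two ranges is an alternative that preserves the aspect ratio.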

3.3.2. Moving Average Calculation
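
One common form of this step is a simple moving average over consecutive trajectory points, which suppresses frame-to-frame jitter in the estimated fingertip position. The sketch below assumes a trailing window; the function `moving_average` and its default `window=3` are hypothetical choices, not values reported in the paper.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def moving_average(points: List[Point], window: int = 3) -> List[Point]:
    """Smooth a trajectory with a trailing simple moving average.

    Point i is averaged with up to `window - 1` preceding points, so the
    output has the same length as the input. `window` is an assumption.
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    smoothed: List[Point] = []
    for i in range(len(points)):
        # Shorter windows are used at the start of the stroke.
        chunk = points[max(0, i - window + 1): i + 1]
        n = len(chunk)
        smoothed.append((sum(p[0] for p in chunk) / n,
                         sum(p[1] for p in chunk) / n))
    return smoothed
```

For example, `moving_average([(0, 0), (3, 3), (6, 0)])` averages the last point with both predecessors, giving `(3.0, 1.0)`. A centered window would avoid the slight lag that a trailing window introduces, at the cost of needing future samples.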

3.3.3. Image Creation
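
Assuming the character image is produced by drawing the normalized trajectory onto a blank grid, a dependency-free sketch might look like the following. The 28 x 28 default size and the name `trajectory_to_image` are hypothetical; consecutive points are joined by sampling along each segment so that widely spaced samples from fast strokes do not leave gaps.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def trajectory_to_image(points: List[Point], size: int = 28) -> List[List[int]]:
    """Draw a [0, 1]-normalized trajectory into a size x size binary image."""
    image = [[0] * size for _ in range(size)]

    def to_pixel(p: Point) -> Tuple[int, int]:
        # x maps to the column and y to the row; y is assumed to grow
        # downward as in image coordinates, so no vertical flip is applied.
        col = max(0, min(size - 1, round(p[0] * (size - 1))))
        row = max(0, min(size - 1, round(p[1] * (size - 1))))
        return row, col

    pixels = [to_pixel(p) for p in points]
    if len(pixels) == 1:  # a lone point still marks one pixel
        r, c = pixels[0]
        image[r][c] = 1
    for (r0, c0), (r1, c1) in zip(pixels, pixels[1:]):
        # Enough steps to visit every pixel along the longer axis.
        steps = max(abs(r1 - r0), abs(c1 - c0), 1)
        for t in range(steps + 1):
            r = round(r0 + (r1 - r0) * t / steps)
            c = round(c0 + (c1 - c0) * t / steps)
            image[r][c] = 1
    return image
```

A diagonal stroke from (0, 0) to (1, 1) on a 4 x 4 grid, for instance, lights up exactly the main diagonal.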

3.3.4. Dilation
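
Morphological dilation thickens the one-pixel-wide strokes produced by rasterization. The plain-Python sketch below assumes a 3 x 3 square structuring element; the kernel shape, the `iterations` parameter, and the function name `dilate` are assumptions, not details taken from the paper.

```python
from typing import List


def dilate(image: List[List[int]], iterations: int = 1) -> List[List[int]]:
    """Binary dilation with an assumed 3x3 square structuring element.

    Each output pixel is 1 if any pixel in its 3x3 neighbourhood (clipped
    at the image border) is 1, so strokes grow by one pixel per iteration.
    """
    out = [row[:] for row in image]
    for _ in range(iterations):
        src = out
        h = len(src)
        w = len(src[0]) if h else 0
        out = [
            [
                int(any(
                    src[rr][cc]
                    for rr in range(max(0, r - 1), min(h, r + 2))
                    for cc in range(max(0, c - 1), min(w, c + 2))
                ))
                for c in range(w)
            ]
            for r in range(h)
        ]
    return out
```

Applied once to a 5 x 5 image with a single centre pixel set, the sketch yields a 3 x 3 block of ones; a second iteration fills the whole image.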

3.3.5. Padding
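
Assuming this step adds a constant border around the stroke image (padding a trajectory sequence to a fixed length is another possible reading), a minimal sketch is shown below. The border width `pad=2`, the fill `value=0`, and the name `pad_image` are hypothetical.

```python
from typing import List


def pad_image(image: List[List[int]], pad: int = 2, value: int = 0) -> List[List[int]]:
    """Surround an image with a constant border `pad` pixels wide.

    A blank margin keeps dilated strokes from touching the image edge.
    """
    h = len(image)
    w = len(image[0]) if h else 0
    blank_row = [value] * (w + 2 * pad)
    padded = [blank_row[:] for _ in range(pad)]  # top border
    for row in image:
        padded.append([value] * pad + list(row) + [value] * pad)
    padded.extend(blank_row[:] for _ in range(pad))  # bottom border
    return padded
```

Under these assumptions, a 28 x 28 image becomes 32 x 32 after padding.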

3.4. Hybrid Deep Neural Network Architecture

4. Experimental Setting and Evaluation Metrics
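
The result tables report accuracy, and one prior work [10] is reported by recall and precision. Assuming the standard definitions (accuracy = correct predictions / all predictions; per-class precision = TP / (TP + FP) and recall = TP / (TP + FN)), these can be computed as in the sketch below; the helper names are hypothetical.

```python
from typing import Hashable, Sequence, Tuple


def accuracy(y_true: Sequence[Hashable], y_pred: Sequence[Hashable]) -> float:
    """Fraction of samples whose predicted label equals the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall(y_true: Sequence[Hashable], y_pred: Sequence[Hashable],
                     label: Hashable) -> Tuple[float, float]:
    """One-vs-rest precision and recall for a single class `label`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == label and p == label for t, p in pairs)  # true positives
    fp = sum(t != label and p == label for t, p in pairs)  # false positives
    fn = sum(t == label and p != label for t, p in pairs)  # false negatives
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Multiplying these fractions by 100 gives percentages in the form used in the tables.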

5. Experimental Results

5.1. Performance Comparison between Our Proposed System and Similar Existing Methods

5.2. Validation of Our Proposed System

6. Discussion

7. Conclusions and Future Work Direction

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Amma, C.; Schultz, T. Airwriting: Bringing text entry to wearable computers. XRDS Crossroads, ACM Mag. Stud. 2013, 20, 50–55.

2. Yanay, T.; Shmueli, E. Air-writing recognition using smart-bands. Pervasive Mob. Comput. 2020, 66, 101183.

3. Garg, P.; Aggarwal, N.; Sofat, S. Vision based hand gesture recognition. Int. J. Comput. Inf. Eng. 2009, 3, 186–191.

4. Chen, M.; AlRegib, G.; Juang, B.H. Air-writing recognition—Part I: Modeling and recognition of characters, words, and connecting motions. IEEE Trans. Hum.-Mach. Syst. 2015, 46, 403–413.

5. Alam, M.S.; Kwon, K.C.; Alam, M.A.; Abbass, M.Y.; Imtiaz, S.M.; Kim, N. Trajectory-based air-writing recognition using deep neural network and depth sensor. Sensors 2020, 20, 376.

6. Murata, T.; Shin, J. Hand gesture and character recognition based on Kinect sensor. Int. J. Distrib. Sens. Netw. 2014, 10, 278460.

7. Amma, C.; Gehrig, D.; Schultz, T. Airwriting recognition using wearable motion sensors. In Proceedings of the 1st Augmented Human International Conference, Megève, France, 2–3 April 2010; pp. 1–8.

8. Hayakawa, S.; Goncharenko, I.; Gu, Y. Air Writing in Japanese: A CNN-based character recognition system using hand tracking. In Proceedings of the 2022 IEEE 4th Global Conference on Life Sciences and Technologies (LifeTech), Osaka, Japan, 7–9 March 2022; pp. 437–438.

9. Bastas, G.; Kritsis, K.; Katsouros, V. Air-writing recognition using deep convolutional and recurrent neural network architectures. In Proceedings of the 2020 17th International Conference on Frontiers in Handwriting Recognition (ICFHR), Dortmund, Germany, 8–10 September 2020; pp. 7–12.

10. Amma, C.; Georgi, M.; Schultz, T. Airwriting: Hands-free mobile text input by spotting and continuous recognition of 3D-space handwriting with inertial sensors. In Proceedings of the 2012 16th International Symposium on Wearable Computers, Newcastle, UK, 18–22 June 2012; pp. 52–59.

11. Arsalan, M.; Santra, A. Character recognition in air-writing based on network of radars for human-machine interface. IEEE Sens. J. 2019, 19, 8855–8864.

12. Sonoda, T.; Muraoka, Y. A letter input system based on handwriting gestures. Electron. Commun. Jpn. (Part III Fundam. Electron. Sci.) 2006, 89, 53–64.

13. Setiawan, A.; Pulungan, R. Deep Belief Networks for Recognizing Handwriting Captured by Leap Motion Controller. Int. J. Electr. Comput. Eng. 2018, 8, 4693–4704.

14. Chen, H.; Ballal, T.; Muqaibel, A.H.; Zhang, X.; Al-Naffouri, T.Y. Air writing via receiver array-based ultrasonic source localization. IEEE Trans. Instrum. Meas. 2020, 69, 8088–8101.

15. Saez-Mingorance, B.; Mendez-Gomez, J.; Mauro, G.; Castillo-Morales, E.; Pegalajar-Cuellar, M.; Morales-Santos, D.P. Air-Writing Character Recognition with Ultrasonic Transceivers. Sensors 2021, 21, 6700.

16. Alam, M.; Kwon, K.C.; Md Imtiaz, S.; Hossain, M.B.; Kang, B.G.; Kim, N. TARNet: An Efficient and Lightweight Trajectory-Based Air-Writing Recognition Model Using a CNN and LSTM Network. Hum. Behav. Emerg. Technol. 2022, 2022, 6063779.

17. Zhang, F.; Bazarevsky, V.; Vakunov, A.; Tkachenka, A.; Sung, G.; Chang, C.L.; Grundmann, M. MediaPipe Hands: On-device real-time hand tracking. arXiv 2020, arXiv:2006.10214.

18. Yoon, H.S.; Soh, J.; Min, B.W.; Yang, H.S. Recognition of alphabetical hand gestures using hidden Markov model. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 1999, 82, 1358–1366.

19. Költringer, T.; Grechenig, T. Comparing the immediate usability of Graffiti 2 and virtual keyboard. In Proceedings of the CHI’04 Extended Abstracts on Human Factors in Computing Systems, Vienna, Austria, 24–29 April 2004; pp. 1175–1178.

20. Lee, S.K.; Kim, J.H. Air-Text: Air-Writing and Recognition System. In Proceedings of the 29th ACM International Conference on Multimedia, Virtual, 20–24 October 2021; pp. 1267–1274.

21. Salman, A.G.; Kanigoro, B. Visibility forecasting using autoregressive integrated moving average (ARIMA) models. Procedia Comput. Sci. 2021, 179, 252–259.

22. Kothapalli, S.; Totad, S. A real-time weather forecasting and analysis. In Proceedings of the 2017 IEEE International Conference on Power, Control, Signals and Instrumentation Engineering (ICPCSI), Chennai, India, 21–22 September 2017; pp. 1567–1570.

23. Choudhury, A.; Sarma, K.K. A CNN-LSTM based ensemble framework for in-air handwritten Assamese character recognition. Multimed. Tools Appl. 2021, 80, 35649–35684.

24. Chen, M.; AlRegib, G.; Juang, B.H. 6DMG: A new 6D motion gesture database. In Proceedings of the 3rd Multimedia Systems Conference, Chapel Hill, NC, USA, 22–24 February 2012; pp. 83–88.

25. Xu, S.; Xue, Y. A long term memory recognition framework on multi-complexity motion gestures. In Proceedings of the 2017 14th IAPR International Conference on Document Analysis and Recognition (ICDAR), Kyoto, Japan, 9–15 November 2017; Volume 1, pp. 201–205.

| Authors | Device | Methods | Recognition Target | Accuracy (%) |
|---|---|---|---|---|
| Murata et al. [6] | Kinect | DP matching | Alphabet | 98.9 |
| | | | Digit | 95.0 |
| Amma et al. [7] | Wearable | HMM | Alphabet | 94.8 |
| | | | Digit | 95.0 |
| Hayakawa et al. [8] | PS Move | CNN | Japanese | 98.2 |
| Bastas et al. [9] | Leap Motion | LSTM | Digit | 99.5 |
| Amma et al. [10] | Wearable | HMM | Word | Recall: 99.0; Precision: 25.0 |
| Arsalan and Santra [11] | 60-GHz Wave Radars | ConvLSTM-CT | Alphabet, Digit | 98.3 |
| | | DCNN | | 98.3 |
| Yanay and Shmueli [2] | Smart-bands | DTW + KNN | Alphabet | 98.2 |
| Sonoda and Muraoka [12] | Video Camera, Wearable Computer | DP matching | Alphabet, Digit | 75.3 |
| Setiawan and Pulungan [13] | Leap Motion | DBN | Alphabet | 99.7 |
| | | | Digit | 96.3 |
| Chen et al. [14] | Ultrasonic Transmitter | ORM | Alphabet | 96.3 |
| Saez-Mingorance et al. [15] | Ultrasonic Transceivers | ConvLSTM | Digit, Alphabet | 99.5 |
| Alam et al. [16] | Intel RealSense Camera | TARNet | Alphabet | 98.7 |
| | | | Digit | 99.6 |
| | Smart Band | | Alphabet | 95.6 |
| | Leap Motion | | Alphabet | 99.9 |
| Alam et al. [5] | Intel RealSense Camera | LSTM | Digit | 99.2 |
| | | CNN | Digit | 99.1 |
| | Wii Remote Controller | LSTM | Alphabet | 99.3 |
| | | CNN | Alphabet | 99.3 |
| Chen et al. [4] | Wii Remote Controller | HMM | Word | 99.2 |
| | | | Alphabet | 98.1 |

| Character | Number of Samples | Character | Number of Samples |
|---|---|---|---|
| A | 127 | N | 121 |
| B | 125 | O | 126 |
| C | 126 | P | 116 |
| D | 126 | Q | 116 |
| E | 126 | R | 121 |
| F | 122 | S | 122 |
| G | 120 | T | 122 |
| H | 121 | U | 121 |
| I | 121 | V | 121 |
| J | 120 | W | 121 |
| K | 121 | X | 123 |
| L | 120 | Y | 121 |
| M | 120 | Z | 122 |

| Digit | Number of Samples |
|---|---|
| 0 | 116 |
| 1 | 122 |
| 2 | 122 |
| 3 | 121 |
| 4 | 122 |
| 5 | 121 |
| 6 | 123 |
| 7 | 123 |
| 8 | 121 |
| 9 | 121 |
| Total | 1212 |

| Recognition Target | Accuracy (%) |
|---|---|
| Alphabet | 99.3 |
| Digit | 99.5 |

| Authors | Method | Recognition Target | Accuracy (%) |
|---|---|---|---|
| Lee and Kim [20] | TPS-ResNet-BiLSTM-Attn | Digit | 96.0 |
| | | Word | 79.7 |
| Choudhury et al. [23] | CNN-LSTM | Assamese | 98.1 |
| | | Digit | 98.6 |
| Yoon et al. [18] | HMM | Alphabet | 93.8 |
| Proposed method | CNN-BiLSTM | Alphabet | 99.3 |
| | | Digit | 99.5 |

| Characters | Samples per Character | Total Samples |
|---|---|---|
| A–Z | About 250 | 6501 |
| 0–9 | 60 | 600 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Watanabe, T.; Maniruzzaman, M.; Hasan, M.A.M.; Lee, H.-S.; Jang, S.-W.; Shin, J. 2D Camera-Based Air-Writing Recognition Using Hand Pose Estimation and Hybrid Deep Learning Model. Electronics 2023, 12, 995. https://doi.org/10.3390/electronics12040995

Watanabe T, Maniruzzaman M, Hasan MAM, Lee H-S, Jang S-W, Shin J. 2D Camera-Based Air-Writing Recognition Using Hand Pose Estimation and Hybrid Deep Learning Model. Electronics. 2023; 12(4):995. https://doi.org/10.3390/electronics12040995

Chicago/Turabian Style: Watanabe, Taiki, Md. Maniruzzaman, Md. Al Mehedi Hasan, Hyoun-Sup Lee, Si-Woong Jang, and Jungpil Shin. 2023. "2D Camera-Based Air-Writing Recognition Using Hand Pose Estimation and Hybrid Deep Learning Model" Electronics 12, no. 4: 995. https://doi.org/10.3390/electronics12040995