A Formally Reliable Cognitive Middleware for the Security of Industrial Control Systems

Abstract

1. Introduction

2. A Specification Language of ARMET

- The control level describes the control structure of each component (e.g., sub-components, control flow and data flow links), which is defined by the syntactic domain “StrModSeq”, while the control flow can be further elaborated with the syntactic domain “SplModSeq”.

- The behavior level describes the actual behavioral specification of the methods of each component, which is defined by the syntactic domain “BehModSeq”.

- The description of each component type consists of:

  - (a) its interface, which is comprised of:

    - a list of inputs

    - a list of outputs

    - a list of the resources it uses (e.g., files it reads, the code in memory that represents this component)

    - a list of sub-components required for the execution of the subject component

    - a list of events that represent entry into the component

    - a list of events that represent exit from the component

    - a list of events that are allowed to occur during any execution of this component

    - a set of conditional probabilities between the possible modes of the resources and the possible modes of the whole component

    - a list of known vulnerabilities of the component

  - (b) a structural model that is a list of sub-components, some of which might be splits or joins, connected by:

    - data-flows between linking ports of the sub-components (outputs of one to inputs of another)

    - control-flow links between cases of a branch and a component that will be enabled if that branch is taken.
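The interface and structural model listed above can be pictured as a plain record. The following is a minimal Python sketch, not ARMET's own representation: the class `ComponentType`, the helper `dangling_dataflows`, the event names, and the port names `delta` and `new-temp` on the links are hypothetical, while the component and object names come from the room temperature controller used as the running example.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Port = Tuple[str, str]  # (component name, port name)


@dataclass
class ComponentType:
    """Hypothetical record mirroring the interface/structure lists above."""
    name: str
    inputs: List[str] = field(default_factory=list)
    outputs: List[str] = field(default_factory=list)
    resources: List[str] = field(default_factory=list)
    components: List[str] = field(default_factory=list)
    entry_events: List[str] = field(default_factory=list)
    exit_events: List[str] = field(default_factory=list)
    allowable_events: List[str] = field(default_factory=list)
    vulnerabilities: List[str] = field(default_factory=list)
    dataflows: List[Tuple[Port, Port]] = field(default_factory=list)

    def dangling_dataflows(self) -> List[Tuple[Port, Port]]:
        """Data-flows whose endpoints name an undeclared sub-component."""
        known = set(self.components) | {self.name}
        return [(src, dst) for src, dst in self.dataflows
                if src[0] not in known or dst[0] not in known]


control = ComponentType(
    name="control",
    inputs=["sens-temp", "set-temp"],
    outputs=["com"],
    components=["compute-epsilon", "temp-up", "temp-down"],
    entry_events=["control-entry"],  # hypothetical event name
    exit_events=["control-exit"],    # hypothetical event name
    dataflows=[
        (("control", "set-temp"), ("compute-epsilon", "set-temp")),
        (("compute-epsilon", "delta"), ("temp-up", "delta")),
        (("temp-up", "new-temp"), ("control", "com")),
    ],
)

print(control.dangling_dataflows())  # [] : every link joins declared components
```

A well-formedness check of this kind is one simple use of the structural model; the conditional probabilities and the control-flow links are omitted here for brevity.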

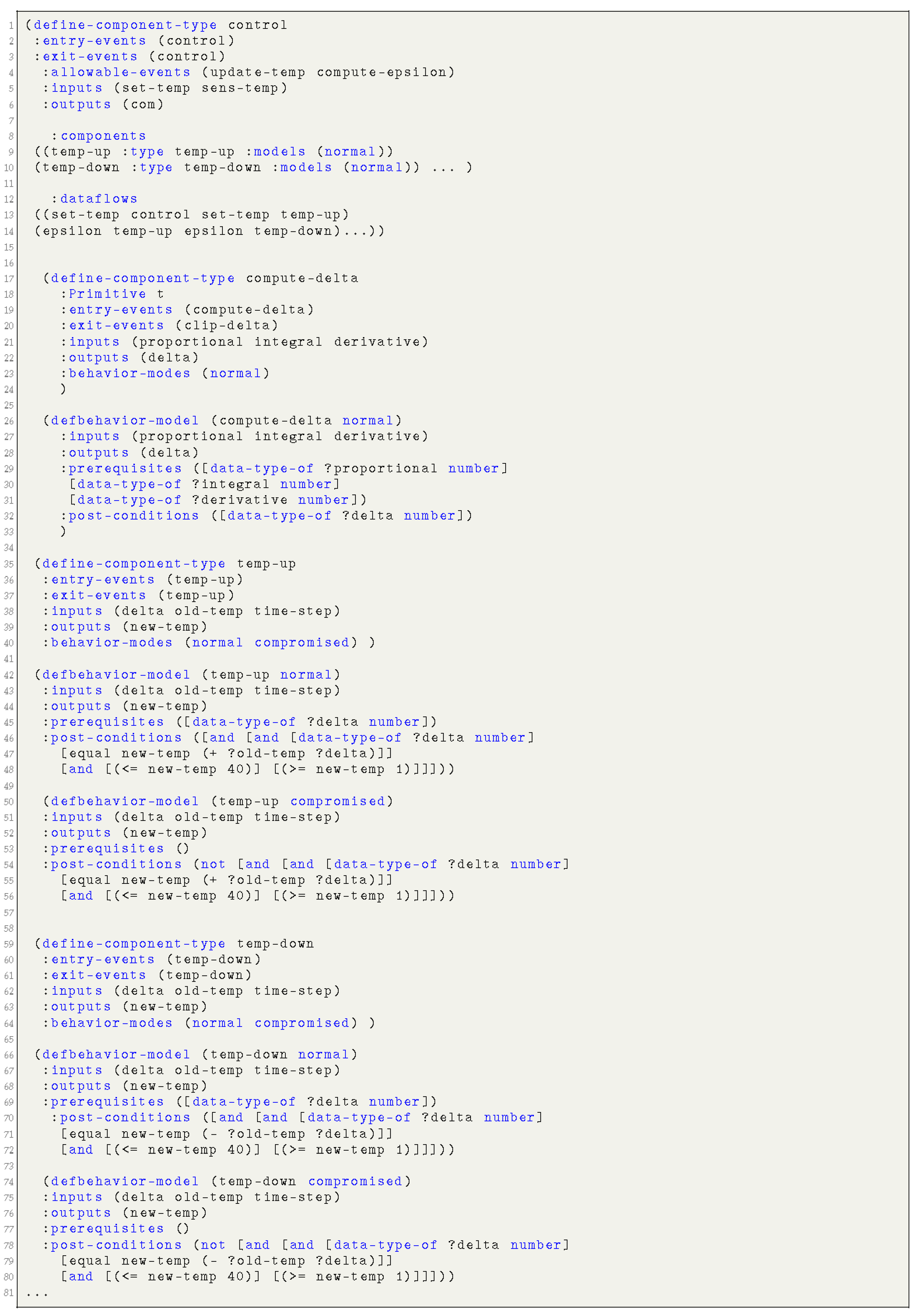

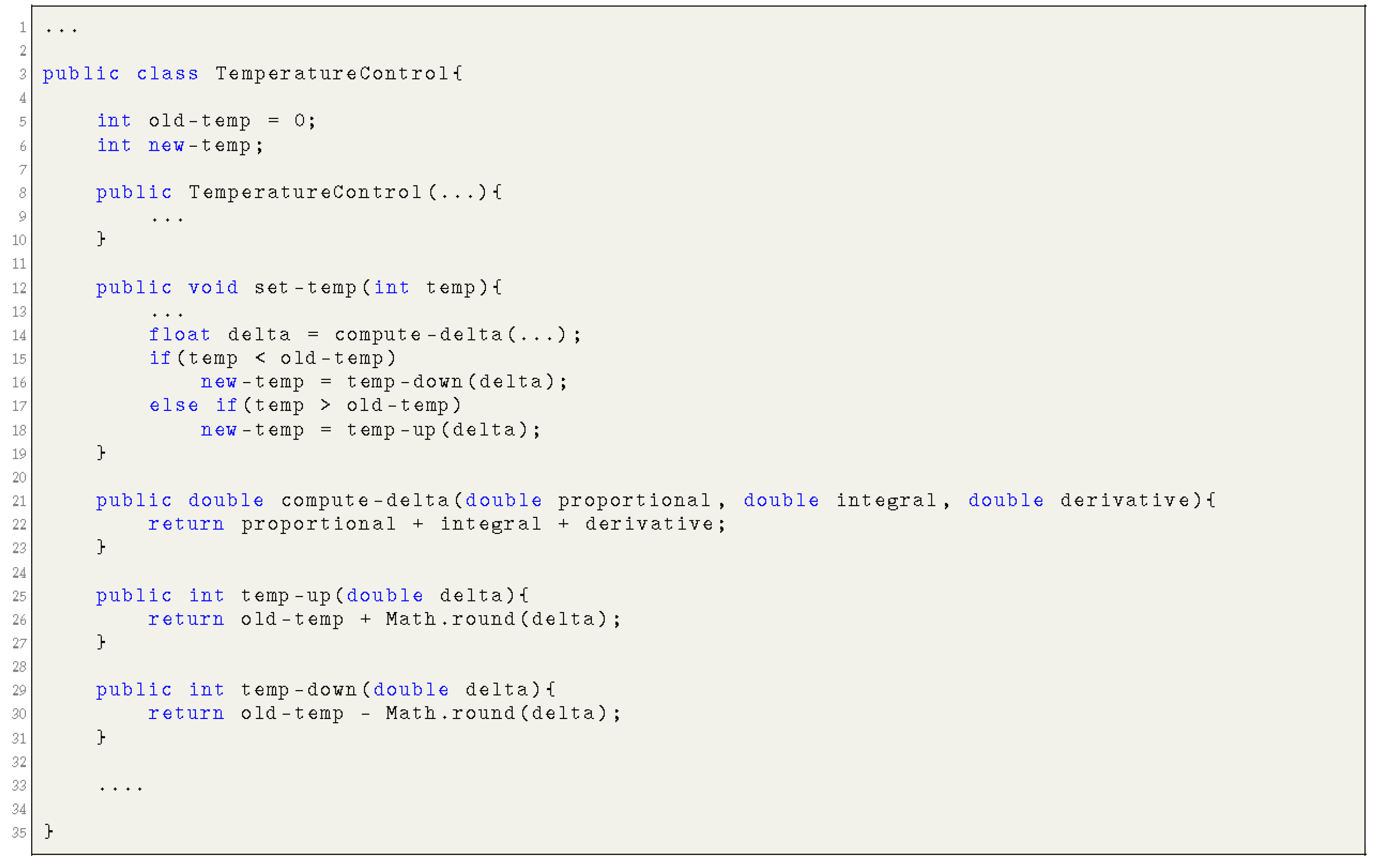

The description of the component type is represented by the syntactic domain “StrMod”, which is defined as follows:

StrMod ::= define-ensemble CompName
:entry-events :auto | (EvntSeq)
:exit-events (EvntSeq)
:allowable-events (EvntSeq)
:inputs (ObjNameSeq)
:outputs (ObjNameSeq)
:components (CompSeq)
:controlflows (CtrlFlowSeq)
:splits (SpltCFSeq)
:joins (JoinCFSeq)
:dataflows (DataFlowSeq)
:resources (ResSeq)
:resource-mapping (ResMapSeq)
:model-mappings (ModMapSeq)
:vulnerabilities (VulnrabltySeq)

Example 1. For instance, a room temperature controller, control, periodically receives the current temperature value (sens-temp) from a sensor. The controller may also receive a user command (set-temp) to set (either increase or decrease) the current temperature of the room. Based on the received user command, the controller either raises the temperature through the sub-component temp-up or reduces the temperature through the sub-component temp-down, after computing the error (compute-epsilon) of the given command, as shown in Figure 2. Furthermore, the controller issues a command as an output (com), which contains an updated temperature value. Figure 3 reflects the corresponding implementation parts of the controller.

- The behavioral specification of a component (a component type may have one normal behavioral specification and many abnormal behavioral specifications, each one representing some failure mode) consists of:

- inputs and outputs

- preconditions on the inputs (logical expressions involving one or more of the inputs)

- postconditions (logical expressions involving one or more of the outputs and the inputs)

- allowable events during the execution in this mode

The behavioral specification of a component is represented by a corresponding syntactic domain “BehMod”, as follows:

BehMod ::= defbehavior-model (CompName normal | compromised)
:inputs (ObjNameSeq)
:outputs (ObjNameSeq)
:allowable-events (EvntSeq)
:prerequisites (BehCondSeq)
:post-conditions (BehCondSeq)

Example 2. For instance, in our temperature control example, the normal and compromised behavior of the controller component temp-up is modeled in Figure 2. The normal behavior of the temperature-raising component temp-up describes: (i) the input conditions (prerequisites), i.e., the component receives a valid input new-temp; and (ii) the output conditions (post-conditions), i.e., the newly computed temperature new-temp is equal to the sum of the current temperature old-temp and the computed delta (error). The computed temperature respects the temperature range (1-40). Similarly, the compromised behavior of the component illustrates the corresponding input and output conditions. Figure 3 reflects the corresponding implementation parts of the temp-up component.

| ⟦BehMod⟧(e)(e’, s, s’) ⇔ |

| ∀ e∈ Environment, nseq ∈ EvntNameSeq, eseq ∈ ObsEvent*, inseq, outseq ∈ Value: |

| ⟦ObjNameSeq⟧(e)(inState(s), inseq) ∧ ⟦BehCondSeq⟧(e) (inState(s)) ∧ |

| ⟦EvntSeq⟧(e) (e, s, s’, nseq, eseq) |

| ⟦ObjNameSeq⟧(e)(s’, outseq) ∧ ⟦BehCondSeq⟧(e) (s’) ∧ |

| ∃ c ∈ Component: ⟦CompName⟧(e)(inValue(c)) ∧ |

| IF eqMode(inState(s’), “normal”) THEN |

| LET sbeh = c[1], nbeh = <inseq, outseq, s, s’>, cbeh = c[3] IN |

| e’ = push(e store(inState(s’))(⟦CompName⟧(e)), c(sbeh, nbeh, cbeh, s, s’)) |

| END |

| ELSE |

| LET sbeh = c[1], nbeh = c[2], cbeh = <inseq, outseq, s, s’> IN |

| e’ = push(e store(inState(s’))(⟦CompName⟧(e)), c(sbeh, nbeh, cbeh, s, s’)) |

| END |

| END |

- The inputs “ObjNameSeq” evaluate to a sequence of values (inseq) in a given environment e and a given state s, which satisfy the corresponding pre-conditions “BehCondSeq” in the same e and s.

- The allowable events happen and their evaluation results in a new environment and a post-state, together with the auxiliary sequences nseq and eseq.

- The outputs “ObjNameSeq” evaluate to a sequence of values (outseq) in the new environment and the post-state s’, which satisfy the corresponding post-conditions “BehCondSeq” in the same environment. The post-state s’ and the resulting environment e’ may be constructed such that:

  - if the post-state is “normal”, then e’ is an update to the normal behavior “nbeh” of the component “CompName” in the environment e; otherwise,

  - e’ is an update to the compromised behavior “cbeh” of the component.
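The semantics above can be read operationally: check the prerequisites on the inputs and the post-conditions on the outputs, then record the observed behavior under the normal or the compromised slot of the component. The following Python sketch illustrates that reading for temp-up. The functions `normal_pre`, `normal_post` and `observe`, the dictionary-based environment, and the choice to derive the mode from the condition checks are assumptions made for illustration; only the range (1-40), the sum condition, and the names nbeh and cbeh come from the text.

```python
import math
from typing import Dict, Tuple

LOW, HIGH = 1.0, 40.0  # temperature range (1-40) from Example 2

Values = Dict[str, float]


def normal_pre(inputs: Values) -> bool:
    # Assumed prerequisite: the current temperature is a valid reading.
    return LOW <= inputs["old-temp"] <= HIGH


def normal_post(inputs: Values, outputs: Values) -> bool:
    # new-temp = old-temp + delta, and new-temp stays within the range.
    expected = inputs["old-temp"] + inputs["delta"]
    return (math.isclose(outputs["new-temp"], expected)
            and LOW <= outputs["new-temp"] <= HIGH)


def observe(env: dict, component: str,
            inputs: Values, outputs: Values) -> Tuple[str, dict]:
    """Return the mode and a new environment with the matching slot updated."""
    ok = normal_pre(inputs) and normal_post(inputs, outputs)
    mode = "normal" if ok else "compromised"
    slot = "nbeh" if ok else "cbeh"
    record = dict(env.get(component, {}))   # copy, so env is left unchanged
    record[slot] = (inputs, outputs)
    new_env = dict(env)
    new_env[component] = record
    return mode, new_env


mode1, env1 = observe({}, "temp-up",
                      {"old-temp": 20.0, "delta": 1.5}, {"new-temp": 21.5})
mode2, env2 = observe(env1, "temp-up",
                      {"old-temp": 39.0, "delta": 5.0}, {"new-temp": 44.0})
print(mode1, mode2)  # normal compromised
```

The second observation satisfies the sum condition but leaves the permitted range, so it is stored as compromised behavior while the earlier normal record is preserved.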

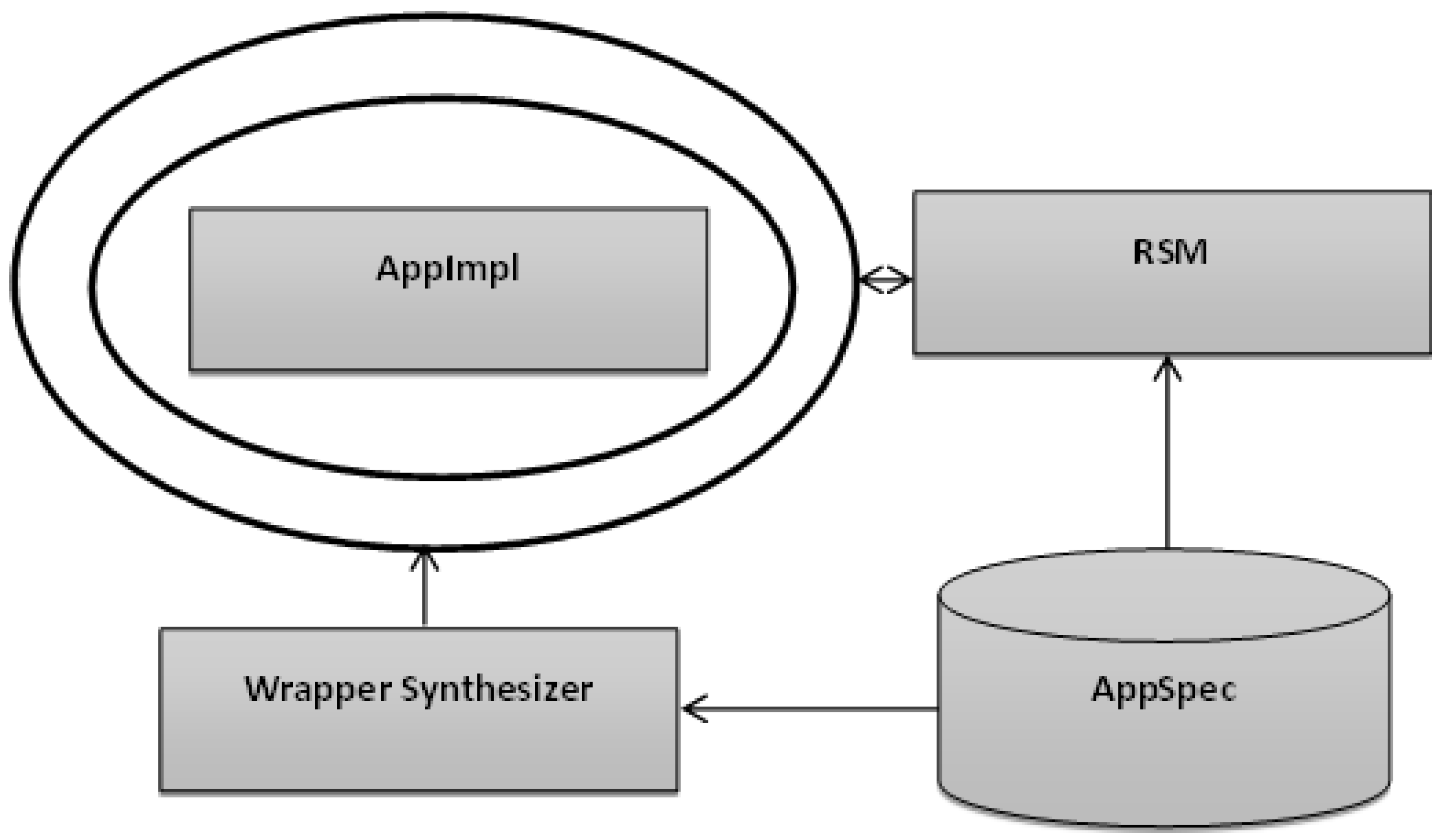

3. An Execution Monitor of ARMET

- If the event is a “method entry”, the execution monitor checks if this is one of the “entry events” of the corresponding component in the “ready” state. If so, once the data has been received and the respective preconditions have been checked successfully, the data is applied to the input port of the component and the mode of the execution state is changed to “running”.

- If the event is a “method exit”, the execution monitor checks if this is one of the “exit events” of the component in the “running” state. If so, it changes its state into “completed” mode, collects the data from the output port of the component, and checks the corresponding postconditions. Should the checks fail, the execution monitor enables the diagnosis engine.

- If the event is one of the “allowable events” of the component, it continues execution.

- If the event is an unexpected event (i.e., it is neither an “entry event”, an “exit event”, nor in the “allowable events”), the execution monitor starts its diagnosis.
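The four cases above amount to a small dispatch on the incoming event. The sketch below is a simplified Python illustration for a single component without sub-components. The class `MonitoredComponent`, the function `step`, the return values "ok" and "diagnose", and all event names are hypothetical. Treating a failed precondition as a trigger for diagnosis is also an assumption, since the list above only spells out the failing case for postconditions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set

Data = Dict[str, float]


@dataclass
class MonitoredComponent:
    entry_events: Set[str]
    exit_events: Set[str]
    allowable_events: Set[str]
    precondition: Callable[[Data], bool]
    postcondition: Callable[[Data, Data], bool]
    mode: str = "ready"
    inputs: Data = field(default_factory=dict)


def step(c: MonitoredComponent, event: str, data: Data) -> str:
    """Process one runtime event; return 'ok' or 'diagnose'."""
    if event in c.entry_events and c.mode == "ready":
        if not c.precondition(data):
            return "diagnose"          # assumption: failed precondition
        c.inputs = data                # apply data to the input port
        c.mode = "running"
        return "ok"
    if event in c.exit_events and c.mode == "running":
        c.mode = "completed"
        return "ok" if c.postcondition(c.inputs, data) else "diagnose"
    if event in c.allowable_events:
        return "ok"                    # allowable event: continue execution
    return "diagnose"                  # unexpected event


temp_up = MonitoredComponent(
    entry_events={"temp-up-entry"},
    exit_events={"temp-up-exit"},
    allowable_events={"read-sensor"},
    precondition=lambda d: 1 <= d["old-temp"] <= 40,
    postcondition=lambda i, o: o["new-temp"] == i["old-temp"] + i["delta"],
)

trace = [
    ("temp-up-entry", {"old-temp": 20, "delta": 2}),
    ("read-sensor", {}),
    ("temp-up-exit", {"new-temp": 22}),
    ("open-socket", {}),               # not declared anywhere: unexpected
]
results = [step(temp_up, ev, d) for ev, d in trace]
print(results)  # ['ok', 'ok', 'ok', 'diagnose']
```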

| ∀ app ∈ AppImpl, sam ∈ AppSpec, c ∈ Component, |

| s, s’ ∈ State, t, t’ ∈ States, d, d’ ∈ Environments, e, e’ ∈ Environment, rte ∈ RTEvent: |

| ⟦sam⟧(d)(d’, t, t’) ∧ ⟦app⟧(e)(e’, s, s’) ∧ startup(s, app) ∧ isTop(c, ⟦app⟧(e)(e’, s, s’)) ∧ |

| setMode(s, “running”) ∧ arrives(rte, s) ∧ equals(t, s) ∧ equals(d, e) |

| ⇒ |

| ∀ p, p’ ∈ Environment*, m, n ∈ : equals(m(0), s) ∧ equals(p(0), e) |

| ⇒ |

| ∃ k ∈ , p, p’ ∈ Environment*, m, n ∈ : |

| ∀ i ∈ : monitors(i, rte, c, p, p’, m, n) ∧ |

| ( ( eqMode(n(k), “completed”) ∧ eqFlag(n(k), “normal”) ∧ equals(s’, n(k) ) |

| ∨ |

| eqFlag(n(k), “compromised”) ) |

| ⇒ |

| enableDiagnosis(p’(k))(n(k), inBool(true)) ∧ equals(s’, n(k)) ) |

| monitors ⊂ ℕ × RTEvent × Component × Environment* × Environment* × State* × State* |

| monitors(i, ⟦rte⟧, ⟦c⟧, e, e’, s, s’) ⇔ |

| ( eqMode(s(i), “running”) ∨ eqMode(s(i), “ready”) ) ∧ ⟦c⟧(e(i))(e’(i), s(i), s’(i)) ∧ |

| ∃ oe ∈ ObEvent: equals(rte, store(⟦name(rte)⟧)(e(i))) ∧ |

| IF entryEvent(oe, c) THEN |

| data(c, s(i), s’(i)) ∧ |

| ( preconditions(c, e(i), e’(i), s(i), s’(i), “compromised”) ⇒ equals(s(i+1), s(i)) ∧ equals(s’(i+1), s(i+1)) |

| ∧ setFlag(inState(s’(i+1)), “compromised”) ) ∨ ( preconditions(c, e(i), e’(i), s(i), s’(i), “normal”) |

| ⇒ setMode(s(i), “running”) ∧ |

| LET cseq = components(c) IN |

| equals(s(i+1), s’(i)) ∧ equals(e(i+1), e’(i)) ∧ |

| ∀ c1 ∈ cseq, rte1 ∈ RTEvent: |

| arrives(rte1, s(i+1)) ∧ monitor(i+1, rte1, c1, e(i+1), e’(i+1), s(i+1), s’(i+1)) |

| END ) |

| ELSE IF exitEvent(oe, c) THEN |

| data(c, s(i), s’(i)) ∧ eqMode(inState(s’(i)), “completed”) ∧ |

| ( postconditions(c, e(i), e’(i), s(i), s’(i), “compromised”) ⇒ equals(s(i+1), s(i)) ∧ equals(s’(i+1), s(i+1)) |

| ∧ setFlag(inState(s’(i+1)), “compromised”) ) ∨ |

| ( postconditions(c, e(i), e’(i), s(i), s’(i), “normal”) ⇒ equals(s(i+1), s’(i)) ∧ equals(e(i+1), e’(i)) ∧ |

| setMode(inState(s’(i+1)), “completed”) ) |

| ELSE IF allowableEvent(oe, c) THEN equals(s(i+1), s’(i)) ∧ equals(e(i+1), e’(i)) |

| ELSE equals(s(i+1), s(i)) ∧ equals(s’(i+1), s(i+1)) ∧ setFlag(inState(s’(i+1)), “compromised”) |

| END |

- the observation number i, with respect to the iteration of a component

- an observation (runtime event) rte

- the corresponding component c under observation

- a sequence of pre-environments e

- a sequence of post-environments e’

- a sequence of pre-states s

- a sequence of post-states s’

- The prediction or observation is an entry event of the component c and it waits until the complete data for the component c arrives. If this occurs then either:

  - Preconditions of “normal” behavior of the component hold. If so, the subnetwork of the component is initiated and the components in the subnetwork are monitored iteratively with the corresponding arrival of the observation, or

  - Preconditions of “compromised” behavior of the component hold. In this case, the state is marked as “compromised” and returns.

- The observation is an exit event and, after the completion of the data arrival, the postconditions hold and the resulting state is marked as “completed”.

- The observation is an allowable event and just continues the execution.

- The observation is an unexpected event (or any of the above does not hold) and the state is marked as “compromised”, and returns.

4. Proof of Behavioral Correctness

4.1. Soundness

- for the pre-state of the program, there is an equivalent safe pre-state for which the specification can be executed and the monitor can be observed and

- if we execute the specification in an equivalent safe pre-state and observe the monitor at any arbitrary (combined) post-state, then

  - either there is no alarm, and then the post-state is safe and the program execution (post-state) is semantically consistent with the specification execution (post-state)

  - or there is an alarm, and then the post-state is compromised and the program execution (post-state) and the specification execution (post-state) are semantically inconsistent.

| Soundness ⊆(AppImpl × AppSpec × Bool) |

| Soundness(, , b) ⇔ |

| ∀ e∈ Environment, e, e’ ∈ Environment, s, s’ ∈ State: consistent(e, e) ∧ consistent(, ) ∧ |

| ⟦⟧(e)(e’, s, s’) ∧ eqMode(s, "normal") |

| ⇒ |

| ∃ t, t’ ∈ State, e’ ∈ Environment: equals(s, t) ∧ ⟦⟧(e)(e’, t, t’) ∧ monitor(, )(e;e)(s;t, s’;t’) ∧ |

| ∀ t, t’ ∈ State, e’ ∈ Environment: equals(s, t) ∧ ⟦⟧(e)(e’, t, t’) ∧ monitor(, )(e;e)(s;t, s’;t’) |

| ⇒ |

| LET b = eqMode(s’, "normal") IN |

| IF b = True THEN equals(s’, t’) ELSE ¬ equals(s’, t’) |

- a specification environment (e) is consistent with a run-time environment (e), and

- a target system is consistent with its specification, and

- in a given run-time environment (e), execution of the system transforms the pre-state (s) into a post-state (s’), and

- the pre-state (s) is safe, i.e., the state is in “normal” mode,

- there exist the pre- and post-states (t and t’, respectively) and the environment (e’) of the specification execution such that, in a given specification environment (e), the execution of the specification transforms the pre-state (t) into a post-state (t’)

- the pre-states s and t are equal and monitoring of the system transforms the combined pre-state (s; t) into a combined post-state (s’; t’)

- if both of the following occur: in a given specification environment (e), execution of the specification transforms the pre-state (t) into a post-state (t’); and the pre-states s and t are equal and monitoring of the system transforms the pre-state (s) into a post-state (s’), then either:

  - there is no alarm (b is True), the post-state s’ of the program execution is safe, and the resulting states s’ and t’ are semantically equal, or

  - the security monitor alarms (b is False), the post-state s’ of the program execution is compromised, and the resulting states s’ and t’ are semantically not equal.

4.2. Completeness

- for the pre-state of the program, there is an equivalent safe pre-state for which the specification can be executed and the monitor can be observed and

- if we execute the specification in an equivalent safe pre-state and observe the monitor at any arbitrary (combined) post-state, then

  - either the program execution (post-state) is semantically consistent with the specification execution (post-state), in which case there is no alarm and the program execution is safe

  - or the program execution (post-state) and the specification execution (post-state) are semantically inconsistent, in which case there is an alarm and the program execution has been compromised.

| Completeness ⊆(AppImpl × AppSpec × Bool) |

| Completeness(, , b) ⇔ |

| ∀ e∈ Environment, e, e’ ∈ Environment, s, s’ ∈ State: consistent(e, e) ∧ consistent(, ) ∧ |

| ⟦⟧(e)(e’, s, s’) ∧ eqMode(s, "normal") |

| ⇒ |

| ∃ t, t’ ∈ State, e’ ∈ Environment: equals(s, t) ∧ ⟦⟧(e)(e’, t, t’) ∧ monitor(, )(e;e)(s;t, s’;t’) ∧ |

| ∀ t, t’ ∈ State, e’ ∈ Environment: equals(s, t) ∧ ⟦⟧(e)(e’, t, t’) ∧ monitor(, )(e;e)(s;t, s’;t’) |

| ⇒ |

| IF equals(s’, t’) THEN b = True ∧ b = eqMode(s’, “normal”) |

| ELSE b = False ∧ b = eqMode(s’, "normal") |

- a specification environment (e) is consistent with a run-time environment (e), and

- a target system is consistent with its specification, and

- in a given run-time environment (e), execution of the system transforms the pre-state (s) into a post-state (s’), and

- the pre-state (s) is safe, i.e., the state is in “normal” mode,

- there exist the pre- and post-states (t and t’, respectively) and the environment (e’) of the specification execution such that, in a given specification environment (e), execution of the specification transforms the pre-state (t) into a post-state (t’)

- the pre-states s and t are equal and monitoring of the system transforms the combined pre-state (s; t) into a combined post-state (s’; t’)

- if both of the following occur: in a given specification environment (e), the execution of the specification transforms the pre-state (t) into a post-state (t’); and the pre-states s and t are equal and monitoring of the system transforms the pre-state (s) into a post-state (s’), then either:

  - the resulting two post-states s’ and t’ are semantically equal and there is no alarm, or

  - the resulting two post-states s’ and t’ are semantically not equal and the security monitor alarms.
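The conclusions of the soundness and completeness statements can be exercised on a finite sample of recorded runs. The Python sketch below is a testing aid under stated assumptions, not a substitute for the proofs: the type `Run`, the functions `no_alarm`, `sound` and `complete`, and the use of plain dictionary equality as the semantic equality of states are all hypothetical simplifications.

```python
from typing import Iterable, NamedTuple


class Run(NamedTuple):
    impl_post: dict   # post-state s' of the program execution
    spec_post: dict   # post-state t' of the specification execution
    impl_mode: str    # mode of s' as set by the monitor


def no_alarm(run: Run) -> bool:
    # Corresponds to b = eqMode(s', "normal").
    return run.impl_mode == "normal"


def sound(runs: Iterable[Run]) -> bool:
    """No alarm implies equal post-states; an alarm implies unequal ones."""
    return all(
        (r.impl_post == r.spec_post) if no_alarm(r)
        else (r.impl_post != r.spec_post)
        for r in runs
    )


def complete(runs: Iterable[Run]) -> bool:
    """Equal post-states imply no alarm; unequal ones imply an alarm."""
    return all(
        no_alarm(r) if r.impl_post == r.spec_post else not no_alarm(r)
        for r in runs
    )


good = [
    Run({"new-temp": 22}, {"new-temp": 22}, "normal"),
    Run({"new-temp": 55}, {"new-temp": 22}, "compromised"),
]
# A run in which the states diverge but the monitor stays silent.
missed = good + [Run({"new-temp": 55}, {"new-temp": 22}, "normal")]

print(sound(good), complete(good))      # True True
print(sound(missed), complete(missed))  # False False
```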

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest


© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Khan, M.T.; Serpanos, D.; Shrobe, H. A Formally Reliable Cognitive Middleware for the Security of Industrial Control Systems. Electronics 2017, 6, 58. https://doi.org/10.3390/electronics6030058
