1. Introduction

The current generation of the Internet enables user participation, collaboration and interaction through two-way communication, and it improves the intelligence of machines and search engines by translating network information from a high-level format to a low-level standardized format. Advances in other technologies, such as wireless communication and electronic system design, combined with this network have led to a framework in which a massive number of electronic devices around the world participate and interact over the global network. This framework is called the Internet of Things (IoT). In the IoT, everything (i.e., every object) becomes smart by having an electronic module attached to it or embedded inside it, and it can access the global network, using its unique features and address, at any time and from any place to interact with other things and with network services [1].

The electronic module makes the object capable of identifying, sensing, localizing, connecting and communicating, processing information, predicting, making decisions and invoking actions. Furthermore, everything can be controlled and managed remotely through Internet servers. This large-scale exchange of information among devices and servers can influence actions in the physical world in real time. Consequently, smart things in this smart world (also known as the IoT world) can be deployed in many domains, such as military systems, transportation systems and visual systems.

The smart visual system (or IoT visual system) has attracted the attention of the research community despite the growing concerns of privacy advocates. State-of-the-art technologies, namely biometrics, crowd monitoring and automated human activity recognition, can help such a system handle the video feeds from a large number of surveillance cameras. Driven by the demand for a safer environment and by growing concern about crime and terror attacks, the number of surveillance systems, such as automated closed-circuit television (CCTV), installed in metropolitan areas in many countries has increased tremendously. Biometric technology has been leveraged in many of these systems to ease the management and monitoring of camera operations, especially because it can screen a large number of video feeds across different places in a real-time, remote, non-cooperative and non-invasive way.

Biometric technology is of great importance for surveillance [2,3]. The United States military, as well as the country's intelligence agencies, have used this technology for their intelligence, surveillance, target acquisition and reconnaissance (ISTAR) activities. The September 11 attacks (coordinated by the terrorist group al-Qaeda) accelerated and extended the use of this technology in different areas of national security, such as surveillance in counter-terrorism and counter-piracy operations, identity management systems, crime detection, international border crossing, time and attendance management systems, government and law enforcement, DNA profiling, passport-free automated border crossings, collection of security intelligence, and friend-or-foe identification at military installations.

In this regard, we study one application of biometric technology: the biometric recognition system. The contributions of this paper are: (1) a cross-layer biometric recognition system suitable for mobile IoT devices; and (2) four Hardware Trojans (HTs) for the advanced encryption standard (AES) hardware unit within this system.

Section 2 presents background on biometric recognition systems. Section 3 discusses the security challenges for a hardware-software (cross-layer) biometric recognition system. Section 4 introduces the system architecture. Section 5 describes the designed Hardware Trojans. Section 6 presents the results, analysis and discussion. Section 7 concludes the paper.

2. The Background on the Biometric Recognition System

The process of acquiring, measuring and analyzing a range of human physiological characteristics is called biometrics. Here, measurement means deriving descriptive or quantitative values from the characteristics for use in a more accurate analysis. The scope of characteristics can include the appearance, behavior and cognitive state of a human being. The major properties of a biometric characteristic are uniqueness (having exclusive traits), permanence (being consistent over time) and universality (being obtainable from all individuals in the population). Compared with their traditional counterparts (i.e., user name and password), biometric identifiers offer precise identification, a high level of security, mobility and user friendliness; they are also difficult to forge, cannot be forgotten and cannot be transferred.

In this context, a biometric system is defined as a pattern recognition machine that acquires physiological characteristic data from an individual, extracts a salient feature set from the data, compares the feature set of the input data (from an unknown source) against the feature set of the reference data (from a trustworthy source) stored in the database, and reports the comparison result. Depending on its application, the system operates in one of two modes: identification or verification/authentication [4]. In identification mode, it identifies an individual by searching the feature sets of all enrolled individuals (a one-to-many comparison), whereas in verification mode the comparison is one-to-one. Furthermore, the system must be initialized before it can deliver these functionalities. This initialization phase is called enrollment: the authentic biometric characteristics are captured and processed, and their features are extracted and stored in the database.
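The two operating modes and the enrollment phase can be illustrated with a minimal Python sketch. It assumes that features are fixed-length numeric vectors, that the Euclidean distance serves as an (inverse) matching score, and that a tolerance `tol` decides acceptance; the names `enroll`, `verify` and `identify` are hypothetical and do not describe the authors' implementation.

```python
import math
from typing import Dict, List, Optional

# Hypothetical reference database filled during enrollment: user ID -> feature vector.
Database = Dict[str, List[float]]


def enroll(db: Database, user_id: str, features: List[float]) -> None:
    """Enrollment: store the reference feature set of an authentic user."""
    db[user_id] = list(features)


def verify(db: Database, claimed_id: str, probe: List[float], tol: float) -> bool:
    """Verification mode: one-to-one comparison against the claimed identity."""
    ref = db.get(claimed_id)
    return ref is not None and math.dist(ref, probe) <= tol


def identify(db: Database, probe: List[float], tol: float) -> Optional[str]:
    """Identification mode: one-to-many search for the nearest enrolled user."""
    best_id: Optional[str] = None
    best_dist = math.inf
    for user_id, ref in db.items():
        d = math.dist(ref, probe)
        if d < best_dist:
            best_id, best_dist = user_id, d
    # Accept the nearest match only if it is close enough (no exact match needed).
    return best_id if best_dist <= tol else None


db: Database = {}
enroll(db, "alice", [0.1, 0.9, 0.4])
enroll(db, "bob", [0.8, 0.2, 0.5])
print(verify(db, "alice", [0.12, 0.88, 0.41], tol=0.1))  # True
print(identify(db, [0.79, 0.21, 0.5], tol=0.1))          # bob
```

Verification touches a single reference record, while identification scans the whole database, which is why identification cost grows with the number of enrolled users.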

Commonly, this machine consists of five main modules: (a) the sensor module, which acquires the raw biometric data of an individual by scanning and reading; each type of biometric characteristic must be measured by a suitable type of sensor; (b) the quality assessment and enhancement module, which assesses and enhances the data quality with dedicated algorithms; (c) the feature extraction module, which extracts a set of discriminatory features from the data to represent the underlying trait and structure; (d) the matching and decision-making module, which compares the input feature set against the reference feature set and calculates a matching score for identity validation; a higher matching score indicates closer agreement between the two feature sets, and evaluating all the matching scores yields the recognition of each individual; and (e) the system database module, which stores all the reference feature sets obtained during enrollment. If storage is limited, data compression and decompression modules can be added to the biometric system architecture. Note that biometric systems usually identify individuals based on the nearest match rather than an exact match, because different instances of the same individual's biometric characteristic vary.

A characteristic should satisfy seven criteria to be a candidate for a biometric system: (1) universality, meaning every individual possesses it; (2) uniqueness, meaning it differs sufficiently across the individuals in the population; (3) permanence, meaning it does not change significantly over time; (4) measurability, meaning it is easy to digitize and compatible with the computing process; (5) performance, meaning it meets the recognition accuracy requirements; (6) acceptability, meaning the individuals in the population are willing to present it; and (7) circumvention, meaning the system should be resistant to fake characteristics.

Examples of biometric characteristics that have been successfully used in security applications include the face, fingerprint, palm print, iris, palm/finger vasculature and voice [5]. Among these characteristics, fingerprint and iris have received remarkable attention for security checking. Fingerprints have long been used in criminal investigation and are known to provide good accuracy, good execution time and good security. A fingerprint is the pattern of friction ridges and valleys on the surface of a fingertip, whose formation is determined during the first seven months of fetal development. A common way to compare two fingerprints is to locate their distinctive minutiae and ridge points and to run a matching algorithm on the extracted feature sets to calculate a similarity score.
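As a rough illustration of minutiae-based comparison, the sketch below greedily pairs minutiae that agree in position and ridge direction and reports the fraction of paired minutiae as the similarity score. It assumes that both minutiae sets are already aligned and uses arbitrary tolerances; practical matchers also handle alignment, distortion and minutia type, and this is not the matcher used in this work.

```python
import math
from typing import List, Set, Tuple

# A minutia is (x, y, ridge_angle_in_radians); both sets are assumed pre-aligned.
Minutia = Tuple[float, float, float]


def angle_diff(a: float, b: float) -> float:
    """Smallest absolute difference between two angles, in [0, pi]."""
    d = abs(a - b) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def minutiae_score(probe: List[Minutia], ref: List[Minutia],
                   dist_tol: float = 10.0,
                   angle_tol: float = math.pi / 12) -> float:
    """Greedy one-to-one pairing; returns the fraction of paired minutiae."""
    if not probe or not ref:
        return 0.0
    used: Set[int] = set()
    for px, py, pa in probe:
        best, best_d = None, dist_tol
        for j, (rx, ry, ra) in enumerate(ref):
            if j in used or angle_diff(pa, ra) > angle_tol:
                continue
            d = math.hypot(px - rx, py - ry)
            if d <= best_d:
                best, best_d = j, d
        if best is not None:
            used.add(best)
    return len(used) / max(len(probe), len(ref))


ref = [(10, 10, 0.0), (50, 40, 1.0), (80, 90, 2.0)]
probe = [(12, 11, 0.05), (49, 42, 1.02), (200, 200, 0.0)]
print(round(minutiae_score(probe, ref), 3))  # 0.667
```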

Iris recognition was initially deployed at airports to replace passport presentation (or other means of identity assessment), and it is known for its excellent accuracy, fair execution time and excellent security. The iris is the annular region of the eye bounded by the pupil and the sclera (the white part of the eye) on either side. The visual texture of the iris forms during fetal development and stabilizes during the first two years of life. It contains highly distinctive information that is useful for personal recognition. To compare two irises, their texture features can be input to a matching algorithm.
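One widely used way to compare iris texture features is to encode each iris as a binary iris code and compute the fractional Hamming distance, taking the minimum over a few circular shifts to compensate for eye rotation. The sketch below assumes one-dimensional codes and omits the occlusion masks used in practice; it is not necessarily the matching algorithm used in this work.

```python
from typing import List


def fractional_hamming(a: List[int], b: List[int]) -> float:
    """Fraction of positions at which two equal-length binary codes differ."""
    if len(a) != len(b) or not a:
        raise ValueError("codes must be non-empty and of equal length")
    return sum(x != y for x, y in zip(a, b)) / len(a)


def iris_distance(a: List[int], b: List[int], max_shift: int = 2) -> float:
    """Minimum fractional Hamming distance over small circular shifts of b."""
    return min(fractional_hamming(a, b[s:] + b[:s])
               for s in range(-max_shift, max_shift + 1))


code = [1, 0, 1, 1, 0, 0, 1, 0]
rotated = code[1:] + code[:1]  # same iris, rotated by one position
print(fractional_hamming(code, rotated), iris_distance(code, rotated))  # 0.75 0.0
```

Without the shift search, a small rotation makes the same iris look very different (0.75 here); with it, the distance drops back to zero.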

3. The Security Challenges for a Cross-Layer Biometric Recognition System

An important challenge faced by a biometric system is the possibility of identity theft. What if a malicious person gains access to an individual's biometric characteristic and performs an active or a passive attack (such as corrupting or stealing the information)? Because biometric data cannot easily be changed or revoked, this security issue is even more severe. The intrusion can happen through software or hardware means in different scenarios. In one scenario, all the enrolled users in a network identify each other through their fingerprint and/or iris captured with their own cellphones. In another scenario, a small portable surveillance system transmits the biometric information to the operator's cellphone. One solution to this security issue is to encrypt the biometric data at the hardware or software level before sending them to the units involved in identification/authentication.

About ten years ago, hardware implementations of security algorithms could be considered more trustworthy elements for providing security. Nowadays, any hardware or integrated circuit (IC) chip can be the target of various attacks. ICs usually adopt the system-on-chip (SoC) technique as their design principle to accelerate the path from design to deployment. With this technique, the IC designer (or SoC integrator) builds the specified main function and its circuit by interconnecting analog and digital intellectual property (IP) cores (or sub-circuits) delivered by third parties (external sources). Designs from unknown external sources in the diverse semiconductor supply chain may not be trustworthy. A design may perform an alternative function because of a malicious change applied to its circuit; such a change is known as a Hardware Trojan (HT). To remain stealthy, a Trojan should be activated only by a rare event, and it can be designed and inserted during the design or fabrication process by untrusted people, design tools or modules. In other words, it manipulates the functionality for a certain data pattern and/or after a certain number of clock cycles. Hardware Trojans can create catastrophic and life-threatening situations in surveillance systems, such as corrupting the biometric data to deny authorized access, or gaining illegal access through misidentification/misauthentication.

4. The Cross-Layer Biometric Recognition System

In this section, a cross-layer biometric recognition system with low computational complexity, suitable for mobile IoT devices, is presented. In a cross-layer (hardware-software) system, the overall design problem is partitioned into manageable areas of expertise, i.e., small, separate and interchangeable components. Well-defined, strict and logical interfaces within the system allow each layer to operate independently. The layers are designed by different groups of experts, such as control theorists, compiler designers, software engineers, operating system designers, embedded system designers, computer architects, circuit designers and semiconductor experts. All the layers in this architecture store information and coordinate their actions to jointly improve the system performance. This can enable significant advances in the design and development of biometric recognition systems.

The software components run on the computing cores, and the hardware components accelerate parts of the application and provide interfaces to the environment. This helps such systems benefit from further scaled transistor and memory technologies (such as tunnel field-effect transistors or magnetic tunnel junctions). The modularity of the system makes optimization, management and maintenance easier. With these features, the system is more robust against unpredictable events, such as node failure, loss of connectivity, security attacks, reduced channel capacity or communication anomalies. Furthermore, the quality of service can be tuned for different applications. In this heterogeneous architecture, the software elements can be changed without affecting the hardware elements, and the hardware elements can simply reuse the functionality provided by the software elements. However, the system also has disadvantages: (a) suboptimality, as layering brings redundancy (i.e., multiple elements performing the same function); (b) information hiding, as a layer may not easily access the processing information within another layer (although this can be advantageous for security); and (c) performance degradation when the quality-of-service constraints are strict.

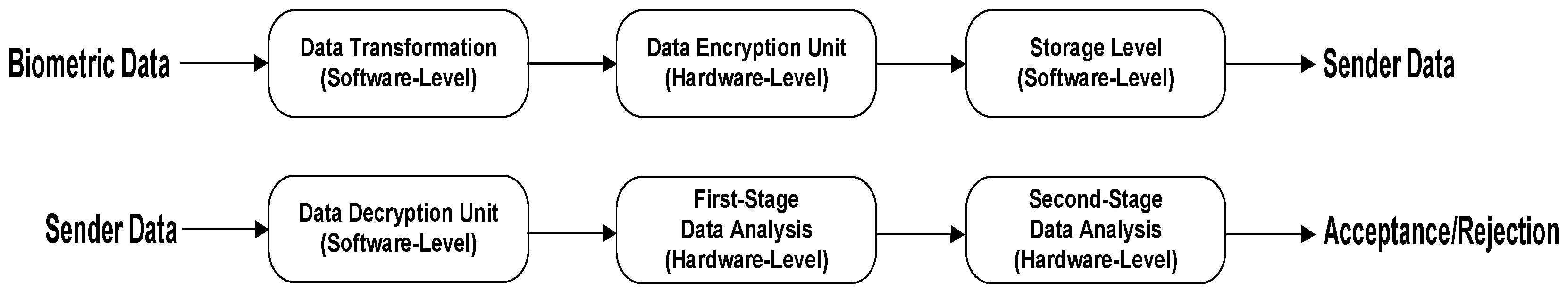

Our system architecture is shown in Figure 1. The system must first go through an initialization (user enrollment) phase. The architecture has two sides: the Sender and the Receiver. At the sender side, an individual captures his/her biometric data (fingerprint and/or iris here) with a cellphone or another type of mobile device. After acquisition, a built-in software module in the device transforms the data into a sequence of 128-bit plaintext blocks. The transformed blocks wait in a queue dedicated to plaintext. Next, the blocks are sent, according to their priority (i.e., their order in the sequence), to a 128-bit AES hardware module for encryption. Once a block is encrypted, it is placed in a queue dedicated to ciphertext.
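The sender-side data path can be sketched in Python as follows. The sketch assumes that the biometric data arrive as raw bytes and that the last block is zero-padded (the padding scheme is not specified here); `encrypt_block` is a hypothetical stand-in for the 128-bit AES hardware module, and `toy_cipher` in the usage example is a placeholder with no security value.

```python
from collections import deque
from typing import Callable, Deque

BLOCK_BYTES = 16  # 128-bit blocks, the AES block size


def to_plaintext_queue(data: bytes) -> Deque[bytes]:
    """Split raw biometric bytes into in-order 128-bit blocks (last block zero-padded)."""
    queue: Deque[bytes] = deque()
    for start in range(0, len(data), BLOCK_BYTES):
        queue.append(data[start:start + BLOCK_BYTES].ljust(BLOCK_BYTES, b"\x00"))
    return queue


def encrypt_all(plain_q: Deque[bytes],
                encrypt_block: Callable[[bytes], bytes]) -> Deque[bytes]:
    """Drain the plaintext queue in sequence order into a ciphertext queue."""
    cipher_q: Deque[bytes] = deque()
    while plain_q:
        cipher_q.append(encrypt_block(plain_q.popleft()))
    return cipher_q


def toy_cipher(block: bytes) -> bytes:
    """Placeholder for the AES hardware module: XOR with a constant (NOT secure)."""
    return bytes(b ^ 0xA5 for b in block)


plain_q = to_plaintext_queue(bytes(range(40)))  # 40 bytes -> 3 blocks
cipher_q = encrypt_all(plain_q, toy_cipher)
print(len(cipher_q), len(plain_q))  # 3 0
```

Using FIFO queues on both sides of the encryptor preserves the block order, so the receiver can reassemble the biometric data without extra sequence numbers.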

At the receiver side, the ciphertexts are captured one by one and stored in a queue. Next, they are sent to a software-level AES decryption module. After decryption, the biometric data are reconstructed and sent to the first-level data analysis hardware unit. In this unit, twelve basic statistical measures of the biometric data are compared against their reference values, which are stored in registers or memory. The statistical measures are: (1) the number of maximum values; (2) the average value; (3) the median value; (4) the number of minimum values; (5) the mode (the smallest of the most frequent values); (6) the standard deviation; (7) the number of values greater than a high threshold value; (8) the number of values less than a low threshold value; (9) the kurtosis; (10) the skewness; (11) the Manhattan norm; and (12) the Euclidean norm. The high threshold value equals the mean of the biometric data plus three times their standard deviation. The low threshold value equals the mean of the biometric data. The biometric data are accepted and passed to the next stage of the recognition system if nine out of the twelve statistical measures lie within a selected tolerance boundary. Otherwise, the data are rejected, and the process stops.
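The first-level check can be sketched in software as below. The twelve measures follow the list above; everything else is an assumption: the data are a flat list of pixel values, the standard deviation uses the sample (N-1) normalization, kurtosis and skewness are the non-excess moment-based forms, the tolerance boundary is a relative tolerance `tol` around each reference value, and "nine out of twelve" is read as at least nine.

```python
import math
import statistics
from typing import Dict, List


def twelve_measures(x: List[float]) -> Dict[str, float]:
    """Compute the twelve first-level statistics of a flat list of values."""
    n = len(x)
    mean = statistics.fmean(x)
    std = statistics.stdev(x)  # sample (N - 1) normalization, assumed
    high = mean + 3 * std      # high threshold as defined in the text
    low = mean                 # low threshold as defined in the text
    m2 = sum((v - mean) ** 2 for v in x) / n
    m3 = sum((v - mean) ** 3 for v in x) / n
    m4 = sum((v - mean) ** 4 for v in x) / n
    return {
        "n_max": x.count(max(x)),
        "mean": mean,
        "median": statistics.median(x),
        "n_min": x.count(min(x)),
        "mode": min(statistics.multimode(x)),  # smallest most frequent value
        "std": std,
        "n_above_high": sum(v > high for v in x),
        "n_below_low": sum(v < low for v in x),
        "kurtosis": m4 / m2 ** 2 if m2 else 0.0,
        "skewness": m3 / m2 ** 1.5 if m2 else 0.0,
        "manhattan_norm": sum(abs(v) for v in x),
        "euclidean_norm": math.sqrt(sum(v * v for v in x)),
    }


def within(value: float, ref: float, tol: float) -> bool:
    """Relative tolerance boundary (assumed); absolute when the reference is 0."""
    return abs(value - ref) <= (tol * abs(ref) if ref else tol)


def first_level_accept(x: List[float], ref: Dict[str, float],
                       tol: float, required: int = 9) -> bool:
    """Accept when at least `required` of the twelve measures are within tolerance."""
    measures = twelve_measures(x)
    passed = sum(within(measures[k], ref[k], tol) for k in measures)
    return passed >= required


reference = twelve_measures([1, 2, 2, 3, 4, 5, 5, 5, 9])  # enrollment-time values
print(first_level_accept([1, 2, 2, 3, 4, 5, 5, 5, 9], reference, tol=0.05))  # True
```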

At the next stage, the biometric data are filtered to eliminate noise or any malicious effect introduced into the data. Performing the comparison on the filtered data at this step also helps to detect fake biometric data. Average filtering with a window size of three is used for this purpose. The filtered data are then sent to the second-level data analysis hardware unit, where four distance measures are used to compare the filtered biometric data block against the reference biometric data: (a) the average Euclidean distance; (b) the average city block (Manhattan) distance; (c) the average Hamming distance; and (d) the average Chebyshev distance. Next, the values of the distance measures for the test biometric data are compared against their reference values (i.e., the values of the four distance measures between the filtered reference biometric data block and the reference biometric data). The data are accepted when three out of the four distance measures are within the selected tolerance boundary. This process continues for all the data entering the receiver side. The process flow of this system is shown in Algorithm 1.
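A software sketch of the second-level unit is shown below. It assumes that the data are 2-D images stored as lists of rows, that "window size of three" means a 3×3 mean filter whose window is truncated at the borders, that each "average" distance is computed row by row and averaged over rows (with the Hamming distance taken as the fraction of differing elements), and that the tolerance boundary is relative, with "three out of four" read as at least three. These choices are illustrative assumptions, not the exact behavior of the hardware unit.

```python
import math
from typing import Dict, List

Image = List[List[float]]


def mean_filter3(img: Image) -> Image:
    """3x3 average filter; at borders only in-bounds neighbours are averaged (assumed)."""
    h, w = len(img), len(img[0])
    out: Image = []
    for i in range(h):
        row = []
        for j in range(w):
            window = [img[r][c]
                      for r in range(max(0, i - 1), min(h, i + 2))
                      for c in range(max(0, j - 1), min(w, j + 2))]
            row.append(sum(window) / len(window))
        out.append(row)
    return out


def avg_distances(a: Image, b: Image) -> Dict[str, float]:
    """Row-wise distances between two equal-size images, averaged over rows (assumed)."""
    pairs = list(zip(a, b))

    def avg(dist) -> float:
        return sum(dist(r, s) for r, s in pairs) / len(pairs)

    return {
        "euclidean": avg(math.dist),
        "cityblock": avg(lambda r, s: sum(abs(p - q) for p, q in zip(r, s))),
        "hamming": avg(lambda r, s: sum(p != q for p, q in zip(r, s)) / len(r)),
        "chebyshev": avg(lambda r, s: max(abs(p - q) for p, q in zip(r, s))),
    }


def second_level_accept(test: Image, reference: Image,
                        tol: float, required: int = 3) -> bool:
    """Accept when at least `required` of the four distances match their references."""
    ref_vals = avg_distances(mean_filter3(reference), reference)
    test_vals = avg_distances(mean_filter3(test), reference)
    passed = sum(abs(test_vals[k] - ref_vals[k]) <= tol * abs(ref_vals[k])
                 for k in ref_vals)
    return passed >= required


reference = [[0, 10, 20], [30, 40, 50], [60, 70, 80]]
print(second_level_accept(reference, reference, tol=0.1))  # True
```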

Algorithm 1 The flow of the cross-layer biometric recognition system.

Input: A biometric dataset.

Output: The system performance parameters: true positive rate (TPR), true negative rate (TNR), false positive rate (FPR) and false negative rate (FNR).

5. Attacks on the Cross-Layer Biometric Recognition System

In this section, four new Hardware Trojans for the AES hardware module are presented as attacks on the proposed biometric recognition system. Existing works on the security vulnerabilities of AES cryptographic hardware include [6,7]. These Trojans target the hardware functionality in order to corrupt the biometric data. Our scenario for these Trojans is that they escape detection during the testing and verification phase because the “Mate Trigger” for the “Main Trigger” of each Hardware Trojan is generated by other parts of the SoC only during the chip's run-time operation. Specifically, the mate trigger and the main trigger of each Trojan go to an AND function before being applied to the Trojan payload. Meanwhile, the main trigger mechanism of each Trojan circuit is designed to make its activation “random” and “sneaky”.

Without this scenario, the Trojans are not sneaky enough in terms of activation time, and the changes they cause in the hardware functionality must be considered limitations. Under this scenario, the function-targeting Hardware Trojans may not be detected during the testing phase, since the application that will run on the chip is unknown at that point. Therefore, each Trojan circuit has lower controllability and observability, and the Trojans behave more covertly. Furthermore, one can consider a Hardware Trojan implementation scenario in which the idle cells (or temporarily unused cells) of the SoC chip are detected and employed adaptively to construct the Trojan functionality. In addition, hardware obfuscation may reduce the possibility of detecting the Trojans through physical inspection. The area overheads of the Trojans are calculated assuming that “extra” cells are inserted to implement them. Without the discussed scenario, these Trojans are limited by the added circuitry. In this work, only the main trigger is considered for activating the Trojans.

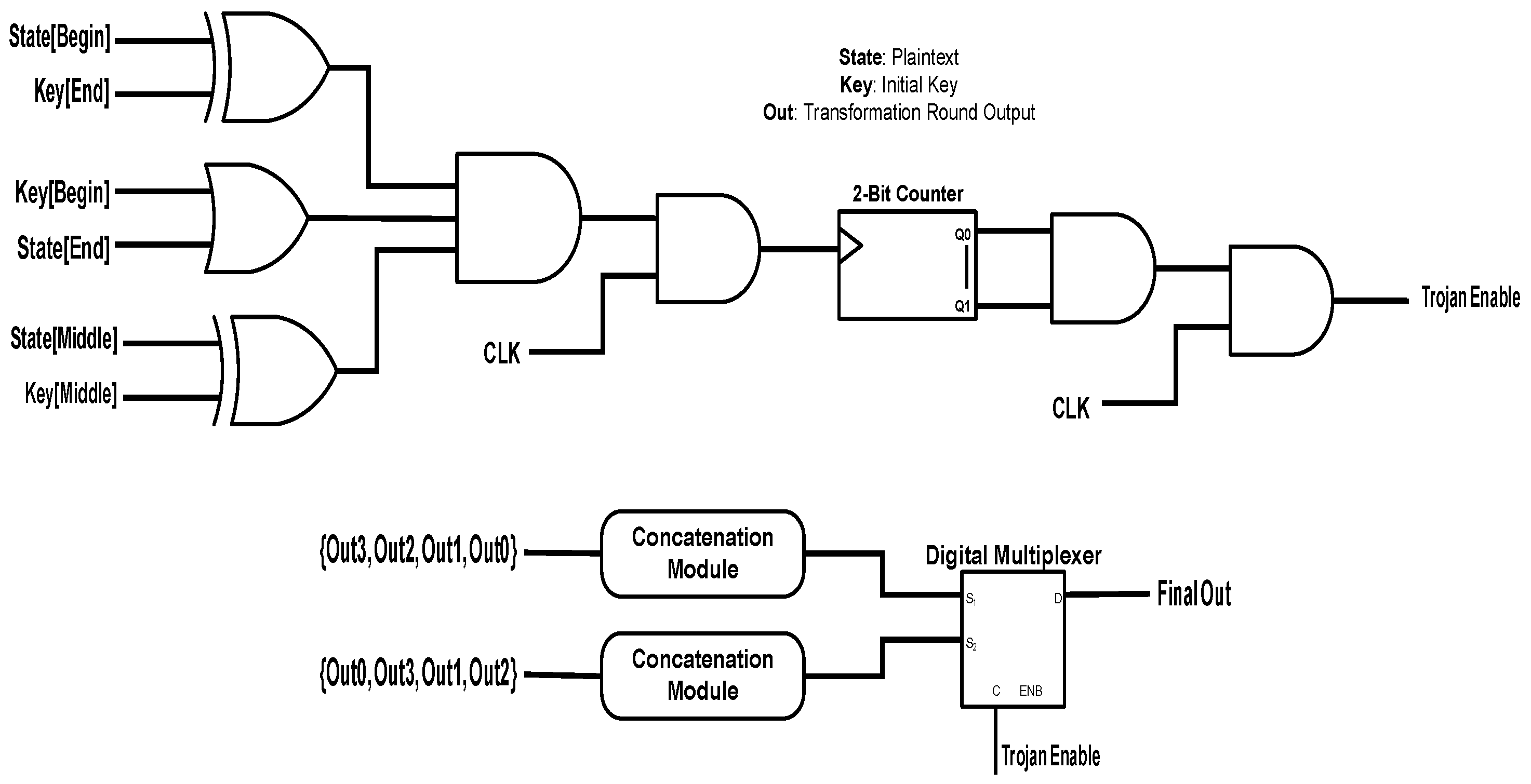

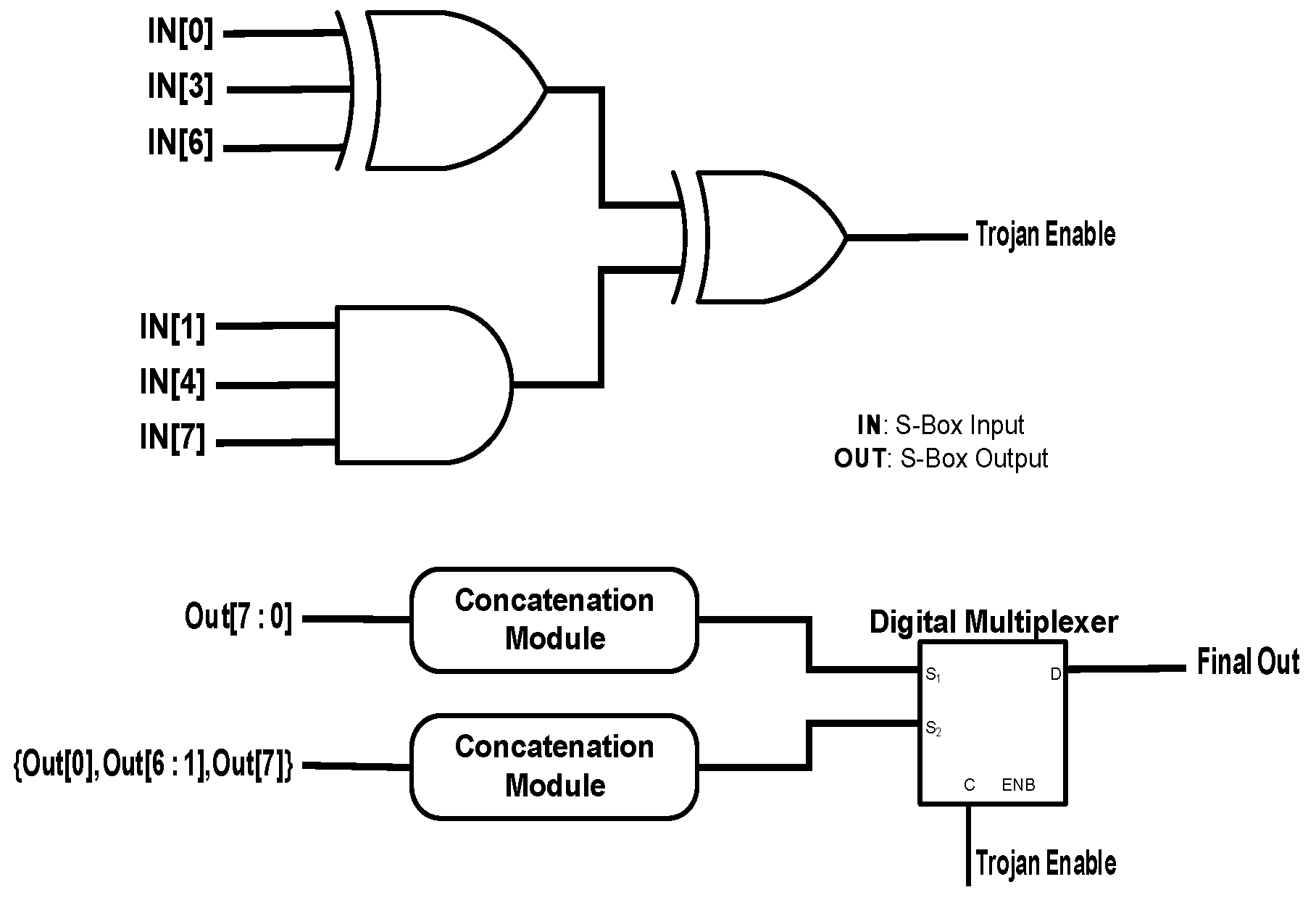

Our first attack targets the output of the intermediate transformation round of the AES algorithm. The trigger circuit and the payload circuit of this attack are shown in the top and bottom parts of Figure 2, respectively. In the trigger circuit, the first, the last and the middle data points of the plaintext and of the initial key are selected. The first data point of the plaintext and the last data point of the key go to an exclusive-OR (XOR) gate; the first data point of the key and the last data point of the plaintext enter an OR gate; and the middle data points of the plaintext and the key enter another XOR gate. The three resulting signals go to an AND gate, whose output is combined with the clock signal in another AND gate. The resulting signal triggers a two-bit counter. Whenever the counter reaches its saturation state while the clock signal is active, the enable signal of the HT becomes active. The output of the intermediate transformation round consists of four 32-bit data elements, each built by XORing the round sub-keys with the outputs of the lookup table. When the HT is active, the order of these data elements is changed before they are sent to the output port of the intermediate transformation round.
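To make the trigger and payload of the first Trojan concrete, here is a cycle-level behavioural model in Python (not the Verilog design). It assumes that "first", "middle" and "last" refer to bit indices 0, 64 and 127 of the 128-bit plaintext and key counted from the most significant bit, that each call to `clock` represents one active clock edge, that the two-bit counter saturates at 3 and stays there, and that the reordering of the four 32-bit elements is a reversal; `HT1Trigger` and `ht1_payload` are hypothetical names.

```python
from typing import List


def bit(value: int, i: int, width: int = 128) -> int:
    """Return bit i of a width-bit word, counting from the MSB (i = 0)."""
    return (value >> (width - 1 - i)) & 1


class HT1Trigger:
    """Behavioural model of the first Trojan's main trigger."""

    FIRST, MIDDLE, LAST = 0, 64, 127

    def __init__(self) -> None:
        self.counter = 0  # two-bit counter

    def clock(self, plaintext: int, key: int) -> bool:
        """Evaluate one active clock edge; return the HT enable signal."""
        x1 = bit(plaintext, self.FIRST) ^ bit(key, self.LAST)
        o1 = bit(key, self.FIRST) | bit(plaintext, self.LAST)
        x2 = bit(plaintext, self.MIDDLE) ^ bit(key, self.MIDDLE)
        if x1 & o1 & x2:  # AND gate, gated by the (active) clock
            self.counter = min(self.counter + 1, 3)  # saturates at 0b11
        return self.counter == 3


def ht1_payload(words: List[int], enable: bool) -> List[int]:
    """Reorder the four 32-bit round-output elements when enabled (reversal assumed)."""
    return list(reversed(words)) if enable else list(words)


trigger = HT1Trigger()
pt, key = (1 << 127) | (1 << 63), 1 << 127  # sets the bits the trigger looks at
print([trigger.clock(pt, key) for _ in range(4)])  # [False, False, True, True]
```

The counter means a single matching plaintext/key pair is not enough: the pattern must recur on several active clock edges before the payload fires.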

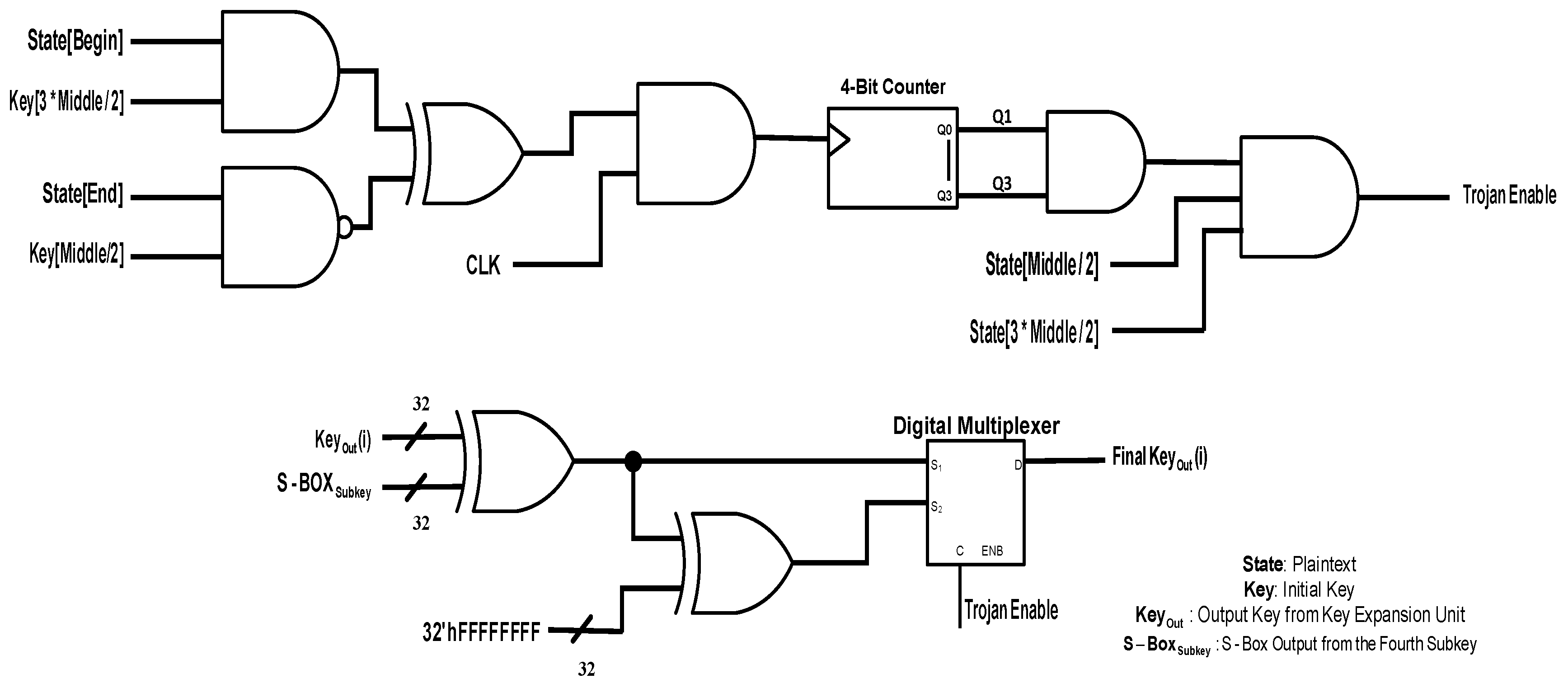

The second attack targets the data elements that form the output key generated by the key expansion unit. The trigger and payload circuits of this attack are shown in Figure 3. In the trigger circuit, the first and the last data points of the plaintext are selected, together with the middle data points of the first and the second halves of the key. The first data point of the plaintext and the middle data points of the first and the second halves of the key are sent to an AND gate, and the other data points are sent to a NAND gate. The resulting signals go to an XOR gate. Next, the XOR output and the clock signal enter an AND gate to form the trigger signal of a four-bit counter. The first and the last bits of the counter are sent to an AND gate, and its output goes to another AND function along with the middle data points of the first and the second halves of the plaintext. The resulting signal is the enable signal of the Hardware Trojan. In normal operation of the key expansion unit, each data element is obtained by XORing its corresponding sub-key with the substitution-box (S-Box) output when the S-Box input is the fourth sub-key. When the HT is active, all the bits of the data element are XORed with logic one (i.e., inverted).
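The second Trojan can be modelled the same way. The following are assumptions, not details from the design: bits are counted from the MSB of the 128-bit plaintext and key, so the middle data points of the first and second halves are bits 32 and 96; the NAND gate receives only the remaining selected bit (the last plaintext bit), so it acts as an inverter; the four-bit counter wraps around; and its "first" and "last" bits are its least and most significant bits. The payload is the inversion described above; `HT2Trigger` and `ht2_payload` are hypothetical names.

```python
MASK32 = 0xFFFFFFFF


def bit(value: int, i: int, width: int = 128) -> int:
    """Return bit i of a width-bit word, counting from the MSB (i = 0)."""
    return (value >> (width - 1 - i)) & 1


class HT2Trigger:
    """Behavioural model of the second Trojan's enable logic."""

    def __init__(self) -> None:
        self.counter = 0  # four-bit counter

    def clock(self, plaintext: int, key: int) -> bool:
        """Evaluate one active clock edge; return the HT enable signal."""
        and_out = bit(plaintext, 0) & bit(key, 32) & bit(key, 96)
        nand_out = 1 - bit(plaintext, 127)  # single-input NAND (assumed)
        if and_out ^ nand_out:  # XOR output gated by the active clock
            self.counter = (self.counter + 1) % 16
        c_first, c_last = self.counter & 1, (self.counter >> 3) & 1
        return bool(c_first & c_last & bit(plaintext, 32) & bit(plaintext, 96))


def ht2_payload(element: int, enable: bool) -> int:
    """XOR every bit of the 32-bit key-expansion element with logic one."""
    return element ^ MASK32 if enable else element


pt = (1 << 127) | (1 << 95) | (1 << 31)  # plaintext bits 0, 32 and 96 set
trig = HT2Trigger()
enables = [trig.clock(pt, 0) for _ in range(11)]
print([i + 1 for i, e in enumerate(enables) if e])  # [9, 11]
print(hex(ht2_payload(0x12345678, True)))           # 0xedcba987
```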

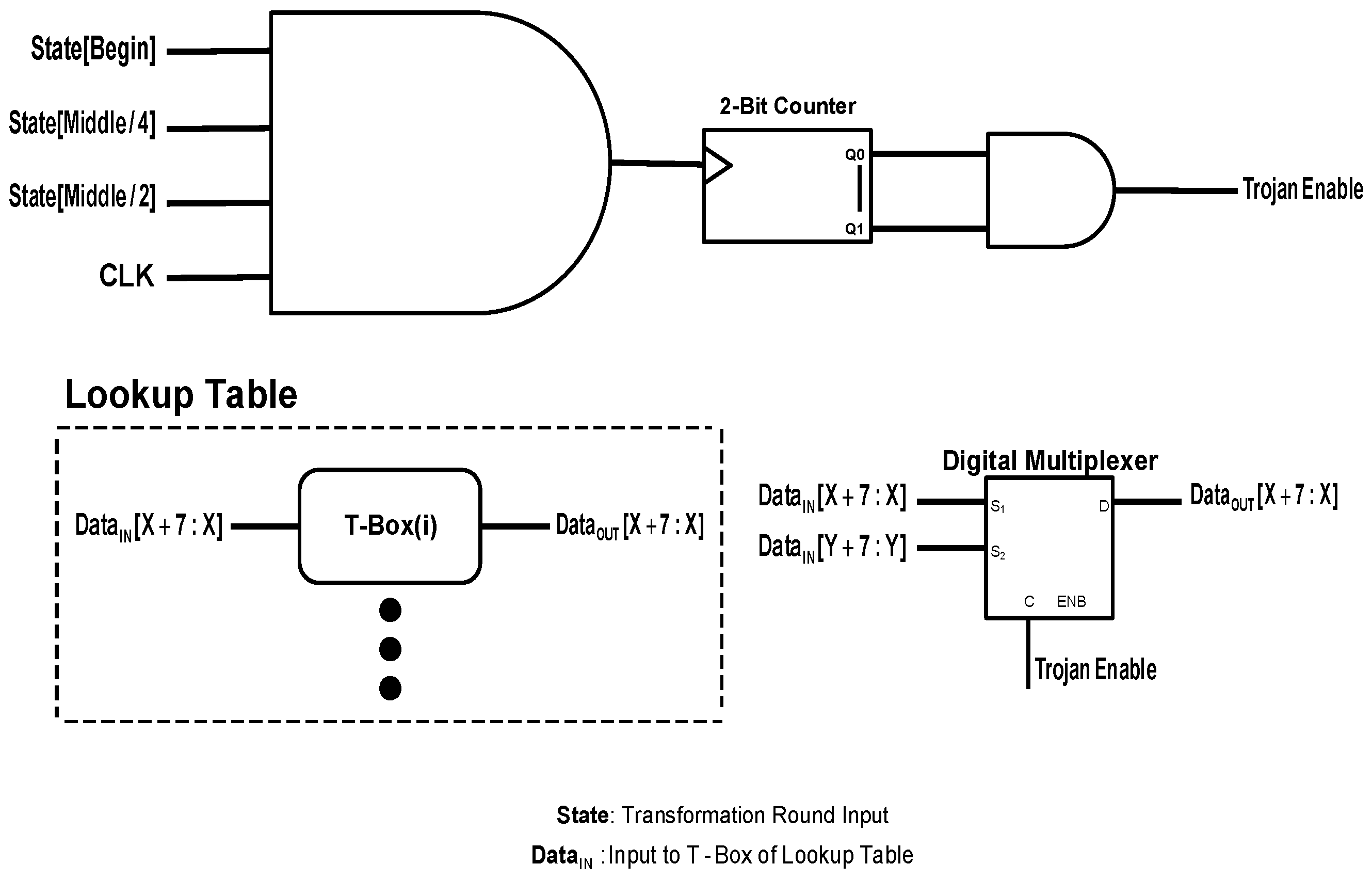

The third attack targets the output of the table boxes (T-Boxes) within the lookup table. The trigger and payload circuits of this attack are shown in Figure 4. In the trigger circuit, the first, the one-quarter and the middle data points of the first half of the input data to the intermediate transformation round are sent, together with the clock signal, to an AND gate. The gate output triggers a two-bit counter. When the counter reaches its saturation state, the Hardware Trojan becomes active. Once it is active, different portions of the input data to a T-Box are rearranged. Dividing the input data into four portions, the first portion is moved to the position of the last portion, the last portion to the position of the second portion, and the second portion to the position of the first portion. The third portion stays in place.
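Under similar assumptions, the third Trojan's trigger and its portion rearrangement can be sketched as below. The sketch assumes that the first, one-quarter and middle data points of the first half of the 128-bit round input are bits 0, 16 and 32 (counted from the MSB), that the two-bit counter saturates at 3, and that a T-Box input is a 32-bit word whose four portions are bytes, with the first portion being the most significant byte; the names are hypothetical.

```python
def bit(value: int, i: int, width: int = 128) -> int:
    """Return bit i of a width-bit word, counting from the MSB (i = 0)."""
    return (value >> (width - 1 - i)) & 1


class HT3Trigger:
    """Behavioural model of the third Trojan's trigger."""

    def __init__(self) -> None:
        self.counter = 0  # two-bit counter

    def clock(self, round_input: int) -> bool:
        """Evaluate one active clock edge; return the HT enable signal."""
        # AND of the first, one-quarter and middle bits of the first half (and the clock).
        if bit(round_input, 0) & bit(round_input, 16) & bit(round_input, 32):
            self.counter = min(self.counter + 1, 3)  # saturating counter
        return self.counter == 3


def ht3_payload(word: int, enable: bool) -> int:
    """Rearrange the four byte-sized portions of a 32-bit T-Box input."""
    if not enable:
        return word
    p = [(word >> (24 - 8 * k)) & 0xFF for k in range(4)]  # p[0] = first portion
    # New positions: 1st <- 2nd, 2nd <- 4th, 3rd unchanged, 4th <- 1st.
    q = [p[1], p[3], p[2], p[0]]
    return (q[0] << 24) | (q[1] << 16) | (q[2] << 8) | q[3]


print(hex(ht3_payload(0xAABBCCDD, True)))  # 0xbbddccaa
```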

The fourth attack targets the S-Box output for malicious manipulation. The structure of this attack is shown in Figure 5. In the trigger circuit, two groups of data, each consisting of three bits, are selected. In each group, the first data point has a starting index of either zero or one; the index of the second data point is that of the first plus three, and the index of the third data point is that of the second plus three. The group with the starting index of zero goes to an XOR gate, and the other group goes to an AND gate. The output signals of these two gates are sent to another XOR gate, whose output is the enable signal of the HT. When the Trojan is active, the first and the last bits of the S-Box output are replaced.
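Reading the index rule above as selecting bits 0, 3, 6 and bits 1, 4, 7 of a byte, the fourth Trojan can be modelled as follows. The sketch assumes that the trigger bits are taken from the 8-bit S-Box input with bit 0 as the least significant bit, and that "replaced" means the first and last bits of the S-Box output byte are exchanged; the S-Box lookup itself is left out, and the function names are hypothetical. Under this reading, the enable condition alone holds for 128 of the 256 possible input bytes.

```python
def ht4_enable(sbox_in: int) -> bool:
    """Main trigger: XOR of bits {0, 3, 6} XORed with AND of bits {1, 4, 7}."""
    def b(i: int) -> int:
        return (sbox_in >> i) & 1

    xor_group = b(0) ^ b(3) ^ b(6)
    and_group = b(1) & b(4) & b(7)
    return bool(xor_group ^ and_group)


def swap_first_last(byte: int) -> int:
    """Exchange bit 0 and bit 7 of an 8-bit value."""
    lsb, msb = byte & 1, (byte >> 7) & 1
    return (byte & 0x7E) | (lsb << 7) | msb


def ht4_sbox_output(sbox_in: int, sbox_out: int) -> int:
    """Return the (possibly manipulated) S-Box output byte."""
    return swap_first_last(sbox_out) if ht4_enable(sbox_in) else sbox_out


print(sum(ht4_enable(x) for x in range(256)))  # 128
```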

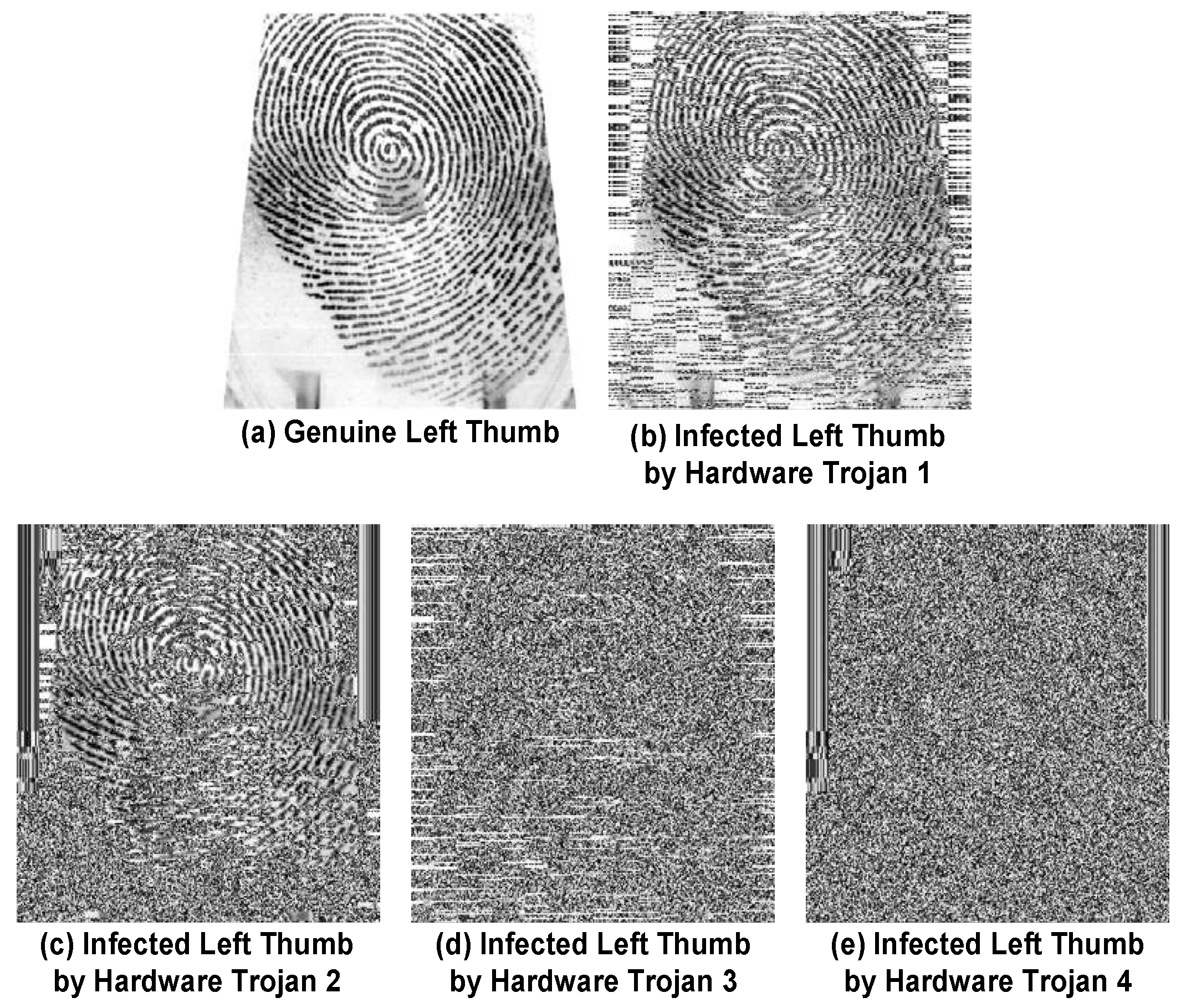

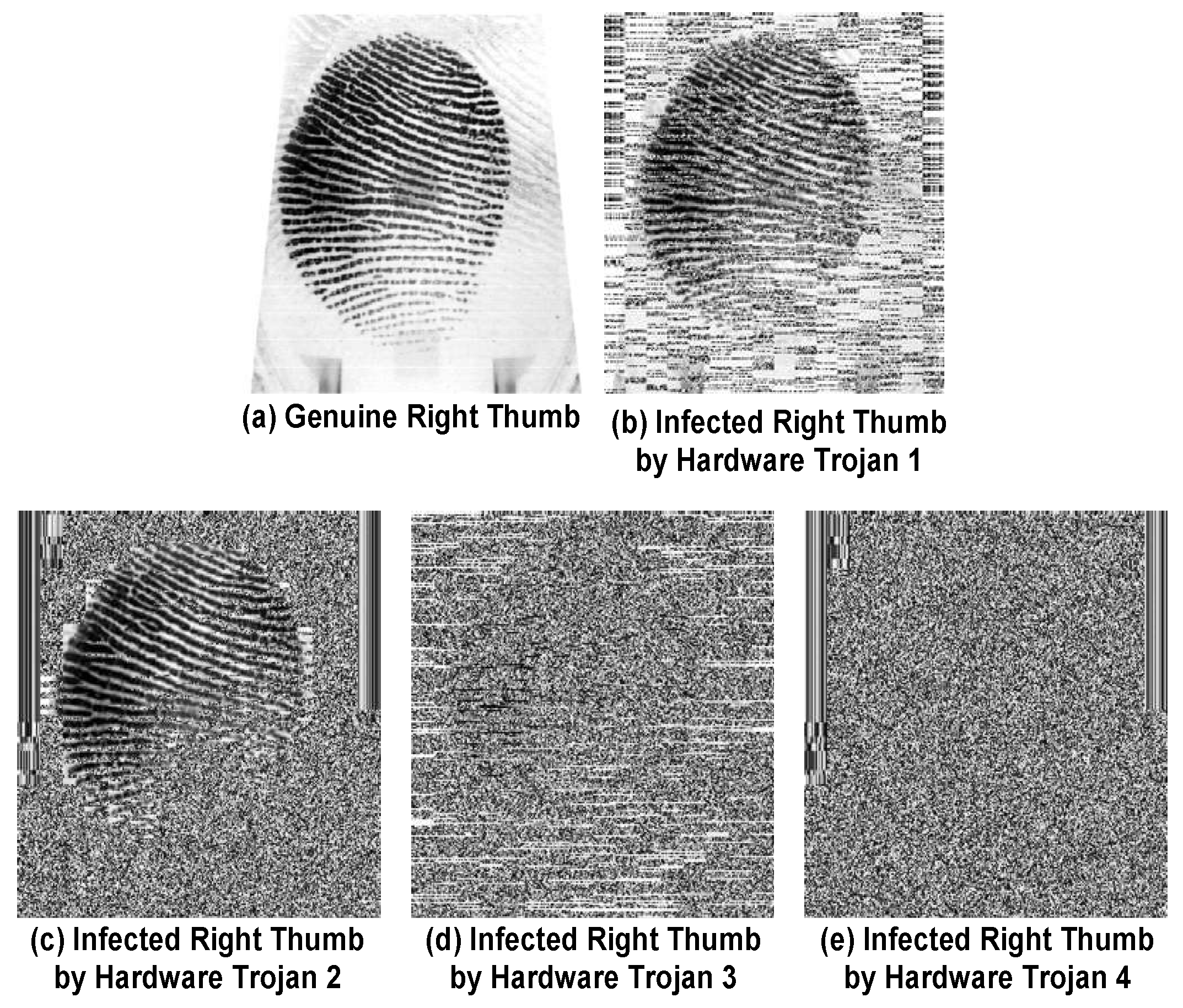

Figure 6, Figure 7, Figure 8 and Figure 9 show the effects of these Hardware Trojans on two biometrics: fingerprint and iris. The biometric data used in this experiment are from the CASIA-FingerprintV5 and CASIA-IrisV4 datasets of the Institute of Automation, Chinese Academy of Sciences (CASIA) [8,9]. Among all the fingerprints in the dataset, the thumb is selected. The indices of these data instances in the datasets are 139 for the left thumb, 100 for the right thumb, 94 for the left iris and 50 for the right iris. The AES hardware module used in this experiment is from [10]. The software and hardware modules are implemented in MATLAB and Verilog HDL, respectively. The images shown are assumed to be the decrypted data at the receiver side. As can be seen from the figures, the first attack blurs the biometric data at the receiver side, although the structure of the fingerprint or the iris can still be observed. The second attack is more destructive than the first, and only a small portion of the biometric data remains visible. Both the third and the fourth attacks fully destroy the data, although the third attack appears to leave a minor pattern in the image intact (shown in Part (b) of Figure 7).

6. Results and Discussion

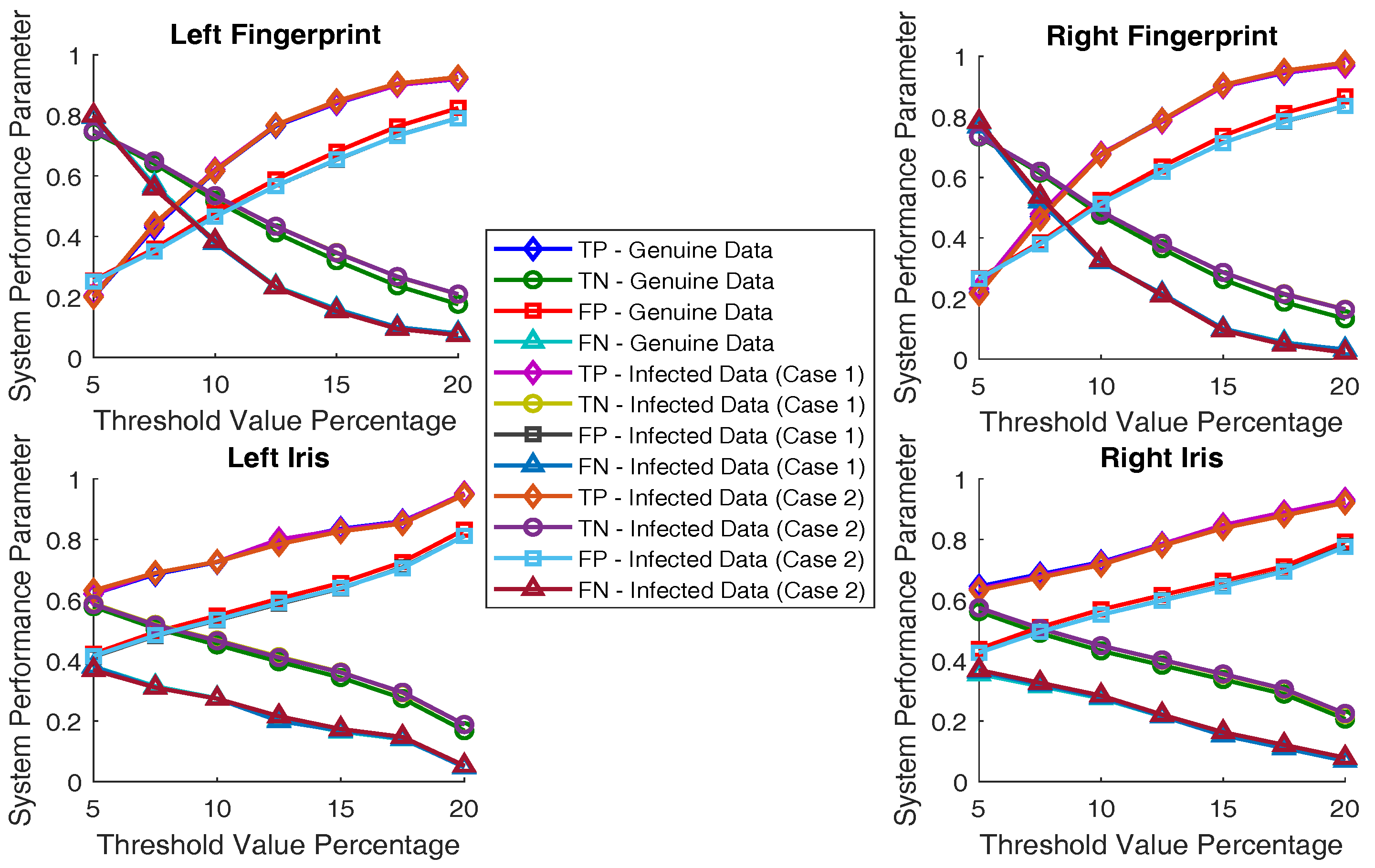

In this section, the results of the performance evaluation and the hardware synthesis of the proposed biometric recognition system are presented. All the biometric images are resized to

pixels, and the software and hardware modules of the system are implemented accordingly. Fifty subjects are selected from each of the CASIA-FingerprintV5 and CASIA-IrisV4 datasets for performance evaluation: subjects 50–99 for the fingerprint dataset, and subjects 1 and 100–149 (except 147) for the iris dataset. The performance of the biometric recognition system is evaluated with four statistical metrics: (1) the true positive rate, which is the rate of correctly accepted data samples; (2) the true negative rate, which is the rate of correctly rejected data samples; (3) the false positive rate, which is the rate of wrongly rejected data samples; and (4) the false negative rate, which is the rate of wrongly accepted data samples. Furthermore, each dataset is evaluated for seven different threshold values. For each biometric, the assessment compares the first image of each person against the second to fifth images of all the registered people. To evaluate the system performance under malicious incidents as well, two infection cases are developed. For each infection case, ten images of ten people in each biometric data set (i.e., the left/right fingerprint and the left/right iris) are infected by the designed Hardware Trojans. In the first infection case, the third image of each victim subject is chosen, while in the second infection case, the fifth image is chosen. The infection of the datasets is described in Table 1.
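The evaluation protocol and the four rates can be sketched as follows. The rate names follow the definitions given above (a false positive is a wrongly rejected genuine sample, and a false negative is a wrongly accepted impostor sample); the denominators (genuine comparisons for TPR/FPR, impostor comparisons for TNR/FNR), the `accept` callback and the toy scalar "images" in the usage example are assumptions made for illustration only.

```python
from typing import Any, Callable, Dict, Iterable, List, Tuple


def rates(outcomes: Iterable[Tuple[bool, bool]]) -> Dict[str, float]:
    """outcomes: (is_genuine_pair, accepted) tuples."""
    ca = wr = wa = cr = 0
    for genuine, accepted in outcomes:
        if genuine and accepted:
            ca += 1  # correctly accepted
        elif genuine:
            wr += 1  # wrongly rejected
        elif accepted:
            wa += 1  # wrongly accepted
        else:
            cr += 1  # correctly rejected
    g, i = ca + wr, cr + wa
    return {
        "TPR": ca / g if g else 0.0,
        "FPR": wr / g if g else 0.0,
        "TNR": cr / i if i else 0.0,
        "FNR": wa / i if i else 0.0,
    }


def evaluate(images: Dict[str, List[Any]],
             accept: Callable[[Any, Any, float], bool],
             threshold: float) -> Dict[str, float]:
    """Compare each person's first image with images 2-5 of every registered person."""
    outcomes = []
    for person, imgs in images.items():
        probe = imgs[0]
        for other, other_imgs in images.items():
            for candidate in other_imgs[1:5]:
                outcomes.append((person == other, accept(probe, candidate, threshold)))
    return rates(outcomes)


def close(x: float, y: float, t: float) -> bool:
    """Hypothetical matcher on scalar toy 'images'."""
    return abs(x - y) <= t


toy = {"a": [1.0, 1.1, 0.9, 1.05, 0.95], "b": [5.0, 5.1, 4.9, 5.2, 4.8]}
for threshold in (0.5, 10.0):  # the section sweeps seven threshold values
    print(threshold, evaluate(toy, close, threshold))
```

With a very loose threshold every comparison is accepted, so the true negative rate collapses and the false negative rate (wrong acceptance) rises, which mirrors the threshold trade-off discussed for Table 2.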

The results of the performance evaluation of the genuine and the infected biometric recognition system are shown in Table 2, Table 3 and Table 4. The average execution time of the biometric recognition system for evaluating one dataset is 36.9246 min. The most important parameter for the security evaluation of the system is the false negative rate. As Table 2 shows, the system exhibits good rejection capability at lower threshold values; on the other hand, it performs poorly at accepting authentic data. As the threshold value increases, the system becomes better at accepting valid data, while its true rejection ability degrades. Furthermore, the system performance is similar for all the tested biometrics. More importantly, the system has a low false negative rate at higher threshold values, which means it offers strong defense against incorrect (or malicious) biometric data.

Table 3 and Table 4 show the system performance when the AES hardware module is infected. Since these evaluations include infected images, an increase in the true rejection and true acceptance rates indicates that the system defends itself, either by rejecting the infected images or by filtering them to remove the HT payload. As the results show, the system demonstrates its defense strength against both infection cases. All these results are illustrated graphically in Figure 10. As the figure shows, the correct acceptance and correct rejection rates increase for the infection cases, which helps with the detection and localization of the Trojans.

For the hardware analysis, all the Verilog implementations of the hardware elements are synthesized with the Synopsys Design Compiler tool using the Synopsys Armenia Educational Department (SAED) 32/28 nm generic library. The clock period is set to 20 ns, and the input and output delays are each set to 1 ns. The synthesis results for the hardware elements of the biometric recognition system are shown in Table 5. According to the results, the hardware overheads of the Trojans are as follows: HT 1, 0.88% for power consumption, 0% for critical path duration time and 0.82% for area; HT 2, 0.22% for power consumption, 0% for critical path duration time and 0.19% for area; HT 3, 1.46% for power consumption, 13.14% for critical path duration time and 0.68% for area; and HT 4, 1.45% for power consumption, 6.29% for critical path duration time and 1.01% for area. These overheads are small relative to all the cores within the chip. To compare the proposed system with its counterparts, two benchmark recognition systems are adopted from [11]. The fingerprint recognition system has a power consumption of 7.8862352 mW, a critical path duration time of 891.2896 ms and an area of 329.5636 mm². The palm recognition system has a power consumption of 105,159.8520 mW, a critical path duration time of 3656.1606 ms and an area of 2516.5824 mm². Our recognition system has higher values for all the hardware parameters, but it offers three main benefits: (i) it performs recognition in two stages, which reduces the possibility of passing and accepting infected biometric data; (ii) it performs a filtering process to eliminate the impact of a Hardware Trojan, if present; and (iii) it makes intrusions easier to locate thanks to its decussate and chained hardware-software architecture. Meanwhile, our system has a considerably smaller hardware footprint than existing exclusively hardware-based works, and its algorithm is computationally much simpler than existing lightweight biometric recognition systems [12,13,14,15,16,17,18,19,20,21,22].

7. Conclusions

This work studies a cross-layer biometric recognition system with low computational complexity. The system has both software and hardware modules in a decussate and chaining structure, which makes it easier to localize an intrusion caused by an adversary. The system analyzes and security-checks the biometric data under investigation in two processing stages, which provides a higher level of defense. Regarding the hardware analysis, the main design parameters (power consumption, area and critical path duration time) of the hardware modules within the system are acceptable considering all the hardware elements within the device. To further assess the recognition ability of the system and its resilience against malicious biometric data, four Hardware Trojans for the encryption unit within the system are presented. The Trojans target different locations of the AES module, namely the intermediate transformation round, the key expansion unit, the lookup table and the substitution box. The HTs subvert the biometric data at different intensity levels with the aim of denial of service. According to the performance evaluation, the system recognizes the chosen biometrics, fingerprint and iris, with an acceptable level of accuracy. Furthermore, it is able to detect the biometric data maliciously manipulated by the designed Hardware Trojans. The footprints of the designed HTs are negligible compared with all the hardware elements within the victim chip. Finally, cross-layer biometric recognition systems for surveillance applications are a relatively new research area, and more research and experiments are required to deliver more trustworthy security cameras for the IoT world.