1. Introduction

Recent advances in safe, collaborative robotics technology and the increasing availability of open source robot hardware have facilitated the emergence of numerous new vendors entering the market with relatively inexpensive collaborative industrial robots. While this development is welcome in a field that has been dominated by a few global players for decades, the diversity of the often proprietary, vendor-provided robot programming languages, models, and environments that ship with these new robots is also increasing rapidly. Although most vendors appear to pursue a common goal of creating more intuitive means for programming robots using web-based programming environments, tablet-like devices as teach pendants, and concepts from the domain of smartphone user experience and design, the resulting programming languages, models, and environments are rarely compatible with one another. This poses challenges to application integrators, who strive to innovate their robot fleet while reusing existing knowledge, expertise, and code available in their organizations. In non-industrial applications, the heterogeneity of proprietary robot systems increases the need for specialized expertise and thus hinders the democratization of the technology, that is, making it more accessible to diverse non-expert users by simplifying (without homogenizing) programming and lowering overall costs of operation. In this context, generic robot programming can help to cope with the heterogeneity of robot systems in application scenarios that go beyond those envisioned by robot vendors.

Generic (or generalized) robot programming (GRP) refers to a programming system’s support for writing a robot program in one programming language or model for which there are means for converting the program into vendor-specific program instructions or commands [1,2,3]. “Program instructions or commands” denotes both source code, which needs to be compiled or interpreted before runtime, and instructions or messages, which are interpreted by a target robot system or middleware at runtime. In online programming, the instructions are sent to the robot immediately, whereas in offline programming, an entire program is first generated for a specific target system and then downloaded (or deployed) to the robot [1,2]. The concept of GRP underlies the so-called skill-oriented robot programming approach [2], which proposes a layered architecture in which “specific skills” (i.e., task-level automated handling functions, such as pick and place) are created on top of so-called “generic skills” (i.e., generic robot behaviors like motions), which interface the robot-independent specific skills with robot-dependent machine controllers and sensor drivers [2]. GRP often relies on code generation to interface different robotic systems with robot-independent path planning, computational geometry, and optimization algorithms within a layered architecture that provides a “generation layer” with open interfaces [3]. Modern frameworks, such as RAZER [4], take the GRP approach one step further by integrating skill-based programming and parameter interface definitions into web-based human-machine interfaces (HMIs), which can be used to program robots on the shop floor. In all these approaches, the GRP concept is used to interface higher-level robot software components (i.e., skills), which can be created in various programming languages, with different robots. Various commercial and open source GRP tools commonly used in the industrial domain (e.g., the Robot Operating System (ROS) [5], RoboDK [6], Siemens’ Simatic Robot Integration [7], “Drag&Bot” [8], RAZER [4], etc.) require the development of robot drivers or code generators for each of the supported robots. Once such drivers or generators exist, users can write generic robot programs in one language and/or programming model (e.g., C++ or Python in the case of ROS, or a graphical language or Python in RoboDK), and the system automatically converts these generic programs into robot-specific programs or instructions.
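As a minimal illustration of the GRP idea described above, the following Python sketch converts one generic program into instructions for two different robots through per-robot code generators. The vendor names (vendor_a, vendor_b) and their output syntaxes are hypothetical placeholders, not real robot languages; actual drivers and code generators in tools such as ROS or RoboDK are considerably more involved.

```python
from typing import Callable, Dict, List, Tuple

# A generic, robot-independent program: a list of (command, arguments) pairs.
GenericProgram = List[Tuple[str, tuple]]

# Hypothetical per-robot code generators. Each maps a generic command to a
# vendor-specific instruction string; the syntaxes are illustrative only.
GENERATORS: Dict[str, Dict[str, Callable[..., str]]] = {
    "vendor_a": {
        "move_to": lambda x, y, z: f"MOVE_L({x}, {y}, {z})",
        "grip": lambda: "GRIPPER_CLOSE",
    },
    "vendor_b": {
        "move_to": lambda x, y, z: f"lin p[{x},{y},{z}]",
        "grip": lambda: "io.set(1, ON)",
    },
}


def convert(program: GenericProgram, target: str) -> List[str]:
    """Convert a generic program into instruction strings for one target."""
    generator = GENERATORS[target]
    return [generator[command](*args) for command, args in program]


program: GenericProgram = [("move_to", (0.1, 0.2, 0.3)), ("grip", ())]
print(convert(program, "vendor_a"))
print(convert(program, "vendor_b"))
```

The generic program stays unchanged; supporting a new robot only means adding another entry to the generator table, which is exactly the per-robot development effort that the following paragraphs discuss.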

There are several problems with these approaches that the current paper seeks to address. The development of (reliable) robot-specific drivers and code generators is costly and often left to the open source community (e.g., in the case of ROS). Developing a robot driver or code generator for one of the existing GRP environments requires expert knowledge “beyond just software, including hardware, mechanics, and physics” [9] (p. 600), and takes on average up to 25% of the entire development time [10], especially in the case of online programming, when (near) real-time communication with the robot is required (e.g., as is the case with ROS). As García et al. [9] note, while the members of the robot software developer community tend to use the same frameworks, languages, and paradigms (i.e., ROS, C++, Python, object-oriented and component-based programming), there appears to be little awareness within this community of the importance of software engineering best practices for improving the quality and maintainability of software in order to make it reusable and reliable. In addition, scarce or missing documentation of published robot software components [11,12] hinders the synthesis of a set of common principles for developing drivers and code generators.

Due to the inherent difficulties of covering a multitude of robots with a single programming model, graphical GRP environments use a reduced subset of control structures. For example, RoboDK does not support if-then-else and other basic conditional statements. To use more advanced programming structures, users need to switch to textual programming. In this context, with new and inexpensive (collaborative) robots entering the market at an unprecedented pace, there is a need for new GRP approaches that (1) allow the inexpensive, flexible integration of new robots that come with their own programming models, (2) lower the cost of developing new and reliable robot drivers or code generators, (3) simplify robot programming while providing a complete set of programming structures to maximize flexibility and thus foster the wide adoption of robots in and beyond industrial contexts, and (4) succeed in generating programs for closed robot programming environments, which use binary or obfuscated proprietary program formats.

Against this background, this paper introduces a novel approach to GRP that leverages graphical user interface (GUI) automation tools and techniques. Modern GUI automation tools use computer vision and machine learning algorithms to process visual elements in GUIs in near real time and to emulate user interactions in response to detected GUI events. The proposed approach uses GUI automation to couple third-party programming tools with proprietary (graphical) robot programming environments by enabling program generation in those target environments while bypassing vendor-provided application programming interfaces (APIs). In this sense, the contribution of the paper is twofold. First, it introduces a novel web-based graphical robot programming environment, called Assembly, which is used to generate an intermediary-level program model that conforms to a generic robot programming interface (GRPI). Second, it illustrates both technically and methodologically how GUI automation can be leveraged to generate robot programs in various proprietary robot programming environments. As part of the approach, the programming process is divided into three steps. The first step is generic and robot-independent: the user writes a program, which can be done anywhere, using the web-based Assembly tool. In a second step, a robot-independent GUI automation model corresponding to the program is generated using the Assembly tool. In a third step, the user can connect a computer running a GUI automation tool, such as SikuliX [13] or PyAutoGUI [14], to a target robot programming environment (e.g., an HMI hosted on a teach pendant) to generate a program in that environment. This saves time and allows for maintaining a unified code base, from which programs for proprietary target environments can easily be generated.

The design rationale behind this approach is to democratize collaborative robot programming in makerspaces and other non-industrial contexts by facilitating an easy entry into robotics through a simplified web-based, graphical programming environment and a solution to the GRP problem based on an easily understandable and applicable technology, namely GUI automation. The proposed approach thus aims to provide a viable, more accessible alternative to entrenched robotics “monocultures”, such as the expert-driven ROS ecosystem [11], and to commercial ecosystems controlled by robot manufacturers, which have dominated the robot software landscape for decades. Similarly, Assembly aims to provide a more straightforward alternative to Blockly and Scratch, the current de facto standards for simplified programming in educational contexts. As García et al. [9] note, “[r]obots that support humans by performing useful tasks… are booming worldwide” (p. 593), and this is unlikely to reduce the diversity of robot users and usage scenarios. In this sense, the hopes and expectations concerning Assembly are not primarily that it will be (re)used by many but that it will inspire and encourage robot enthusiasts to create more diverse, accessible, inclusive, and user-friendly “do-it-yourself” robot programming tools and to share them online, ideally as web applications. The key ingredient in this configuration is, arguably, a generic robot programming interface, which builds on the “convention over configuration” principle [15] to enable loose coupling between various third-party programming models and proprietary robot programming environments through GUI automation and (possibly) other unconventional means.

The approach is evaluated based on a real application focused on generating programs for a UR5 robot (i.e., a Universal Robots’ model 5 robot) installed in an Austrian makerspace (i.e., a shared machine shop equipped with digital manufacturing technologies that members can lease on the premises) [16]. Over the past two decades, makerspaces have emerged as alternative, non-industrial locales in which advanced manufacturing technologies (such as 3D printers, laser cutters, CNC machines, etc.) can be leased on the premises by interested laypersons, who do not have to be affiliated with a specific institution. Recently, collaborative robots have also started to appear in makerspaces. Here, the challenge is twofold. First, existing industrial safety norms and standards cannot be applied to the makerspace context because member applications are not known in advance [17,18]. Second, current robot programming environments are still designed with experts in mind and/or require direct access to the robot. This makes it difficult for interested laypersons to learn and practice robot programming remotely (e.g., at home) before getting access to a real robot. The approach to robot programming described in this paper specifically addresses the latter issue.

The generalizability of the approach to list, tree, and block-based programming was assessed using three different robot programming environments, two of which are proprietary. In brief, the results of this evaluation suggest that the proposed approach to generic robot programming by emulating user interactions in robot GUIs and HMIs is feasible for an entire range of graphical (block, tree, or list-based) target robot programming environments. For example, it can be used to generate programs in proprietary graphical robot programming environments, which do not allow for importing programs created using non-proprietary tools. In educational settings, the automated generation of programs in a target graphical environment also fosters learning how to program in that environment. The proposed approach can also be used to generate program or task templates, which only require the adjustment of locations and waypoints in the robot’s native graphical programming environment.

3. Solution Approach

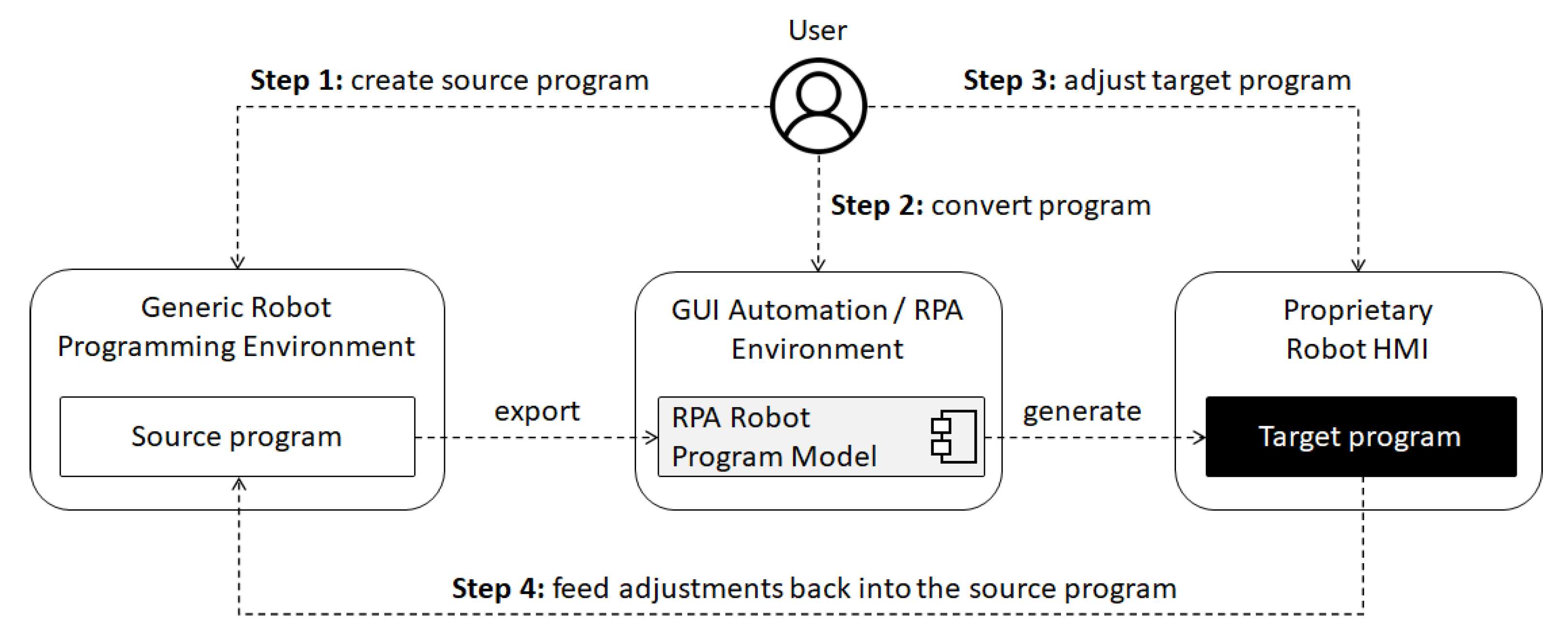

Figure 1 illustrates the proposed approach to generic robot programming by emulating user interactions in different robot-specific graphical programming environments using GUI automation (or robotic process automation, RPA) tools and techniques. A user first creates a source program in a non-proprietary GRP environment, such as Assembly, and then exports a so-called RPA model (i.e., a program in the language used by the RPA tool), which can be used within an RPA environment to generate a target program in a proprietary robot programming environment (e.g., the robot’s HMI provided with a teach pendant). The user can check, adjust (e.g., robot poses and waypoints), test, and use the resulting program in the target robot’s HMI. As a best practice, the adjustments made to the target program should also be replicated in the source program to maintain consistency.

Although parts of this process can be automated, the detailed description of each process step in this paper assumes that the robot’s HMI is accessible on the computer hosting the GUI automation tool, such that a user can manually trigger the generation of the program in the target environment. In the case of UR’s Polyscope [9], the robot HMI used as the main example in this paper, the URSim simulator, which UR provides free of charge as a virtual machine on its website [54], can be used as a proof of concept. To access the HMI of a real UR robot remotely, RealVNC [55] can be used to mirror UR’s HMI on another computer [51]. This allows the proposed approach to work over a direct remote connection to the target robot’s HMI hosted on a teach pendant. Each of these steps is described in more detail in the following.

3.1. Assembly—A Generic Web-Based Graphical Environment for Simplified Robot Programming

Assembly is an open source simplified web-based robot programming environment, which belongs to the category of visual robot programming environments for end user development [

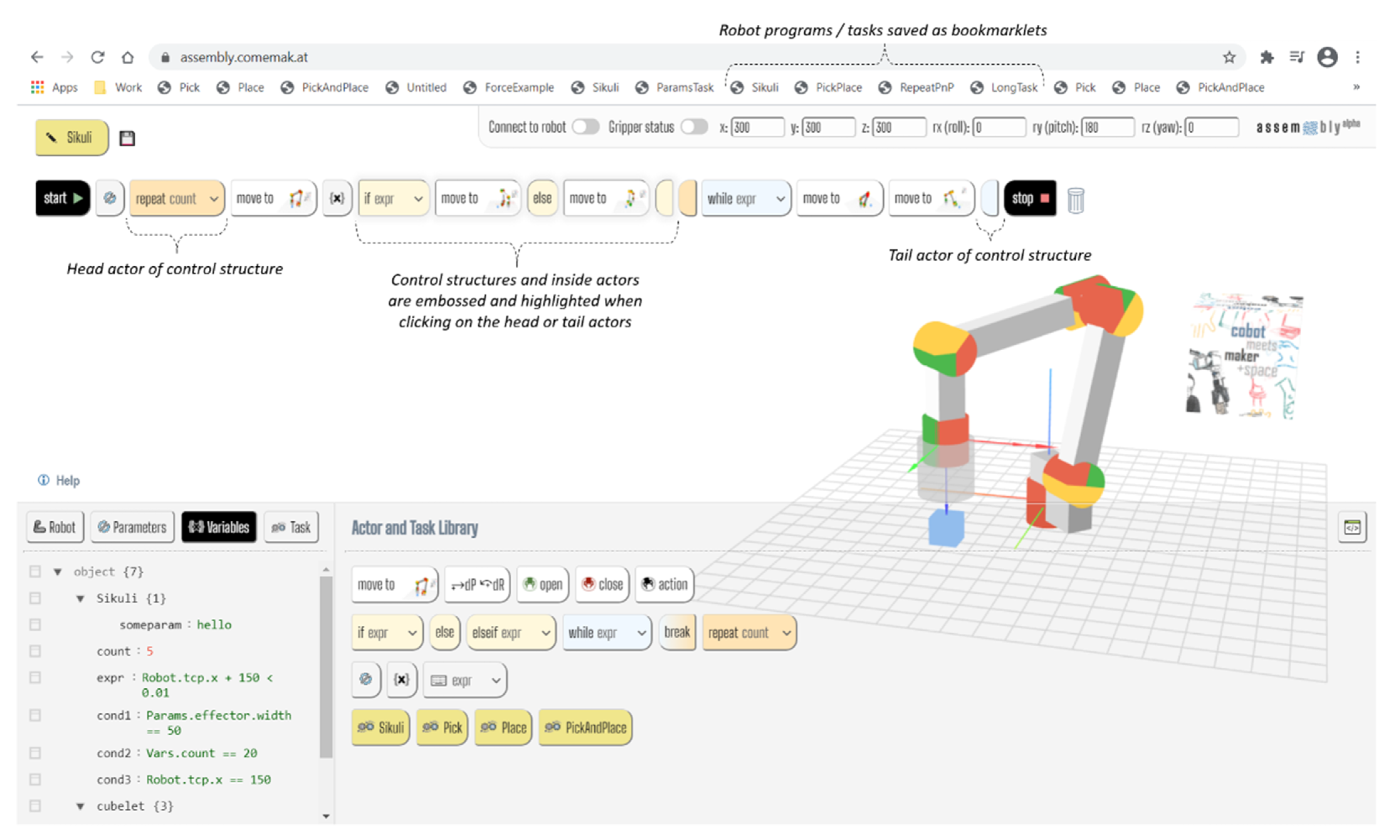

36]. The tool builds on a new block-based programming model that is similar to—but strives to be more straightforward than Blockly (see

Figure 2).

Assembly provides an “Actor and task library”, from which items (i.e., actors or tasks) can be dragged and dropped to form a sequential workflow. Actors implement either basic robot behaviors, such as moving in a certain way or actuating an end effector, or program control structures, such as conditionals and loops. Developers can add actors to the library. A task represents a parameterizable workflow composed of actors and other tasks. Tasks can be stored as bookmarklets in the browser’s bookmarks bar. A bookmarklet is an HTML link containing JavaScript commands that can be saved in the browser’s “favorites” bar and that, when clicked, can enhance the functionality of the currently displayed web page [33]. In the case of Assembly, tasks are bookmarklets that generate a workflow corresponding to a program previously saved by the user. Clicking on a task bookmarklet also adds that task to the library so that it can be used in other tasks.
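The following Python sketch shows one way a saved workflow could be serialized into a bookmarklet URL of the kind described above. It is an illustration under stated assumptions, not Assembly’s actual format: the page-side function loadTask and the actor fields (actor, pose) are hypothetical, and the JSON payload layout is invented for the example.

```python
import json
from urllib.parse import quote, unquote


def workflow_to_bookmarklet(name, workflow):
    """Serialize a saved workflow into a 'javascript:' bookmarklet URL.

    loadTask() is a hypothetical function assumed to exist on the Assembly
    page; when the link is clicked, it would rebuild the workflow and add
    the task to the library.
    """
    payload = json.dumps({"task": name, "workflow": workflow},
                         separators=(",", ":"))
    script = f"void(window.loadTask({payload}))"
    # Percent-encode the script so that it can be stored as a bookmark URL.
    return "javascript:" + quote(script, safe="(){}.,:;=")


def bookmarklet_to_workflow(url):
    """Inverse operation (e.g., for testing): recover the embedded JSON."""
    script = unquote(url[len("javascript:"):])
    start, end = script.index("{"), script.rindex("}") + 1
    return json.loads(script[start:end])


workflow = [{"actor": "moveTo", "pose": [0.1, 0.2, 0.3]},
            {"actor": "gripperClose"}]
url = workflow_to_bookmarklet("pick", workflow)
print(url)
```

Embedding the whole workflow in the link keeps tasks portable without any server-side storage, at the cost of URL length limits for very large tasks.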

The design rationale of Assembly is for programs to be written and read left to right, like text in most Indo-European languages. The tool can be localized to other languages and cultures by changing the progression direction of programs (e.g., from right to left or top to bottom). Unlike in textual programming languages or Blockly, there is no explicit indentation, only a linear chaining of actor instances, which form a workflow. Instead, as shown in Figure 2, rounding and highlighting effects are used to indicate how an actor relates to the actors placed before and/or after it. A workflow may be conceived of as a sentence of varying length and complexity, which, when properly formulated, achieves the desired effect. Consequently, each actor instance may be regarded as a short phrase in that sentence. At the same time, actors encompass the semantics necessary to generate code in textual programming languages.

Technically, a workflow is implemented as a sortable HTML list of items representing actors and tasks, whose order can be modified by the user. This list is implemented using the “jQuery Sortable” library [56].

Assembly integrates a simple open source generic 6-axis robot simulator and inverse kinematics library [57], which can be used to define motion waypoints and to visualize robot moves. The simulator can be controlled either by dragging the end effector or by setting the coordinates of a desired waypoint using the provided controls for Cartesian coordinates and Euler angles. To store a pose as a waypoint, the user must drag the “move to” actor into the workflow after having reached the desired position using the simulator controls. Additional program logic can be implemented by dragging and dropping other actors into the workflow.
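A waypoint set through Cartesian coordinates and Euler angles, as described above, can be represented as a 4×4 homogeneous transform. The sketch below assumes an intrinsic Z-Y-X (yaw-pitch-roll) convention with angles in radians, i.e., R = Rz(rz)·Ry(ry)·Rx(rx); the simulator integrated in Assembly may use a different convention, and the function name pose_to_matrix is hypothetical.

```python
import math


def pose_to_matrix(x, y, z, rx, ry, rz):
    """Return a 4x4 homogeneous transform for a waypoint.

    Assumption: Euler angles in radians, composed as R = Rz(rz) @ Ry(ry) @ Rx(rx)
    (intrinsic Z-Y-X). Other conventions yield different matrices.
    """
    cx, sx = math.cos(rx), math.sin(rx)
    cy, sy = math.cos(ry), math.sin(ry)
    cz, sz = math.cos(rz), math.sin(rz)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx, x],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx, y],
        [-sy, cy * sx, cy * cx, z],
        [0.0, 0.0, 0.0, 1.0],
    ]


# A waypoint 0.3 m above the base, rotated 90 degrees about the z-axis.
for row in pose_to_matrix(0.0, 0.0, 0.3, 0.0, 0.0, math.pi / 2):
    print([round(v, 3) for v in row])
```

Storing waypoints in this form makes it straightforward to compose relative motions (such as those of a relative motion actor) by matrix multiplication, independently of how a target robot expects poses to be typed in.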

Actors implementing control structures are composed of color-coded “head” (e.g., structures such as if, while, else, repeat, etc.) and “tail” (e.g., a closing curly brace “}”) actors (or elements) corresponding to an inline instruction, such as:

Similar to Blockly, Assembly helps novice programmers to avoid syntax errors by providing fixed structures that can be arranged in a sequence, whereby some program behavior is achieved regardless of the structure of a program. The goal is for the programmer to (re)arrange the actors and tasks in a logical way until the desired behavior is achieved. A minimum viable program can be implemented using the “move to” actor alone. This actor displays a small icon reflecting the robot’s target pose at that specific waypoint. After understanding the working principle of actors, users can implement more advanced functionalities.

Besides the sequential orientation of programs, Assembly differs from Blockly in the way it handles parameters and variables. Whereas Blockly allows users to integrate variables and parameters into blocks, Assembly uses a special screen region called Context (i.e., lower left-hand side in Figure 2), where parameters and variables can be defined using an embedded JavaScript Object Notation (JSON) editor. The variables and parameters thus defined can be used with control structures, which contain a dropdown list that is automatically populated with compatible variables, and with actors (e.g., the “dP—dR” relative motion actor). To set parameters or variables before the execution of an actor or task, Assembly provides two specialized gray-colored actors, whose icons match those of the “Parameters” and “Variables” buttons in the Context, respectively. Following the Blackboard design pattern [58], tasks and actors communicate with each other only by reading from and writing to a shared JSON object (i.e., the blackboard), which internally represents the Context of the current task. Besides the “Parameters” and “Variables” sections, the Context also contains a read-only “Robot” section, which provides real-time access to the coordinates of the robot during execution, and a “Task” section, which allows users to parameterize tasks.
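The Blackboard arrangement described above can be sketched in a few lines of Python. The section names mirror the text (Parameters, Variables, Robot, Task), but the class, the actors (step_up, count), and the runner are hypothetical illustrations, not Assembly’s code. The point of the sketch is that actors never call each other: they only read from and write to the shared object, and the Robot section is exposed to them as a live, read-only view.

```python
from types import MappingProxyType


class Blackboard:
    """Shared state; actors communicate only by reading and writing it."""

    def __init__(self, parameters=None, variables=None):
        self.parameters = dict(parameters or {})
        self.variables = dict(variables or {})
        self.task = {}
        self._robot = {"x": 0.0, "y": 0.0, "z": 0.0}
        # Live, read-only view of the robot section for actors.
        self.robot = MappingProxyType(self._robot)

    def report_robot_pose(self, **coords):
        """Called by the (simulated) robot, not by actors."""
        self._robot.update(coords)


def step_up(bb):
    """Implicitly parameterizable actor: reads 'step' from the parameters
    and returns a target z; it never talks to other actors directly."""
    return bb.robot["z"] + bb.parameters["step"]


def count(bb):
    """Actor that writes a variable that later control structures can read."""
    bb.variables["n"] = bb.variables.get("n", 0) + 1


def run(workflow, bb):
    for actor in workflow:
        target_z = actor(bb)
        if target_z is not None:
            # Stand-in for executing the motion and reading back the pose.
            bb.report_robot_pose(z=target_z)


bb = Blackboard(parameters={"step": 0.05})
run([step_up, count, step_up, count], bb)
print(bb.robot["z"], bb.variables["n"])
```

Because all data flows through the blackboard, actors stay interchangeable in the workflow, which matches the separation of control flow and data flow discussed below.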

As opposed to Assembly, Blockly provides additional types of blocks that have predefined structures and possibly multiple explicit parameters. While this gives custom block creators more possibilities and flexibility, defining everything (including variables) as a block can, arguably, lead to complicated, non-intuitive constructs for assignments and expressions. Blocks taking many parameters may also expand horizontally or vertically, thus taking up much of the viewport for relatively simple logic. For these reasons, Assembly strives to standardize actors in order to simplify their creation and use by inexperienced programmers.

From a structural point of view, there are three main types of actors in Assembly:

1. Non-parameterizable actors, which do not use any parameters, such as the “else” or “tail” actors;

2. Implicitly parameterizable actors, which use variables and parameters from the Context but neither explicitly expose them nor require user-defined values for them, such as movement and end effector actors;

3. Explicitly parameterizable actors, which expose one variable whose value needs to be set by the user in order for the actor to produce a meaningful effect. This is the case for control structures.
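The three actor types listed above can be modeled as a small data structure with a validation rule that reflects their definitions: only explicitly parameterizable actors expose a variable, and that variable must exist in the Context. The sketch below is a hypothetical Python model; the names ActorKind, Actor, and validate are illustrative and not taken from Assembly.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import List, Optional


class ActorKind(Enum):
    NON_PARAMETERIZABLE = auto()         # e.g., "else", "tail"
    IMPLICITLY_PARAMETERIZABLE = auto()  # e.g., movement, end effector
    EXPLICITLY_PARAMETERIZABLE = auto()  # e.g., control structures


@dataclass
class Actor:
    name: str
    kind: ActorKind
    variable: Optional[str] = None  # the single exposed variable, if any

    def validate(self, context_vars) -> List[str]:
        """Return a list of problems; an empty list means the actor is usable."""
        if self.kind is ActorKind.EXPLICITLY_PARAMETERIZABLE:
            if self.variable is None:
                return [f"{self.name}: a variable must be selected"]
            if self.variable not in context_vars:
                return [f"{self.name}: unknown variable '{self.variable}'"]
        elif self.variable is not None:
            return [f"{self.name}: this actor does not expose a variable"]
        return []


context_vars = {"done": False}
print(Actor("if", ActorKind.EXPLICITLY_PARAMETERIZABLE).validate(context_vars))
print(Actor("if", ActorKind.EXPLICITLY_PARAMETERIZABLE, "done").validate(context_vars))
```

A check of this kind could, for instance, drive the dropdown of compatible variables in a control structure, so that novices can only pick variables that are actually defined in the Context.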

By separating the control flow (i.e., workflow) from the data flow (i.e., Context), Assembly aims to facilitate an easy entry for novice programmers while providing a so-called “exit” strategy [31] for more advanced ones. The exit strategy consists in incentivizing users to learn how to work with variables and expressions in the Context, which are required by different control structures. At the same time, absolute novices can still create functional workflows using non-parameterizable and implicitly parameterizable actors. Once the logic, composition, structure, and expression format of an Assembly program are mastered, the user can move on to textual programming.

By default, Assembly generates a JavaScript program, which can be inspected by the user and copied to the clipboard. A language-specific code generator in Assembly is structured as a JSON object whose properties are callback functions, each corresponding to a specific actor, as shown in Figure 3. To generate a program, Assembly iterates through the current workflow, represented as a list of actors, and calls the function corresponding to the type of the actor being processed in the current iteration. Assembly’s generic code generation algorithm can thus be easily coupled to other language-specific generators.
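The generation loop described above amounts to a dispatch table keyed by actor type. The following Python sketch mirrors that structure, with a dictionary standing in for the JSON object of callbacks. The actor type names and the JavaScript-like output are hypothetical; Figure 3 shows the actual generator.

```python
# A language-specific generator: a mapping from actor type to a callback,
# mirroring the "JSON object with callback properties" structure.
# Actor names and the emitted JavaScript-like syntax are hypothetical.
JS_GENERATOR = {
    "moveTo": lambda a: f"moveTo({a['pose']});",
    "if": lambda a: f"if ({a['variable']}) {{",
    "else": lambda a: "} else {",
    "tail": lambda a: "}",
}


def generate(workflow, generator):
    """Iterate over the workflow and call the callback for each actor type."""
    lines = []
    for actor in workflow:
        callback = generator[actor["type"]]
        lines.append(callback(actor))
    return "\n".join(lines)


workflow = [
    {"type": "if", "variable": "done"},
    {"type": "moveTo", "pose": [0, 0, 1]},
    {"type": "else"},
    {"type": "moveTo", "pose": [0, 0, 2]},
    {"type": "tail"},
]
print(generate(workflow, JS_GENERATOR))
```

Because the loop in generate() knows nothing about the output language, coupling another generator only requires passing a different dictionary with the same keys.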

3.2. A Generic Robot Programming Interface (GRPI)

Figure 4 shows a UML diagram of a GRPI and its relations to the software components that are necessary to generate a robot program from an RPA model. The package RobotHMIAutomation contains a GRPI called IRobotProgramGenerator as well as several implementations of that GRPI, e.g., for UR, Franka Emika Panda, and KUKA iisy robots. The GRPI specifies a series of required fields and methods that need to be provided or implemented by any robot-specific program generator class, module, or component. The dependency of the RobotHMIAutomation package on an external RPA library is explicitly illustrated using a dashed UML arrow. The RPA library used by the RobotHMIAutomation package should at least provide a click(Image) and a type(String) function. For example, a call of the click(Image) function causes the RPA engine to emulate the user action required to perform a left-mouse click on the graphical element specified by the provided image parameter. These images are specific to the proprietary robot programming environment for which the robot program is being generated. They should thus be considered inherent components of any implementation of the GRPI and should be packaged together with that implementation.
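In Python, the interface described above could be sketched as an abstract base class that depends only on an RPA backend offering click() and type(). This is a hedged sketch: the method names follow those mentioned in this paper (initContext, moveTo, the start_ and add_ prefixes, wrap), but the exact signatures and the subset of control structures shown are assumptions, and Figure 4 remains the authoritative description.

```python
from abc import ABC, abstractmethod
from typing import Protocol


class RPALibrary(Protocol):
    """Minimal capabilities the text requires from an RPA library."""

    def click(self, image: str) -> None: ...

    def type(self, text: str) -> None: ...


class IRobotProgramGenerator(ABC):
    """Sketch of a GRPI; signatures are assumptions for illustration."""

    def __init__(self, rpa: RPALibrary):
        # The only external dependency: an RPA engine with click()/type().
        self.rpa = rpa

    @abstractmethod
    def initContext(self, context: dict) -> None:
        """Recreate the source program's parameters and variables."""

    @abstractmethod
    def moveTo(self, pose: list) -> None:
        """Create a motion instruction with a single waypoint."""

    @abstractmethod
    def start_if(self, condition: str) -> None:
        """Open a conditional; must later be closed by wrap()."""

    @abstractmethod
    def add_elseif(self, condition: str) -> None:
        """Add a further branch to the innermost open conditional."""

    @abstractmethod
    def add_else(self) -> None:
        """Add the final branch to the innermost open conditional."""

    @abstractmethod
    def wrap(self) -> None:
        """Close the innermost open control structure."""
```

Any concrete generator (e.g., for Polyscope) must implement every abstract method before it can be instantiated, which enforces the convention that each robot-specific generator covers the full set of GRPI program structures.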

To facilitate the generation of programs in a proprietary target programming environment, a GRP environment must implement a generic RPA model generator, which conforms to the IRobotProgramGenerator interface. This approach is similar to the Page Object design pattern in GUI automation, which allows wrapping a GUI in an object-oriented way [42].

Figure 5 depicts an extract from the JSON object implementing such a generator in Assembly. The SikuliCodeGenerator generates RPA models from robot programs created using Assembly’s graphical editor. These models are designed to work with the Sikuli/SikuliX RPA tool [13,59], which uses Python as its scripting language.
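An RPA model generator of the kind described above can emit one GRPI call per actor, yielding a Python script that an RPA tool could execute. The sketch below is hypothetical: the actor type names are invented, and gen stands for a robot-specific program generator object that is assumed to be available when the generated model runs. It is not the SikuliCodeGenerator shown in Figure 5.

```python
# Hypothetical mapping from Assembly-style actor types to GRPI calls in a
# generated Python RPA model. "gen" stands for a robot-specific program
# generator object assumed to be available when the model is executed.
RPA_CALLS = {
    "moveTo": lambda a: f"gen.moveTo({a['pose']!r})",
    "if": lambda a: f"gen.start_if({a['variable']!r})",
    "elseif": lambda a: f"gen.add_elseif({a['variable']!r})",
    "else": lambda a: "gen.add_else()",
    "tail": lambda a: "gen.wrap()",
}


def generate_rpa_model(workflow, context):
    """Emit the context initialization followed by one call per actor."""
    lines = [f"gen.initContext({context!r})"]
    lines += [RPA_CALLS[actor["type"]](actor) for actor in workflow]
    return "\n".join(lines)


model = generate_rpa_model(
    [{"type": "if", "variable": "done"},
     {"type": "moveTo", "pose": [0, 0, 1]},
     {"type": "else"},
     {"type": "tail"}],
    {"done": False},
)
print(model)
```

Using repr() for arguments keeps the generated script valid Python (e.g., False rather than JSON’s false), so the model can be run directly by a Python-based RPA tool.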

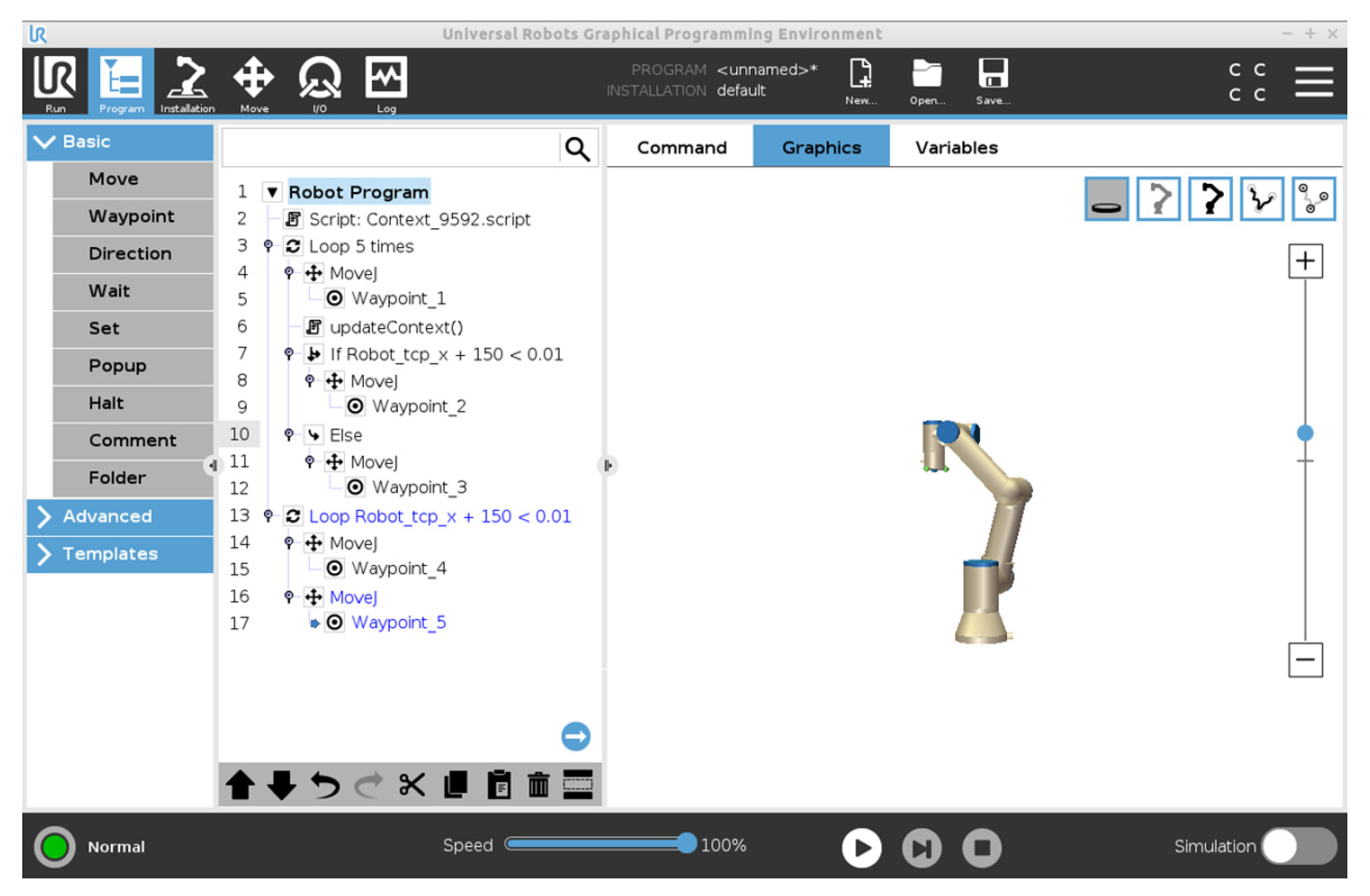

Figure 6 shows the code generated by Assembly for the robot program depicted in Figure 1, whereas Figure 7 depicts the program generated in UR’s proprietary HMI environment (Polyscope) corresponding to the RPA model from Figure 6. The wrap() command in Figure 6 corresponds to a closing curly brace (i.e., “}”) in a C-style language and to the “tail” actors in Assembly. Depending on the programming model of the target robot system, this command may, for example, close a conditional structure or a loop by wrapping up the elements pertaining to the respective program structure, either by generating a terminator element or by moving the program cursor to the same level as the corresponding program structure. In this RPA model, as in the GRPI, all control structures are prefixed by start_, signaling that the command requires a wrap() element later in the program, whereas the subsequent elements of an articulated control structure, such as if-elseif-else, are prefixed by add_, as in add_elseif and add_else. Together with the wrap() instruction, these prefixes facilitate the reconstruction of a program’s abstract syntax tree, which is a necessary and sufficient condition for generating code or other program semantics in any target programming model. This mechanism is illustrated in more detail in the next section by the example of a URProgramGenerator for Universal Robots’ Polyscope HMI.
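The start_/add_/wrap() convention described above is enough to rebuild a nested tree from a flat command sequence with a simple stack. The following Python sketch illustrates this; the Node class and the functions build_ast and render are hypothetical names chosen for the example, and the commands are represented as (name, arguments) pairs rather than as actual method calls.

```python
from typing import List, Tuple

Command = Tuple[str, tuple]


class Node:
    """A leaf instruction or a control structure made of one or more clauses."""

    def __init__(self, name: str, args: tuple):
        self.name, self.args = name, args
        # Each clause is (clause_name, clause_args, body); leaves have none.
        self.clauses: List[Tuple[str, tuple, List["Node"]]] = []


def build_ast(commands: List[Command]) -> List[Node]:
    """Rebuild the tree from a flat list using the start_/add_/wrap convention."""
    root: List[Node] = []
    open_structures: List[Node] = []  # stack of unclosed start_ nodes

    def current_body() -> List[Node]:
        # New nodes go into the last clause of the innermost open structure.
        return open_structures[-1].clauses[-1][2] if open_structures else root

    for name, args in commands:
        if name.startswith("start_"):
            node = Node(name, args)
            node.clauses.append((name, args, []))
            current_body().append(node)
            open_structures.append(node)
        elif name.startswith("add_"):
            if not open_structures:
                raise ValueError(f"{name} outside a control structure")
            open_structures[-1].clauses.append((name, args, []))
        elif name == "wrap":
            if not open_structures:
                raise ValueError("wrap() without a matching start_ command")
            open_structures.pop()
        else:
            current_body().append(Node(name, args))
    if open_structures:
        raise ValueError(f"missing wrap() for {open_structures[-1].name}")
    return root


def render(nodes: List[Node], depth: int = 0) -> List[str]:
    """Render the tree with indentation to make the nesting visible."""
    def call(name: str, args: tuple) -> str:
        return f"{name}({', '.join(map(repr, args))})"

    lines = []
    for node in nodes:
        if not node.clauses:
            lines.append("  " * depth + call(node.name, node.args))
        for clause_name, clause_args, body in node.clauses:
            lines.append("  " * depth + call(clause_name, clause_args))
            lines.extend(render(body, depth + 1))
    return lines


program = [
    ("initContext", ()),
    ("start_if", ("done",)),
    ("moveTo", ((0, 0, 1),)),
    ("add_else", ()),
    ("moveTo", ((0, 0, 2),)),
    ("wrap", ()),
]
print("\n".join(render(build_ast(program))))
```

Once the tree exists, a target-specific generator can walk it in whatever form its programming model requires, for instance emitting explicit terminators or moving a program cursor.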

3.3. Robot-Specific Program Generators

Robot-specific program generators are classes or other kinds of modular software components that emulate the user interactions required to create the program structures corresponding to the different elements of a GRPI in a target robot’s native programming environment (e.g., UR Polyscope, Franka Emika Desk, etc.). These generators must implement the robot-independent GRPI, thus bridging a GRP environment such as Assembly with robot-specific environments. Robot program generators are analogous to robot drivers in ROS but do not require advanced programming knowledge, since they only use a limited number of the functions provided by any modern GUI automation library, such as SikuliX [13] or PyAutoGUI [14].

Figure 8 shows an extract from a URProgramGenerator, which implements the IRobotProgramGenerator interface and is designed to work with SikuliX. In the graphical user interface of SikuliX, the click command, which emulates a left-button click in a designated region (in this example, the entire screen), allows the target GUI element to be specified by a picture captured directly on screen. A new program generator can thus be created by reconstructing the steps a user would take to create each of the program structures specified by the GRPI. For example, generating a motion instruction (i.e., moveTo) with a single waypoint requires emulating a series of clicks on different elements of the target robot’s HMI (in this example, Universal Robots’ Polyscope) and typing the coordinates of the target robot pose into the corresponding text fields in the HMI. In the case of Polyscope, the wrap() instruction is implemented by emulating two consecutive presses of the left arrow key on a generic keyboard, which moves the program cursor out of the current control structure.
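The sketch below illustrates, under explicit assumptions, how a Polyscope-oriented generator can reduce moveTo and wrap() to click() and type() calls. All image file names, the exact click sequence, and the LEFT key placeholder are hypothetical and do not reproduce Polyscope’s real dialog flow or the generator in Figure 8. A recording test double replaces the GUI so the logic can run anywhere.

```python
class RecordingRPA:
    """Test double that records actions instead of driving a real GUI."""

    def __init__(self):
        self.log = []

    def click(self, image):
        self.log.append(("click", image))

    def type(self, text):
        self.log.append(("type", text))


# Hypothetical screenshots of the six pose text fields (x, y, z, rx, ry, rz).
POSE_FIELDS = ["x_field.png", "y_field.png", "z_field.png",
               "rx_field.png", "ry_field.png", "rz_field.png"]


class URProgramGeneratorSketch:
    """Hypothetical, simplified Polyscope generator (illustrative only)."""

    LEFT = "<LEFT>"  # placeholder for the backend's left-arrow key constant

    def __init__(self, rpa):
        self.rpa = rpa  # any object offering click(image) and type(text)

    def moveTo(self, pose):
        # Insert a move node with one waypoint (assumed click sequence).
        self.rpa.click("structure_tab.png")
        self.rpa.click("move_node.png")
        self.rpa.click("waypoint_node.png")
        # Type each pose coordinate into its text field.
        for field_image, value in zip(POSE_FIELDS, pose):
            self.rpa.click(field_image)
            self.rpa.type(str(value))
        self.rpa.click("ok_button.png")

    def wrap(self):
        # As described for Polyscope: two left-arrow presses move the program
        # cursor out of the current control structure.
        self.rpa.type(self.LEFT)
        self.rpa.type(self.LEFT)


rpa = RecordingRPA()
generator = URProgramGeneratorSketch(rpa)
generator.moveTo([0.1, 0.2, 0.3, 0.0, 3.14, 0.0])
generator.wrap()
print(len(rpa.log), rpa.log[-2:])
```

For a real run, an adapter could forward click() to SikuliX’s click() or to pyautogui.click() with an image file, and map the left-arrow placeholder to SikuliX’s Key.LEFT or to pyautogui.press("left"); the generator logic itself would stay unchanged.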

The program generator also provides a series of auxiliary functions, including updateContext and typeExp (not shown in Figure 8 but visible in the generated Polyscope program in Figure 7). The updateContext function is used in connection with the initContext function specified by the IRobotProgramGenerator interface. The initContext function replicates the parameters and variables from the Context object in Assembly such that all variables available within the scope of the source program are reconstructed in the target program. In the case of Polyscope, initContext creates a script command (see line 2 in Figure 7), which creates all the parameters and variables contained in the Context object. The updateContext function is inserted by the generator before control structures in order to update all variables and parameters (e.g., line 6 in Figure 7).
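The script command created by initContext, as described above, essentially turns the Context’s JSON sections into a list of assignments. The following sketch assumes simple values (numbers, booleans, and lists of numbers) and that plain URScript-style name = value assignments are acceptable in the target script node; the exact syntax Polyscope expects may differ, and the function name init_context_script is hypothetical.

```python
def init_context_script(context):
    """Build one URScript-style assignment per parameter and variable.

    Assumptions: values are numbers, booleans, or lists of numbers, and
    'name = value' lines are acceptable in the target script node.
    """
    def fmt(value):
        # bool must be checked before int, since bool is a subclass of int.
        if isinstance(value, bool):
            return "True" if value else "False"
        if isinstance(value, (int, float)):
            return repr(value)
        if isinstance(value, list):
            return "[" + ", ".join(fmt(v) for v in value) + "]"
        raise TypeError(f"unsupported value: {value!r}")

    lines = []
    for section in ("parameters", "variables"):
        for name, value in context.get(section, {}).items():
            lines.append(f"{name} = {fmt(value)}")
    return "\n".join(lines)


context = {
    "parameters": {"speed": 0.25},
    "variables": {"done": False, "count": 0, "offset": [0, 0, 0.1]},
}
print(init_context_script(context))
```

Under the same assumptions, updateContext could reuse this function to re-emit the current values before each control structure.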

4. Evaluation

This section presents an evaluation of the proposed approach to GRP on the basis of an application centered on the use of robots in makerspaces. After introducing this application, we describe the infrastructure used in the makerspace to enable the generation of robot programs for the Polyscope HMI of a UR5 robot and discuss some key non-functional requirements, including development effort, program generation performance, and reliability. Then, we present the results of an assessment of the generalizability of the approach based on the creation and analysis of program generators for two additional program models that are commonly used in robot programming (i.e., block-based and list-based programming), which differ from the tree-based model used in Polyscope. Finally, we discuss the benefits and limitations of the proposed approach based on the results of this evaluation.

4.1. Application: Robotics in Makerspaces

Makerspaces are shared machine shops in which people from diverse backgrounds are provided with access to advanced manufacturing technologies, which typically include 3D printers, laser cutters, and other additive and subtractive manufacturing systems. Makerspace members, who usually do not have specific institutional affiliations, can use these technologies for their own creative, educational, or work-related purposes in exchange for a membership fee. With the recent advances in collaborative robotics, more robots are being encountered in European makerspaces than ever before. This attracts robot vendors that are increasingly interested in non-industrial markets, as well as companies and research institutions interested in leveraging the open innovation potential of makerspaces [17]. At the same time, makerspaces pose new safety challenges, since member applications are not usually known in advance. In this respect, makerspaces differ from traditional industrial contexts, where robot applications must undergo safety certification before they can be used productively [17].

Since the number of robots in any makerspace is limited and machine hours may incur additional costs, makerspace members can benefit from learning and practicing generic robot programming at home using a web-based tool such as Assembly before testing their programs on the robots in the makerspace. Sketching and sharing an idea with other members via a web-based robot programming environment also helps to identify potential safety issues entailed by an application. These mostly autodidactic, preparatory practices of web-based programming are complemented by the robotics trainings offered by makerspaces, which emphasize safety but usually do not go into great detail about programming, since not all trainees have programming experience. Acquiring a basic background in robot programming at home thus enables members to benefit from more advanced programming trainings and to ask robotics trainers more precise and purposeful questions, grounded in the goals and needs of the robotics projects they envision. This arguably fosters a problem-oriented, constructivist learning environment [60,61] in the makerspace, while the absolute basics are self-taught. In the makerspace, members can then translate their robot programs for the different available robots, for example, to determine which of them may be best suited for the task at hand. At the same time, using the GRPI, robotics trainers can develop program generators for the different robot types available in the makerspace.

Safety is a challenging aspect of makerspace robotics, since, as opposed to an industrial context, makerspace member applications are not known in advance and therefore cannot be certified [17,18]. Consequently, makerspace members are responsible for their own safety [17], whereas makerspace representatives need only configure the safety settings in the robots’ native HMIs to ensure member safety (e.g., by enforcing strict, password-protected speed and force limits). Makerspace members can thus generate programs for any available robot without losing the safety-related and other features provided by the robot’s native HMI. In addition, the task of translating a generic source program into a robot-specific target one also stimulates users to learn how to work with different robots and programming environments by example.

Figure 9 illustrates the usage scenario and infrastructure that can be used in the makerspace to support program creation in Assembly at home and target program generation for a typical robotic system (in this example, a UR5) composed of a robot arm, a control computer, and a teach pendant hosting the HMI. To connect the toolchain used for program generation (i.e., Assembly–SikuliX–Robot HMI), the HMI software hosted on the physical teach pendant is mirrored (or replicated) over a secure wired connection (e.g., USB, Ethernet) using a remote monitoring and control tool (in this example, RealVNC) running on a computer provided by the makerspace. Universal Robots allows mirroring the robot’s HMI using a VNC server. Other vendors (e.g., KUKA, ABB, Fanuc) offer similar open or proprietary solutions for remotely monitoring and controlling a robot’s HMI in operation. The makerspace computer hosts the SikuliX GUI automation tool together with the robot-specific program generators. This way, members can generate programs in a target environment over a remote connection to the robot’s HMI. The setup in Figure 9 also ensures that members can work safely with collaborative robots, since it relies on the robot’s own safety features.

Table 1 shows some key performance indicators of program generation using the setup from Figure 9. Because of the latency of Polyscope, to which the remote connection adds around 100 ms and the image recognition algorithms of the OpenCV library [62] used by SikuliX add around 25 ms, program generation is slower than with URSim running on a powerful computer (e.g., Intel i7 or equivalent with 16 GB RAM). Nevertheless, the overall difference between the two setups in total program generation time is small, because the generator uses a feed-forward strategy; that is, it does not wait for success signals, which allows it to pipeline the generation of instructions. The relatively slow program generation can also be turned into a useful feature, since it allows users to configure the speed of program generation in a target environment to fit their learning pace.
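To make the feed-forward argument concrete, the following sketch compares two idealized timing models for emitting a sequence of GUI actions: one that waits for a confirmation round trip after every action, and one that issues actions back to back while the HMI processes them in order. The function names and all parameter values are hypothetical; only the 100 ms remote-connection figure echoes the text. The model deliberately ignores that locating the next on-screen element may itself require the previous action to have completed, so it overstates the achievable pipelining.

```python
def confirmed_time(n_actions, t_issue, t_proc, latency):
    """Each action is sent only after the previous one has been confirmed."""
    per_action = t_issue + latency + t_proc + latency  # send, travel, process, confirm
    return n_actions * per_action


def feed_forward_time(n_actions, t_issue, t_proc, latency):
    """Actions are sent back to back; the HMI works through them in order."""
    issued = 0.0    # time at which the generator finishes issuing the current action
    hmi_free = 0.0  # time at which the HMI finishes processing the previous action
    for _ in range(n_actions):
        issued += t_issue
        arrival = issued + latency
        start = max(arrival, hmi_free)  # wait if the HMI is still busy
        hmi_free = start + t_proc
    return hmi_free


# Hypothetical values: 10 actions, 25 ms to issue, 50 ms HMI processing, 100 ms latency.
args = (10, 0.025, 0.05, 0.1)
print(f"confirmed:    {confirmed_time(*args):.3f} s")
print(f"feed-forward: {feed_forward_time(*args):.3f} s")
```

With these illustrative values, the confirmed variant takes 2.750 s and the feed-forward variant 0.625 s. In this model, feed-forward generation pays the connection latency essentially once instead of twice per action, so the gap grows with latency.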

4.2. Generalizability

To assess the generalizability of the approach to other programming models, two additional program generators were created: one for Blockly and one for a proprietary list-based programming environment called Robot Control [37], which is provided with IGUS® robots. The rationale for this choice was twofold. First, block-based robot programming environments, which are provided with many educational robots (e.g., Dobot, UArm, Niryo) and lightweight collaborative robots (e.g., the ABB single-arm Yumi [63]), are increasingly popular because they facilitate an easy entry into robot programming. Similarly, list-based programming environments represent a popular “no-code” alternative to textual robot programming. For example, modern web-based generic programming environments, such as Drag&Bot, build on list-based programming. Second, simplified block-based and list-based environments usually do not provide means for importing (i.e., converting) programs created using other tools.

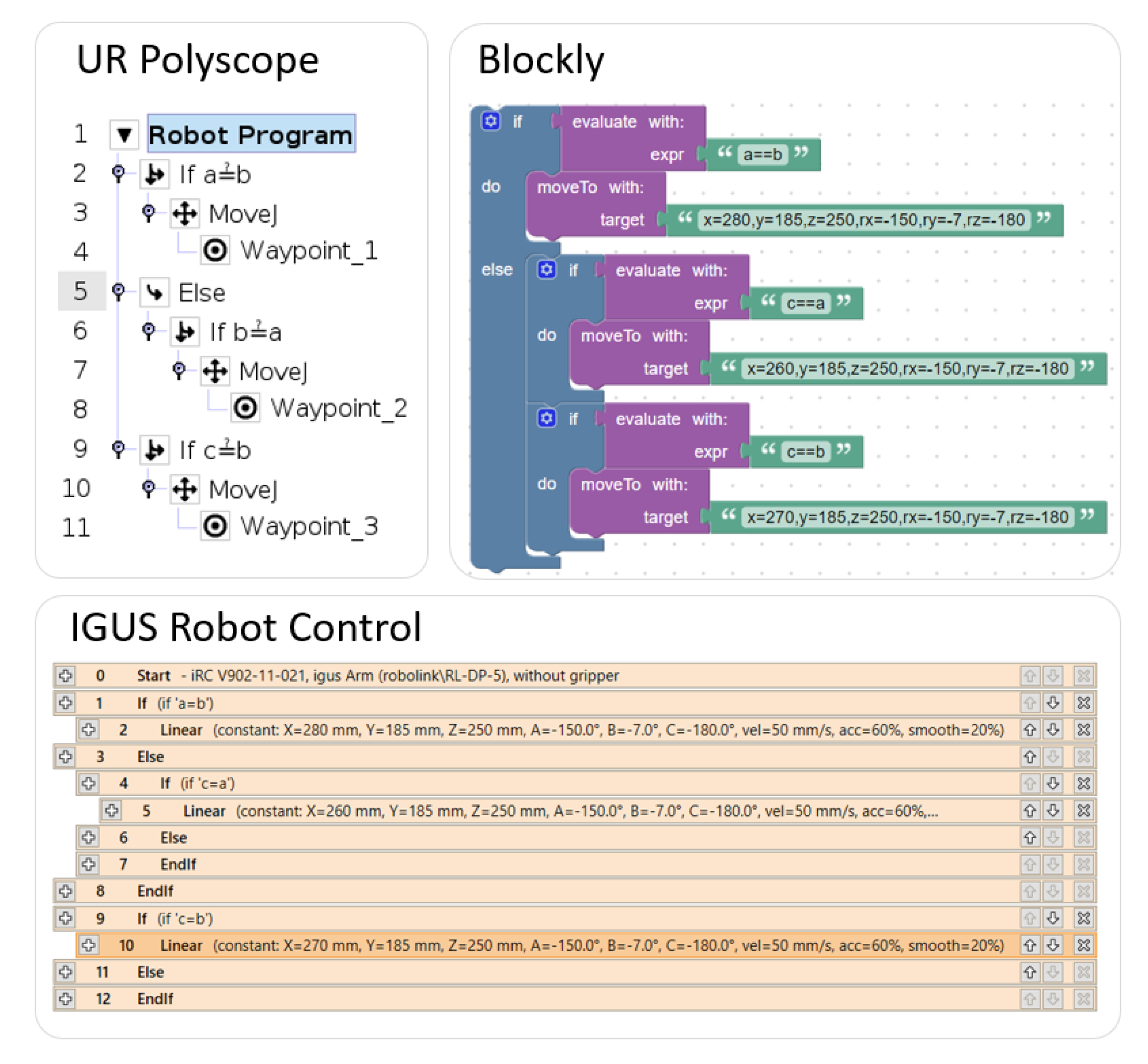

The evaluation procedure was as follows. First, a simple test program was created in Assembly and exported as an RPA model for the SikuliX environment. The content of that RPA model was:

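```python
start_if("a==b")                               # if a == b:
moveTo(280, 185, 250, -150, -7, -180, 50, 60)
add_else()                                     # else:
start_if("c==a")                               #     if c == a:
moveTo(260, 185, 250, -150, -7, -180, 50, 60)
wrap()                                         #     (closes the inner if)
wrap()                                         # (closes the outer if/else)
start_if("c==b")                               # if c == b:
moveTo(270, 185, 250, -150, -7, -180, 50, 60)
wrap()                                         # (closes the if)
```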

The aim of this program was to measure the implementation effort required to create generators able to produce (1) motion statements and (2) control structures in diverse target programming environments, and to assess the performance and complexity of those generators when applied to the test program listed above.
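What a GRPI-conforming generator has to do with this test program can be illustrated without any GUI at all. The minimal sketch below implements the four calls used in the listing (`start_if`, `add_else`, `wrap`, `moveTo`) as a hypothetical generator that writes indented pseudocode and tracks how many control structures are open; a real RPA generator would instead emulate the corresponding clicks and key presses in Polyscope, Blockly, or Robot Control. The class name `TextGenerator` and the assumption that `wrap()` closes the innermost open structure are illustrative and inferred from the listing.

```python
class TextGenerator:
    """Hypothetical GRPI-style generator that emits indented pseudocode
    instead of emulating GUI interactions."""

    INDENT = "    "

    def __init__(self):
        self.lines = []  # generated program, one statement per line
        self.depth = 0   # number of currently open control structures

    def _emit(self, text):
        self.lines.append(self.INDENT * self.depth + text)

    def start_if(self, condition):
        # Open a new conditional block; following statements go inside it.
        self._emit(f"if {condition}:")
        self.depth += 1

    def add_else(self):
        # Leave the then-branch and open the else-branch at the same level.
        if self.depth == 0:
            raise ValueError("add_else() outside of a conditional block")
        self.depth -= 1
        self._emit("else:")
        self.depth += 1

    def wrap(self):
        # Close the innermost open control structure.
        if self.depth == 0:
            raise ValueError("wrap() without an open control structure")
        self.depth -= 1

    def moveTo(self, *params):
        # Motion statement; the eight parameters are passed through unchanged.
        self._emit("moveTo(" + ", ".join(str(p) for p in params) + ")")


# Replaying the test program from the evaluation against this generator.
gen = TextGenerator()
gen.start_if("a==b")
gen.moveTo(280, 185, 250, -150, -7, -180, 50, 60)
gen.add_else()
gen.start_if("c==a")
gen.moveTo(260, 185, 250, -150, -7, -180, 50, 60)
gen.wrap()
gen.wrap()
gen.start_if("c==b")
gen.moveTo(270, 185, 250, -150, -7, -180, 50, 60)
gen.wrap()
print("\n".join(gen.lines))
```

Replaying the listing yields the expected nesting: `if c==a:` is indented under `else:`, and `if c==b:` starts again at the top level. Keeping the nesting state explicit mirrors what GUI-based generators must track implicitly, namely where in the program tree the next statement is inserted.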

Figure 10 shows extracts from the GRPI-conforming generators for the three robot programming environments used in the evaluation (UR Polyscope, Blockly, and IGUS® Robot Control), whereas Figure 11 shows the programs they produce in the respective environments. The measured key indicators are presented in Table 2.

First, it must be noted that it was technically possible to create program generators for each of the tested target environments with relatively little development effort. The effort differed between environments, with Blockly requiring the most. The reason is that, unlike Polyscope and Robot Control, Blockly does not use a fixed program pane; in those two environments, users create program elements at exact locations below preceding statements or within control statements using instruction-specific control buttons or menu items. Therefore, Blockly’s keyboard navigation feature was used whenever it provided a simpler solution than emulating drag-and-drop interactions with the mouse. In Blockly, keyboard control takes some time to learn and master. In addition, although the Blockly generator is approximately as long as the other two, the relatively long key sequences required to navigate through the menus must be accompanied by more elaborate comments in the generator code. The generator functions for the if-then-else statement in Blockly are also noticeably more complex, which is an effect of the keyboard navigation and of the mechanism by which parameters are associated with different blocks. It must also be emphasized that generating native Blockly expressions is cumbersome, which prompted the decision to implement a helper function that evaluates expressions provided as strings rather than “spelling out” native Blockly expressions. Although a sufficiently ingenious and patient programmer could implement an RPA model able to generate native Blockly expressions, this was beyond the scope of this evaluation. It suffices to note that each (graphical) programming model uses (slightly) different expression syntax and semantics, which requires appropriate solutions or workarounds to enable RPA-based program generation.
Choosing keyboard navigation over drag-and-drop programming may be regarded as a design tactic that can be applied whenever emulating keyboard interactions is more effective than emulating mouse interactions.
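The string-expression workaround can be illustrated with a small Python analogue. The actual helper is not listed in the text and, in the generated Blockly program, would run inside the target environment. The sketch below, in which `evaluate` and `_eval` are hypothetical names, only shows the idea of keeping a condition such as "a==b" as a string and evaluating it with a restricted evaluator built on Python's `ast` module rather than with `eval`.

```python
import ast
import operator

# Comparison and boolean operators accepted in condition strings.
_COMPARE = {
    ast.Eq: operator.eq, ast.NotEq: operator.ne,
    ast.Lt: operator.lt, ast.LtE: operator.le,
    ast.Gt: operator.gt, ast.GtE: operator.ge,
}
_BOOL = {ast.And: all, ast.Or: any}


def evaluate(expression, variables):
    """Evaluate a condition string such as "a==b" against a variable table."""
    tree = ast.parse(expression, mode="eval")
    return _eval(tree.body, variables)


def _eval(node, variables):
    if isinstance(node, ast.Compare):
        # Chained comparisons (e.g. "a < b < c") are evaluated pairwise.
        left = _eval(node.left, variables)
        for op, right_node in zip(node.ops, node.comparators):
            compare = _COMPARE.get(type(op))
            if compare is None:
                raise ValueError(f"unsupported comparison: {type(op).__name__}")
            right = _eval(right_node, variables)
            if not compare(left, right):
                return False
            left = right
        return True
    if isinstance(node, ast.BoolOp):
        # all/any consume the generator lazily, so and/or short-circuit.
        return _BOOL[type(node.op)](_eval(v, variables) for v in node.values)
    if isinstance(node, ast.Name):
        return variables[node.id]
    if isinstance(node, ast.Constant):
        return node.value
    # Anything else (calls, attribute access, arithmetic, ...) is rejected.
    raise ValueError(f"unsupported expression element: {type(node).__name__}")
```

Restricting the accepted syntax to names, constants, comparisons, and `and`/`or` keeps the helper small and rejects arbitrary code, at the cost of not supporting arithmetic inside conditions.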

The results also suggest that list-based (i.e., Robot Control) and tree-based (i.e., Polyscope) programming are easier to emulate using an RPA approach than block-based programming. This is likely because, in Blockly, Assembly, and other similar environments, programs and statements are represented as sequences of blocks of different sizes and shapes, whereas in Polyscope and Robot Control, the structure of statements is rather fixed and more easily navigable, either by keyboard or by mouse. This is unsurprising, since Blockly aims to facilitate a learner’s transition towards textual programming and thus to foster creativity. By contrast, classic robot programming models are more straightforward and do not have an exit strategy, i.e., a strategy to facilitate the user’s transition towards textual programming [31].

Concerning the reliability of RPA-based program generation, repeatability arguably provides a good indicator. The program generation process was repeated 50 times for each target environment and, once a working setup was achieved, the OpenCV image recognition algorithms integrated in SikuliX never failed on a 1920 × 1080 screen.
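A repeatability measurement of this kind can be automated with a small harness that runs a generator a fixed number of times and records failures and durations. The sketch below assumes that a generator is a zero-argument callable that raises an exception when a step fails (e.g., when an on-screen element cannot be located); `measure_repeatability` and the flaky stand-in generator are hypothetical.

```python
import time


def measure_repeatability(generate, runs=50):
    """Run a program generator repeatedly and summarize failures and timing."""
    failures = []
    durations = []
    for run in range(runs):
        start = time.perf_counter()
        try:
            generate()
        except Exception as exc:  # any failed step counts as a failed run
            failures.append((run, repr(exc)))
        durations.append(time.perf_counter() - start)
    return {
        "runs": runs,
        "failures": failures,
        "success_rate": (runs - len(failures)) / runs,
        "mean_duration_s": sum(durations) / runs,
    }


calls = {"n": 0}


def flaky_generator():
    # Stand-in for a real generator: fails on every tenth run.
    calls["n"] += 1
    if calls["n"] % 10 == 0:
        raise RuntimeError("target element not found")


report = measure_repeatability(flaky_generator, runs=50)
print(report["success_rate"], len(report["failures"]))
```

In a real setup, the target environment would also have to be reset between runs (e.g., by starting a new program in the HMI); otherwise later runs operate on a different screen state than the first one.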

In terms of performance, thanks to the keyboard navigation feature, the Blockly generator outperformed the other two generators. The relatively slow performance of the Polyscope generator can be explained by the fact that URSim (i.e., the Polyscope simulation environment) runs in an Ubuntu virtual machine and uses a rather slow Java UI framework, which induces some latency. For example, Polyscope uses modal dialogues for user inputs (e.g., target locations), which take approximately 100 ms to pop up in the virtual machine.

5. Discussion

Overall, the evaluation results suggest that the proposed approach to generic robot programming, which emulates user interactions, is applicable to an entire range of graphical (block-, tree-, or list-based) target robot programming environments. Textual robot programming environments were not evaluated as targets, since code generation is a well-studied and readily solvable problem. In addition, Assembly generates RPA models in Python, which demonstrates the system’s capability to generate GRPI-conforming code. The evaluation suggests that there are several usage scenarios in which the proposed approach presents advantages over existing GRP approaches.

In a first scenario, the proposed approach can be used to generate programs in proprietary graphical robot programming environments that do not allow importing programs created using other tools. This is the case for both IGUS® Robot Control and Blockly (which is used, for example, with ABB’s one-armed Yumi robot). With such environments, users have no option other than recreating existing programs whenever these need to be ported to a new robot that uses a proprietary programming model. This category of robots also includes the newest generation of low-cost cobots, such as the Franka Emika Panda robot, which uses a proprietary web-based programming environment.

In a second scenario, the members of shared machine shops, such as makerspaces, can benefit from the approach by creating robot programs at home and converting them for an entire range of target programming environments in the makerspace. This helps to democratize robot technology by providing inexpensive online access to simplified robot programming, which encourages people who do not typically have access to robots to explore the potential and limits of this technology.

In a third scenario, the automated generation of programs in a target graphical environment fosters learning how to program in the respective target environment. Tools such as SikuliX provide means for easily configuring the pace at which user interactions are emulated (e.g., by configuring the “mouse move delay” parameter). This way, program generation can be adapted to the learning pace of the learner. This also fosters learning to program by example, whereby the examples must be adjusted to fit the requirements of a particular application.
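The pacing idea can be sketched independently of any particular automation tool: every emulated action is followed by a learner-configurable pause. In SikuliX scripts, the "mouse move delay" mentioned above is set via `Settings.MoveMouseDelay`; the `PacedEmulator` class below is a hypothetical, tool-agnostic illustration with an injectable `sleep` function, so that it can be exercised without actually waiting.

```python
import time


class PacedEmulator:
    """Wraps emulated GUI actions and pauses after each one, so that a
    learner can follow program generation at a self-chosen pace."""

    def __init__(self, pause_s=1.0, sleep=time.sleep):
        self.pause_s = pause_s  # learner-configurable delay between actions
        self.sleep = sleep      # injectable for testing
        self.steps = []         # human-readable log of performed actions

    def step(self, description, action=None):
        if action is not None:
            action()            # e.g. a click or key press in a real setup
        self.steps.append(description)
        self.sleep(self.pause_s)


# Usage with a recording "sleep" instead of real waiting.
pauses = []
emu = PacedEmulator(pause_s=2.0, sleep=pauses.append)
emu.step("open 'Move' menu")
emu.step("insert waypoint")
print(emu.steps, pauses)
```

Logging a readable description of each step alongside the pause lets a learner relate what happens on screen to the instruction currently being generated.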

For experts, this way of programming robots may be regarded as a form of test-driven development, in which an application is defined by a series of test cases that need to be passed. In this context, the proposed approach can be used to generate program stubs, which only require the adjustment of locations and waypoints. Generated programs may thus be regarded as design patterns or robot skills, which can be configured and tested on the shop floor.

5.1. Relationship with Component-Based and Model-Driven Robotics Software Engineering

The approach to GRP by GUI automation introduced in this paper shares some similarities with existing component-based [64] and model-driven [65] robotics software engineering approaches. Designing component-based robot software entails mapping various cohesive functionalities to components in order to separate design concerns and produce reusable software building blocks [64]. Furthermore, reusable components should be hardware-independent and interoperable with different software development technologies and control paradigms [64]. In this sense, the robot program generators introduced in Section 3.3 help to abstract dependencies on robot-specific hardware and software, thus enabling the development of reusable components on top of a common GRPI. Nevertheless, imposing restrictions upon the architecture of components is beyond the scope of the approach. Whereas in autonomous robotic applications componentization and object orientation help to produce reusable robot software, in practical contexts (e.g., on the factory shop floor and in makerspaces) and in human–robot collaboration applications, developers are confronted with safety, interoperability, and integration issues against the background of time pressure, resource scarcity, and lack of software engineering expertise. In this context, the proposed approach provides a frugal yet effective means of implementing result-oriented, robot-independent applications in an agile and pragmatic manner. At the same time, for users who are more interested in robotics research and advanced, (semi-)autonomous applications, the GRPI provides a required interface and contract that can be used by components, as described in [64].

The proposed approach fully supports model-driven robot programming and software engineering [65]. Within this paradigm, a domain-specific language and/or modeling environment is used to derive an abstract representation of a real system or phenomenon [65]. In practical terms, the application logic is represented using textual and/or visual notations, and the result is regarded as an (executable) model. Block-based programming environments, such as Blockly and Assembly, are good examples of model-based software authoring tools. Other notable examples include BPMN (Business Process Model and Notation), which has been used to model and simulate multi-robot architectures [66] and to represent manufacturing processes [67], and the VDI Norm 2680 [27], which has been used to create a constraint-based programming system for industrial robot arms [26]. In this context, the GRPI can be used to interface various modeling languages and environments with robot-specific programming environments. RPA models and robot code generators enable the inclusion of a wide range of robots into high-level model representations with relatively little effort.
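The role of the GRPI as an interface between modeling front ends and robot-specific generators can be sketched as an abstract base class plus a model walker. Everything below is a hypothetical illustration: the four methods mirror the calls used in the RPA model of the evaluation, while the nested-tuple model format stands in for whatever representation a block-based editor, BPMN tool, or other modeling environment would produce. Any generator implementing the interface, whether it emits text or emulates GUI interactions, can then be driven by the same walker.

```python
from abc import ABC, abstractmethod


class GRPI(ABC):
    """Hypothetical rendering of a generic robot programming interface as a contract."""

    @abstractmethod
    def moveTo(self, *pose): ...

    @abstractmethod
    def start_if(self, condition): ...

    @abstractmethod
    def add_else(self): ...

    @abstractmethod
    def wrap(self): ...


def emit(model, gen):
    """Walk a nested model and drive any GRPI-conforming generator."""
    for node in model:
        kind = node[0]
        if kind == "move":
            gen.moveTo(*node[1])
        elif kind == "if":
            _, condition, then_branch, else_branch = node
            gen.start_if(condition)
            emit(then_branch, gen)
            if else_branch:
                gen.add_else()
                emit(else_branch, gen)
            gen.wrap()  # close the conditional opened above
        else:
            raise ValueError(f"unknown model node: {kind!r}")


class CallRecorder(GRPI):
    """Generator that only records the calls it receives."""

    def __init__(self):
        self.calls = []

    def moveTo(self, *pose): self.calls.append(("moveTo", pose))
    def start_if(self, condition): self.calls.append(("start_if", condition))
    def add_else(self): self.calls.append(("add_else",))
    def wrap(self): self.calls.append(("wrap",))


model = [
    ("if", "a==b",
     [("move", (280, 185, 250, -150, -7, -180, 50, 60))],
     [("if", "c==a", [("move", (260, 185, 250, -150, -7, -180, 50, 60))], [])]),
    ("if", "c==b", [("move", (270, 185, 250, -150, -7, -180, 50, 60))], []),
]
rec = CallRecorder()
emit(model, rec)
print([name for name, *_ in rec.calls])
```

Walking this example model reproduces the call sequence of the test program used in Section 4.2. Because the walker, not the generator, decides when to call `add_else` and `wrap`, generators stay free of model-specific logic.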

5.2. Limitations and Future Work

Despite the successful development of generators for three dissimilar programming models, users should expect some difficulties if keyboard navigation is not supported in a target environment. In such cases, generating some instructions may not be possible without complex workarounds, which might render the image recognition process less reliable. Further evaluation studies should therefore include more diverse target environments that rely heavily on mouse or touch-based control.

The relatively long time taken by all generators suggests that users should anticipate delays whenever target programs need to be generated repeatedly. In scenarios where program generation must be carried out automatically in (near) real time, the approach might thus be inappropriate. The performance of RPA-based program generation is also contingent on the latency of the target environment and will never match that of generating textual programs.

Although the time required to develop program generators is comparable to that of developing any other code generator, the proposed approach facilitates test-driven development of generators by requiring developers to code in small increments while visually inspecting the result of the generator in the target environment, feature by feature. Compared to developing a ROS driver, the software engineering expertise and development time (including design, code instrumentation, and testing) required for program generators are low. However, developing a program generator for complex environments, such as Blockly, requires ingenuity and computational thinking. In addition, unlike official APIs, the HMIs of different robots currently come with no specialized technical support or documentation on how to automate them. In this context, the target system’s user manual can provide a valuable source of information. Details on integrating a generated robot program with other systems using GUI automation techniques are provided in [18].

Whereas in non-industrial, educational contexts the approach presents several advantages over other approaches (e.g., robotic middleware and robot programming using a teach pendant), an assessment of its utility in industrial contexts is still needed. Such an assessment could, for example, focus on program generation for legacy robot HMIs. Finally, a comprehensive evaluation of the approach with a significant number of users (with and without an industrial background), focused on the usability of the Assembly tool, has not yet been carried out and is currently being planned.