Surveillance Video Synopsis in GIS

Abstract

1. Introduction

2. Related Work

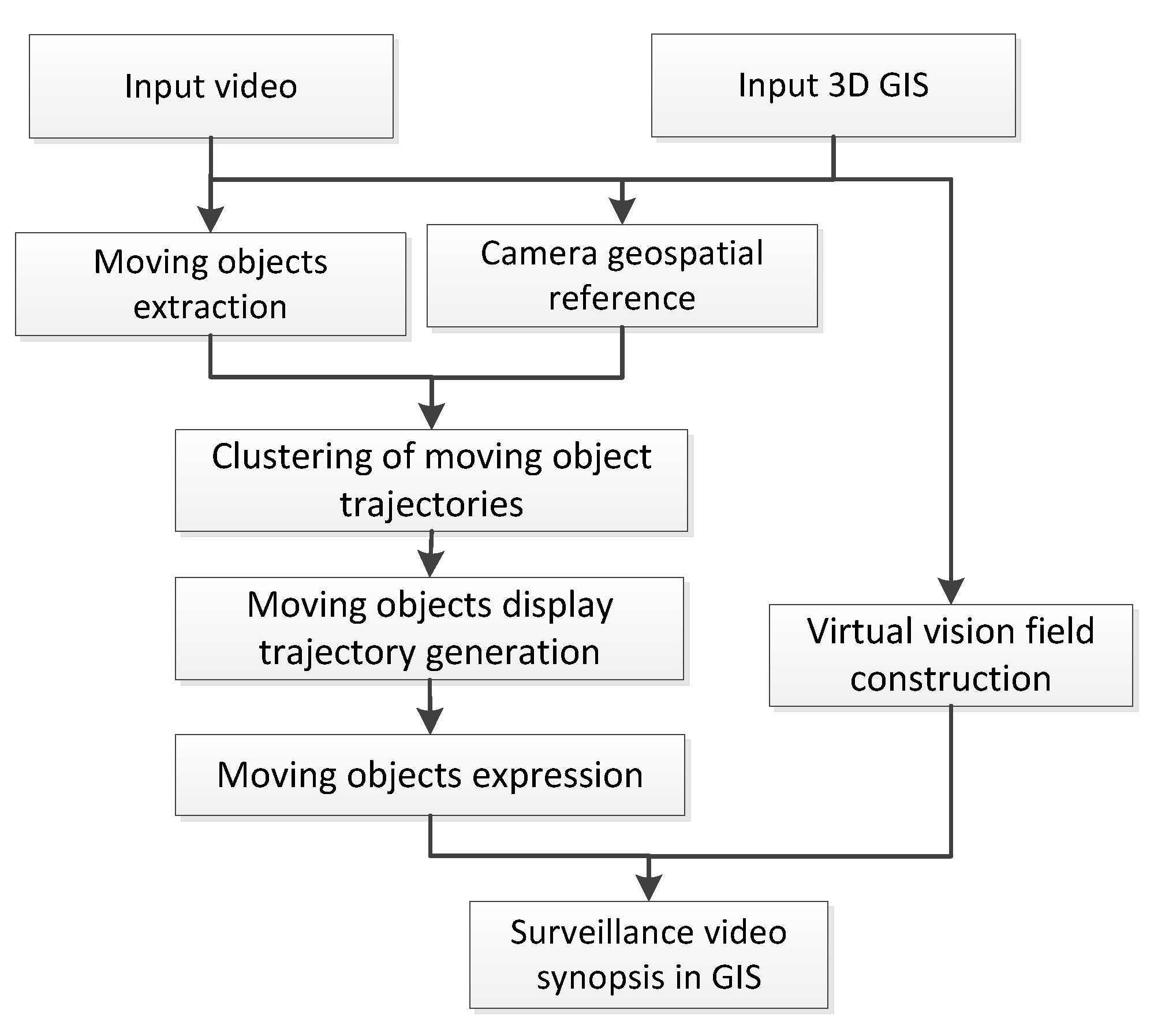

3. Surveillance Video Synopsis in GIS

3.1. Extraction and Clustering of Moving Objects

3.1.1. Extraction of Moving Objects

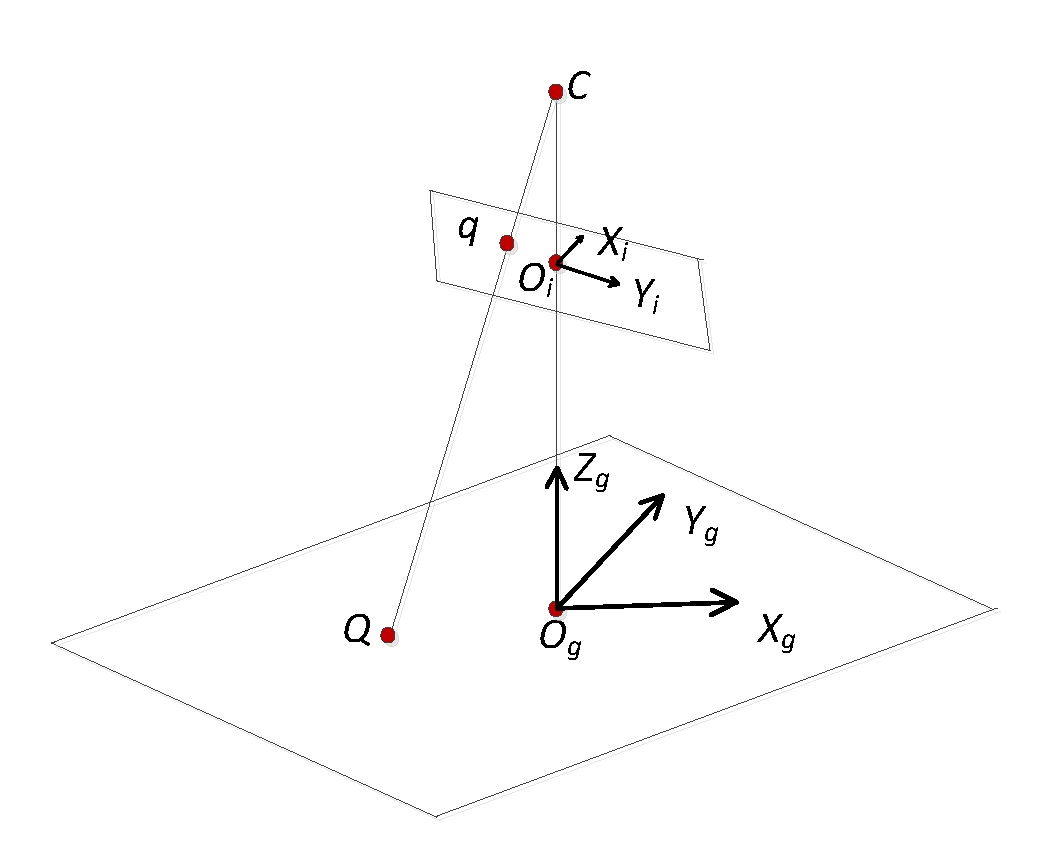

3.1.2. Georeferencing of Moving Object Trajectory

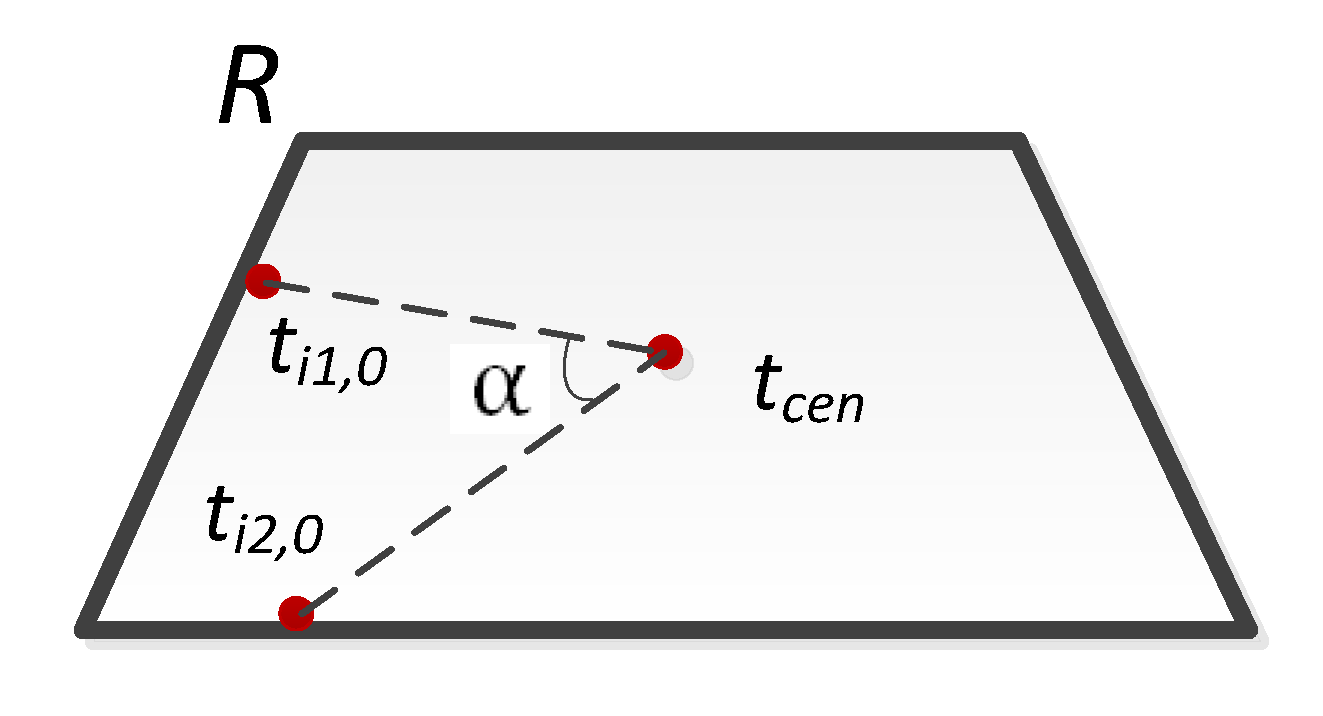

3.1.3. Clustering of Moving Object Trajectories

3.2. Video Synopsis in Virtual Scene

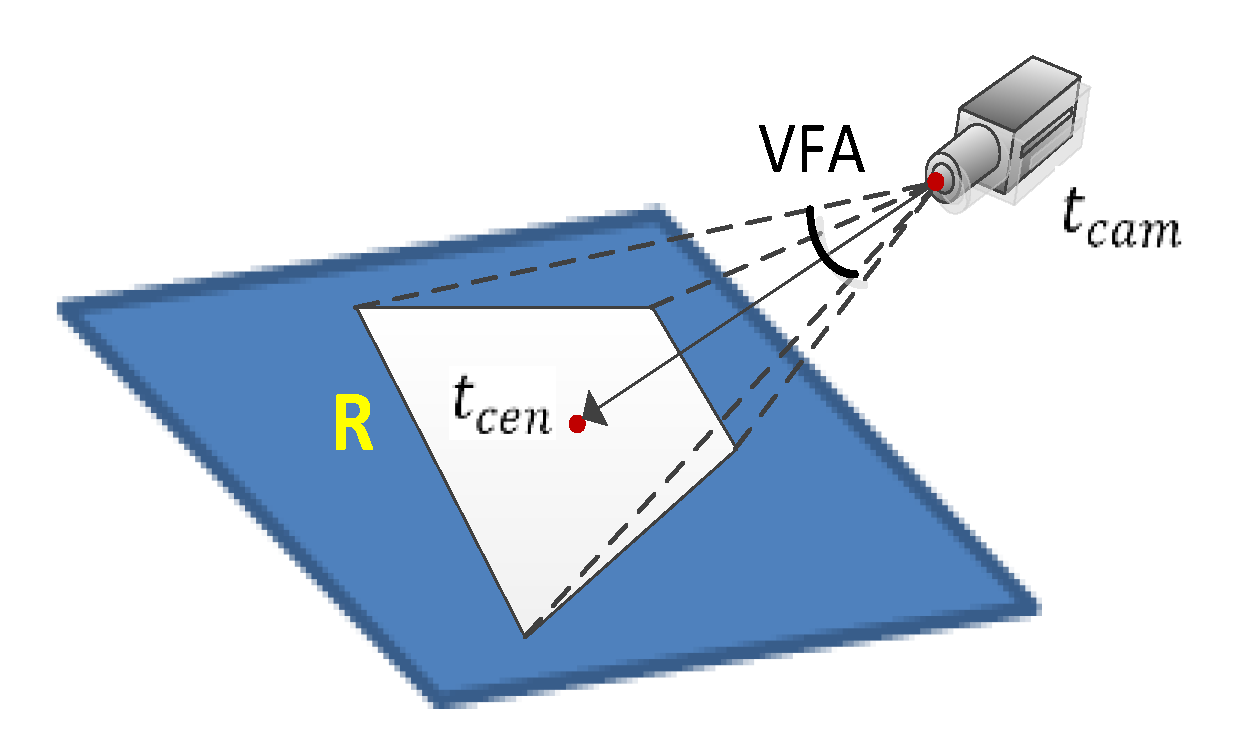

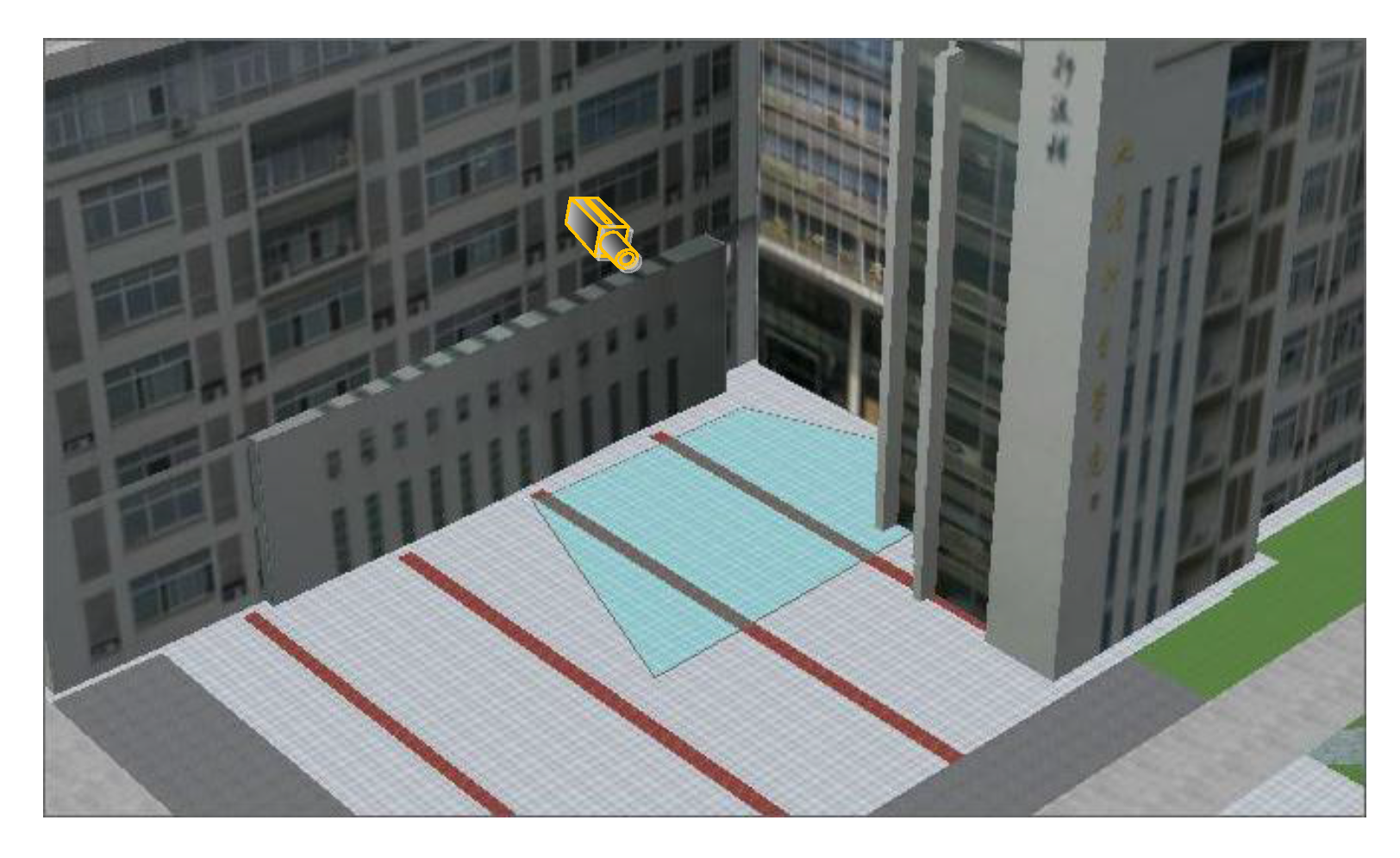

3.2.1. Virtual Field of Vision Generation

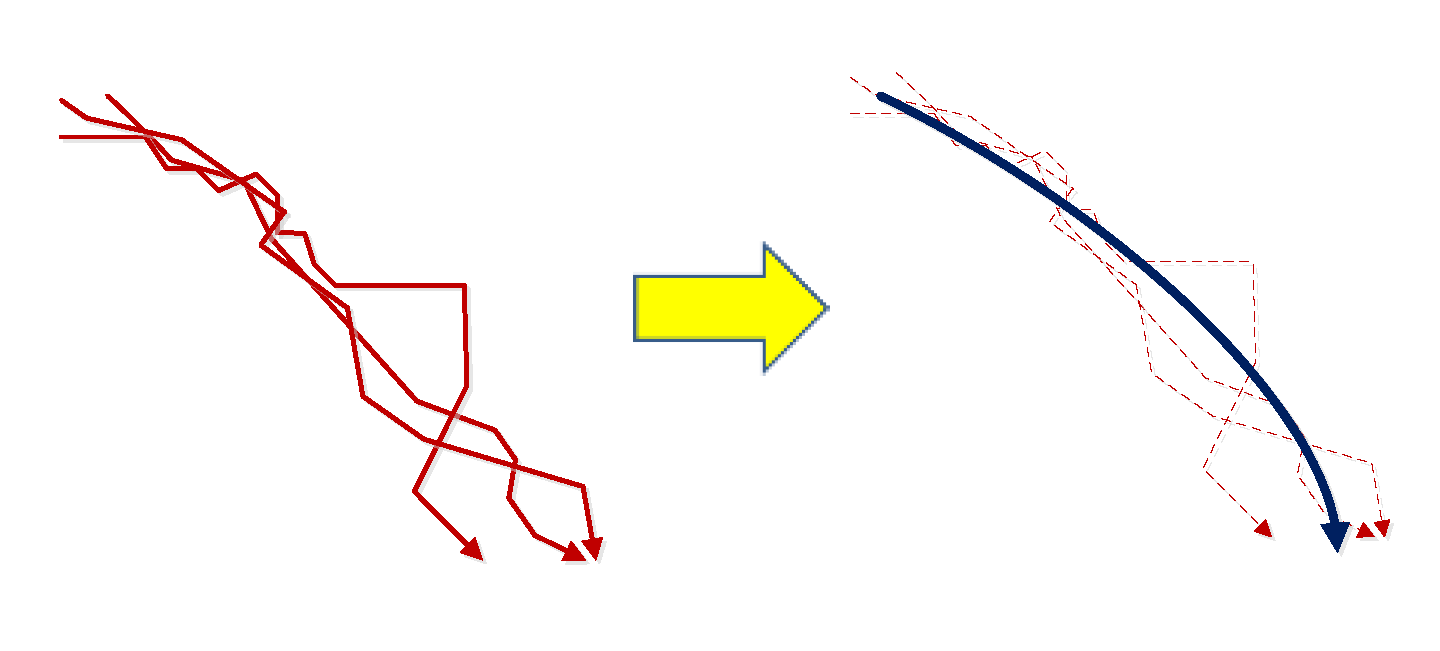

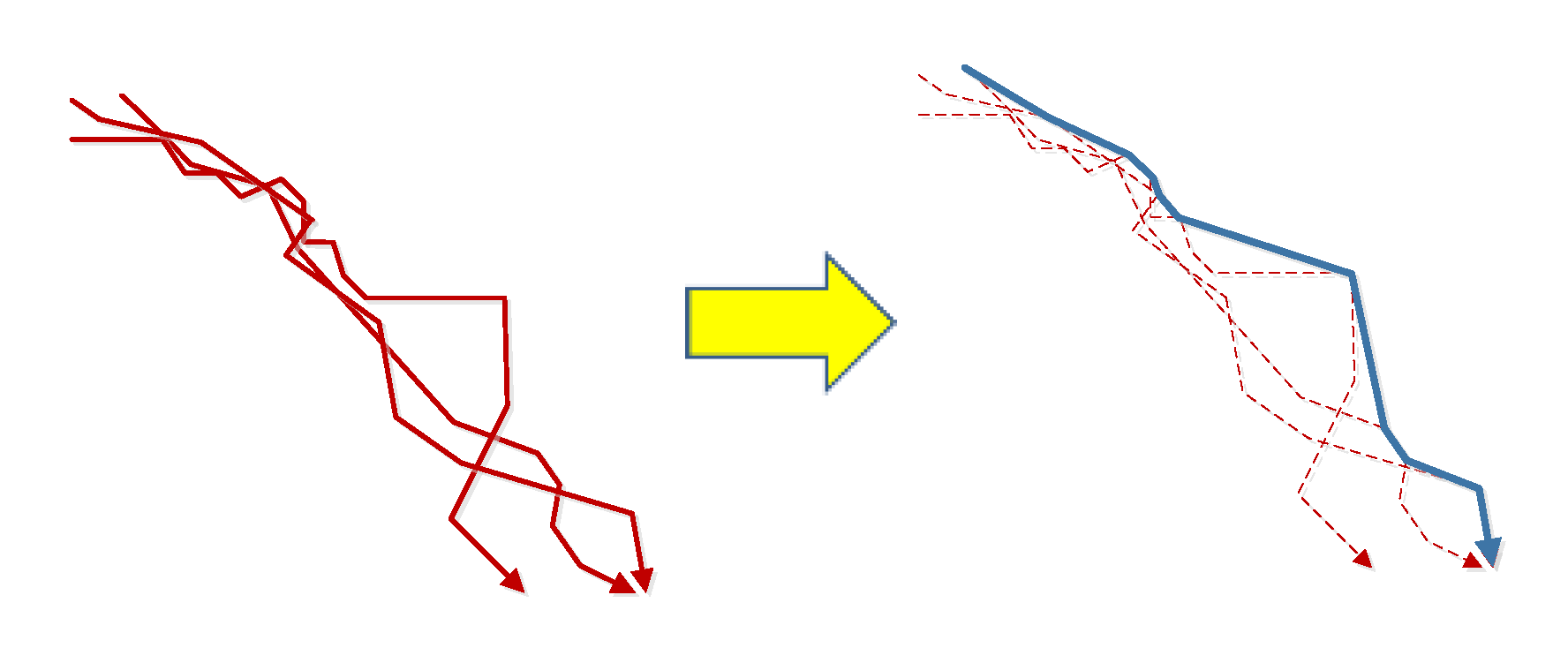

3.2.2. Trajectory Fitting Centerline Generation

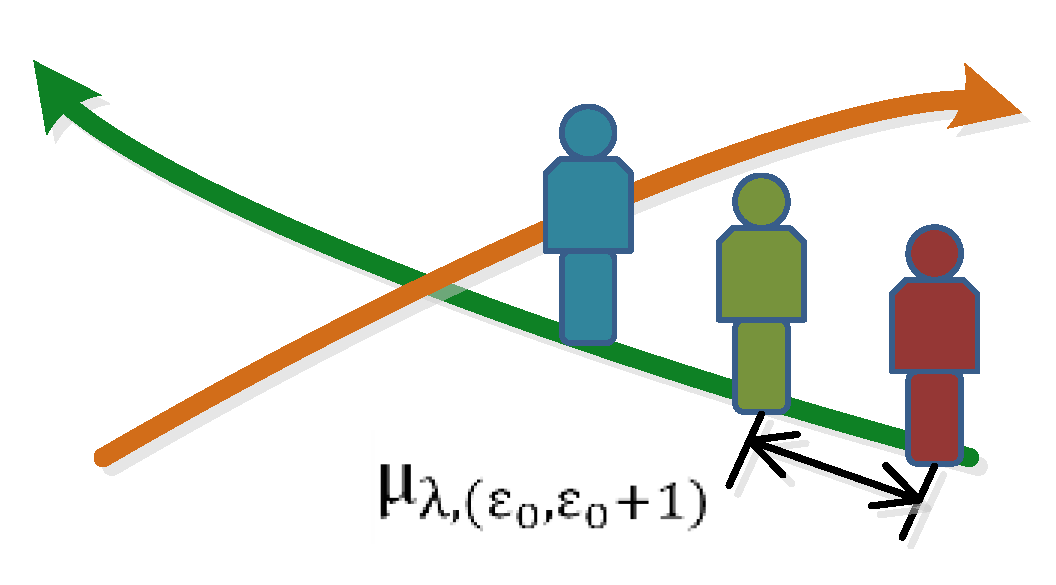

3.2.3. Expression of Video Moving Object

4. Experimental Results

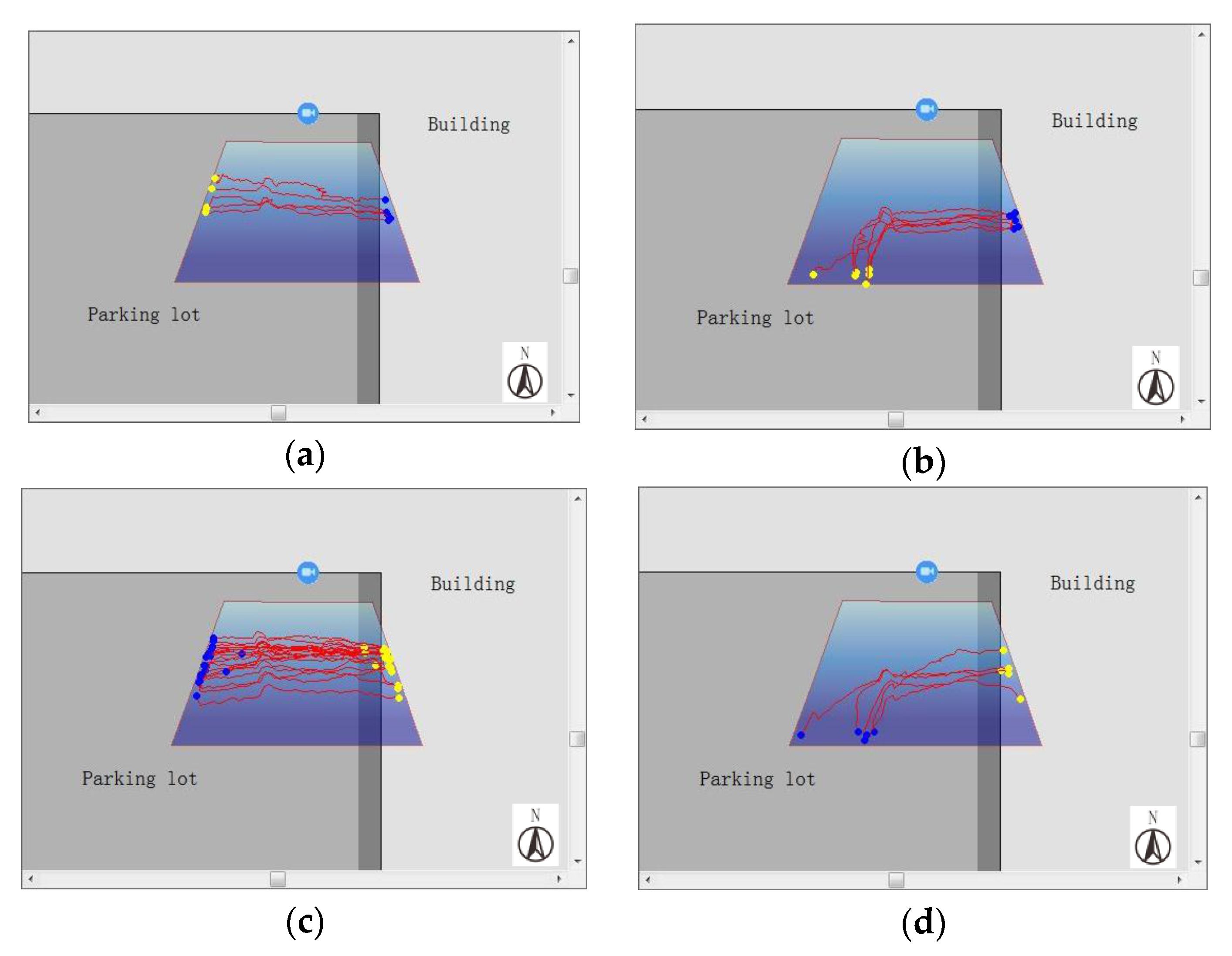

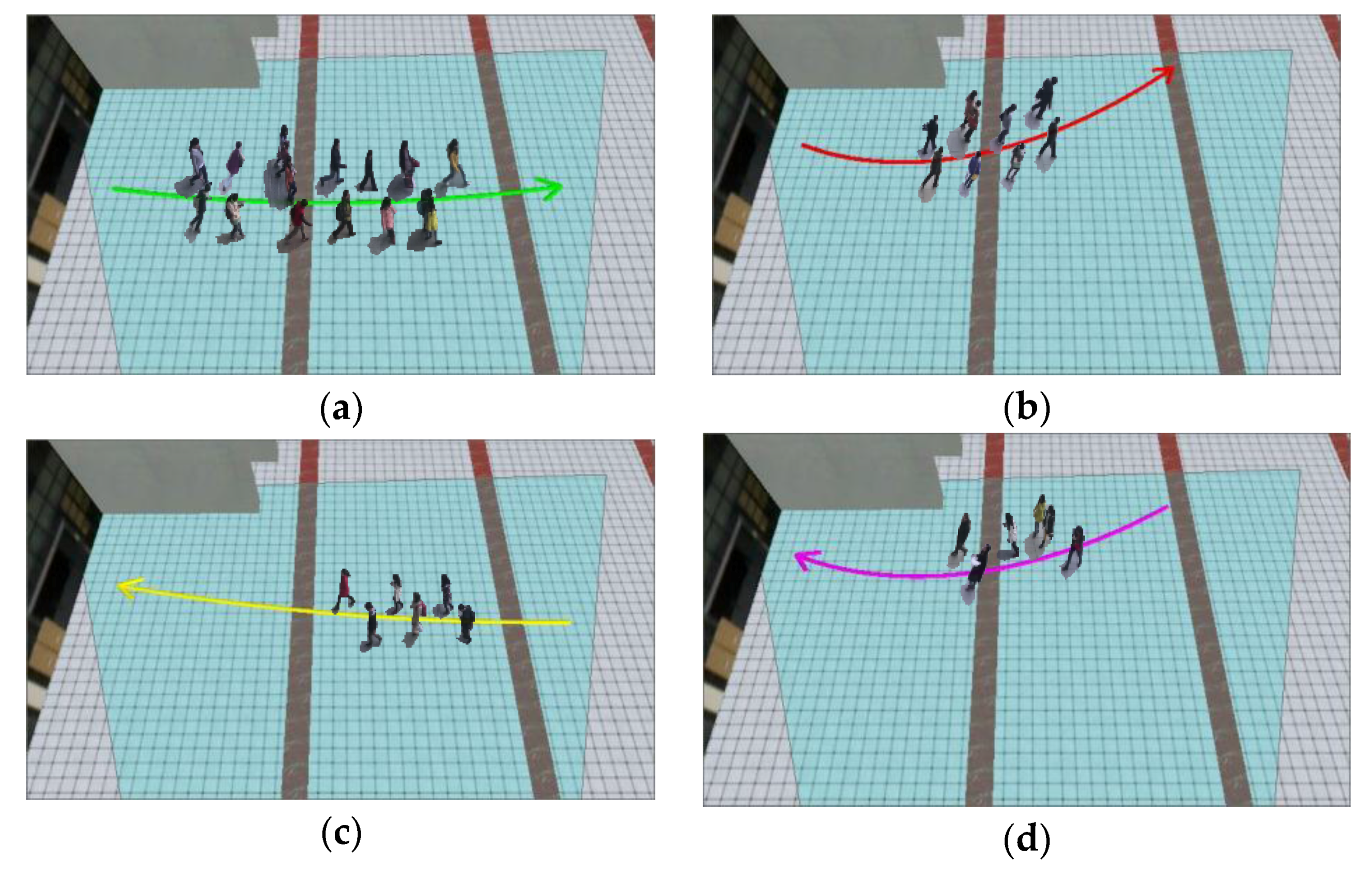

4.1. Clustering of Moving Object Trajectories

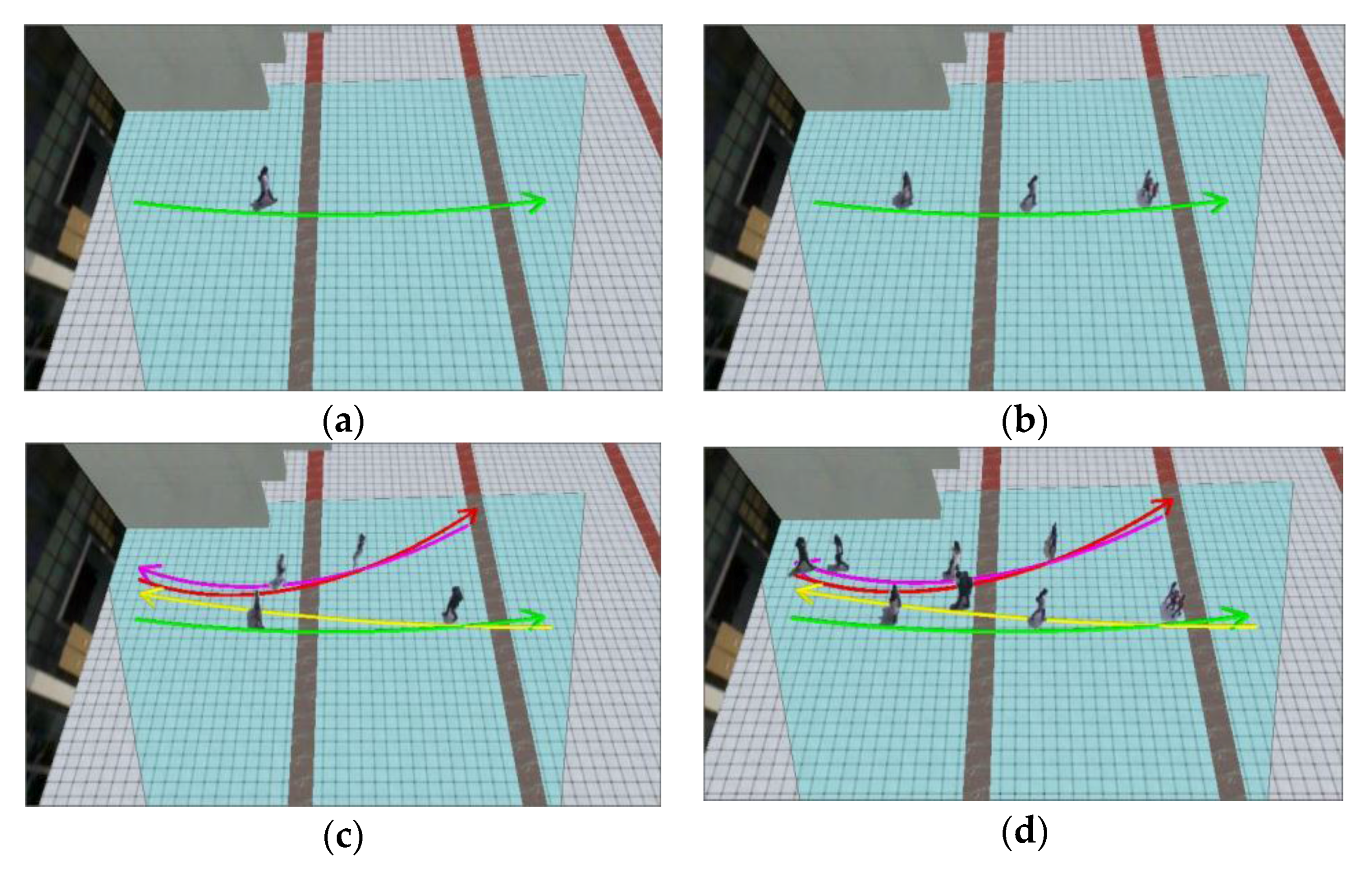

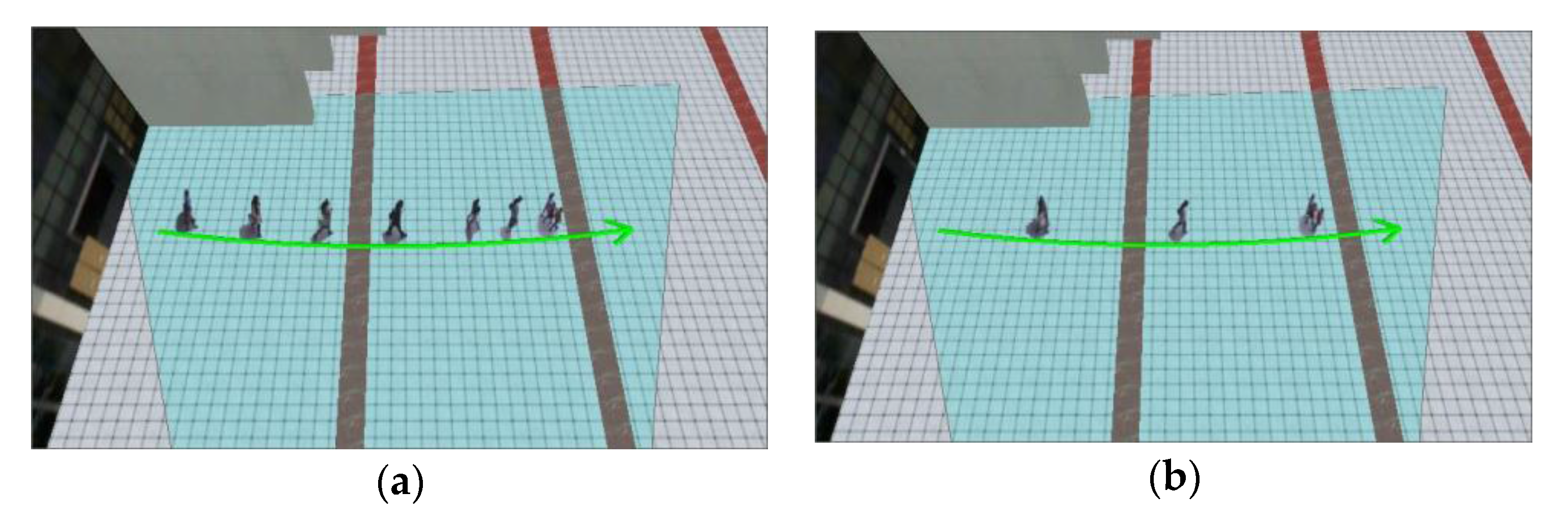

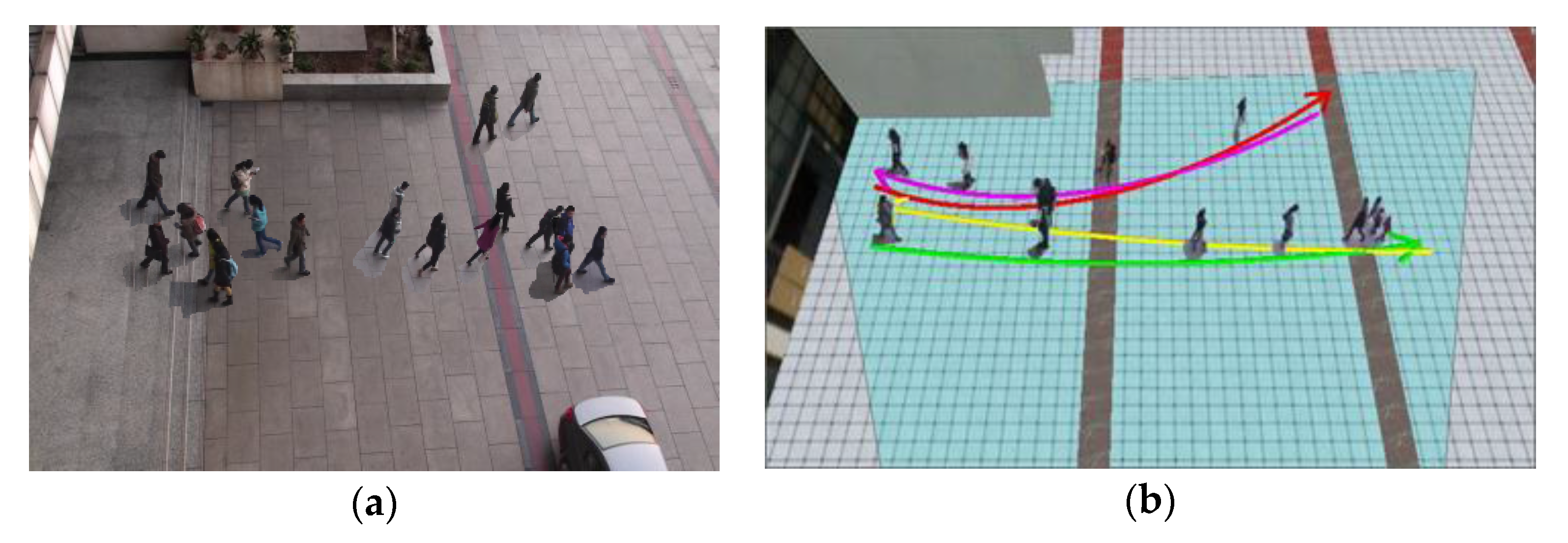

4.2. Visualization of Surveillance Video Synopsis in GIS

- (1) On the basis of georeferencing, the moving objects can be classified according to the geographical directions of their trajectories, which cannot be done accurately in image space.

- (2) There are definite display intervals and timing structural relationships between the moving objects, which avoids crowding of the video moving objects.

- (3) Objects’ moving trajectories can be directly viewed and analyzed along with other geospatial targets in the geographic scene.
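The direction-based classification mentioned in point (1) can be illustrated with a minimal sketch. It assumes each georeferenced trajectory is a list of planar (easting, northing) points and that direction is taken from the first to the last point. The eight compass sectors and the function names `bearing_deg`, `direction_class`, and `group_by_direction` are hypothetical choices for illustration only; they are not the clustering procedure of Section 3.1.3.

```python
import math
from collections import defaultdict

# Hypothetical 8-sector compass labels, each sector 45 degrees wide.
SECTORS = ["N", "NE", "E", "SE", "S", "SW", "W", "NW"]


def bearing_deg(start, end):
    """Bearing from start to end, in degrees clockwise from north.

    Points are planar (easting, northing) pairs in a projected
    coordinate system (an assumption of this sketch).
    """
    dx = end[0] - start[0]  # easting difference
    dy = end[1] - start[1]  # northing difference
    # atan2(dx, dy) measures the angle from the north axis, clockwise.
    return math.degrees(math.atan2(dx, dy)) % 360.0


def direction_class(trajectory):
    """Label a trajectory by the compass sector of its overall heading.

    A trajectory whose first and last points coincide has no defined
    heading; this sketch labels it "N" because atan2(0, 0) is 0.
    """
    b = bearing_deg(trajectory[0], trajectory[-1])
    # Shift by half a sector so that "N" covers 337.5 to 22.5 degrees.
    return SECTORS[int((b + 22.5) // 45) % 8]


def group_by_direction(trajectories):
    """Group object ids by the direction class of their trajectory."""
    groups = defaultdict(list)
    for obj_id, traj in trajectories.items():
        groups[direction_class(traj)].append(obj_id)
    return dict(groups)


if __name__ == "__main__":
    demo = {
        "a": [(0.0, 0.0), (5.0, 0.5), (10.0, 0.0)],   # heading east
        "b": [(10.0, 0.0), (5.0, 0.0), (0.0, 0.0)],   # heading west
        "c": [(0.0, 1.0), (12.0, 1.0)],               # heading east
    }
    print(group_by_direction(demo))
```

The same computation in image space would mix up directions, because pixel displacement depends on the camera pose; working in geographic coordinates is what makes the sector labels meaningful.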

4.3. Evaluation of the Timing Compression Rate

4.4. Evaluation of the Accuracy of Moving Object Retrieval

5. Conclusions and Discussion

- (1) This study proposed an approach for video synopsis in GIS by extensively applying the model to the integration of video moving objects and GIS.

- (2) The proposed approach improves video browsing efficiency while integrating the expression of video and geospatial information.

- (3) This work describes the general process and technical details of video synopsis in GIS, discusses the characteristics of the moving object expression model, and evaluates the timing compression rate and moving object retrieval accuracy in the realization of video synopsis.

Acknowledgments

Author Contributions

Conflicts of Interest


| CDSR \ ODSR | 0 | 1 |
|---|---|---|
| 0 |  |  |
| 1 |  |  |

| CDSR | ODSR = 0 | ODSR = 1 |
|---|---|---|
| 0 |  |  |
| 1 |  |  |

| CDSR | ODSR = 0 | ODSR = 1 (5) | ODSR = 1 (10) | ODSR = 1 (20) | ODSR = 1 (30) |
|---|---|---|---|---|---|
| 0 | 19.53% | 19.53% | 6.61% | 8.23% | 11.46% |
| 1 | 11.45% | 3.00% | 4.05% | 6.17% | 8.28% |

| β | True Positive | True Negative | False Positive |
|---|---|---|---|
| 0.7 | 95.2% | 4.8% | 0 |
| 0.75 | 98.4% | 1.6% | 0 |
| 0.8 | 96.8% | 1.6% | 1.6% |
| 0.85 | 96.8% | 1.6% | 1.6% |
| 0.9 | 98.4% | 0 | 1.6% |
| 0.95 | 98.4% | 0 | 1.6% |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Xie, Y.; Wang, M.; Liu, X.; Wu, Y. Surveillance Video Synopsis in GIS. ISPRS Int. J. Geo-Inf. 2017, 6, 333. https://doi.org/10.3390/ijgi6110333
