Automatic Room Segmentation of 3D Laser Data Using Morphological Processing

Abstract

1. Introduction

2. Related Work

2.1. Room Segmentation in Robotics Community

2.2. Room Segmentation in AEC Community

2.3. Summary

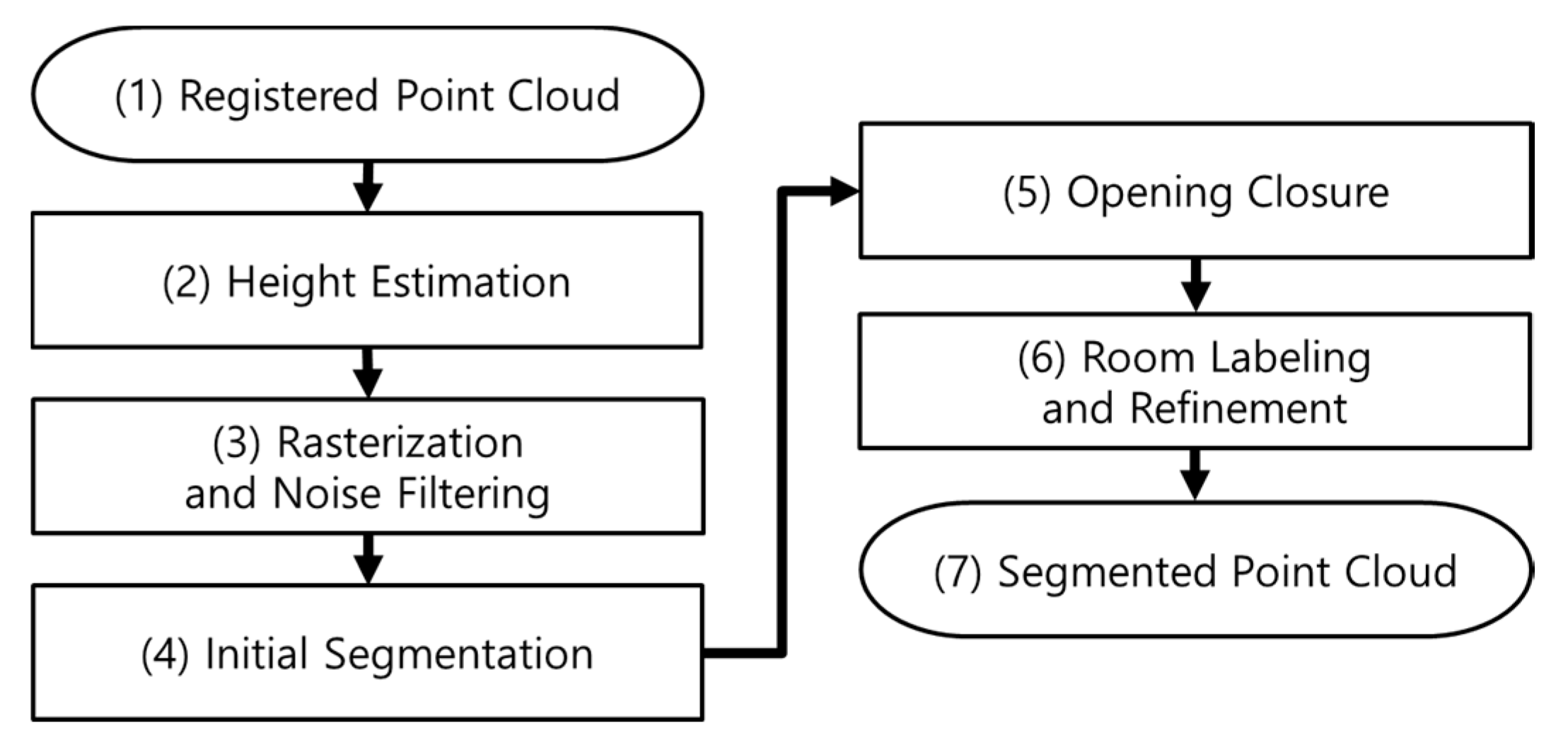

3. Our Approach to Room Segmentation

3.1. Overview

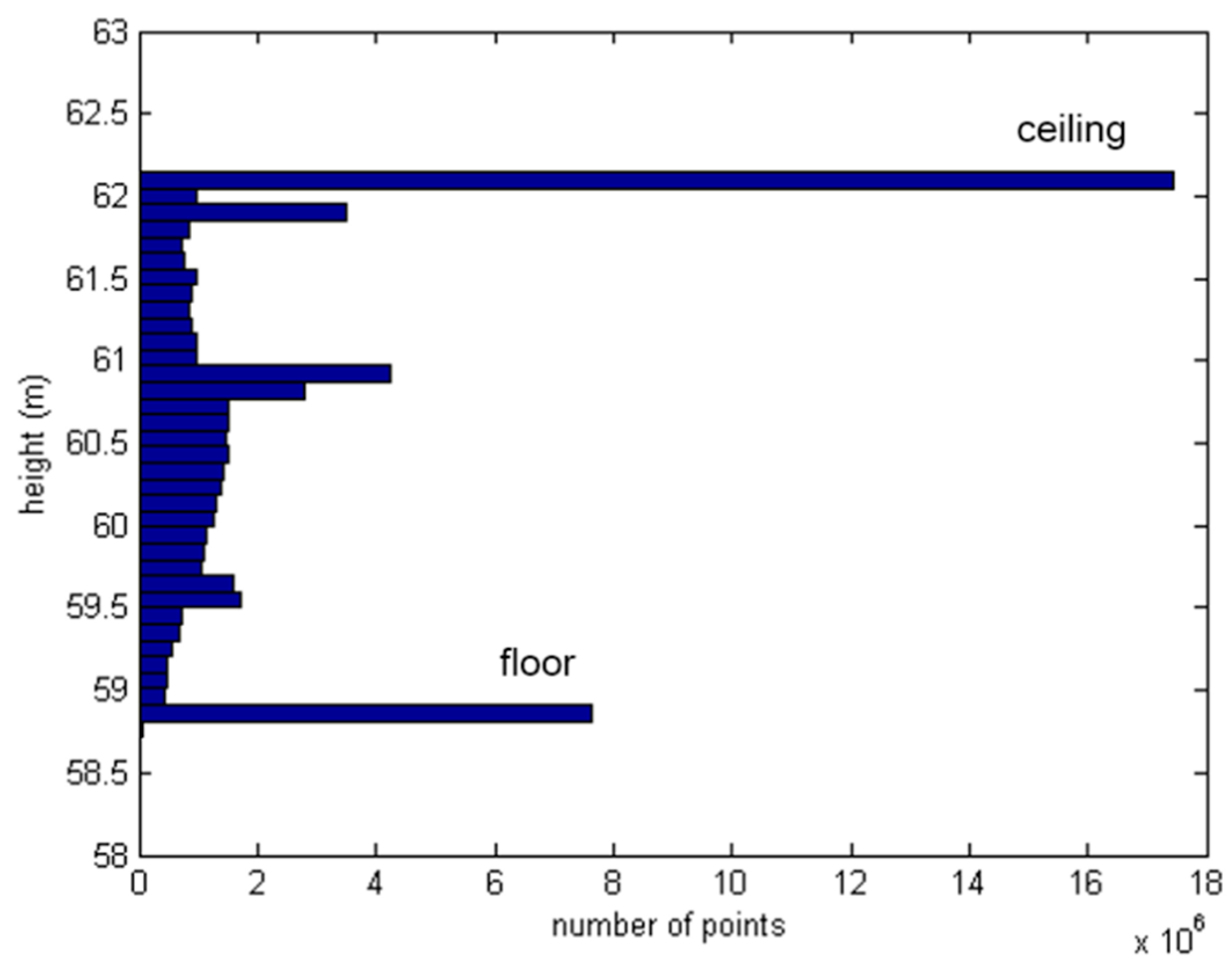

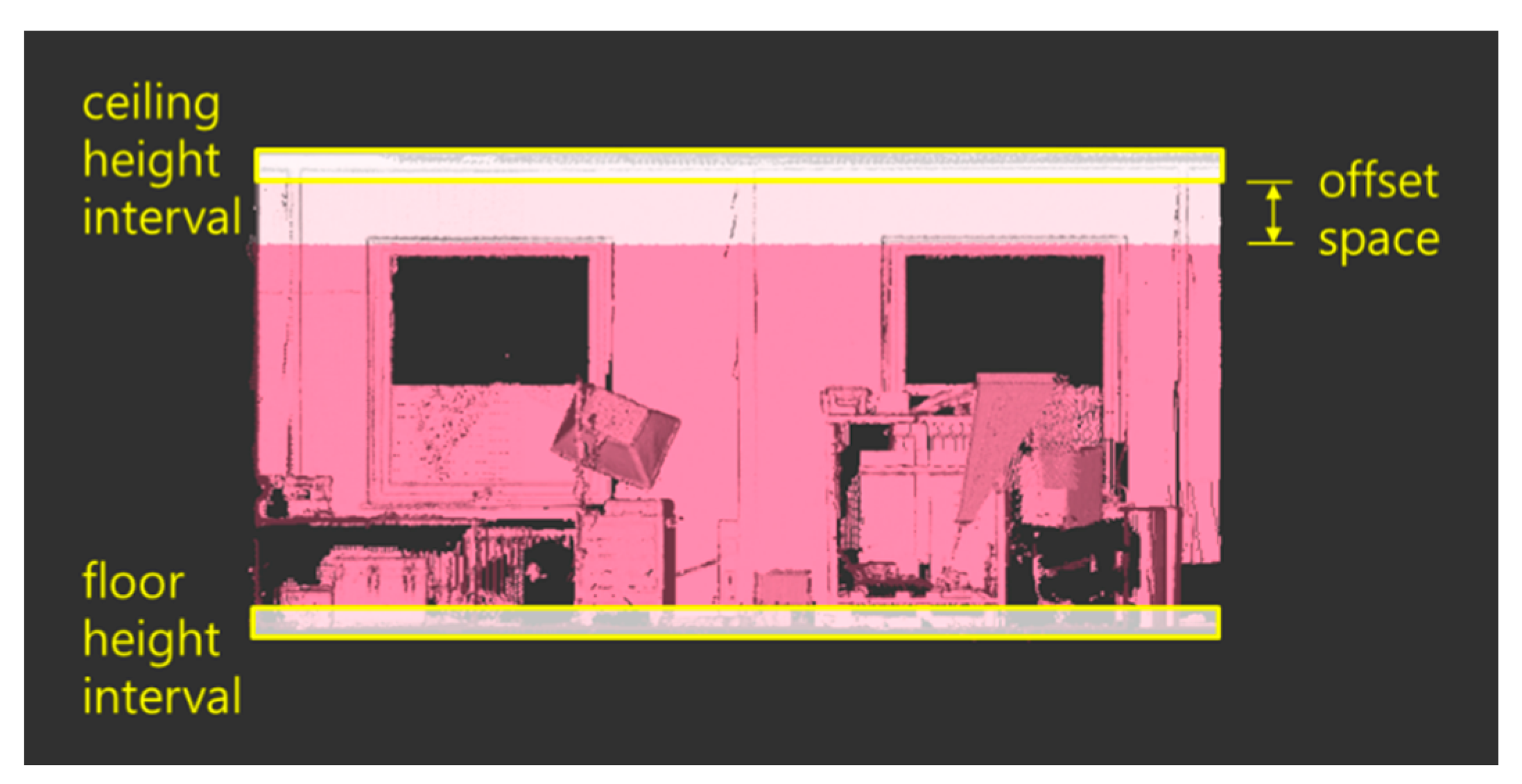

3.2. Height Estimation

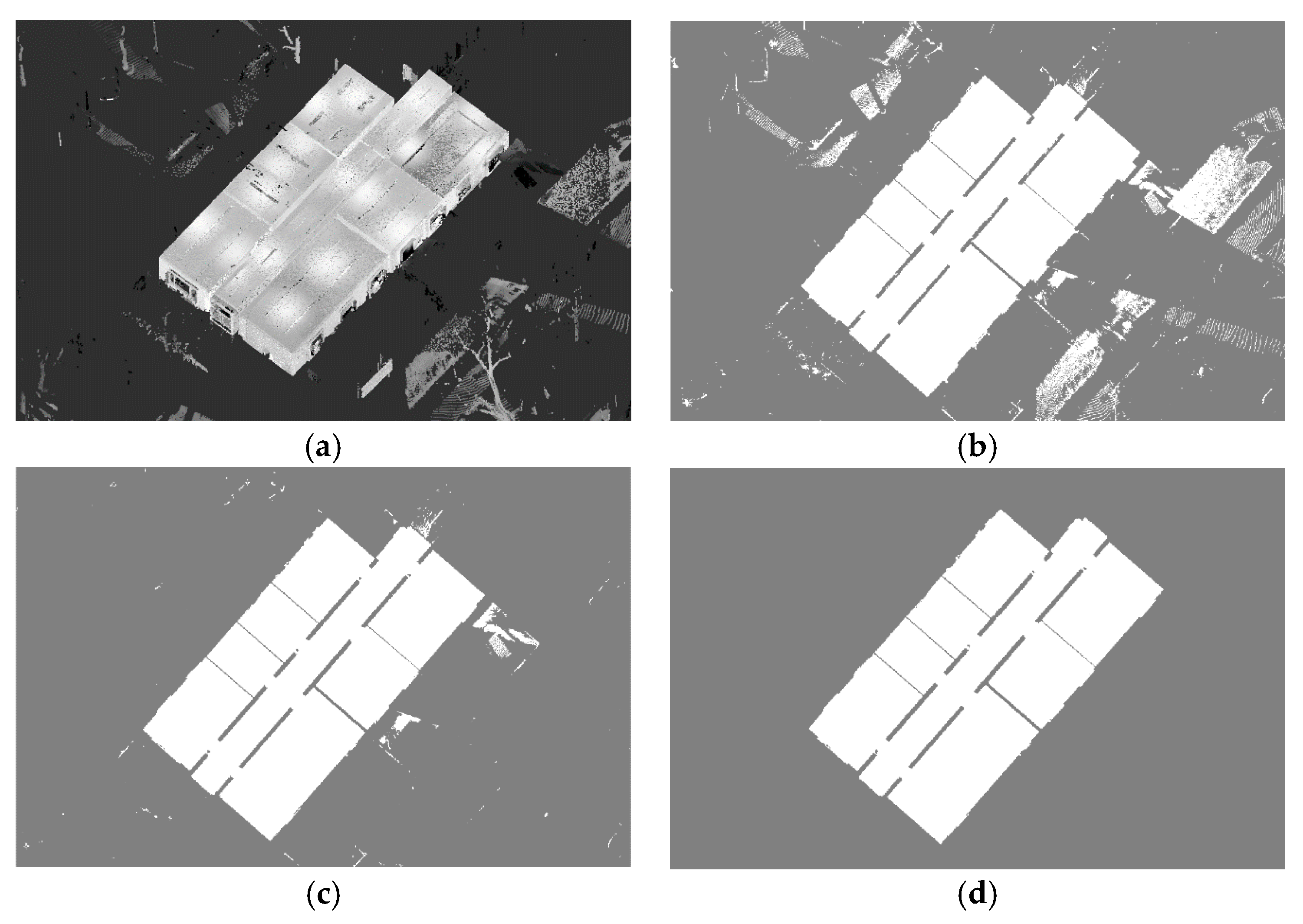

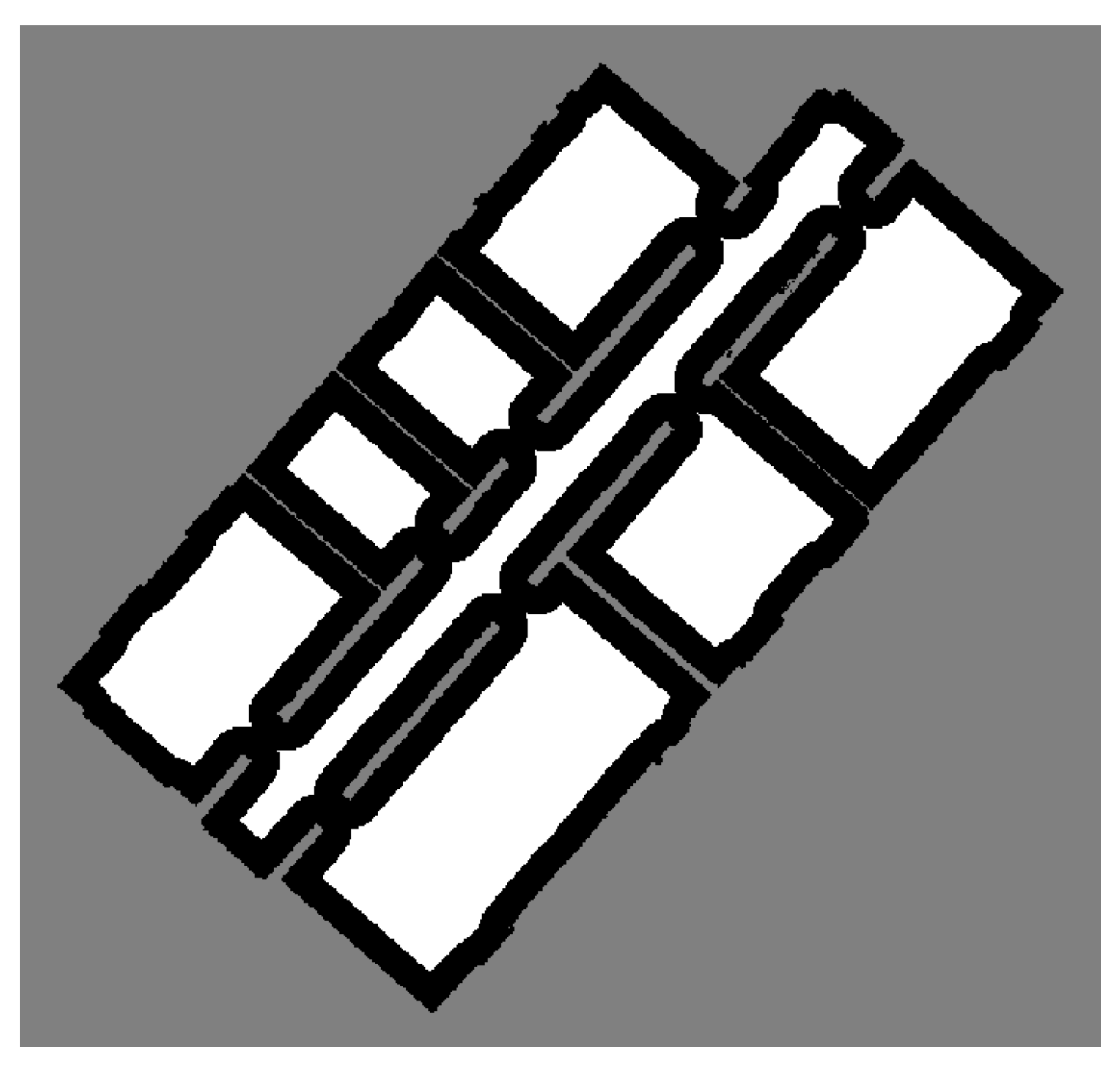

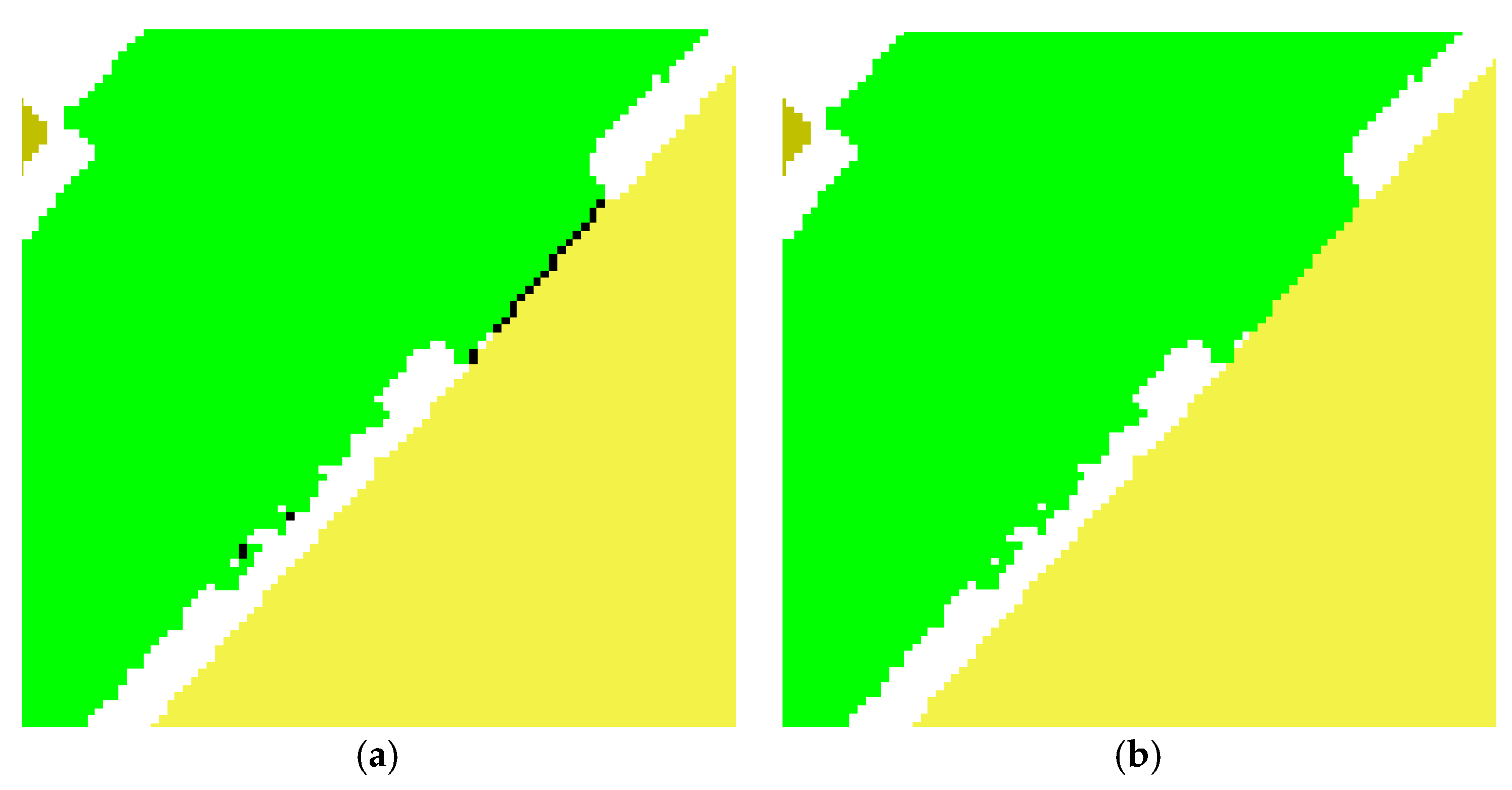

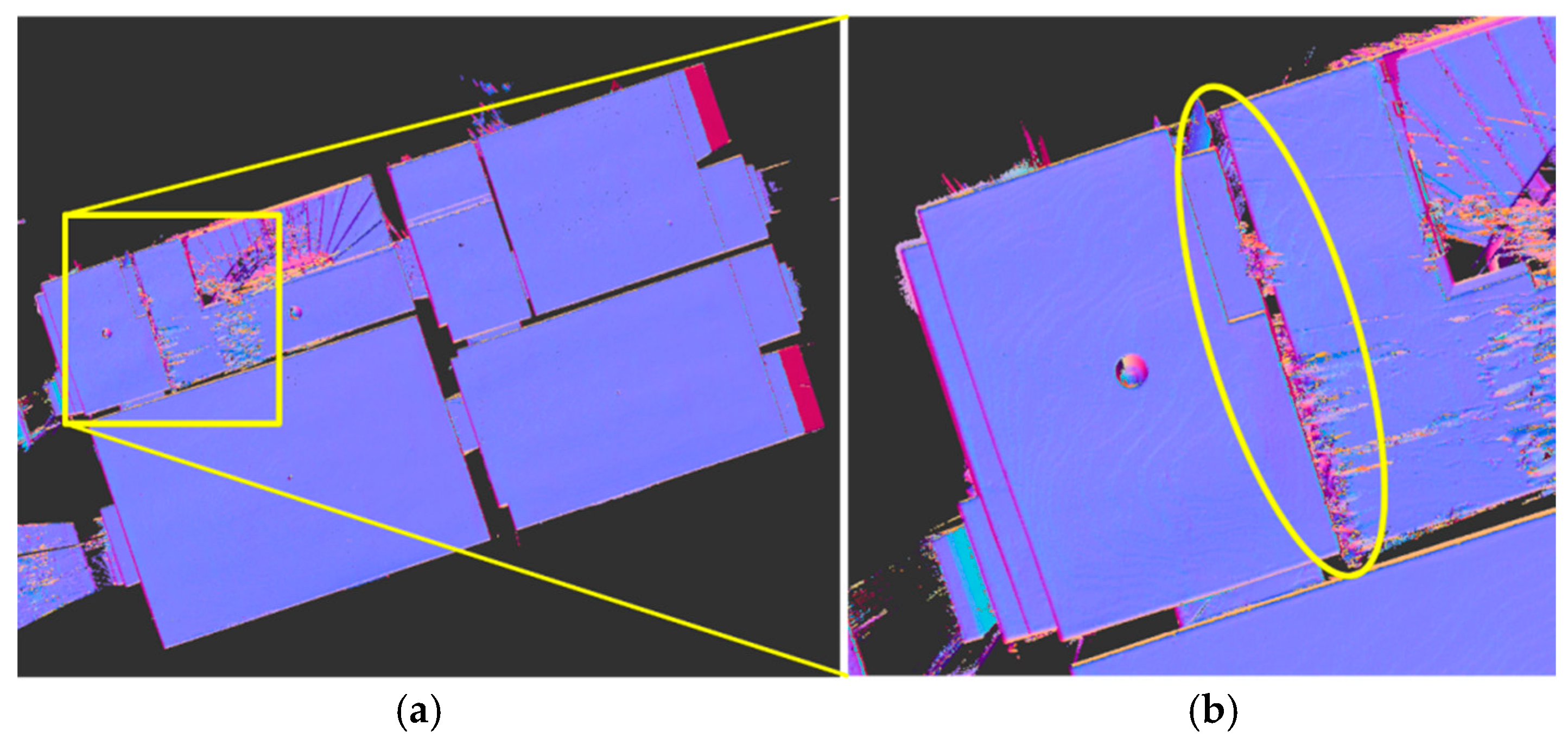

3.3. Rasterization and Noise Filtering

3.4. Initial Segmentation

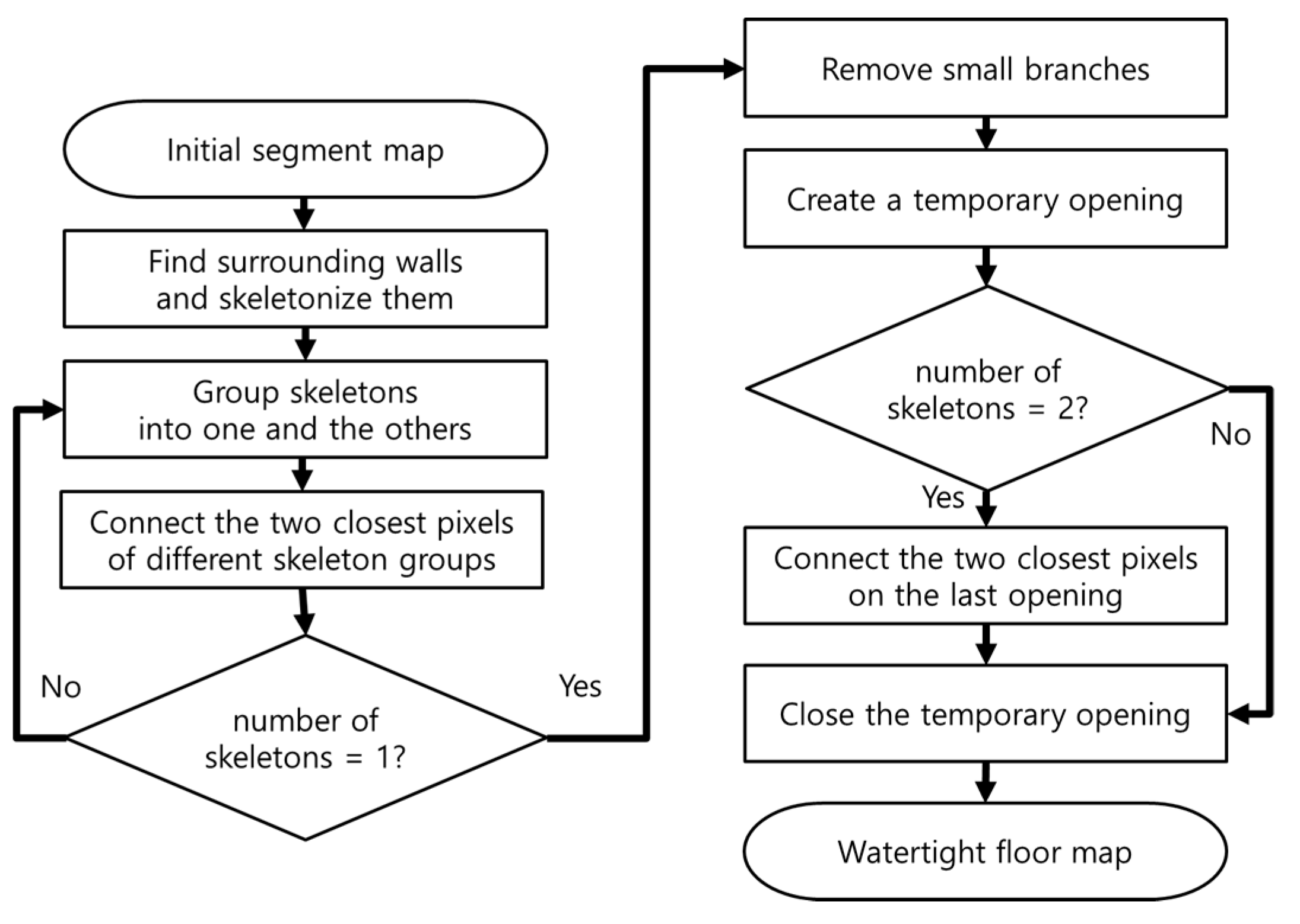

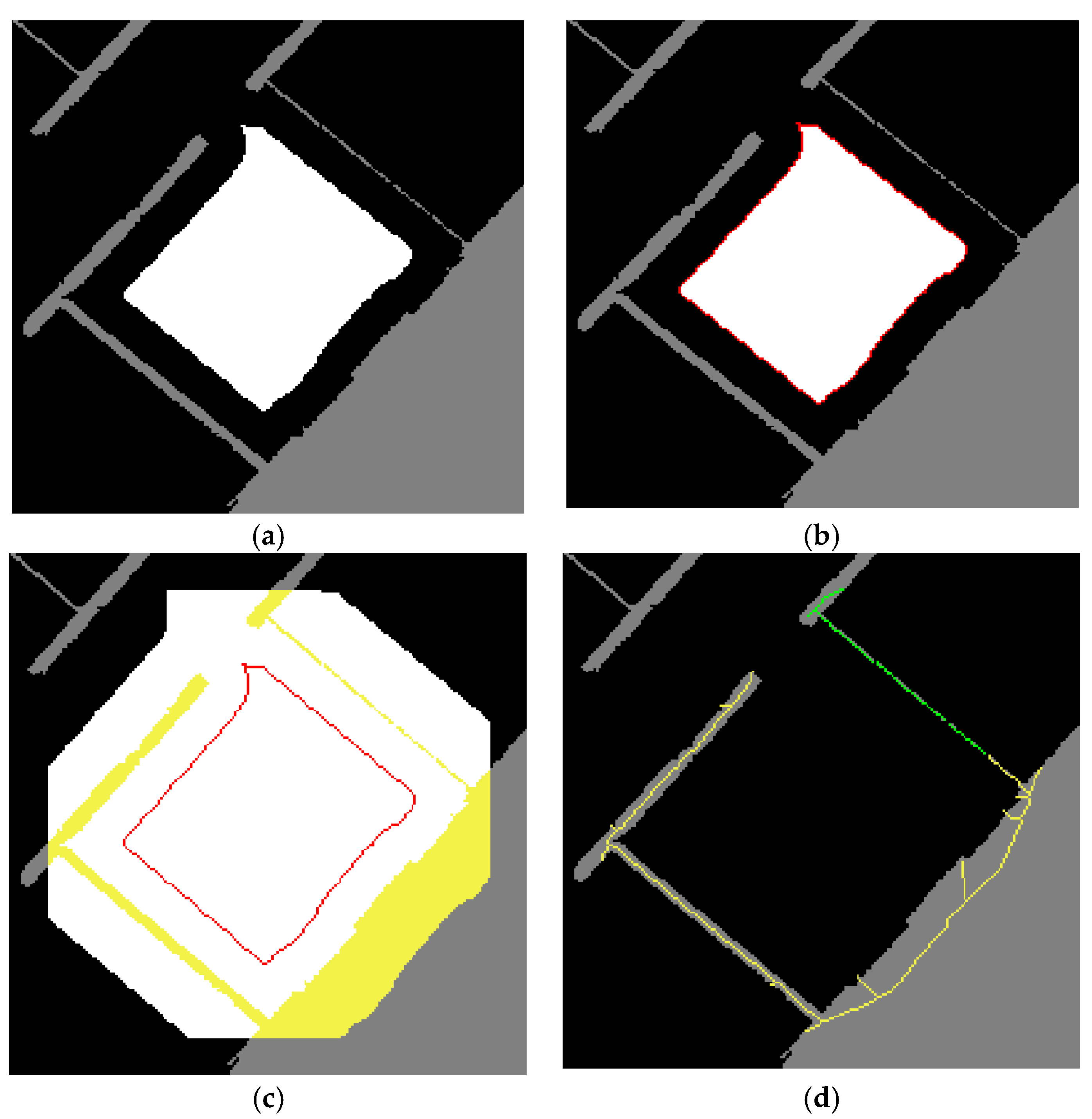

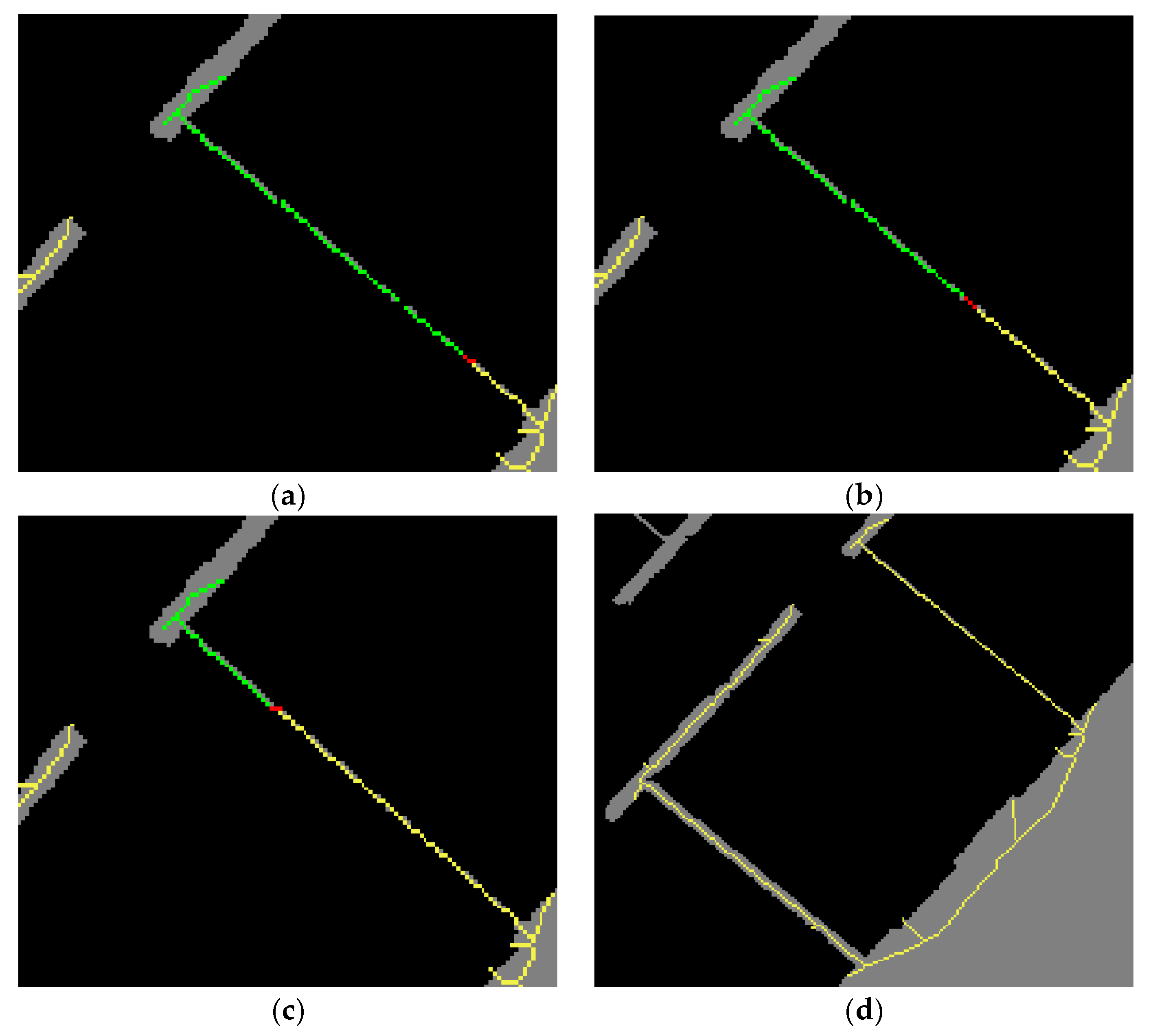

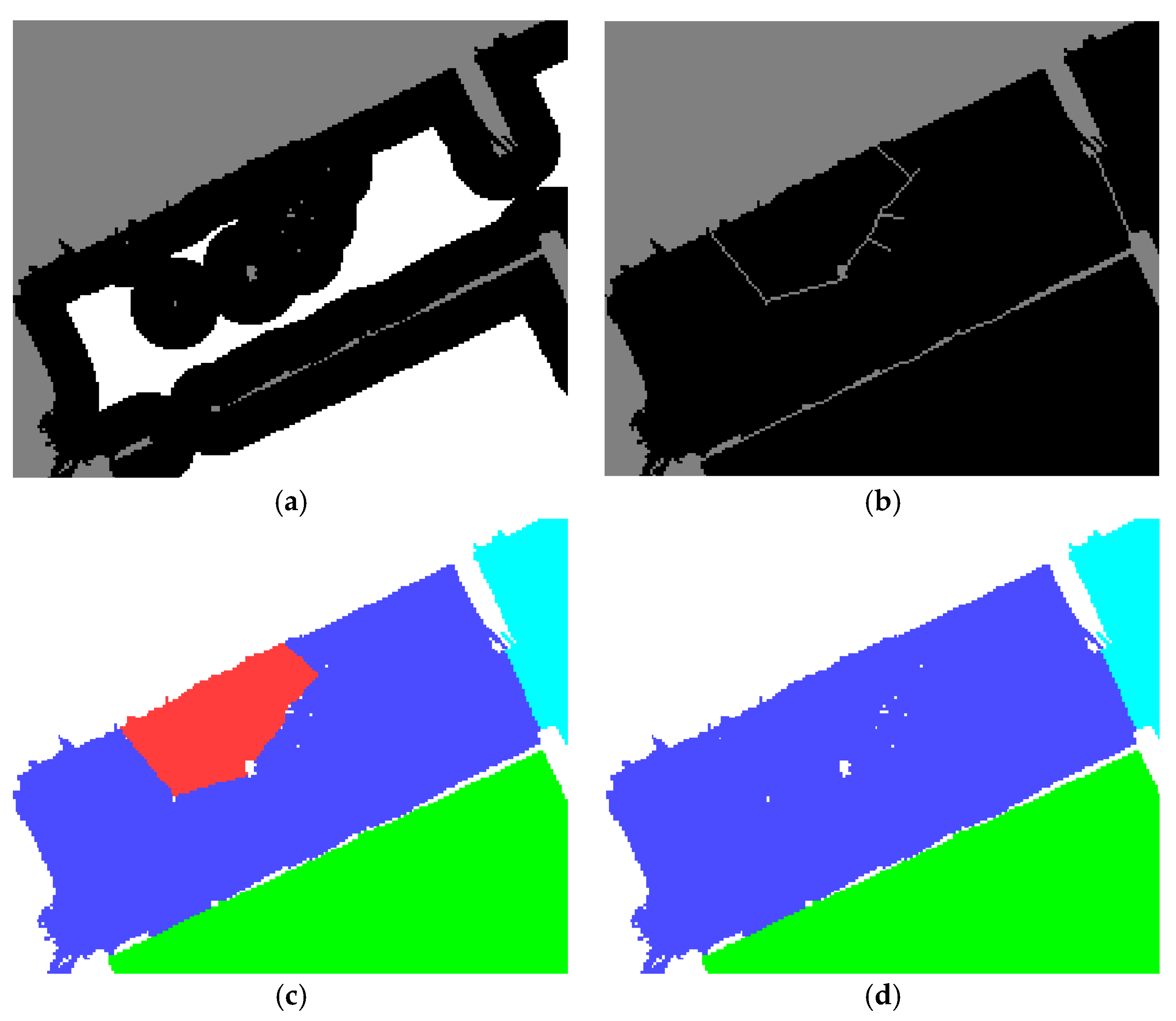

3.5. Opening Closure

3.6. Room Labeling and Refinement

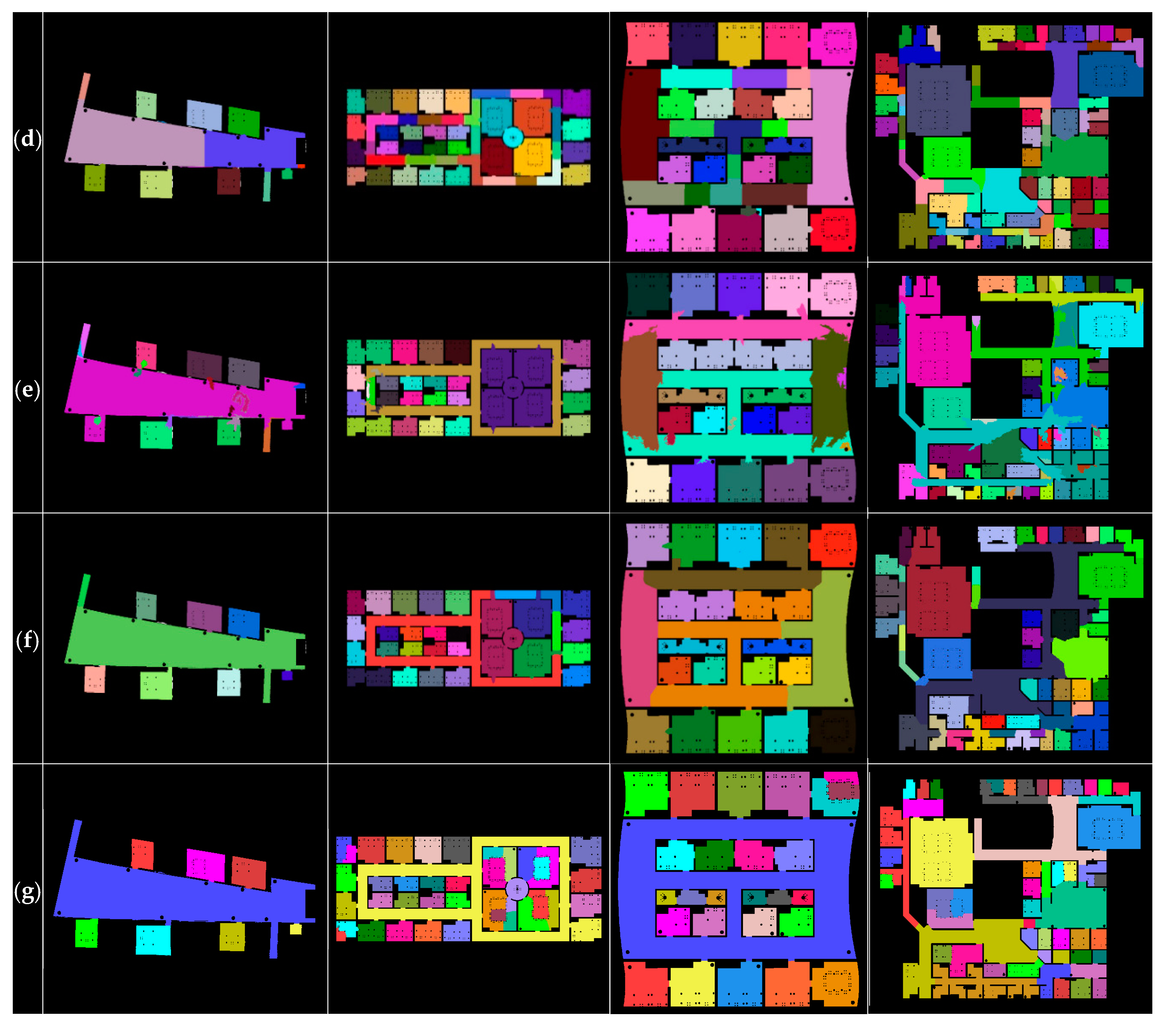

4. Experiments and Results

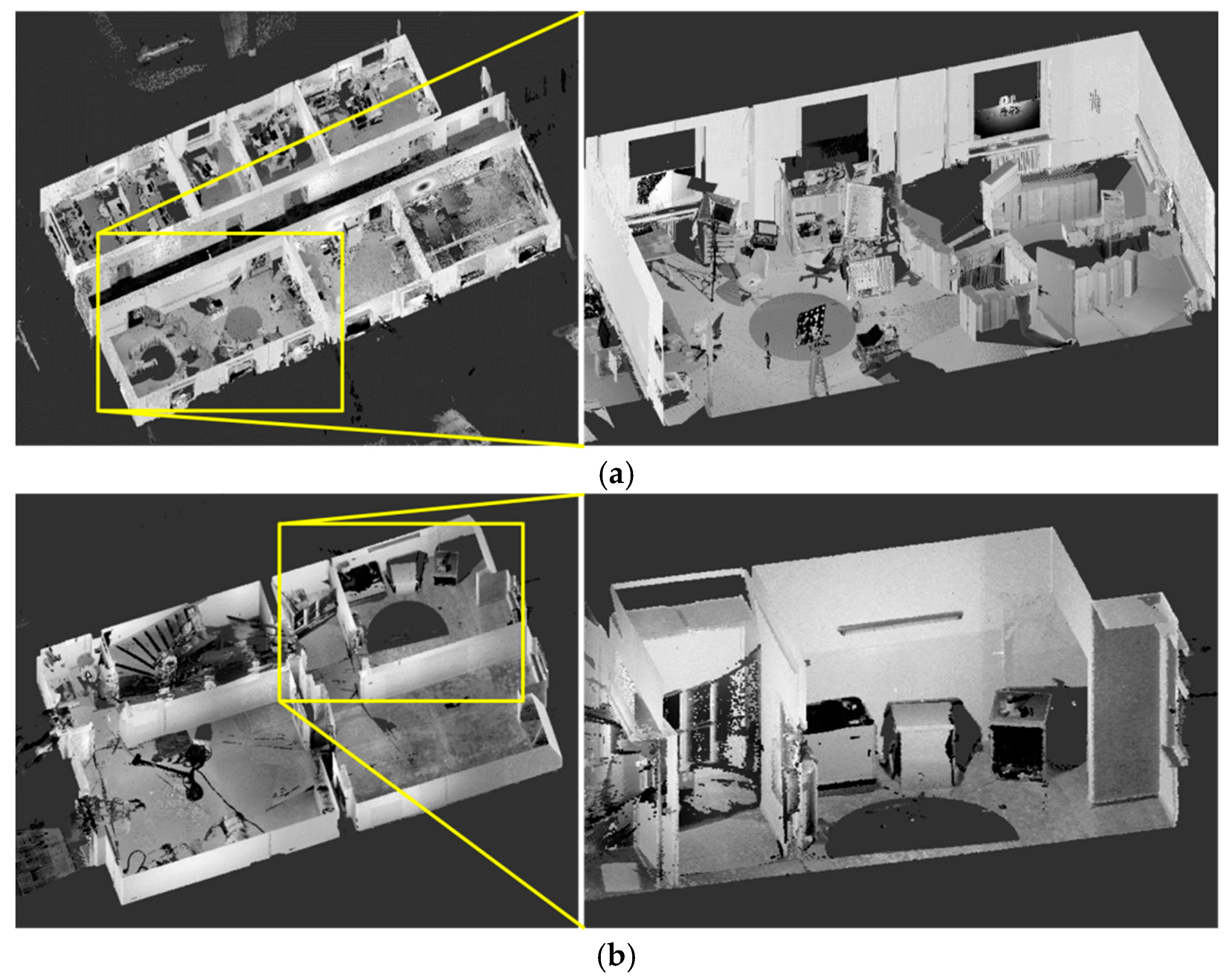

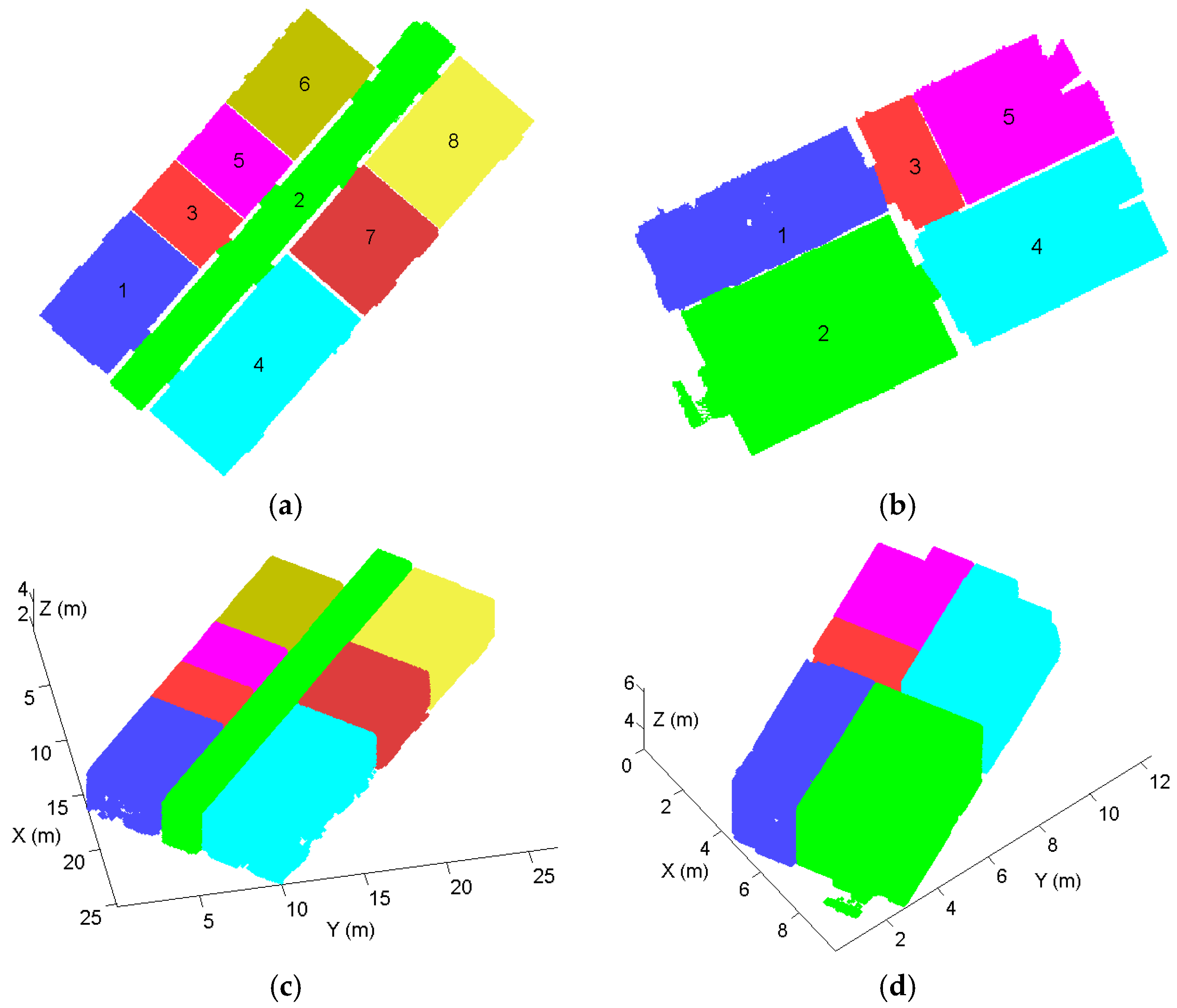

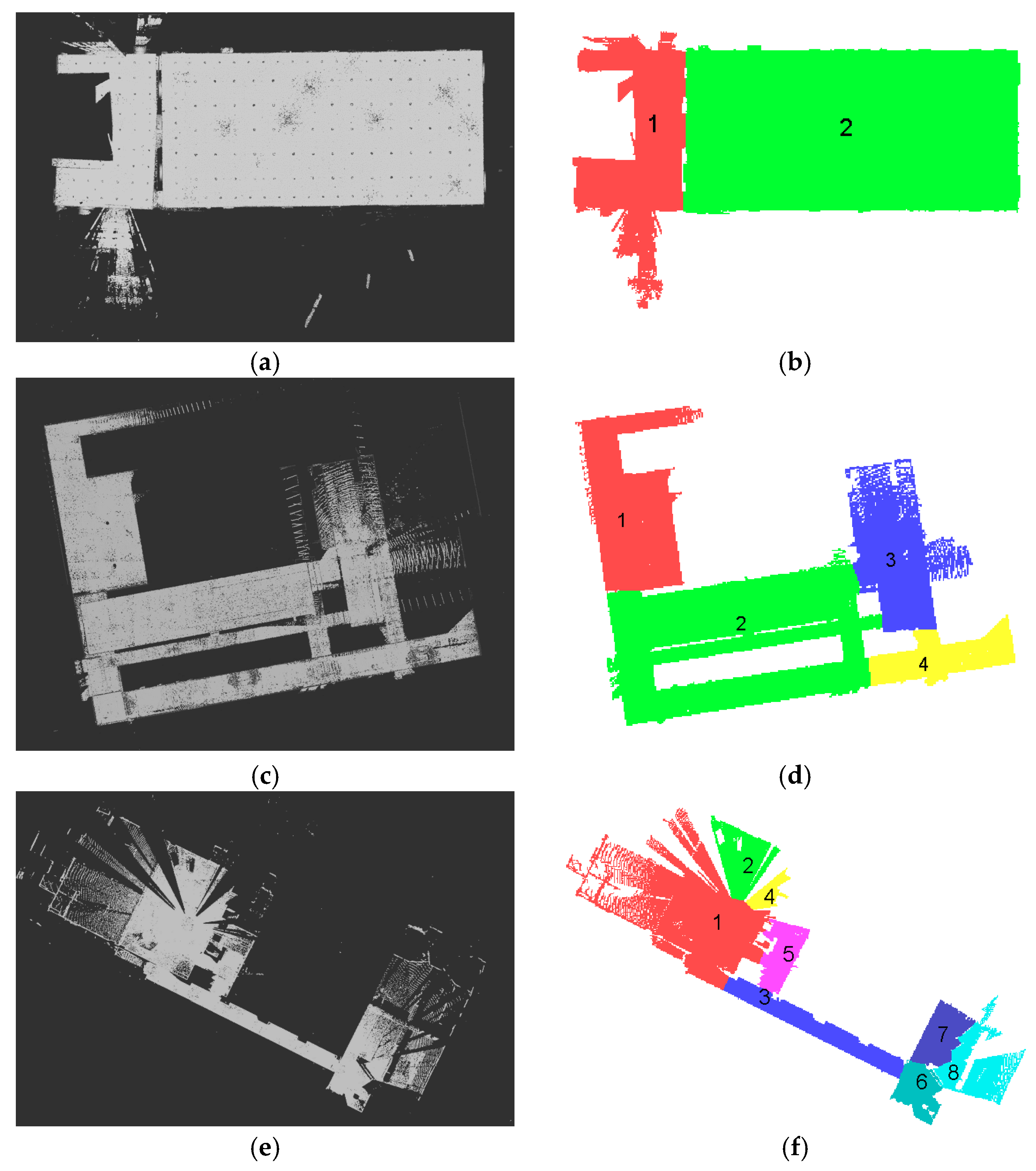

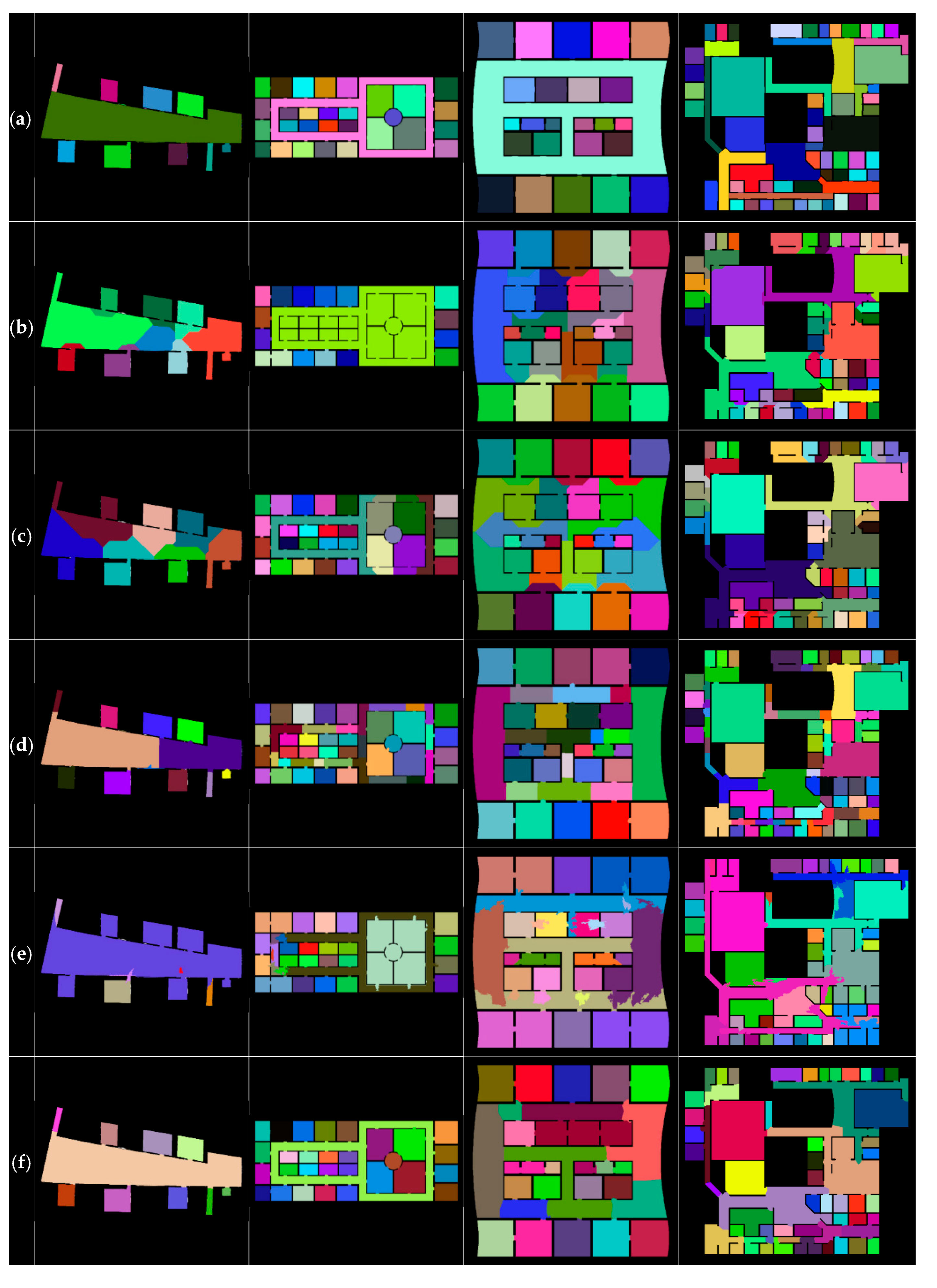

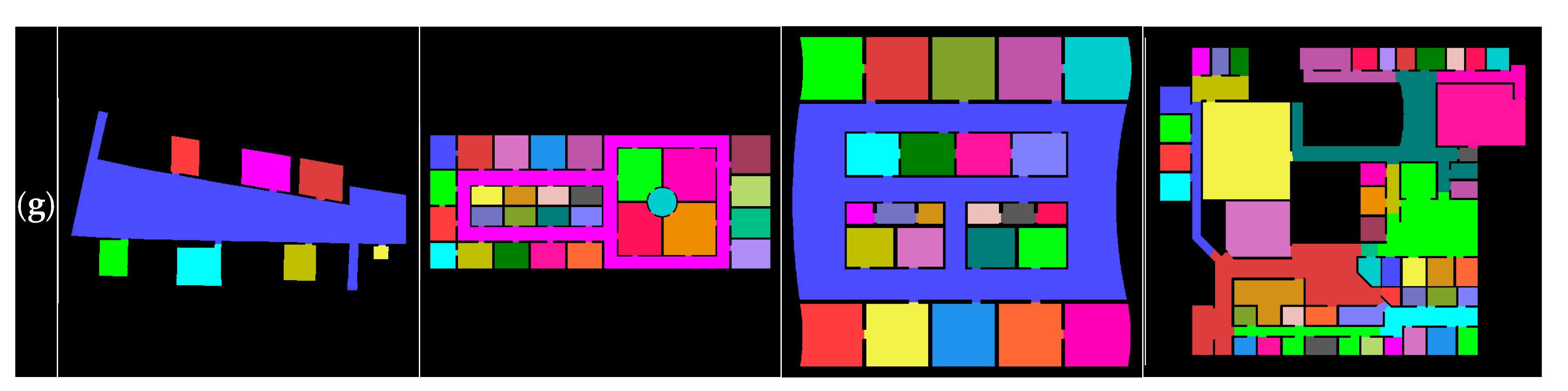

4.1. Evaluation with Real-World Data Sets

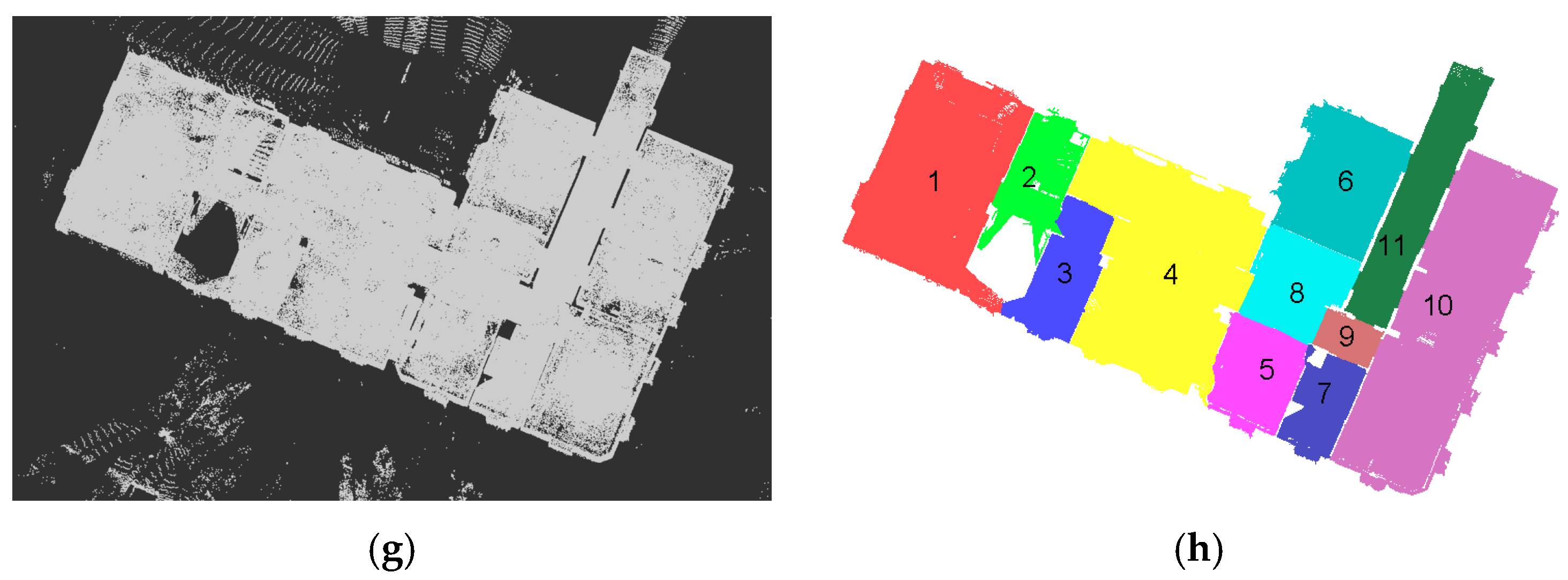

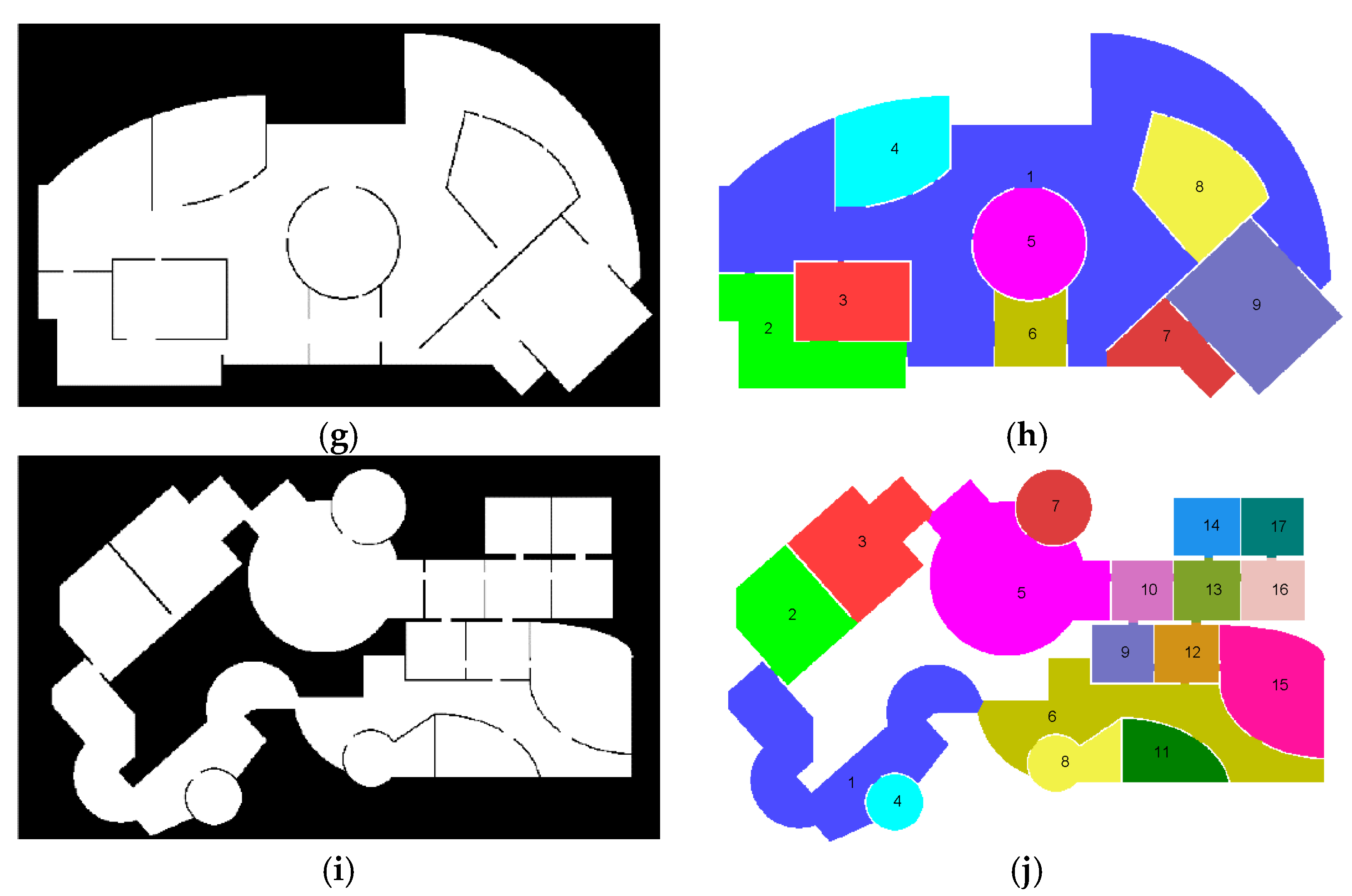

4.2. Evaluation with Synthetic Data Sets

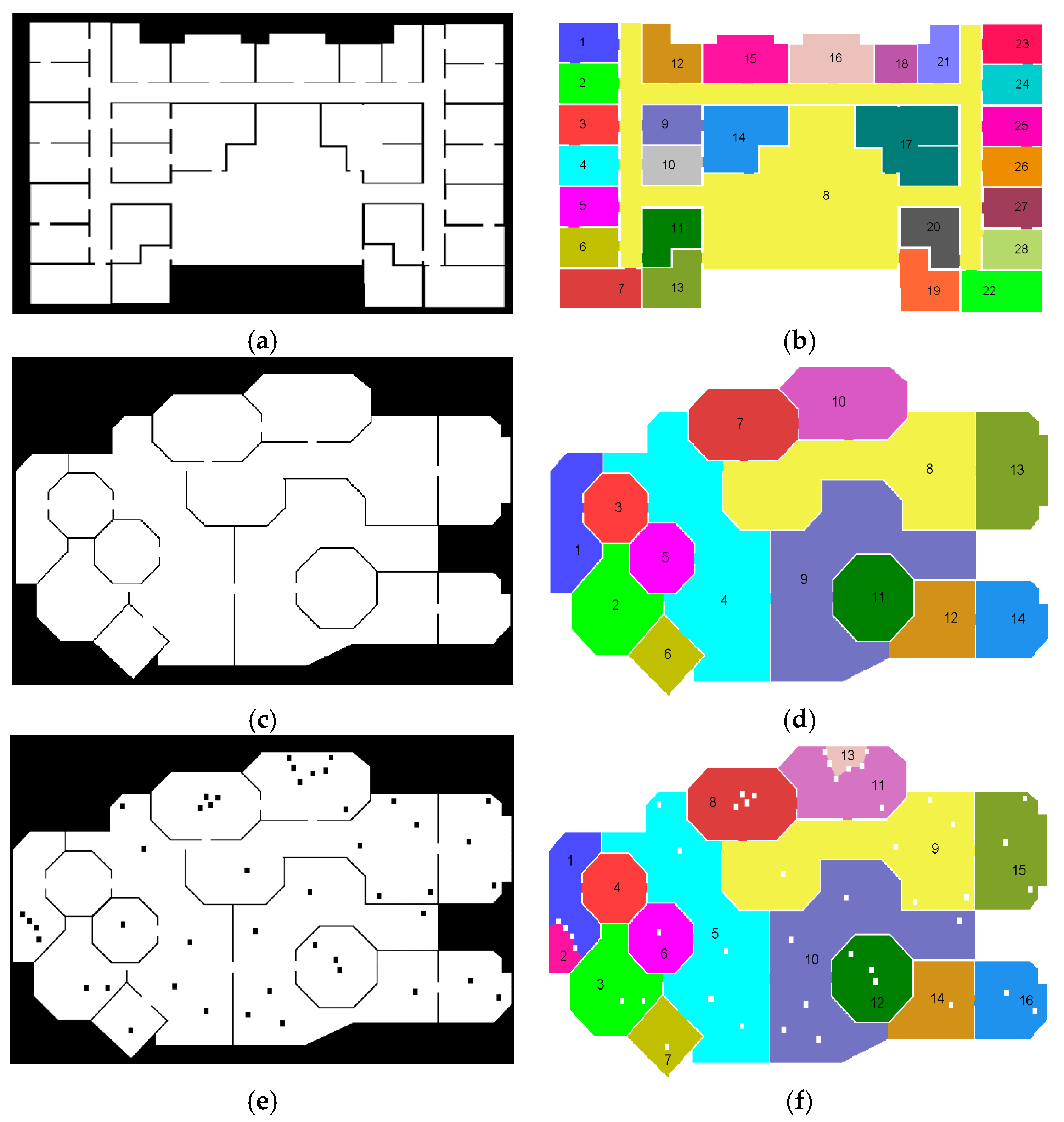

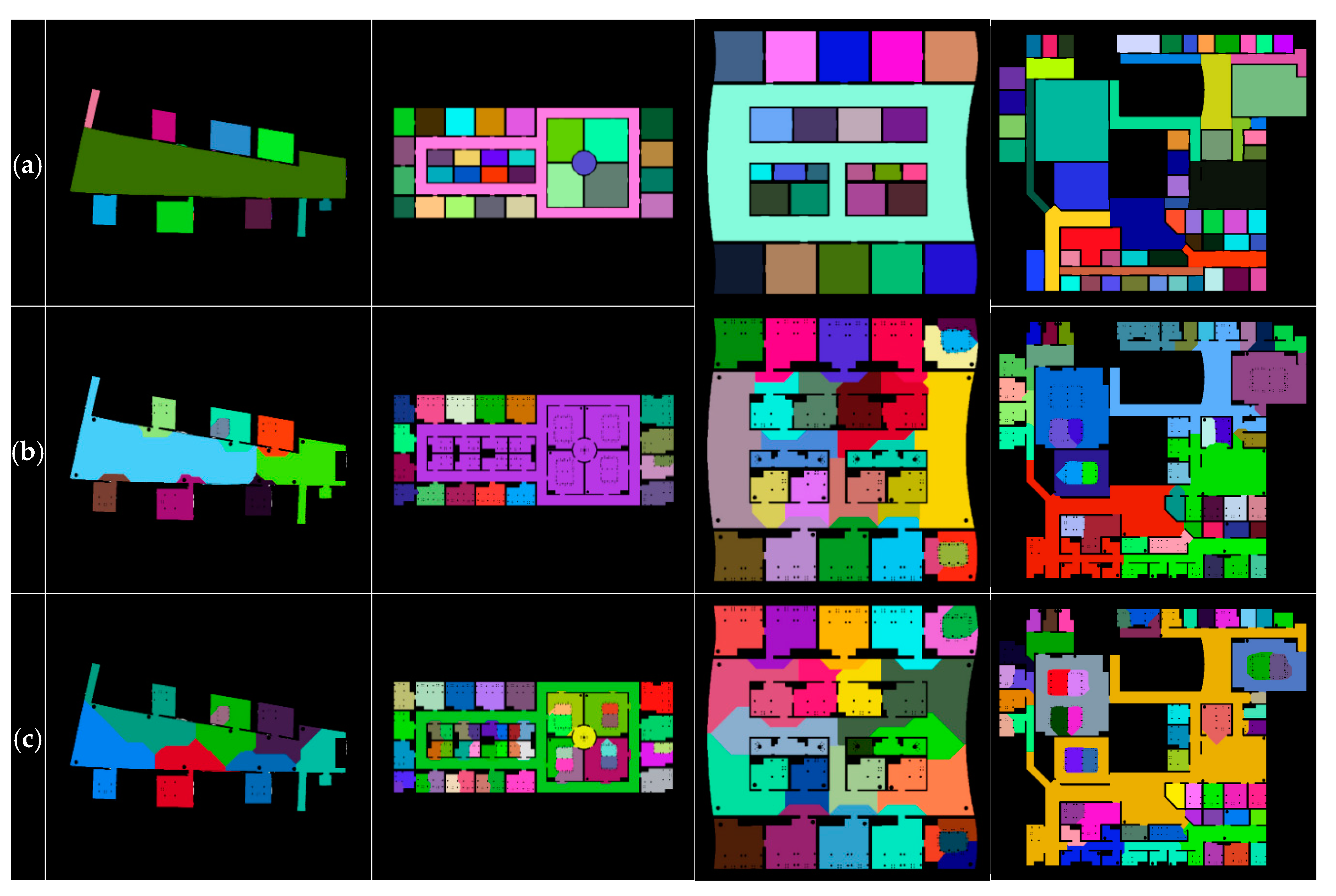

4.3. Comparison with Existing Methods Using Publicly Available Data Sets

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

1. Bosche, F.N.; O’Keeffe, S. The Need for Convergence of BIM and 3D Imaging in the Open World. In Proceedings of the CitA BIM Gathering Conference, Dublin, Ireland, 12–13 November 2015; pp. 109–116.

2. Xiong, X.; Adan, A.; Akinci, B.; Huber, D. Automatic creation of semantically rich 3D building models from laser scanner data. Autom. Constr. 2013, 31, 325–337.

3. Tang, P.; Huber, D.; Akinci, B.; Lipman, R.; Lytle, A. Automatic reconstruction of as-built building information models from laser-scanned point clouds: A review of related techniques. Autom. Constr. 2010, 19, 829–843.

4. Carbonari, G.; Stravoravdis, S.; Gausden, C. Building information model implementation for existing buildings for facilities management: A framework and two case studies. In Building Information Modelling (BIM) in Design, Construction and Operations; WIT Press: Southampton, UK, 2015; Volume 149, pp. 395–406.

5. Wang, C.; Cho, Y.K. Application of As-built Data in Building Retrofit Decision Making Process. Procedia Eng. 2015, 118, 902–908.

6. Randall, T. Construction Engineering Requirements for Integrating Laser Scanning Technology and Building Information Modeling. J. Constr. Eng. Manag. 2011, 137, 797–805.

7. Jung, J.; Hong, S.; Yoon, S.; Kim, J.; Heo, J. Automated 3D Wireframe Modeling of Indoor Structures from Point Clouds Using Constrained Least-Squares Adjustment for As-Built BIM. J. Comput. Civ. Eng. 2016, 30, 04015074.

8. Valero, E.; Adan, A.; Cerrada, C. Automatic Method for Building Indoor Boundary Models from Dense Point Clouds Collected by Laser Scanners. Sensors 2012, 12, 16099–16115.

9. Hong, S.; Jung, J.; Kim, S.; Cho, H.; Lee, J.; Heo, J. Semi-automated approach to indoor mapping for 3D as-built building information modeling. Comput. Environ. Urban Syst. 2015, 51, 34–46.

10. Previtali, M.; Barazzetti, L.; Brumana, R.; Scaioni, M. Towards automatic indoor reconstruction of cluttered building rooms from point clouds. In Proceedings of the ISPRS Technical Commission V Symposium, Riva del Garda, Italy, 23–25 June 2014; Volume 2, pp. 281–288.

11. Thomson, C.; Boehm, J. Automatic Geometry Generation from Point Clouds for BIM. Remote Sens. 2015, 7, 11753–11775.

12. Jung, J.; Hong, S.; Jeong, S.; Kim, S.; Cho, H.; Hong, S.; Heo, J. Productive modeling for development of as-built BIM of existing indoor structures. Autom. Constr. 2014, 42, 68–77.

13. Budroni, A.; Böhm, J. Automatic 3D modelling of indoor Manhattan-world scenes from laser data. In Proceedings of the ISPRS Commission V Mid-Term Symposium ‘Close Range Image Measurement Techniques’, Newcastle upon Tyne, UK, 21–24 June 2010; Volume 38, pp. 115–120.

14. Adán, A.; Huber, D. Reconstruction of Wall Surfaces under Occlusion and Clutter in 3D Indoor Environments; Robotics Institute, Carnegie Mellon University: Pittsburgh, PA, USA, 2010.

15. Mura, C.; Mattausch, O.; Villanueva, A.J.; Gobbetti, E.; Pajarola, R. Automatic room detection and reconstruction in cluttered indoor environments with complex room layouts. Comput. Graph. 2014, 44, 20–32.

16. Ochmann, S.; Vock, R.; Wessel, R.; Klein, R. Automatic reconstruction of parametric building models from indoor point clouds. Comput. Graph. 2016, 54, 94–103.

17. Turner, E.L. 3D Modeling of Interior Building Environments and Objects from Noisy Sensor Suites. Ph.D. Thesis, The University of California, Berkeley, CA, USA, 14 May 2015.

18. Macher, H.; Landes, T.; Grussenmeyer, P. Point clouds segmentation as base for as-built BIM creation. In Proceedings of the 25th International CIPA Symposium, Taipei, Taiwan, 31 August–5 September 2015; Volume 2, pp. 191–197.

19. Bormann, R.; Jordan, F.; Li, W.; Hampp, J.; Hagele, M. Room Segmentation: Survey, Implementation, and Analysis. In Proceedings of the IEEE International Conference on Robotics and Automation, Stockholm, Sweden, 16–21 May 2016; pp. 1019–1026.

20. Wurm, K.M.; Stachniss, C.; Burgard, W. Coordinated multi-robot exploration using a segmentation of the environment. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Nice, France, 22–26 September 2008; pp. 1160–1165.

21. Mozos, Ó.M.; Stachniss, C.; Rottmann, A.; Burgard, W. Using adaboost for place labeling and topological map building. In Robotics Research; Springer: Berlin, Germany, 2007; Volume 28, pp. 453–472.

22. Fabrizi, E.; Saffiotti, A. Augmenting topology-based maps with geometric information. Robot. Auton. Syst. 2002, 40, 91–97.

23. Diosi, A.; Taylor, G.; Kleeman, L. Interactive SLAM using laser and advanced sonar. In Proceedings of the IEEE International Conference on Robotics and Automation, Barcelona, Spain, 18–22 April 2005; pp. 1103–1108.

24. Jebari, I.; Bazeille, S.; Battesti, E.; Tekaya, H.; Klein, M.; Tapus, A.; Filliat, D.; Meyer, C.; Ieng, S.-H.; Benosman, R.; et al. Multi-sensor semantic mapping and exploration of indoor environments. In Proceedings of the 3rd IEEE International Conference on Technologies for Practical Robot Applications, Woburn, MA, USA, 11–12 April 2011; pp. 151–156.

25. Brunskill, E.; Kollar, T.; Roy, N. Topological mapping using spectral clustering and classification. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 3491–3496.

26. Shi, L.; Kodagoda, S.; Ranasinghe, R. Fast indoor scene classification using 3D point clouds. In Proceedings of the Australasian Conference on Robotics and Automation, Melbourne, Australia, 7–9 December 2011; pp. 1–7.

27. Siemiatkowska, B.; Harasymowicz-Boggio, B.; Chechlinski, Ł. Semantic Place Labeling Method. J. Autom. Mob. Robot. Intell. Syst. 2015, 9, 28–33.

28. Burgard, W.; Fox, D.; Hennig, D.; Schmidt, T. Estimating the absolute position of a mobile robot using position probability grids. In Proceedings of the 13th National Conference on Artificial Intelligence, Portland, OR, USA, 4–8 August 1996; pp. 896–901.

29. Lu, Z.; Hu, Z.; Uchimura, K. SLAM estimation in dynamic outdoor environments: A review. In Proceedings of the 2nd International Conference on Intelligent Robotics and Applications, Singapore, 16–18 December 2009; pp. 255–267.

30. Ochmann, S.; Vock, R.; Wessel, R.; Tamke, M.; Klein, R. Automatic generation of structural building descriptions from 3D point cloud scans. In Proceedings of the 9th IEEE International Conference on Computer Graphics Theory and Applications, Lisbon, Portugal, 5–8 January 2014; pp. 1–8.

31. Ikehata, S.; Yang, H.; Furukawa, Y. Structured indoor modeling. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 1323–1331.

32. Meinel, G.; Neubert, M. A comparison of segmentation programs for high resolution remote sensing data. Int. Arch. Photogramm. Remote Sens. 2004, 35 Pt B, 1097–1105.

33. Mozos, O.M.; Rottmann, A.; Triebel, R.; Jensfelt, P.; Burgard, W. Semantic labeling of places using information extracted from laser and vision sensor data. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006.

34. Friedman, S.; Pasula, H.; Fox, D. Voronoi Random Fields: Extracting Topological Structure of Indoor Environments via Place Labeling. In Proceedings of the 20th International Joint Conference on Artificial Intelligence, Hyderabad, India, 9–12 January 2007; Volume 7, pp. 2109–2114.

35. Li, Y.; Hu, Q.; Wu, M.; Liu, J.; Wu, X. Extraction and Simplification of Building Façade Pieces from Mobile Laser Scanner Point Clouds for 3D Street View Services. ISPRS Int. J. Geo-Inf. 2016, 5, 231.

36. Motameni, H.; Norouzi, M.; Jahandar, M.; Hatami, A. Labeling method in Steganography. Int. Sch. Sci. Res. Innov. 2007, 1, 1600–1605.

37. Han, S.; Cho, H.; Kim, S.; Jung, J.; Heo, J. Automated and efficient method for extraction of tunnel cross sections using terrestrial laser scanned data. J. Comput. Civ. Eng. 2012, 27, 274–281.

38. Bresenham, J.E. Algorithm for computer control of a digital plotter. IBM Syst. J. 1965, 4, 25–30.

39. Käshammer, P.; Nüchter, A. Mirror identification and correction of 3D point clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 40, 109.

40. Kim, C.; Habib, A.; Pyeon, M.; Kwon, G.-R.; Jung, J.; Heo, J. Segmentation of Planar Surfaces from Laser Scanning Data Using the Magnitude of Normal Position Vector for Adaptive Neighborhoods. Sensors 2016, 16, 140.

41. DURAARK Datasets. Available online: http://duraark.eu/data-repository/ (accessed on 22 June 2017).

42. IPA Room Segmentation. Available online: http://wiki.ros.org/ipa_room_segmentation (accessed on 12 February 2017).

43. Rodríguez-Cuenca, B.; García-Cortés, S.; Ordóñez, C.; Alonso, M.C. Morphological operations to extract urban curbs in 3D MLS point clouds. ISPRS Int. J. Geo-Inf. 2016, 5, 93.

| Methods | Domain | Input Data | Main Assumptions or Limitations | Supplementary Data | References |
|---|---|---|---|---|---|
| Morphological | Robotics | Grid map | Narrow passages/2D data only | - | Fabrizi et al. [22] |
| Distance transform | Robotics | Grid map | Narrow passages/2D data only | Virtual markers | Diosi et al. [23] |
| Voronoi graph | Robotics | Grid map | Narrow passages/2D data only | - | Wurm et al. [20] |
| Feature based | Robotics | Grid map | Resemblance between data/2D data only | Labeled map | Mozos et al. [33] |
| Voronoi random | Robotics | Grid map | Narrow passages/2D data only | Labeled map | Friedman et al. [34] |
| Probabilistic model | AEC | Point cloud | Planar walls | Initial labeling | Ochmann et al. [30] |
| Iterative binary subdivision | AEC | Point cloud | Planar walls | Scanner locations | Mura et al. [15] |
| Graph clustering | AEC | Point cloud | Vertical walls/Mobile data only | Scanner path | Turner [17] |
| Height constraint | AEC | Point cloud | Vertical walls/No link at certain level | - | Macher et al. [18] |
| K-medoids | AEC | RGBD images | Narrow passages/Manhattan world/Planar walls | - | Ikehata et al. [31] |
| Proposed Approach | Robotics/AEC | Grid map/Point cloud | Narrow passages/Vertical walls | - | - |

| Process Phase | Parameter(s) | Data 1 | Data 2 | Remark |
|---|---|---|---|---|
| Height estimation | Interval | 0.10 | 0.10 | User-specified |
| Rasterization | Pixel size | 0.05 | 0.03 | User-specified |
| Noise filtering | Noise-filtering offset | 0.50 | 0.40 | User-specified |
| Initial segmentation | Detection window size for finding initial segments | 1.55 | 0.99 | User-specified |
| Opening closure | Detection window size for finding surrounding walls | 3.10 | 1.98 | Automatic |
| Opening closure | Detection window size for pruning small branches | 1.55 | 0.99 | Automatic |
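
The parameter table above can be read as a small piece of arithmetic. The sketch below converts the initial detection window into a pixel count and reproduces the two "Automatic" window sizes from the user-specified one. It rests on two assumptions that the table itself does not state: that pixel and window sizes share one unit (presumably meters, as in the other tables), and that the wall-detection window is twice the initial window while the pruning window equals it. That rule is only inferred from the tabulated values, and the function names are hypothetical.

```python
# Values copied from the parameter table; units are assumed to be meters.
PARAMS = {
    "Data 1": {"pixel_size": 0.05, "initial_window": 1.55},
    "Data 2": {"pixel_size": 0.03, "initial_window": 0.99},
}


def window_in_pixels(window_size: float, pixel_size: float) -> int:
    """Number of raster cells spanned by a window of the given metric size."""
    return round(window_size / pixel_size)


def derived_windows(initial_window: float) -> dict:
    """Hypothetical rule inferred from the table, not stated by the authors:
    wall-detection window = 2 x initial window, pruning window = initial window."""
    return {
        "surrounding_walls": 2 * initial_window,
        "pruning": initial_window,
    }


if __name__ == "__main__":
    for name, p in PARAMS.items():
        n_pixels = window_in_pixels(p["initial_window"], p["pixel_size"])
        derived = {k: round(v, 2) for k, v in derived_windows(p["initial_window"]).items()}
        print(name, n_pixels, "pixels", derived)
```

Under these assumptions the derived values match the "Automatic" rows of the table for both data sets, which suggests that only the initial window needs to be chosen by the user.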

| Data No. | Data Size (million points) | Pixel Size (meters) | Detection Window Size (meters) | Processing Time (sec) |
|---|---|---|---|---|
| 1 | 37.69 | 0.15 | 4.65 | 25.93 |
| 2 | 40.71 | 0.20 | 4.60 | 26.74 |
| 3 | 33.93 | 0.10 | 1.90 | 23.57 |
| 4 | 25.17 | 0.05 | 1.85 | 52.67 |

| Data No. | Architectural Shape | No. of Rooms (segmented/true) | Map Size (pixels) | Detection Window Size (meters) | Processing Time (sec) |
|---|---|---|---|---|---|
| 1 | Linear | 28/28 | 421 by 704 | 1.05 | 46.62 |
| 2 | Linear | 14/14 | 359 by 606 | 1.05 | 7.98 |
| 3 | Linear | 16/14 | 359 by 606 | 1.05 | 10.75 |
| 4 | Nonlinear | 9/9 | 359 by 606 | 1.55 | 27.69 |
| 5 | Nonlinear | 17/17 | 359 by 606 | 1.05 | 8.66 |

| Methods | Non-Furnished: Correctness (%) | Non-Furnished: Completeness (%) | Non-Furnished: Absolute Deviation | Furnished: Correctness (%) | Furnished: Completeness (%) | Furnished: Absolute Deviation |
|---|---|---|---|---|---|---|
| Morphological | 81.9 ± 13.0 | 81.7 ± 13.5 | 5.2 | 78.3 ± 16.3 | 56.8 ± 15.0 | 6.1 |
| Distance transform | 83.2 ± 14.5 | 82.1 ± 15.1 | 3.5 | 76.5 ± 17.6 | 51.4 ± 15.7 | 11.8 |
| Voronoi graph | 95.0 ± 6.1 | 80.7 ± 7.6 | 10.1 | 94.0 ± 6.7 | 68.6 ± 8.3 | 11.7 |
| Feature based | 78.0 ± 8.4 | 72.9 ± 10.7 | 4.7 | 79.8 ± 16.0 | 60.0 ± 15.3 | 8.5 |
| Voronoi random | 90.0 ± 8.4 | 88.2 ± 10.0 | 2.9 | 81.0 ± 14.4 | 64.6 ± 14.6 | 5.4 |
| Average | 85.6 ± 12.0 | 81.1 ± 12.5 | 5.3 | 81.9 ± 15.7 | 60.3 ± 14.7 | 8.7 |
| Proposed | 89.6 ± 9.8 | 91.7 ± 9.0 | 2.5 | 84.8 ± 13.9 | 67.8 ± 14.5 | 8.2 |
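
As a reading aid for the comparison table above, the following sketch recomputes the "Average" row as the plain arithmetic mean of the five existing methods and then expresses the proposed approach as a difference from that average. All numbers are copied from the table; the variable and function names are hypothetical. The ± terms are left out because the table does not say how they were aggregated.

```python
from statistics import mean

# Column order: non-furnished (correctness %, completeness %, absolute deviation),
# then furnished (correctness %, completeness %, absolute deviation).
BASELINES = {
    "Morphological":      (81.9, 81.7, 5.2, 78.3, 56.8, 6.1),
    "Distance transform": (83.2, 82.1, 3.5, 76.5, 51.4, 11.8),
    "Voronoi graph":      (95.0, 80.7, 10.1, 94.0, 68.6, 11.7),
    "Feature based":      (78.0, 72.9, 4.7, 79.8, 60.0, 8.5),
    "Voronoi random":     (90.0, 88.2, 2.9, 81.0, 64.6, 5.4),
}
REPORTED_AVERAGE = (85.6, 81.1, 5.3, 81.9, 60.3, 8.7)
PROPOSED = (89.6, 91.7, 2.5, 84.8, 67.8, 8.2)


def column_means(rows):
    """Mean of each column across the given rows, rounded to one decimal."""
    return tuple(round(mean(col), 1) for col in zip(*rows))


def difference(a, b):
    """Element-wise a - b, rounded to one decimal."""
    return tuple(round(x - y, 1) for x, y in zip(a, b))


computed_average = column_means(BASELINES.values())
proposed_minus_average = difference(PROPOSED, REPORTED_AVERAGE)

if __name__ == "__main__":
    print("computed average:      ", computed_average)
    print("reported average:      ", REPORTED_AVERAGE)
    print("proposed minus average:", proposed_minus_average)
```

Positive differences in the correctness and completeness columns and negative differences in the absolute deviation columns favor the proposed approach.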

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Jung, J.; Stachniss, C.; Kim, C. Automatic Room Segmentation of 3D Laser Data Using Morphological Processing. ISPRS Int. J. Geo-Inf. 2017, 6, 206. https://doi.org/10.3390/ijgi6070206
