1. Introduction

In this paper, we consider two panel data models with unobserved heterogeneous time-varying effects: one with individual effects treated as random functions of time, and the other with common factors whose number is unknown and whose effects are firm-specific. This paper has two distinctive features and can be considered a generalization of traditional panel data models. First, the individual effects, which are assumed to be heterogeneous across units as well as time-varying, are treated non-parametrically, following the spirit of the models of Bai (2009, 2013), Li et al. (2011), Kneip et al. (2012), Ahn et al. (2013), and Bai and Carrion-i-Silvestre (2013). Second, we develop methods that allow us to interpret the effects as measures of technical efficiency in the spirit of the structural productivity approaches of Olley and Pakes (1996) and non-structural approaches from the stochastic frontier literature (Kumbhakar and Lovell 2000; Fried et al. 2008).

Levinsohn and Petrin (2003), Kim et al. (2016), and Ackerberg et al. (2015) have provided rationales for various treatments of the endogeneity of inputs, and for the appropriate instruments or control functions to deal with the potential endogeneity of inputs and of technical change, based on variants of the basic Olley-Pakes model setup. Although we do not explicitly address entry/exit in this paper, we do address dynamics, as well as the potential endogeneity of inputs and the correlation of technical efficiency with input choice (Amsler et al. 2016). The general factor structure we utilize can pick up potential nonlinear selection effects that may be introduced when using a balanced panel of firms. Our dynamic heterogeneity estimators could be interpreted as general controls for any mis-specified factors, such as selectivity due to entry/exit, that are correlated with the regressors and could ultimately bias slope coefficients.

Olley and Pakes (1996) utilize series expansions and kernel smoothers to model such selectivity. Our second estimator instead utilizes a general factor structure, which is a series expansion with a different set of basis functions than those used in the polynomial expansions employed by Olley-Pakes. Alternatively, we can interpret the effects based on a panel stochastic frontier production specification that formally models productive efficiency as a stochastic shortfall in production, given the input use.

Van den Broeck et al. (1994) formulate a Bayesian approach under a random effects composed error model, while Koop et al. (1997) and Osiewalski and Steel (1998) provided extensions to the fixed effect model utilizing Gibbs sampling and Bayesian numerical methods, but these studies assumed that the individual effects were time invariant. Comparisons between the Bayes and classical stochastic frontier estimators have been made by Kim and Schmidt (2000). The estimators we consider are specified in the same spirit as Tsionas (2006), who assumed that the effects evolve log-linearly. We do not force the time-varying effects to follow a specific parametric functional form and utilize Bayesian integration methods and a Markov chain-based sampler to provide the slope parameter and heterogeneous individual effects inferences based on estimators of the posterior means of the model parameters.

The paper is organized as follows. Section 2 describes the first model setup and parameter priors. Section 3 introduces the second model and the corresponding Bayesian inferences, followed by Section 4, which presents the Monte Carlo simulation results. The estimation of the translog distance function is briefly discussed and the empirical application results of the Bayesian estimation of the multi-output/multi-input technology employed by the U.S. banking industry in providing intermediation services are presented in Section 5. Section 6 provides the concluding remarks.

2. Model 1: A Panel Data Model with Nonparametric Time Effects

Our first model is based on a balanced design with T observations for n individual units. Observations in the panel can be represented in the form $(y_{it}, x_{it})$, where the index i denotes the ith individual unit and the index t denotes the tth time period.

A panel data model with heterogeneous time-varying effects is:

$$y_{it} = x_{it}'\beta + \gamma_i(t) + \varepsilon_{it}, \qquad i = 1,\dots,n,\; t = 1,\dots,T, \tag{1}$$

where $y_{it}$ is the response variable, $x_{it}$ is a $p \times 1$ vector of the explanatory variables, $\beta$ is a $p \times 1$ vector of the parameters, and $\gamma_i(t)$ is a nonconstant and unknown individual effect. We make the standard assumption that the measurement error $\varepsilon_{it} \sim \mathrm{NID}(0, \sigma^2)$. The time-varying heterogeneity is assumed to be independent across units. This assumption is quite reasonable in many applications, particularly in production/cost stochastic frontier models where the effects measure technical efficiency levels. A firm’s efficiency level relies primarily on its own factors, such as its executives’ managerial skills, the firm size, and the operational structure, and should thus be heterogeneous across firms. These factors usually change over time, as does the firm’s efficiency level.

For the ith unit, the model is:

$$y_i = X_i\beta + \gamma_i + \varepsilon_i, \tag{2}$$

where $y_i$, $X_i$, $\gamma_i$, and $\varepsilon_i$ contain the T stacked observations for cross-section i.

When interpreting the effects as firm efficiencies, as is done in stochastic frontier analysis (Pitt and Lee 1981; Schmidt and Sickles 1984), the estimation of time-varying technical efficiency levels is as important as that of the slope parameters.

A difference between our model and many other Bayesian approaches in the literature is that no functional form for the prior distribution of the unobserved heterogeneous individual effects is imposed. Instead of resorting to the classical nonparametric regression techniques (Kneip et al. 2012), a Markov chain Monte Carlo (MCMC) algorithm is implemented to estimate the model. We can consider this to be a generalization of Koop and Poirier (2004) to the case of panel data, including both individual-specific and time-varying effects. Moreover, our model does not rely on the restrictive conjugate prior formulation for the time-varying individual effects.

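A Bayesian analysis of the panel data model set up above requires specification of the prior distributions over the parameters (γ, β, σ) and computation of the posterior using a Bayesian learning process:

$$p(\gamma, \beta, \sigma \mid \text{data}) \propto p(\gamma, \beta, \sigma)\, L(\text{data} \mid \gamma, \beta, \sigma). \tag{3}$$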

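The prior for the individual effect is not assumed to follow a normal distribution; instead, it is only assumed that the first-order or second-order difference of $\gamma_i$ follows a normal prior:

$$p(\gamma_i \mid \omega) \propto \exp\left(-\frac{1}{2\omega^2}\,\gamma_i' Q \gamma_i\right), \qquad i = 1,\dots,n, \tag{4}$$

where $Q = D'D$, and $D$ is the $(T-1) \times T$ matrix whose elements are $D_{tt} = -1$ for $t = 1,\dots,T-1$; $D_{t-1,t} = 1$ for all $t = 2,\dots,T$; and zero otherwise. The information implied by this prior is that $\gamma_{i,t} - \gamma_{i,t-1} \sim \mathrm{NID}(0, \omega^2)$, or $D\gamma_i \sim N(0, \omega^2 I_{T-1})$. ω is the smoothness parameter that indexes the degree of smoothness. ω can be considered as a hyper-parameter, or it can be assumed to have its own prior, which is explained in the next section. Provided the continuity and first-order differentiability of $\gamma_i(t)$, this assumption says that the first derivative of the time-varying function $\gamma_i(t)$ in (4) is a smooth function of time. One can assume second-order differentiability instead, which is implied by the condition that $\gamma_{i,t} - 2\gamma_{i,t-1} + \gamma_{i,t-2} \sim \mathrm{NID}(0, \omega^2)$.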
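The difference prior can be made concrete with a short numerical sketch. The Python code below builds a first-order (or higher-order) difference matrix and the implied penalty matrix Q = D′D, and checks that the quadratic form γ′Qγ equals the sum of squared differences. The function name `difference_matrix` and the example vector are hypothetical, and the sign convention of D (forward differences) is an assumption; Q does not depend on that choice.

```python
import numpy as np

def difference_matrix(T, order=1):
    """Return the (T - order) x T matrix D with D @ g equal to the
    order-th differences of a length-T vector g (assumed convention)."""
    D = np.eye(T)
    for _ in range(order):
        D = np.diff(D, axis=0)  # difference consecutive rows once per pass
    return D

T = 6
D = difference_matrix(T)   # first-order differences, shape (T - 1, T)
Q = D.T @ D                # penalty matrix in the prior kernel

# Hypothetical path of one unit's effect over T periods.
gamma = np.array([1.0, 1.5, 2.5, 2.0, 2.0, 3.0])

# The prior kernel penalizes the sum of squared first differences.
penalty = gamma @ Q @ gamma
```

Because a constant vector lies in the null space of Q, the prior on its own is flat in the level of the effect; it restricts only how quickly the effect moves from one period to the next.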

A non-informative distribution is assumed for the joint prior of the slope parameter β and the unknown variance term σ2:

$$p(\beta, \sigma) \propto \sigma^{-1}. \tag{5}$$

This is equivalent to assuming that the prior distribution is uniform on $(\beta, \log \sigma)$.

With the assumptions of the priors above, the joint prior of the model parameters is:

The corresponding sample likelihood function is:

The likelihood is formed by the product of the nT independent disturbance terms, which follow the normal distribution for the idiosyncratic error, assumed to be NID (0, σ2). Applying Bayes’ theorem, the prior density is updated using the information from the data to form the joint posterior distribution given by:

The model in (1) and (2) is identified provided we have a proper prior for the

. To accomplish this, we use (4) with a proper prior for

:

(see (17) below), where

is the sum of squares with

observations. The “non-informative” case is to let

,

. We use

and

following standard practice (Geweke 1993). The posterior is well-defined and integrable. Such issues have been dealt with by Koop and Poirier (2004), whose spline method is equivalent to the difference prior we adopt.

To proceed with further inference, we would need to evaluate the joint posterior analytically. However, the joint posterior distribution does not have a standard form and taking draws directly from it is problematic. Therefore, we utilize Gibbs sampling to perform Bayesian inference. The Gibbs sampler is commonly used in such situations because of the desirable result that iterative sampling from the conditional distributions will lead to a sequence of random variables converging to the joint distribution. A general discussion on the use of Gibbs sampling is provided by Gelfand and Smith (1990), who compare the Gibbs sampler with alternative sampling-based algorithms. A more detailed discussion is given in Gelman et al. (2003). Gibbs sampling is well-adapted to sampling the posterior distributions for our model since it is possible to derive the full collection of conditional posterior distributions.

The Gibbs sampling algorithm we employ generates a sequence of random samples from the conditional posterior distributions of each block of parameters, in turn conditional on the current values of the other blocks of parameters, and it thus generates a sequence of samples that constitute a Markov Chain, where the stationary distribution of that Markov chain is the desired joint distribution of all the parameters.

In order to derive the conditional posterior distributions of β, γ, and σ, we first rewrite the joint posterior in (8) as:

where

.

The joint posterior can be rewritten as:

From (10), the conditional distribution of β can be shown to follow the multivariate normal distribution with the mean and covariance matrix.

The conditional distribution of

, therefore, is given by:

In order to derive the conditional distribution of the individual effect

, we rewrite the joint posterior distribution as:

Under the assumption that the effects, the are independent across units, the conditional posterior distribution of is the same as that of , and is distributed as a multivariate normal.

where the mean

and covariance matrix

V are

and

for

. The detailed derivation is presented in

Appendix A.

The conditional posterior distribution for

is given below in (15). It is clear that the sum of the squared residuals

has a conditional chi-squared distribution with

nT degrees of freedom, as shown in (16):

If the smoothing parameter

ω is also assumed to follow its own prior instead of being treated as a constant, then its conditional posterior distribution can also be derived. Suppose that

, where

are hyper-parameters that control the prior degree of smoothness that is imposed on the

. Then, the conditional posterior distribution of

is derived as:

Generally, small values of the prior “sum of squares” hyper-parameter correspond to smaller values of ω and thus a higher degree of smoothness. Alternatively, we can choose the smoothing parameter ω using cross validation, which in a Bayesian context is similar to cross validation for tuning parameters in classical nonparametric regression. We choose the smoothing parameter ω so that the marginal likelihood (obtained as in Perrakis et al. 2014) is maximized.

A Gibbs sampler is then used to draw observations from the conditional posteriors in (11) through (17). Draws from these conditional posteriors will eventually converge to the joint posterior in (8). Since the conditional posterior distribution of β follows the multivariate normal distribution displayed in (12), it is straightforward to sample from it. For the individual effects γi, sampling is also straightforward since the conditional posterior follows a multivariate normal distribution whose mean vector and covariance matrix V are given in (14).

Finally, drawing from the conditional posterior of the measurement error variance σ2 takes two simple steps. First, we draw a value from the chi-squared distribution with nT degrees of freedom that, as shown in (16), governs the sum of squared residuals divided by σ2. Next, we set σ2 equal to the current sum of squared residuals divided by that draw.
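As a rough illustration of the sampling steps just described, the following Python sketch cycles through the three conditional draws for Model 1 with ω held fixed. The closed forms used here (a normal draw for β centered at the least squares fit of the data net of the effects, a normal draw for each γi with precision I/σ2 + Q/ω2, and a scaled chi-squared draw for σ2) follow from the stated priors and likelihood, but they are assumptions about how (12), (14), and (16) specialize rather than a transcription of those equations. The function name `gibbs_model1`, the simulated data, and all tuning values are hypothetical.

```python
import numpy as np

def gibbs_model1(y, X, omega, n_iter=1500, burn=500, seed=0):
    """Sketch of a Gibbs sampler for y_i = X_i beta + gamma_i + eps_i.
    y: (n, T) array; X: (n, T, p) array; omega: fixed smoothness parameter.
    Returns posterior means of beta, gamma (n, T), and sigma^2."""
    rng = np.random.default_rng(seed)
    n, T, p = X.shape
    D = np.diff(np.eye(T), axis=0)           # first-difference matrix
    Q = D.T @ D
    Xf = X.reshape(n * T, p)
    XtX_inv = np.linalg.inv(Xf.T @ Xf)
    L_beta = np.linalg.cholesky(XtX_inv)
    beta, gamma, sigma2 = np.zeros(p), np.zeros((n, T)), 1.0
    sum_b, sum_g, sum_s, kept = np.zeros(p), np.zeros((n, T)), 0.0, 0
    for it in range(n_iter):
        # beta | gamma, sigma: N(b_hat, sigma^2 (X'X)^{-1})
        b_hat = XtX_inv @ (Xf.T @ (y - gamma).reshape(-1))
        beta = b_hat + np.sqrt(sigma2) * (L_beta @ rng.standard_normal(p))
        # gamma_i | beta, sigma: N(V r_i / sigma^2, V), independently over i
        r = y - X @ beta                      # (n, T) data net of X beta
        V = np.linalg.inv(np.eye(T) / sigma2 + Q / omega**2)
        L_V = np.linalg.cholesky(V)
        gamma = r @ V / sigma2 + rng.standard_normal((n, T)) @ L_V.T
        # sigma^2 | beta, gamma: SSR / sigma^2 ~ chi-squared(nT)
        ssr = np.sum((r - gamma) ** 2)
        sigma2 = ssr / rng.chisquare(n * T)
        if it >= burn:
            sum_b += beta
            sum_g += gamma
            sum_s += sigma2
            kept += 1
    return sum_b / kept, sum_g / kept, sum_s / kept

# Hypothetical simulated panel with smooth unit-specific quadratic effects.
rng = np.random.default_rng(1)
n, T, p = 30, 20, 2
beta_true = np.array([0.5, -0.5])
trend = np.arange(1, T + 1) / T
coef = rng.standard_normal((n, 3))
gamma_true = coef[:, [0]] + coef[:, [1]] * trend + coef[:, [2]] * trend**2
X = rng.standard_normal((n, T, p))
y = X @ beta_true + gamma_true + 0.5 * rng.standard_normal((n, T))

beta_hat, gamma_hat, sigma2_hat = gibbs_model1(y, X, omega=0.2)
```

The regressors in this sketch exclude a constant, since the level of each unit's effect is absorbed by γi. Treating ω as random would add one further draw per sweep from its own conditional posterior.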

3. Model 2: A Panel Data Model with Factors

We next consider a somewhat different specification for the panel data model, wherein the effects are treated as a linear combination of unknown basis functions or factors:

Here,

is a

vector of common factors,

is a

vector of individual-specific factor loadings, and

represents the firm-specific and time invariant effects. For these effects, we retain the Schmidt and Sickles (1984) interpretation of the fixed effects as measures of unit specific time invariant productivity (inefficiency), but we embed it in a Bayesian framework using the Bayesian Fixed Effects Specification (BFES) of Koop et al. (1997). Following their model specification, the BFES is characterized by marginal prior independence between the individual effects. Therefore, the effects are assumed not to be linked across firms, as would be the case for the spatial stochastic frontier considered by Glass et al. (2016).

As for measuring the inefficiency, the essence of the Schmidt and Sickles (1984) device in the Bayesian context is that, during the sth of the total S (MCMC) iterations or paths, inefficiency is constructed as the difference of the individual effect from the maximum effect across firms:

. Thus, one counts the most efficient firm in the sample as 100% efficient. However, there is uncertainty as to which firm we should use for benchmarking and this is resolved by averaging:

to account for both the parameter uncertainty and the uncertainty regarding the best performing firm. The efficiency level of the most efficient firm in the sample approaches 1 when S → ∞. This method has much in common with the Cornwell et al. (1990) (CSS) estimator of time and firm specific productivity effects. The difference is that at each path of the Gibbs sampler, we have new draws for the

, a new value for

, and thus a new value for

. While CSS have one set of estimates and therefore a single firm to use as the benchmark, in the Bayesian approach, we have draws from the posterior of the

. There is also uncertainty as to which firm is the benchmark since we are simulating from the finite sample distribution of the

and thus, we re-compute

and the value of

each time.
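The benchmarking-and-averaging device described above can be sketched in a few lines of Python. The sketch assumes that the firm effects are stored as an S × n array of MCMC draws and that efficiency is obtained from inefficiency through exp(−u), as is common when output is measured in logs; both the storage layout and that transformation are assumptions here, and the function name `efficiency_from_draws` is hypothetical.

```python
import numpy as np

def efficiency_from_draws(delta_draws):
    """delta_draws: (S, n) array whose s-th row is the s-th MCMC draw of the
    n firm effects. Returns the draw-averaged efficiency of each firm."""
    delta_draws = np.asarray(delta_draws, dtype=float)
    best = delta_draws.max(axis=1, keepdims=True)  # benchmark re-computed per draw
    u = best - delta_draws                         # inefficiency, non-negative
    return np.exp(-u).mean(axis=0)                 # average over the S draws

# Two hypothetical draws for three firms; firm 0 is the benchmark in both.
draws = np.array([[1.0, 0.5, 0.0],
                  [1.2, 0.2, 0.7]])
eff = efficiency_from_draws(draws)
```

Because the benchmark is re-computed in every draw, a firm that is not the best performer in all draws receives an average efficiency strictly below one, which is how the uncertainty about the identity of the benchmark firm enters the estimate.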

The method can be extended to the case in which the time effects are nonlinear, e.g., where . With this specification, we can allow for a firm-specific polynomial trend, where represents the firm-specific coefficients. Of course other covariates can also be included in the time effects if so desired.

The model can be written for the

ith unit as:

or for the

th time period as:

where

, and

. If we set

then

acts as an individual-specific intercept. Effectively, the first column of

contains ones. The model for all observations can be written as

, where

and

.

This model setting follows that in Kneip et al. (2012), and it satisfies the following structural assumption, which is Assumption 1 from Kneip et al.:

Assumption 1:

For some fixed , there exists an L-dimensional space , where, such that the time-varying individual effect holds with probability 1.

We define the priors similarly to Model 1. Regarding the slope parameter

and variance of the noise term

, we continue to assume a non-informative prior:

. For the common factors, it is reasonable to assume that:

This prior is consistent with the presence of common factors that evolve smoothly over time. The degree of smoothness is controlled by the parameter and by setting Smoothness in this context then comes from the specification of the random walk prior above as essentially a spline.

For the loadings, we assume

. An alternative that we do not pursue but which may attenuate the proliferation of factors would be to stochastically constrain the loadings to approach zero in the following sense: if

, then

,

, for

, where

are parameters between zero and one. The posterior kernel distribution is:

where

denotes any hyper-parameters that are present in the prior of

. When

, we have:

where

denotes the prior on the hyper-parameters. A reasonable choice is the

constant and

, which leads to:

where

.

In order to proceed with Bayesian inference, we again use the Gibbs sampling algorithm. For our Model 2 specification, the implementation of Gibbs sampling is rather straightforward since we can analytically derive the conditional posteriors for the parameters in which we are interested. In what follows, we use the notation

. The conditional posteriors are:

where

,

, for each

where

for each

.

Using a Gibbs sampler, we draw observations from the conditional posteriors (25) to (29). Draws from the conditional posteriors will eventually converge to the joint posterior (24). The conditional posterior distribution of β follows the multivariate normal (25), and it is straightforward to sample from that distribution. To draw from the conditional posterior of the measurement error variance σ2, we first draw a value from the chi-squared distribution with nT degrees of freedom that, as shown in (26), governs the sum of squared residuals divided by σ2, and then set σ2 equal to the current sum of squared residuals divided by that draw.

For the mean parameter

, sampling is also straightforward since its conditional posterior follows a multivariate normal distribution. The variance matrix

follows an inverted Wishart distribution. For the unknown common factors

and the corresponding factor loadings

we can draw directly from multivariate normal distribution following (28) and (29). Finally, the individual firm effects

can be drawn using the procedure in Koop et al. (1997). This involves standard computations as the Bayesian fixed effects are drawn from normal posterior conditional distributions. The difficult distributional issues involved in deriving the analytical finite sample distribution of the parameters and estimates of relative efficiency are resolved through the MCMC procedure used to generate

, a fact that has been mentioned by Koop et al. (1997).

can be calculated from the posterior of

and

and thus

can also be calculated.

In our discussion of Model 2, we have treated the (finite) number of factors G as known. However, we can also utilize Bayesian techniques to develop inferences on G. Classical inferential approaches have been proposed by Bai and Ng (2007), Onatski (2009), and Kneip et al. (2012). We consider models with at most L factors, G = 1, 2,…,L. Suppose

and

denote the prior and likelihood, respectively, of a model with

G factors, where

is the vector of parameters common to all models (such as

β and

σ) and

denotes a vector of parameters related to the factors and their loadings,

φ and

γ. The marginal likelihood is

. For models with different numbers of factors, say G and G′, we can consider the Bayes factor in favor of the first model and against the second:

Computation of the marginal likelihood requires the computation of the integral in the numerator with respect to and . As this is not available analytically, we adopt the following approach.

We first specify:

where

is a convenient importance sampling density. We factor the importance density as

, where

and

are univariate densities. The densities are chosen to be univariate Student’s t-distributions with five degrees of freedom, with parameters matched to the posterior mean and standard deviation of MCMC draws for

and

γ, respectively. The integral is then calculated using standard importance sampling, which is quite robust. The standard deviations are multiplied by constants

and

, which are selected so that the importance weights are as close to uniform as possible. We use 100 random pairs in the interval 0.1 to 10 and select the values of h for which the Kolmogorov-Smirnov test statistic is the lowest. We truncate the weights to their 99.5% confidence interval, but in very few instances was this found necessary as extreme values are rarely observed. There is evidence that changing the degrees of freedom of the Student’s t provides some improvement, but we did not pursue this further as the final results for the Bayes factors were not found to differ significantly.
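To illustrate the importance sampling step, the sketch below estimates a log marginal likelihood in a deliberately simple one-parameter model for which the exact answer is available: observations are N(θ, 1) with a N(0, 1) prior on θ. The importance density is a Student's t with five degrees of freedom, located at the posterior mean and scaled by h times the posterior standard deviation. The toy model, the function names `log_t_pdf` and `log_marglik_is`, and the choice h = 1 are all assumptions made for illustration; the factor model itself involves a product of such univariate densities over many parameters.

```python
import math
import numpy as np

def log_t_pdf(x, loc, scale, df=5):
    """Log density of a location-scale Student's t distribution."""
    z = (x - loc) / scale
    c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return c - math.log(scale) - (df + 1) / 2 * np.log1p(z**2 / df)

def log_marglik_is(log_kernel, loc, scale, h=1.0, n_draws=20000, seed=0):
    """Importance sampling estimate of log of the integral of exp(log_kernel)."""
    rng = np.random.default_rng(seed)
    s = h * scale
    theta = loc + s * rng.standard_t(5, size=n_draws)
    log_w = log_kernel(theta) - log_t_pdf(theta, loc, s)
    m = log_w.max()                                 # stabilize the exponentials
    return m + math.log(np.mean(np.exp(log_w - m)))

# Toy data (hypothetical): y_j ~ N(theta, 1), theta ~ N(0, 1).
y = np.array([0.3, -0.1, 0.8, 1.2, 0.5])
m_obs = y.size

def log_kernel(theta):
    """Log prior plus log likelihood, vectorized over an array of theta."""
    sq = ((y[:, None] - theta[None, :]) ** 2).sum(axis=0)
    log_lik = -0.5 * m_obs * math.log(2 * math.pi) - 0.5 * sq
    log_prior = -0.5 * math.log(2 * math.pi) - 0.5 * theta**2
    return log_lik + log_prior

post_mean = y.sum() / (m_obs + 1)
post_sd = math.sqrt(1.0 / (m_obs + 1))
log_ml_hat = log_marglik_is(log_kernel, post_mean, post_sd)

# Exact value: y ~ N(0, I + 11'), so the log density is available in closed form.
log_ml_exact = (-0.5 * m_obs * math.log(2 * math.pi) - 0.5 * math.log(1 + m_obs)
                - 0.5 * (y @ y - y.sum() ** 2 / (1 + m_obs)))
```

The heavier tails of the t density relative to the normal posterior keep the importance weights bounded in this example, which is the property the scale constants h are tuned to preserve in the full model.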

Given marginal likelihoods

,

, the posterior model probabilities can be estimated as

1:

The posterior model probabilities summarize the evidence in favor of a model with a given number of factors.
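A minimal sketch of this last step is given below. It assumes equal prior probabilities across the L candidate models unless log prior weights are supplied, and it works with log marginal likelihoods so that very small likelihood values do not underflow. The function name `posterior_model_probs` and the numerical inputs are hypothetical.

```python
import numpy as np

def posterior_model_probs(log_ml, log_prior=None):
    """Posterior model probabilities from log marginal likelihoods.
    log_prior defaults to equal prior model probabilities (an assumption)."""
    log_ml = np.asarray(log_ml, dtype=float)
    if log_prior is None:
        log_prior = np.zeros_like(log_ml)
    a = log_ml + np.asarray(log_prior, dtype=float)
    a = a - a.max()             # subtract the maximum before exponentiating
    w = np.exp(a)
    return w / w.sum()

# Hypothetical log marginal likelihoods for G = 1, 2, 3.
log_ml = [-1010.0, -1000.0, -1005.0]
probs = posterior_model_probs(log_ml)
```

With equal prior probabilities, the ratio of any two posterior model probabilities equals the corresponding Bayes factor.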

4. Monte Carlo Simulations

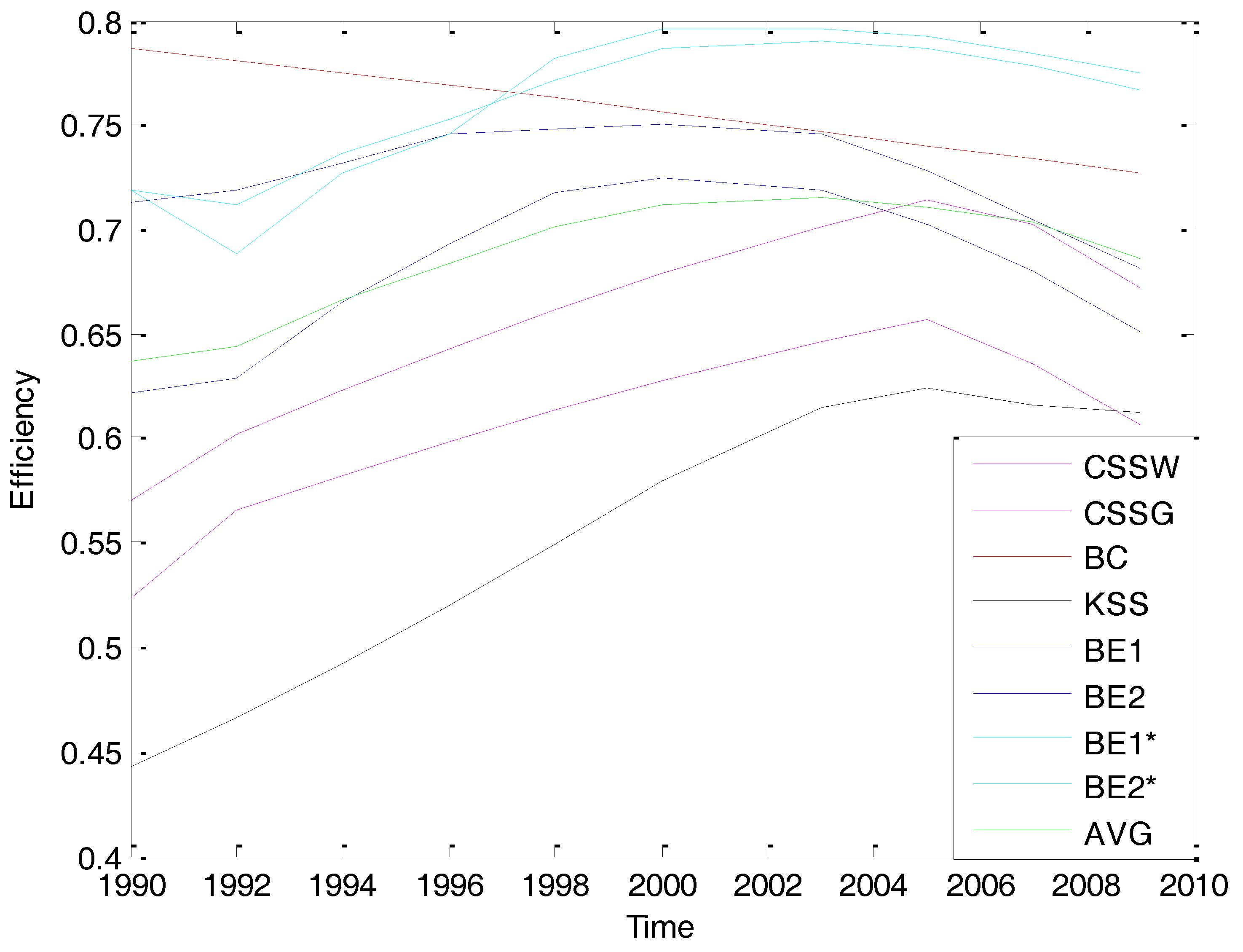

In order to illustrate the model and examine the finite sample performance of the new Bayesian estimators with nonparametric individual effects (BE1) and with the factor model specification for the individual effects (BE2), we carry out a series of Monte Carlo experiments. The performance of the Bayesian estimators is compared with the parametric time-variant estimator of Battese and Coelli (1992) (BC), the within (CSSW) and random effects GLS (CSSG) estimators proposed by Cornwell et al. (1990), and the Kneip et al. (2012) estimator (KSS), which utilizes a combination of nonparametric regression techniques (smoothing splines) and factor analysis (Bada and Liebl 2014) to model the time-varying unit-specific effects. The BC estimates are based on model (1), where the time-varying effects are given by

The temporal pattern of firm-specific effects

depends on the sign of

. The time-invariant case corresponds to

. The disturbances

are i.i.d. and are assumed to follow a non-negative truncated normal distribution. Estimation of the BC model is carried out by parametric MLE. The CSSW and CSSG estimates are also based on model (1) and specify the time-varying effects as

. Derivations of the within, GLS, and efficient Hausman-Taylor type IV estimators can be found in Cornwell et al. (1990). The KSS estimator requires a bit more discussion. Kneip et al. (2012) assume that

is a linear combination of some basis functions

In the first step of their three step procedure, they obtain estimates of the slope parameters and nonparametric approximations to

by a least squares regression of Y on X and an approximation of the effects using smoothing splines. In the second step, they obtain the empirical covariance matrix of residuals and in the third step they determine the basis functions and corresponding coefficients. Details can be found in Kneip et al. (2012). Point estimates and standard errors for BE1 and BE2 are posterior moments whose derivation we detailed in Section 2 and Section 3. We averaged the point estimates and the standard deviations of the parameter estimates from all of the simulated paths.

We consider a panel data model with two regressors written as

We generate samples of size n = 50, 100, 200, with T = 20, 50. In each experiment, the regressors

are randomly drawn from a standard multivariate normal distribution N(0, Ip). The i.i.d. disturbance term

is drawn from a standardized

. Time-varying individual effects are generated by four different DGPs, which specify the effects as following a unit-specific quadratic function of a time trend (DGP1), a random walk (DGP2), an oscillating function given by a linear combination of sine and cosine functions (DGP3), and finally a simple additive mixture of the previous three data generating processes (DGP4). The parameterizations are:

Here

and

are

i.i.d ,

, and

Gibbs sampling was implemented using 55,000 iterations with a burn-in period of 5000 samples. We retain only every 10th draw to mitigate the impact of autocorrelation between successive samples from the Markov chain. With regard to the selection of the number of factors, Gibbs samplers for all DGPs rely on an MCMC simulation from models with a G value ranging from one to eight. The true number of factors is 3, 2, 1, and 6 for the four respective DGPs.
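The burn-in and thinning scheme determines how many draws enter the posterior summaries. The short Python sketch below uses an index array as a stand-in for stored MCMC output (an assumption made purely for illustration) to show which iterations are retained.

```python
import numpy as np

n_iter, burn_in, thin = 55_000, 5_000, 10

# Stand-in for stored MCMC output: entry s is the iteration index itself.
draws = np.arange(n_iter)

# Discard the burn-in period, then keep every 10th remaining draw.
kept = draws[burn_in::thin]
n_kept = kept.size
```

Under this scheme, 5000 draws remain for computing posterior means and standard deviations.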

The simulation results for all the DGPs are displayed in Table 1, Table 2, Table 3 and Table 4. Estimates and standard errors of the slope coefficients β1 and β2 are presented in the upper panel of each table, while estimates of the individual effects γit and their normalized MSE are displayed in the lower panel of each table. The normalized MSE of the individual effects γit is calculated as:
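A minimal sketch of such a measure follows, assuming the normalization divides the summed squared estimation errors by the summed squared true effects over all units and periods; that normalization is an assumption, and the function name `normalized_mse` and the example arrays are hypothetical.

```python
import numpy as np

def normalized_mse(gamma_hat, gamma):
    """Sum of squared errors over all (i, t), scaled by the sum of squared
    true effects (assumed normalization)."""
    gamma_hat = np.asarray(gamma_hat, dtype=float)
    gamma = np.asarray(gamma, dtype=float)
    return np.sum((gamma_hat - gamma) ** 2) / np.sum(gamma**2)

# Hypothetical true effects for 2 units over 3 periods and a noisy estimate.
gamma = np.array([[1.0, 2.0, 3.0],
                  [0.5, 0.0, -0.5]])
gamma_hat = gamma + 0.1
nmse = normalized_mse(gamma_hat, gamma)
```

Under this normalization, a value of zero corresponds to a perfect fit and a value of one corresponds to estimating every effect as zero, which makes the measure comparable across DGPs whose effects differ in scale.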

Since we have not analyzed the role of correlated effects in these experiments, estimates of the slope parameters should be consistent for CSSW, CSSG, and KSS. Moreover, the BC model utilizes parametric MLE based on i.i.d. normally distributed random disturbances and thus should also yield consistent slope parameter estimates. Results from the four different specifications of the effects clearly demonstrate that point estimates of the slope coefficients for BC, CSSW, CSSG, and KSS are comparable across the various DGPs, although variances will of course be smaller for estimators that do a better job of modeling the effects. The BC estimator does a poor job of estimating the effects since the specification we utilize assumes that the effects have the same temporal pattern for the different units. Generalizations of the BC estimator are available that allow the effects to be functions of selected regressors that may change over units, but we do not utilize these extensions in our experiments. Since DGP1 is consistent with the assumptions for the time-varying effects in the CSS model (we use the version of the CSS estimator utilized in the Cornwell et al. (1990) application, wherein the unit specific effects were given by a second-order polynomial in the time trend), it is no surprise that the CSSW and CSSG estimators have the best performance compared with the other estimators for this DGP. However, it is also clear from the results of Table 1 that the Bayesian estimators are comparable to the CSSW, CSSG, and KSS estimators in terms of the estimates of individual effects. Moreover, for the sample sizes of n = 50, T = 50, and n = 100, T = 50, the Bayesian estimators provide more accurate estimates of individual effects than the KSS estimator. This implies that the Bayesian estimators are quite effective in estimating time-varying effects that follow smooth curves, such as second-order polynomials. It is not surprising that the mean squared errors of the Bayesian estimators are consistently much lower than those of the BC estimator for all sample sizes.

DGP2 considers the case where the individual effects are generated by a random walk and the results for these experiments are shown in Table 2. CSSW and CSSG are over-parameterized as they assume that the individual effects are quadratic functions of the time trend and have relatively poorer performances for this DGP. BE1 and BE2 are mostly data driven and impose no functional forms on the temporal pattern of the individual effects. For this relatively simple random walk specification, they outperform the other estimators that rely on functional form assumptions and also have a better estimation performance in terms of the MSE of individual effects than KSS. DGP3 characterizes significant time variations in the individual effects. As we can see from Table 3, the BE1 and BE2 estimators have a comparable performance to the KSS estimator and outperform it again for experiments with relatively large panels (n = 100 and 200). The other estimators, whose effects rely on parametric assumptions of simple functional forms, are largely dominated by the Bayesian estimators.

DGP4 is a mixture of the scenarios for the time-varying effects used in DGP1–DGP3. Table 4 indicates that BE1 and BE2 outperform the BC, CSSW, and CSSG estimators in terms of the MSE of the individual effects and are comparable to KSS.

As we have pointed out, for all the DGPs, the slope parameter estimates are comparable across the six different estimators. However, this is not the case for the individual effects. Poorly estimated effects are a drawback for the estimation of technical or efficiency change since such measures are usually based on an unobserved latent variable that is estimated using some function of the model residuals. For example, the individual effects correspond to the technical efficiencies in stochastic frontier analysis. Our new Bayesian estimators for the stochastic frontier would appear to be excellent candidates among competing estimators for modeling a production or cost frontier, and it is to this topic that we now turn in our empirical model of banking efficiency.