1. Introduction

With the rapid advancement of science and technology, ultrahigh-dimensional data are becoming increasingly common across many fields of scientific research, including, but not limited to, biomedical imaging, neuroscience, tomography, and tumor classification, where the number of variables or parameters can increase exponentially with the sample size. In such a situation, an important task is to recover the important features from thousands or even millions of predictors.

To rapidly reduce the huge dimensionality of the data to a manageable size, Fan and Lv [1] introduced the method of sure independence screening, which ranks the importance of predictors according to their marginal utilities. Since then, a large body of literature has been devoted to this issue in various scenarios, and it can broadly be divided into two groups: model-based and model-free methods. For the former, typical works include, but are not limited to, Wang [2], Chang et al. [3], and Wang and Leng [4] for linear models, Fan et al. [5] for additive models, and Fan et al. [6] and Liu et al. [7] for varying coefficient models, amongst others. Model-based methods are computationally efficient, but can suffer from the risk of model misspecification. To avoid this risk, researchers developed model-free methods, which do not specify a concrete model. For example, Zhu et al. [8] proposed a screening procedure named SIRS for the multi-index model; Li et al. [9] introduced a sure screening procedure via the distance correlation, called DCS; for heterogeneous data, He et al. [10] developed a quantile-adaptive screening method; Lin et al. [11] proposed a novel approach, dubbed Nonparametric Ranking Feature Screening (NRS), leveraging the local information flows of the predictors; Lu and Lin [12] developed a conditional model-free screening procedure, utilizing the conditional distance correlation; and Tong et al. [13] proposed a model-free conditional feature screening method with FDR control. Additionally, Ref. [14] recently introduced a data-adaptive threshold selection procedure with error rate control, which is applicable to most popular screening methods, and Ref. [15] proposed a feature screening method for interval-valued responses.

The literature listed above mainly concentrates on continuous responses; however, ultrahigh-dimensional grouped data, in which the label of a sample can be seen as a categorical response, are also frequently encountered in many scientific fields, particularly by biostatisticians who work on multi-class classification problems. For example, in tumor diagnosis, researchers need to judge the type of tumor according to gene expression levels. If we do not reduce the dimension of the predictors, the resulting classifier will behave as poorly as random guessing, due to the diverging spectra and accumulation of noise (Fan et al. [16]); therefore, it makes sense to screen out the null predictors before further analysis. Several existing works have made progress on this issue. Huang et al. [17] proposed a screening method based on Pearson chi-square statistics for discrete predictors. Pan et al. [18] set the maximal mean difference for each pair of classes as a ranking index and, based on this, proposed a corresponding screening procedure. Mai and Zou [19] built a Kolmogorov–Smirnov type distance to measure the dependence between two variables, and used it as a filter for screening out noise predictors. Cui et al. [20] proposed a screening method that measures the distance between the distribution of a subgroup and the whole distribution. Recently, Xie et al. [21] established a category-adaptive screening procedure by calculating the difference between the conditional distribution of the response and the marginal one. All of these methods are clearly motivated and have been shown to be effective for feature screening in different settings.

In this paper, we propose a new robust screening method for ultrahigh-dimensional grouped data. Our research was partly motivated by an empirical analysis of a leukemia dataset, consisting of 72 observations and 3571 genes, of which 47 were acute lymphocytic leukemia (ALL), and 25 were acute myelogenous leukemia (AML).

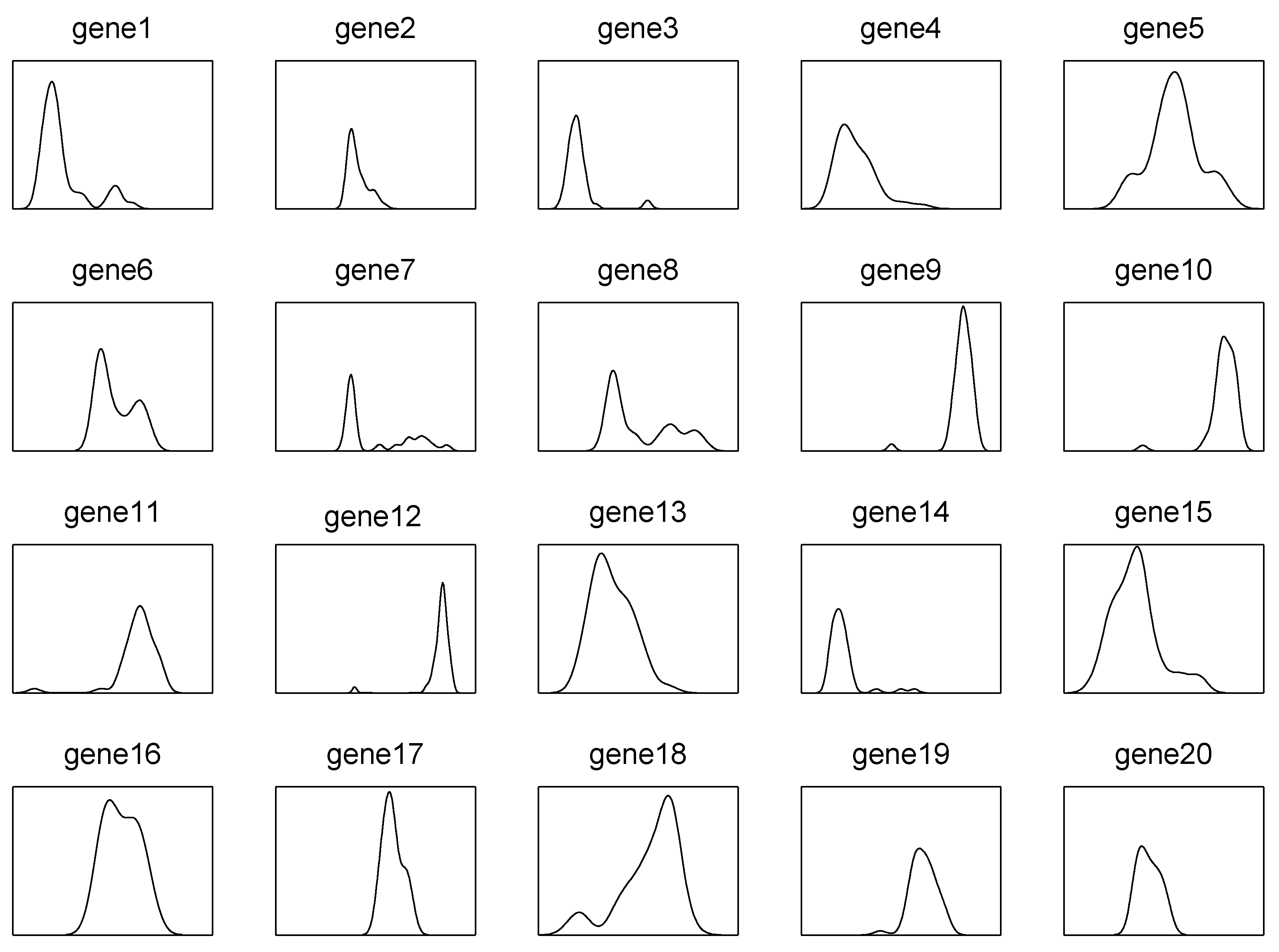

Figure 1 plots the density functions of the first 20 features selected from the 3571 genes of the 47 ALL samples. It can be seen that all of them are far from a regular distribution: most of them have sharp peaks and heavy tails (e.g., gene 9 and gene 12), and some of them are even multi-modal (e.g., gene 6 and gene 8), although these samples are from the same ALL group. This phenomenon challenges most of the existing methods. For example, the method in Pan et al. [18] might fail if the data are not normally distributed, and the method in Xie et al. [21] might lose efficiency when the distribution of a predictor is multi-modal. It is known that quantile-based statistics are not sensitive to outliers and heavy-tailed data; thus, a quantile-based screening method is expected to be robust against heterogeneous data. Furthermore, compared to point estimation, quantile-based statistics can usually provide a more detailed picture of a predictor at different quantile levels. Motivated by the above discussion, we propose a quantile-composited screening approach that aggregates the distributional information over many quantile levels.

The basic idea of our method is straightforward. If $X_j$ has no contribution to predicting the category of an outcome variable, denoted by Y, at the $\tau$-th quantile level, the conditional quantile function of $X_j$ given Y should be equal to the unconditional one, i.e., $Q_\tau(X_j \mid Y) = Q_\tau(X_j)$. Moreover, if $X_j$ and Y are independent, we have $Q_\tau(X_j \mid Y) = Q_\tau(X_j)$ a.s. for all $\tau \in (0, 1)$, where a.s. means 'almost surely'. Thus, the equality $Q_\tau(X_j \mid Y) = Q_\tau(X_j)$ plays a key role in measuring the independence between Y and $X_j$. To quantify this kind of independence, we show that $Q_\tau(X_j \mid Y) = Q_\tau(X_j)$ for a given $\tau$ is equivalent to the independence between the index variable $I\{X_j \le Q_\tau(X_j)\}$ and the label variable Y. Then, checking the equality between $Q_\tau(X_j \mid Y)$ and $Q_\tau(X_j)$ is converted to testing the independence between two discrete variables, which can be easily done with the Pearson chi-square test statistic. Finally, we aggregate all the discriminative information over the whole distribution in an efficient way, based on which we establish the corresponding screening procedure.

Our newly proposed screening method enjoys the following salient features. First, compared to the existing methods, it is robust against non-normal data, which are very common in high dimensions. Second, it is model-free, in the sense that we do not need to assume a specific statistical model, such as the linear or quadratic discriminant analysis model, between the predictor and the outcome variable. Third, its ranking index has a very simple form, and the computational complexity is controlled at the sample size level, so that the proposed screening method can be implemented very quickly. In addition, as a by-product, our new method is invariant to monotonic transformations of the data.

The rest of the paper is organized as follows. Section 2 gives the details of the quantile-composited screening procedure, including the methodological development, theoretical properties, and some extensions. Section 3 provides numerical results and two real data analyses. Technical proofs of the main results are deferred to Appendix A.

2. A New Feature Screening Procedure

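Let $X = (X_1, \ldots, X_p)^\top$ be the p-dimensional predictor, and without loss of generality, let $Y \in \{1, \ldots, K\}$ be the outcome variable indicating which group $X$ belongs to, where K is allowed to grow with the sample size at a certain rate. Define the index set of active predictors corresponding to quantile level $\tau$ as

$$\mathcal{A}_\tau = \{\, j : Q_\tau(X_j \mid Y) \text{ functionally depends on } Y \,\},$$

where $\tau \in (0, 1)$ and $Q_\tau(X_j \mid Y)$ is the $\tau$-th conditional quantile of $X_j$ given Y. Denote by $|\mathcal{A}_\tau|$ the cardinality of $\mathcal{A}_\tau$; $|\mathcal{A}_\tau|$ is usually less than the sample size n under the sparsity assumption. Denote by $Q_\tau(X_j)$ the $\tau$-th quantile of $X_j$. Intuitively, if $Q_\tau(X_j \mid Y)$ does not functionally depend on Y, it should be the case that $Q_\tau(X_j \mid Y) = Q_\tau(X_j)$ for all Y: in other words, $X_j$ has no ability to predict its label Y at the quantile level $\tau$. On the other hand, if $Q_\tau(X_j \mid Y)$ is far away from $Q_\tau(X_j)$ for some Y, $X_j$ will be helpful for predicting the category of Y. Hence, the difference between $Q_\tau(X_j \mid Y)$ and $Q_\tau(X_j)$ determines whether $X_j$ is a contributive predictor at the $\tau$-th quantile level. The following lemma is of central importance to our methodological development.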

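Lemma 1. Let Y be the outcome variable, and let X be a continuous variable; then, we have two conclusions:

- (1) $Q_\tau(X \mid Y) = Q_\tau(X)$ if and only if the Bernoulli variable $I\{X \le Q_\tau(X)\}$ and Y are independent, where $I\{\cdot\}$ is the indicator function;

- (2) $Q_\tau(X \mid Y) = Q_\tau(X)$ for all $\tau \in (0, 1)$ if Y and X are independent.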
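Conclusion (1) of the lemma can be illustrated numerically. The following is a minimal sketch on simulated data; the helper name `cond_prob_below_quantile`, the sample size, and the shift of 1.0 are illustrative assumptions, not taken from the paper. When X is independent of Y, the share of each class falling at or below the pooled τ-th quantile stays close to τ; under a location shift in one class it does not.

```python
import numpy as np

def cond_prob_below_quantile(x, y, tau):
    """Per-class proportion of x at or below the pooled tau-th sample quantile."""
    q = np.quantile(x, tau)
    below = x <= q
    return {int(k): float(below[y == k].mean()) for k in np.unique(y)}

rng = np.random.default_rng(0)
n = 20000
y = rng.integers(1, 4, size=n)            # three classes labelled 1, 2, 3
x_null = rng.standard_t(df=2, size=n)     # heavy-tailed, independent of y
x_active = x_null + 1.0 * (y == 3)        # class 3 is shifted to the right

tau = 0.5
null_probs = cond_prob_below_quantile(x_null, y, tau)      # all close to tau
active_probs = cond_prob_below_quantile(x_active, y, tau)  # class 3 well below tau
```

Because only the indicator of falling below the quantile is used, the heavy tails of the simulated predictor do not disturb the comparison.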

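The proof of this lemma is presented in Appendix A. Conclusions (1) and (2) imply that $Q_\tau(X_j \mid Y)$ being free of Y for a given $\tau$ is equivalent to the independence between $I\{X_j \le Q_\tau(X_j)\}$ and Y; consequently, it is natural to apply the Pearson chi-square statistic to measure the independence between them. Let $p_k = P(Y = k)$, $\pi_{j,b}(\tau) = P(I\{X_j \le Q_\tau(X_j)\} = b)$, and $\pi_{j,kb}(\tau) = P(Y = k,\ I\{X_j \le Q_\tau(X_j)\} = b)$, for $k = 1, \ldots, K$ and $b \in \{0, 1\}$. Then, the dependence of $X_j$ on the response Y, at quantile level $\tau$, can be evaluated by

$$\Delta_j(\tau) = \sum_{k=1}^{K} \sum_{b=0}^{1} \frac{\{\pi_{j,kb}(\tau) - p_k\, \pi_{j,b}(\tau)\}^2}{p_k\, \pi_{j,b}(\tau)}.$$

Clearly, $\Delta_j(\tau) = 0$ iff $I\{X_j \le Q_\tau(X_j)\}$ and Y are independent.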
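The quantile-level index described above can be sketched as follows. This is a minimal illustration, assuming the index is the usual Pearson chi-square divergence between the Bernoulli index variable and the class label, with all probabilities replaced by sample proportions; the function name `quantile_chi2_index` and the simulated data are hypothetical.

```python
import numpy as np

def quantile_chi2_index(x, y, tau):
    """Plug-in Pearson chi-square index between I(x <= tau-quantile) and y."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y)
    below = x <= np.quantile(x, tau)      # Bernoulli index variable
    index = 0.0
    for k in np.unique(y):
        in_k = (y == k)
        p_k = in_k.mean()                 # estimate of P(Y = k)
        for b in (False, True):
            is_b = (below == b)
            pi_b = is_b.mean()            # estimate of P(I = b)
            pi_kb = (in_k & is_b).mean()  # estimate of P(Y = k, I = b)
            if pi_b > 0:                  # skip an empty margin (tau near 0 or 1)
                index += (pi_kb - p_k * pi_b) ** 2 / (p_k * pi_b)
    return float(index)

rng = np.random.default_rng(1)
n = 5000
y = rng.integers(1, 4, size=n)                       # K = 3 classes
x_null = rng.standard_normal(n)                      # independent of y
x_active = rng.standard_normal(n) + 1.0 * (y == 3)   # class 3 shifted

idx_null = quantile_chi2_index(x_null, y, tau=0.5)
idx_active = quantile_chi2_index(x_active, y, tau=0.5)
```

In this simulated setting the index of the shifted predictor is markedly larger than that of the independent one, which stays near zero.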

The index $\Delta_j(\tau)$ provides a way to identify whether $X_j$ is active at quantile level $\tau$. However, it is not easy to determine the informative quantiles for every predictor. Moreover, the active predictors could be contributive at many quantiles instead of a single one. For these reasons, we propose a quantile-composited screening index, which integrates $\Delta_j(\tau)$ over the interval $[\alpha, 1 - \alpha]$. More specifically, the ranking index is defined as

$$\omega_j = \int_{\alpha}^{1-\alpha} w_j(\tau)\, \Delta_j(\tau)\, d\tau,$$

where $w_j(\tau)$ is some positive weight function, and $\alpha$ is a value tending to 0 at a certain rate related to the sample size, which will be specified in the next section. Note that $\omega_j$ avoids integrating at the endpoints 0 and 1, because $\Delta_j(\tau)$ could be ill-defined at these two points. Theoretically, $\omega_j = 0$ if $X_j$ is independent of Y, regardless of the choice of $w_j(\tau)$, which is easy to prove according to Lemma 1. According to the above analysis, $\omega_j$ is always non-negative for $j = 1, \ldots, p$, and equals 0 if $X_j$ is independent of Y.

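The choice of the weight $w_j(\tau)$ matters, since different settings lead to different values of $\omega_j$. For example, a naive setting is a constant weight for $\tau \in [\alpha, 1 - \alpha]$, which means that all $\Delta_j(\tau)$ are treated equally. Clearly, this is not a good option. Intuitively, if $X_j$ is active, $\Delta_j(\tau)$ should be large for some $\tau$ in $[\alpha, 1 - \alpha]$, and we should place more weight on these quantile levels. For this reason, we set $w_j(\tau) = \Delta_j(\tau) / \int_{\alpha}^{1-\alpha} \Delta_j(t)\, dt$; then, the resultant $\omega_j$ has the following form:

$$\omega_j = \frac{\int_{\alpha}^{1-\alpha} \Delta_j^2(\tau)\, d\tau}{\int_{\alpha}^{1-\alpha} \Delta_j(\tau)\, d\tau}.$$

In addition, to make $\omega_j$ well defined, we restrict $\omega_j = 0$ when $\Delta_j(\tau) = 0$ for all $\tau \in [\alpha, 1 - \alpha]$.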
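The effect of the weight can be sketched numerically. The snippet below is an illustration under a stated assumption: the data-driven weight is taken to be proportional to the quantile-level index itself, so that the composite index becomes a ratio of two integrals. The function `composite_index` and the two toy index curves are hypothetical.

```python
import numpy as np

def composite_index(delta, taus, self_weighted=True):
    """Aggregate quantile-level indices delta(tau) over a grid of quantile levels.

    self_weighted=True assumes w(tau) = delta(tau) / integral(delta);
    self_weighted=False uses a constant weight that integrates to one.
    """
    delta = np.asarray(delta, dtype=float)
    taus = np.asarray(taus, dtype=float)
    widths = np.diff(taus)

    def integrate(f):
        # trapezoidal rule written out explicitly
        return float(np.sum(widths * (f[1:] + f[:-1]) / 2.0))

    total = integrate(delta)
    if not self_weighted:
        return total / float(taus[-1] - taus[0])
    if total == 0.0:          # convention: the index is 0 when delta vanishes everywhere
        return 0.0
    return integrate(delta ** 2) / total

taus = np.linspace(0.05, 0.95, 19)
flat = np.full(taus.shape, 0.02)                          # same signal at every level
peaked = np.where(np.abs(taus - 0.5) < 0.06, 0.2, 0.0)    # signal near the median only

flat_self = composite_index(flat, taus)
peaked_uniform = composite_index(peaked, taus, self_weighted=False)
peaked_self = composite_index(peaked, taus)
zero_self = composite_index(np.zeros_like(taus), taus)
```

For the peaked curve, the constant weight dilutes the signal by averaging over many uninformative levels, whereas the self-weighted version stays close to the peak height; for a flat curve the two coincide.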

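In the following, we give the estimation of $\omega_j$. Suppose $\{(X_i, Y_i)\}_{i=1}^{n}$ is a set of i.i.d. samples from $(X, Y)$, where i.i.d. means independent and identically distributed. Let $\hat{Q}_\tau(X_j)$ be the $\tau$th sample quantile of $X_j$ and $\hat{I}_{ij}(\tau) = I\{X_{ij} \le \hat{Q}_\tau(X_j)\}$. Then $p_k$, $\pi_{j,b}(\tau)$, and $\pi_{j,kb}(\tau)$ can be estimated as $\hat{p}_k = n^{-1} \sum_{i=1}^{n} I\{Y_i = k\}$, $\hat{\pi}_{j,b}(\tau) = n^{-1} \sum_{i=1}^{n} I\{\hat{I}_{ij}(\tau) = b\}$, and $\hat{\pi}_{j,kb}(\tau) = n^{-1} \sum_{i=1}^{n} I\{Y_i = k,\ \hat{I}_{ij}(\tau) = b\}$, respectively. Then, by the plug-in method, $\Delta_j(\tau)$ is estimated as

$$\hat{\Delta}_j(\tau) = \sum_{k=1}^{K} \sum_{b=0}^{1} \frac{\{\hat{\pi}_{j,kb}(\tau) - \hat{p}_k\, \hat{\pi}_{j,b}(\tau)\}^2}{\hat{p}_k\, \hat{\pi}_{j,b}(\tau)},$$

and $\omega_j$ is estimated as

$$\hat{\omega}_j = \frac{\int_{\alpha}^{1-\alpha} \hat{\Delta}_j^2(\tau)\, d\tau}{\int_{\alpha}^{1-\alpha} \hat{\Delta}_j(\tau)\, d\tau}.$$

Regarding $\hat{\omega}_j$, we make the following remarks: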

Remark 1. - 1.

If , follows the distribution with degrees of freedom [22]. - 2.

is invariant to any monotonic transformation on predictors, because is free of the monotonic transformation on .

- 3.

The computation of involves the integration of τ. We can calculate it by an approximate numerical method as - 4.

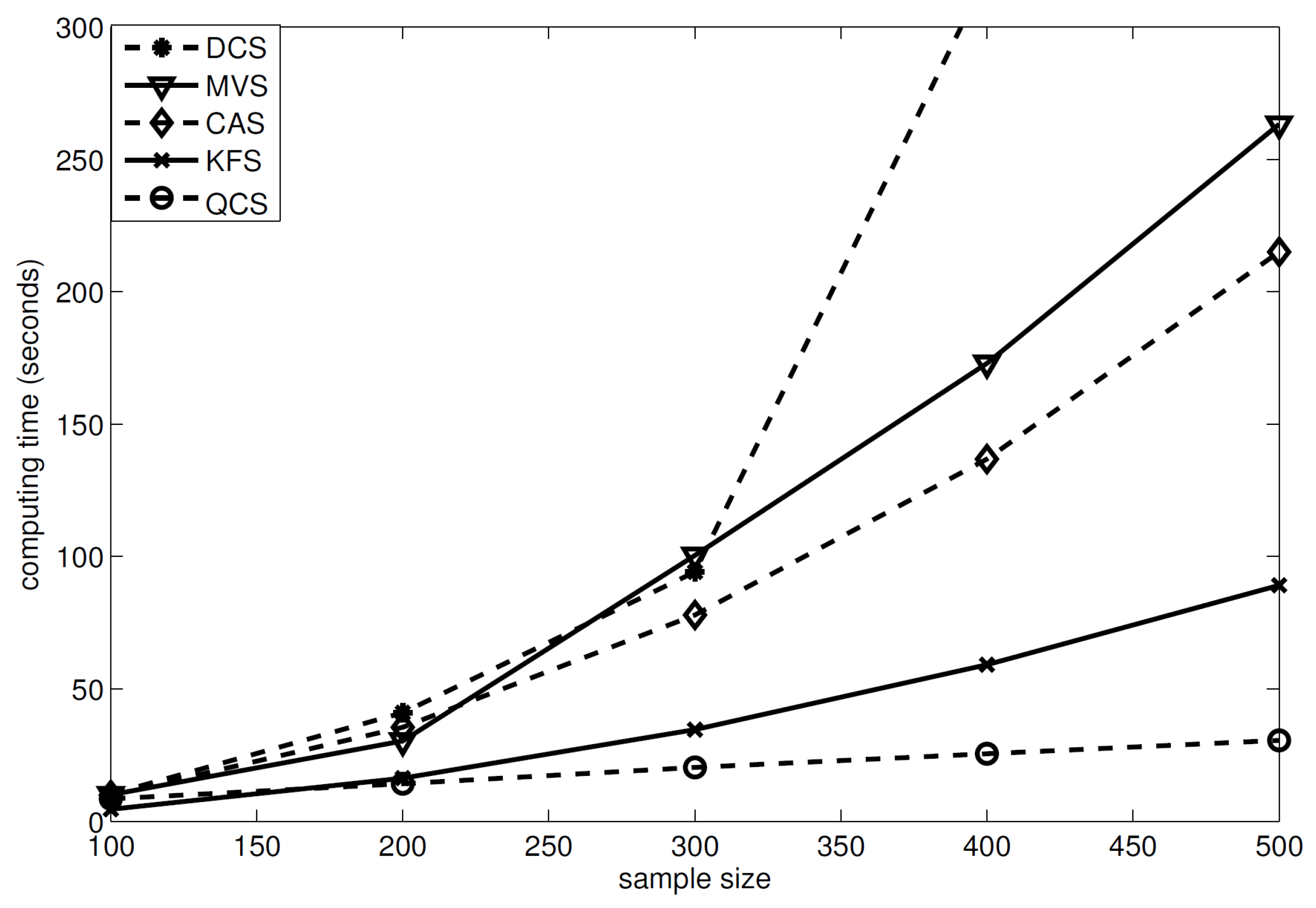

The choice of s. Intuitively, a large s will make the approximation of integration more accurate. However, our method aims to efficiently separate the active predictors from the null ones, instead of getting an accurate estimate of . Figure 2 displays the density curves of marginal utilities of active and inactive predictors versus different choices of s with Example 2 in Section 3. It can be seen that the choice of s does not affect the distribution of either active predictors or inactive ones. - 5.

Figure 2 also shows that the gap between the indices of active predictors and inactive ones is clear, which means the proposed method is efficient at separating the influential predictors from the inactive ones well. Moreover, it can also be observed that the marginal utilities of active predictors are, with a smaller variance, comparable to those of inactive ones, which implies that the new method is sensitive to the active predictors.
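The remarks on numerical integration, the choice of s, and invariance can be illustrated together. The sketch below rests on several assumptions that are not from the paper: an equally spaced grid of s levels, a self-weighted composite index (on such a grid the step size cancels in the ratio), and an order-statistic quantile so that the indicator is exactly unchanged by a strictly increasing transformation. The names `chi2_at` and `qcs_index` are hypothetical.

```python
import numpy as np

def chi2_at(x, y, tau):
    """Pearson chi-square index between I(x <= an order-statistic tau-quantile) and y."""
    n = x.size
    q = np.sort(x)[max(int(np.ceil(tau * n)) - 1, 0)]   # an order statistic of x
    below = x <= q
    total = 0.0
    for k in np.unique(y):
        in_k = (y == k)
        p_k = in_k.mean()
        for b in (False, True):
            is_b = (below == b)
            pi_b = is_b.mean()
            if pi_b > 0:
                total += ((in_k & is_b).mean() - p_k * pi_b) ** 2 / (p_k * pi_b)
    return total

def qcs_index(x, y, s=20, alpha=0.05):
    """Self-weighted composite index on an equally spaced grid of s quantile levels."""
    taus = np.linspace(alpha, 1.0 - alpha, s)
    delta = np.array([chi2_at(x, y, t) for t in taus])
    denom = delta.sum()
    # On an equally spaced grid the step size cancels in the ratio of Riemann sums.
    return 0.0 if denom == 0.0 else float((delta ** 2).sum() / denom)

rng = np.random.default_rng(2)
n = 2000
y = rng.integers(1, 4, size=n)
x_null = rng.standard_normal(n)
x_active = rng.standard_normal(n) + 1.0 * (y == 3)

# (null index, active index) for a coarse and a fine grid
by_grid = {s: (qcs_index(x_null, y, s), qcs_index(x_active, y, s)) for s in (10, 50)}
# a strictly increasing transformation leaves the index unchanged
same_after_exp = abs(qcs_index(x_active, y) - qcs_index(np.exp(x_active), y)) < 1e-12
```

In this toy setting the separation between the active and the null predictor is visible for both grid sizes, in line with the remark that s mainly affects the accuracy of the integral rather than the ranking.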

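With the estimate $\hat{\omega}_j$, the index set of active predictors can be estimated as

$$\hat{\mathcal{A}} = \{\, j : \hat{\omega}_j \ge c\, n^{-\kappa},\ 1 \le j \le p \,\},$$

where c and $\kappa$ are two predetermined thresholding values. In practice, we usually take a hard threshold criterion to determine the submodel as

$$\hat{\mathcal{A}} = \{\, j : \hat{\omega}_j \text{ is among the top } d_n \text{ largest of all} \,\},$$

where $d_n$ is a predetermined threshold value. We call the above quantile-composited screening procedure, based on $\hat{\omega}_j$, QCS.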
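The two selection rules can be sketched in a few lines. This is an illustrative sketch only: the functions `select_top` and `select_by_threshold`, the toy index values, and the particular constants are assumptions, and the soft rule is assumed to compare each index with a cutoff of the form c times n to the power minus kappa.

```python
import numpy as np

def select_top(indices, d):
    """Hard-threshold rule: keep the d predictors with the largest indices."""
    order = np.argsort(-np.asarray(indices, dtype=float), kind="stable")
    return np.sort(order[:d])

def select_by_threshold(indices, c, kappa, n):
    """Soft rule: keep predictors whose index is at least c * n**(-kappa)."""
    cutoff = c * n ** (-kappa)
    return np.flatnonzero(np.asarray(indices, dtype=float) >= cutoff)

# toy ranking indices for p = 5 predictors (0-based positions)
omega_hat = np.array([0.002, 0.110, 0.004, 0.090, 0.001])

top_two = select_top(omega_hat, d=2)                                  # predictors 1 and 3
above_cut = select_by_threshold(omega_hat, c=1.0, kappa=0.5, n=100)   # cutoff 0.1
```

The hard rule is usually the more practical of the two because it only requires choosing the submodel size rather than two tuning constants.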

2.1. Theoretical Properties

This section establishes the sure screening property of the newly proposed method, which guarantees its effectiveness. The technical details of the proofs can be found in Appendix A.

We first prove the consistency of . To this end, we require the following condition.

(C1): There exist two constants , such that for with .

Condition (C1) requires that the sample size of each subgroup be neither too small nor too large. The condition allows the number of categories to diverge to infinity at a certain rate as the sample size increases. The following theorem states the consistency of .

Theorem 1. For a given quantile , under condition (C1), where .

This theorem shows that the consistency of can be guaranteed under suitable conditions. In addition, it reminds us that we cannot select quantiles very close to either zero or one, because the items would collapse to zero, which would make the consistency of problematic. Based on the above theorem, the following corollary provides the consistency of .

Corollary 1. According to the conditions in Theorem 1, if ,

This corollary states that the gap between and will disappear with probability tending to 1 as . It also shows that our method can address the dimensionality of order .

In the following, we provide the sure screening property of our method.

Theorem 2. Sure screening property: let ; then, under condition (C1) and the following condition, , where is the cardinality of .

2.2. Extensions

Up to this point, the new method has been designed for ultrahigh-dimensional categorical data. In this section, to make it applicable in more settings, we give two natural extensions, and in the next section, we use numerical simulations to illustrate the effectiveness of these extensions.

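Extension to Genome-Wide Association Studies. We first apply our method to the typical case of genome-wide association studies (GWAS), where the predictors are single-nucleotide polymorphisms (SNPs) in three classes, denoted by $\{AA, Aa, aa\}$, and the response is continuous. Our strategy for this problem is straightforward: code the sample space $\{AA, Aa, aa\}$ as $\{1, 2, 3\}$, respectively; then, the marginal utility of $X_j$ at quantile level $\tau$ is defined as

$$\tilde{\Delta}_j(\tau) = \sum_{k=1}^{3} \sum_{b=0}^{1} \frac{\{\tilde{\pi}_{j,kb}(\tau) - \tilde{p}_{jk}\, \tilde{\pi}_{b}(\tau)\}^2}{\tilde{p}_{jk}\, \tilde{\pi}_{b}(\tau)},$$

where $\tilde{p}_{jk} = P(X_j = k)$, $\tilde{\pi}_{b}(\tau) = P(I\{Y \le Q_\tau(Y)\} = b)$, $\tilde{\pi}_{j,kb}(\tau) = P(X_j = k,\ I\{Y \le Q_\tau(Y)\} = b)$, and $Q_\tau(Y)$ is the $\tau$-th quantile of Y, for $k = 1, 2, 3$ and $b \in \{0, 1\}$.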
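The GWAS extension swaps the roles of the two variables: the quantile indicator is built from the continuous response, and the SNP plays the part of the categorical variable. A minimal sketch under that reading follows; the function name `gwas_chi2_index`, the allele frequency of 0.3, and the additive effect of 1.0 per allele are illustrative assumptions.

```python
import numpy as np

def gwas_chi2_index(snp, y, tau):
    """Pearson chi-square index between a categorical SNP and I(y <= tau-quantile of y)."""
    snp = np.asarray(snp)
    y = np.asarray(y, dtype=float)
    below = y <= np.quantile(y, tau)      # indicator built from the response
    index = 0.0
    for k in np.unique(snp):
        in_k = (snp == k)
        p_k = in_k.mean()                 # genotype frequency
        for b in (False, True):
            is_b = (below == b)
            pi_b = is_b.mean()
            if pi_b > 0:
                index += ((in_k & is_b).mean() - p_k * pi_b) ** 2 / (p_k * pi_b)
    return float(index)

rng = np.random.default_rng(3)
n = 5000
snp_active = rng.binomial(2, 0.3, size=n) + 1   # genotypes coded 1, 2, 3
snp_null = rng.binomial(2, 0.3, size=n) + 1     # unrelated SNP
y = 1.0 * snp_active + rng.standard_normal(n)   # continuous response

gwas_active = gwas_chi2_index(snp_active, y, tau=0.5)
gwas_null = gwas_chi2_index(snp_null, y, tau=0.5)
```

The same aggregation over quantile levels used for the categorical-response case can then be applied to these quantile-level values.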

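Extension to additive models. We can extend our method to models in which both the response and the predictors are continuous. To make our method applicable, we first slice each predictor into several segments according to some threshold values. For example, taking the quartiles of a predictor as the cut points transforms it into a balanced four-category variable. Specifically, let $c_{j,1} < \cdots < c_{j,N}$ be N percentiles of $X_j$, and define $\tilde{X}_j = k$ if $c_{j,k-1} < X_j \le c_{j,k}$, where $k = 1, \ldots, N + 1$; here, we define $c_{j,0} = -\infty$ and $c_{j,N+1} = +\infty$. Then, similar to (9), we define the marginal utility of $X_j$ at quantile level $\tau$ as

$$\check{\Delta}_j(\tau) = \sum_{k=1}^{N+1} \sum_{b=0}^{1} \frac{\{\check{\pi}_{j,kb}(\tau) - \check{p}_{jk}\, \check{\pi}_{b}(\tau)\}^2}{\check{p}_{jk}\, \check{\pi}_{b}(\tau)},$$

where $\check{p}_{jk} = P(\tilde{X}_j = k)$, $\check{\pi}_{b}(\tau) = P(I\{Y \le Q_\tau(Y)\} = b)$, $\check{\pi}_{j,kb}(\tau) = P(\tilde{X}_j = k,\ I\{Y \le Q_\tau(Y)\} = b)$, for $k = 1, \ldots, N + 1$ and $b \in \{0, 1\}$.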
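The slicing step can be sketched as follows. This is an illustration under assumptions that are not from the paper: the cut points are equally spaced sample percentiles, N cut points yield N + 1 categories, and the simulated response depends on the predictor through a quadratic component. The names `slice_predictor` and `sliced_chi2_index` are hypothetical.

```python
import numpy as np

def slice_predictor(x, n_cuts=3):
    """Code a continuous predictor as 1..n_cuts+1 using equally spaced sample percentiles."""
    levels = np.arange(1, n_cuts + 1) / (n_cuts + 1)
    cuts = np.quantile(x, levels)
    # with right=True, digitize returns i such that cuts[i-1] < x <= cuts[i]
    return np.digitize(x, cuts, right=True) + 1

def sliced_chi2_index(x, y, tau, n_cuts=3):
    """Pearson chi-square index between the sliced predictor and I(y <= tau-quantile of y)."""
    cats = slice_predictor(x, n_cuts)
    below = y <= np.quantile(y, tau)
    index = 0.0
    for k in np.unique(cats):
        in_k = (cats == k)
        p_k = in_k.mean()
        for b in (False, True):
            is_b = (below == b)
            pi_b = is_b.mean()
            if pi_b > 0:
                index += ((in_k & is_b).mean() - p_k * pi_b) ** 2 / (p_k * pi_b)
    return float(index)

rng = np.random.default_rng(4)
n = 5000
x_active = rng.standard_normal(n)
x_null = rng.standard_normal(n)
y = x_active ** 2 + 0.5 * rng.standard_normal(n)   # nonlinear additive component

cats = slice_predictor(x_active)                   # quartile slicing, four categories
add_active = sliced_chi2_index(x_active, y, tau=0.5)
add_null = sliced_chi2_index(x_null, y, tau=0.5)
```

Quartile slicing gives four nearly equal-sized categories, and the index picks up the quadratic dependence even though the linear correlation between the predictor and the response is close to zero.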