Traffic Sign Detection and Recognition Using YOLO Object Detection Algorithm: A Systematic Review

Abstract

1. Introduction

2. Materials and Methods

2.1. Traffic Signs

2.2. YOLO Object Detection Algorithm

2.3. State of the Art

2.4. Systematic Literature Review Methodology

2.5. Research Questions

2.6. Search Strategy

2.6.1. Databases of Digital Library

2.6.2. Timeframe of Study

2.6.3. Keywords

2.6.4. Inclusion and Exclusion Criteria

Inclusion criteria:

- Studies must evaluate traffic sign detection or recognition using the YOLO object detection algorithm.

- Only studies published between 2016 and 2022 are considered.

- The study should be published in a peer-reviewed journal or conference proceedings.

- Preference is given to documents categorized as “Journal” or “Conference” articles.

- The study must be in English.

Exclusion criteria:

- Studies that do not use the YOLO object detection algorithm for traffic sign detection or recognition.

- Research not focused on traffic sign detection or recognition.

- Publications outside the 2016–2022 timeframe.

- Non-peer-reviewed articles and documents.

- Studies published in languages other than English.
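As a hedged illustration, the screening step can be written as a single predicate over study metadata. The sketch assumes a hypothetical `Study` record whose fields (`uses_yolo`, `on_traffic_signs`, `doc_type`, and so on) have already been coded by the reviewers; it is not the tooling used in this review. Because each exclusion criterion is the negation of an inclusion criterion, one conjunction covers both lists. The journal/conference preference is modeled as an ordering rather than a hard filter.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical record for one candidate study; field names are illustrative
# and do not come from any specific bibliographic database.
@dataclass
class Study:
    title: str
    year: int
    language: str
    peer_reviewed: bool
    uses_yolo: bool          # applies YOLO to detection/recognition
    on_traffic_signs: bool   # focuses on traffic sign detection or recognition
    doc_type: str            # e.g. "Journal", "Conference", "Book chapter"

PREFERRED_TYPES = ("Journal", "Conference")

def is_included(s: Study) -> bool:
    """True if the study meets every inclusion criterion (and so no exclusion one)."""
    return (
        s.uses_yolo
        and s.on_traffic_signs
        and 2016 <= s.year <= 2022
        and s.peer_reviewed
        and s.language == "English"
    )

def screen(studies: List[Study]) -> List[Study]:
    """Keep included studies, listing preferred document types first.

    sorted() is stable, so the original order is kept within each group.
    """
    kept = [s for s in studies if is_included(s)]
    return sorted(kept, key=lambda s: s.doc_type not in PREFERRED_TYPES)

candidates = [
    Study("A", 2019, "English", True, True, True, "Book chapter"),
    Study("B", 2021, "English", True, True, True, "Journal"),
    Study("C", 2015, "English", True, True, True, "Journal"),     # outside timeframe
    Study("D", 2020, "Spanish", True, True, True, "Conference"),  # not in English
]
print([s.title for s in screen(candidates)])  # ['B', 'A']
```

Treating the preference as a sort key keeps eligible non-journal items visible to the reviewers instead of silently discarding them.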

2.6.5. Study Selection

2.7. Data Extraction

2.8. Data Synthesis

3. Results Based on RQs

3.1. RQ1 [Applications]: What Are the Main Applications of Traffic Sign Detection and Recognition Using YOLO?

3.2. RQ2 [Datasets]: What Traffic Sign Datasets Are Used to Train, Validate, and Test These Systems?

- The German Traffic Sign Detection Benchmark (GTSDB) and the German Traffic Sign Recognition Benchmark (GTSRB): The GTSDB and GTSRB datasets are two popular resources for traffic sign recognition research. They contain high-quality images of various traffic signs taken in real-world scenarios in Germany. The images cover a wide range of scenery, times of day, and weather conditions, making them suitable for testing the robustness of recognition algorithms. The GTSDB dataset consists of 900 images, split into 600 for training and 300 for validation. The GTSRB dataset is larger, with more than 50,000 images of 43 classes of traffic signs, such as speed limit signs, stop signs, yield signs, and others. Images are also annotated with bounding boxes and class labels. Both datasets are publicly available and have been used in several benchmarking studies [7,13,37,38,40,46,47,50,52,53,55,58,59,60,63,64,65,66,68,69,70,71,72,77,80,82,92,96,101,103,104,106,109,112,114,115,117,118,125,127,129,130,136,137].

- Tsinghua Tencent 100K (TT100K): The TT100K dataset is a large-scale traffic sign benchmark created by Tsinghua University and Tencent. It consists of 100,000 images from Tencent Street View panoramas, which contain 30,000 traffic sign instances. The images vary in lighting and weather conditions, and each traffic sign is annotated with its class label, bounding box, and pixel mask. The dataset is publicly available and supports both traffic sign detection and classification in realistic scenarios [35,38,41,45,51,65,67,80,84,94,95,102,105,108,109,111,113,121,124,128,131,133,134,135].

- Chinese Traffic Sign Dataset (CTSDB and CCTSDB): The CTSDB and CCTSDB datasets are two large-scale collections of traffic sign images for CV research. The CTSDB dataset consists of 10,000 images captured from different scenes and angles, covering a variety of traffic sign types and shapes. The CCTSDB dataset is an extension of the CTSD dataset, with more than 20,000 images containing approximately 40,000 traffic signs. It also includes more challenging scenarios, such as occlusion, illumination variation, and scale change [8,18,36,39,60,65,68,81,82,83,85,87,108,116,130,132,135,136,138].

- Belgium Traffic Sign Detection Benchmark and Belgium Traffic Sign Classification Benchmark (BTSDB and BTCDB): The BTSDB dataset, designed specifically for traffic sign detection in Belgium, comprises 7095 images, divided into 4575 training images and 2520 testing images. Image sizes range from 11 × 10 pixels to 562 × 438 pixels. The Belgium Traffic Sign Classification Benchmark is a dataset of traffic sign images collected from eight cameras mounted on a van; it contains 62 types of traffic signs and is divided into training and testing sets. It is useful for evaluating traffic sign recognition algorithms, which are essential for intelligent transport systems and autonomous driving, and it also provides annotations, background images, and test sequences for further analysis [55,78,101,123].

- Malaysian Traffic Sign Dataset (MTSD): The MTSD includes a variety of traffic sign scenes for traffic sign detection, with 1000 images at different resolutions (FHD, 4K-UHD, and UHD+). It also contains 2056 images of traffic signs, divided into five categories, for recognition [11,118].

- Korea Traffic Sign Dataset (KTSD): This dataset has been used to train and test various deep learning architectures, such as YOLOv3 [57], to detect three different classes of traffic signs: prohibitory, mandatory, and danger. The KTSD contains 3300 images of various traffic signs, captured from different roads throughout South Korea. These images feature traffic signs of varying sizes, providing a diverse and comprehensive dataset for traffic sign detection and recognition research [57,59,64].

- Berkeley DeepDrive (BDD100K): The Berkeley DeepDrive (BDD) project has released a large-scale, diverse driving video dataset called BDD100K. It contains 100,000 videos with rich annotations for evaluating the progress of image recognition algorithms for autonomous driving. The dataset is available for research purposes and can be downloaded from the BDD website (https://bdd-data.berkeley.edu/, accessed on 12 April 2023). The images are divided into two sets: 70% for training and the remaining 30% for validation [55,90].

- Thai (Thailand) Traffic Sign Dataset (TTSD): The data were collected in rural areas of Maha Sarakham and Kalasin Provinces in the Kingdom of Thailand. The dataset covers 50 distinct classes of traffic signs, each with 200 unique instances, and comprises a total of 9357 images [101,126].

- Swedish Traffic Sign Dataset (STSD): This public dataset comprises 20,000 images, 20% of which are labeled, and contains 3488 traffic signs from Sweden [104].

- DFG Traffic Sign Dataset (DFG): The DFG dataset comprises approximately 7000 high-definition traffic sign images captured on highways in Slovenia. For training and evaluation, it is divided into two subsets: 5254 images for training and the remaining 1703 images for validation. It contains 13,239 precisely annotated instances in the form of polygons, each larger than 30 pixels, and a further 4359 instances smaller than 30 pixels in width with less precise bounding-box annotations [12].

- Taiwan Traffic Sign Dataset (TWTSD): The TWTSD dataset comprises 900 prohibitory signs from Taiwan, divided into training (70%) and validation (30%) subsets [75].

- Taiwan Traffic Sign (TWSintetic): The TWSintetic dataset is a collection of 900 traffic sign images from Taiwan that has been expanded with synthetic images generated by generative adversarial networks (GANs) [9].

- Belgium Traffic Signs (KUL): The KUL dataset encompasses over 10,000 images of traffic signs from the Flanders region in Belgium, categorized into more than 100 distinct classes [89].

- Chinese Traffic Sign Detection Benchmark (CSUST): The CSUST dataset comprises over 15,000 images and is continuously updated to incorporate new data [8].

- Foggy Road Image Database (FRIDA): FRIDA contains 90 synthetic images from 18 scenes depicting various urban road settings. Its extension, FRIDA2, contains 330 images derived from 66 road scenes. Each fog-free image is accompanied by four counterparts with different types of fog and by a depth map. The fog types are uniform fog, heterogeneous fog, foggy haze, and heterogeneous foggy haze [71,114].

- Foggy ROad Sign Images (FROSI): FROSI is a database of synthetic images designed for systematically evaluating the performance of road sign detectors in foggy conditions. It contains 504 original images with 1620 road signs (speed signs, stop signs, and pedestrian crossing signs) placed at various distances, together with ground truth [71,114].

- MarcTR: This dataset contains seven traffic sign classes, collected using a ZED stereo camera mounted on top of a Racecar mini autonomous car [79].

- Turkey Traffic Sign Dataset: The Turkey Traffic Sign Dataset is tailored to the Turkish road environment and supports the development of traffic and road safety technologies. It comprises approximately 2500 images containing a diverse range of traffic signs, pedestrians, cyclists, and vehicles, all captured under real-world conditions in Turkey [77].

- Vietnamese Traffic Sign Dataset: This comprehensive dataset encompasses 144 classes of traffic signs found in Vietnam, categorized into four distinct groups for ease of analysis and application. These include 40 prohibitory or restrictive signs, 47 warning signs, 10 mandatory signs, and 47 indication signs, providing a detailed overview of the country’s traffic sign system [76].

- Croatia Traffic Sign Dataset: This dataset consists of 28 video sequences recorded at 30 FPS in the urban traffic of the city of Osijek, Croatia [10].

- Mexican Traffic Sign Dataset: The dataset consists of 1284 RGB images, featuring a total of 1426 traffic signs categorized into 11 distinct classes. These images capture traffic signs from a variety of perspectives, sizes, and lighting conditions, ensuring a diverse and comprehensive collection. The traffic sign images were sourced from a range of locations including avenues, roadways, parks, green areas, parking lots, and malls in Ciudad Juárez, Chihuahua, and Monterrey, Nuevo Leon, Mexico, providing a broad representation of the region’s signage [43].

- WHUTCTSD: A more recent dataset with five categories of Chinese traffic signs: prohibitory signs, guide signs, mandatory signs, danger warning signs, and tourist signs. The data were collected with a camera during different time periods. It consists of 2700 images extracted from videos recorded in Wuhan, Hubei, China [62].

- Bangladesh Road Sign 2021 (BDRS2021): This dataset consists of 16 classes, each with 168 images of Bangladesh road signs (2688 images in total) [69]. It captures the specific traffic sign environment of Bangladesh, including its urban, rural, and geographically varied landscapes.

- New Zealand Traffic Sign 3K (NZ-TS3K): This dataset is a specialized collection focused on traffic sign recognition in New Zealand [70]. It features over 3000 images, showcasing a wide array of traffic signs commonly found across the country. These images are captured in high resolution (1080 by 1440 pixels), providing clear and detailed visuals essential for accurate recognition and analysis. The dataset is categorized into multiple classes, each representing a different type of traffic sign. These include Stop (236 samples), Keep Left (536 samples), Road Diverges (505 samples), Road Bump (619 samples), Crosswalk Ahead (636 samples), Give Way at Roundabout (533 samples), and Roundabout Ahead (480 samples), offering a diverse range of signs commonly seen on Auckland’s roads.

- Mapillary Traffic Sign Dataset (MapiTSD): The Mapillary Traffic Sign Dataset is an expansive and diverse collection of traffic sign images, sourced globally from the Mapillary platform’s street-level imagery. It features millions of images from various countries, each annotated with automatically detected traffic signs. The dataset is characterized by wide geographic coverage and diversity in environmental conditions, including different lighting, weather, and sign types. Because it is continuously updated, it remains a current resource for training and validating traffic sign recognition algorithms [38].

- Specialized Research Datasets: These datasets consist of traffic sign data compiled by various authors. Generally, they lack detailed public information and are not openly accessible. This category includes datasets from a variety of countries: South Korea [91,103], India [49,99], Malaysia [97], Indonesia [98], Slovenia [54], Argentina [139], Taiwan [74,107,140], Bangladesh [69], and Canada [61]. Each dataset is tailored to its respective country, reflecting the specific traffic signs and road conditions found there.

- Unknown or General Databases (Unknown): This category comprises datasets for which no specific information about their traffic content is reported [22,42,64,73,86,88,93,99,100,110,119,122,141], general-purpose databases such as MSCOCO [63,73,99] and KITTI [132], and datasets downloaded from repositories such as Kaggle [42].
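Most of the datasets above annotate each sign with a pixel-space bounding box, whereas YOLO implementations such as Darknet and Ultralytics expect one text file per image with lines of the form `class x_center y_center width height`, normalized to [0, 1] by the image size. The sketch below converts a GTSDB-style ground-truth listing. It assumes semicolon-separated lines `filename;left;top;right;bottom;class_id` and a fixed 1360 × 800 frame size; both assumptions should be checked against each dataset's own documentation. The function names are hypothetical and the sample values are made up for illustration.

```python
from collections import defaultdict
from pathlib import PurePath
from typing import Dict, List

def to_yolo(left: float, top: float, right: float, bottom: float,
            img_w: int, img_h: int) -> List[float]:
    """Pixel corners -> YOLO (x_center, y_center, width, height), each in [0, 1]."""
    return [
        (left + right) / 2 / img_w,
        (top + bottom) / 2 / img_h,
        (right - left) / img_w,
        (bottom - top) / img_h,
    ]

def gt_to_yolo_labels(gt_text: str, img_w: int = 1360,
                      img_h: int = 800) -> Dict[str, List[str]]:
    """Group boxes by image and emit one 'class xc yc w h' line per box.

    Assumes every line reads 'filename;left;top;right;bottom;class_id'
    and that all images share the same (img_w, img_h) size.
    """
    labels: Dict[str, List[str]] = defaultdict(list)
    for line in gt_text.splitlines():
        if not line.strip():
            continue  # tolerate blank lines
        name, left, top, right, bottom, cls = line.strip().split(";")
        coords = to_yolo(float(left), float(top), float(right), float(bottom),
                         img_w, img_h)
        # Label files are conventionally named after the image stem.
        labels[PurePath(name).stem + ".txt"].append(
            f"{int(cls)} " + " ".join(f"{v:.6f}" for v in coords))
    return dict(labels)

# Illustrative (made-up) annotations for a single image.
sample = "00000.ppm;774;411;815;446;11\n00000.ppm;983;388;1024;432;40\n"
labels = gt_to_yolo_labels(sample)
print(labels["00000.txt"][0])  # 11 0.584191 0.535625 0.030147 0.043750
```

Datasets whose images differ in size (for example, MTSD with FHD, 4K-UHD, and UHD+ frames) would need the per-image width and height instead of fixed defaults.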
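BDD100K and TWTSD are described above as split 70%/30% into training and validation subsets. When a dataset does not ship an official split (GTSDB, for instance, comes with its own 600/300 division), a reproducible split needs a fixed random seed so that results can be compared across runs. The following is a minimal sketch; the function name, default seed, and file names are hypothetical.

```python
import random
from typing import List, Sequence, Tuple

def split_dataset(items: Sequence[str], train_pct: int = 70,
                  seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle with a fixed seed and split into (train, validation).

    Integer arithmetic keeps the split size exact (900 * 70 // 100 = 630).
    """
    shuffled = list(items)              # copy; the input stays untouched
    random.Random(seed).shuffle(shuffled)  # local RNG, global state unaffected
    n_train = len(shuffled) * train_pct // 100
    return shuffled[:n_train], shuffled[n_train:]

# e.g. a 900-image dataset such as TWTSD
images = [f"img_{i:04d}.jpg" for i in range(900)]
train, val = split_dataset(images)
print(len(train), len(val))  # 630 270
```

For datasets derived from video, such as the Croatian sequences, splitting by sequence rather than by individual frame keeps near-identical consecutive frames from appearing in both subsets and inflating validation scores.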
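FRIDA and FROSI pair clear road images with synthetic fog. A standard physical model for synthesizing homogeneous fog from a clear image and its depth map is Koschmieder's law, I = J * t + A * (1 - t) with transmission t = exp(-beta * d). The sketch below assumes this model for the uniform-fog case only. It is not claimed to reproduce the exact generation procedure or parameters behind FRIDA or FROSI, and the heterogeneous and haze variants (which let beta vary across the image) are omitted. Images are plain nested lists of grayscale intensities in [0, 1] to keep the example dependency-free, and the function names are hypothetical.

```python
import math
from typing import List

# Grayscale image as rows of intensities in [0, 1]; depth map in meters.
Image = List[List[float]]

def beta_from_visibility(visibility_m: float, contrast_threshold: float = 0.05) -> float:
    """Extinction coefficient (1/m) for a meteorological visibility.

    Uses the common 5% contrast-threshold convention: beta = -ln(0.05) / V, about 3 / V.
    """
    return -math.log(contrast_threshold) / visibility_m

def add_uniform_fog(clear: Image, depth: Image, beta: float,
                    airlight: float = 1.0) -> Image:
    """Koschmieder's law per pixel: I = J * t + A * (1 - t), t = exp(-beta * d).

    J is the clear intensity, d the scene depth, beta the spatially constant
    extinction coefficient and A the atmospheric light intensity.
    """
    foggy: Image = []
    for clear_row, depth_row in zip(clear, depth):
        row = []
        for j, d in zip(clear_row, depth_row):
            t = math.exp(-beta * d)  # fraction of scene radiance that survives
            row.append(j * t + airlight * (1.0 - t))
        foggy.append(row)
    return foggy

clear = [[0.2, 0.8], [0.5, 0.1]]
depth = [[0.0, 50.0], [100.0, 1000.0]]
beta = beta_from_visibility(100.0)  # roughly 0.03 per meter
foggy = add_uniform_fog(clear, depth, beta)
```

Near pixels keep their clear value, while distant pixels converge to the airlight, which is why small, far-away signs are the first to become undetectable in such benchmarks.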

3.3. RQ3 [Metrics]: What Metrics Are Used to Measure the Quality of Object Detection in the Context of Traffic Sign Detection and Recognition Using YOLO?

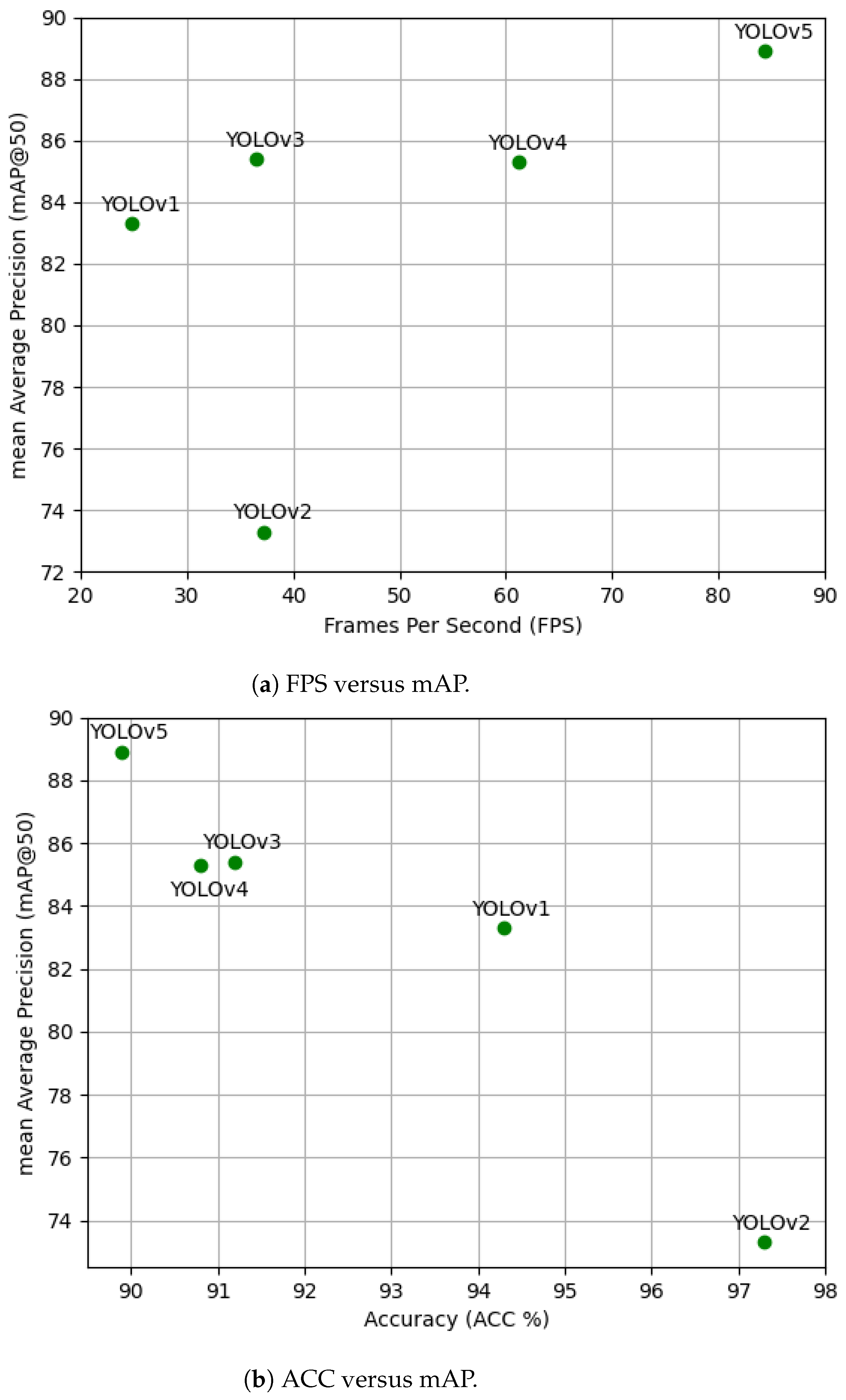

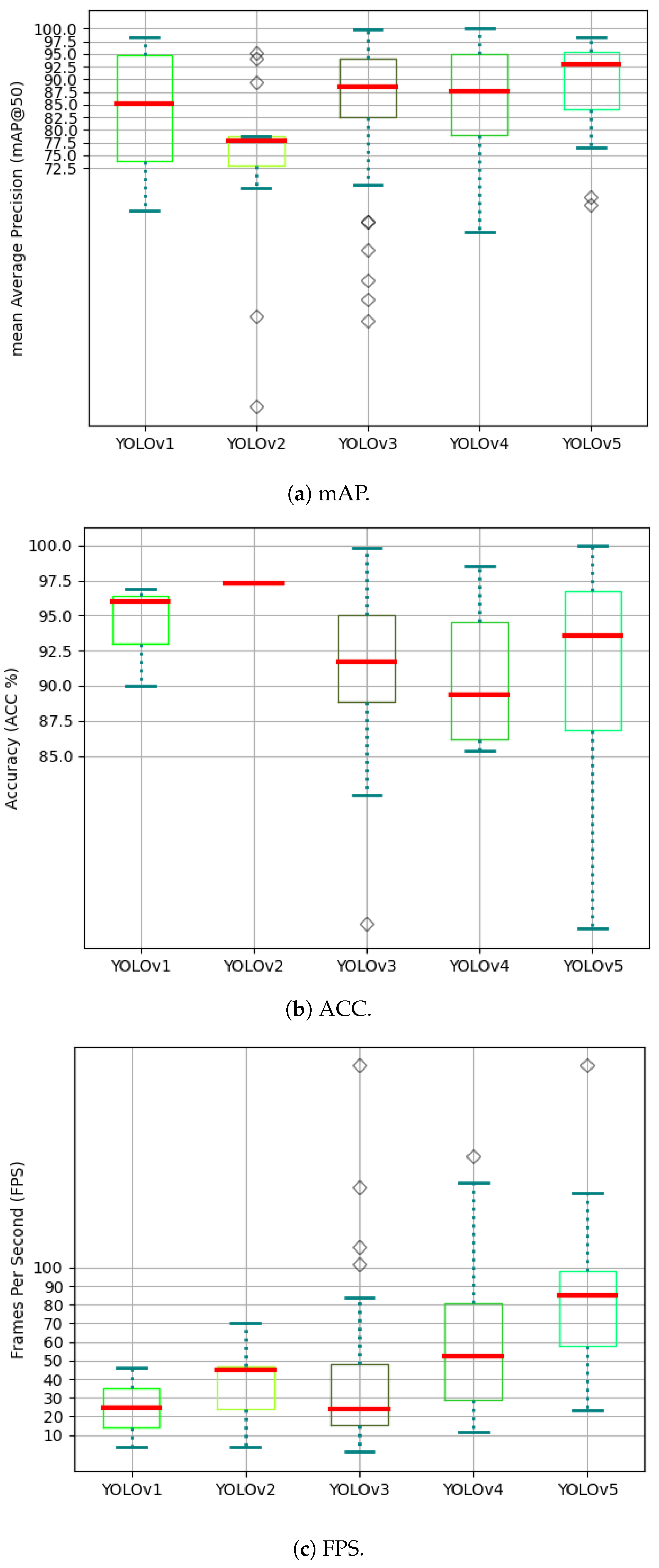

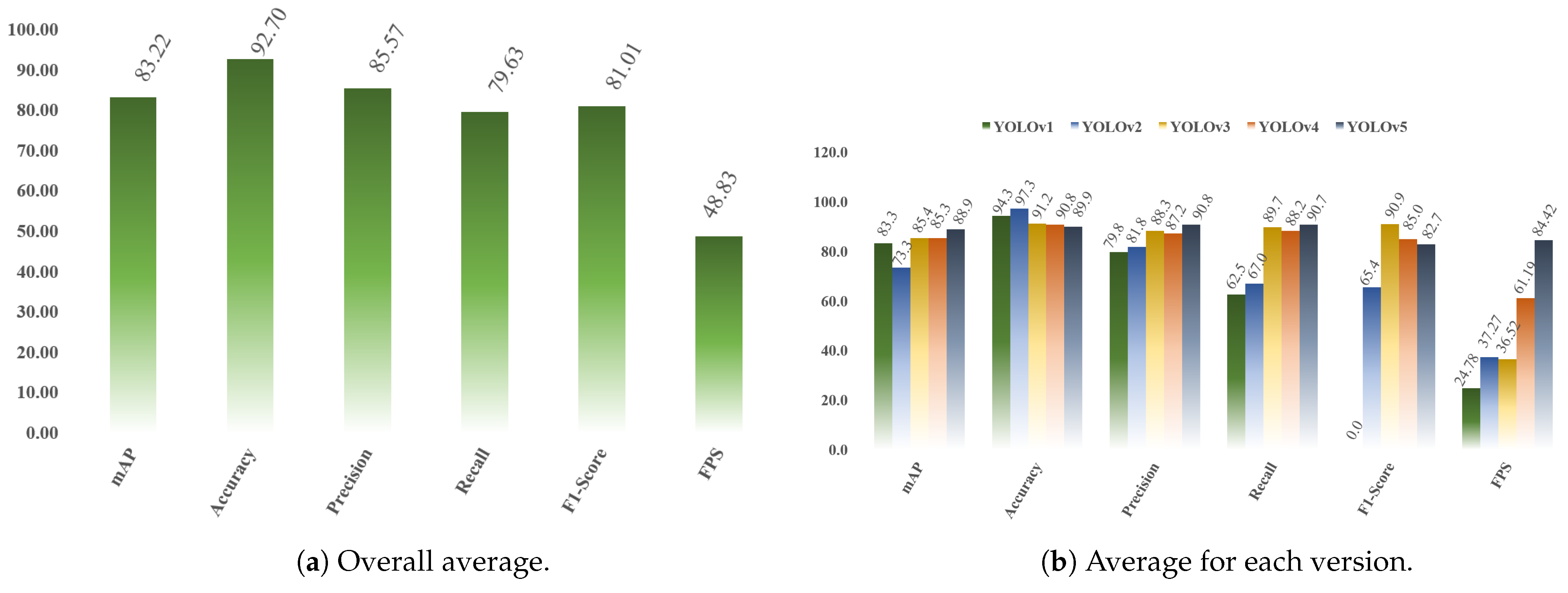
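The metrics abbreviated in this review (IoU, F1, AP, and mAP) build on one another. The sketch below shows a common way to compute them for a single class. The IoU threshold of 0.5, the greedy matching rule, and all-point interpolated AP (as used in PASCAL VOC 2010 and later) are assumptions; individual studies use different thresholds (for example, COCO-style averaging over IoU 0.5:0.95) and interpolation schemes, which is one reason reported values are not always directly comparable. Function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) pixel corners

def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def match_detections(dets: Sequence[Tuple[float, Box]], gts: Sequence[Box],
                     thr: float = 0.5) -> List[Tuple[float, bool]]:
    """Greedily label (score, box) detections as TP/FP for one class.

    Detections are visited by descending confidence; each ground-truth box is
    matched at most once, so duplicate detections count as false positives.
    """
    used = [False] * len(gts)
    results = []
    for score, box in sorted(dets, key=lambda d: d[0], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, g in enumerate(gts):
            overlap = iou(box, g)
            if not used[j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        is_tp = best_j >= 0 and best_iou >= thr
        if is_tp:
            used[best_j] = True
        results.append((score, is_tp))
    return results

def precision_recall_f1(matches: Sequence[Tuple[float, bool]], n_gt: int):
    tp = sum(1 for _, ok in matches if ok)
    fp, fn = len(matches) - tp, n_gt - tp
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    return p, r, (2 * p * r / (p + r) if p + r else 0.0)

def average_precision(matches: Sequence[Tuple[float, bool]], n_gt: int) -> float:
    """Area under the precision-recall curve with all-point interpolation."""
    if n_gt == 0:
        return 0.0
    tp = fp = 0
    recalls, precisions = [], []
    for _, ok in sorted(matches, key=lambda m: m[0], reverse=True):
        if ok:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / n_gt)
        precisions.append(tp / (tp + fp))
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # precision envelope, right to left
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))

def mean_average_precision(ap_per_class: Sequence[float]) -> float:
    return sum(ap_per_class) / len(ap_per_class) if ap_per_class else 0.0

gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (21, 21, 31, 31))]
matches = match_detections(dets, gts)
print(round(average_precision(matches, len(gts)), 4))  # 0.8333
```

In a full evaluation, matching is done per image and the resulting (score, TP/FP) pairs are pooled across the test set before computing AP for each class; mAP is then the mean of the per-class AP values.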

3.4. Comparing Metrics among Different Versions of YOLO

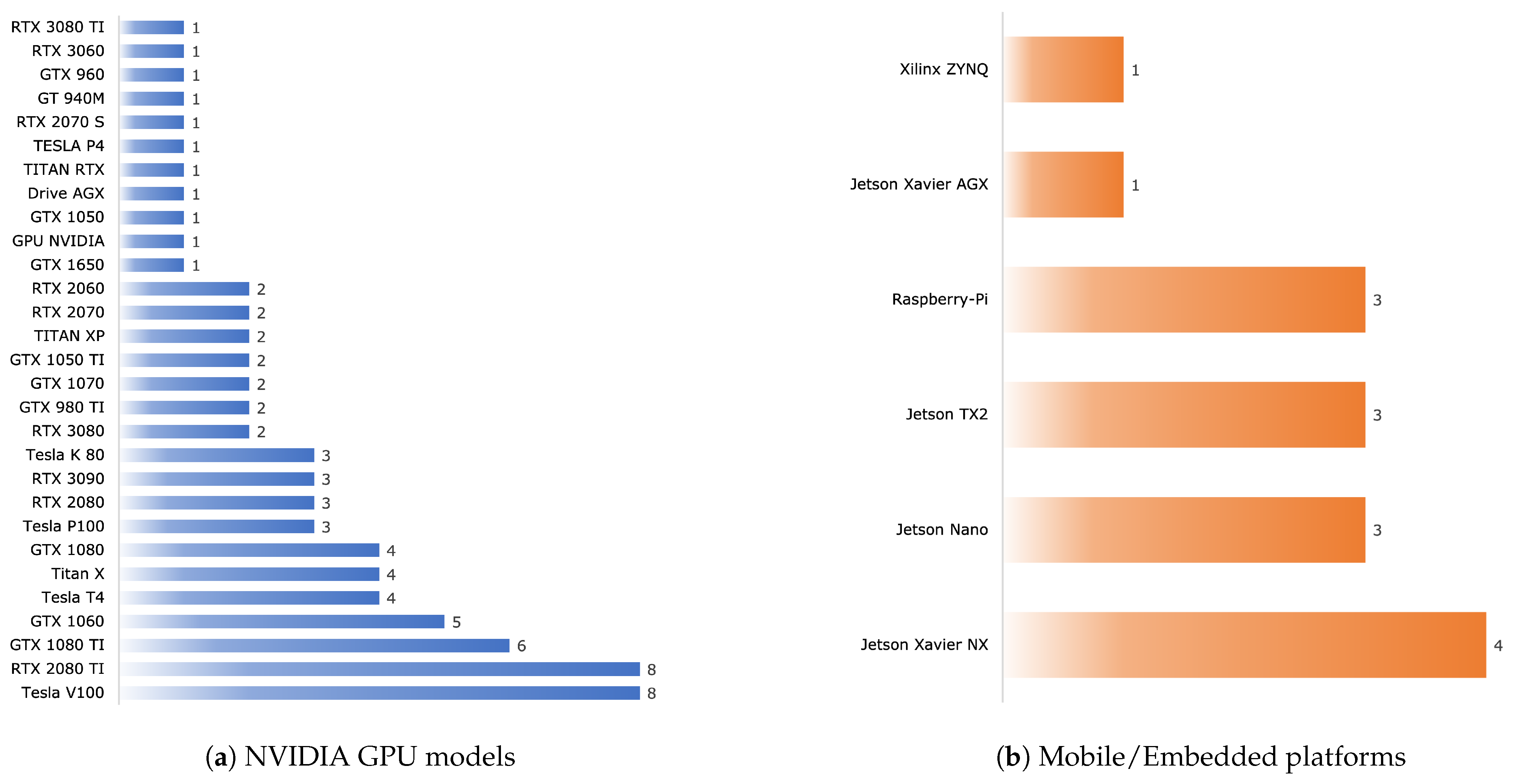

3.5. RQ4 [Hardware]: What Hardware Is Used to Implement Traffic Sign Recognition and Detection Systems Based on YOLO?

3.6. RQ5 [Challenges]: What Are the Challenges Encountered in the Detection and Recognition of Traffic Signs Using YOLO?

3.7. Discussion

3.8. Possible Threats to SLR Validation

3.9. Construct Validity Threat

3.9.1. The Influence of Version Bias on Metrics

3.9.2. Geographic Diversity in Sign Datasets

3.9.3. Hardware Discrepancies

3.9.4. Limitations of Statistical Metrics

3.9.5. Threats to Internal Validation

3.9.6. Threats to External Validation

3.9.7. Threats to the Validation of the Conclusions

3.10. Future Research Directions

- What is the performance of traffic sign detection and recognition systems under extreme weather conditions?

- How could datasets for the development of traffic sign detection and recognition systems be standardized?

- What is the best object detector to be used in traffic sign detection and recognition systems?

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| WHO | World Health Organization |
| RTA | Road Traffic Accidents |
| ADAS | Advanced Driver Assistance Systems |
| DL | Deep Learning |
| CV | Computer Vision |
| YOLO | You Only Look Once |
| GPU | Graphics Processing Unit |
| SLR | Systematic Literature Review |
| RQ | Research Question |
| FPS | Frames Per Second |
| ACC | Accuracy |
| AP | Average Precision |
| mAP | Mean Average Precision |
| F1 | F1 Score |
| IoU | Intersection over Union |
| WoS | Web of Science |

References

- Yu, G.; Wong, P.K.; Zhao, J.; Mei, X.; Lin, C.; Xie, Z. Design of an Acceleration Redistribution Cooperative Strategy for Collision Avoidance System Based on Dynamic Weighted Multi-Objective Model Predictive Controller. IEEE Trans. Intell. Transp. Syst. 2022, 23, 5006–5018. [Google Scholar] [CrossRef]

- World Health Organization (WHO). Global Status Report on Road Safety 2018. Available online: https://www.who.int/publications/i/item/WHO-NMH-NVI-18.20/ (accessed on 1 September 2022).

- Statista. Road Accidents in the United States-Statistics & Facts. Available online: https://www.statista.com/topics/3708/road-accidents-in-the-us/#dossierKeyfigures (accessed on 2 September 2022).

- European Parliament. Road Fatality Statistics in the EU. Available online: https://www.europarl.europa.eu/news/en/headlines/society/20190410STO36615/road-fatality-statistics-in-the-eu-infographic (accessed on 2 September 2022).

- Liu, C.; Li, S.; Chang, F.; Wang, Y. Machine Vision Based Traffic Sign Detection Methods: Review, Analyses and Perspectives. IEEE Access 2019, 7, 86578–86596. [Google Scholar] [CrossRef]

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of YOLO algorithm developments. Procedia Comput. Sci. 2022, 199, 1066–1073. [Google Scholar] [CrossRef]

- Rajendran, S.P.; Shine, L.; Pradeep, R.; Vijayaraghavan, S. Fast and accurate traffic sign recognition for self driving cars using retinanet based detector. In Proceedings of the 2019 International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 17–19 July 2019; pp. 784–790. [Google Scholar]

- Yang, W.; Zhang, W. Real-time traffic signs detection based on YOLO network model. In Proceedings of the 2020 International Conference on Cyber-Enabled Distributed Computing and Knowledge Discovery (CyberC), Chongqing, China, 29–30 October 2020; pp. 354–357. [Google Scholar]

- Dewi, C.; Chen, R.C.; Liu, Y.T.; Jiang, X.; Hartomo, K.D. YOLO V4 for advanced traffic sign recognition with synthetic training data generated by various GAN. IEEE Access 2021, 9, 97228–97242. [Google Scholar] [CrossRef]

- Mijić, D.; Brisinello, M.; Vranješ, M.; Grbić, R. Traffic Sign Detection Using YOLOv3. In Proceedings of the 2020 IEEE 10th International Conference on Consumer Electronics (ICCE-Berlin), Berlin, Germany, 9–11 November 2020; pp. 1–6. [Google Scholar]

- Mohd-Isa, W.N.; Abdullah, M.S.; Sarzil, M.; Abdullah, J.; Ali, A.; Hashim, N. Detection of Malaysian Traffic Signs via Modified YOLOv3 Algorithm. In Proceedings of the 2020 International Conference on Data Analytics for Business and Industry: Way Towards a Sustainable Economy (ICDABI), Sakheer, Bahrain, 26–27 October 2020; pp. 1–5. [Google Scholar]

- Avramović, A.; Sluga, D.; Tabernik, D.; Skočaj, D.; Stojnić, V.; Ilc, N. Neural-network-based traffic sign detection and recognition in high-definition images using region focusing and parallelization. IEEE Access 2020, 8, 189855–189868. [Google Scholar] [CrossRef]

- Gu, Y.; Si, B. A novel lightweight real-time traffic sign detection integration framework based on YOLOv4. Entropy 2022, 24, 487. [Google Scholar] [CrossRef] [PubMed]

- Expertos en Siniestros. Significado de las Señales de Tráfico en España. Available online: http://www.expertoensiniestros.es/significado-senales-de-trafico-en-espana/ (accessed on 27 December 2019).

- Gupta, A.; Anpalagan, A.; Guan, L.; Khwaja, A.S. Deep learning for object detection and scene perception in self-driving cars: Survey, challenges, and open issues. Array 2021, 10, 100057. [Google Scholar] [CrossRef]

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 2022, 82, 9243–9275. [Google Scholar] [CrossRef]

- Boukerche, A.; Hou, Z. Object Detection Using Deep Learning Methods in Traffic Scenarios. ACM Comput. Surv. 2022, 54, 1–35. [Google Scholar] [CrossRef]

- Ayachi, R.; Afif, M.; Said, Y.; Ben Abdelali, A. An edge implementation of a traffic sign detection system for Advanced Driver Assistance Systems. Int. J. Intell. Robot. Appl. 2022, 6, 207–215. [Google Scholar] [CrossRef]

- Gámez-Serna, C.; Ruichek, Y. Classification of Traffic Signs: The European Dataset. IEEE Access 2018, 6, 78136–78148. [Google Scholar] [CrossRef]

- Almuhajri, M.; Suen, C. Intensive Survey About Road Traffic Signs Preprocessing, Detection and Recognition. In Advances in Data Science, Cyber Security and IT Applications; Alfaries, A., Mengash, H., Yasar, A., Shakshuki, E., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 275–289. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Liu, C.; Tao, Y.; Liang, J.; Li, K.; Chen, Y. Object detection based on YOLO network. In Proceedings of the 2018 IEEE 4th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 14–16 December 2018; pp. 799–803. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Redmon, J. Darknet: Open Source Neural Networks in C. Available online: https://pjreddie.com/darknet/ (accessed on 15 May 2023).

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Wang, C.Y. Scaled-YOLOv4: Scaling Cross Stage Partial Network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Kasper-Eulaers, M.; Hahn, N.; Berger, S.; Sebulonsen, T.; Myrland, Ø.; Kummervold, P.E. Detecting heavy goods vehicles in rest areas in winter conditions using YOLOv5. Algorithms 2021, 14, 114. [Google Scholar] [CrossRef]

- Wali, S.B.; Abdullah, M.A.; Hannan, M.A.; Hussain, A.; Samad, S.A.; Ker, P.J.; Mansor, M.B. Vision-Based Traffic Sign Detection and Recognition Systems: Current Trends and Challenges. Sensors 2019, 19, 2093. [Google Scholar] [CrossRef]

- Borrego-Carazo, J.; Castells-Rufas, D.; Biempica, E.; Carrabina, J. Resource-Constrained Machine Learning for ADAS: A Systematic Review. IEEE Access 2020, 8, 40573–40598. [Google Scholar] [CrossRef]

- Muhammad, K.; Ullah, A.; Lloret, J.; Del Ser, J.; de Albuquerque, V.H.C. Deep Learning for Safe Autonomous Driving: Current Challenges and Future Directions. IEEE Trans. Intell. Transp. Syst. 2020, 22, 4316–4336. [Google Scholar] [CrossRef]

- Higgins, J.; Thomas, J.; Chandler, J.; Cumpston, M.; Li, T.; Page, M.; Welch, V. Cochrane Handbook for Systematic Reviews of Interventions Version 6.3 (Updated February 2022); Technical Report; Cochrane: London, UK, 2022. [Google Scholar]

- Cardoso Ermel, A.P.; Lacerda, D.P.; Morandi, M.I.W.M.; Gauss, L. Literature Reviews; Springer International Publishing: Cham, Switzerland, 2021. [Google Scholar] [CrossRef]

- Mengist, W.; Soromessa, T.; Legese, G. Method for conducting systematic literature review and meta-analysis for environmental science research. MethodsX 2020, 7, 100777. [Google Scholar] [CrossRef]

- Tarachandy, S.M.; Aravinth, J. Enhanced Local Features using Ridgelet Filters for Traffic Sign Detection and Recognition. In Proceedings of the 2021 Second International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 4–6 August 2021; pp. 1150–1156. [Google Scholar]

- Yan, W.; Yang, G.; Zhang, W.; Liu, L. Traffic Sign Recognition using YOLOv4. In Proceedings of the 2022 7th International Conference on Intelligent Computing and Signal Processing (ICSP), Xi’an, China, 15–17 April 2022; pp. 909–913. [Google Scholar]

- Kankaria, R.V.; Jain, S.K.; Bide, P.; Kothari, A.; Agarwal, H. Alert system for drivers based on traffic signs, lights and pedestrian detection. In Proceedings of the 2020 International Conference for Emerging Technology (INCET), Belgaum, India, 5–7 June 2020; pp. 1–5. [Google Scholar]

- Ren, X.; Zhang, W.; Wu, M.; Li, C.; Wang, X. Meta-YOLO: Meta-Learning for Few-Shot Traffic Sign Detection via Decoupling Dependencies. Appl. Sci. 2022, 12, 5543. [Google Scholar] [CrossRef]

- Liu, Y.; Shi, G.; Li, Y.; Zhao, Z. M-YOLO: Traffic sign detection algorithm applicable to complex scenarios. Symmetry 2022, 14, 952. [Google Scholar] [CrossRef]

- Li, M.; Zhang, L.; Li, L.; Song, W. YOLO-based traffic sign recognition algorithm. Comput. Intell. Neurosci. 2022, 2022, 2682921. [Google Scholar] [CrossRef] [PubMed]

- Yao, Z.; Song, X.; Zhao, L.; Yin, Y. Real-time method for traffic sign detection and recognition based on YOLOv3-tiny with multiscale feature extraction. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2021, 235, 1978–1991. [Google Scholar] [CrossRef]

- Vasanthi, P.; Mohan, L. YOLOV5 and Morphological Hat Transform Based Traffic Sign Recognition. In Rising Threats in Expert Applications and Solutions; Springer: Berlin/Heidelberg, Germany, 2022; pp. 579–589. [Google Scholar]

- Rodríguez, R.C.; Carlos, C.M.; Villegas, O.O.V.; Sánchez, V.G.C.; Domínguez, H.d.J.O. Mexican traffic sign detection and classification using deep learning. Expert Syst. Appl. 2022, 202, 117247. [Google Scholar] [CrossRef]

- Nandavar, S.; Kaye, S.A.; Senserrick, T.; Oviedo-Trespalacios, O. Exploring the factors influencing acquisition and learning experiences of cars fitted with advanced driver assistance systems (ADAS). Transp. Res. Part F Traffic Psychol. Behav. 2023, 94, 341–352. [Google Scholar] [CrossRef]

- Yang, T.; Tong, C. Small Traffic Sign Detector in Real-time Based on Improved YOLO-v4. In Proceedings of the 2021 IEEE 23rd Int Conf on High Performance Computing & Communications; 7th Int Conf on Data Science & Systems; 19th Int Conf on Smart City; 7th Int Conf on Dependability in Sensor, Cloud & Big Data Systems & Application (HPCC/DSS/SmartCity/DependSys), Haikou, China, 20–22 December 2021; pp. 1318–1324. [Google Scholar]

- William, M.M.; Zaki, P.S.; Soliman, B.K.; Alexsan, K.G.; Mansour, M.; El-Moursy, M.; Khalil, K. Traffic signs detection and recognition system using deep learning. In Proceedings of the 2019 Ninth International Conference on Intelligent Computing and Information Systems (ICICIS), Cairo, Egypt, 8–10 December 2019; pp. 160–166. [Google Scholar]

- Hsieh, M.H.; Zhao, Q. A Study on Two-Stage Approach for Traffic Sign Recognition: Few-to-Many or Many-to-Many? In Proceedings of the 2020 International Conference on Machine Learning and Cybernetics (ICMLC), Adelaide, Australia, 2 December 2020; pp. 76–81. [Google Scholar]

- Gopal, R.; Kuinthodu, S.; Balamurugan, M.; Atique, M. Tiny Object Detection: Comparative Study using Single Stage CNN Object Detectors. In Proceedings of the 2019 IEEE International WIE Conference on Electrical and Computer Engineering (WIECON-ECE), Bangalore, India, 15–16 November 2019; pp. 1–3. [Google Scholar]

- Kavya, R.; Hussain, K.M.Z.; Nayana, N.; Savanur, S.S.; Arpitha, M.; Srikantaswamy, R. Lane Detection and Traffic Sign Recognition from Continuous Driving Scenes using Deep Neural Networks. In Proceedings of the 2021 2nd International Conference on Smart Electronics and Communication (ICOSEC), Trichy, India, 7–9 December 2021; pp. 1461–1467. [Google Scholar]

- Rani, S.J.; Eswaran, S.U.; Mukund, A.V.; Vidul, M. Driver Assistant System using YOLO V3 and VGGNET. In Proceedings of the 2022 International Conference on Inventive Computation Technologies (ICICT), Lalitpur, Nepal, 20–22 July 2022; pp. 372–376. [Google Scholar]

- Zhang, H.; Qin, L.; Li, J.; Guo, Y.; Zhou, Y.; Zhang, J.; Xu, Z. Real-time detection method for small traffic signs based on YOLOv3. IEEE Access 2020, 8, 64145–64156. [Google Scholar] [CrossRef]

- Garg, P.; Chowdhury, D.R.; More, V.N. Traffic sign recognition and classification using YOLOv2, Faster R-CNN and SSD. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019; pp. 1–5. [Google Scholar]

- Devyatkin, A.; Filatov, D. Neural network traffic signs detection system development. In Proceedings of the 2019 XXII International Conference on Soft Computing and Measurements (SCM), St. Petersburg, Russia, 23–25 May 2019; pp. 125–128. [Google Scholar]

- Ruta, A.; Li, Y.; Liu, X. Real-time traffic sign recognition from video by class-specific discriminative features. Pattern Recognit. 2010, 43, 416–430. [Google Scholar] [CrossRef]

- Novak, B.; Ilić, V.; Pavković, B. YOLOv3 Algorithm with additional convolutional neural network trained for traffic sign recognition. In Proceedings of the 2020 Zooming Innovation in Consumer Technologies Conference (ZINC), Novi Sad, Serbia, 26–27 May 2020; pp. 165–168. [Google Scholar]

- Arief, R.W.; Nurtanio, I.; Samman, F.A. Traffic Signs Detection and Recognition System Using the YOLOv4 Algorithm. In Proceedings of the 2021 International Conference on Artificial Intelligence and Mechatronics Systems (AIMS), Bandung, Indonesia, 28–30 April 2021; pp. 1–6. [Google Scholar]

- Manocha, P.; Kumar, A.; Khan, J.A.; Shin, H. Korean traffic sign detection using deep learning. In Proceedings of the 2018 International SoC Design Conference (ISOCC), Daegu, Republic of Korea, 12–15 November 2018; pp. 247–248. [Google Scholar]

- Rajendran, S.P.; Shine, L.; Pradeep, R.; Vijayaraghavan, S. Real-time traffic sign recognition using YOLOv3 based detector. In Proceedings of the 2019 10th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kanpur, India, 6–8 July 2019; pp. 1–7. [Google Scholar]

- Khan, J.A.; Yeo, D.; Shin, H. New dark area sensitive tone mapping for deep learning based traffic sign recognition. Sensors 2018, 18, 3776. [Google Scholar] [CrossRef]

- Zhang, J.; Huang, M.; Jin, X.; Li, X. A real-time chinese traffic sign detection algorithm based on modified YOLOv2. Algorithms 2017, 10, 127. [Google Scholar] [CrossRef]

- Seraj, M.; Rosales-Castellanos, A.; Shalkamy, A.; El-Basyouny, K.; Qiu, T.Z. The implications of weather and reflectivity variations on automatic traffic sign recognition performance. J. Adv. Transp. 2021, 2021, 5513552. [Google Scholar] [CrossRef]

- Du, L.; Ji, J.; Pei, Z.; Zheng, H.; Fu, S.; Kong, H.; Chen, W. Improved detection method for traffic signs in real scenes applied in intelligent and connected vehicles. IET Intell. Transp. Syst. 2020, 14, 1555–1564. [Google Scholar] [CrossRef]

- Ren, K.; Huang, L.; Fan, C.; Han, H.; Deng, H. Real-time traffic sign detection network using DS-DetNet and lite fusion FPN. J. Real-Time Image Process. 2021, 18, 2181–2191. [Google Scholar] [CrossRef]

- Khan, J.A.; Chen, Y.; Rehman, Y.; Shin, H. Performance enhancement techniques for traffic sign recognition using a deep neural network. Multimed. Tools Appl. 2020, 79, 20545–20560. [Google Scholar] [CrossRef]

- Zhang, J.; Ye, Z.; Jin, X.; Wang, J.; Zhang, J. Real-time traffic sign detection based on multiscale attention and spatial information aggregator. J. Real-Time Image Process. 2022, 19, 1155–1167. [Google Scholar] [CrossRef]

- Karthika, R.; Parameswaran, L. A Novel Convolutional Neural Network Based Architecture for Object Detection and Recognition with an Application to Traffic Sign Recognition from Road Scenes. Pattern Recognit. Image Anal. 2022, 32, 351–362. [Google Scholar] [CrossRef]

- Chen, J.; Jia, K.; Chen, W.; Lv, Z.; Zhang, R. A real-time and high-precision method for small traffic-signs recognition. Neural Comput. Appl. 2022, 34, 2233–2245. [Google Scholar] [CrossRef]

- Wenze, Y.; Quan, L.; Zicheng, Z.; Jinjing, H.; Hansong, W.; Zhihui, F.; Neng, X.; Chuanbo, H.; Chang, K.C. An Improved Lightweight Traffic Sign Detection Model for Embedded Devices. In Proceedings of the International Conference on Advanced Intelligent Systems and Informatics, Cairo, Egypt, 11–13 December 2021; pp. 154–164. [Google Scholar]

- Lima, A.A.; Kabir, M.; Das, S.C.; Hasan, M.; Mridha, M. Road Sign Detection Using Variants of YOLO and R-CNN: An Analysis from the Perspective of Bangladesh. In Proceedings of the International Conference on Big Data, IoT, and Machine Learning, Cairo, Egypt, 20–22 November 2022; pp. 555–565. [Google Scholar]

- Qin, Z.; Yan, W.Q. Traffic-sign recognition using deep learning. In Proceedings of the International Symposium on Geometry and Vision, Auckland, New Zealand, 28–29 January 2021; pp. 13–25. [Google Scholar]

- Xing, J.; Yan, W.Q. Traffic sign recognition using guided image filtering. In Proceedings of the International Symposium on Geometry and Vision, Auckland, New Zealand, 28–29 January 2021; pp. 85–99. [Google Scholar]

- Hussain, Z.; Md, K.; Kattigenahally, K.N.; Nikitha, S.; Jena, P.P.; Harshalatha, Y. Traffic Symbol Detection and Recognition System. In Emerging Research in Computing, Information, Communication and Applications; Springer: Berlin/Heidelberg, Germany, 2022; pp. 885–897. [Google Scholar]

- Chniti, H.; Mahfoudh, M. Designing a Model of Driving Scenarios for Autonomous Vehicles. In Proceedings of the International Conference on Knowledge Science, Engineering and Management, Singapore, 6–8 August 2022; pp. 396–405.

- Dewi, C.; Chen, R.C.; Yu, H. Weight analysis for various prohibitory sign detection and recognition using deep learning. Multimed. Tools Appl. 2020, 79, 32897–32915.

- Dewi, C.; Chen, R.C.; Jiang, X.; Yu, H. Deep convolutional neural network for enhancing traffic sign recognition developed on Yolo V4. Multimed. Tools Appl. 2022, 81, 37821–37845.

- Tran, A.C.; Dien, D.L.; Huynh, H.X.; Long, N.H.V.; Tran, N.C. A Model for Real-Time Traffic Signs Recognition Based on the YOLO Algorithm–A Case Study Using Vietnamese Traffic Signs. In Proceedings of the International Conference on Future Data and Security Engineering, Nha Trang City, Vietnam, 27–29 November 2019; pp. 104–116.

- Güney, E.; Bayilmiş, C.; Çakan, B. An implementation of real-time traffic signs and road objects detection based on mobile GPU platforms. IEEE Access 2022, 10, 86191–86203.

- Saouli, A.; Margae, S.E.; Aroussi, M.E.; Fakhri, Y. Real-Time Traffic Sign Recognition on Sipeed Maix AI Edge Computing. In Proceedings of the International Conference on Advanced Intelligent Systems for Sustainable Development, Tangier, Morocco, 21–26 December 2020; pp. 517–528.

- Satılmış, Y.; Tufan, F.; Şara, M.; Karslı, M.; Eken, S.; Sayar, A. CNN based traffic sign recognition for mini autonomous vehicles. In Proceedings of the International Conference on Information Systems Architecture and Technology, Szklarska Poreba, Poland, 17–19 September 2018; pp. 85–94.

- Wu, Y.; Li, Z.; Chen, Y.; Nai, K.; Yuan, J. Real-time traffic sign detection and classification towards real traffic scene. Multimed. Tools Appl. 2020, 79, 18201–18219.

- Li, S.; Cheng, X.; Zhou, Z.; Zhao, B.; Li, S.; Zhou, J. Multi-scale traffic sign detection algorithm based on improved YOLO V4. In Proceedings of the 25th International Conference on Intelligent Transportation Systems (ITSC), Macau, China, 18 September–12 October 2022; pp. 8–12.

- Youssouf, N. Traffic sign classification using CNN and detection using faster-RCNN and YOLOV4. Heliyon 2022, 8, e11792.

- Zou, H.; Zhan, H.; Zhang, L. Neural Network Based on Multi-Scale Saliency Fusion for Traffic Signs Detection. Sustainability 2022, 14, 16491.

- Yang, Z. Intelligent Recognition of Traffic Signs Based on Improved YOLO v3 Algorithm. Mob. Inf. Syst. 2022, 2022, 11.

- Zhao, W.; Danjing, L. Traffic sign detection research based on G-GhostNet-YOLOx model. In Proceedings of the IEEE 4th International Conference on Civil Aviation Safety and Information Technology (ICCASIT), Dali, China, 12–14 October 2022.

- Bai, W.; Zhao, J.; Dai, C.; Zhang, H.; Zhao, L.; Ji, Z.; Ganchev, I. Two Novel Models for Traffic Sign Detection Based on YOLOv5s. Axioms 2023, 12, 160.

- Jiang, J.; Yang, J.; Yin, J. Traffic sign target detection method based on deep learning. In Proceedings of the 2021 International Conference on Computer Information Science and Artificial Intelligence (CISAI), Kunming, China, 17–19 September 2021; pp. 74–78.

- Kumagai, K.; Goto, T. Improving Accuracy of Traffic Sign Detection Using Learning Method. In Proceedings of the 2022 IEEE 4th Global Conference on Life Sciences and Technologies (LifeTech), Osaka, Japan, 7–9 March 2022; pp. 318–319.

- Yu, J.; Ye, X.; Tu, Q. Traffic Sign Detection and Recognition in Multiimages Using a Fusion Model With YOLO and VGG Network. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16632–16642.

- Ćorović, A.; Ilić, V.; Ðurić, S.; Marijan, M.; Pavković, B. The real-time detection of traffic participants using YOLO algorithm. In Proceedings of the 2018 26th Telecommunications Forum (TELFOR), Belgrade, Serbia, 20–21 November 2018; pp. 1–4.

- Kong, S.; Park, J.; Lee, S.S.; Jang, S.J. Lightweight traffic sign recognition algorithm based on cascaded CNN. In Proceedings of the 2019 19th International Conference on Control, Automation and Systems (ICCAS), Jeju, Republic of Korea, 15–18 October 2019; pp. 506–509.

- Song, W.; Suandi, S.A. TSR-YOLO: A Chinese Traffic Sign Recognition Algorithm for Intelligent Vehicles in Complex Scenes. Sensors 2023, 23, 749.

- Kumar, V.A.; Raghuraman, M.; Kumar, A.; Rashid, M.; Hakak, S.; Reddy, M.P.K. Green-Tech CAV: Next Generation Computing for Traffic Sign and Obstacle Detection in Connected and Autonomous Vehicles. IEEE Trans. Green Commun. Netw. 2022, 6, 1307–1315.

- Liu, X.; Jiang, X.; Hu, H.; Ding, R.; Li, H.; Da, C. Traffic Sign Recognition Algorithm Based on Improved YOLOv5s. In Proceedings of the 2021 International Conference on Control, Automation and Information Sciences (ICCAIS), Xi’an, China, 14–17 October 2021; pp. 980–985.

- Wang, H.; Yu, H. Traffic sign detection algorithm based on improved YOLOv4. In Proceedings of the 2020 IEEE 9th Joint International Information Technology and Artificial Intelligence Conference (ITAIC), Chongqing, China, 11–13 December 2020; Volume 9, pp. 1946–1950.

- Rehman, Y.; Amanullah, H.; Shirazi, M.A.; Kim, M.Y. Small Traffic Sign Detection in Big Images: Searching Needle in a Hay. IEEE Access 2022, 10, 18667–18680.

- Alhabshee, S.M.; bin Shamsudin, A.U. Deep learning traffic sign recognition in autonomous vehicle. In Proceedings of the 2020 IEEE Student Conference on Research and Development (SCOReD), Batu Pahat, Malaysia, 27–29 September 2020; pp. 438–442.

- Ikhlayel, M.; Iswara, A.J.; Kurniawan, A.; Zaini, A.; Yuniarno, E.M. Traffic Sign Detection for Navigation of Autonomous Car Prototype using Convolutional Neural Network. In Proceedings of the 2020 International Conference on Computer Engineering, Network, and Intelligent Multimedia (CENIM), Surabaya, Indonesia, 17–18 October 2020; pp. 205–210.

- Jeya Visshwak, J.; Saravanakumar, P.; Minu, R. On-The-Fly Traffic Sign Image Labeling. In Proceedings of the 2020 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 28–30 July 2020; pp. 530–532.

- Valeja, Y.; Pathare, S.; Patel, D.; Pawar, M. Traffic Sign Detection using CLARA and YOLO in Python. In Proceedings of the 2021 7th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 19–20 March 2021; Volume 1, pp. 367–371.

- Shahud, M.; Bajracharya, J.; Praneetpolgrang, P.; Petcharee, S. Thai traffic sign detection and recognition using convolutional neural networks. In Proceedings of the 2018 22nd International Computer Science and Engineering Conference (ICSEC), Chiang Mai, Thailand, 21–24 November 2018; pp. 1–5.

- Chen, Y.; Wang, J.; Dong, Z.; Yang, Y.; Luo, Q.; Gao, M. An Attention Based YOLOv5 Network for Small Traffic Sign Recognition. In Proceedings of the 2022 IEEE 31st International Symposium on Industrial Electronics (ISIE), Anchorage, AK, USA, 1–3 June 2022; pp. 1158–1164.

- Park, Y.K.; Park, H.; Woo, Y.S.; Choi, I.G.; Han, S.S. Traffic Landmark Matching Framework for HD-Map Update: Dataset Training Case Study. Electronics 2022, 11, 863.

- Rehman, Y.; Amanullah, H.; Saqib Bhatti, D.M.; Toor, W.T.; Ahmad, M.; Mazzara, M. Detection of Small Size Traffic Signs Using Regressive Anchor Box Selection and DBL Layer Tweaking in YOLOv3. Appl. Sci. 2021, 11, 11555.

- Song, S.; Li, Y.; Huang, Q.; Li, G. A new real-time detection and tracking method in videos for small target traffic signs. Appl. Sci. 2021, 11, 3061.

- Zhang, S.; Che, S.; Liu, Z.; Zhang, X. A real-time and lightweight traffic sign detection method based on ghost-YOLO. Multimed. Tools Appl. 2023, 82, 26063–26087.

- Dewi, C.; Chen, R.C.; Tai, S.K. Evaluation of robust spatial pyramid pooling based on convolutional neural network for traffic sign recognition system. Electronics 2020, 9, 889.

- Huang, H.; Liang, Q.; Luo, D.; Lee, D.H. Attention-Enhanced One-Stage Algorithm for Traffic Sign Detection and Recognition. J. Sens. 2022, 2022, 3705256.

- Wan, H.; Gao, L.; Su, M.; You, Q.; Qu, H.; Sun, Q. A novel neural network model for traffic sign detection and recognition under extreme conditions. J. Sens. 2021, 2021, 9984787.

- Zhu, Y.; Yan, W.Q. Traffic sign recognition based on deep learning. Multimed. Tools Appl. 2022, 81, 17779–17791.

- Li, Y.; Li, J.; Meng, P. Attention-YOLOV4: A real-time and high-accurate traffic sign detection algorithm. Multimed. Tools Appl. 2022, 82, 7567–7582.

- Zhang, Y.; Lu, Y.; Zhu, W.; Wei, X.; Wei, Z. Traffic sign detection based on multi-scale feature extraction and cascade feature fusion. J. Supercomput. 2022, 79, 2137–2152.

- Yang, T.; Tong, C. Real-time detection network for tiny traffic sign using multi-scale attention module. Sci. China Technol. Sci. 2022, 65, 396–406.

- Xing, J.; Nguyen, M.; Qi Yan, W. The Improved Framework for Traffic Sign Recognition Using Guided Image Filtering. SN Comput. Sci. 2022, 3, 1–16.

- Bi, Z.; Xu, F.; Shan, M.; Yu, L. YOLO-RFB: An Improved Traffic Sign Detection Model. In Proceedings of the International Conference on Mobile Computing, Applications, and Services, Online, 22–24 July 2022; pp. 3–18.

- Zeng, H. Real-Time Traffic Sign Detection Based on Improved YOLO V3. In Proceedings of the 11th International Conference on Computer Engineering and Networks, Hechi, China, 21–25 October 2022; pp. 167–172.

- Galgali, R.; Punagin, S.; Iyer, N. Traffic Sign Detection and Recognition for Hazy Images: ADAS. In Proceedings of the International Conference on Image Processing and Capsule Networks, Bangkok, Thailand, 27–28 May 2021; pp. 650–661.

- Mangshor, N.N.A.; Paudzi, N.P.A.M.; Ibrahim, S.; Sabri, N. A Real-Time Malaysian Traffic Sign Recognition Using YOLO Algorithm. In Proceedings of the 12th National Technical Seminar on Unmanned System Technology 2020, Virtual, 27–28 October 2022; pp. 283–293.

- Le, B.L.; Lam, G.H.; Nguyen, X.V.; Duong, Q.L.; Tran, Q.D.; Do, T.H.; Dao, N.N. A Deep Learning Based Traffic Sign Detection for Intelligent Transportation Systems. In Proceedings of the International Conference on Computational Data and Social Networks, Online, 15–17 November 2021; pp. 129–137.

- Ma, L.; Wu, Q.; Zhan, Y.; Liu, B.; Wang, X. Traffic Sign Detection Based on Improved YOLOv3 in Foggy Environment. In Proceedings of the International Conference on Wireless Communications, Networking and Applications, Wuhan, China, 16–18 December 2022; pp. 685–695.

- Fan, W.; Yi, N.; Hu, Y. A Traffic Sign Recognition Method Based on Improved YOLOv3. In Proceedings of the International Conference on Intelligent Automation and Soft Computing, Chicago, IL, USA, 26–28 May 2021; pp. 846–853.

- Tao, X.; Li, H.; Deng, L. Research on Self-driving Lane and Traffic Marker Recognition Based on Deep Learning. In Proceedings of the International Symposium on Intelligence Computation and Applications, Guangzhou, China, 20–21 November 2021; pp. 112–123.

- Dewi, C.; Chen, R.C.; Yu, H.; Jiang, X. Robust detection method for improving small traffic sign recognition based on spatial pyramid pooling. J. Ambient. Intell. Humaniz. Comput. 2021, 14, 8135–8152.

- Wan, J.; Ding, W.; Zhu, H.; Xia, M.; Huang, Z.; Tian, L.; Zhu, Y.; Wang, H. An efficient small traffic sign detection method based on YOLOv3. J. Signal Process. Syst. 2021, 93, 899–911.

- Peng, E.; Chen, F.; Song, X. Traffic sign detection with convolutional neural networks. In Proceedings of the International Conference on Cognitive Systems and Signal Processing, Beijing, China, 19–23 November 2016; pp. 214–224.

- Thipsanthia, P.; Chamchong, R.; Songram, P. Road Sign Detection and Recognition of Thai Traffic Based on YOLOv3. In Proceedings of the International Conference on Multi-disciplinary Trends in Artificial Intelligence, Kuala Lumpur, Malaysia, 17–19 November 2019; pp. 271–279.

- Arcos-Garcia, A.; Alvarez-Garcia, J.A.; Soria-Morillo, L.M. Evaluation of deep neural networks for traffic sign detection systems. Neurocomputing 2018, 316, 332–344.

- Ma, X.; Li, X.; Tang, X.; Zhang, B.; Yao, R.; Lu, J. Deconvolution Feature Fusion for traffic signs detection in 5G driven unmanned vehicle. Phys. Commun. 2021, 47, 101375.

- Khnissi, K.; Jabeur, C.B.; Seddik, H. Implementation of a Compact Traffic Signs Recognition System Using a New Squeezed YOLO. Int. J. Intell. Transp. Syst. Res. 2022, 20, 466–482.

- Khafaji, Y.A.A.; Abbadi, N.K.E. Traffic Signs Detection and Recognition Using a Combination of YOLO and CNN. In Proceedings of the International Conference on Communication & Information Technologies (IICCIT-2022), Basrah, Iraq, 7–8 September 2022.

- Yan, B.; Li, J.; Yang, Z.; Zhang, X.; Hao, X. AIE-YOLO: Auxiliary Information Enhanced YOLO for Small Object Detection. Sensors 2022, 22, 8221.

- He, X.; Cheng, R.; Zheng, Z.; Wang, Z. Small Object Detection in Traffic Scenes Based on YOLO-MXANet. Sensors 2021, 21, 7422.

- Wang, X.; Guo, J.; Yi, J.; Song, Y.; Xu, J.; Yan, W.; Fu, X. Real-Time and Efficient Multi-Scale Traffic Sign Detection Method for Driverless Cars. Sensors 2022, 22, 6930.

- Wang, Y.; Bai, M.; Wang, M.; Zhao, F.; Guo, J. Multiscale Traffic Sign Detection Method in Complex Environment Based on YOLOv4. Comput. Intell. Neurosci. 2022, 2022, 15.

- Wang, J.; Chen, Y.; Dong, Z.; Gao, M. Improved YOLOv5 network for real-time multi-scale traffic sign detection. Neural Comput. Appl. 2021, 35, 7853–7865.

- Yao, Y.; Han, L.; Du, C.; Xu, X.; Jiang, X. Traffic sign detection algorithm based on improved YOLOv4-Tiny. Signal Process. Image Commun. 2022, 107, 116783.

- Doherty, J.; Gardiner, B.; Kerr, E.; Siddique, N.; Manvi, S.S. Comparative Study of Activation Functions and Their Impact on the YOLOv5 Object Detection Model. In Proceedings of the International Conference on Pattern Recognition and Artificial Intelligence, Paris, France, 1–3 June 2022; pp. 40–52.

- Lu, H.; Chen, T.; Shi, L. Research on Small Target Detection Method of Traffic Signs Improved Based on YOLOv3. In Proceedings of the 2022 2nd International Conference on Consumer Electronics and Computer Engineering (ICCECE), Guangzhou, China, 14–16 January 2022; pp. 476–481.

- Algorry, A.M.; García, A.G.; Wofmann, A.G. Real-time object detection and classification of small and similar figures in image processing. In Proceedings of the 2017 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 14–16 December 2017; pp. 516–519.

- Kuo, Y.L.; Lin, S.H. Applications of deep learning to road sign detection in DVR images. In Proceedings of the 2019 IEEE International Symposium on Measurement and Control in Robotics (ISMCR), Houston, TX, USA, 19–21 September 2019; p. A2-1.

- Abraham, A.; Purwanto, D.; Kusuma, H. Traffic Lights and Traffic Signs Detection System Using Modified You Only Look Once. In Proceedings of the 2021 International Seminar on Intelligent Technology and Its Applications (ISITIA), Surabaya, Indonesia, 21–22 July 2021; pp. 141–146.

| Databases | Search Strings | Results |
|---|---|---|
| IEEE | ((“All Metadata”: Traffic Sign) AND ((“All Metadata”:Detection) OR (“All Metadata”:Recognition) OR (“All Metadata”:Identification)) AND (“All Metadata”: Object Detection) OR (“All Metadata”:YOLO)) | 2722 |
| Springer | ‘Object AND Detection AND “Traffic Sign” AND (Detection OR Recognition OR Identification OR YOLO)’ | 1852 |
| MDPI | All Fields: Traffic Sign Detection OR All Fields: Traffic Sign Recognition OR All Fields: Traffic Sign Identification AND Keywords: You Only Look Once OR Keywords: Object Detection | 498 |
| Hindawi P.G. | (“Traffic Sign” AND (“Detection” OR “Recognition” OR “Identification”)) AND (“YOLO”) | 16 |
| Science Direct | (Traffic Sign OR Traffic Sign Detection OR Traffic Sign Recognition OR Traffic Sign Identification OR Object Detection) AND (YOLO OR You Only Look Once) | 80,650 |
| Wiley | “Traffic Sign OR Detection OR Recognition OR Identification” anywhere and “YOLO OR Object Detection” anywhere | 160,803 |
| Sage | Traffic Sign OR Detection OR Recognition OR Identification AND Object Detection OR YOLO | 72,941 |
| Taylor & Francis | [All: traffic] AND [[All: sign] OR [All: detection] OR [All: recognition] OR [All: identification]] AND [[All: object] OR [All: detection] OR [All: yolo]] | 135,385 |
| PLOS | ((((everything: “Traffic Sign”) AND everything:Detection) OR everything:Identification) OR everything:YOLO) OR everything: “Object Detection” | 140,023 |
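Each search string is a Boolean expression evaluated by the database's own search engine. As a rough illustration of how the nested AND/OR structure screens records, the Hindawi string can be read as a predicate over a record's text. This is a sketch under stated assumptions, not how Hindawi's engine works: case-insensitive substring matching stands in for the engine's tokenization and phrase matching, and the function name `matches_hindawi_string` is hypothetical. The sample titles are taken from the reference list.

```python
def matches_hindawi_string(text: str) -> bool:
    """Approximate ("Traffic Sign" AND ("Detection" OR "Recognition"
    OR "Identification")) AND ("YOLO") over a single record's text.

    Assumption: case-insensitive substring matching replaces the
    database's real tokenization and phrase search.
    """
    t = text.lower()
    has_phrase = "traffic sign" in t  # the quoted phrase "Traffic Sign"
    has_task = any(term in t for term in ("detection", "recognition", "identification"))
    has_yolo = "yolo" in t  # the final AND ("YOLO") clause
    return has_phrase and has_task and has_yolo


titles = [
    "Traffic sign detection algorithm based on improved YOLOv4",
    "Traffic sign recognition using guided image filtering",
    "Small Object Detection in Traffic Scenes Based on YOLO-MXANet",
]
for title in titles:
    print(matches_hindawi_string(title), title)
```

Only the first title satisfies all three AND clauses: the second lacks "YOLO" and the third lacks the phrase "traffic sign". Substring matching is deliberately loose ("yolo" also matches "YOLOv4"); a real search engine may treat such tokens differently.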

| Order | Country of Origin | Name of Dataset | Number of Categories | Number of Classes | Number of Images | Number of Traffic Signs | Number of Referenced Articles | Percentage (%) |
|---|---|---|---|---|---|---|---|---|
| 1 | Germany | GTSRB and | +3 | 43 | 52,740 | 52,740 | 44 | 27.33 |
| | | GTSDB | | | 900 | 910 | | |
| 2 | China | TT100K | 3 | 130 | 100,000 | 30,000 | 26 | 16.15 |
| 3 | China | CTSDB | | | 10,000 | | 21 | 13.04 |
| | | CCTSDB | 3 | 21 | ∼20,000 | ∼40,000 | | |
| 4 | Belgium | BTSDB and | 3 | 62 | 17,000 | 4627 | 6 | 3.73 |
| | | BTSCB | | | 7125 | 7125 | | |
| 5 | South Korea | KTSD | - | - | 3300 | - | 3 | 1.86 |
| 6 | Malaysia | MTSD | 5 | 66 | 1000 | | 2 | 1.24 |
| | | | | | 2056 | 2056 | | |
| 7 | USA | BDD100K | - | - | 100,000 | - | 2 | 1.24 |
| 8 | Thailand | TTSD | - | 50 | 9357 | - | 2 | 1.24 |
| 9 | France | FRIDA and | - | | 90 | - | 2 | 1.24 |
| | | FRIDA2 | | | - | 300 | | |
| 10 | France | FROSI | - | 4 | 504 | 1620 | 2 | 1.24 |
| 11 | Sweden | STSD | - | 7 | 20,000 | 3488 | 2 | 1.24 |
| 12 | Slovenia | DFG | +3 | +200 | 7000 | 13,239 | 1 | 0.62 |
| | | | | | | 4359 | | |
| 13 | Taiwan | TWTSD | - | - | 900 | - | 1 | 0.62 |
| 14 | Taiwan | TWynthetic | - | 3 | - | 900 | 1 | 0.62 |
| 15 | Belgium | KUL | - | +100 | +10,000 | - | 1 | 0.62 |
| 16 | China | CSUST | - | - | 15,000 | - | 1 | 0.62 |
| 17 | | MarcTR | - | 7 | 3564 | 3564 | 1 | 0.62 |
| 18 | Turkey | - | | 22 | 2500 | | 1 | 0.62 |
| 19 | Vietnam | - | 4 | 144 | 5000 | 5704 | 1 | 0.62 |
| 20 | Croatia | - | - | 11 | 5567 | 6751 | 1 | 0.62 |
| 21 | Mexico | - | 3 | 11 | 1284 | 1426 | 1 | 0.62 |
| 22 | China | WHUTCTSD | 5 | - | 2700 | 4009 | 1 | 0.62 |
| 23 | Bangladesh | BDRS2021 | 4 | 16 | 2688 | - | 1 | 0.62 |
| 24 | New Zealand | NZ-TS3K | 3 | 7 | 3436 | 3545 | 1 | 0.62 |
| 25 | AW¹ | MapiTSD | - | 300 | 100,000 | 320,000 | 1 | 0.62 |
| 26 | SW² | Own | - | - | - | - | 14 | 8.70 |
| 27 | SW | Unknown | - | - | - | - | 21 | 13.04 |
| Total | | | | | | | 161 | 100.00 |
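The percentage column is each row's number of referenced articles divided by the 161-article total, times 100. A minimal arithmetic check in Python, assuming the per-row article counts listed in the table; the dictionary keys are shorthand labels introduced here for illustration (e.g. "Turkey (unnamed)"), not dataset names from the source.

```python
# Referenced-article counts per table row (orders 1-27), copied from the table.
# Keys are illustrative shorthand labels, not official dataset names.
REFERENCE_COUNTS = {
    "GTSRB/GTSDB": 44, "TT100K": 26, "CTSDB/CCTSDB": 21, "BTSDB/BTSCB": 6,
    "KTSD": 3, "MTSD": 2, "BDD100K": 2, "TTSD": 2, "FRIDA/FRIDA2": 2,
    "FROSI": 2, "STSD": 2, "DFG": 1, "TWTSD": 1, "TWynthetic": 1, "KUL": 1,
    "CSUST": 1, "MarcTR": 1, "Turkey (unnamed)": 1, "Vietnam (unnamed)": 1,
    "Croatia (unnamed)": 1, "Mexico (unnamed)": 1, "WHUTCTSD": 1,
    "BDRS2021": 1, "NZ-TS3K": 1, "MapiTSD": 1, "Own": 14, "Unknown": 21,
}


def percentages(counts):
    """Return the total count and each row's share in percent, rounded to 2 decimals."""
    total = sum(counts.values())
    return total, {name: round(100 * n / total, 2) for name, n in counts.items()}


total, pct = percentages(REFERENCE_COUNTS)
print(total)               # 161
print(pct["GTSRB/GTSDB"])  # 27.33
print(pct["KTSD"])         # 1.86
print(pct["MTSD"])         # 1.24
```

Rows with three articles come out at 1.86%, rows with two at 2/161 ≈ 1.24%, and single-article rows at 0.62%. Summing the rounded values produced here gives 99.97 rather than exactly 100.00, which is ordinary rounding error; the counts themselves sum exactly to 161.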

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Flores-Calero, M.; Astudillo, C.A.; Guevara, D.; Maza, J.; Lita, B.S.; Defaz, B.; Ante, J.S.; Zabala-Blanco, D.; Armingol Moreno, J.M. Traffic Sign Detection and Recognition Using YOLO Object Detection Algorithm: A Systematic Review. Mathematics 2024, 12, 297. https://doi.org/10.3390/math12020297
