1. Introduction

This study shows how to derive analytical expressions, called “constrained expressions”, that can represent functions subject to a set of linear constraints. The product of this paper is a procedure to derive these constrained expressions as a new mathematical tool, with the purpose of applying them to problems in computational science. Examples of potential applications of constrained expressions are solving differential equations, performing constrained optimization, solving some types of optimal control problems, path planning, and calculus of variations. All of these applications will be the subject of future papers.

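A resulting constrained expression, $y(x, g(x))$, is expressed in terms of a function, $g(x)$, which can be freely chosen. No matter how $g(x)$ is chosen, the resulting constrained expression always satisfies the set of linear constraints. Let us give an example to clarify the purpose of this study. The function

$$y(x) = g(x) + \frac{x\,(2x_2 - x)}{2\,(x_2 - x_1)}\left[\dot y_1 - \dot g(x_1)\right] + \frac{x\,(x - 2x_1)}{2\,(x_2 - x_1)}\left[\dot y_2 - \dot g(x_2)\right]$$

always satisfies the two constraints $\dot y(x_1) = \dot y_1$ and $\dot y(x_2) = \dot y_2$ for any function $g(x)$ that is differentiable in $x_1$ and $x_2$. Indeed, the derivative of the first coefficient function, $(x_2 - x)/(x_2 - x_1)$, is one at $x_1$ and zero at $x_2$, while the derivative of the second, $(x - x_1)/(x_2 - x_1)$, is zero at $x_1$ and one at $x_2$.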
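As a quick numerical sanity check of the two-derivative-constraint example, the Python sketch below assumes the expression $y(x) = g(x) + \frac{x(2x_2 - x)}{2(x_2 - x_1)}[\dot y_1 - \dot g(x_1)] + \frac{x(x - 2x_1)}{2(x_2 - x_1)}[\dot y_2 - \dot g(x_2)]$ and an arbitrary free function $g(x) = \sin 3x + x^3$ (both illustrative choices, as are the helper name `make_constrained` and the constraint values). It checks, analytically and by finite differences, that the slopes at $x_1$ and $x_2$ match the imposed values whatever $g$ is.

```python
import math


def make_constrained(g, g_prime, x1, x2, yd1, yd2):
    """Build y(x) and y'(x) for the two-derivative-constraint expression.

    Assumed form: y = g + phi1*(yd1 - g'(x1)) + phi2*(yd2 - g'(x2)),
    with phi1 = x(2*x2 - x)/(2(x2 - x1)) and phi2 = x(x - 2*x1)/(2(x2 - x1)).
    """
    den = 2.0 * (x2 - x1)
    c1 = yd1 - g_prime(x1)  # mismatch between g's slope and the target at x1
    c2 = yd2 - g_prime(x2)  # mismatch between g's slope and the target at x2

    def y(x):
        return g(x) + x * (2 * x2 - x) / den * c1 + x * (x - 2 * x1) / den * c2

    def y_prime(x):
        # phi1'(x) = (x2 - x)/(x2 - x1) and phi2'(x) = (x - x1)/(x2 - x1)
        return g_prime(x) + (x2 - x) / (x2 - x1) * c1 + (x - x1) / (x2 - x1) * c2

    return y, y_prime


# Arbitrary free function and its derivative (hypothetical choice).
g = lambda x: math.sin(3 * x) + x ** 3
dg = lambda x: 3 * math.cos(3 * x) + 3 * x ** 2

y, dy = make_constrained(g, dg, x1=-1.0, x2=2.0, yd1=0.5, yd2=-4.0)
print(dy(-1.0), dy(2.0))  # approximately 0.5 and -4.0
```

Swapping in any other differentiable `g` leaves the two printed slopes unchanged, which is the point of the constrained expression.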

The constrained expressions are generalized interpolation formulae. The generalization rests on the fact that these expressions interpolate all functions, rather than a class (or sub-class) of functions as is done, for instance, in Refs. [1,2,3], which contain reviews of the classic interpolation methods applied in various areas, in [4] for distributed approximating functionals (DAFs), and in its recent improvement [5] using Hermite DAFs and Sinc DAFs. This paper provides interpolation formulae that are not restricted to a class of specific functions. This is clarified by the following example.

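The problem of writing the equation representing all linear functions passing through a specified point, $(x_0, y_0)$, is straightforward, $y(x) = y_0 + m\,(x - x_0)$, with the line slope, $m$, which can be freely chosen. Constrained expressions represent all functions passing through $(x_0, y_0)$. This includes continuous, discontinuous, singular, algebraic, rational, transcendental, and periodic functions, just to mention some. These constrained expressions can be built in several ways. One expression is a direct extension of the linear equation,

$$y(x) = y_0 + g(x)\,(x - x_0), \tag{1}$$

where $g(x)$ can be any function satisfying $g(x_0) \neq \infty$. A second expression, called additive, is

$$y(x) = g(x) + y_0 - g(x_0), \tag{2}$$

where $g(x_0) \neq \infty$, and a third expression, called rational, is

$$y(x) = \frac{g(x)}{g(x_0)}\, y_0, \tag{3}$$

where $g(x)$ can be any function satisfying $g(x_0) \neq 0$. From Equations (1) and (2), denoting their free functions by $g_1(x)$ and $g_2(x)$, respectively, the relationship

$$g_1(x) = \frac{g_2(x) - g_2(x_0)}{x - x_0}$$

is derived, showing that $g_1(x)$ is the slope of any secant of $g_2(x)$ passing through $\left(x_0, g_2(x_0)\right)$.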
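A minimal Python sketch of the three single-point forms, assuming Equations (1)–(3) read $y = y_0 + g(x)(x - x_0)$, $y = g(x) + y_0 - g(x_0)$ and $y = y_0\, g(x)/g(x_0)$. The point $(x_0, y_0)$ and the free function $g$ are arbitrary illustrative choices. The last lines check the secant relationship between the free functions of Equations (1) and (2).

```python
import math

x0, y0 = 1.5, -2.0  # hypothetical constraint point


def linear_extension(g, x):
    """Eq. (1): y = y0 + g(x) (x - x0), with g(x0) finite."""
    return y0 + g(x) * (x - x0)


def additive(g, x):
    """Eq. (2): y = g(x) + y0 - g(x0)."""
    return g(x) + y0 - g(x0)


def rational(g, x):
    """Eq. (3): y = y0 g(x) / g(x0), with g(x0) != 0."""
    return y0 * g(x) / g(x0)


g = lambda x: math.exp(x) + math.cos(5 * x)  # arbitrary free function

for form in (linear_extension, additive, rational):
    print(form.__name__, form(g, x0))  # each prints (approximately) y0

# Secant relation: choosing g1 as the secant slope of g2 through (x0, g2(x0))
# makes Eq. (1) reproduce Eq. (2) at every x != x0.
g2 = g
g1 = lambda x: (g2(x) - g2(x0)) / (x - x0)
print(linear_extension(g1, 0.3), additive(g2, 0.3))  # equal up to rounding
```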

The expressions introduced in Equations (1)–(3) can be used to represent all interpolating functions passing through the point $(x_0, y_0)$, with only a few exceptions that depend on the expression adopted. The proof that Equation (2) provides all functions passing through the point $(x_0, y_0)$ is immediate. Assume $f(x)$ represents all functions satisfying $f(x_0) = y_0$; then, by selecting $g(x) = f(x)$, we obtain $y(x) = f(x) + y_0 - f(x_0) = f(x)$.

It is also possible to combine Equations (1)–(3) to obtain mixed constrained expressions.

This study shows how to derive interpolating expressions subject to a variety of linear constraints, such as functions passing through multiple points with assigned derivatives, or subject to multiple relative constraints, as well as periodic functions subject to multiple point constraints. There are many potential applications for these types of expressions. For example, they can be used in optimization problems with embedded constraints. One application of these constrained expressions is provided in Ref. [6], where least-squares solutions of initial and boundary value problems for linear nonhomogeneous differential equations of any order and with nonconstant coefficients are obtained. These constrained expressions are provided in terms of a new function, $g(x)$, which can be freely chosen. In fact, these interpolating expressions are particularly useful when solving linear differential equations because the problem can be rewritten using functions with embedded constraints (a.k.a. the “subject to” conditions). This has been done in Ref. [6], providing a unified least-squares framework to solve initial and boundary value problems for linear differential equations. This approach provides an accuracy gain of several orders of magnitude with respect to the most commonly used numerical integrators.

Particularly important is the fact that Equation (2), like many other equations provided in this study, can be immediately extended to vectors (bold lowercase), to matrices (uppercase), and to higher-dimensional tensors. Specifically, for vectors and matrices made of independent elements, Equation (2) becomes

$$\mathbf{y}(x) = \mathbf{g}(x) + \mathbf{y}_0 - \mathbf{g}(x_0) \qquad \text{and} \qquad Y(x) = G(x) + Y_0 - G(x_0),$$

with the only condition being that the vector $\mathbf{g}(x)$ and the matrix $G(x)$ are defined in $x_0$.

The paper is organized by providing, in the following sections, constrained expressions satisfying:

constraints in one point;

constraints in two and then in n points;

multiple linear constraints;

relative constraints;

constraints on continuous and discontinuous periodic functions.

3. Constraints in n Points

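Waring polynomials [7], better known as “Lagrange polynomials”, are used for polynomial interpolation. This interpolation formula was first discovered in 1779 by Edward Waring, then rediscovered by Euler in 1783, and then published by Lagrange in 1795 [8]. A two-point Waring polynomial is the equation of a line passing through two points, $(x_1, y_1)$ and $(x_2, y_2)$,

$$y(x) = \frac{x - x_2}{x_1 - x_2}\, y_1 + \frac{x - x_1}{x_2 - x_1}\, y_2, \tag{17}$$

while the general $n$-point Waring polynomial is the unique polynomial of degree $(n - 1)$ passing through the $n$ points $(x_k, y_k)$, $k = 1, \dots, n$,

$$y(x) = \sum_{k=1}^{n} y_k \prod_{\substack{j=1 \\ j \neq k}}^{n} \frac{x - x_j}{x_k - x_j}.$$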
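The $n$-point Waring (Lagrange) form can be evaluated directly from its definition. The Python sketch below is a straightforward $O(n^2)$ evaluation (the function name `waring` and the sample data are ours, for illustration), checked on three points sampled from a quadratic, which the form must reproduce up to rounding.

```python
def waring(points, x):
    """Evaluate the unique degree-(n-1) polynomial through the n points (x_k, y_k)."""
    total = 0.0
    for k, (xk, yk) in enumerate(points):
        basis = 1.0  # k-th Waring basis polynomial: 1 at x_k, 0 at the other nodes
        for j, (xj, _) in enumerate(points):
            if j != k:
                basis *= (x - xj) / (xk - xj)
        total += yk * basis
    return total


# Three samples of f(x) = 2x^2 - x + 1 (illustrative data).
f = lambda x: 2 * x ** 2 - x + 1
pts = [(x, f(x)) for x in (0.0, 1.0, 3.0)]
print(waring(pts, 2.0))  # approximately f(2) = 7
```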

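Inspired by Waring polynomials, the additive expression of Equation (2) allows us to derive the interpolation of all functions passing through two or more distinct points. Using two points, $(x_1, y_1)$ and $(x_2, y_2)$, the Waring polynomial formula is generalized as

$$y(x) = g(x) + \frac{x - x_2}{x_1 - x_2}\left[y_1 - g(x_1)\right] + \frac{x - x_1}{x_2 - x_1}\left[y_2 - g(x_2)\right], \tag{18}$$

where $g(x)$ is a free function subject to the conditions $g(x_1) \neq \infty$ and $g(x_2) \neq \infty$. Again, if $g(x) = c_0 + c_1 x$, then the $g(x)$ contribution to $y(x)$ in Equation (18) disappears, and the expression becomes the classic two-point Waring interpolation form given in Equation (17). This is because Equation (18) is obtained using Equation (6) with the two monomials $1$ and $x$. Therefore, to obtain a nonlinear interpolating function $y(x)$, $g(x)$ must be nonlinear.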

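Equation (18) describes all nonlinear interpolating functions passing through two points. The generalization is immediate. The expression of all functions passing through a set of $n$ points is

$$y(x) = g(x) + \sum_{k=1}^{n} \left[y_k - g(x_k)\right] \prod_{\substack{j=1 \\ j \neq k}}^{n} \frac{x - x_j}{x_k - x_j}, \tag{19}$$

where $g(x)$ can be any function satisfying $g(x_k) \neq \infty$, for $k = 1, \dots, n$. Equation (19) extends Waring’s interpolation form using $n$ points to any function. In particular, if $g(x) = 0$, the original formulation of Waring’s interpolation form is obtained. This also happens if $g(x)$ is any polynomial of degree $n - 1$ or lower, because such a polynomial is reproduced exactly by its own Waring interpolation. In fact, to provide an additional contribution to $y(x)$ in Equation (19), $g(x)$ must contain at least one monomial of degree $n$ or higher (the minimum monomial is $x^n$).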

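A time-varying $n$-dimensional vector, $\mathbf{v}(t)$, passing through a set of $m$ points, $\mathbf{v}_k$, at corresponding times, $t_k$, can be expressed as

$$\mathbf{v}(t) = \mathbf{g}(t) + \sum_{k=1}^{m} \left[\mathbf{v}_k - \mathbf{g}(t_k)\right] \prod_{\substack{j=1 \\ j \neq k}}^{m} \frac{t - t_j}{t_k - t_j}.$$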

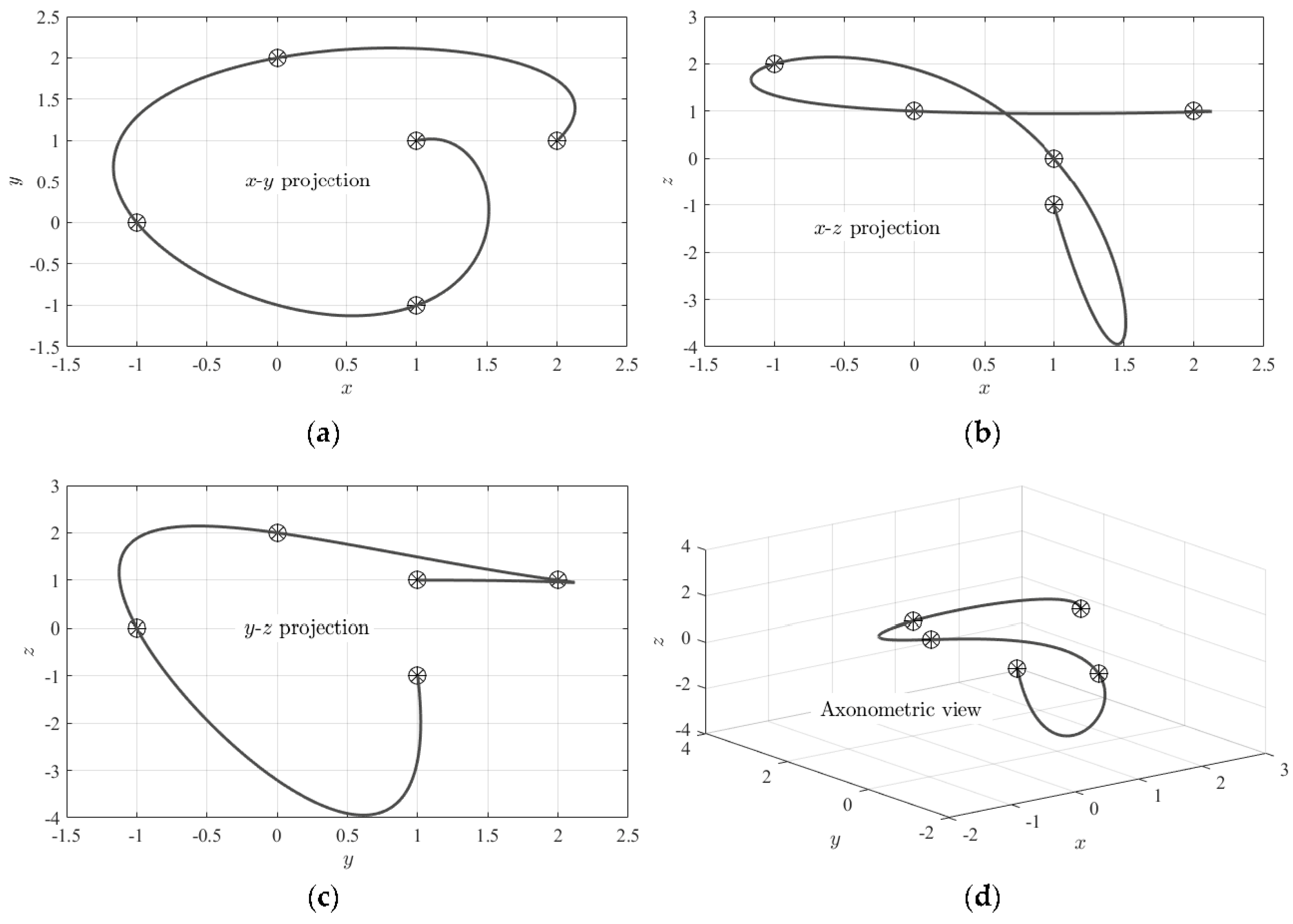

For example, using the five points given in Table 1 and a selected free function $\mathbf{g}(t)$, the trajectory shown in Figure 1 is obtained.
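Applied component by component, the vector form reads $\mathbf{v}(t) = \mathbf{g}(t) + \sum_k [\mathbf{v}_k - \mathbf{g}(t_k)]\, L_k(t)$, with $L_k$ the Waring basis polynomials in time. The Python sketch below implements that reading. The five points, the times, and $\mathbf{g}(t)$ are hypothetical and are not the data of Table 1.

```python
import math


def waring_basis(ts, k, t):
    """k-th Waring basis polynomial for the times ts, evaluated at t."""
    out = 1.0
    for j, tj in enumerate(ts):
        if j != k:
            out *= (t - tj) / (ts[k] - tj)
    return out


def trajectory(g, times, points, t):
    """v(t) = g(t) + sum_k [v_k - g(t_k)] L_k(t), evaluated component by component."""
    result = list(g(t))
    for k, (tk, vk) in enumerate(zip(times, points)):
        gk = g(tk)
        lk = waring_basis(times, k, t)
        for i in range(len(result)):
            result[i] += (vk[i] - gk[i]) * lk
    return result


# Hypothetical 3-D data: five points at five times (not the paper's Table 1).
times = [0.0, 1.0, 2.0, 3.0, 4.0]
points = [(0, 0, 0), (1, 2, 0), (3, 1, 1), (2, -1, 2), (0, 0, 3)]
g = lambda t: (math.cos(3 * t), math.sin(3 * t), 0.2 * t * t)  # free vector function

for tk, vk in zip(times, points):
    print(tk, trajectory(g, times, points, tk))  # reproduces vk up to rounding
```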

5. Coefficients Functions of Constrained Expressions

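Consider the general case of a function with $n$ distinct constraints, $\left.\dfrac{d^{d_k} y}{dx^{d_k}}\right|_{x_k} = y_k^{(d_k)}$ for $k = 1, \dots, n$, where the $n$-element vector $\mathbf{d}$ contains the constraints’ derivative orders and the $n$-element vector $\mathbf{x}$ indicates where the constraints are specified. For instance, for the constraints specified in Equation (24), the $\mathbf{d}$ and $\mathbf{x}$ vectors collect, respectively, the derivative order and the coordinate of each constraint.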

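In this section, we develop an alternative approach to derive constrained expressions. This is motivated by the fact that Equations (7), (18)–(20), and (23) all share the same formalism: a number of terms equal to the number of constraints, each expressed as $y_k^{(d_k)} - g^{(d_k)}(x_k)$ and multiplied by a coefficient function. Each coefficient function (through the derivative required by the constraint) equals one when evaluated at its own constraint’s coordinate and zero when evaluated at the coordinates of all the other constraints. This property suggests that the constrained expressions should have the following formal structure:

$$y(x) = g(x) + \sum_{k=1}^{n} \beta_k(x) \left[y_k^{(d_k)} - g^{(d_k)}(x_k)\right], \qquad \text{with} \qquad \left.\frac{d^{d_j} \beta_k}{dx^{d_j}}\right|_{x_j} = \delta_{kj},$$

where $\delta_{kj}$ is the Kronecker delta and $n$ the number of constraints. The coefficient functions, $\beta_k(x)$, of this expression are such that, when the $k$-th constraint, $\left.\frac{d^{d_k} y}{dx^{d_k}}\right|_{x_k}$, is verified, then $\left.\frac{d^{d_k} \beta_k}{dx^{d_k}}\right|_{x_k} = 1$, while all the other coefficient functions satisfy $\left.\frac{d^{d_k} \beta_j}{dx^{d_k}}\right|_{x_k} = 0$ for $j \neq k$. Given a set of constraints, this property allows us to derive the $\beta_k(x)$ expressions, as explained in the next subsection.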

Coefficients Functions Derivation

As the number of constraints ($m$) increases, the approach previously proposed (using monomials) to find constrained expressions becomes more complicated, and it always carries the risk of obtaining a singular matrix when computing the coefficients. For this reason, this section proposes a new procedure to compute all the $\beta_k(x)$ functions at once, provided that they are expressed as a scalar product with a set of $m$ linearly independent functions contained in the vector $\mathbf{h}(x)$,

$$\beta_k(x) = \boldsymbol{\xi}_k^{\mathsf{T}}\, \mathbf{h}(x), \qquad \text{where} \qquad \boldsymbol{\xi}_k \in \mathbb{R}^m.$$

The functions in $\mathbf{h}(x)$ must be defined at the constraints’ conditions (derivatives and locations). A sufficient condition is to use nonsingular, infinitely differentiable functions.

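Let us derive the $\beta_k(x)$ coefficient functions for a set of $m$ constraints of the form $\left.\frac{d^{d_j} y}{dx^{d_j}}\right|_{x_j} = y_j^{(d_j)}$, $j = 1, \dots, m$. To compute the function $\beta_1(x)$ associated with the first constraint, the relationships

$$\left.\frac{d^{d_1} \beta_1}{dx^{d_1}}\right|_{x_1} = 1, \qquad \left.\frac{d^{d_j} \beta_1}{dx^{d_j}}\right|_{x_j} = 0 \quad (j = 2, \dots, m)$$

must be satisfied. Selecting, for instance, monomials for $\mathbf{h}(x)$, these relationships can be put in matrix form,

$$\begin{bmatrix} \mathbf{h}^{(d_1)}(x_1)^{\mathsf{T}} \\ \vdots \\ \mathbf{h}^{(d_m)}(x_m)^{\mathsf{T}} \end{bmatrix} \boldsymbol{\xi}_1 = \begin{Bmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{Bmatrix},$$

allowing the coefficient vector, $\boldsymbol{\xi}_1$, of the $\beta_1(x)$ function to be obtained by matrix inversion. The other coefficient vectors, $\boldsymbol{\xi}_k$ with $k = 2, \dots, m$, can be computed analogously, obtaining the final system

$$H \left[\boldsymbol{\xi}_1, \dots, \boldsymbol{\xi}_m\right] = I_m, \qquad H = \begin{bmatrix} \mathbf{h}^{(d_1)}(x_1)^{\mathsf{T}} \\ \vdots \\ \mathbf{h}^{(d_m)}(x_m)^{\mathsf{T}} \end{bmatrix}.$$

Therefore, the inversion of the coefficient matrix, $H$, provides all the coefficient vectors at once, $\left[\boldsymbol{\xi}_1, \dots, \boldsymbol{\xi}_m\right] = H^{-1}$, and the $\beta_k(x)$ polynomials are $\beta_k(x) = \boldsymbol{\xi}_k^{\mathsf{T}}\, \mathbf{h}(x)$.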

This method also fails if the matrix is singular. In this case, a different basis function vector $\mathbf{h}(x)$ must be selected until the resulting matrix is invertible. Because of this, the alternative approach does not look more attractive than the approach previously described. What makes it interesting is that it allows an easy generalization to more general constraints, such as linear combinations of functions and derivatives. This is shown in the next section, starting from the simple case of relative constraints, which is still solved using the previous approach.
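The matrix procedure can be sketched in Python with exact rational arithmetic. The sketch assumes a monomial basis $\mathbf{h}(x) = \{1, x, \dots, x^{m-1}\}^{\mathsf T}$ and a hypothetical set of constraints on $y(0)$, $\dot y(1)$ and $\ddot y(2)$; the helper names are ours. It builds the matrix of basis derivatives, inverts it by Gauss-Jordan elimination, and verifies the Kronecker property of the resulting $\beta_k(x)$. A second call shows the singular case ($\dot y$ constrained at two points with basis $\{1, x\}$), which is detected and rejected.

```python
from fractions import Fraction
from math import factorial


def monomial_derivative(p, d, x):
    """d-th derivative of x**p evaluated at x (zero when d > p)."""
    if d > p:
        return Fraction(0)
    return Fraction(factorial(p), factorial(p - d)) * Fraction(x) ** (p - d)


def invert(matrix):
    """Gauss-Jordan inverse of a square Fraction matrix; ValueError if singular."""
    n = len(matrix)
    aug = [list(row) + [Fraction(int(i == j)) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("singular matrix: choose a different basis h(x)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        piv = aug[col][col]
        aug[col] = [v / piv for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def beta_coefficients(orders, coords):
    """Columns xi_k of H^{-1}, with H[j][i] = d^{d_j} h_i / dx^{d_j} at x_j."""
    m = len(orders)
    H = [[monomial_derivative(i, d, x) for i in range(m)]
         for d, x in zip(orders, coords)]
    inv = invert(H)
    return [[inv[i][k] for i in range(m)] for k in range(m)]  # xi_k = column k


def beta_derivative(xi, d, x):
    """d-th derivative of beta(x) = xi^T h(x) at x."""
    return sum(c * monomial_derivative(i, d, x) for i, c in enumerate(xi))


orders, coords = [0, 1, 2], [0, 1, 2]  # hypothetical: y(0), y'(1), y''(2)
xis = beta_coefficients(orders, coords)
for k, xi in enumerate(xis):
    print(f"beta_{k + 1} coefficients (1, x, x^2):", [str(c) for c in xi])
# beta_1 coefficients (1, x, x^2): ['1', '0', '0']
# beta_2 coefficients (1, x, x^2): ['0', '1', '0']
# beta_3 coefficients (1, x, x^2): ['0', '-1', '1/2']
```

For this example the coefficient functions are $\beta_1 = 1$, $\beta_2 = x$ and $\beta_3 = x^2/2 - x$; calling `beta_coefficients([1, 1], [0, 1])` raises `ValueError`, the singular case mentioned above.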

6. Relative Constraints

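Sometimes, constraints are not defined in an absolute way (e.g., $y(x_1) = y_1$) but in a relative way (e.g., $y(x_1) = y(x_2)$). Constrained expressions can also be derived for relative constraints. In general, a relative constraint can be written as

$$\left.\frac{d^{d_i} y}{dx^{d_i}}\right|_{x_i} - \left.\frac{d^{d_j} y}{dx^{d_j}}\right|_{x_j} = c.$$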

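To give an example, consider the problem of finding an expression satisfying the two relative constraints

$$y(x_1) - y(x_2) = c_1 \qquad \text{and} \qquad \dot y(x_1) - \dot y(x_2) = c_2.$$

The constrained expression can be searched for as

$$y(x) = g(x) + \eta_1\, h_1(x) + \eta_2\, h_2(x),$$

where $h_1(x)$ and $h_2(x)$ are two assigned functions and $\eta_1$ and $\eta_2$ two unknown coefficients. The two relative constraints imply solving the system

$$\begin{bmatrix} h_1(x_1) - h_1(x_2) & h_2(x_1) - h_2(x_2) \\ \dot h_1(x_1) - \dot h_1(x_2) & \dot h_2(x_1) - \dot h_2(x_2) \end{bmatrix} \begin{Bmatrix} \eta_1 \\ \eta_2 \end{Bmatrix} = \begin{Bmatrix} c_1 - g(x_1) + g(x_2) \\ c_2 - \dot g(x_1) + \dot g(x_2) \end{Bmatrix}$$

to obtain the unknown coefficients, $\eta_1$ and $\eta_2$. This linear problem admits a solution if the matrix is not singular and if the selected functions, $h_1(x)$ and $h_2(x)$, are defined at the constraints’ conditions. Matrix singularity indeed occurs if, for instance, $h_1(x) = 1$ and $h_2(x) = x$, as previously highlighted: the first column of the matrix vanishes. This means that the case of function and derivative relative constraints is different from the case of function and derivative absolute constraints. In this relative constraint case, in addition to spanning two independent function spaces, the functions $h_1(x)$ and $h_2(x)$ must admit, at least, a nonzero first derivative. Therefore, using monomials, a potential constrained expression can be searched for as

$$y(x) = g(x) + \eta_1\, x + \eta_2\, x^2.$$

The two constraints give the system

$$\begin{bmatrix} x_1 - x_2 & x_1^2 - x_2^2 \\ 0 & 2\,(x_1 - x_2) \end{bmatrix} \begin{Bmatrix} \eta_1 \\ \eta_2 \end{Bmatrix} = \begin{Bmatrix} c_1 - g(x_1) + g(x_2) \\ c_2 - \dot g(x_1) + \dot g(x_2) \end{Bmatrix},$$

whose solution exists as long as $x_1 \neq x_2$, yielding

$$y(x) = g(x) + \frac{x}{x_1 - x_2}\left[c_1 - g(x_1) + g(x_2)\right] + \frac{x\,(x - x_1 - x_2)}{2\,(x_1 - x_2)}\left[c_2 - \dot g(x_1) + \dot g(x_2)\right],$$

which has, again, the formal expression of Equation (24). This expression provides all functions satisfying the two relative constraints.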
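To check the relative-constraint construction numerically, the Python sketch below assumes the two relative constraints take the form $y(x_1) - y(x_2) = c_1$ and $\dot y(x_1) - \dot y(x_2) = c_2$; the values of $c_1$, $c_2$, $x_1$, $x_2$ and the free function $g$ are hypothetical. With the monomial basis $\{x, x^2\}$, solving the $2 \times 2$ system gives $\beta_1(x) = x/(x_1 - x_2)$ and $\beta_2(x) = x(x - x_1 - x_2)/[2(x_1 - x_2)]$, and the code verifies that the resulting expression satisfies both constraints for an arbitrary $g$.

```python
import math

x1, x2, c1, c2 = -0.5, 1.5, 2.0, -1.0  # hypothetical constraint data


def beta1(x):
    return x / (x1 - x2)


def dbeta1(x):
    return 1.0 / (x1 - x2)


def beta2(x):
    return x * (x - x1 - x2) / (2.0 * (x1 - x2))


def dbeta2(x):
    return (2.0 * x - x1 - x2) / (2.0 * (x1 - x2))


def make_y(g, dg):
    """y = g + beta1*[c1 - g(x1) + g(x2)] + beta2*[c2 - g'(x1) + g'(x2)]."""
    r1 = c1 - g(x1) + g(x2)    # residual of the function relative constraint
    r2 = c2 - dg(x1) + dg(x2)  # residual of the derivative relative constraint

    def y(x):
        return g(x) + beta1(x) * r1 + beta2(x) * r2

    def dy(x):
        return dg(x) + dbeta1(x) * r1 + dbeta2(x) * r2

    return y, dy


g = lambda x: math.exp(0.7 * x) * math.cos(2 * x)  # arbitrary free function
dg = lambda x: math.exp(0.7 * x) * (0.7 * math.cos(2 * x) - 2 * math.sin(2 * x))

y, dy = make_y(g, dg)
print(y(x1) - y(x2), dy(x1) - dy(x2))  # approximately c1 and c2
```

The check works because $\beta_1$ has a unit jump between $x_1$ and $x_2$ and equal slopes there, while $\beta_2$ has equal values and a unit slope jump: the relative version of the Kronecker property.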

6.1. Coefficients Functions Derivation for Linear Combination of Relative Constraints

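Consider searching for the constrained expression as

$$y(x) = g(x) + \boldsymbol{\eta}^{\mathsf{T}}\, \mathbf{h}(x).$$

A set of $n$ general linear constraints (this includes absolute and relative constraints) can be expressed by the $n$ linear equations

$$\sum_{i} \alpha_{ki} \left.\frac{d^{d_{ki}} y}{dx^{d_{ki}}}\right|_{x_{ki}} = b_k, \qquad k = 1, \dots, n,$$

where $\boldsymbol{\eta}$ and $\boldsymbol{\alpha}_k$ are vectors of unknown and known coefficients, respectively. For instance, for the $k$-th constraint, the known coefficients $\alpha_{ki}$, the derivative orders $d_{ki}$, the coordinates $x_{ki}$, and the value $b_k$ are all assigned. Using a set of $n$ independent basis functions (the size of the vectors $\boldsymbol{\eta}$ and $\mathbf{h}(x)$), the matrix $A$ has rows

$$\mathbf{a}_k^{\mathsf{T}} = \sum_{i} \alpha_{ki}\, \mathbf{h}^{(d_{ki})}(x_{ki})^{\mathsf{T}}.$$

Then, the unknown vector $\boldsymbol{\eta}$ can be computed from the constraints’ equations,

$$A\, \boldsymbol{\eta} = \mathbf{b} - \mathbf{c}(g), \qquad c_k(g) = \sum_{i} \alpha_{ki}\, g^{(d_{ki})}(x_{ki}),$$

from which the solution is $\boldsymbol{\eta} = A^{-1}\left[\mathbf{b} - \mathbf{c}(g)\right]$. Substituting in Equation (25), we obtain the expressions for the $\beta_k(x)$ functions,

$$\boldsymbol{\beta}(x) = A^{-\mathsf{T}}\, \mathbf{h}(x),$$

an expression easy to derive given the basis function vector, $\mathbf{h}(x)$, and a set of linear constraints. Therefore, the case of general linear constraints can be expressed as

$$y(x) = g(x) + \sum_{k=1}^{n} \beta_k(x) \left[b_k - c_k(g)\right].$$

This equation generalizes Equation (24), where the $\beta_k(x)$ functions multiply the linear constraints written in terms of the function $g(x)$.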
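A compact Python sketch of the general procedure, under stated assumptions: each constraint is a list of hypothetical terms `(alpha, d, x)` meaning $\alpha\, y^{(d)}(x)$, paired with a right-hand side $b_k$; the basis is monomial; and the free function is $g(x) = \sin x$, whose $d$-th derivative is $\sin(x + d\pi/2)$. The code assembles $A$, solves $A\boldsymbol{\eta} = \mathbf{b} - \mathbf{c}(g)$ by Gaussian elimination with partial pivoting, and checks that three mixed constraints (a combination of function and derivative, a relative constraint, and an absolute one) all hold.

```python
import math


def mono_deriv(p, d, x):
    """d-th derivative of x**p at x."""
    if d > p:
        return 0.0
    return math.factorial(p) / math.factorial(p - d) * x ** (p - d)


def g_deriv(d, x):
    """d-th derivative of the free function g(x) = sin(x)."""
    return math.sin(x + d * math.pi / 2)


def solve(A, rhs):
    """Gaussian elimination with partial pivoting (raises on a singular matrix)."""
    n = len(A)
    M = [row[:] + [r] for row, r in zip(A, rhs)]
    for col in range(n):
        p = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[p][col]) < 1e-14:
            raise ValueError("singular constraint matrix")
        M[col], M[p] = M[p], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            M[r] = [u - f * v for u, v in zip(M[r], M[col])]
    eta = [0.0] * n
    for r in reversed(range(n)):
        s = sum(M[r][k] * eta[k] for k in range(r + 1, n))
        eta[r] = (M[r][n] - s) / M[r][r]
    return eta


# Hypothetical constraints, each as ([(alpha, order, x), ...], b):
constraints = [
    ([(1.0, 0, 0.0), (2.0, 1, 1.0)], 3.0),    # y(0) + 2 y'(1) = 3
    ([(1.0, 0, -1.0), (-1.0, 0, 2.0)], 0.5),  # y(-1) - y(2) = 0.5 (relative)
    ([(1.0, 2, 0.5)], -1.0),                  # y''(0.5) = -1
]
n = len(constraints)
A = [[sum(al * mono_deriv(i, d, x) for al, d, x in terms) for i in range(n)]
     for terms, _ in constraints]
c = [sum(al * g_deriv(d, x) for al, d, x in terms) for terms, _ in constraints]
eta = solve(A, [bk - ck for (_, bk), ck in zip(constraints, c)])


def y_deriv(d, x):
    """d-th derivative of y(x) = g(x) + eta^T h(x)."""
    return g_deriv(d, x) + sum(e * mono_deriv(i, d, x) for i, e in enumerate(eta))


for terms, bk in constraints:
    print(sum(al * y_deriv(d, x) for al, d, x in terms), "target", bk)
```

Replacing `g_deriv` with the derivatives of any other free function leaves the printed left-hand sides equal to their targets, which is the embedded-constraint property the section describes.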

6.2. Example of Two Linear Constraints

Let’s give a numerical example. Consider finding all functions satisfying the following two linear constraints:

for

,

, and

. In this case, we have

Consider the coefficients functions selection,

Then, the coefficient functions are

where

That is,

and, finally,

Therefore,

all explicit functions satisfying the constraints given in Equation (

28) can be expressed by

where

can be freely chosen as long as

,

, and

exist.

7. Periodic Functions

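Periodic functions, with assigned period $T$, can be provided in continuous and discontinuous forms,

$$y_c(x) = g\big(p(x - a)\big) \qquad \text{and} \qquad y_d(x) = g\big(\mathrm{mod}(x - b,\, T)\big),$$

where $p(x)$ can be any analytic periodic function with period $T$ (e.g., trigonometric functions), $a$ and $b$ are shifting parameters, and $g(\cdot)$ can be any function.

All periodic functions passing through the point $(x_0, y_0)$ can be expressed using the additive expression form given in Equation (2), $y(x) = g(x) + y_0 - g(x_0)$, that is,

$$y_c(x) = g\big(p(x - a)\big) + y_0 - g\big(p(x_0 - a)\big) \qquad \text{and} \qquad y_d(x) = g\big(\mathrm{mod}(x - b,\, T)\big) + y_0 - g\big(\mathrm{mod}(x_0 - b,\, T)\big). \tag{32}$$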
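A Python sketch of the two periodic forms through a single point, assuming the continuous version composes the free function with $p(x) = \sin(2\pi x/T)$ and the discontinuous version with the remainder $\mathrm{mod}(x - b, T)$. The period, shifts, point, and $g$ are hypothetical. The checks confirm that both forms pass through $(x_0, y_0)$ and repeat with period $T$ (test points are kept away from the jumps of the modular form).

```python
import math

T, a, b = 2.0, 0.3, -0.4   # hypothetical period and shifting parameters
x0, y0 = 0.7, 1.25         # hypothetical constraint point


def g(u):
    """Arbitrary free function (not itself periodic)."""
    return u ** 3 - 2.0 * u + math.exp(u)


def p(x):
    """Analytic function with period T."""
    return math.sin(2.0 * math.pi * x / T)


def y_continuous(x):
    # additive form y = G(x) + y0 - G(x0), with periodic G(x) = g(p(x - a))
    return g(p(x - a)) + y0 - g(p(x0 - a))


def y_discontinuous(x):
    # same additive form with G(x) = g(mod(x - b, T)); Python's % is >= 0 for T > 0
    return g((x - b) % T) + y0 - g((x0 - b) % T)


for x in (0.1, 1.05, 1.9):
    print(y_continuous(x) - y_continuous(x + T),
          y_discontinuous(x) - y_discontinuous(x + T))  # both near zero
```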

The expressions provided in Equation (32) can be used along with the general Waring polynomial form given in Equation (19) to obtain all periodic functions passing through a set of $n$ points. By doing that, the periodicity is over a line ($n = 2$), a quadratic ($n = 3$), a cubic ($n = 4$), and so on.

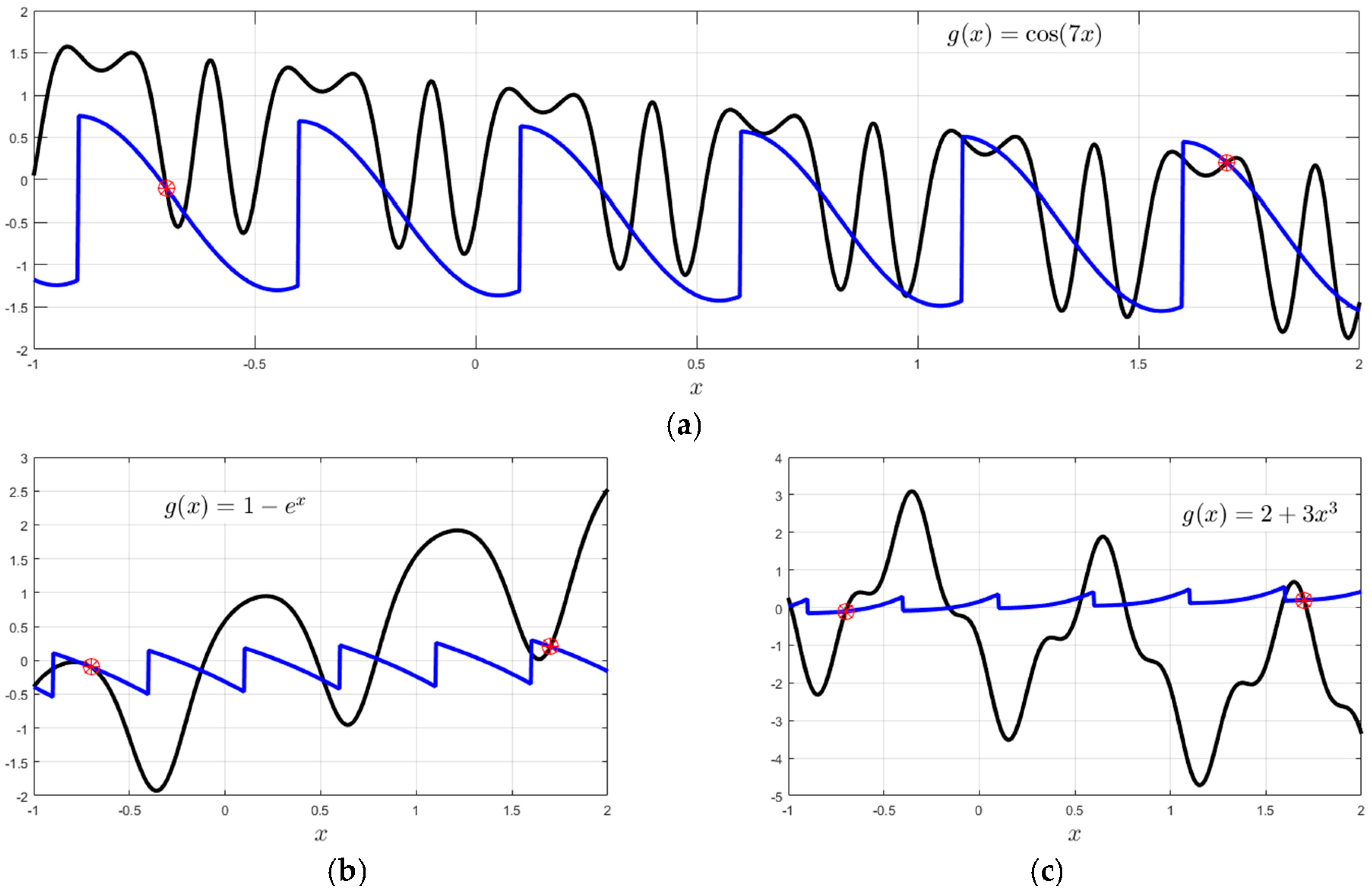

For instance, using two points (indicated by red markers in all three plots of Figure 2), we can obtain the continuous (black curves) and the discontinuous (blue curves) periodic interpolating functions for three different choices of the free function. These plots clearly show how the periodicity is over a line.

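Using $n$ points and the general Waring interpolation form, continuous or discontinuous periodic functions over polynomials of degree $n - 1$,

$$y_c(x) = g_c(x) + \sum_{k=1}^{n} \left[y_k - g_c(x_k)\right] \prod_{\substack{j=1 \\ j \neq k}}^{n} \frac{x - x_j}{x_k - x_j} \qquad \text{and} \qquad y_d(x) = g_d(x) + \sum_{k=1}^{n} \left[y_k - g_d(x_k)\right] \prod_{\substack{j=1 \\ j \neq k}}^{n} \frac{x - x_j}{x_k - x_j},$$

can be obtained, where $g_c(x)$ and $g_d(x)$ are the continuous and discontinuous periodic terms of Equation (32), and their $n$ values $g_c(x_k)$ and $g_d(x_k)$ are computed using Equation (32), respectively.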
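Finally, a Python sketch of the $n$-point periodic construction, assuming the periodic term is $G(x) = g(\sin(2\pi(x - a)/T))$ for the continuous case and $G(x) = g(\mathrm{mod}(x - b, T))$ for the discontinuous case. $G$ replaces $g(x)$ in the generalized Waring form, so that $y(x)$ minus a polynomial of degree $n - 1$ is $T$-periodic. All data, helper names, and function choices are hypothetical.

```python
import math

T, a, b = 1.5, 0.2, 0.0                      # hypothetical period and shifts
pts = [(0.0, 1.0), (1.0, -0.5), (2.5, 2.0)]  # n = 3 hypothetical points


def g(u):
    return math.cos(3.0 * u) + u * u          # arbitrary free function


def G_cont(x):
    return g(math.sin(2.0 * math.pi * (x - a) / T))  # continuous, period T


def G_disc(x):
    return g((x - b) % T)                     # discontinuous, period T


def basis(k, x):
    """k-th Waring basis polynomial for the nodes in pts."""
    out = 1.0
    for j, (xj, _) in enumerate(pts):
        if j != k:
            out *= (x - xj) / (pts[k][0] - xj)
    return out


def polynomial_part(G, x):
    """Degree-(n-1) polynomial sum_k [y_k - G(x_k)] L_k(x)."""
    return sum((yk - G(xk)) * basis(k, x) for k, (xk, yk) in enumerate(pts))


def y(G, x):
    """Periodic-over-polynomial interpolant: G(x) + polynomial part."""
    return G(x) + polynomial_part(G, x)


for G in (G_cont, G_disc):
    print([y(G, xk) for xk, _ in pts])  # reproduces the y_k values up to rounding
```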

8. Conclusions

This study shows how to derive analytical constrained expressions representing all functions subject to a set of assigned linear constraints. These expressions are not unique and are defined in terms of a new function, $g(x)$, and its derivatives, evaluated at the constraints’ coordinates. Constrained expressions can be introduced for relative constraints as well as for a set of linear constraints expressed as linear combinations of functions and derivatives specified at some coordinates. Finally, the theory has been applied to periodic functions, continuous (using periodic functions) and discontinuous (using modular arithmetic), with an assigned period and required to pass through a set of $n$ points.

Applications of the theory presented will be the subject of many future papers. Potential applications include solving differential equations, optimization, optimal control, path planning, calculus of variations, and other scientific problems whose solution is to find functions subject to linear constraints and satisfying some optimality criteria. This theory provides expressions with embedded linear constraints, so that the constraint conditions are removed from the mathematical problem. They can be used to restrict the search space to just the functions satisfying the problem constraints. An application already developed using these constrained expressions is solving linear nonhomogeneous differential equations with nonconstant coefficients for initial, boundary, and mixed value problems. Ref. [6] shows how least-squares solutions can be obtained for these problems using constrained expressions.

A natural extension of this theory, currently in progress, is to develop a future “Theory of Connections” for functions and differential equations (instead of points). This theory would describe all possible morphings from one function to another and from one differential equation to another. An important application of this kind of transformation is modelling phase and property transitions (laminar/turbulent flows, subsonic/supersonic regimes, properties of materials at different scales, etc.), with the goal of finding the best transformation that fits experimental data.