Modified Potra–Pták Multi-step Schemes with Accelerated Order of Convergence for Solving Systems of Nonlinear Equations

Abstract

1. Introduction

2. The Method and Analysis of Convergence
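The title names the Potra–Pták scheme as the starting point of the paper. For orientation only, the following is a minimal NumPy sketch of the classical third-order Potra–Pták iteration for a system F(x) = 0. It is not the modified multi-step scheme of this paper, whose steps are not given in this excerpt. The function name `potra_ptak`, its stopping rule, and the test system `F`, `J` are hypothetical choices made for illustration.

```python
import numpy as np


def potra_ptak(F, J, x0, tol=1e-12, max_iter=50):
    """Classical third-order Potra-Ptak iteration for F(x) = 0.

    Each step evaluates the Jacobian once at x_k and reuses it:
        y_k     = x_k - J(x_k)^{-1} F(x_k)
        x_{k+1} = y_k - J(x_k)^{-1} F(y_k)
    Returns the final approximation and the list of all iterates.
    """
    x = np.asarray(x0, dtype=float)
    iterates = [x.copy()]
    for _ in range(max_iter):
        Jx = J(x)  # frozen Jacobian shared by both sub-steps
        # np.linalg.solve refactorizes Jx on every call; a production code
        # would factor Jx once (e.g. with an LU decomposition) and reuse it.
        y = x - np.linalg.solve(Jx, F(x))      # Newton predictor
        x_new = y - np.linalg.solve(Jx, F(y))  # corrector with the same Jacobian
        iterates.append(x_new.copy())
        if np.linalg.norm(x_new - x) < tol:    # hypothetical stopping rule
            return x_new, iterates
        x = x_new
    return x, iterates


# Hypothetical test system: unit circle intersected with the line x1 = x2.
def F(x):
    return np.array([x[0] ** 2 + x[1] ** 2 - 1.0, x[0] - x[1]])


def J(x):
    return np.array([[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]])


root, iterates = potra_ptak(F, J, [1.0, 0.5])
print(root, len(iterates) - 1)
```

Both sub-steps share the Jacobian J(x_k), so each iteration costs one Jacobian evaluation, two evaluations of F, and two linear solves with the same matrix. This frozen-Jacobian reuse is the feature that multi-step variants of such schemes typically build on, since extra steps add F evaluations and solves but no new Jacobian.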

2.1. Multi-step Method with Order

3. Computational Efficiency

3.1. Comparison among the Efficiencies

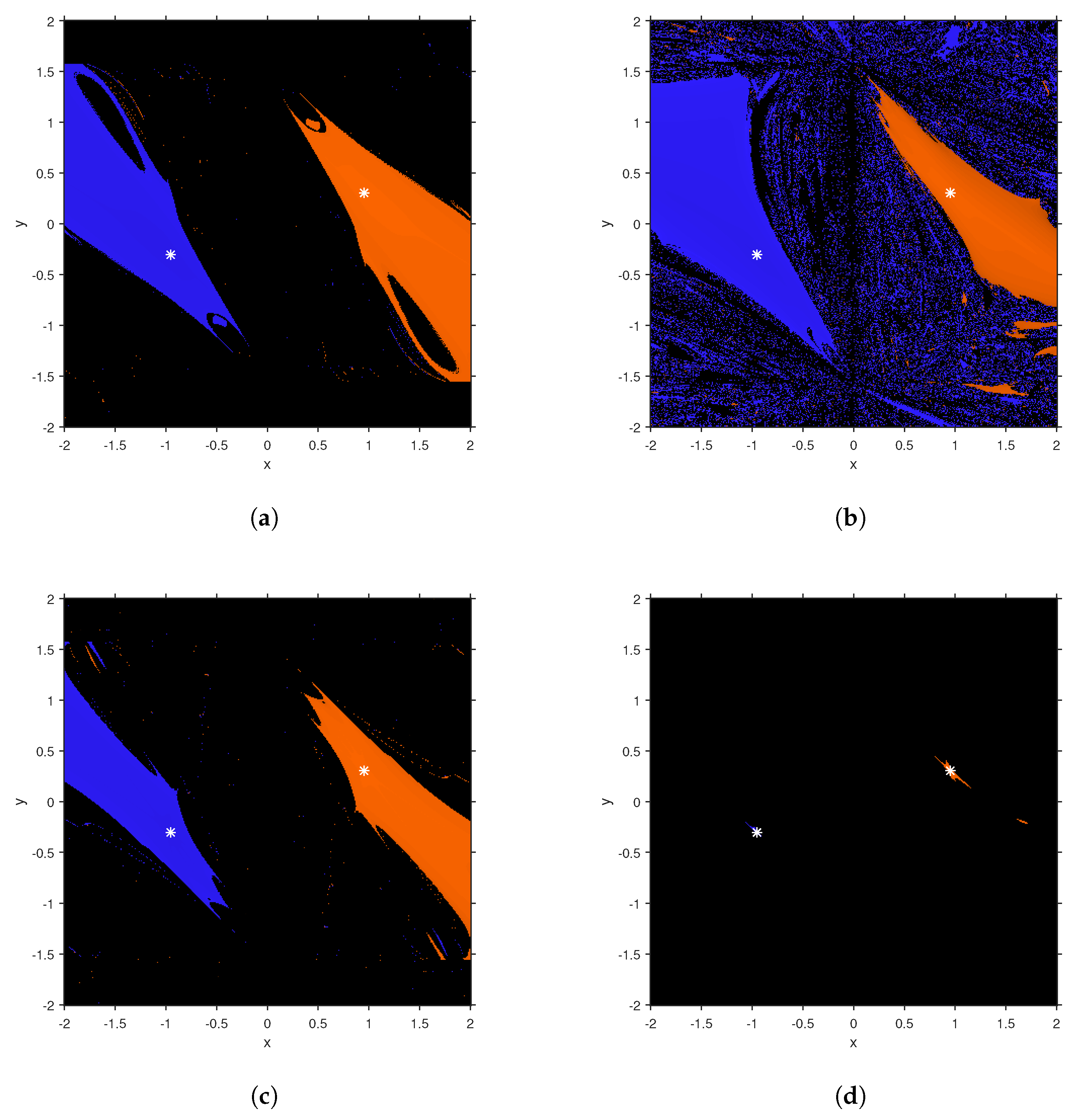

4. Numerical Results

4.1. Example 1

4.2. Example 2

4.3. Example 3

4.4. Example 4

4.5. Example 5

5. Concluding Remarks

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Method | H | H | H | H | H |
|---|---|---|---|---|---|
| ACOC | 5.5833 | 6.0210 | - | - | 6.2081 |
| iter | 3 | 3 | 4 | 4 | 3 |
| CPU time (seconds) | 0.025 | 0.024 | 0.028 | 0.026 | 0.022 |
| ACOC | 3.1024 | 2.1869 | - | - | 6.0462 |
| iter | 3 | 3 | 4 | 4 | 3 |
| CPU time (seconds) | 0.042 | 0.042 | 0.043 | 0.042 | 0.044 |

| Method | H | H | H | H | H |
|---|---|---|---|---|---|
| ACOC | 5.9898 | 5.9078 | 5.9248 | 5.9442 | 8.4359 |
| iter | 3 | 3 | 3 | 3 | 3 |
| CPU time (seconds) | 0.031 | 0.028 | 0.030 | 0.028 | 0.029 |
| ACOC | 4.3931 | 2.0177 | 5.8962 | 5.9425 | 7.0463 |
| iter | 3 | 3 | 3 | 3 | 3 |
| CPU time (seconds) | 0.060 | 0.058 | 0.058 | 0.061 | 0.059 |

| Method | H | H | H | H | H |
|---|---|---|---|---|---|
| ACOC | 3.0100 | 2.0107 | 5.6132 | 5.8724 | 5.2651 |
| iter | 3 | 3 | 3 | 3 | 3 |
| CPU time (seconds) | 0.035 | 0.036 | 0.035 | 0.035 | 0.035 |
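The tables report, for each method, the ACOC, the number of iterations (iter) and the CPU time. Assuming the usual definition of the approximated computational order of convergence used in this literature (the definition itself is not stated in this excerpt), the ACOC can be estimated from the last four iterates as in the sketch below. The function name `acoc` and the synthetic test sequence are hypothetical.

```python
import math

import numpy as np


def acoc(iterates):
    """Approximated computational order of convergence (ACOC).

    Uses the last four iterates x_{k-2}, x_{k-1}, x_k, x_{k+1}:
        ACOC = ln(||x_{k+1} - x_k|| / ||x_k - x_{k-1}||)
             / ln(||x_k - x_{k-1}|| / ||x_{k-1} - x_{k-2}||)
    """
    if len(iterates) < 4:
        raise ValueError("ACOC needs at least four iterates")
    x = [np.atleast_1d(np.asarray(v, dtype=float)) for v in iterates[-4:]]
    d_new = np.linalg.norm(x[3] - x[2])
    d_mid = np.linalg.norm(x[2] - x[1])
    d_old = np.linalg.norm(x[1] - x[0])
    # A zero step (stagnation at machine precision) or two equal consecutive
    # steps makes one of the logarithms zero or undefined.
    if min(d_new, d_mid, d_old) == 0.0 or d_mid == d_old:
        raise ValueError("ACOC is undefined for these iterates")
    return math.log(d_new / d_mid) / math.log(d_mid / d_old)


# Synthetic, quadratically convergent sequence (errors 1e-2, 1e-4, 1e-8, 1e-16).
seq = [[1e-2, 0.0], [1e-4, 0.0], [1e-8, 0.0], [1e-16, 0.0]]
print(acoc(seq))
```

For the synthetic quadratic sequence the estimate comes out close to 2. Because the estimate uses differences of consecutive iterates rather than the unknown exact solution, it only approximates the theoretical order, and it breaks down once the steps reach machine precision.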


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Arora, H.; Torregrosa, J.R.; Cordero, A. Modified Potra–Pták Multi-step Schemes with Accelerated Order of Convergence for Solving Systems of Nonlinear Equations. Math. Comput. Appl. 2019, 24, 3. https://doi.org/10.3390/mca24010003
