Effects of Action Observation Plus Motor Imagery Administered by Immersive Virtual Reality on Hand Dexterity in Healthy Subjects

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

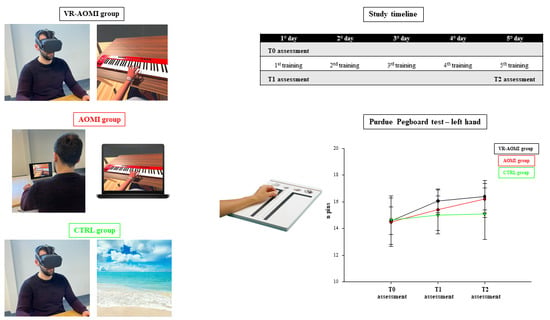

2.2. Intervention

2.3. Study Design, Randomization, and Enrollment

2.4. Functional and Kinematic Assessment

2.5. Statistical Analysis

3. Results

3.1. The Effects of AOMI Applied through VR on Manual Dexterity Assessed with the Purdue Pegboard Test

3.2. The Effects of AOMI Applied through VR on Kinematic Assessment during the Nine-Hole Peg Test

3.3. The Effects of AOMI Applied through VR on Kinematic Assessment during the Finger Tapping Test

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| VR | Virtual reality |
| AOMI | Action observation and motor imagery |
| CTRL | Control |
| MNS | Mirror Neuron System |
| KVIQ | Kinesthetic and Visual Imagery Questionnaire |
| NHPT | Nine Hole Peg Test |
| PPT | Purdue Pegboard Test |
| FTT | Finger Tapping Test |
| T0 | Baseline assessment |
| T1 | Assessment after the first treatment |
| T2 | Assessment at the end of the treatment period |

| | VR-AOMI Group (n = 15) | AOMI Group (n = 15) | CTRL Group (n = 15) | p-Value |
|---|---|---|---|---|
| Age (years) | 24.06 ± 3.1 | 23.53 ± 3.24 | 22.53 ± 1.06 | 0.32 |
| Weight (kg) | 67.43 ± 10.82 | 66.06 ± 12.52 | 69.53 ± 8.71 | 0.67 |
| Height (cm) | 176.53 ± 6.76 | 172.6 ± 11.09 | 172.73 ± 6.96 | 0.39 |
| Gender | 11M/4F (27%F) | 9M/6F (40%F) | 10M/5F (33%F) | 0.74 |
| KVIQ | 36.6 ± 7.22 | 40.87 ± 6.42 | 38.27 ± 8.13 | 0.35 |

(A)

| VR-AOMI | T0 | T1 | T2 | d (CI95) T1–T0 | d (CI95) T2–T0 |
|---|---|---|---|---|---|
| R task | 15.49 ± 1.57 | 16.60 ± 1.14 | 17.17 ± 1.57 | 1.03 (0.39, 1.65) | 1.51 (0.75, 2.52) |
| L task | 14.58 ± 1.03 | 16.06 ± 0.85 | 16.40 ± 0.99 | 2.37 (1.53, 3.36) | 1.84 (0.99, 2.67) |
| B task | 12.04 ± 1.22 | 12.71 ± 1.09 | 13.35 ± 1.38 | 0.75 (0.16, 1.31) | 1.79 (0.95, 2.61) |
| R + L + B task | 42.62 ± 2.94 | 45.42 ± 2.82 | 46.98 ± 3.80 | 1.75 (0.92, 2.56) | 2.07 (1.15, 2.97) |
| Assembly task | 40.86 ± 5.02 | 44.55 ± 4.94 | 46.86 ± 5.70 | 1.86 (0.99, 2.69) | 2.73 (1.60, 3.85) |

(B)

| AOMI | T0 | T1 | T2 | d (CI95) T1–T0 | d (CI95) T2–T0 |
|---|---|---|---|---|---|
| R task | 15.53 ± 1.33 | 16.51 ± 1.53 | 17.13 ± 1.67 | 0.98 (0.34, 1.58) | 1.51 (0.74, 2.42) |
| L task | 14.49 ± 1.83 | 15.42 ± 1.55 | 16.22 ± 1.38 | 1.35 (0.63, 2.05) | 1.70 (0.89, 2.50) |
| B task | 12.18 ± 1.37 | 12.77 ± 1.50 | 13.13 ± 1.41 | 0.84 (0.24, 1.42) | 1.09 (0.43, 1.72) |
| R + L + B task | 42.33 ± 3.94 | 44.29 ± 3.90 | 46.46 ± 3.56 | 1.38 (0.65, 2.08) | 1.91 (1.03, 2.76) |
| Assembly task | 43.86 ± 5.91 | 46.15 ± 5.58 | 48.40 ± 4.68 | 0.62 (0.06, 1.17) | 1.11 (0.45, 1.75) |

(C)

| CTRL | T0 | T1 | T2 | d (CI95) T1–T0 | d (CI95) T2–T0 |
|---|---|---|---|---|---|
| R task | 16.00 ± 1.58 | 16.10 ± 1.57 | 16.22 ± 2.00 | 0.1 (−0.41, 0.60) | 0.22 (−0.30, 0.73) |
| L task | 14.64 ± 1.81 | 15.02 ± 1.43 | 15.11 ± 1.92 | 0.52 (−0.3, 1.05) | 0.50 (−0.04, 1.04) |
| B task | 12.11 ± 1.58 | 12.24 ± 1.40 | 12.80 ± 1.77 | 0.16 (−0.35, 0.67) | 1.18 (0.54, 1.84) |
| R + L + B task | 42.95 ± 4.73 | 43.15 ± 4.18 | 44.11 ± 5.42 | 0.09 (−0.42, 0.60) | 0.72 (0.14, 1.28) |
| Assembly task | 45.09 ± 5.77 | 46.78 ± 5.69 | 47.78 ± 6.53 | 0.90 (0.28, 1.49) | 1.53 (0.76, 2.28) |

| Task | ΔT1–T0 Cohen’s d (CI95): VR-AOMI/AOMI | ΔT1–T0 Cohen’s d (CI95): VR-AOMI/CTRL | ΔT1–T0 Cohen’s d (CI95): AOMI/CTRL | ΔT2–T0 Cohen’s d (CI95): VR-AOMI/AOMI | ΔT2–T0 Cohen’s d (CI95): VR-AOMI/CTRL | ΔT2–T0 Cohen’s d (CI95): AOMI/CTRL |
|---|---|---|---|---|---|---|
| R task | 0.13 (−0.59–0.84) | 0.90 (0.14–1.65) | 0.81 (0.06–1.55) | 0.08 (−0.63–0.80) | 1.39 (0.58–2.19) | 1.35 (0.54–2.13) |
| L task | 0.84 (0.09–1.58) | 1.64 (0.79–2.46) | 0.79 (0.04–1.52) | 0.09 (−0.63–0.80) | 1.42 (0.60–2.21) | 1.31 (0.50–2.09) |
| B task | 0.08 (−0.63–0.80) | 0.62 (−0.12–1.35) | 0.60 (−0.14–1.33) | 0.44 (−0.29–1.16) | 0.94 (0.18–1.69) | 0.36 (−0.37–1.08) |
| R + L + B task | 0.18 (−0.54–0.90) | 1.38 (0.57–2.17) | 1.16 (0.38–1.93) | 0.09 (−0.62–0.81) | 1.71 (0.85–2.54) | 1.57 (0.73–2.38) |
| Assembly task | 0.48 (−0.26–1.20) | 1.03 (0.26–1.79) | 0.21 (−0.51–0.92) | 0.45 (−0.28–1.17) | 1.67 (0.82–2.49) | 0.59 (−0.15–1.32) |
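The table above reports between-group effect sizes as Cohen's d on change scores (ΔT1–T0 and ΔT2–T0). As a reading aid only, the following minimal Python sketch shows one common way to compute Cohen's d for two independent groups, using the pooled standard deviation. The function name `cohens_d_independent` and the change-score values are hypothetical and are not taken from the study data. The exact estimator and confidence-interval method used by the authors are not stated in this section, so this may differ from their procedure.

```python
import math
from statistics import mean, variance


def cohens_d_independent(a, b):
    """Cohen's d for two independent samples, using the pooled standard deviation."""
    n_a, n_b = len(a), len(b)
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(a) + (n_b - 1) * variance(b)) / (n_a + n_b - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)


# Hypothetical change scores (e.g., pegs placed at T1 minus T0); NOT study data.
group_a_change = [2.0, 3.0, 4.0, 3.0, 3.0]
group_b_change = [1.0, 2.0, 1.0, 2.0, 1.5]

d = cohens_d_independent(group_a_change, group_b_change)
print(f"Cohen's d = {d:.2f}")
```

A positive d here means the first group improved more than the second. A confidence interval that excludes zero, as in several VR-AOMI/CTRL cells of the table, is usually read as evidence of a between-group difference.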

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Adamo, P.; Longhi, G.; Temporiti, F.; Marino, G.; Scalona, E.; Fabbri-Destro, M.; Avanzini, P.; Gatti, R. Effects of Action Observation Plus Motor Imagery Administered by Immersive Virtual Reality on Hand Dexterity in Healthy Subjects. Bioengineering 2024, 11, 398. https://doi.org/10.3390/bioengineering11040398
