Prediction of Biochar Yield and Specific Surface Area Based on Integrated Learning Algorithm

Abstract

1. Introduction

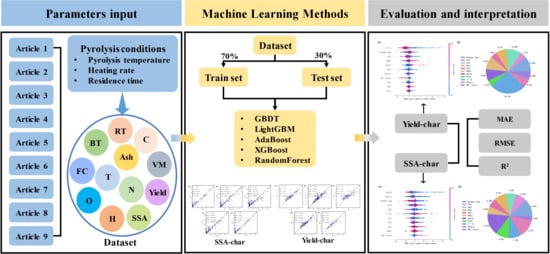

2. Materials and Methods

2.1. Dataset Collection

2.2. Dataset Preprocessing

2.3. Standardization of Datasets

2.4. Machine Learning Models

2.4.1. RandomForest

2.4.2. AdaBoost

2.4.3. XGBoost

2.4.4. GBDT

2.4.5. LightGBM

2.5. Metrics for Machine Learning Model Evaluation

2.6. Feature Importance Analysis for Machine Learning Models

3. Results

3.1. Statistical Analysis of Sample Data

3.2. Model Prediction

3.2.1. Comparison of Machine Learning Models for Yield Prediction

3.2.2. Comparison of Machine Learning Models for Surface Area Prediction

3.3. Machine Learning Models Explained

3.3.1. Explanation of the Yield Prediction Model for Biochar

3.3.2. Explanation of the Surface Area Prediction Model for Biochar

3.4. Comparison with Previous Work

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Joselin Herbert, G.M.; Unni Krishnan, A. Quantifying environmental performance of biomass energy. Renew. Sustain. Energy Rev. 2016, 59, 292–308.

- Lee, S.H.; Lum, W.C.; Boon, J.G.; Kristak, L.; Antov, P.; Pędzik, M.; Rogoziński, T.; Taghiyari, H.R.; Lubis, M.A.R.; Fatriasari, W.; et al. Particleboard from agricultural biomass and recycled wood waste: A review. J. Mater. Res. Technol. 2022, 20, 4630–4658.

- Zhao, L.; Wang, Z.; Ren, H.Y.; Chen, C.; Nan, J.; Cao, G.L.; Yang, S.S.; Ren, N.Q. Residue cornstalk derived biochar promotes direct bio-hydrogen production from anaerobic fermentation of cornstalk. Bioresour. Technol. 2021, 320, 124338.

- Liang, Y.; Wang, Y.; Ding, N.; Liang, L.; Zhao, S.; Yin, D.; Cheng, Y.; Wang, C.; Wang, L. Preparation and hydrogen storage performance of poplar sawdust biochar with high specific surface area. Ind. Crops Prod. 2023, 200, 116788.

- Nguyen, Q.A.; Smith, W.A.; Wahlen, B.D.; Wendt, L.M. Total and Sustainable Utilization of Biomass Resources: A Perspective. Front. Bioeng. Biotechnol. 2020, 8, 546.

- Chen, D.; Yin, L.; Wang, H.; He, P. Pyrolysis technologies for municipal solid waste: A review. Waste Manag. 2014, 34, 2466–2486.

- Leng, L.; Huang, H.; Li, H.; Li, J.; Zhou, W. Biochar stability assessment methods: A review. Sci. Total Environ. 2019, 647, 210–222.

- Xiang, W.; Zhang, X.; Chen, J.; Zou, W.; He, F.; Hu, X.; Tsang, D.C.W.; Ok, Y.S.; Gao, B. Biochar technology in wastewater treatment: A critical review. Chemosphere 2020, 252, 126539.

- Lee, D.J.; Cheng, Y.L.; Wong, R.J.; Wang, X.D. Adsorption removal of natural organic matters in waters using biochar. Bioresour. Technol. 2018, 260, 413–416.

- Lyu, H.; Tang, J.; Huang, Y.; Gai, L.; Zeng, E.Y.; Liber, K.; Gong, Y. Removal of hexavalent chromium from aqueous solutions by a novel biochar supported nanoscale iron sulfide composite. Chem. Eng. J. 2017, 322, 516–524.

- Azeem, M.; Hassan, T.U.; Tahir, M.I.; Ali, A.; Jeyasundar, P.G.S.A.; Hussain, Q.; Bashir, S.; Mehmood, S.; Zhang, Z. Tea leaves biochar as a carrier of Bacillus cereus improves the soil function and crop productivity. Appl. Soil Ecol. 2021, 157, 103732.

- Bolan, N.; Hoang, S.A.; Beiyuan, J.; Gupta, S.; Hou, D.; Karakoti, A.; Joseph, S.; Jung, S.; Kim, K.-H.; Kirkham, M.B.; et al. Multifunctional applications of biochar beyond carbon storage. Int. Mater. Rev. 2021, 67, 150–200.

- Leng, L.; Xiong, Q.; Yang, L.; Li, H.; Zhou, Y.; Zhang, W.; Jiang, S.; Li, H.; Huang, H. An overview on engineering the surface area and porosity of biochar. Sci. Total Environ. 2021, 763, 144204.

- Choi, M.K.; Park, H.C.; Choi, H.S. Comprehensive evaluation of various pyrolysis reaction mechanisms for pyrolysis process simulation. Chem. Eng. Process.-Process. Intensif. 2018, 130, 19–35.

- Di Blasi, C. Modeling chemical and physical processes of wood and biomass pyrolysis. Prog. Energy Combust. Sci. 2008, 34, 47–90.

- Zhu, X.; Li, Y.; Wang, X. Machine learning prediction of biochar yield and carbon contents in biochar based on biomass characteristics and pyrolysis conditions. Bioresour. Technol. 2019, 288, 121527.

- Cao, H.; Xin, Y.; Yuan, Q. Prediction of biochar yield from cattle manure pyrolysis via least squares support vector machine intelligent approach. Bioresour. Technol. 2016, 202, 158–164.

- Muhammad Saleem, I.A. Machine Learning Based Prediction of Pyrolytic Conversion for Red Sea Seaweed. In Proceedings of the Budapest 2017 International Conferences LEBCSR-17, ALHSSS-17, BCES-17, AET-17, CBMPS-17 & SACCEE-17, Budapest, Hungary, 6–7 September 2017.

- Tripathi, M.; Sahu, J.N.; Ganesan, P. Effect of process parameters on production of biochar from biomass waste through pyrolysis: A review. Renew. Sustain. Energy Rev. 2016, 55, 467–481.

- Paula, A.J.; Ferreira, O.P.; Souza Filho, A.G.; Filho, F.N.; Andrade, C.E.; Faria, A.F. Machine Learning and Natural Language Processing Enable a Data-Oriented Experimental Design Approach for Producing Biochar and Hydrochar from Biomass. Chem. Mater. 2022, 34, 979–990.

- Ganaie, M.A.; Hu, M.; Malik, A.K.; Tanveer, M.; Suganthan, P.N. Ensemble deep learning: A review. Eng. Appl. Artif. Intell. 2022, 115, 105151.

- Yang, Y.; Lv, H.; Chen, N. A Survey on ensemble learning under the era of deep learning. Artif. Intell. Rev. 2022, 56, 5545–5589.

- Srungavarapu, C.S.; Sheik, A.G.; Tejaswini, E.S.S.; Mohammed Yousuf, S.; Ambati, S.R. An integrated machine learning framework for effluent quality prediction in Sewage Treatment Units. Urban Water J. 2023, 20, 487–497.

- Tsai, W.; Wang, S.; Chang, C.; Chien, S.; Sun, H. Cleaner production of carbon adsorbents by utilizing agricultural waste corn cob. Resour. Conserv. Recycl. 2001, 32, 43–53.

- Emmanuel, T.; Maupong, T.; Mpoeleng, D.; Semong, T.; Mphago, B.; Tabona, O. A survey on missing data in machine learning. J. Big Data 2021, 8, 140.

- Were, K.; Bui, D.T.; Dick, Ø.B.; Singh, B.R. A comparative assessment of support vector regression, artificial neural networks, and random forests for predicting and mapping soil organic carbon stocks across an Afromontane landscape. Ecol. Indic. 2015, 52, 394–403.

- Sun, Y.; Zheng, W.; Zhang, L.; Zhao, H.; Li, X.; Zhang, C.; Ma, W.; Tian, D.; Yu, K.H.; Xiao, S.; et al. Quantifying the Impacts of Pre- and Post-Conception TSH Levels on Birth Outcomes: An Examination of Different Machine Learning Models. Front. Endocrinol. 2021, 12, 755364.

- Zhu, X.; Wan, Z.; Tsang, D.C.W.; He, M.; Hou, D.; Su, Z.; Shang, J. Machine learning for the selection of carbon-based materials for tetracycline and sulfamethoxazole adsorption. Chem. Eng. J. 2021, 406, 126782.

- Morales-Hernández, A.; Van Nieuwenhuyse, I.; Rojas Gonzalez, S. A survey on multi-objective hyperparameter optimization algorithms for machine learning. Artif. Intell. Rev. 2022, 56, 8043–8093.

- Grimm, K.J.; Mazza, G.L.; Davoudzadeh, P. Model Selection in Finite Mixture Models: A k-Fold Cross-Validation Approach. Struct. Equ. Model. A Multidiscip. J. 2016, 24, 246–256.

- Hoarau, A.; Martin, A.; Dubois, J.-C.; Le Gall, Y. Evidential Random Forests. Expert Syst. Appl. 2023, 230, 120652.

- Tirink, C.; Piwczynski, D.; Kolenda, M.; Onder, H. Estimation of Body Weight Based on Biometric Measurements by Using Random Forest Regression, Support Vector Regression and CART Algorithms. Animals 2023, 13, 798.

- Zhao, T.; Liu, S.; Xu, J.; He, H.; Wang, D.; Horton, R.; Liu, G. Comparative analysis of seven machine learning algorithms and five empirical models to estimate soil thermal conductivity. Agric. For. Meteorol. 2022, 323, 109080.

- Barrow, D.K.; Crone, S.F. A comparison of AdaBoost algorithms for time series forecast combination. Int. J. Forecast. 2016, 32, 1103–1119.

- Dong, J.; Chen, Y.; Yao, B.; Zhang, X.; Zeng, N. A neural network boosting regression model based on XGBoost. Appl. Soft Comput. 2022, 125, 109067.

- Tasneem, S.; Ageeli, A.A.; Alamier, W.M.; Hasan, N.; Safaei, M.R. Organic catalysts for hydrogen production from noodle wastewater: Machine learning and deep learning-based analysis. Int. J. Hydrogen Energy 2023, 52, 599–616.

- Pathy, A.; Meher, S.P.B. Predicting algal biochar yield using eXtreme Gradient Boosting (XGB) algorithm of machine learning methods. Algal Res. 2020, 50, 102006.

- Shehadeh, A.; Alshboul, O.; Al Mamlook, R.E.; Hamedat, O. Machine learning models for predicting the residual value of heavy construction equipment: An evaluation of modified decision tree, LightGBM, and XGBoost regression. Autom. Constr. 2021, 129, 103827.

- Wu, Y.; Zhou, Y. Hybrid machine learning model and Shapley additive explanations for compressive strength of sustainable concrete. Constr. Build. Mater. 2022, 330, 127298.

- Fahmi, R.; Bridgwater, A.V.; Donnison, I.; Yates, N.; Jones, J.M. The effect of lignin and inorganic species in biomass on pyrolysis oil yields, quality and stability. Fuel 2008, 87, 1230–1240.

- Li, W.; Dang, Q.; Brown, R.C.; Laird, D.; Wright, M.M. The impacts of biomass properties on pyrolysis yields, economic and environmental performance of the pyrolysis-bioenergy-biochar platform to carbon negative energy. Bioresour. Technol. 2017, 241, 959–968.

- Xu, S.; Chen, J.; Peng, H.; Leng, S.; Li, H.; Qu, W.; Hu, Y.; Li, H.; Jiang, S.; Zhou, W.; et al. Effect of biomass type and pyrolysis temperature on nitrogen in biochar, and the comparison with hydrochar. Fuel 2021, 291, 120128.

- Angin, D. Effect of pyrolysis temperature and heating rate on biochar obtained from pyrolysis of safflower seed press cake. Bioresour. Technol. 2013, 128, 593–597.

- Bridgwater, A.V. Review of fast pyrolysis of biomass and product upgrading. Biomass Bioenergy 2012, 38, 68–94.

- Alabdrabalnabi, A.; Gautam, R.; Mani Sarathy, S. Machine learning to predict biochar and bio-oil yields from co-pyrolysis of biomass and plastics. Fuel 2022, 328, 125303.

- Li, H.; Ai, Z.; Yang, L.; Zhang, W.; Yang, Z.; Peng, H.; Leng, L. Machine learning assisted predicting and engineering specific surface area and total pore volume of biochar. Bioresour. Technol. 2023, 369, 128417.

- Li, L.; Zhao, Y.; Yu, H.; Wang, Z.; Zhao, Y.; Jiang, M. An XGBoost Algorithm Based on Molecular Structure and Molecular Specificity Parameters for Predicting Gas Adsorption. Langmuir 2023, 39, 6756–6766.

| Statistic | C | H | O | N | VM | Ash | FC | T | RT | HR | Yield-Char | SSA-Char |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| count | 593 | 593 | 593 | 577 | 521 | 597 | 506 | 622 | 622 | 617 | 474 | 348 |
| mean | 48.44 | 6.41 | 1.92 | 40.93 | 76.33 | 7.75 | 21.55 | 476.22 | 69.23 | 19.95 | 38.97 | 80.15 |
| std | 9.84 | 1.37 | 3.98 | 9.17 | 9.01 | 7.72 | 58.13 | 147.25 | 70.65 | 38.75 | 14.75 | 112.08 |
| min | 4.80 | 3.42 | 0 | 0.87 | 27.62 | 0.16 | 3.37 | 30 | 1 | 1 | 9.17 | 0.02 |
| 25% | 43.92 | 5.81 | 0.49 | 39.37 | 72.95 | 2.42 | 11.25 | 356.25 | 30 | 10 | 28.52 | 4.97 |
| 50% | 47.75 | 6.19 | 1.07 | 42.54 | 77.75 | 6.04 | 16.49 | 500 | 60 | 10 | 35.77 | 25.63 |
| 75% | 51.01 | 6.70 | 1.89 | 45.51 | 82.38 | 9.86 | 20.09 | 600 | 60 | 18 | 47.04 | 98.33 |
| max | 87.62 | 13.67 | 40.41 | 63.34 | 94.16 | 45.54 | 600 | 900 | 480 | 300 | 93.50 | 525.86 |
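
The count row above shows that several columns are incomplete. The following is a minimal sketch, assuming the full dataset holds 622 samples (the largest count in the table), of how the share of missing values per column follows from those counts. The variable names are illustrative and do not come from the study.

```python
# Non-missing counts per column, copied from the "count" row of the table above.
counts = {
    "C": 593, "H": 593, "O": 593, "N": 577, "VM": 521, "Ash": 597,
    "FC": 506, "T": 622, "RT": 622, "HR": 617,
    "Yield-Char": 474, "SSA-Char": 348,
}

# Assumption: the dataset has 622 samples in total (the largest count in the table).
TOTAL_SAMPLES = 622

# Percentage of missing entries per column, rounded to one decimal place.
missing_pct = {
    name: round(100 * (TOTAL_SAMPLES - n) / TOTAL_SAMPLES, 1)
    for name, n in counts.items()
}

# Print columns from most to least incomplete.
for name, pct in sorted(missing_pct.items(), key=lambda item: -item[1]):
    print(f"{name:>10}: {pct:5.1f}% missing")
```

Under this assumption, the two target columns have the most missing entries, which is worth keeping in mind when comparing the yield and surface area models.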

| Yield-Char (%) | Train MSE | Train RMSE | Train R² | Test MSE | Test RMSE | Test R² |
|---|---|---|---|---|---|---|
| GBDT | 23.05 | 4.80 | 0.99 | 85.44 | 9.24 | 0.75 |
| LightGBM | 21.52 | 4.64 | 0.99 | 85.83 | 9.26 | 0.75 |
| AdaBoost | 24.63 | 4.96 | 0.96 | 96.78 | 9.84 | 0.72 |
| XGBoost | 22.51 | 4.75 | 0.99 | 70.66 | 8.41 | 0.79 |
| RandomForest | 15.18 | 3.90 | 0.98 | 61.05 | 7.81 | 0.71 |

| SSA-Char (m²/g) | Train MSE | Train RMSE | Train R² | Test MSE | Test RMSE | Test R² |
|---|---|---|---|---|---|---|
| GBDT | 1043.77 | 32.31 | 0.98 | 3285.73 | 57.32 | 0.87 |
| LightGBM | 1352.46 | 36.78 | 0.96 | 3534.53 | 59.45 | 0.90 |
| AdaBoost | 1230.90 | 35.08 | 0.97 | 4320.78 | 65.73 | 0.85 |
| XGBoost | 1031.07 | 32.11 | 0.96 | 2043.97 | 45.21 | 0.92 |
| RandomForest | 1071.65 | 31.90 | 0.97 | 2718.36 | 52.14 | 0.81 |
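
The two tables above report MSE, RMSE, and R² for each model. The following is a minimal sketch of how these three metrics relate, assuming their standard definitions (this extract does not show the formulas the authors used). The function name and the sample values are hypothetical and are not taken from the study's dataset.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return (MSE, RMSE, R2) for two equal-length sequences of numbers."""
    n = len(y_true)
    mean_true = sum(y_true) / n
    # Residual sum of squares: squared prediction errors.
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    # Total sum of squares: spread of the observations around their mean.
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    mse = ss_res / n
    rmse = math.sqrt(mse)
    r2 = 1.0 - ss_res / ss_tot
    return mse, rmse, r2


# Hypothetical biochar yields (%), for illustration only.
observed = [30.0, 35.0, 40.0, 45.0]
predicted = [32.0, 34.0, 41.0, 43.0]

mse, rmse, r2 = regression_metrics(observed, predicted)
print(f"MSE={mse:.2f}  RMSE={rmse:.2f}  R2={r2:.2f}")
```

Because RMSE is the square root of MSE, the two columns can be cross-checked against each other: the XGBoost test-set row for SSA, for example, lists an MSE of 2043.97 and an RMSE of 45.21, which agree.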

| Model | Prediction Target | Dataset Size | Model Performance | Ref. |
|---|---|---|---|---|
| XGBoost | Biochar yield | 94 | R² = 0.96 | [46] |
| Dense neural network | Bio-oil yield | 96 | R² = 0.96 | [46] |
| XGBoost | Biochar yield | 91 | R² = 0.75 | [38] |
| RandomForest | Biochar yield | 245 | R² = 0.85 | [16] |
| RandomForest | SSA | 169 | R² = 0.84 | [47] |
| GBR | SSA | 169 | R² = 0.9 | [47] |
| XGBoost | Biochar yield | 622 | R² = 0.79 | This study |
| XGBoost | SSA | 622 | R² = 0.92 | This study |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, X.; Liu, X.; Sun, L.; Jia, X.; Tian, F.; Liu, Y.; Wu, Z. Prediction of Biochar Yield and Specific Surface Area Based on Integrated Learning Algorithm. C 2024, 10, 10. https://doi.org/10.3390/c10010010
