1. Introduction

Nowadays, we are witnessing an enormous popularity and a literal avalanche of bio-inspired algorithms [1] permeating practically all facets of life. Procedures using artificial intelligence (AI) [2] are being built into a vast number of different systems, including Internet search engines [3], cloud computing systems [4], the Internet of Things [5], autonomous (self-driving) vehicles [6], AI chips in flagship smartphones [7], expert medical systems [8], robots [9], agriculture [10], architectural design [11] and data mining [12], to name just a tiny fraction. AI can chat with humans and even solve problems stated in natural language [13], generate paintings and other artworks from a textual prompt [14], create music [15], translate between languages [16], play very complex games and win them [17], etc. AI artworks have been winning art competitions (and creating controversy in the process) [15]. It is even being asked whether AI can show creativity of its own comparable to that of humans [18]. Many AI functionalities are encountered in ordinary life without our even recognizing them. All of the mentioned applications, and many more, are multiplying exponentially and becoming more powerful and more spectacular. The possibilities, at least currently, appear endless. Concerns have been raised about possible dangers of AI for humanity as a whole, and some jurisdictions have already passed laws restricting the permitted capabilities and uses of artificial intelligence [19].

Scientific breakthroughs behind all of this are nothing short of astounding. Behind each result we see—and behind those that we may not even be aware of—there is an accelerating landslide of publications including at least hundreds of dedicated science journals with a vast number of articles, numerous books and an uncounted number of all possible kinds of intellectual property. Currently, a renaissance of biomimetic computing is in full swing—and it is still spreading, engulfing more and more different areas.

Not all results in the field of biomimetic computing are so spectacularly in the spotlight and followed by hype as those that mimic human behavior or even our creativity. However, maybe the most important achievements are hidden among the results that do not belong to this group. They include handling big data, performing time analysis or performing multi-criteria optimization. Such intelligent algorithms that are mostly “invisible” to the eyes of the general public are causing a silent revolution not only in engineering, physics, chemistry, medicine, healthcare and life sciences, but also in economics, finance, business, cybersecurity, language processing and many more fields.

Our attention in this text is dedicated to bio-inspired optimization algorithms. They are extremely versatile and convenient for complex optimization problems. As a result of such wide applicability, they are present in diverse fields; there are practically no areas of human interest where they do not appear. As an illustration of their ubiquity, we mention here just some selected fields where their applications have been reported. They encompass various branches of engineering, including mechanical engineering (automotive [20,21], aerospace [22], fluid dynamics [23], thermal engineering [24], automation [25], robotics [26], mechatronics [27], MEMS [28,29], etc.), electrical engineering [30] (including power engineering [31], electronics [32], microelectronics and nanoelectronics [33], control engineering [34], renewable energy [35], biomedical engineering [36], telecommunications [36] and signal processing [37]), geometrical optics [38], photonics [39], nanophotonics and nanoplasmonics [40], image processing [41] including pattern recognition [42], computing [30,43], networking (computer networks [44] including the Internet and intranets [45], social networks [46], networks on a chip [47], optical networks [48], cellular (mobile) networks [49], wireless sensor networks [50], the Internet of Things [51], etc.), data clustering and mining [52], civil engineering [53,54], architectural design [55], urban engineering [56], smart cities [57], traffic control and engineering [58], biomedicine and healthcare [59,60], pharmacy [61,62], bioinformatics [63], genomics [64], computational biology [60], environmental pollution control [65] and computational chemistry [66]. Other optimization fields where biomimetic algorithms find application include transportation and logistics [67], industrial production [68], manufacturing including production planning, supply chains, resource allocation and management [69], food production and processing [70], agriculture [71], financial markets [72] including stock market prediction [73], cryptocurrencies and blockchain technology [74], and even such seemingly unlikely fields as language processing and sentiment analysis [75]. The cited applications are just the tip of the iceberg, and a vast number of other uses are not even mentioned here.

According to the 1997 paper by Wolpert and Macready titled “No free lunch theorems for optimization” [76], when performance is averaged over all possible problems, no optimization algorithm outperforms any other; hence, an algorithm that excels in one field will generally not perform as well in others. This means that no single algorithm is the best choice for all problems. Because of that, there is an enormous number of different algorithms and algorithm modifications or improvements that excel in some areas, and some of them even in many, but each has its own peculiarities, advantages and disadvantages. A logical consequence of this situation is the existence of a large number of review papers attempting to sort out the state of affairs among the numerous algorithms. The situation is further complicated by the fact that some metaheuristic algorithms overlap with others, are similar to them, or in some cases are literally identical, differing mainly in name [77]. In addition, some algorithms that were very popular some years ago have fallen out of use, while others have risen to fame. For these reasons, there is a constant need for updated reviews. Another problem is the enormous extent of the field. While excellent, exhaustive and in-depth critical reviews naturally do appear, the majority cover only some particular subjects, out of the sheer impossibility of encompassing everything, and many do not even attempt comprehensive coverage, focusing on assorted bits instead.

To prepare this work, we analyzed 108 review papers and monographs on bio-inspired optimization algorithms (not all of which are cited here), plus numerous research articles containing review sections, each typically a few pages long. We believe that we have created a unique survey that covers a number of topics that none of the above-mentioned sources considered and which, to the best of our knowledge, cannot be found in a single place. In other words, we attempted to offer a synthesis of different subjects that contains updated information and offers as wide an overview as we were able to create. With this work, we tried to write a self-contained and comprehensive text covering the main fields among the multitudinous and often redundant (and in some cases even conflicting) bio-inspired optimization algorithms, in a form accessible to as wide a multidisciplinary scientific audience as possible.

We attempted to include some of the most recent results (published in 2022 or 2023), which could not have been mentioned in the vast majority of previous review papers simply because they did not exist at the time. Obviously, such publications have not been available long enough to allow a confident assessment of their acceptance by the scientific community, so our choice had to be partly subjective. We also took care to include topics that in our opinion are highly important now and whose impact we expect to grow further (for example, multi-objective and hybrid optimization algorithms). At the same time, we strove not to omit older but still significant and widely used methods. We are well aware that in today’s rapidly expanding and branching field of biomimetic AI optimization algorithms we may have overlooked some important sources, but this is almost inevitable in the current environment.

Another contribution of this text is related to the systematization and taxonomy of some topics in the field. There are contradictory reports in the literature on classification and even on some definitions, and we tried to present our point of view on it. We offered some modifications to the classification that we hope could serve at least a bit better than some of those previously published. We also attempted to clarify a few conflicting pieces of information from prior works.

Further, as an example, we dedicated a part of our review to two partially interconnected fields, namely microelectronics and nanophotonics. We have not encountered this combination in a single comprehensive text, let alone one written in this manner. This inclusion is also important because optimization algorithms are rarely part of the typical curricula of researchers in these two fields and are mostly associated with the profiles of mathematicians and computer scientists.

We made efforts to keep the writing style as simple and clear as possible, yet exact and with correct nomenclature. At the same time, we tried to avoid excessive in-depth treatment of any narrowly specialized field. This was done to ensure the usefulness of the manuscript to researchers at different levels, from beginners to experts in the field, as well as to casual readers, i.e., to make it accessible to the widest possible scientific audience. Our hope was that the present work could serve as a user-friendly, one-stop, all-purpose manual and a comprehensive overview of the main points, simplifying navigation through the enormous body of literature, especially for such a multidisciplinary audience as that gathered around the Biomimetics journal.

The landscape of biomimetic optimization algorithms is evolving rapidly, with new advances being introduced daily. This means that any review of the state of the art will necessarily become dated relatively quickly, so it is essential to provide information on existing techniques that is as up to date as possible. The authors are well aware of the enormity of the task and of the inevitable shortcomings and incompleteness that stem from the sheer impossibility of being all-encompassing; nevertheless, this broad survey strives to offer its modest contribution to staying updated, at least for the time being. This work may thus be seen as a partial snapshot of an explosively spreading and evolving field.

The manuscript is structured as follows:

Section 2 presents a possible taxonomy of bio-inspired optimization algorithms and considers the redundancy of some existing procedures. The following sections briefly present some of the most important and best-known ones, such as heuristic procedures (including biology-based metaheuristic algorithms and hyper-heuristics), neural networks and hybrid methods. As an illustration, one section is dedicated to advances in the application of bio-inspired multi-criteria optimization in microelectronics, and another to recent applications in nanooptics and nanophotonics. These are followed by conclusions and an outlook. Because of the relative complexity of the topics presented in this work, an overview of them is shown schematically in Figure 1.

2. A Possible Taxonomy of Bio-Inspired Algorithms

In this section, we present one possible hierarchical classification of bio-inspired algorithms. The consideration has been made without taking into account any specific targeted applications of the algorithms. Generally, taxonomies of bio-inspired algorithms are relatively rarely considered in the literature. The majority of papers simply skip the topic altogether or handle it casually, presenting only the methods that are of immediate interest to the subject of the paper or, even more often, giving only a partial and non-systematic picture and denoting it as a classification. This is not to say that exhaustive and systematic papers on the subject do not exist. However, it appears that no consensus has been reached about the taxonomy of at least some bio-inspired algorithms yet.

Some quality papers dedicated to the topic and published within the last few years include [78,79,80]. In this article, we present our view of the subject, which includes many elements of the previously proposed classifications but also incorporates novel ones, as well as updated information on some approaches proposed within the last few years that could not be included previously, since they were presented after the quoted papers appeared. We do not claim that our taxonomy is general, although we did try to incorporate as much of the available data as we could.

A problem when attempting to define a categorization in this field is that some approaches, although having different names, actually present algorithms very similar or even basically identical to those previously published. Often they offer only incremental advances, such as somewhat better results in benchmarks of precision or computing speed. This is very slippery ground, however, since according to the previously mentioned No Free Lunch Theorem [76], no algorithm is suitable for all purposes: while one of them may offer a fast and accurate solution to one class of optimization problems, there is no guarantee that it will not perform drastically worse on other problems, become stuck in a local optimum without ever reaching the global optimum, or even fail completely to give a meaningful solution. For this reason, it is very difficult to decide which procedures merit inclusion in the classification and which do not.

A number of benchmarks have been proposed to compare different optimization procedures, and most recent publications in the field use them to demonstrate the qualities and advantages of their proposals over competing ones. A systematic review of methods for comparing the performance of different algorithms has been published by Beiranvand, Hare and Lucet [81]. A more recent study of this kind dedicated to metaheuristics has been presented by Halim, Ismail and Das [82], who offered an exhaustive and systematic review of measures for determining the efficiency and the effectiveness of optimization algorithms. A benchmarking process for five global optimization approaches in nanooptics has been described by Schneider et al. [83].

One can find various taxonomy proposals in the literature, each with its own merits and disadvantages.

Figure 2 represents the scheme of a possible classification of bio-inspired optimization methods.

3. Heuristics

Heuristics can be briefly described as problem solving through approximate algorithms. The word stems from the Ancient Greek εὑρίσκω (meaning “to find, to discover”). It includes approaches that are not guaranteed to result in an optimum solution and are actually imperfect, yet are adequate for attaining a “workable” solution, i.e., one good enough to be useful and accurate enough in the majority of cases. On the other hand, they may fail in certain cases or consistently introduce systematic errors in others. The methods used include pragmatic trade-offs, rules of thumb (approximations based on prior knowledge of similar situations), trial and error, the process of elimination, guesswork (“educated guesses”) and acceptable/satisfactory approximations. The main benefit of heuristic approaches is their usually vastly lower computational cost; their main deficiencies are that they are usually problem-dependent (i.e., not generally applicable in all situations), that their accuracy may be quite low in certain cases, and that they inherently offer no way to estimate that accuracy.
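As a minimal sketch of the trade-off described above (our own textbook illustration, not taken from the cited works), consider making change with the rule of thumb “always take the largest coin that still fits”. It is fast, but with the hypothetical coin set {1, 3, 4} it returns a non-optimal answer, and with {3, 4} it can fail outright, whereas an exact dynamic-programming search always finds the minimum at a higher computational cost.

```python
def greedy_coins(amount, denominations):
    """Heuristic: repeatedly take the largest coin that still fits."""
    coins = []
    for d in sorted(denominations, reverse=True):
        while amount >= d:
            amount -= d
            coins.append(d)
    # The heuristic may be unable to pay the amount exactly; signal that with None.
    return coins if amount == 0 else None


def optimal_coin_count(amount, denominations):
    """Exact dynamic programming: minimum number of coins, or None if impossible."""
    INF = float("inf")
    best = [0] + [INF] * amount          # best[a] = fewest coins summing to a
    for a in range(1, amount + 1):
        for d in denominations:
            if d <= a and best[a - d] + 1 < best[a]:
                best[a] = best[a - d] + 1
    return None if best[amount] == INF else best[amount]


print(greedy_coins(6, [1, 3, 4]))        # [4, 1, 1]
print(optimal_coin_count(6, [1, 3, 4]))  # 2  (3 + 3)
print(greedy_coins(6, [3, 4]))           # None: the heuristic fails completely
```

For the amount 6 with coins {1, 3, 4}, the heuristic uses three coins (4 + 1 + 1), while the optimum needs only two (3 + 3).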

Heuristic approaches include common heuristic algorithms, metaheuristic algorithms and hyper-heuristic algorithms. All of these approaches are often considered to be among the foundations of AI.

“Basic” Heuristic Algorithms

The heuristic algorithms represent the oldest approximate approach to optimization problems, from which metaheuristics and hyper-heuristics evolved. They include a number of approximate goal attainment methods. While there is no universally accepted taxonomy of common heuristic algorithms, a possible classification is presented in Table 1. Metaheuristic and hyper-heuristic algorithms are not included in this subsection, since they are covered separately in the next two sections. This is only a short overview, presented for the sake of generality, since the quoted algorithms are mostly unrelated to bio-inspired methods. We do not claim that the table is comprehensive, and some of the quoted methods may overlap to a greater or lesser extent, thus appearing in multiple categories at the same time.

4. Metaheuristics

Metaheuristics represent a conceptual generalization and enhancement of the heuristic approach. The literature does not seem to provide a clear and consistent definition of metaheuristics, and there appears to be no consensus about it; it does, however, offer various descriptions. One of them states that metaheuristic algorithms are iterative global optimization methods that make use of some underlying heuristics by intelligently combining various higher-level strategies for exploring the search space, seeking to avoid local optima and to find an approximate solution for the global optimum [94,95]. These approaches are typically inspired by natural phenomena and mimic them. The phenomena may be, for instance, animal or human collective behavior, physiological processes or plant properties, but they also include non-biological processes such as physical, astrophysical or chemical phenomena and mathematical procedures [96] (these non-biomimetic algorithms are not covered in this review).

The methods in metaheuristics are sometimes denoted as metaphor-based since their naming and design are more or less inspired by actual biological and other processes. The metaheuristics are the best known, most popular and by far most often applied among the heuristic methods, and the papers dealing with them are the most numerous group of publications on nature-based optimization algorithms.

Many new procedures belonging to this group are constantly being proposed, almost on a daily basis. A paper by Ma et al. [97] presented an exhaustive list of more than 500 metaphor-based metaheuristic algorithms and their benchmark basis. While many of the proposed methods simply represent reiterations, or sometimes even literal renamings, of known methods, some newly described approaches do show relevance and usability and introduce new levels of sophistication and performance. As mentioned before in this text, the current tsunami of metaphor-based algorithms has been heavily criticized by some researchers, who argue that the approach is fundamentally flawed and that a new taxonomy should be introduced, since it would expose the essential similarity among many of the newly proposed methods [98].

Swarm intelligence (SI) algorithms (mostly based on the collective behavior of animals) are by far the largest group among metaheuristic procedures and biomimetic computation approaches in general, amounting to about 67.12% of all bio-inspired algorithms. The article [80] calculates that about 49% of all nature-based methods belong to this class; if those of non-biological origin are excluded, a simple recalculation gives a percentage of more than 67%.

4.1. Evolutionary Algorithms (EAs)

The group of evolutionary algorithms (EAs) includes different population-based metaheuristic optimization algorithms. They are inspired by the processes of Darwinian evolution of species, including the procreation of offspring, genetic mutation, recombination and natural selection. They do not depend on the specifics of any concrete optimization problem and are thus applicable to a very wide variety of scenarios. A predefined objective (fitness) function determines the quality of each solution, and the candidate solutions are individuals in the population. Numerous metaheuristic procedures belong to the EA group.

Table 2 shows a few selected basic EAs. It lists the name of the algorithm first, then its standard abbreviation, the names of its proposers or key proponents and the year when the proposal was first presented (or the main popularization event occurred), and finally an important reference related to the topic (either the original publication that proposed the algorithm or a comprehensive survey or review). In the subsections following Table 2, short descriptions of the selected algorithms are given.

4.1.1. Genetic Algorithms (GAs)

Genetic algorithms (GAs) (Holland, 1975) [102] are a class of optimization algorithms belonging to the evolution-based bio-inspired metaheuristics that mimic the Darwinian process of natural selection and genetic evolution.

A GA is a population-based optimization algorithm. The potential solutions to an optimization problem are represented as individuals within a population. Each individual is described by its genotype, i.e., its chromosome, and represents a potential solution to the optimization problem, regardless of the field to which the problem belongs (in our case, microelectronics and nanophotonics). In the classical GA, their data are encoded as strings of binary digits that can be further manipulated and processed.
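The binary representation mentioned above can be illustrated with a small sketch. We assume the common linear mapping of an n-bit string onto a real interval [lo, hi]; the function names `decode` and `encode` are hypothetical, and real-valued or other encodings are equally possible.

```python
def decode(bits: str, lo: float, hi: float) -> float:
    """Map a binary chromosome to a real value in [lo, hi] by linear scaling."""
    n = len(bits)
    integer = int(bits, 2)                      # binary string -> 0 .. 2**n - 1
    return lo + integer * (hi - lo) / (2 ** n - 1)


def encode(value: float, lo: float, hi: float, n: int) -> str:
    """Inverse mapping, rounding to the nearest representable level."""
    level = round((value - lo) / (hi - lo) * (2 ** n - 1))
    return format(level, f"0{n}b")              # zero-padded n-bit string


print(decode("1000", 0.0, 15.0))   # 8.0
print(encode(8.0, 0.0, 15.0, 4))   # 1000
```

With 4 bits the interval is split into 2**4 - 1 = 15 steps; more bits give a finer resolution at the cost of a longer chromosome.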

An initial population of chromosomes/individuals is generated randomly, based on the properties of the optimization problem to be solved by the GA. The fittest chromosomes/individuals are then selected as a subset of the population. The assessment of the fittest individuals can be rank-based, or some other selection procedure may be applied; such procedures include roulette wheel selection and tournament selection. In this manner, candidate members of the population are compared among themselves, and the fittest ones are chosen as the parents of the next generation. Crossover and mutation operators are applied to them in each iterative cycle to ensure variety, and the process is repeated until the optimum or a near-optimum solution is found or, alternatively, until the number of iterations exceeds a predefined value. In this way, the quality of solutions gradually improves over successive generations. The genetic operators preserve the diversity of the solutions, which allows the algorithm to investigate different areas of the search space and improves its chances of reaching the global optimum rather than being trapped in local optima.
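The two selection procedures named above can be sketched as follows. This is a minimal illustration assuming maximization with non-negative fitness values (required by the roulette wheel); the function names and the default tournament size k = 3 are our assumptions.

```python
import random


def roulette_wheel(fitnesses, rng):
    """Fitness-proportionate selection; returns the index of one parent.

    Assumes non-negative fitness values where larger is better.
    """
    total = sum(fitnesses)
    r = rng.random() * total            # spin the wheel: r lies in [0, total)
    cumulative = 0.0
    for i, f in enumerate(fitnesses):
        cumulative += f
        if r < cumulative:
            return i
    return len(fitnesses) - 1           # guard against floating-point round-off


def tournament(fitnesses, rng, k=3):
    """Picks k distinct individuals at random; returns the index of the fittest."""
    contestants = rng.sample(range(len(fitnesses)), k)
    return max(contestants, key=lambda i: fitnesses[i])


rng = random.Random(42)
picks = [roulette_wheel([1.0, 2.0, 7.0], rng) for _ in range(10_000)]
print(picks.count(2) / len(picks))     # roughly 0.7, i.e., 7 / (1 + 2 + 7)
```

Roulette wheel selection is sensitive to the scale of the fitness values, whereas tournament selection depends only on their ranking; the tournament size k controls the selection pressure.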

Figure 3 shows a simplified flowchart of a genetic algorithm. The key Darwinian steps (selection, crossover and mutation) follow each fitness evaluation and operate on the subset of the fittest members. This procedure is repeated iteratively, each iteration representing a single generation of the genetic evolutionary process.
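The generational loop of Figure 3 can be sketched in Python as below. This is a minimal, illustrative GA under our own assumptions: a toy “OneMax” fitness (the count of 1-bits), tournament selection, one-point crossover, bit-flip mutation and elitism, with arbitrary parameter values; it is not the specific implementation used in any cited work.

```python
import random


def one_max(bits):
    """Toy fitness function: the number of 1-bits (the optimum is all ones)."""
    return sum(bits)


def tournament(pop, scores, rng, k=3):
    """Return the fittest of k distinct, randomly chosen individuals."""
    contestants = rng.sample(range(len(pop)), k)
    return pop[max(contestants, key=lambda i: scores[i])]


def genetic_algorithm(fitness, n_bits=20, pop_size=30, generations=100,
                      crossover_rate=0.9, mutation_rate=None, target=None, seed=0):
    rng = random.Random(seed)
    if mutation_rate is None:
        mutation_rate = 1.0 / n_bits              # rule of thumb: about one flip per child
    # Initialization: random binary chromosomes
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    best = max(pop, key=fitness)
    for _ in range(generations):
        if target is not None and fitness(best) >= target:
            break                                 # termination: target fitness reached
        scores = [fitness(ind) for ind in pop]    # evaluation
        children = [best[:]]                      # elitism: keep the best-so-far unchanged
        while len(children) < pop_size:
            p1, p2 = tournament(pop, scores, rng), tournament(pop, scores, rng)
            if rng.random() < crossover_rate:     # one-point crossover
                cut = rng.randint(1, n_bits - 1)
                c1, c2 = p1[:cut] + p2[cut:], p2[:cut] + p1[cut:]
            else:
                c1, c2 = p1[:], p2[:]
            for child in (c1, c2):                # bit-flip mutation
                for j in range(n_bits):
                    if rng.random() < mutation_rate:
                        child[j] = 1 - child[j]
                children.append(child)
        pop = children[:pop_size]                 # the next generation
        best = max(pop, key=fitness)
    return best, fitness(best)


best, score = genetic_algorithm(one_max, target=20)
print(score, best)
```

Elitism guarantees that the best fitness never decreases from one generation to the next; without it, good solutions can be lost through crossover or mutation.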

4.1.2. Memetic Algorithms (MAs)

Similarly to GAs, memetic algorithms (MAs) [100] are optimization algorithms belonging to the evolutionary bio-inspired algorithms, but they combine the Darwinian process of natural selection and evolution with the behavior of a meme, as conceptualized by Dawkins [103]. The Darwinian part is reflected in the application of the principles of evolutionary computation, i.e., population-based evolutionary processes, while the meme represents local search operations that enhance the exploitation performance of the algorithm. In other words, memetic algorithms represent an extension/enhancement of the genetic algorithm that improves the convergence speed and the overall search quality. The local search component (meme) exploits the promising regions of the search space through the iterative improvement of individual solutions.

Typically, memetic algorithms consist of five steps:

Initializing the Population: Random generation of candidate solutions.

Evaluation: The fitness of each candidate solution is assessed according to the problem’s fitness criterion (objective function).

Evolutionary Process: Genetic operators are applied (selection, crossover and mutation) according to the standard evolutionary algorithm rules; thus, the population evolves through generations.

Local Search: In addition to the evolutionary processes, local search techniques (memes) are applied to refine or improve individual solutions. This local search often utilizes problem-specific knowledge or heuristics to locally explore the solution space more accurately.

End: The algorithm terminates when the ending criterion is met—achieving a satisfactory solution or reaching the maximum set number of iterations.

A possible flowchart of a memetic algorithm is shown in Figure 4.
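The five steps listed above can be sketched as follows. This is a minimal illustration under our own assumptions: real-valued solutions, the Rastrigin test function (minimization), truncation selection, arithmetic crossover with Gaussian mutation, and stochastic hill climbing as the meme; all parameter values are arbitrary.

```python
import math
import random


def rastrigin(x):
    """Multimodal test function; global minimum 0 at the origin."""
    return 10 * len(x) + sum(xi * xi - 10 * math.cos(2 * math.pi * xi) for xi in x)


def clip(v, lo, hi):
    return min(hi, max(lo, v))


def local_search(x, f, rng, lo, hi, steps=20, sigma=0.05):
    """The meme: stochastic hill climbing that accepts only improving moves.

    Returns a (fitness, solution) pair.
    """
    fx = f(x)
    for _ in range(steps):
        y = [clip(xi + rng.gauss(0, sigma), lo, hi) for xi in x]
        fy = f(y)
        if fy < fx:
            x, fx = y, fy
    return fx, x


def memetic(f, dim=2, pop_size=20, generations=50, lo=-5.12, hi=5.12, seed=1):
    rng = random.Random(seed)
    # 1. Initialization and 2. evaluation, stored as (fitness, solution) pairs
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    scored = [(f(x), x) for x in pop]
    history = []
    for _ in range(generations):
        scored.sort(key=lambda s: s[0])           # minimization: best first
        history.append(scored[0][0])
        parents = [x for _, x in scored[: pop_size // 2]]   # truncation selection
        offspring = []
        while len(offspring) < pop_size - 1:
            # 3. Evolutionary step: arithmetic crossover + Gaussian mutation
            p1, p2 = rng.sample(parents, 2)
            a = rng.random()
            child = [clip(a * u + (1 - a) * v + rng.gauss(0, 0.3), lo, hi)
                     for u, v in zip(p1, p2)]
            # 4. Local search (meme) refines every child
            offspring.append(local_search(child, f, rng, lo, hi))
        scored = [scored[0]] + offspring          # elitism keeps the best-so-far
    # 5. End: the generation budget is exhausted
    best_f, best_x = min(scored, key=lambda s: s[0])
    history.append(best_f)
    return best_x, best_f, history


best_x, best_f, history = memetic(rastrigin)
print(best_f, best_x)
```

In this sketch the local search pulls each child toward the bottom of its basin in the multimodal Rastrigin landscape, while crossover and mutation move the population between basins.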

4.1.3. Differential Evolution (DE)

The differential evolution (DE) algorithm [101] is probably the most frequently published and analyzed of all bio-inspired algorithms, having appeared as the main topic of more than 86,500 publications as of May 2023 (see Figure 1 in [97]). It operates on a population of candidate solutions (also denoted as vectors or agents), each of which acts in turn as a target vector. Basically, this algorithm is similar to a genetic algorithm; however, there are significant differences. The main dissimilarity is related to selection. While in a genetic algorithm the candidates are compared among themselves, usually through some selection procedure (choice of the fittest, roulette wheel or tournament selection), in DE each trial vector (a newly generated candidate solution) is compared only with its corresponding target vector.

Mutation proceeds by forming a mutant vector: two distinct individuals are randomly picked from the population, their difference is calculated, and its weighted value (scaled by a factor usually denoted F) is added to a base vector, which in the classic DE/rand/1 scheme is a third randomly chosen individual (variants using other base vectors, such as the current best one in DE/best/1, also exist). This is denoted as differential mutation. The mutant vectors are recombined with the target vectors, and the results of this operation are trial vectors. In recombination (crossover), each component of the trial vector is taken either from the mutant vector or from the target vector, according to the crossover rate (recombination rate), which is a predefined probability. The trial vectors are then compared to the target vectors according to the fitness evaluation. If a trial vector represents an improvement over the corresponding target vector, it replaces the target vector; otherwise, the target vector is retained. Thus, instead of a population-wide survival of the fittest, DE relies on a one-to-one competition between each trial vector and its target. In this way, selection is accomplished and evolution continues.

Figure 5 shows a flowchart of the DE algorithm. The iterative procedure proceeds until the set objective is reached or the maximum number of iterations is exceeded.
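A compact sketch of the loop in Figure 5 is given below. It assumes the classic DE/rand/1/bin scheme (a random base vector plus one weighted difference vector, followed by binomial crossover), minimization of a simple sphere function, and the commonly quoted settings F = 0.5 and CR = 0.9; these are illustrative choices, not prescriptions.

```python
import random


def sphere(x):
    """Simple unimodal test function; minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def differential_evolution(f, dim=5, np_=30, F=0.5, CR=0.9, generations=300,
                           lo=-5.0, hi=5.0, seed=0):
    """Minimal DE/rand/1/bin for minimization (a sketch, not a tuned solver)."""
    rng = random.Random(seed)
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(np_)]
    fit = [f(x) for x in pop]
    for _ in range(generations):
        new_pop, new_fit = pop[:], fit[:]
        for i, target in enumerate(pop):
            # Differential mutation: base r1 plus weighted difference of r2 and r3,
            # all three distinct and different from the target index i
            r1, r2, r3 = rng.sample([j for j in range(np_) if j != i], 3)
            mutant = [pop[r1][d] + F * (pop[r2][d] - pop[r3][d]) for d in range(dim)]
            # Binomial crossover; component j_rand always comes from the mutant
            j_rand = rng.randrange(dim)
            trial = [mutant[d] if (rng.random() < CR or d == j_rand) else target[d]
                     for d in range(dim)]
            trial = [min(hi, max(lo, t)) for t in trial]     # stay inside the bounds
            # One-to-one selection: the trial replaces its target if it is not worse
            f_trial = f(trial)
            if f_trial <= fit[i]:
                new_pop[i], new_fit[i] = trial, f_trial
        pop, fit = new_pop, new_fit                           # next generation
    best = min(range(np_), key=lambda i: fit[i])
    return pop[best], fit[best]


x_best, f_best = differential_evolution(sphere)
print(f_best)
```

No gradient of `f` is ever computed; the algorithm only compares function values, which is why DE also works on non-differentiable or noisy objectives.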

The DE method is extremely popular due to advantages such as simplicity, robustness and the ability to handle difficult functions. Since DE does not compute gradients, the objective function need not be differentiable, which makes the method convenient for discontinuous and time-varying problems, problems with high levels of noise and other complex optimization tasks. These properties make it suitable for a wide range of complex problems, which explains its overwhelming popularity and widespread use.

4.2. Swarm Intelligence (SI) Algorithms

Swarm intelligence algorithms are a huge group of nature-inspired metaheuristic methods dealing with complex optimization problems for which exact mathematical or traditional approaches are difficult or even impossible to implement. They are based on the social behavior of large collectives of animals (flocks of birds, schools of fish, swarms of insects, herds of large mammals) and their ways of attaining their goals. This is also the main part of our taxonomy consideration, and some of the most-used and best-known algorithms belong to it.

In the following part, some illustrative examples of swarm intelligence algorithms are briefly outlined. The criterion for inclusion was either their acceptance by the scientific and engineering community, as reflected in the number of publications investigating a particular algorithm and the number of citations, or their novelty: some quite recent algorithms are also presented, in which case the inclusion criterion was the number of citations (if a very recently proposed method has gathered a relatively large number of citations in a short time, it probably merits inclusion). The authors of this text are aware that such an approach may have inherent issues with subjectivity and the choice of criteria. In addition, the number of existing swarm intelligence methods is overwhelming (and growing daily), and it is difficult to judge whether some omitted methods should have been preferred over those chosen. Thus, some important methods may have been skipped, while some less important ones may have been included. We strove to keep such situations to a minimum.

Table 3 shows some selected swarm intelligence algorithms; its columns follow the same layout as those of Table 2. In the subsections following Table 3, some of the algorithms quoted in it are described in more detail.

4.2.1. Particle Swarm Optimization (PSO)

PSO is the most popular swarm intelligence (SI) algorithm, with about 66,000 publications as of May 2023 [97]. Like the SI approach in general, PSO is inspired by the collective behavior of large groups of social animals (insects, fish, birds, mammals). It is a population-based metaheuristic algorithm, especially convenient for continuous search spaces.

Each social animal in the swarm is regarded as a single “particle”, or point in the search space, defined by its position and velocity, which approaches the ideal solution according to an objective function (for a social animal, that solution can be the food location, the location of a mating partner or another goal). Every particle typically adjusts its velocity v toward its target by combining its own experience (its best position so far), the experience of its neighbors (the particles in its nearby surroundings) and the location data of all the particles searching for the solution, weighted by a predefined inertia w. Each particle’s motion is defined by four variables:

Its current position in the search space;

Its best position in the past—past best (Pbest);

The best position in its direct proximity—local best (Lbest);

The ideal position for all particles combined—global best (Gbest).

Using these parameters, every particle updates its position according to

$$x_i(t+1) = x_i(t) + v_i(t+1),$$

where i denotes the index of the particle in the swarm, $x_i$ its position and t the iteration number. The velocity of the particle is updated according to

$$v_i(t+1) = w\,v_i(t) + c_1\,\mathrm{rand}()\,\big(\mathrm{Pbest}_i - x_i(t)\big) + c_2\,\mathrm{rand}()\,\big(\mathrm{Gbest} - x_i(t)\big),$$

where $c_1$ and $c_2$ are acceleration coefficients, w is the predefined inertia and rand() is a random number function that generates a uniformly distributed number in the range [0, 1]. $c_1$ is connected to the best solution of each particle (Pbest), while $c_2$ is associated with the best solution of all the localities (Gbest). The flowchart for the basic PSO algorithm is shown in Figure 6.

In the swarm initialization step, the swarm population size and the dimensionality of the search space are defined, and random positions and velocities are assigned to each particle. During the evaluation, the algorithm determines the fitness of each particle, i.e., the value of the objective function at its current position. The update of the personal best (cognitive component) starts from the best position attained so far by that particular particle. If its current position has a better fitness than the personal best, the personal best is replaced by the newly attained position. The global best (social component) is updated from the information shared among the particles. It is set to the best position attained by any particle in the swarm (or, in local variants, in the particle's neighborhood). The velocity is then updated from the inertia component w, the personal best of the particle and the global best, which controls the trade-off between exploitation and exploration. The procedure ends when the targeted fitness value is attained or, typically, when a maximum number of iterations is reached.
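The loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the implementation behind Figure 6. It assumes minimization of a toy sphere objective, a global-best topology, zero initial velocities and the illustrative values w = 0.7 and c1 = c2 = 1.5. The names `pso` and `sphere` are hypothetical.

```python
import random


def sphere(x):
    """Toy objective: sum of squares, minimum 0 at the origin."""
    return sum(xi * xi for xi in x)


def pso(f, dim, bounds, n_particles=30, iters=200, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimal global-best PSO that minimizes f over the box [lo, hi]^dim."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Swarm initialization: random positions, zero velocities
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    pbest = [xi[:] for xi in x]
    pbest_val = [f(xi) for xi in x]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                # Velocity: inertia + cognitive (Pbest) term + social (Gbest) term
                v[i][d] = (w * v[i][d]
                           + c1 * rng.random() * (pbest[i][d] - x[i][d])
                           + c2 * rng.random() * (gbest[d] - x[i][d]))
                # Position update, clipped to the search box
                x[i][d] = min(max(x[i][d] + v[i][d], lo), hi)
            val = f(x[i])
            if val < pbest_val[i]:          # personal best update
                pbest[i], pbest_val[i] = x[i][:], val
                if val < gbest_val:         # global best update
                    gbest, gbest_val = x[i][:], val
    return gbest, gbest_val


# Example: 2-D sphere function on [-5, 5]^2
best, best_val = pso(sphere, dim=2, bounds=(-5.0, 5.0))
```

Positions that leave the search box are simply clipped to its bounds, which is one of several common boundary-handling choices.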

However, PSO can become trapped in a local optimum because of insufficient exploration, especially when the objective function has multiple local optima. Over the years, researchers have put forth numerous PSO modifications and upgrades to solve this problem. Among them are PSO with time-varying acceleration coefficients [129], in which the rates of social and cognitive learning vary over time; human behavior-based PSO [130], which imitates human behavior by also incorporating negative human traits through the global worst position (Gworst); and PSO with aging leaders and challengers (ALCPSO) [131], where a leader is initially assigned, and as the leader ages, a new particle challenges its dominance. These algorithms work well on unimodal problems, but their performance starts to deteriorate on more intricate, multimodal functions.

4.2.2. Ant Colony Optimization (ACO)

ACO is the second most popular swarm intelligence algorithm, with more than 16,000 publications as of May 2023 [97]. This bio-inspired metaheuristic algorithm is based on the foraging behavior of ants in ant colonies.

It is known that ants collectively find short paths between their nest and food sources. Worker ants leave pheromone trails behind them, and the other workers preferentially follow the stronger trails. Because shorter paths are traversed more quickly, their trails are reinforced more often and become dominant.

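Each ant k in the ant colony mimicked by ACO builds a route step by step on a graph structure, depositing pheromone on the edges of the graph as it does so. The probability that ant k, located at vertex i, chooses vertex j as its next step is calculated as

$$p_{ij}^{k} = \frac{\tau_{ij}^{\alpha}\,\eta_{ij}^{\beta}}{\sum_{l \in N_i^k} \tau_{il}^{\alpha}\,\eta_{il}^{\beta}}, \qquad j \in N_i^k. \tag{3}$$

Here, $N_i^k$ is the set of neighbors of vertex i that the kth ant may still visit, and $\tau_{ij}$ represents the amount of pheromone on the edge (i, j). α and β are weight factors that control the influence of the pheromone trail and of the visibility, respectively, and $\eta_{ij}$ is the visibility value (typically the inverse of the edge length). In each iteration, the pheromone partially evaporates on all edges, while the edges belonging to routes with smaller objective function values receive larger deposits, so the trail along the best route is reinforced:

$$\tau_{ij} \leftarrow (1-\rho)\,\tau_{ij} + \sum_{k=1}^{m} \Delta\tau_{ij}^{k}, \tag{4}$$

where m is the number of ants, ρ is the pheromone evaporation rate and $\Delta\tau_{ij}^{k}$ is the quantity of pheromone laid on the edge (i, j) by ant k.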

The pseudocode of the ACO procedure is shown in Figure 7. The flowchart of the ACO is presented in Figure 8.

As shown in the flowchart, a colony of ants is generated during the initialization step, and the initial pheromone levels are defined. Each individual ant builds a potential solution to the optimization problem. During the movement step, each ant moves from one position (node) to another along the graph edges. It chooses the target node according to the pheromone level on the connecting edge and the heuristically determined attractiveness of the node, and in this way it builds its own solution. After every ant has completed its solution, a pheromone update strengthens the trails, the amount of pheromone being proportional to the fitness of the individual solution. The global update of the pheromone levels is performed according to the best solution found until that moment. The procedure is iteratively repeated until the desired solution is attained or the maximum number of iterations is exceeded.
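A minimal Ant System sketch for a small travelling salesman problem makes the route-selection probability and the pheromone update concrete. It assumes visibility η = 1/d, an initial pheromone level of 1 on every edge, symmetric deposits of Q/L_k (L_k being the tour length of ant k) and illustrative parameter values. `aco_tsp` and its parameters are hypothetical names.

```python
import math
import random


def aco_tsp(coords, n_ants=10, iters=50, alpha=1.0, beta=2.0, rho=0.5, q=1.0, seed=0):
    """Minimal Ant System for a symmetric TSP; returns (best_tour, best_length)."""
    rng = random.Random(seed)
    n = len(coords)
    dist = [[math.dist(a, b) for b in coords] for a in coords]
    # Visibility eta_ij = 1 / d_ij (unused on the diagonal)
    eta = [[1.0 / dist[i][j] if i != j else 0.0 for j in range(n)] for i in range(n)]
    tau = [[1.0] * n for _ in range(n)]           # initial pheromone on every edge
    best_tour, best_len = None, math.inf
    for _ in range(iters):
        tours = []
        for _ in range(n_ants):
            tour = [rng.randrange(n)]
            while len(tour) < n:
                i = tour[-1]
                allowed = [j for j in range(n) if j not in tour]   # unvisited vertices
                # Random-proportional rule: weight tau^alpha * eta^beta
                weights = [tau[i][j] ** alpha * eta[i][j] ** beta for j in allowed]
                tour.append(rng.choices(allowed, weights=weights)[0])
            length = sum(dist[tour[k]][tour[(k + 1) % n]] for k in range(n))
            tours.append((tour, length))
            if length < best_len:
                best_tour, best_len = tour, length
        # Pheromone update: evaporation on all edges, then deposits of q / L_k
        for i in range(n):
            for j in range(n):
                tau[i][j] *= 1.0 - rho
        for tour, length in tours:
            for k in range(n):
                i, j = tour[k], tour[(k + 1) % n]
                tau[i][j] += q / length
                tau[j][i] += q / length
    return best_tour, best_len


# Example: six cities on the vertices of a regular hexagon with unit side length
cities = [(math.cos(2 * math.pi * k / 6), math.sin(2 * math.pi * k / 6)) for k in range(6)]
tour, length = aco_tsp(cities)
```

Because the six cities are in convex position, the optimal tour follows the hexagon perimeter and has length 6. This gives an easy check that the colony converged.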

4.2.3. Whale Optimization Algorithm (WOA)

This swarm intelligence algorithm is based on the social behavior of humpback whales. More concretely, it mimics their peculiar method of hunting fish schools for food. These highly intelligent creatures developed their own hunting strategy: after their leader (alpha) encounters the target fish school, it starts circling around it, at the same time blowing bubbles that create a kind of net around the fish, preventing them from escaping it. The circling is a spiral and closes in on the prey. Another whale, supporting the leader, emits a call for the others to make a formation behind the leader, assume their attack positions and prepare to lunge at the prey. This rather complex maneuver is called bubble-net hunting.

A simplified procedure mimicking the bubble-net foraging attacks used by humpback whales when hunting their prey is used in the WOA. The algorithm was proposed by Mirjalili and Lewis in their highly cited paper from 2016 [105]. This algorithm is population-based. It consists of three stages: exploration, exploitation and convergence (spiraling, i.e., local search).

The algorithm proceeds as follows. At the beginning, a population of “whales” is initialized in a random manner. Each individual whale represents a potential solution to the optimization problem. The whale is regarded as a particle; i.e., it is described solely by its position in the search space. In the exploration stage, the whales modify their position according to their current location and the best solution found until that stage. The aim of exploration is to diversify the search while the whales approach the promising regions of the search space. Their position change is described by the following equations [105]:

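During encircling of the prey, the distance between the current whale and the best solution is

$$\vec{D} = \left|\vec{C}\cdot\vec{X}^{*}(t) - \vec{X}(t)\right|. \tag{5}$$

Updating the position of the current whale towards the best solution by encircling is accomplished as

$$\vec{X}(t+1) = \vec{X}^{*}(t) - \vec{A}\cdot\vec{D}, \tag{6}$$

where $\vec{D}$ is the distance vector and t is the current iteration number. $\vec{A} = 2\vec{a}\cdot\vec{r} - \vec{a}$ and $\vec{C} = 2\vec{r}$ are coefficient vectors, where $\vec{a}$ decreases linearly from 2 to 0 over the iterations and $\vec{r}$ is a random vector in [0, 1]. The point (·) denotes element-by-element multiplication, $\vec{X}$ represents the position vector and $\vec{X}^{*}$ is the position vector of the best solution obtained so far.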

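For spiral position updating, the following is valid:

$$\vec{X}(t+1) = \vec{D}'\cdot e^{bl}\cdot\cos(2\pi l) + \vec{X}^{*}(t), \tag{7}$$

where $\vec{D}' = \left|\vec{X}^{*}(t) - \vec{X}(t)\right|$ is the distance between the ith whale and the targeted prey, and $\vec{X}^{*}(t)$ represents the best solution encountered until that moment. b is a constant parameter that defines the shape of the logarithmic spiral, and l is a random number in the [−1, 1] interval.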

Let p denote a random number in [0, 1] that determines whether a whale encircles or spirals, so that each mechanism is chosen with a probability of 50%. Equation (6) is then used for, e.g., p > 0.5, and Equation (7) for p < 0.5.

As the algorithm progresses, the whales converge towards the best solution found, ideally the global optimum. Convergence is achieved by gradually narrowing the search region around the best solution according to the presented procedure. For the whale optimization procedure in its basic form, a pseudocode may be written as shown in Figure 9.

A possible flowchart for the whale optimization algorithm is shown in Figure 10. Numerous different versions of this algorithm exist, some of them generally improved, some of them tuned for a particular application. Hybrid and multi-objective versions are also encountered.
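A compact sketch of the basic procedure follows, under stated assumptions. It assumes minimization and uses one scalar coefficient pair per whale and iteration, A = 2a·r − a and C = 2r, with a decreasing linearly from 2 to 0 (a common simplification of the vector coefficients). Exploration follows the original paper's rule: a randomly chosen whale replaces the best one as the reference when |A| ≥ 1. The choice between Equations (6) and (7) uses the p > 0.5 convention above, and positions are clipped to the search box. The name `woa` and the defaults are illustrative.

```python
import math
import random


def woa(f, dim, bounds, n_whales=30, iters=300, b=1.0, seed=0):
    """Minimal whale optimization sketch that minimizes f over the box [lo, hi]^dim."""
    rng = random.Random(seed)
    lo, hi = bounds
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_whales)]
    best = min(X, key=f)[:]
    best_val = f(best)
    for t in range(iters):
        a = 2.0 - 2.0 * t / iters                  # decreases linearly from 2 to 0
        for i in range(n_whales):
            A = 2.0 * a * rng.random() - a         # scalar A and C per whale (simplification)
            C = 2.0 * rng.random()
            p = rng.random()
            if p > 0.5:
                # Encircling, Eq. (6); with |A| >= 1 a random whale is the reference (exploration)
                ref = best if abs(A) < 1.0 else X[rng.randrange(n_whales)]
                new = [ref[d] - A * abs(C * ref[d] - X[i][d]) for d in range(dim)]
            else:
                # Spiral update around the best solution, Eq. (7)
                ell = rng.uniform(-1.0, 1.0)
                new = [abs(best[d] - X[i][d]) * math.exp(b * ell) * math.cos(2.0 * math.pi * ell)
                       + best[d] for d in range(dim)]
            X[i] = [min(max(v, lo), hi) for v in new]
            val = f(X[i])
            if val < best_val:
                best, best_val = X[i][:], val
    return best, best_val


# Example: 5-D sphere function on [-5, 5]^5
best, best_val = woa(lambda x: sum(v * v for v in x), dim=5, bounds=(-5.0, 5.0))
```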

4.2.4. Grey Wolf Optimizer (GWO)

The GWO algorithm is a swarm-intelligence-based metaheuristic procedure inspired by the social behavior and hunting strategies of wolves in packs. It is assumed that grey wolves are socially organized in a pyramidal hierarchy, depending on their roles in the pack. This may not be so in reality in the wild, and some prestigious sources have even described it as a myth [132].

Realistic or not, the often-described scheme is the source of the popular and very useful grey wolf optimizer algorithm, proposed in 2014 by Mirjalili, Mirjalili and Lewis. The metaheuristic optimizer can be presented as follows. At the top of the pyramid is Alpha, the leader of the pack and the decision maker. Beta is next in order; he helps Alpha in making decisions, disciplines the pack and is the candidate for the next Alpha. Delta is an average wolf, a “soldier” following Alpha and Beta in the hunt. Omega is the weakest in the pack and the lowest in ranking.

According to the ranks of the grey wolves that execute the hunting process, the GWO algorithm is also organized into four groups. These hunting categories are also called alpha, beta, delta and omega. Alpha denotes the best solution (the most successful search agent) found so far, beta and delta the second- and third-best solutions, and omega the remaining candidate solutions.

Similar to the previously described SI-based algorithms, the GWO search begins by establishing a random population of grey wolves. The four wolf groups are then formed, the positions of individuals are determined, and the distances to the intended prey are calculated. During the search process, each wolf is a particle that symbolizes a potential solution, and its position is updated. To balance exploration and exploitation and to prevent stagnation in local optima, GWO additionally uses operations controlled by two coefficient vectors. GWO needs only one position vector per wolf; hence, it uses less memory than the PSO algorithm [21]. Additionally, while PSO preserves the best solution so far obtained by all particles as well as the single best solution for every particle, GWO only retains the three best solutions.

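The standard GWO algorithm is initialized by setting the number of pack members n, the parameter a (which gradually decreases from 2 to 0) and the maximum number of iterations tmax. The search agents are $\vec{X}_i$, where i = 1, 2, …, n, for a fitness function $f(\vec{X}_i)$. The three best solutions are, according to the pack hierarchy, denoted as $\vec{X}_\alpha$, $\vec{X}_\beta$ and $\vec{X}_\delta$. The distances to the target are described as

$$\vec{D}_\alpha = \left|\vec{C}_1\cdot\vec{X}_\alpha - \vec{X}\right|, \quad \vec{D}_\beta = \left|\vec{C}_2\cdot\vec{X}_\beta - \vec{X}\right|, \quad \vec{D}_\delta = \left|\vec{C}_3\cdot\vec{X}_\delta - \vec{X}\right|, \tag{8}$$

and the search agents (solutions) guided by the three leaders are

$$\vec{X}_1 = \vec{X}_\alpha - \vec{A}_1\cdot\vec{D}_\alpha, \quad \vec{X}_2 = \vec{X}_\beta - \vec{A}_2\cdot\vec{D}_\beta, \quad \vec{X}_3 = \vec{X}_\delta - \vec{A}_3\cdot\vec{D}_\delta. \tag{9}$$

The next iteration step is calculated as

$$\vec{X}(t+1) = \frac{\vec{X}_1 + \vec{X}_2 + \vec{X}_3}{3}, \tag{10}$$

where the coefficient vectors $\vec{A}_1$, $\vec{A}_2$, $\vec{A}_3$ and $\vec{C}_1$, $\vec{C}_2$, $\vec{C}_3$ are computed as

$$\vec{A} = 2\vec{a}\cdot\vec{r}_1 - \vec{a}, \qquad \vec{C} = 2\vec{r}_2, \tag{11}$$

while $\vec{r}_1$ and $\vec{r}_2$ are random vectors with components in the range from 0 to 1.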

The pseudocode of the grey wolf optimizer is given in Figure 11. The flowchart of the GWO is shown in Figure 12.
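A minimal sketch of the standard GWO update follows. It assumes minimization and per-dimension random coefficients A = 2a·r1 − a and C = 2·r2, drawn separately for each of the three leaders, with a decreasing linearly from 2 to 0. The three best solutions found so far are retained elitistically. `gwo` and its parameters are hypothetical names, and the values are illustrative.

```python
import random


def gwo(f, dim, bounds, n_wolves=20, iters=200, seed=0):
    """Minimal grey wolf optimizer sketch that minimizes f over the box [lo, hi]^dim."""
    rng = random.Random(seed)
    lo, hi = bounds
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_wolves)]
    leaders = []                                   # alpha, beta, delta (best three so far)
    for t in range(iters):
        # Rank the current wolves together with the previous leaders (elitism)
        leaders = [w[:] for w in sorted(X + leaders, key=f)[:3]]
        a = 2.0 - 2.0 * t / iters                  # decreases linearly from 2 to 0
        for i in range(n_wolves):
            new = []
            for d in range(dim):
                guided = []
                for leader in leaders:
                    A = 2.0 * a * rng.random() - a         # A = 2a*r1 - a
                    C = 2.0 * rng.random()                 # C = 2*r2
                    D = abs(C * leader[d] - X[i][d])       # distance to this leader
                    guided.append(leader[d] - A * D)       # component of X1, X2 or X3
                new.append(min(max(sum(guided) / 3.0, lo), hi))  # average of X1..X3
            X[i] = new
    alpha = min(X + leaders, key=f)
    return alpha, f(alpha)


# Example: 5-D sphere function on [-5, 5]^5
best, best_val = gwo(lambda x: sum(v * v for v in x), dim=5, bounds=(-5.0, 5.0))
```

Only positions are stored, one vector per wolf plus copies of the three leaders. This mirrors the memory argument made above in comparison with PSO.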

4.2.5. Firefly Optimization Algorithm (FOA)

Fireflies emit bioluminescent light at night to communicate among themselves and to attract mates. The attraction is based on the light intensity of an individual firefly (the stronger it is, the stronger the allure) and on its position (the apparent brightness of nearer fireflies is stronger).

A metaphor-based algorithm inspired by the attraction between fireflies was proposed by Xin-She Yang in 2008 [110]. The main expression driving the movement of the fireflies is

$$x_i^{t+1} = x_i^{t} + \beta_0\,e^{-\gamma r_{ij}^{2}}\left(x_j^{t} - x_i^{t}\right) + \alpha\,\epsilon_i^{t}, \tag{12}$$

where $x_i^{t+1}$ is the updated position of an individual firefly i (iteration step t + 1), $x_i^{t}$ is its current position (iteration step t) and $x_j^{t}$ is the position of a brighter firefly j. $r_{ij}$ is the distance between the two fireflies, $\beta_0$ is the attractiveness between a pair of fireflies at zero distance and γ denotes the light absorption coefficient. The factor $e^{-\gamma r_{ij}^{2}}$ multiplying $\beta_0$ represents the decrease in light intensity due to distance and the light absorption of the atmosphere. In the last term on the right, α denotes a scaling factor, and $\epsilon_i^{t}$ is a random vector defining the perturbation.

The algorithm in its basic form works as follows. First, a population of fireflies, each representing a potential solution, is randomly initialized. The fireflies are treated as particles, meaning that the position of each in the search space defines a candidate solution. Their movement is based on the attraction to other fireflies, which is larger if the bioluminescent glow of a neighboring firefly is stronger or the neighboring firefly is nearer. The brightness is defined by the objective function evaluated at the individual’s position in the search space. The firefly positions are iteratively updated according to Equation (12), which accounts for both the distance and the intrinsic intensity of the glow. In this way, the promising regions of the search space are exploited.

A pseudocode for the basic firefly optimization algorithm is given in Figure 13. A flowchart of the algorithm is presented in Figure 14.
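A minimal sketch of the basic firefly loop built on the movement rule (12) follows. It assumes minimization, so a lower objective value means a brighter firefly. The perturbation ε is drawn uniformly and scaled by the width of the search box, the step size α decays geometrically each generation, and positions are clipped to the box. The brightest firefly is simply left in place here, a simplification. The function name and parameter values (including the small γ suited to a box of width 10) are illustrative.

```python
import math
import random


def firefly(f, dim, bounds, n=25, iters=200, beta0=1.0, gamma=0.01,
            alpha=0.2, decay=0.97, seed=0):
    """Minimal firefly algorithm sketch that minimizes f over the box [lo, hi]^dim."""
    rng = random.Random(seed)
    lo, hi = bounds
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    light = [f(x) for x in X]                # lower objective = brighter firefly
    k = min(range(n), key=lambda j: light[j])
    best, best_val = X[k][:], light[k]
    for _ in range(iters):
        for i in range(n):
            for j in range(n):
                if light[j] < light[i]:      # firefly i is attracted to the brighter j
                    r2 = sum((X[i][d] - X[j][d]) ** 2 for d in range(dim))
                    beta = beta0 * math.exp(-gamma * r2)   # attractiveness at distance r_ij
                    X[i] = [min(max(X[i][d] + beta * (X[j][d] - X[i][d])
                                    + alpha * (rng.random() - 0.5) * (hi - lo), lo), hi)
                            for d in range(dim)]
                    light[i] = f(X[i])
                    if light[i] < best_val:
                        best, best_val = X[i][:], light[i]
        alpha *= decay                       # gradually reduce the random step
    return best, best_val


# Example: 2-D sphere function on [-5, 5]^2
best, best_val = firefly(lambda x: sum(v * v for v in x), dim=2, bounds=(-5.0, 5.0))
```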

4.2.6. Bat Optimization Algorithm (BOA)

The BOA is a global metaheuristic algorithm that simulates the echolocation of microbats belonging to the zoological suborder Microchiroptera. To locate their prey, microbats emit sound pulses typically in the range from 14 kHz to 200 kHz, i.e., mostly above the human hearing range, constantly varying the pulse frequency, loudness and pulse rate. The echo reflected from their prey enables them to locate, approach and catch it. The bat optimization algorithm is based on that behavior. It was proposed by Xin-She Yang in 2010 [133].

Despite its simple design, the BOA has proven itself to be effective. However, if its parameters are not adjusted appropriately, the bat optimization algorithm can become unbalanced between exploration and exploitation and fail to find the true global solution. As a result, numerous studies have developed a variety of hybridized and modified bat algorithms to boost its efficiency and to find global solutions to optimization problems [134]. Other varieties of BOA have been proposed as enhancements and adaptations to many practical situations.

The optimization proceeds in the following manner. At iteration t, each individual bat is allotted a velocity $v_i^t$ and a location $x_i^t$ in a multidimensional search space. Here, i denotes the index of an individual microbat. Among all the bats, there exists a current best solution, xbest. Equations (13)–(15) update the frequencies, velocities and positions:

$$f_i = f_{min} + \left(f_{max} - f_{min}\right)\beta, \tag{13}$$

$$v_i^{t} = v_i^{t-1} + \left(x_i^{t-1} - x_{best}\right) f_i, \tag{14}$$

$$x_i^{t} = x_i^{t-1} + v_i^{t}, \tag{15}$$

where β ∈ [0, 1] is a random vector drawn from a uniform distribution. Each bat is thus given a frequency $f_i$ that is randomly picked from the range [fmin, fmax] in every iteration. Due to this, the bat algorithm can be viewed as a frequency-tuning algorithm that offers a balanced combination of exploitation and exploration [135].

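The loudness and pulse emission rate essentially provide a mechanism for automatic control and auto-zooming into the region with promising solutions. BOA uses a simple monotonic form for both the loudness A and the pulse emission rate r, although in reality these may have quite complex forms. They are defined as

$$A_i^{t+1} = \alpha A_i^{t}, \tag{16}$$

$$r_i^{t+1} = r_i^{0}\left[1 - e^{-\gamma t}\right], \tag{17}$$

where 0 < α < 1 and γ > 0 are constants and $r_i^0$ is the initial pulse emission rate of the ith bat. The loudness thus decreases and the pulse emission rate increases each time a bat finds an improved solution, i.e., as it approaches its prey.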

The pseudocode of the BOA procedure is shown in Figure 15. It clearly shows the iterative nature of the optimization process. Figure 16 shows the flowchart of the BOA.
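A minimal sketch of the loop follows. It assumes minimization and uses Equations (13)–(15) for the global moves. When a uniform random number exceeds the pulse rate, a local random walk around xbest, scaled by the mean loudness, replaces the global move. Whenever a bat accepts an improved solution, its loudness is multiplied by α and its pulse rate is set to r0(1 − e^(−γt)). The local-walk scale (1% of the box width), the initial loudness of 1 and pulse rate of 0.5, and the other values are illustrative assumptions. `bat_algorithm` is a hypothetical name.

```python
import math
import random


def bat_algorithm(f, dim, bounds, n=20, iters=300, fmin=0.0, fmax=2.0,
                  alpha=0.9, gamma=0.9, r0=0.5, seed=0):
    """Minimal bat algorithm sketch that minimizes f over the box [lo, hi]^dim."""
    rng = random.Random(seed)
    lo, hi = bounds

    def clip(value):
        return min(max(value, lo), hi)

    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n)]
    v = [[0.0] * dim for _ in range(n)]
    fit = [f(xi) for xi in x]
    loud = [1.0] * n                              # loudness A_i
    rate = [r0] * n                               # pulse emission rate r_i
    k = min(range(n), key=lambda j: fit[j])
    best, best_val = x[k][:], fit[k]
    for t in range(1, iters + 1):
        mean_loud = sum(loud) / n
        for i in range(n):
            freq = fmin + (fmax - fmin) * rng.random()                         # Eq. (13)
            v[i] = [v[i][d] + (x[i][d] - best[d]) * freq for d in range(dim)]  # Eq. (14)
            cand = [clip(x[i][d] + v[i][d]) for d in range(dim)]               # Eq. (15)
            if rng.random() > rate[i]:
                # Local random walk around the current best, scaled by the mean loudness
                cand = [clip(best[d] + 0.01 * (hi - lo) * mean_loud * rng.uniform(-1.0, 1.0))
                        for d in range(dim)]
            f_cand = f(cand)
            if f_cand <= fit[i] and rng.random() < loud[i]:
                x[i], fit[i] = cand, f_cand
                loud[i] *= alpha                                   # loudness decreases
                rate[i] = r0 * (1.0 - math.exp(-gamma * t))        # pulse rate increases
            if f_cand <= best_val:
                best, best_val = cand[:], f_cand
    return best, best_val


# Example: 2-D sphere function on [-5, 5]^2
best, best_val = bat_algorithm(lambda x: sum(v * v for v in x), dim=2, bounds=(-5.0, 5.0))
```

With the velocity rule taken literally, global moves often overshoot and end up clipped at the box bounds. In this sketch, the refinement therefore comes mainly from the loudness-controlled local walks, which illustrates the sensitivity to parameter tuning mentioned above.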

4.2.7. Orca Predation Algorithm (OPA)

The orca predation algorithm (OPA) is one of the more recent swarm intelligence algorithms, having been proposed in 2022 [123]. It should not be confused with other similarly named metaheuristics, including the killer whale algorithm [136], the ORCA optimization algorithm (OOA) [137], the orcas algorithm (OA) [138] and the artificial orcas algorithm (AOA) [139]. All of these have a lower citation count per year, and some have no citations at all. Nor should it be confused with programming systems totally unrelated to either optimization algorithms or biomimetics. The OPA mimics the hunting behavior of killer whales (Orcinus orca), creatures whose intelligence is comparable to that of humans. When they hunt their prey, they do so in packs, using echolocation to find it and communicating among themselves with sound signals to exchange information and coordinate their attacks. Instead of being based on a random feeding frenzy, their attacks are highly coordinated and planned. This makes them formidable predators; indeed, orcas are apex predators of the ocean, preying even on sharks. The ruthless efficiency of their hunting skills motivated the creation of the OPA.

The algorithm divides the procedure into three mathematical sub-models: (1) searching for prey, (2) driving and encircling it and (3) attacking it. To balance the exploration and exploitation stages, different weight coefficients are assigned to different stages of prey driving and encircling, and the algorithm parameters are adjusted to achieve that goal. More concretely, the algorithm determines the positions of the best-performing killer whales, the average position of the pack and the positions of randomly chosen individuals. In this way, the optimal solutions are approached during the attack stage, while the diversity of individual killer whales is fully retained.

Different stages of the OPA can be described by the steps corresponding to the real-world hunt of an orca pack. The first step is the establishment of the pack itself. It is considered to be a population of N particles (potential solutions) in a D-dimensional search space, so the orca pack population can be described as $X = [x_1, x_2, \ldots, x_N]^T$. Here, $x_i$ (i = 1, …, N) denotes the position of the ith individual orca in the pack. The second step is searching for the prey, typically a school of fish. After one of the orcas has spotted a school, the chasing phase begins. The orca pack disperses and starts the first stage of the chase, the driving, in which the whole school is driven by the orcas towards the water’s surface. The driving can proceed according to one of two different procedures, depending on the size of the orca pack, which may be small or large. To describe this situation, a probability q from the interval [0, 1] is used. The algorithm generates a random number rand. If rand > q (a large pack), the first method of driving is used, and if rand ≤ q (a small pack), the second method is used. The two methods are described by the equations

where t is the number of the current iteration; Vchase is the chasing speed of orcas; a, b and d are random coefficients from the [0, 1] range, and e is a random coefficient from the range [0, 2]; the numbers 1 and 2 in the subscripts denote the first (18) or the second (19) driving strategy; x is the position of the ith orca particle; the subscript “best” denotes the best solution for x; i is the index of the orca under consideration; and M is the average location of the orca pack (solution population), defined as

$$M = \frac{1}{N}\sum_{i=1}^{N} x_i^{t}.$$

The positions of orca particles during the driving procedure will be determined by

After the driving has ended, the encircling stage begins. In this stage, the orcas force the fish from the school into a roughly spherical and tightly packed formation. Three orcas are randomly selected, and the other orcas follow them during encircling; the position of the ith orca is determined according to their positions as

The orca positions during encirclement change according to

where f is the objective function.

In the final, attacking stage, the algorithm chooses the four best-positioned orcas to attack. Their positions and speeds are calculated as

where V denotes speed; the numbers 1 to 4 denote the orcas in the best positions; d1, d2 and d3 are orcas randomly chosen from the pack of N; and the subscript attack denotes that the value is valid for the attacking stage. The parameter g1 is a randomly generated number in the range [0, 2], while g2 is a randomly generated number in the range [−2.5, 2.5].

The flowchart of the orca predation algorithm is shown in Figure 17. It is based on the diagram from [123], but with some modifications. The parameter xlow represents the lower boundary of the problem. Although complicated at first glance, the algorithm is rather simple in the mathematical sense, so its calculation speed is comparatively high [123].

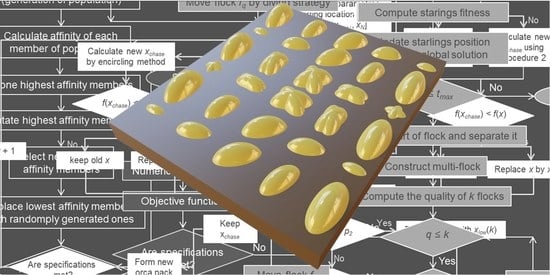

4.2.8. Starling Murmuration Optimizer (SMO)

Starling murmuration is a magnificent display of the collective behavior of starlings (family Sturnidae), in which huge flocks of countless birds swoop and swirl in intricately synchronized, shape-shifting living clouds, creating amazing visual spectacles [140] (see Figure 18). The motive for using that behavior in optimization is that not a single bird among the countless thousands flying in coordination ever collides with any other. It is no wonder that among the first practical applications of the SMO algorithm was the flight coordination of massive swarms of drones [141].

The starling murmuration optimizer (SMO) is a metaheuristic algorithm introduced in 2022 by Zamani et al. [124]. It is a population-based algorithm utilizing a dynamic multi-flock construction. It introduces three new search strategies: separating, diving and whirling.

At the initial step of the algorithm, individual starlings are stochastically distributed. The initial position of the ith starling in the group of N birds is described by

$$s_{i,d} = s_d^{low} + \mathrm{rand}(0,1)\cdot\left(s_d^{up} - s_d^{low}\right), \qquad i = 1, 2, \ldots, N, \quad d = 1, 2, \ldots, D,$$

where N is the total number of starlings, D is the number of dimensions of the search space and $s_{i,d}$ is the dth dimension of the starling $s_i$. $s_d^{up}$ is the upper bound of the search space, and $s_d^{low}$ is the lower bound of the search space. rand(0, 1) is a random function with a value in the interval between 0 and 1.
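The initialization rule, s[i][d] = low_d + rand(0, 1) · (up_d − low_d), translates directly into code. This sketch covers only the uniform random initialization, with per-dimension bounds passed as lists. The separating, diving and whirling operators are not reproduced, and `init_starlings` is a hypothetical name.

```python
import random


def init_starlings(n, lower, upper, seed=0):
    """Uniform random initialization: s[i][d] = low_d + rand(0, 1) * (up_d - low_d)."""
    rng = random.Random(seed)
    return [[lo + rng.random() * (up - lo) for lo, up in zip(lower, upper)]
            for _ in range(n)]


# Example: 30 starlings in a 3-D box with different bounds per dimension
low = [-5.0, 0.0, 10.0]
up = [5.0, 1.0, 20.0]
flock = init_starlings(30, low, up)
```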

After initialization, some of the starlings separate from the flock to form a new flock Psep that will explore the search space according to

where t is the number of the current iteration and tmax is the maximum number of allowed iterations. The search strategy is defined by

where XG(t) is the global position obtained during the iterative step t, Xr’(t) is the position selected from a proportion of the fittest starlings and the separated flock, and Xr(t) is randomly selected from the population. The separation operator is a new operator based on the standard quantum harmonic oscillator, in which y represents a random number drawn from the Gaussian distribution [124].

The starlings that remain after the separation dynamically construct the multi-flock with members f1, f2, …, fk. The quality Qq(t) of the qth flock is calculated by

to select either the diving (exploration) or the whirling (exploitation) search strategy. The diving strategy explores the search space using a new quantum random dive operator, and the whirling strategy exploits the neighborhood of promising regions using a new cohesion force operator [124]. Here, sfij(t) is the fitness value of the ith starling from the jth flock fj, and k is the number of flocks in a murmuration M. The average quality of all flocks is denoted as μq.

Figure 19 shows the flowchart of the starling murmuration algorithm. Mathematically, the starling murmuration algorithm is rather complex because of the use of the new quantum operators.

4.3. Metaheuristics Mimicking Human or Zoological Physiological Functions

Physiological functions of humans or certain mammals have also served as bio-inspiration for some metaheuristic optimization methods.

Table 4 shows just three such algorithms, and one of the most important and most often used ones among them, the artificial immune system (AIS), is presented in more detail in the subdivision that follows.

Artificial Immune Systems (AISs)

Artificial immune systems (AISs) are a class of algorithms mimicking the function of the human and, more generally, the vertebrate immune system. They are among the most popular optimization algorithms, with about 22,000 papers about AISs published as of May 2023 [97]. Depending on how they are used, they can be classified both as metaphor-based metaheuristic algorithms [145,146] and as machine learning techniques [147]. They belong to metaheuristic algorithms because they perform optimization tasks, explore the search space and perform iterative improvements to find approximately optimal solutions [145,146]. On the other hand, they also belong to machine learning techniques because they involve rule-based learning from data and include adaptive mechanisms (utilizing feedback information) [147]. The fact that they are bio-inspired and metaphor-based is a trait that differentiates them from conventional machine learning procedures. It may be said that AISs inherently represent a combination of metaheuristic algorithms with machine learning; i.e., the two areas overlap in them.

The function of an AIS algorithm is based on various functions of the immune system. After encountering antigens (any agents that our immune system sees as foreign and tries to fight off), immune cells trigger an immune response and produce antibodies or activate themselves. In the AIS algorithms, the immune response corresponds to the evolution-based adaptation of antibodies to improve their fitness or affinity to antigens (e.g., through modification of the existing ones or generation of new ones by way of mutation or recombination).

Since they represent a group of procedures, AISs include different algorithms. The most widely used and popular ones among them are the clonal selection algorithm, the artificial immune network algorithms, the negative selection algorithm and the dendritic cell algorithm. Others are algorithms based on the danger theory or on the humoral immune response, the pattern recognition receptor model and the artificial immune recognition system. Some of the mentioned types are themselves groups rather than single algorithms. In the following, we briefly describe some of the best-known AIS algorithms.

Clonal selection algorithms (CSAs) are inspired by an acquired immunity mechanism called clonal selection. The theory involves T cells (lymphocytes that attack and destroy foreign agents) and B cells (lymphocytes that make antibodies). According to it, these cells improve their response to antigens through the process of affinity maturation. Antigens are substances and other agents that trigger a response of the immune system because the system does not recognize them and tries to fight them off: bacteria, viruses, toxins, allergens, foreign particulate matter, etc. In affinity maturation, T-cell-activated B cells produce antibodies with increasingly higher affinity for a particular antigen during repeated exposures to that antigen. CSAs focus on a Darwinian “survival of the fittest” selection process applied to the immune cells. In this maturation process, selection corresponds to the affinity of antigen–antibody interactions, reproduction corresponds to cell division and variation corresponds to somatic hypermutation. Somatic hypermutation is a cell-level mechanism by which the immune system adapts to new hostile foreign elements such as pathogenic microorganisms. Antibodies with improved affinities are selectively cloned and mutated. Thus, a population of diverse improved solutions is generated.

Artificial immune network algorithms are inspired by the interactions of the immune cells within the immune system. The algorithm creates a network graph structure to represent candidate solutions. Each graph node corresponds to a potential solution. The training algorithm generates or removes the interconnections between the nodes on the basis of affinity (which corresponds to the similarity in the search space). This leads to the network graph evolution and promotes cooperation and competition among the network graph nodes.

The negative selection algorithm is inspired by the negative selection mechanism by which the immune system identifies and eliminates self-reacting cells through apoptosis (programmed cell death). These are the T cells that anomalously target and attack the organism’s own cells. The algorithm generates candidate pattern “detectors” and, using normal (self) data as the training set, discards every detector that matches a self pattern. The surviving detectors therefore recognize only non-self patterns. The goal is a high detection rate for anomalous patterns while minimizing false positives. A population of detectors of non-self (antigen) patterns is generated in the process.
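A minimal sketch of the classic real-valued negative selection scheme follows. It assumes data scaled to the unit hypercube, Euclidean distance as the matching rule and a fixed matching radius. Random candidate detectors are censored against the self set, and a pattern is flagged as non-self if any surviving detector lies within the radius. Function names and parameter values are illustrative.

```python
import math
import random


def train_detectors(self_set, n_detectors, radius, dim, seed=0, max_tries=100_000):
    """Censoring phase: keep random detectors that do not match any self sample."""
    rng = random.Random(seed)
    detectors = []
    tries = 0
    while len(detectors) < n_detectors and tries < max_tries:
        tries += 1
        cand = [rng.random() for _ in range(dim)]              # candidate in [0, 1]^dim
        if all(math.dist(cand, s) >= radius for s in self_set):
            detectors.append(cand)                             # matches no self pattern
    return detectors


def is_anomalous(x, detectors, radius):
    """Monitoring phase: a pattern is non-self if any detector matches it."""
    return any(math.dist(x, d) < radius for d in detectors)


# Example: "self" data concentrated in the lower-left corner of the unit square
data_rng = random.Random(1)
self_set = [[data_rng.uniform(0.0, 0.3), data_rng.uniform(0.0, 0.3)] for _ in range(50)]
detectors = train_detectors(self_set, n_detectors=500, radius=0.1, dim=2)
```

By construction, no detector lies within the radius of a training self sample, so the self samples themselves are never flagged. Coverage of the non-self space, and hence the detection rate, grows with the number of detectors.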

The dendritic cell algorithm is based on mimicking the functions of dendritic cells, phagocytic, antigen-presenting cells that activate the immune response and orchestrate the behavior of T cells. In this algorithm, candidate solutions are represented as antigens, while the role of dendritic cells is to capture and disable antigens. The algorithm implements a feedback system through which dendritic cells adapt depending on their interaction with antigens. This is a multi-scale process since it involves different levels, from the molecular networks within a single cell to the whole population of cells.

An artificial immune recognition system combines various immune system elements, including an artificial immune network, negative selection and clonal selection. In other words, it combines different immune aspect-based algorithms to arrive at an improved and robust optimization procedure.

As an illustration of the way the AIS algorithms function, we present here the flowchart of one of the clonal selection algorithms. The flowchart is shown in Figure 20.
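The clone-and-mutate cycle can be made concrete with a minimal CLONALG-style sketch for continuous minimization. It is not the algorithm of Figure 20. It assumes affinity measured by rank (a lower objective value means higher affinity) and a number of clones that decreases with rank. Hypermutation is Gaussian, and its scale shrinks both with affinity and over the iterations. A few antibodies are replaced by random newcomers in every generation. All names and parameter values are illustrative.

```python
import math
import random


def clonalg(f, dim, bounds, pop=20, n_select=5, beta=5, n_random=2,
            iters=150, rho=3.0, seed=0):
    """Minimal clonal selection sketch that minimizes f over the box [lo, hi]^dim."""
    rng = random.Random(seed)
    lo, hi = bounds

    def random_antibody():
        return [rng.uniform(lo, hi) for _ in range(dim)]

    P = sorted((random_antibody() for _ in range(pop)), key=f)
    for t in range(iters):
        step = 0.1 * (hi - lo) * 0.95 ** t              # mutation scale shrinks over time
        clones = []
        for rank, ab in enumerate(P[:n_select]):
            affinity = 1.0 - rank / n_select            # 1.0 for the best antibody
            sigma = step * math.exp(-rho * affinity)    # higher affinity -> smaller mutation
            for _ in range(beta * (n_select - rank)):   # higher affinity -> more clones
                clones.append([min(max(g + rng.gauss(0.0, sigma), lo), hi) for g in ab])
        # Selection: keep the best antibodies among parents and mutated clones
        P = sorted(P + clones, key=f)[:pop - n_random]
        # Receptor editing: random newcomers maintain diversity
        P = sorted(P + [random_antibody() for _ in range(n_random)], key=f)
    return P[0], f(P[0])


# Example: 2-D sphere function on [-5, 5]^2
best, best_val = clonalg(lambda x: sum(v * v for v in x), dim=2, bounds=(-5.0, 5.0))
```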

The particular properties of various types of AISs are often further modified and tailored since the algorithms from this group offer large and flexible customization possibilities. Over the years, different variants and extensions of the AISs have been introduced to adapt the algorithms to different specific applications and to improve their efficiency.

4.4. Anthropological Algorithms (Mimicking Human Social Behavior)

This subsection presents metaheuristic optimization algorithms inspired by human social behavior. The description of the contents of Table 2 is also valid for Table 5. In the single subdivision following Table 5, more details are given about the tabu search algorithm (TSA), although different research teams disagree about which metaheuristic group this algorithm actually belongs in. Tabu (or, spelled alternatively, taboo) is a social phenomenon present in practically all human societies, and the algorithm draws from it both its name and its basic properties. Our reasoning is that it therefore merits inclusion in this group.

Tabu Search Algorithm (TSA)

As mentioned above, the literature contains contradictory information on the correct classification of the tabu (taboo) search algorithm. Different researchers have classified it in different metaheuristic groups, even among the mathematics-based nature-inspired algorithms [96]. However, since its main assumption is based on anthropological habits, it is more often than not categorized among the human social behavior-based algorithms [158].

The word “tabu” (or, in an alternative and more frequently used spelling, “taboo”) is a Tongan expression for a sacred thing that is forbidden to be touched. Almost all human societies have their taboos in one form or another.

Tabu search is a single-solution-based metaheuristic optimization algorithm [97], in contrast to all the other metaheuristics quoted here, which are population-based. It is designed to perform combinatorial optimization utilizing local search methodology. When searching for an improved potential solution, it checks the immediate neighboring solutions in the search space. A peculiarity of this algorithm is that it utilizes memory to remember the previously visited solutions (or the moves that led to them) and to prohibit revisiting them for a certain number of iterations. In other words, these solutions become tabu. The algorithm keeps a list of forbidden solutions (the tabu list) and thus remembers them. The tabu status can be overridden by user-defined rules called aspiration criteria. The most common one admits a tabu move if it yields a solution better than the best one found so far. Another peculiarity of the algorithm is that when no improved solution exists (e.g., when stuck in a local minimum), it allows a worse solution to be chosen, i.e., it relaxes the basic requirement of local search to always strive for a better solution. In this way, tabu search becomes a local search method that is able to escape local minima and continue searching for a global optimum.

Figure 21 shows the pseudocode of the standard tabu search algorithm.

Figure 22 shows the flowchart of the standard tabu search algorithm.
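The procedure described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a transcription of Figure 21: it assumes a bit-string encoding, single-bit flips as the neighborhood, a tabu list of recently flipped positions with a fixed tenure, and the common aspiration criterion that admits a tabu move if it improves on the best solution found so far. The names `tabu_search`, `tenure` and `max_iters` and the subset-sum test problem are hypothetical.

```python
from collections import deque
from typing import Callable, List, Tuple


def tabu_search(
    objective: Callable[[List[int]], float],
    start: List[int],
    max_iters: int = 200,
    tenure: int = 5,
) -> Tuple[List[int], float]:
    """Minimize `objective` over bit strings using single-bit-flip moves."""
    current = list(start)
    best, best_val = list(current), objective(current)
    # Tabu list: short-term memory of recently flipped positions; the oldest
    # entry is dropped automatically once `tenure` entries are stored.
    tabu: deque = deque(maxlen=tenure)

    for _ in range(max_iters):
        move, move_val, move_sol = None, float("inf"), None
        for i in range(len(current)):
            neighbor = current.copy()
            neighbor[i] ^= 1  # flip bit i
            val = objective(neighbor)
            # Aspiration criterion: a tabu move is admissible only if it
            # yields a solution better than the best found so far.
            if i in tabu and val >= best_val:
                continue
            if val < move_val:
                move, move_val, move_sol = i, val, neighbor
        if move is None:  # all moves are tabu and none satisfies aspiration
            break
        # Always move to the best admissible neighbor, even if it is worse than
        # the current solution; this is what lets the search leave local minima.
        current = move_sol
        tabu.append(move)
        if move_val < best_val:
            best, best_val = list(current), move_val
    return best, best_val


# Usage: a small subset-sum instance (find a subset of `weights` summing to `target`).
weights = [3, 34, 4, 12, 5, 2]
target = 9


def subset_sum_error(x: List[int]) -> float:
    return abs(sum(w for w, bit in zip(weights, x) if bit) - target)


best, err = tabu_search(subset_sum_error, [0] * len(weights))
print(best, err)
```

In this run, the zero-error subset (3 + 4 + 2) is reached only through the aspiration criterion: the final improving move re-flips a bit that is still on the tabu list.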

4.5. Plant-Based Algorithms

This subsection presents metaheuristic optimization algorithms inspired by the properties of plant life. The description given for Table 2 also applies to the contents of Table 6. In the single subsection following Table 6, the most popular plant-based algorithm, the flower pollination algorithm (FPA), is described in more detail.

Flower Pollination Algorithm (FPA)

The clade of flowering plants (Angiospermae) comprises the most advanced and most diverse land plants on Earth, with more than 300,000 species currently known. Among the reasons for this dominance in nature is their mode of reproduction by pollination. They bear male and female reproductive cells. The male cells are contained in the pollen, which is borne in the stamens. The female cells are megaspores, whose division creates the egg cell. These are enclosed in the carpel, and one or more carpels form the pistil. As an example, Figure 23 shows the main reproductive parts of a flowering plant (a prickly pear flower).

Flowering plants may be pollinated (i.e., have their pollen brought to the egg cell) in abiotic ways (by wind, rain or dew, or by sheer gravity) or in biotic ways (by insects, birds or mammals). A plant that is pollinated biotically has usually developed a mechanism to attract its pollinators. Most often, such plants form petals that may be brightly colored (petals are sometimes called tepals when they are indistinguishable from the protective sepals). Another way to attract biotic pollinators is through smell, which, depending on the targeted pollinator, may be a fragrance from the human point of view or a stench (e.g., resembling a rotting carcass, which attracts pollinators such as blowflies). In abiotically pollinated plants, the petals and sepals may be completely absent, since there is no need to appeal to animals. A flower may be self-pollinated (the pollen of a flower pollinates the egg cell of the same flower) or cross-pollinated (two different flowers are needed for pollination). Such a system may appear complex, but it has ensured the dominance and diversity of flowering plants.

The flower pollination algorithm (FPA) is a metaheuristic algorithm based on the pollination behavior of flowering plants. It was proposed by Xin-She Yang in 2012 in a widely cited conference paper [165]. The FPA is the most widely published and cited of all plant-based algorithms, having appeared in about 1000 dedicated publications as of May 2023 [97]. The basic version of the algorithm assumes that each plant bears a single flower and that each flower produces only one grain of pollen, so that a candidate solution corresponds to a flower or its pollen grain. Global moves through the search space correspond to biotic cross-pollination, in which the movement of each pollen grain is modeled by a Lévy flight (a type of random walk with heavy-tailed step lengths), a method that has also spread to other metaheuristics [166]. The FPA allows the exchange of information and the choice of improved solutions. In this way, it promotes the exchange of “knowledge” between different flowers/candidate solutions and thus enhances the exploration stage.

A typical FPA procedure begins by initializing a population of flowers/pollen grains, whose positions represent the candidate solutions. In correspondence with the natural pollination process, the FPA lets the flowers interact and exchange information in several ways in the search for better solutions. A flower is selected for reproduction according to its fitness (objective function value) and becomes the pollinating flower (the source). It then perturbs its position in the search space through a random mechanism (controlled by a randomization factor) and generates a new solution, choosing randomly between local and global pollination. The fitness of the new solution (the offspring) is compared with that of the pollinating flower, and, depending on the two fitness values, the original flower is either replaced or retained. These steps are repeated for the whole flower population. Exploration is thus accomplished through random perturbations, while exploitation proceeds through the fitness-based selection process. The algorithm terminates when a satisfactory solution is found or a maximum number of iterations is reached.

Pseudocode for the FPA can be found in [159]. The same article gives the equations describing the FPA, including the procedure for calculating the best solution and the equations for Lévy flight.
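The FPA can be sketched in Python as below. The sketch follows the update rules as commonly stated for Yang's original formulation [165] rather than the exact pseudocode of [159]: with switch probability p, a flower performs global pollination, x_i ← x_i + γL(g* − x_i), where L is a Lévy-distributed step and g* is the current best flower; otherwise it performs local pollination, x_i ← x_i + ε(x_j − x_k), with ε drawn uniformly from [0, 1] and x_j, x_k two randomly chosen flowers. Lévy steps are generated with Mantegna's algorithm (λ = 1.5). The values p = 0.8 and γ = 0.1, the clipping to the bounds, the greedy replacement and the function names are assumptions for illustration.

```python
import math
import random
from typing import Callable, List, Tuple

Vector = List[float]


def levy_step(dim: int, rng: random.Random, lam: float = 1.5) -> Vector:
    """Draw a Lévy-distributed step vector with Mantegna's algorithm."""
    sigma = (
        math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
        / (math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2))
    ) ** (1 / lam)
    return [rng.gauss(0, sigma) / abs(rng.gauss(0, 1)) ** (1 / lam) for _ in range(dim)]


def flower_pollination(
    objective: Callable[[Vector], float],
    lower: Vector,
    upper: Vector,
    n_flowers: int = 20,
    p_switch: float = 0.8,  # probability of global pollination
    gamma: float = 0.1,     # scaling factor of the Lévy step (assumed value)
    max_iters: int = 500,
    seed: int = 0,
) -> Tuple[Vector, float]:
    rng = random.Random(seed)
    dim = len(lower)

    def clip(x: Vector) -> Vector:
        return [min(max(v, lo), hi) for v, lo, hi in zip(x, lower, upper)]

    flowers = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)] for _ in range(n_flowers)]
    fitness = [objective(f) for f in flowers]
    i_best = min(range(n_flowers), key=fitness.__getitem__)
    best, best_val = flowers[i_best][:], fitness[i_best]

    for _ in range(max_iters):
        for i in range(n_flowers):
            x = flowers[i]
            if rng.random() < p_switch:
                # Global (biotic cross-) pollination: Lévy flight relative to the best flower.
                step = levy_step(dim, rng)
                new = [xi + gamma * s * (g - xi) for xi, s, g in zip(x, step, best)]
            else:
                # Local pollination: move along the difference of two random flowers.
                j, k = rng.sample(range(n_flowers), 2)
                eps = rng.random()
                new = [xi + eps * (xj - xk) for xi, xj, xk in zip(x, flowers[j], flowers[k])]
            new = clip(new)
            new_val = objective(new)
            if new_val < fitness[i]:  # greedy replacement of the original flower
                flowers[i], fitness[i] = new, new_val
                if new_val < best_val:
                    best, best_val = new[:], new_val
    return best, best_val


def sphere(x: Vector) -> float:
    return sum(v * v for v in x)


best, best_val = flower_pollination(sphere, lower=[-5.0, -5.0], upper=[5.0, 5.0])
print(best, best_val)
```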

Figure 24 presents a flowchart for the FPA.

There are numerous improved variants and upgraded alternative versions of the FPA. Among others, multi-objective versions of the FPA have been developed [167]. Many hybrids of the FPA with other metaheuristics, with mathematical optimization methods and with machine learning methods have also been reported [168].

5. Hyper-Heuristics

Hyper-heuristic algorithms [169] were first introduced as “heuristics to choose heuristics”, i.e., as an approach that automates the selection or design (generation) of metaheuristic algorithms in order to solve the most difficult optimization problems. The term was coined by Cowling, Kendall and Soubeiga in 2000 [170]. Hyper-heuristics may be learning methods or search procedures. They use conventional heuristics or metaheuristics as their “base” and explore them, seeking strategies to combine them, to select the most convenient ones among them or to generate the optimal ones. Thus, a hyper-heuristic algorithm operates in the search space of heuristics/metaheuristics, in contrast to ordinary heuristics/metaheuristics, which operate in the search space of an optimization problem. Instead of targeting a specific problem space, hyper-heuristic algorithms aim at generality: through a high-level approach, they seek effective strategies that are adaptable to a range of different problems and problem domains. Regardless of the methodology used, they can be implemented as iterative procedures in which a sequence of lower-level algorithms is applied repeatedly, each time attempting to improve the solution(s) from the previous step. Reinforcement learning techniques [171,172] can also be used to automatically learn and improve the hyper-heuristic procedure.

A possible hyper-heuristic workflow starts with an initialization step in which a set of base heuristics, or their constituent parts, is selected or generated. This is followed by an iterative exploration of the search space of possible heuristics/metaheuristics or their parts, in which the available heuristics are adapted or refined or new ones are generated. The final step is the evaluation of the performance of the obtained solutions in the meta-search space and, depending on its results, a check of whether the termination criteria are met. A few common hyper-heuristic approaches are briefly presented below.

5.1. Selection Hyper-Heuristics

Selection hyper-heuristics work with a group of already existing and available heuristic/metaheuristic algorithms: they evaluate the performance of these algorithms in the context of the current problem and select the most promising one according to given criteria. These criteria may be based on previous experience with applying the pre-existing heuristics/metaheuristics to a similar type of optimization problem. Apart from such a history-based approach, the criteria may be based on problem-specific features. Besides selecting the single existing algorithm that best fits the current problem, it is also possible to learn the connections between partial stages of solving a problem and the most convenient heuristics/metaheuristics for those stages.

The two main methodologies used for hyper-heuristic selection are (1) approaches using constructive low-level heuristics/metaheuristics (which incrementally and intelligently build a solution, starting from an empty one) and (2) approaches using perturbative low-level heuristics/metaheuristics (which automatically select and apply heuristics to improve a complete candidate solution) [169].
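A perturbative selection hyper-heuristic can be illustrated with the minimal Python sketch below. It assumes three hypothetical low-level heuristics (LLHs) on bit strings, a roulette-wheel choice proportional to learned scores (a simple reinforcement-style mechanism) and acceptance of non-worsening moves; the reward and penalty values are arbitrary.

```python
import random
from typing import Callable, List, Tuple

Solution = List[int]
Heuristic = Callable[[Solution, random.Random], Solution]


# Hypothetical perturbative low-level heuristics for bit strings.
def flip_one(x: Solution, rng: random.Random) -> Solution:
    y = x[:]
    y[rng.randrange(len(y))] ^= 1
    return y


def flip_two(x: Solution, rng: random.Random) -> Solution:
    y = x[:]
    for i in rng.sample(range(len(y)), 2):
        y[i] ^= 1
    return y


def swap_adjacent(x: Solution, rng: random.Random) -> Solution:
    y = x[:]
    i = rng.randrange(len(y) - 1)
    y[i], y[i + 1] = y[i + 1], y[i]
    return y


def selection_hyper_heuristic(
    objective: Callable[[Solution], float],
    start: Solution,
    heuristics: List[Heuristic],
    iters: int = 2000,
    seed: int = 0,
) -> Tuple[Solution, float, List[float]]:
    rng = random.Random(seed)
    scores = [1.0] * len(heuristics)  # learned utility of each LLH
    current, current_val = start[:], objective(start)
    for _ in range(iters):
        # Roulette-wheel choice: LLHs with higher scores are picked more often.
        k = rng.choices(range(len(heuristics)), weights=scores)[0]
        candidate = heuristics[k](current, rng)
        cand_val = objective(candidate)
        if cand_val < current_val:
            scores[k] += 1.0                       # reward an improving LLH
        else:
            scores[k] = max(0.1, scores[k] - 0.1)  # mild penalty, kept positive
        if cand_val <= current_val:                # move acceptance: non-worsening
            current, current_val = candidate, cand_val
    return current, current_val, scores


# Usage: minimize the number of zeros in a 20-bit string (the OneMax problem).
solution, value, scores = selection_hyper_heuristic(
    lambda x: x.count(0), [0] * 20, [flip_one, flip_two, swap_adjacent]
)
print(value)
```

More elaborate selection mechanisms, such as choice functions or Q-learning, would replace only the scoring and selection lines of the loop.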

5.2. Generation Hyper-Heuristics

During the optimization process, generation hyper-heuristics dynamically generate new heuristics or modify pre-existing ones. Whereas selection hyper-heuristics search a space of complete pre-existing heuristics, generation hyper-heuristics search a space of heuristic components and use these components to build new heuristics. This can be achieved in various ways, e.g., with machine learning methodologies, with genetic programming (an evolutionary approach that “genetically breeds” a population of computer programs in an artificial, evolution-like manner by iteratively transforming the existing ones) [173] or with other search-based techniques.
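As a toy illustration of a generation hyper-heuristic, the Python sketch below builds greedy constructive heuristics for the 0/1 knapsack problem from components (item features combined into a priority rule) and keeps the rule that performs best on a set of training instances. Exhaustive enumeration over small integer coefficients stands in here for genetic programming or another search technique; the feature set, the problem and the instances are assumptions.

```python
from itertools import product
from typing import Callable, List, Sequence, Tuple

Item = Tuple[float, float]           # (value, weight)
Instance = Tuple[List[Item], float]  # (items, capacity)

# Heuristic components: item features that a generated priority rule may use.
FEATURES: List[Callable[[Item], float]] = [
    lambda it: it[0],          # value
    lambda it: -it[1],         # negative weight (prefer light items)
    lambda it: it[0] / it[1],  # value density
]


def make_rule(coeffs: Sequence[int]) -> Callable[[Item], float]:
    """Build a priority rule as a weighted sum of feature components."""
    def priority(item: Item) -> float:
        return sum(c * f(item) for c, f in zip(coeffs, FEATURES))
    return priority


def greedy_knapsack(items: List[Item], capacity: float,
                    priority: Callable[[Item], float]) -> float:
    """Constructive low-level heuristic: add items in priority order while they fit."""
    total_value, load = 0.0, 0.0
    for value, weight in sorted(items, key=priority, reverse=True):
        if load + weight <= capacity:
            total_value += value
            load += weight
    return total_value


def generate_heuristic(instances: List[Instance]) -> Tuple[Tuple[int, ...], float]:
    """Search the space of component combinations and keep the best-performing rule."""
    best_coeffs, best_score = (), float("-inf")
    for coeffs in product(range(3), repeat=len(FEATURES)):
        if not any(coeffs):
            continue  # skip the empty rule
        rule = make_rule(coeffs)
        score = sum(greedy_knapsack(items, cap, rule) for items, cap in instances)
        if score > best_score:
            best_coeffs, best_score = coeffs, score
    return best_coeffs, best_score


training = [
    ([(60, 10), (100, 20), (120, 30)], 50),
    ([(10, 5), (40, 4), (30, 6), (50, 3)], 10),
]
coeffs, score = generate_heuristic(training)
print(coeffs, score)
```

On these two toy instances, the search settles on the pure value rule (1, 0, 0), which beats the familiar value-density rule; other training instances may favor a different rule.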

5.3. Ensemble Hyper-Heuristics

The idea of ensemble hyper-heuristics is to combine two or more lower-level procedures or algorithms into a novel algorithm that covers a number of different strategies for solving the problem in a variety of situations. Combining diverse heuristic/metaheuristic algorithms, i.e., using an ensemble of optimization strategies, means uniting their strengths and merging their results to arrive at a novel, improved method of solution. This approach is related to generation hyper-heuristics.

The techniques used to combine the outputs of such ensembles include weighted averaging, voting, artificial neural networks and other machine learning methods. In this way, a generalized, intelligently combined procedure is obtained that can perform better than the sum of its parts (i.e., better than any of its sub-procedures or sub-algorithms operating independently).
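Of the combination techniques listed above, weighted voting is the simplest to show in code. The Python sketch below merges binary solutions proposed by several heuristics bit by bit; the weights are assumed to be given (e.g., derived from each heuristic's past performance), and the function name is hypothetical.

```python
from typing import List, Sequence


def weighted_vote(proposals: Sequence[Sequence[int]], weights: Sequence[float]) -> List[int]:
    """Combine binary solutions from several heuristics by weighted majority vote.

    Each bit of the result is 1 if the heuristics voting for 1 hold more than
    half of the total weight; ties resolve to 0.
    """
    if not proposals or len(proposals) != len(weights):
        raise ValueError("need one weight per proposal")
    half = sum(weights) / 2
    return [
        1 if sum(w for bit, w in zip(bits, weights) if bit) > half else 0
        for bits in zip(*proposals)
    ]


# Usage: three heuristics propose solutions; the weights might reflect past performance.
proposals = [[1, 0, 1, 1], [1, 1, 0, 1], [0, 0, 1, 0]]
weights = [0.5, 0.3, 0.2]
print(weighted_vote(proposals, weights))  # [1, 0, 1, 1]
```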

6. Hybridization Methods

A relatively common approach in bio-inspired optimization is the hybridization of two or more different techniques, each with its own advantages and disadvantages, in order to amplify their advantages and to reduce or even cancel their disadvantages. To fall within the scope of this survey, at least one of the combined techniques should be biomimetic. Hybrid approaches exploit the complementary strengths of the combined methods, so that the solution quality and accuracy, as well as the efficiency and robustness, of the resulting strategy improve over those of its constituent blocks. The result is a flexible and effective method for solving complex optimization problems. The choice of hybridization type depends on the particular problem and on the required optimization objectives.

The subject of hybrid metaheuristics is almost a separate scientific field in itself. For an excellent overview of its methods, taxonomy and approaches, see [174].

Figure 25 shows a possible classification of hybridization methods, based, with some modifications, on the above-cited work by Raidl. The classification is by no means exhaustive and could be extended to include more methods.

Numerous treatises dedicated to hybridization methods have been written. For the sake of completeness, we mention some selected details here, while being aware that we are only scratching the surface. The following text gives a very short overview of the most pertinent bio-inspired hybridization methods.

6.1. Hybrids of Two or More Metaheuristic Algorithms

The most obvious way to perform hybridization is to combine two or more metaheuristic algorithms into a single hybrid [175]. One possible approach is to switch between separate algorithmic methods at various points of the bio-inspired optimization process. For instance, a hybrid could start with a global algorithm for a general exploration of the search space and then switch to a local search algorithm that explores the immediate vicinity of a solution discovered by the global algorithm and thus refines it. In this way, merging two metaheuristics results in a hybrid that retains the advantages of both constituents. Global search algorithms applicable for this purpose include particle swarm optimization and other globally oriented swarm intelligence algorithms, as well as evolutionary algorithms such as a genetic algorithm, a memetic algorithm or differential evolution. Local search can then be accomplished using non-bio-inspired algorithms, e.g., hill climbing or the very popular simulated annealing. In this way, both exploration and exploitation are boosted compared to the single-algorithm case.
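The switching hybrid just described can be sketched in Python as a global phase of particle swarm optimization followed by a local phase of stochastic hill climbing that starts from the best particle. The inertia-weight PSO variant, its parameter values (w = 0.7, c1 = c2 = 1.5), the Gaussian step size of the hill climber and the Rastrigin test function are illustrative assumptions.

```python
import math
import random
from typing import Callable, List, Tuple

Vector = List[float]
Objective = Callable[[Vector], float]


def rastrigin(x: Vector) -> float:
    """Multimodal test function with global minimum 0 at the origin."""
    return 10 * len(x) + sum(v * v - 10 * math.cos(2 * math.pi * v) for v in x)


def pso(objective: Objective, dim: int, lo: float, hi: float, rng: random.Random,
        n_particles: int = 20, iters: int = 100,
        w: float = 0.7, c1: float = 1.5, c2: float = 1.5) -> Tuple[Vector, float]:
    """Global phase: standard inertia-weight particle swarm optimization."""
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def hill_climb(objective: Objective, x: Vector, fx: float, rng: random.Random,
               step: float = 0.05, iters: int = 2000) -> Tuple[Vector, float]:
    """Local phase: stochastic hill climbing that accepts only improvements."""
    for _ in range(iters):
        cand = [v + rng.gauss(0, step) for v in x]
        fc = objective(cand)
        if fc < fx:
            x, fx = cand, fc
    return x, fx


def pso_then_hill_climb(objective: Objective, dim: int, lo: float, hi: float,
                        seed: int = 0) -> Tuple[float, Vector, float]:
    rng = random.Random(seed)
    x_global, f_global = pso(objective, dim, lo, hi, rng)
    x_local, f_local = hill_climb(objective, x_global, f_global, rng)
    return f_global, x_local, f_local


f_global, x_best, f_best = pso_then_hill_climb(rastrigin, dim=2, lo=-5.12, hi=5.12)
print(f"after PSO: {f_global:.6f}, after hill climbing: {f_best:.6f}")
```

Because the hill climber accepts only improvements, the refined value can never be worse than the PSO result; whether the hybrid reaches the global minimum still depends on the basin in which PSO ends.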

An alternative approach to the hybridization of metaheuristic algorithms is to use ensemble methods, in which two or more metaheuristic algorithms run independently of each other. Their independently determined solutions can then be combined by averaging or weighted aggregation. Such an approach benefits from the diversity of the optimization search processes and can thus contribute to increased accuracy.
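An ensemble of independently running optimizers whose solutions are merged by weighted aggregation can be sketched as follows. Plain random search and hill climbing stand in for full metaheuristics, each member has its own random stream, and the weighting 1/(1 + f) (which assumes non-negative objective values) is one arbitrary choice among many.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]
Result = Tuple[Vector, float]
Objective = Callable[[Vector], float]


def random_search(objective: Objective, dim: int, lo: float, hi: float,
                  iters: int, rng: random.Random) -> Result:
    best, best_val = None, float("inf")
    for _ in range(iters):
        x = [rng.uniform(lo, hi) for _ in range(dim)]
        val = objective(x)
        if val < best_val:
            best, best_val = x, val
    return best, best_val


def hill_climbing(objective: Objective, dim: int, lo: float, hi: float,
                  iters: int, rng: random.Random, step: float = 0.2) -> Result:
    x = [rng.uniform(lo, hi) for _ in range(dim)]
    fx = objective(x)
    for _ in range(iters):
        cand = [v + rng.gauss(0, step) for v in x]
        fc = objective(cand)
        if fc < fx:
            x, fx = cand, fc
    return x, fx


def weighted_aggregate(results: List[Result]) -> Vector:
    """Fitness-weighted average of solutions; assumes objective values >= 0."""
    weights = [1.0 / (1.0 + val) for _, val in results]  # lower value -> larger weight
    total = sum(weights)
    dim = len(results[0][0])
    return [sum(w * x[d] for (x, _), w in zip(results, weights)) / total
            for d in range(dim)]


def shifted_sphere(x: Vector) -> float:
    return (x[0] - 1.0) ** 2 + (x[1] + 2.0) ** 2


# Independent ensemble members, each with its own random stream.
results = [
    random_search(shifted_sphere, 2, -5.0, 5.0, 500, random.Random(1)),
    hill_climbing(shifted_sphere, 2, -5.0, 5.0, 500, random.Random(2)),
    hill_climbing(shifted_sphere, 2, -5.0, 5.0, 500, random.Random(3)),
]
combined = weighted_aggregate(results)
print(combined, shifted_sphere(combined))
```

Averaging solutions is meaningful mainly when the members end up in the same basin of attraction. For a convex objective such as this shifted sphere, Jensen's inequality guarantees that the aggregate is no worse than the worst member; on multimodal landscapes, an average of solutions from different basins can be poor, so it is prudent to keep the best of the aggregate and the individual results.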