1. Introduction

The recent industrial revolution has had a significant impact on the production and manufacturing sectors, with more advancements on the way. Many industry revolutions have come and gone in the world of research and academia with the aim of increasing and modifying the relationship between man and machine, which is accomplished through the intelligent integration of the Internet of Things (IoT) and cyber–physical systems [

1,

2,

3]. The current phase of the industrial revolution, Industry 4.0, has made technology available in a way that allows for seamless and smart decision making, which has generally improved efficiency and revenue in these sectors. One of the most important aspects of Industry 4.0 is its application in the prognostics and health management (PHM) of equipment, which entails detecting anomalies as well as predicting when these systems may fail, thereby providing a healthy and conducive environment for production. Most of the time, this is accomplished through the use of various types of sensors to collect rich data from these machines in collaboration with a machine learning algorithm that sequentially trains to understand the nature of the data and then aids in the detection and early prediction of any future fault occurrences using advanced technology [

4,

5]. Most of the means associated with useful data collection from machines for adequate health monitoring include, but not limited to, vibration sensors, acoustic emission sensors, thermal sensors, current sensors, and so on. The selection of the appropriate sensor to be used on equipment is solely dependent on the nature of the machine, the type of environment, and the researcher’s expertise. The next industrial revolution, Industry 5.0, is intended and projected to be more sophisticated and advanced in the sense that machines not only assist humans but also collaborate with humans in solving technical problems [

6].

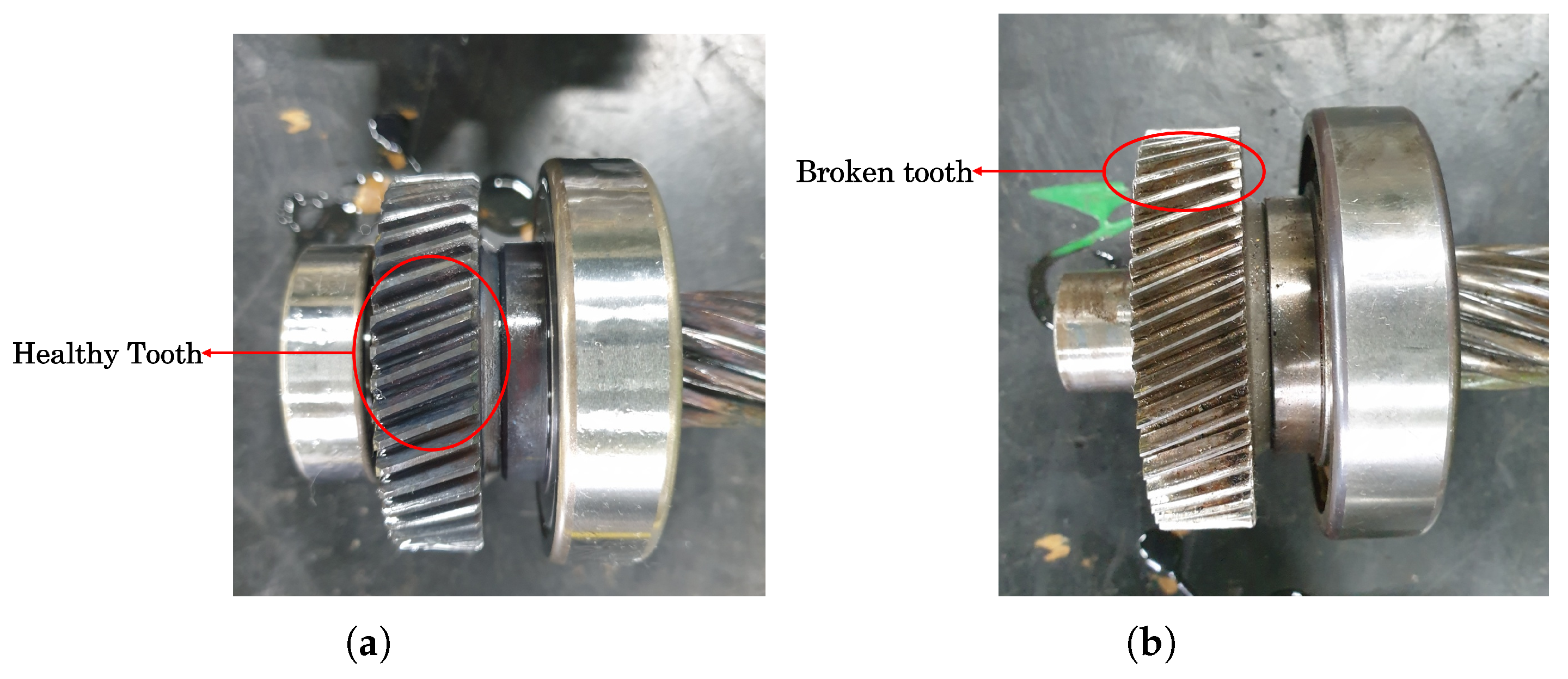

The plastic/fabric extruder machine is a critical piece of equipment in the plastic manufacturing industry. Its working principle entails collecting plastic raw materials through its hopper, which is then directed to the extruder screw, whose pressure and rotation are provided by the gear and an electric motor combined with the machine’s heater pad to melt the plastic raw materials as well as transport them to the chamber where the molten plastic would be used to achieve whatever purpose it has been assigned in terms of shape. Nonetheless, the machine’s effectiveness is dependent on the flawless performance of its components. Among these, the extruder screw stands out as a critical component of the plastic extruder machine. As a result, ensuring the continuing functionality of this specific component becomes critical. The reason for this monitoring is that any fault in the extruder screw could have serious consequences for the manufacturing process. The induction motor provides the required rotary power and torque, while the gear as shown in

Figure 1 reduces the rotational speeds of the extruder screw, resulting in more torque for crushing and transporting raw materials. There has been a lot of research on fault diagnostics and prediction in induction motors [

7,

8,

9], but the focus of this research is on the gearbox, whose failure would be catastrophic in the sense that the operational movement and rotation of the extruder screw, which has been designed to provide the required amount of torque and pressure for the manufacturing process, would be greatly affected. In our study, the gear component of the plastic extruder gearbox is made up of helical gears, which are known for their high contact ratio and thus provide high torque [

10].

While vibrations are frequently anticipated to come from the running gears, vibration sensors are among the most popular sensors for condition-based gearbox monitoring. Unfortunately, unlike spur gears, helical gears are known to produce less noisy and non-stationary vibration signals due to their contact ratio difference; however, noise and non-stationary signal generation are common with gears, which are regarded as challenges for most signal processing methods [

11,

12]. Nonetheless, scientists have devised solutions to this problem, such as fusing vibration data with other sensor data like sound and thermal data, or denoising and decomposing vibration data signals to extract the important spectral properties of the signal [

13,

14]. Most known classes of helical gear failures often cause unusual friction between the meshing and/or mating gear components, which generates heat, making thermal data useful for fault analysis in helical gearbox fault detection and isolation (FDI) [

15].

Generally, time-frequency signal transformations are preferred, especially in helical gearbox vibration signals because they present the signal in such a way that useful information can be easily extracted or detected in a signal, which aids in fault diagnosis. Nonetheless, the AE-LSTM is well recognized for its capacity to identify faults or function in multivariate time-domain signals. As a result, the AE-LSTM is an excellent tool for processing and training a fusion of thermal and vibration datasets for proper FDI [

16,

17]. Machine learning (ML) algorithms have long been used by scientists owing to their efficiency and adaptability to small datasets, as well as their high diagnostic and prognostic accuracy, low computational cost, and ease of implementation. However, due to some of its well-known issues, including their propensity for over-fitting, poor performance with complex datasets, and high parameter dependence, Artificial Neural Network (ANN)-based algorithms, such as Feed-forward neural networks (FNNNs), Long Short-Term Memories (LSTMs), Deep neural networks (DNNs), Deep belief networks (DBNs), Recurrent neural networks (RNNs), Convolutional neural networks (CNNs), etc., have presented the ideal sophisticated diagnostic and prognostic tool, despite their unique challenges such as high computational cost and interpretability issues; however, their uniqueness and robustness in PHM is no match for traditional ML algorithms. Furthermore, the ANNs mentioned which are often referred to as the second generation of ANNs, are recognized for their high computational costs, stemming from their layered architecture which includes hidden layers. Despite this, their efficiency has endured and remains prevalent in recent academic research. Nevertheless, there has been a notable shift in academia toward the adoption of the third generation of ANNs, known as Spiking Neural Networks (SNNs), which offer potential alternatives. SNNs are regarded as the third generation of ANNs, which are known to perform better and also display a lesser computational cost than the second generation ANNs [

18,

19]. The authors of [

19] presented a comparative analysis between artificial and spiking neural networks in machine fault detection tasks using reservoir computing (RC) technology. RC, which acts as an optimizer, employs untrained internal layers (the reservoir), influenced by input and the environment, while optimizing connection weights solely at the output layer. This immutability streamlines learning, a significant advantage that has been shown in various academic journals [

18,

19,

20]. In their research, ball bearing fault detection using an induction motor phase current signal show that second-generation ANN reservoir architecture is significantly inferior both in accuracy and computation cost. Nonetheless, our study utilized second-generation ANNs within the scope of our expertise, as the efficient implementation of SNNs demands a higher level of skill acquisition.

Gearboxes play a critical role in industrial domains, particularly in contexts involving torque transfer, speed reduction, and motion dynamics modification, among other activities. As a result, the consequences of gearbox failure resonate far and wide. Any failure, regardless of the underlying reason, has the potential to produce unneeded downtime. The resulting operational stop reduces productivity by hindering industrial processes. Furthermore, the resulting output shortage directly leads to a revenue loss. The interdependence of gearbox functionality and industrial processes emphasizes the importance of preventive maintenance and constant monitoring to avoid potential failures. Mitigating the danger of gearbox failure by such methods not only ensures operational continuity but also protects against the tangible economic ramifications of stopped output and financial losses. As a result, grasping the basic properties of faults is a fundamental prerequisite in the pursuit of effective fault mitigation within mechanical systems. This includes determining their frequency, patterns of occurrence, and severity. This early understanding serves as the foundation for developing meticulous tactics for correcting these flaws as effectively as possible. A significant insight comes when considering the gearbox in a plastic extruder machine, where the prevalent failures are directly linked with gear-related concerns. While helical gears are more resistant to failure than spur gears, failure is an unavoidable possibility.

Numerous failures have been extensively researched and documented in the academic world. These include broken teeth, fissures, the occurrence of pitting corrosion, uniform wear, axis alignment inconsistencies, fatigue-induced difficulties, instances of impact induced fractures, and the likes. Surprisingly, amid this spectrum of failures, those linked to fatigue phenomena have emerged as the most common [

21,

22,

23,

24]. Notably, the prevalence of fatigue-related failures broadens its impact, serving as a critical precursor for additional severe and catastrophic defects within the system [

24]. Tooth bending fatigue and surface contact fatigue are the two main types of fatigue failure, which are typically linked to issues with gear assembly, misalignment, unintentional stress concentration, and unsuitable material choice or heat treatment [

24,

25]. Gear tooth wear is a similarly common form of failure to fatigue in terms of prominence. This failure mechanism involves the loss of gear material and frequently results from many triggers that include mechanical, electrical, and chemical effects [

25]. Fundamentally speaking, abrasive and adhesive wear are distinguished modes of tooth wear failures. Adhesive wear is characterized by material transfer between teeth, which leads to propensities for ripping and welding, as opposed to abrasive wear, which includes material removal as a result of inter-tooth contact [

25,

26]. Scuffing is a key failure mode that is frequently ignored in gear analysis. This occurrence results from sliding motions interacting with lubricated contacts, which generate high temperatures. These elevated temperatures can consequently cause the surface film that coats the gears to deteriorate, leading to deformations and eventually the melting of the relatively softer gear components [

25,

26,

27].

The accuracy of relying exclusively on vibration signals for precise defect identification may be compromised by the elevated levels of noise and temperature that frequently accompany malfunctioning gear conditions. As a result, many researchers have implemented techniques to improve diagnostic precision. These methods often entail either applying denoising techniques to separate important signals from the noise-contaminated vibration data originating from gear components or combining vibration signals with other sensor outputs to create comprehensive diagnostic models. The incorporation of vibration and acoustic sensor data helped the development of a thorough diagnostic model, as demonstrated by the researchers in [

28]. Their method involved the independent extraction of statistical features from each sensor. Relevant attributes were identified using a cutting-edge feature selection method. In the end, a comprehensive diagnostic model specifically designed to solve chipped gear defects was developed by synergistically combining the chosen features from both sensors. In a different study [

29], the author skillfully combined current and vibration sensors operating over a range of frequencies to create a condition-based monitoring framework for spotting gear wear issues. The study’s conclusions emphasized not only the attainment of desired results but also a calculated approach for reducing the computing demands generally connected with data fusion. This was accomplished by carefully assessing the dataset to only include the most pertinent qualities, and then strategically incorporating statistical and heuristic feature engineering techniques.

Additionally, Zhang Y. and Baxter K. proposed a cross-domain fault diagnostic framework by synergistically combining vibration and torque information from a gearbox in a different exploration [

30]. Their ground-breaking approach addressed a common issue that arises when utilizing different statistics from diverse sensors. To counteract this, they used a fusion strategy in which the various sensor datasets were combined into a single 1-D sample array. Then, as a crucial element of their cross-domain fault diagnostic approach, a CNN-based classifier was used. This innovative method made it possible to integrate several sensor outputs, improving the system’s capacity for diagnostics. Several researchers have made considerable advances in refining sensor fusion approaches, as demonstrated by the approach used in this study [

31]. To build a diagnostic model, the author used a trio of sensors—a vibration accelerometer, a microphone, and sound emission sensors—across a variety of operational circumstances. Their process entailed extracting wavelet features from each sensor’s data stream, followed by identifying relevant features. This technique resulted in a powerful model that validated their intended aim. A similar three-sensor fusion technique was discovered in another study involving the prediction of the remaining usable life (RUL) of a hydraulic gear pump in the presence of variable pollution levels [

32]. The researchers used a Kalman filter-based linear model to smoothly fuse fault features from three distinct sensor data streams—vibration, flow rate, and pressure signals—in this case. These fused properties were then used as an input for a Bidirectional Long Short-Term Memory (BI-LSTM) network, resulting in the creation of a strong RUL architecture.

Due to the inherent characteristics and different origins of sensor data commonly used in sensor fusion, these datasets often contain intrusive background noise, lack stable patterns across time, and depart from a normal Gaussian distribution. As described in this specific research study [

33,

34], these variables collectively restrict the extraction of important information from the data. Consequently, it is necessary to use supplementary signal processing techniques to present these datasets in a way that allows for effective information extraction. In the context of our investigation, the vibration datasets acquired from machinery necessitate undergoing a denoising process. This procedure is critical for extracting relevant information from vibration data. The effectiveness of this process is dependent on the robustness of the signal-processing algorithms used and the expertise of the analyst. Numerous methods for denoising and decomposing signals have been introduced, including discrete wavelet transform (DWT), Bayesian filter-based methods, and empirical mode decomposition, the latter of which is based on the Hilbert Huang transform (HHT) [

35,

36]. Among these techniques, discrete wavelet transform and Bayesian-filter-based algorithms are well-known for their effectiveness and robustness. However, when it comes to performance, empirical evidence has shown that discrete wavelet transform is a better option for both signal denoising and decomposition tasks [

36]. This insight acted as a catalyst for its preferential use in our ongoing inquiry.

One of the primary goals of this research is to properly combine vibration sensor data with thermal sensor data to build a reliable PHM scheme. To achieve this integration, appropriate fusion techniques must be used to develop strong health indicators (HIs) for an efficient diagnostic model [

37,

38,

39]. It is critical to note that, while the requirement for a fusion algorithm is undeniable, the technique to be used is significantly dependent on the specific challenge at hand. Local Linear Embedding (LLE), for example, can be sensitive to the choice of nearest neighbors, whereas Principal Component Analysis (PCA) may encounter difficulties when dealing with datasets having a normal distribution. Independent Component Analysis (ICA), on the other hand, is dependable when dealing with non-Gaussian input distributions, particularly when these inputs display statistical independence, as demonstrated in previous studies [

40,

41]. In a related study [

40], the authors conducted a thorough comparison of Independent Component Analysis (ICA) and Autoencoder (AE) approaches. The goal of this study was to synchronize data collection from numerous IJTAG-compatible Embedded Instruments (EIs) and build a machine learning-based system-level model for forecasting the end of life (EOL) in safety-critical systems that use multiple on-chip embedded instruments. According to the findings of the study, the ICA and EI fusion strategy excelled in capturing latent variables for model training, hence improving the EOL prognostic power. In addition, J. Weidong introduced the FastICA compound neural network, an original ICA-based network that makes use of feature extraction from multi-channel vibration measurements [

42]. This method shows how ICA has the potential to be used as a strong feature extraction tool for challenging sensor data fusion problems.

As a result, the techniques outlined across the spectrum of reviewed research highlight a common theme: the inherent limits of relying simply on vibration signals for diagnosing gear-related difficulties. This collaborative knowledge acts as a catalyst, propelling us to incorporate a unique methodology into our model. Our method combines vibration and thermal sensor data from a plastic extruder machine’s gearbox. While it has been recognized that malfunctioning gearboxes frequently generate heat due to irregular gear meshing, little study has been conducted to harness thermal signals for comprehensive defect investigation which most recorded studies often focus on thermal imagining rather than thermal data signals. This undertaking is a unique step, resulting from the inspiration obtained from the combination of earlier study findings. Therefore, with all these findings in view, the contributions of this sensor fusion plastic extruder gearbox outlier detection fault-based model are highlighted as follows:

A DWT for enhanced vibration signal analysis in plastic extruder gearbox fault diagnosis: By incorporating a DWT strategy, the aim is to extract invaluable insights from the vibration signals entrenched in noise. This technique seeks to bolster the efficacy of diagnosing faults within the plastic extruder gearboxes.

An effective statistical time-frequency domain feature extraction and correlation filter-based selection technique: A method for feature extraction is presented in our investigation, which is particularly effective in the time-frequency domain. Furthermore, a feature selection process based on correlation filters, a technique commonly utilized in feature engineering, is incorporated. This process aims to enhance significant and crucial characteristics, thereby improving the overall performance of the model.

A multi-sensor fusion using the FastICA technique: Our strategy includes a multi-sensor fusion paradigm aided by the (FastICA) technique. The proposed technique harmoniously blends selected information from multiple separate sensor datasets. This fusion not only condenses data to a single-dimensional array but also preserves the unique characteristics of each source.

An AE-LSTM outlier detection using a fused multi-sensor dataset approach: We achieved an outlier detection by leveraging an AE-LSTM, which is enabled by a fusion of multi-sensor data techniques. This comprehensive methodology results in a strong framework ready for defect detection in the context of a plastic extruder gearbox.

A proposed framework validation and proposed global evaluation metrics: A set of global evaluation indicators are provided to validate our suggested approach. These evaluations highlight the framework’s efficiency and efficacy, demonstrating its ability to manage the complexities of defect detection within plastic extruder gears.

The rest of the paper is structured thus:

Section 2 covers the materials and methodologies employed in the paper.

Section 3 presents the results and analytical discussion of the results. Conclusively,

Section 4 and

Section 5 summarize experimental results and conclusion of the study, respectively.

2. Materials and Methods

The choice of materials and methodologies adopted in the event of a study goes a long way in determining the robustness and efficiency of the output. This section explains the necessary materials and the essential principles underlying the key elements that constitute the foundation of our research. These include DWT for denoising and signal decomposition; FastICA for feature dimension reduction; an overview of AE-LSTM; and the proposed outlier detection model which constitutes the materials, processes, sequences, and the application of the process model for fault detection.

2.1. DWT for Denoising/Decomposition Overview

The wavelet transform is a signal analysis mathematical tool. Through a succession of wavelets, it decomposes signals into multiple frequency components at different scales, capturing both time and frequency information. This enables localized signal feature analysis, which is important for tasks like denoising, compression, and feature extraction. These series are produced by orthogonal functions and indicate a square-integrable function, whether real or complex-valued [

33,

34]. Just like DFT and STFT which are often used in situations where the fast Fourier transform (FFT) falls short in performance, the wavelet transform as highlighted earlier is a time-frequency signal process tool that is a unique and efficient tool that can present a signal in an orthogonal or non-orthogonal format using basic a function known as a wavelet [

34,

43]. Generally, the essential difference is in the decomposition approach: the Fourier transform (FT) divides a signal into its sinusoidal components, whereas the wavelet transform employs localized functions (wavelets) that exist in both real and fourier space. Because of this localization in both domains, the wavelet transform can provide more intuitive and interpretable information about a signal. Wavelet transform, as opposed to FT which focuses on the frequency of a signal in most cases, incorporates both time and frequency characteristics, allowing for a more dense study of signals with localized features.

As a mathematical tool, the general equation of a wavelet transform is presented thus in Equation (

1):

a and

represent the scale parameter and the normalization factor for energy conservation, which regulates the dilation of the wavelet function of the transform,

b represents the translation parameter across the time axis,

represents the mother wavelet, and

represents the complex conjugate of the presented mother wavelet.

In academia, the two most prevalent wavelet transforms are discrete wavelet transform and continuous wavelet transform (CWT). Their main distinction is the function used in their computation. For example, in the creation of a DWT, an orthogonal wavelet is frequently used, whereas CWT adapts a non-orthogonal wavelet. Because of the nature of the signal retrieved from the extruder gearbox, which is embedded with noise; hence, the study focused on DWT. DWT is well known for its usage in signal denoising and decomposition into distinct levels. The Discrete Wavelet Transform (DWT) transforms a signal into approximation and detail coefficients at different scales. The approximation coefficients indicate the low-frequency content of the signal, whereas the detail coefficients represent high-frequency features. This iterative technique gives a multiscale examination of the signal, allowing for efficient representation, compression, and signal processing. The coefficients can be used for signal reconstruction and additional analysis.

The general equation for obtaining the wavelet transform is shown in Equation (

2).

k represents the scale or level of decomposition,

m represents the translation or position in each decomposed level,

is the discrete-time signal being transformed,

[

n] represents the discrete wavelet function.

The performance of a wavelet is solely based on the wavelet function (mother wavelet). Therefore, it is important to note that the wavelet function’s specific form differs depending on the wavelet family (e.g., Haar, Daubechies, Morlet, etc.). The aforementioned formulas represent the wavelet transform’s conceptual structure, while the actual computation includes evaluating the integral or sum over the proper ranges.

2.2. FastICA for Dimension Reduction

Primarily, ICA was created to solve the problem of blind source separation in image and audio processing. Its major goal was to extract from observed signals a set of statistically independent components. FastICA was created in response to the potential of ICA for dimensionality reduction, specifically for feature fusion [

40]. In many circumstances, the mutual information among numerous aspects is buried by high-order statistical characteristics, and FastICA is successful at minimizing high-order correlations while maintaining mutual independence among these features. FastICA is thus a useful tool for reducing dimensionality by merging characteristics while keeping their independence [

40,

41,

44].

FastICA is a signal decomposition algorithm that divides observed signals into statistically independent components. It assumes the signals are a mixture of unknown sources and attempts to estimate the original sources by maximizing their independence. The procedure begins by centering the signals and then whitening them to remove correlations and equalize variances. To quantify the divergence from gaussianity in the altered signals, a measure of non-gaussianity, such as negentropy, is used [

43,

44]. FastICA maximizes this metric iteratively by updating the weights of linear combinations of the observed signals. After obtaining the independent components, dimensionality reduction can be accomplished by picking a selection of components that capture the most relevant information or contribute the most to the original signals. The dimensionality of the data is efficiently decreased by removing less relevant components. The reconstructed signals can then be obtained by projecting the independent components back. For our study, FastICA is presented due to its prowess in fault detection scenarios the more discriminant the data the better it is for the training model to easily adapt and classify and/or detect the presence of abnormality in a set of data.

2.3. Correlation Coefficients

Correlation coefficients are statistical measurements that assess the degree and direction of a relationship between two variables. The Pearson correlation coefficient, Spearman rank-order correlation coefficient, and Kendall rank correlation coefficient are three regularly used correlation measurements. The Pearson correlation coefficient evaluates the linear relationship between variables. It is calculated by dividing the covariance of the variables by the product of their standard deviations. Pearson correlation coefficients vary from −1 to 1. A value of −1 indicates a strong negative linear association, 0 shows no linear relationship, and 1 suggests a strong positive linear relationship. It is commonly symbolized by the symbol (rho). The Spearman rank-order correlation coefficient, on the other hand, is a non-parametric statistic that assesses the strength of a monotonic relationship between variables. It is based on the data ranks rather than the actual data values. Its range, like the Pearson correlation coefficient, is from −1 to 1, with −1 indicating a strong negative monotonic association, 0 suggesting no monotonic link, and 1 indicating a strong positive monotonic relationship. The Kendall rank correlation coefficient is another non-parametric statistic that assesses the strength of the monotonic association between variables. It takes into account the number of concordant and discordant pairs in the data.

Thus, in our study and the majority of studies involving linear variables, the Pearson correlation coefficient is frequently selected above alternative correlation coefficients. The other two types, however, operate more effectively than the Pearson correlation in situations involving non-linear variables. The general mathematical expression for Pearson correlation, Spearman correlation, and Kendall rank correlation are represented in Equations (

3)–(

5). The correlation coefficient has generally been used successfully in academia for feature reduction, selection, diagnostics, prognosis, and other tasks. The Pearson coefficient was used in this study to extract meaningful and discriminant features, which is essential for effective problem diagnosis and fault detection [

7,

8,

43,

45].

represents the Pearson correlation coefficient,

n represents the number of data points,

x and

y represents the variables being compared,

represents the Spearman correlation coefficient,

d represents the difference between the ranks of corresponding data points of the two variables,

represents the Kendall rank correlation coefficient,

P and

Q represent a concordant pair (pairs of data points for which the ranks of both variables follow the same order) and discordant pairs (pairs of data points for which the ranks of the two variables follow opposite orders), respectively, and

and

represent the variables being compared.

2.4. Autoencoder

AEs are a form of artificial neural network that is used to learn input data representations. They are made up of three basic parts: an encoder, a bottleneck layer, and a decoder. In the bottleneck layer, the encoder maps the input data to a compressed representation. The bottleneck layer acts as a bottleneck for information flow, lowering the input’s dimensionality. The latent space representation is the learned representation in the bottleneck layer. The decoder attempts to recover the original input data using the latent space representation. The AE’s purpose is to reduce the reconstruction error, which is the difference between the input data and the reconstructed output.

By defining the problem as a supervised learning task, AEs can be trained with the aid of unlabeled data. The goal is to produce an output that closely resembles the original input. This is accomplished by reducing the reconstruction error, for instance (

x,

), where

x is the initial input sequence and

is the resultant reconstruction sequence. The AE learns to extract relevant features from input data and build a compressed representation in the latent space by iteratively modifying the network’s parameters. As a result, AEs can be used for tasks like dimensionality reduction, data denoising, and anomaly detection [

3,

46,

47,

48].

2.5. Long Short-Term Memory (LSTM)

LSTM networks were created to get around regular RNNs’ limitations when processing lengthy sequences. To capture and hold long-term dependencies in sequential data, they contain memory cells and gating mechanisms. A memory cell used by LSTMs serves as a conveyor belt for information as it moves through the sequence. Long-term memories are stored in the cell state, and what should be discarded is decided by the forget gate. The output gate regulates the output depending on the cell state, whereas the input gate controls fresh information that is added to the cell state. Because they have the capacity to learn and spread pertinent information over lengthy sequences, LSTMs excel at jobs involving sequential data.

The mathematical expression for the LSTM architectural structure is defined with the following Equations (

6)–(

12):

i,

f,

O represents the input, forget, and output gates,

describes the current input to the LSTM architectural structure,

,

,

,

represents the cell state, previous cell state, the hidden cell state, and the previous hidden cell state, respectively, and

,

W,

b represents the sigmoid function, weight and bias of each gate [

49,

50,

51,

52].

For a more insightful explanation of the structure of the LSTM; LSTMs employ gates that permit selective information memory retaining and forgetting, allowing them to update the cell state based on the current input and past state. The input gate applies an activation function to the input and previous hidden state (such as sigmoid, ReLU, or softmax), yielding values between 0 and 1. These values are then multiplied element by element-wise with the input, with their importance scaled accordingly. The forget gate generates values between 0 and 1 by applying a sigmoid function to the input and prior concealed state. These values are then multiplied element by element with the prior cell state, with the previous values scaled according to their importance. Values between 0 and 1 are produced by the output gate after applying a sigmoid function to the input and prior concealed state. The output of applying a hyperbolic tangent function to the current cell state is then multiplied element-wise by these values to produce the LSTM’s final output. A vector of values that is updated at each time step makes up the cell state of LSTMs. Utilizing the current input, the prior cell state, and the prior concealed state, the cell state is updated. Following that, the hidden state, which is utilized to make predictions, is updated using the revised cell state [

53,

54,

55].

2.6. The Proposed Outlier Detection Model

In the study, an AE-LSTM deep learning approach is employed to create an anomaly detection model. Anomaly detection entails recognizing patterns that differ clearly from the usual pattern in a given dataset. Anormality detection seeks to distinguish uncommon datasets, known as anomaly datasets, from normal datasets. Many strategies have been developed in academia to detect anomalies [

3,

8,

45,

56,

57], such as statistical methods, machine learning algorithms and data visualization approaches; supervised, semi-supervised, and unsupervised learning approaches; outlier detection; the clustering technique; and so on, are some of the commonly used techniques employed for anomaly detection, where presented models learn the normal patterns or structures from the data without explicitly labeled anomalies. Once trained, the models can detect outliers from learned usual behavior and highlight them as potential abnormalities.

Figure 2 displays the anomaly detection model employed in our study. We employed an outlier detection methodology with the aid of an AE-LSTM deep learning approach. The model consists of five major steps which are summarized below.

Data acquisition: Both vibration and thermal data were collected in order to construct an appropriate model for monitoring extruder gear performance. The incorporation of several data sources is prompted by the fact that vibration signals obtained from gearboxes are prone to noise contamination, making it difficult to extract valuable insights on their own. A more comprehensive and useful picture can be built by adding additional data, such as temperature measurements. Vibration data are critical for detecting anomalies or inconsistencies in the operation of the gear. However, because of the existence of noise, it is frequently impossible to distinguish important patterns or trends purely from vibration signals. This is when the extra thermal data come into play. By combining vibration and thermal data, it is possible to identify hidden links and correlations between the performance of the gear and the accompanying temperature fluctuations. The use of both vibration and thermal data seeks to improve the accuracy and usability of the model built to monitor the extruder gear. This method allows for a more comprehensive study, allowing for the detection of potential problems such as high friction, overheating, or abnormal operating circumstances. Finally, by combining multiple data sources, a more robust and efficient model may be constructed, providing useful insights for optimizing extruder gear performance, maintenance, and dependability.

Signal processing and feature extraction: The second key aspect of the model revolves around signal processing, with the aim of extracting valuable information from gearbox vibration data while minimizing the inherent noise. The DWT was used as a method for deconstructing, filtering, and pre-processing the vibration signals to achieve this. The DWT extracted time-frequency statistical information from both the original signal and each vibration signal decomposition level. A full analysis of the vibration data was performed by performing decomposition at various levels, collecting variances across different scales and frequencies. Thermal data, on the other hand, as a time-varying signal, did not go through decomposition. Instead, from the raw temperature data, time statistical features were extracted. The goal of this method was to capture the temporal patterns and trends revealed by temperature readings. The study aims to improve the quality and usability of the information gained by applying the DWT to vibration signal processing and extracting time statistical features from temperature data. This methodology allowed us to identify key trends, correlations, and anomalies in the vibration and temperature data, allowing us to gain a more comprehensive understanding of the extruder gear’s behavior and performance.

Feature selection: To obtain an effective diagnosis in the setting of anomaly detection, discriminant traits are required. A correlation filter technique was used to guarantee that the features extracted had enough discriminative power. This technique ensures that only features with a correlation percentage of 70% or above are deemed closely connected. By removing characteristics that do not match this correlation threshold, the resulting feature set is tailored to include informative and discriminating features, improving the accuracy and effectiveness of the diagnosis process.

Signal fusion: The integration of data from numerous sources while keeping their different characteristics is a critical step in our suggested model’s signal data fusion. FastICA was used as the signal-processing method in our study for this reason. FastICA aided us in the merging of data from several sources, allowing us to mix and extract important information while preserving the distinctive qualities of each data source. We accomplished effective signal integration using FastICA, allowing for a thorough analysis that captures the synergistic effects and correlations across the various data sources in our investigation.

Diagnosis/outlier detection: The entire model’s procedures are built with the goal of detecting faults, specifically through outlier detection. The model’s structure is deliberately constructed to accomplish this aim. As for the AI tool of choice in our investigation, an AE-LSTM was employed. Details concerning the implementation and operation of the AE-LSTM have been discussed earlier in this section. The overarching goal is to use this AI tool to discover issues by finding anomalies in data, allowing for prompt diagnosis and intervention.

2.6.1. Model Hyper-Parameter Function

In the hidden layers of neural networks, activation functions are used to introduce nonlinearity, which is critical for representing complex input. For instance, linear regression models are insufficient for most data representations because they lack nonlinear activation functions. Sigmoid, tanh, and ReLU (Rectified Linear Unit) are examples of common activation functions that are often employed in deep-layer neural networks. In binary classification tasks, the sigmoid function transfers inputs to a range of 0 to 1. However, given big input values, it can saturate, inhibiting learning. The tanh function is similar to the sigmoid function; however, it maps inputs to a range of −1 to 1.

On the other hand, ReLU has grown in popularity as a result of its capacity to improve training efficiency and effectiveness. Positive inputs are kept while negative inputs are set to 0. ReLU can experience the “dying ReLU” problem when neurons stuck in the negative area become inactive, despite its simplicity and computational efficiency. Loss functions, also known as cost functions, estimate how much the actual ground truth departs from the outputs that were projected. Various task kinds are catered for by various loss functions. Cross-entropy loss is appropriate for classification jobs while mean squared error loss is frequently utilized for regression activities. When developing deep learning models, the loss function is minimized by changing the model’s weights and biases. Iterative optimization is used to improve the model’s performance and accuracy. The mathematical equations for some of the regularly employed activation functions

,

, and

are presented in Equations (

13)–(

15), respectively.

,

,

represent sigmoid, relu and softmax functions, respectively,

x represents the input class which can be any real number.

The success of a model is often determined by the architecture chosen, a decision that is often reliant on the researcher’s knowledge and experience.

Table 1 details the Architecture Parameters of the model used in our analysis.

2.6.2. Model Global Performance Evaluation Metrics Overview

It is critical to thoroughly examine the diagnostic skills of various deep learning while taking into account variables like model complexity, computational needs, and parameterization in order to accurately estimate their capabilities. This makes the use of defined criteria for assessing performance and discriminating necessary. These parameters include F1-score, accuracy, sensitivity, precision, and false alarm rate. By using these measurements, we can compare and objectively assess the performance of various models, allowing us to make well-informed decisions based on their individual advantages and disadvantages. Some of the known global evaluation metrics employed in studies are presented thus in Equations (

16)–(

20).

where

and

, respectively, are the numbers of accurately classified groups, numbers of inaccurately classified groups, numbers of inaccurately labeled samples that belong to a group that was accurately classified, and the number of inaccurately labeled samples belonging to a group that was inaccurately classified. It is essential to evaluate categorization models in order to judge their effectiveness and dependability. Although metrics like true positives (

TP), false positives (

FP), true negatives (

TN), and false negatives (

FN) give a general picture of classification accuracy, it is frequently required to assess the performance of each specific class to get a more complete picture. Take the case of a classifier that completes a five-class issue with an overall accuracy of 95%. This apparently great accuracy may really be the consequence of the model’s ability to categorize three or four of the five classes accurately while misclassifying the other one or two classes. However, in the case of an outlier detection model as in the case of our model, determining these metrics helps assess the model’s performance in accurately identifying outliers while minimizing false positives and false negatives, ensuring effective outlier detection not just for the instance but also when employed in other instances.

This discrepancy underlines the necessity for the confusion matrix, which enables us to assess the diagnostic efficacy of each class in a model. The confusion matrix gives a thorough examination of the predictions made by the classifier/outlier, dividing them into true positives, false positives, true negatives, and false negatives for each class. a model’s performance may be evaluated more carefully by understanding where it performs best or worse by examining its confusion matrix.

In conclusion, while global metrics offer an overall evaluation of classification accuracy, assessing class-specific performance using the confusion matrix is essential to spot any inconsistencies or biases and to make defensible choices about the validity of a classification model.

2.7. Data Collection and Pre-Processing

This section discusses the data acquisition process, sensor placement, signal processing, feature extraction, feature selection, and signal fusion. The data employed in the study were acquired from two independent plastic extruder machines (healthy and faulty machines) in SPONTECH.

SPONTECH is a subsidiary of Toray Inc. (Tokyo, Japan); Toray Co. is Japan’s premier chemical and textile conglomerate, with an unrivaled No. 1 position in carbon fiber, as well as Japan’s leading material giant, producing engineering plastics, IT materials, and chemical fibers in addition to carbon fibers.

Figure 3 depicts the setup of individual sensors on the plastic extruder machine. These sensors are deliberately critically placed to collect crucial information that will be used to generate a dataset with useful characteristics when examined. As previously stated, two plastic extruder machines were used in this study. The first machine as seen in

Figure 4a had been running for less than four months, and its data is designated as the healthy dataset.

The second machine, represented in

Figure 4b, had been in service for more than two years and had a chipped gear tooth. This machine (with the chipped gear tooth) was used to create the faulty dataset. In the study, evaluation and analysis of the variations between the healthy and faulty situations are achieved by incorporating data from these two machines in order to get insights into the performance and potential concerns of the plastic extruder machines.

Figure 5a,b depicts a visualization of time-domain data gathered from the vibration and thermal sensors respectively in order to have a better understanding of the data collected from both machines. In our investigation, a shear Piezotronics accelerometer model 353B33 with a vibration sensitivity of 99.2 mv/g was employed for our research. To acquire thermal data signals, an RTD PT100 thermal sensor was used to collect gearbox-generated heat temperature variation. This visualization provides a comprehensive perspective of the data collected from various sensors, allowing us to assess and comprehend the nature of the data signals gathered from the plastic extruder machines used in our research.

From observation in

Figure 5a, a little distinction can be seen between the vibration data generated from the healthy and faulty gearbox conditions. The healthy data displayed a uniform periodic pattern throughout the whole range of the dataset, occasionally modulated at various intervals. On the other hand, the faulty data visualization shows a non-consistent behavior across the whole data range; the early part displayed a non-constituent data display while the remaining part of the dataset displayed to an extent a uniform visualization of the dataset. However, it is important to note that this visual representation alone might not necessarily indicate the needed discriminative information for ensuring the effectiveness of an anomaly detection model.

Additionally,

Figure 5b shows a comparable data visualization of temperature signals for both the healthy and faulty gearbox. On the other hand, it is noticeable that the temperature measurements in the healthy dataset are a little lower than those in the faulty dataset. The difference is normal given that a damaged gearbox will probably produce higher temperatures than a healthy one. These temperature changes can help spot abnormalities in gearbox performance and offer useful insights into possible variances between the two circumstances.

The substantial quantity of noise contained in the vibration signals produced by gearboxes must be addressed in order to efficiently extract or enhance vital information. As a result, a DWT is used to de-noise the signals for both healthy and malfunctioning gearboxes. The visual representation of the decomposition and denoising of the vibration signal received from the gearboxes is shown in

Figure 6. By separating the wanted signal components from the noise using this method, the study was able to emphasize and extract the essential data required for additional analysis and diagnostics.

The Discrete Wavelet Transform (DWT) was applied to both the healthy and faulty gearbox signals, as shown in

Figure 6a,b, resulting in a four-level decomposition. This decomposition efficiently decreases the effects of noise in the signals, revealing the time-frequency domain properties of the processed signals. As discussed earlier in the previous chapter, the DWT decomposition generates the approximate and detailed coefficients that represent the signal’s low and high frequencies, respectively. In our investigation, our concentration was primarily on approximate coefficient because it offers more detailed information on the gearbox signal’s significant frequencies and features.

2.7.1. Feature Extraction

DWT are signal processing tools that uniquely transform a signal to its time-frequency domain; thus, time-frequency domain features are frequently used to ensure that useful information is successfully extracted from these signal-presenting features that are rich and contain all of the useful details of a given signal. In our investigation, a multi-sensor approach is employed with only the vibration signal being subjected to a DWT; on the other hand, only time-domain statistical features were extracted from the thermal sensor dataset, which is a time-variant data.

In this study, we used sixteen statistical features in the time domain to evaluate temperature data. In addition, we all employed sixteen time-frequency domain features in analyzing the DWT decomposition of the vibration signal as well as the original signal; the sixteen statistical time-frequency features included twelve time-domain features and four frequency-domain features as summarized in

Table 2. Our goal was to extract useful information from the signals in order to improve the model’s efficiency.

It is vital to highlight that no precise criteria were adhered to while selecting statistical features. Instead, our choice was based on the popularity of specific characteristics in the area and the authors’ experience.

2.7.2. Feature Selection and Sensor Fusion

To evaluate the adequacy of the extracted features for our model, we conducted a discriminant test using a Pearson correlation-filter-based approach. This method involved assessing the correlation between features and dropping the features with a correlation of 70% or more leaving behind features below the 70% similarity threshold.

Figure 7 and

Figure 8 show the correlation plot for both the thermal and vibration extracted features in our study, respectively.

Furthermore, the correlation filter-based model selected five features from the thermal data (shown in

Figure 9a) and seven features from the vibration data (shown in

Figure 9b). This feature selection process efficiently reduced the dataset, retaining only the relevant and most discriminant features necessary for optimal model performance.

To integrate the multi-sensor data in our study, FastICA (fast independent component analysis) was used to combine multi-sensor data in our investigation. This method was used to keep the distinguishing characteristics of each sensor’s separate qualities while blending them together. The FastICA ensures that the fused data keep the distinct properties of each sensor, allowing us to gather and exploit the essential information from all sensors in a cohesive manner.