Improved Results on Delay-Dependent and Order-Dependent Criteria of Fractional-Order Neural Networks with Time Delay Based on Sampled-Data Control

Abstract

1. Introduction


- A novel class of Lyapunov–Krasovskii functionals (LKFs) is constructed that incorporates both time-delay and fractional-order information, so as to reduce the conservatism of the stability criteria.


- A new method is proposed that uses free-weighting matrices to express the relations among the terms of the fractional-order Leibniz–Newton formula for fractional-order neural networks (FONNs) with time delay. Because is very difficult to deal with, handling it directly would require constructing additional functionals, which may introduce extra conservatism and computational complexity. With the proposed method, the estimation of can be avoided.


- Compared with existing results, a less conservative stability criterion for FONNs is established, which allows a longer sampling period. Moreover, the method is also applied to the stability analysis of fractional-order linear time-delay systems.


- Based on the obtained stability criteria, a sampled-data controller for the FONNs is designed. The results are expressed as linear matrix inequalities (LMIs), which makes them easy to compute and apply.
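To make "sampled-data controller" and "longer sampling period" concrete, here is a minimal simulation sketch under stated assumptions. It is a toy scalar Caputo system $D^{\alpha}x = a x + u$ (not the authors' FONN model) with a zero-order-hold feedback $u(t) = -k\,x(t_k)$ on $[t_k, t_k + T)$, discretised with an explicit Grünwald–Letnikov scheme. The plant parameters `a`, `k`, `x0`, the step `h`, and the helper names `gl_weights` and `simulate_sampled` are hypothetical illustrations; the gain is picked by hand, not designed from the paper's LMI conditions.

```python
def gl_weights(alpha, n):
    """Grünwald–Letnikov coefficients w_j = (-1)^j * C(alpha, j) for j = 0..n."""
    w = [1.0]
    for j in range(1, n + 1):
        w.append(w[-1] * (1.0 - (alpha + 1.0) / j))
    return w


def simulate_sampled(alpha, a, k, x0, T, h=0.01, t_end=10.0):
    """Toy sampled-data loop for the scalar Caputo system D^alpha x = a*x + u.

    The feedback u(t) = -k * x(t_k) is held constant (zero-order hold) on
    [t_k, t_k + T). The Caputo derivative is discretised with an explicit
    Grünwald–Letnikov scheme applied to x - x0. Returns the samples x(n*h).
    Assumes T is (close to) an integer multiple of h.
    """
    n_steps = int(round(t_end / h))
    m = max(1, int(round(T / h)))      # integration steps per sampling period
    w = gl_weights(alpha, n_steps)
    x = [x0]
    for n in range(1, n_steps + 1):
        s = ((n - 1) // m) * m         # index of the latest sampling instant t_k
        u = -k * x[s]                  # control value held since t_k
        f = a * x[n - 1] + u           # right-hand side at the previous step
        memory = sum(w[j] * (x[n - j] - x0) for j in range(1, n + 1))
        x.append(x0 + h ** alpha * f - memory)
    return x


# Hypothetical unstable plant (a = 1) stabilised by a sampled gain k = 3.
traj = simulate_sampled(alpha=0.9, a=1.0, k=3.0, x0=1.0, T=0.05)
print(f"|x(10)| = {abs(traj[-1]):.4f}")
```

With `alpha=1.0` the weights reduce to $w_1 = -1$ and $w_j = 0$ for $j \ge 2$, so the scheme collapses to forward Euler, which is a convenient sanity check. Rerunning the toy loop with larger `T` shows how the held control degrades the closed-loop behaviour; no particular admissible bound is claimed for this example.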

2. Preliminaries

- For any and ,

- For any and ,

- , for any , where , is a symmetric positive definite matrix.

- ;

- .

3. Main Results

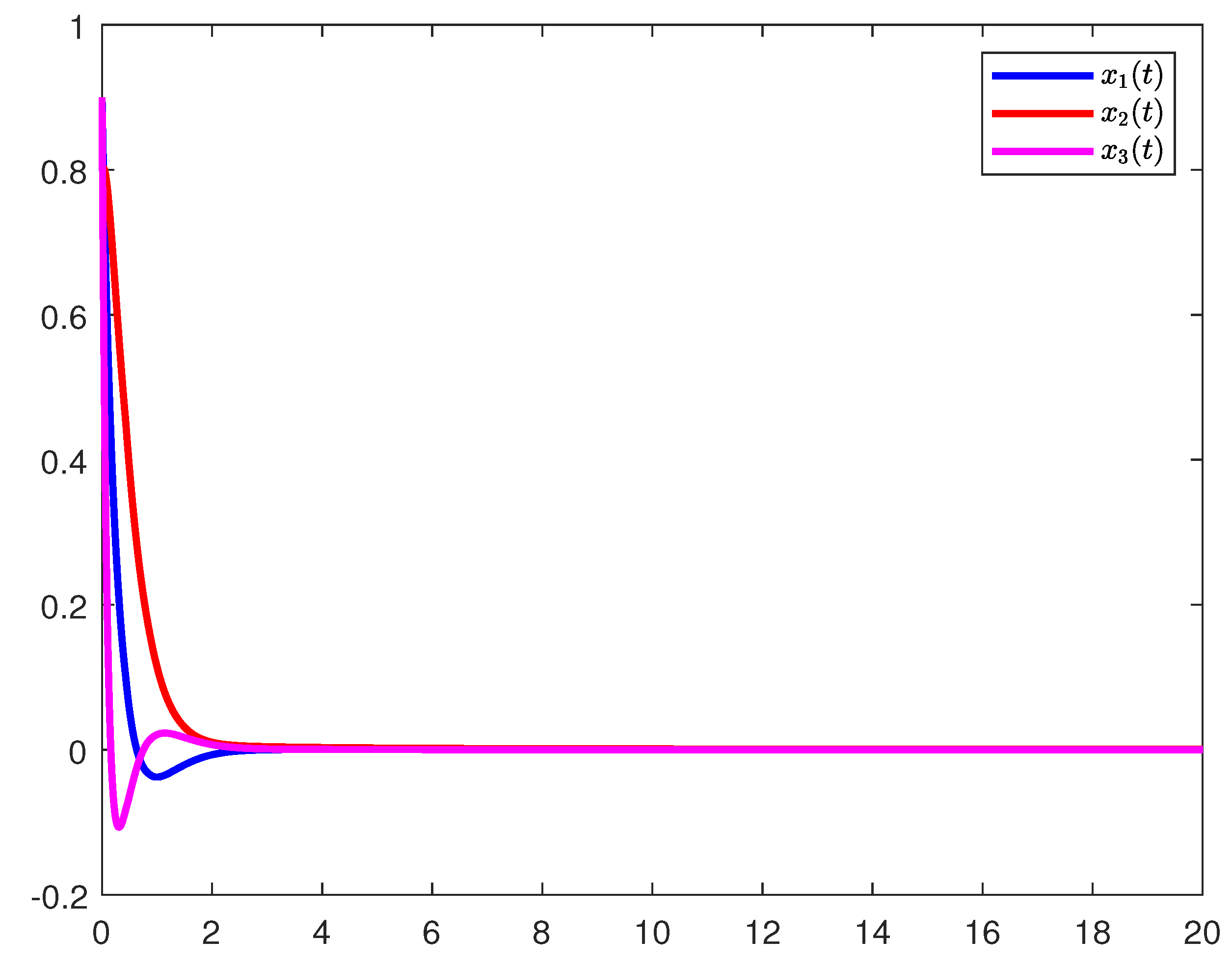

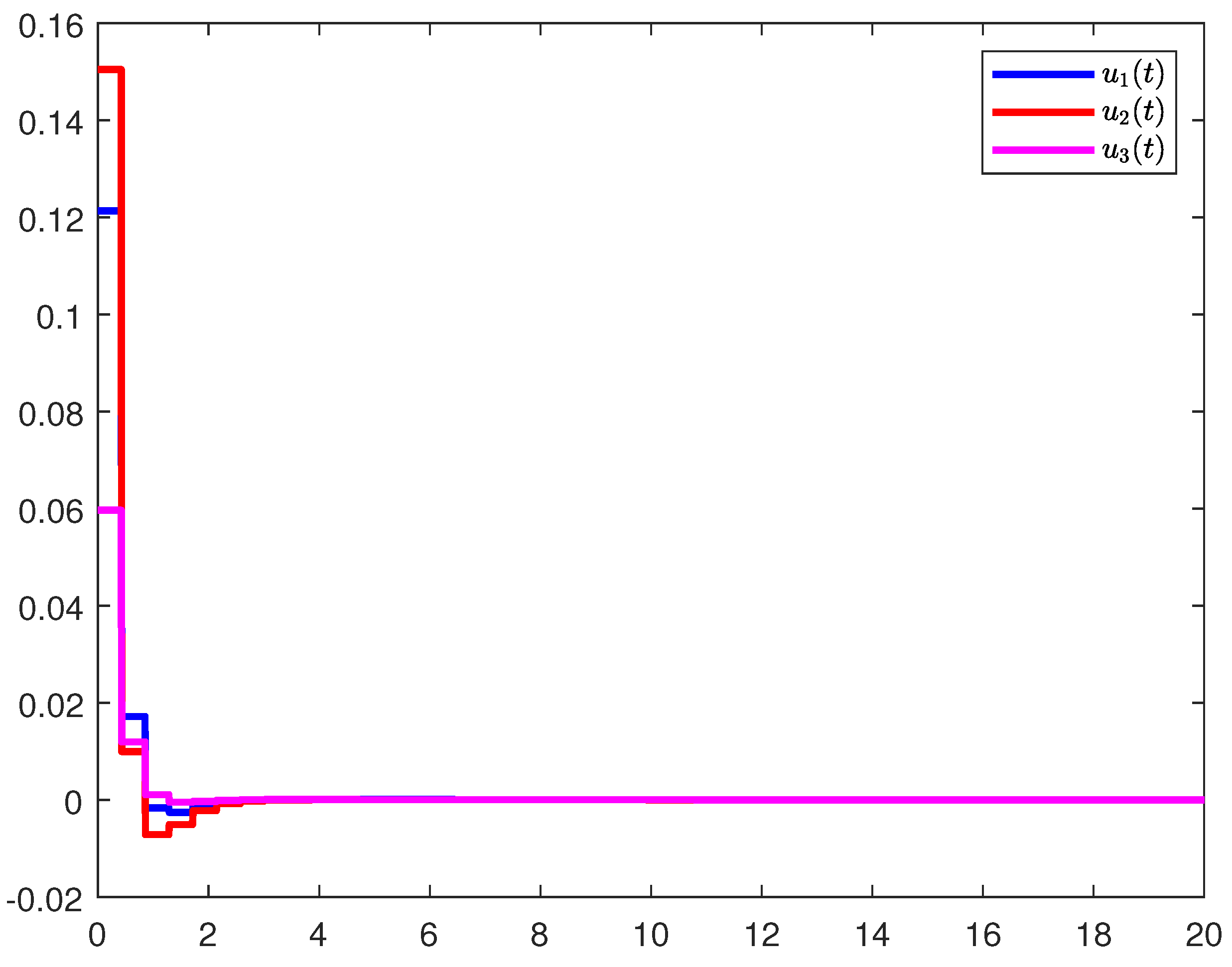

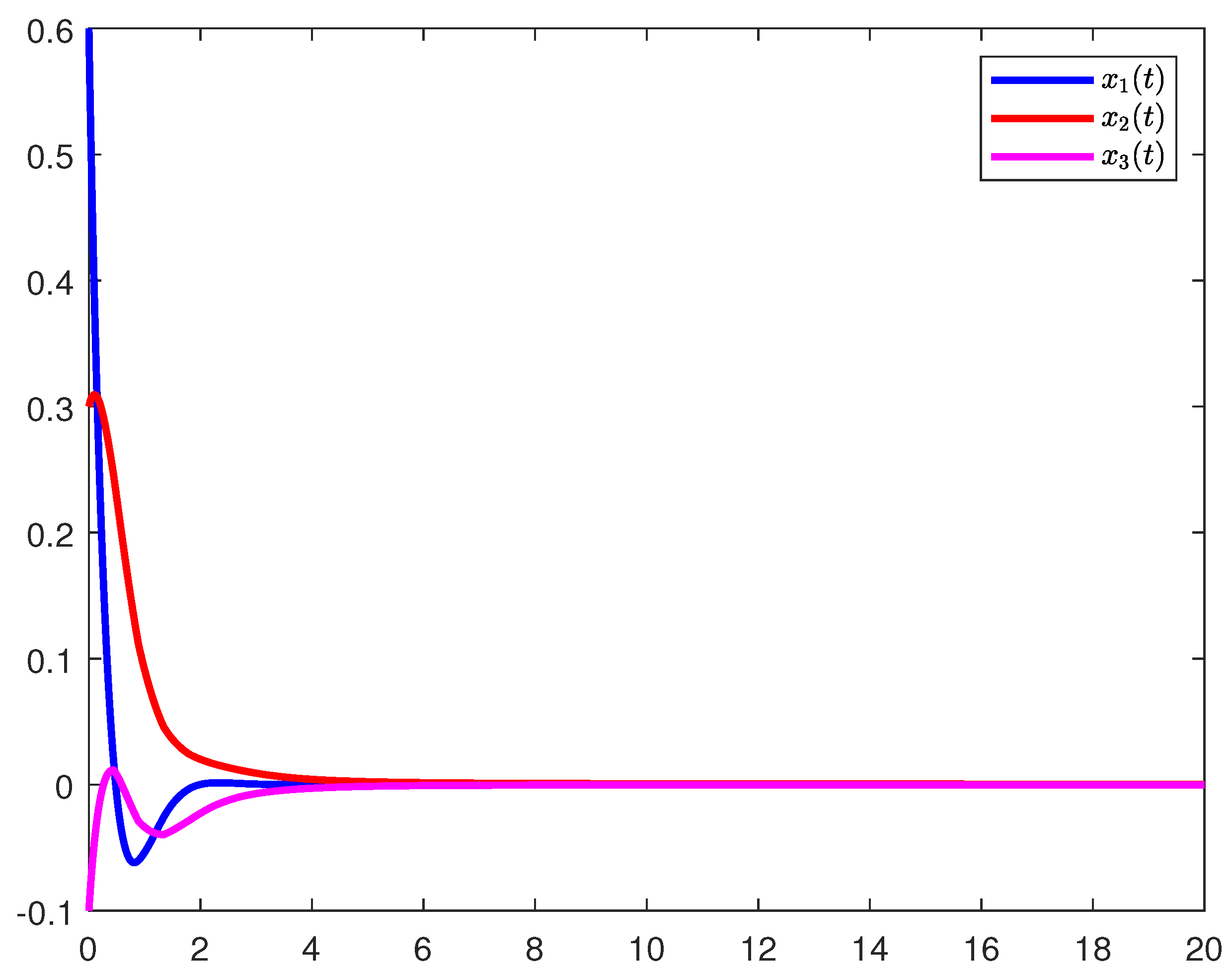

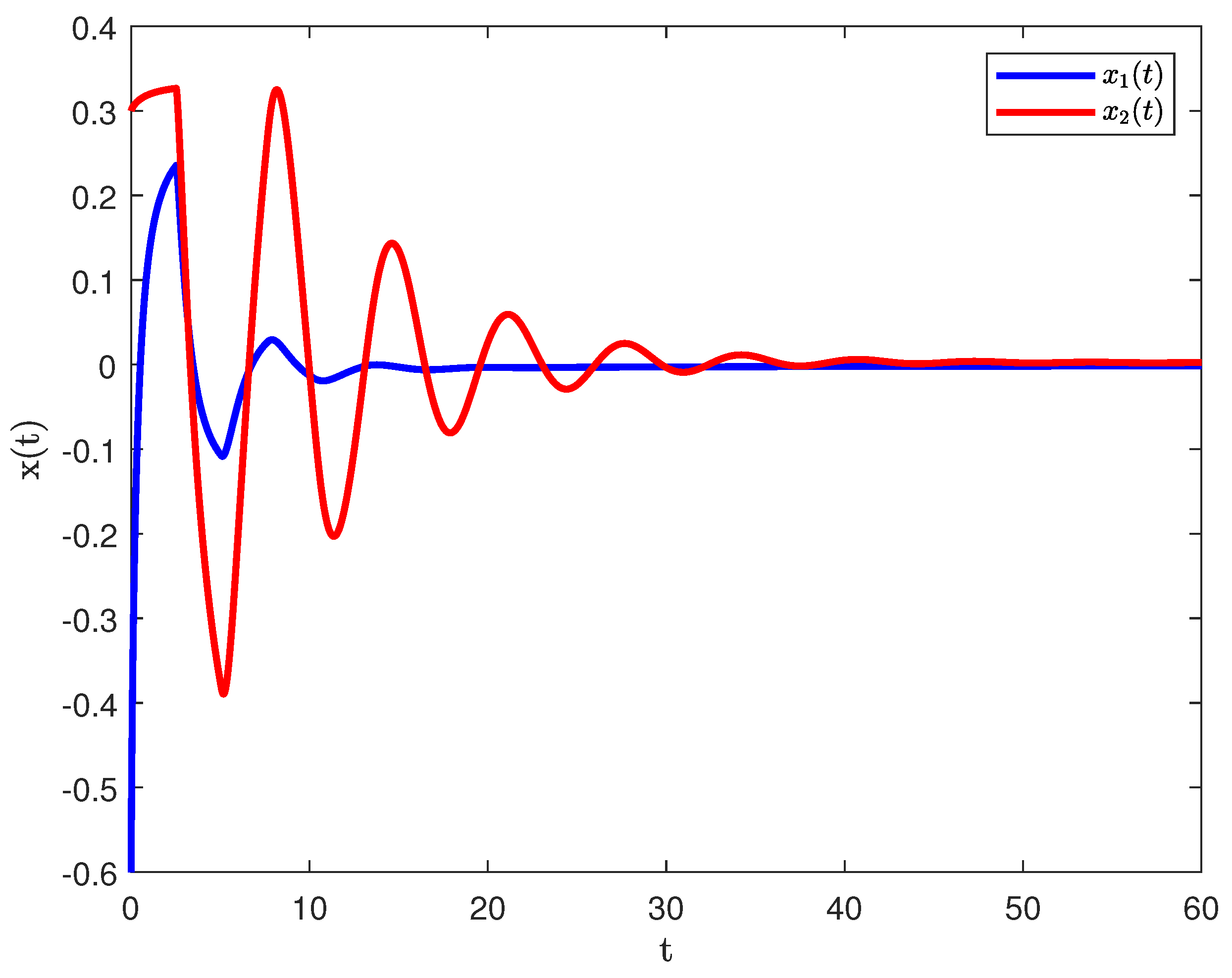

4. Numerical Examples

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Mandelbrot, B.B. The Fractal Geometry of Nature; WH Freeman: New York, NY, USA, 1982.

- [2] Kilbas, A.A.; Marichev, O.I.; Samko, S.G. Fractional Integrals and Derivatives (Theory and Applications); Gordon and Breach: Yverdon, Switzerland, 1983.

- [3] Hilfer, R. Applications of Fractional Calculus in Physics; World Scientific: Singapore, 2000.

- [4] Oppenheim, A.V.; Willsky, A.S.; Nawab, S.H.; Ding, J.J. Signals and Systems; Prentice Hall: Upper Saddle River, NJ, USA, 1997.

- [5] Kilbas, A.A.; Srivastava, H.M.; Trujillo, J.J. Theory and Applications of Fractional Differential Equations; Elsevier: Amsterdam, The Netherlands, 2006.

- [6] Delavari, H.; Mohadeszadeh, M. Robust finite-time synchronization of non-identical fractional-order hyperchaotic systems and its application in secure communication. IEEE/CAA J. Autom. Sin. 2016, 6, 228–235.

- [7] Yousefpour, A.; Jahanshahi, H.; Munoz-Pacheco, J.M.; Bekiros, S.; Wei, Z.C. A fractional-order hyper-chaotic economic system with transient chaos. Chaos Solitons Fractals 2020, 130, 109400.

- [8] Zeng, D.Q.; Wu, K.T.; Zhang, R.M.; Zhong, S.; Shi, K.B. Improved results on sampled-data synchronization of Markovian coupled neural networks with mode delays. Neurocomputing 2018, 275, 2845–2854.

- [9] Zhang, G.L.; Zhang, J.Y.; Li, W.; Ge, C.; Liu, Y.J. Robust synchronization of uncertain delayed neural networks with packet dropout using sampled-data control. Appl. Intell. 2021, 51, 9054–9065.

- [10] Wang, H.; Ni, Y.J.; Wang, J.W.; Tian, J.P.; Ge, C. Sampled-data control for synchronization of Markovian jumping neural networks with packet dropout. Appl. Intell. 2022, 53, 8898–8909.

- [11] Picozzi, S.; West, B.J. Fractional Langevin model of memory in financial markets. Phys. Rev. E 2002, 66, 046118.

- [12] Zhang, W.W.; Cao, J.D.; Chen, D.Y.; Alsaadi, F.E. Synchronization in fractional-order complex-valued delayed neural networks. Entropy 2018, 20, 54.

- [13] Thuan, M.V.; Binh, T.N.; Huong, D.C. Finite-time guaranteed cost control of Caputo fractional-order neural networks. Asian J. Control 2020, 22, 696–705.

- [14] Xu, S.; Liu, H.; Han, Z.M. The passivity of uncertain fractional-order neural networks with time-varying delays. Fractal Fract. 2022, 6, 375.

- [15] Wang, C.X.; Zhou, X.D.; Shi, X.Z.; Jin, Y.T. Delay-dependent and order-dependent LMI-based sliding mode H∞ control for variable fractional order uncertain differential systems with time-varying delay and external disturbance. J. Frankl. Inst. 2022, 359, 7893–7912.

- [16] Chen, Y.; Wang, B.; Chen, Y.; Wang, Y. Sliding Mode Control for a Class of Nonlinear Fractional Order Systems with a Fractional Fixed-Time Reaching Law. Fractal Fract. 2022, 6, 678.

- [17] Jia, T.; Chen, X.; He, L.; Zhao, F.; Qiu, J. Finite-Time Synchronization of Uncertain Fractional-Order Delayed Memristive Neural Networks via Adaptive Sliding Mode Control and Its Application. Fractal Fract. 2022, 6, 502.

- [18] Stamova, I.; Henderson, J. Practical stability analysis of fractional-order impulsive control systems. ISA Trans. 2016, 64, 77–85.

- [19] Guo, L.; Ali Shah, K.; Bai, S.; Zada, A. On the Analysis of a Neutral Fractional Differential System with Impulses and Delays. Fractal Fract. 2022, 6, 673.

- [20] Chen, L.P.; Wu, R.C.; Cheng, Y.; Chen, Y.Q. Delay-dependent and order-dependent stability and stabilization of fractional-order linear systems with time-varying delay. IEEE Trans. Circuits Syst. II Express Briefs 2020, 67, 1064–1068.

- [21] Ma, Z.; Sun, K. Nonlinear Filter-Based Adaptive Output-Feedback Control for Uncertain Fractional-Order Nonlinear Systems with Unknown External Disturbance. Fractal Fract. 2023, 7, 694.

- [22] Zhang, Q.; Wang, H.; Wang, L. Order-dependent sampling control for state estimation of uncertain fractional-order neural networks system. Optim. Control Appl. Methods, 2023; under review.

- [23] Cao, K.; Gu, J.; Mao, J.; Liu, C. Sampled-Data Stabilization of Fractional Linear System under Arbitrary Sampling Periods. Fractal Fract. 2022, 6, 416.

- [24] Cao, K.C.; Qian, C.J.; Gu, J.P. Sampled-data control of a class of uncertain nonlinear systems based on direct method. Syst. Control Lett. 2021, 155, 105000.

- [25] Li, S.; Ahn, C.K.; Guo, J.; Xiang, Z.R. Neural network-based sampled-data control for switched uncertain nonlinear systems. IEEE Trans. Syst. Man Cybern. Syst. 2019, 51, 5437–5445.

- [26] Zhang, Q.; Ge, C.; Zhang, R.N.; Yang, L. Order-dependent sampling control of uncertain fractional-order neural networks system. Authorea, 2022; Preprints.

- [27] Agarwal, R.P.; Hristova, S.; O’Regan, D. Lyapunov Functions and Stability Properties of Fractional Cohen–Grossberg Neural Networks Models with Delays. Fractal Fract. 2023, 7, 732.

- [28] Chen, L.; Gong, M.; Zhao, Y.; Liu, X. Finite-Time Synchronization for Stochastic Fractional-Order Memristive BAM Neural Networks with Multiple Delays. Fractal Fract. 2023, 7, 678.

- [29] Zhao, K. Stability of a Nonlinear Langevin System of ML-Type Fractional Derivative Affected by Time-Varying Delays and Differential Feedback Control. Fractal Fract. 2022, 6, 725.

- [30] Duarte-Mermoud, M.A.; Aguila-Camacho, N.; Gallegos, J.A.; Castro-Linares, R. Using general quadratic Lyapunov functions to prove Lyapunov uniform stability for fractional order systems. Commun. Nonlinear Sci. Numer. Simul. 2015, 22, 650–659.

- [31] Jia, J.; Huang, X.; Li, Y.X.; Cao, J.D.; Alsaedi, A. Global stabilization of fractional-order memristor-based neural networks with time delay. IEEE Trans. Neural Netw. Learn. Syst. 2019, 31, 997–1009.

- [32] Xiong, J.L.; Lam, J. Stabilization of networked control systems with a logic ZOH. IEEE Trans. Autom. Control 2009, 54, 358–363.

- [33] Hu, T.T.; He, Z.; Zhang, X.J.; Zhong, S.M.; Yao, X.Q. New fractional-order integral inequalities: Application to fractional-order systems with time-varying delay. J. Frankl. Inst. 2021, 358, 3847–3867.

- [34] Jin, X.C.; Lu, J.G. Order-dependent and delay-dependent conditions for stability and stabilization of fractional-order time-varying delay systems using small gain theorem. Asian J. Control 2023, 25, 1365–1379.

- [35] Jin, X.C.; Lu, J.G. Order-dependent LMI-based stability and stabilization conditions for fractional-order time-delay systems using small gain theorem. Int. J. Robust Nonlinear Control 2022, 32, 6484–6506.

- [36] Sene, N.; Ndiaye, A. On Class of Fractional-Order Chaotic or Hyperchaotic Systems in the Context of the Caputo Fractional-Order Derivative. J. Math. 2020, 2020, 8815377.

- [37] Li, Q.P.; Liu, S.Y.; Chen, Y.G. Combination event-triggered adaptive networked synchronization communication for nonlinear uncertain fractional-order chaotic systems. Appl. Math. Comput. 2018, 333, 521–535.

- [38] Yu, N.X.; Zhu, W. Event-triggered impulsive chaotic synchronization of fractional-order differential systems. Appl. Math. Comput. 2021, 388, 125554.

| Method | 0.9 | 0.92 | 0.95 | 0.98 |
|---|---|---|---|---|
| [26] | 0.13 | 0.14 | 0.15 | 0.17 |
| Corollary 2 | 0.410 | 0.415 | 0.423 | 0.431 |

| Method | 0.9 | 0.92 | 0.95 | 0.98 |
|---|---|---|---|---|
| [22] | 0.12 | 0.13 | 0.15 | 0.16 |
| Corollary 2 | 0.426 | 0.432 | 0.440 | 0.448 |
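The table captions did not survive extraction. Assuming the column headers are fractional orders and each entry is the admissible upper bound obtained by the listed method (consistent with the claim of a longer sampling period), the gain of Corollary 2 over [26] and [22] can be read off directly: it ranges from about 2.5 to 3.6 times. A small sketch that recomputes these ratios from the transcribed values; the variable and function names are illustrative only.

```python
# Values transcribed from the two tables above; the interpretation of the
# column headers as fractional orders is an assumption.
orders = [0.9, 0.92, 0.95, 0.98]
table_1 = {"[26]": [0.13, 0.14, 0.15, 0.17],
           "Corollary 2": [0.410, 0.415, 0.423, 0.431]}
table_2 = {"[22]": [0.12, 0.13, 0.15, 0.16],
           "Corollary 2": [0.426, 0.432, 0.440, 0.448]}


def improvement_ratios(baseline, proposed):
    """Element-wise ratio proposed / baseline, one entry per column."""
    return [p / b for b, p in zip(baseline, proposed)]


ratios_1 = improvement_ratios(table_1["[26]"], table_1["Corollary 2"])
ratios_2 = improvement_ratios(table_2["[22]"], table_2["Corollary 2"])
for order, r1, r2 in zip(orders, ratios_1, ratios_2):
    print(f"order {order}: {r1:.2f}x vs [26], {r2:.2f}x vs [22]")
```

The smallest ratio (0.431/0.17, about 2.54) occurs at the largest order in the first table, and the largest (0.426/0.12, 3.55) at the smallest order in the second.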

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dai, J.; Xiong, L.; Zhang, H.; Rui, W. Improved Results on Delay-Dependent and Order-Dependent Criteria of Fractional-Order Neural Networks with Time Delay Based on Sampled-Data Control. Fractal Fract. 2023, 7, 876. https://doi.org/10.3390/fractalfract7120876


Dai, Junzhou, Lianglin Xiong, Haiyang Zhang, and Weiguo Rui. 2023. "Improved Results on Delay-Dependent and Order-Dependent Criteria of Fractional-Order Neural Networks with Time Delay Based on Sampled-Data Control" Fractal and Fractional 7, no. 12: 876. https://doi.org/10.3390/fractalfract7120876