A Theory of Semantic Information †

Abstract

1. Introduction

2. A Brief Review of the Classical Theory of Semantic Information

- ~ (Not): “~Pa” means “a is not P”;

- ∨ (Or): “Pa∨Qb” means “a is P or b is Q”;

- ∧ (And): “Pa∧Qb” means “a is P and b is Q”;

- → (If … then): “Pa→Qb” means “if a is P, then b is Q” (implication);

- ≡ (If and only if): “Pa≡Qb” means “a is P if and only if b is Q” (equivalence).

(1) For each state description Z, 0 ≤ m(Z) ≤ 1;

(2) Summed over all kn state descriptions, ∑m(Z) = 1;

(3) For any sentence i that is not logically false, m(i) is the sum of m(Z) over all Z in R(i), the range of i (the set of state descriptions in which i holds).
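Conditions (1) to (3) can be checked on a toy language. The sketch below is illustrative only and rests on assumptions not fixed by the text: two individuals (a, b), two predicates (P, Q), each of the four atomic sentences independently true or false (16 state descriptions), and equal weight m(Z) for every state description. It also computes the content measure cont(i) = 1 − m(i) and the information measure inf(i) = −log2 m(i) that Carnap and Bar-Hillel [5] derive from m.

```python
from itertools import product
from math import log2

# Toy language (assumption): n = 2 individuals, k = 2 predicates.
INDIVIDUALS = ["a", "b"]
PREDICATES = ["P", "Q"]
ATOMS = [p + x for p in PREDICATES for x in INDIVIDUALS]  # Pa, Pb, Qa, Qb

# A state description Z fixes the truth value of every atomic sentence.
STATES = [dict(zip(ATOMS, values))
          for values in product([True, False], repeat=len(ATOMS))]


def m_state(z):
    """m(Z): equal weight for every state description (an assumed choice)."""
    return 1 / len(STATES)


def m(sentence):
    """m(i): sum of m(Z) over R(i), the state descriptions in which i holds."""
    return sum(m_state(z) for z in STATES if sentence(z))


def cont(sentence):
    """Content measure cont(i) = 1 - m(i)."""
    return 1 - m(sentence)


def inf(sentence):
    """Information measure inf(i) = -log2 m(i); undefined when m(i) = 0."""
    return -log2(m(sentence))


def pa_and_qb(z):
    return z["Pa"] and z["Qb"]  # Pa∧Qb


def pa_or_qb(z):
    return z["Pa"] or z["Qb"]  # Pa∨Qb


print(m(pa_and_qb), cont(pa_and_qb), inf(pa_and_qb))  # 0.25 0.75 2.0
```

A conjunction holds in fewer state descriptions than a disjunction of the same atoms, so it receives a smaller m and correspondingly larger cont and inf values.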

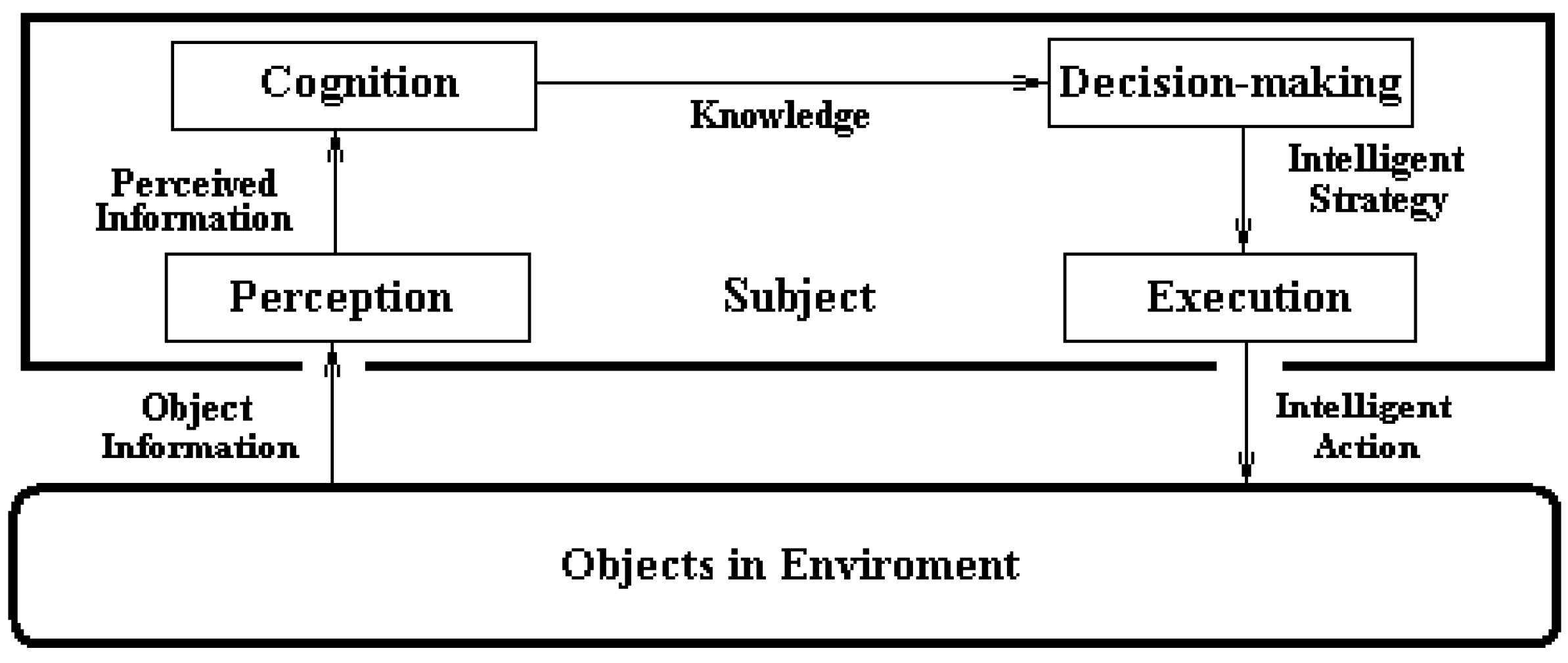

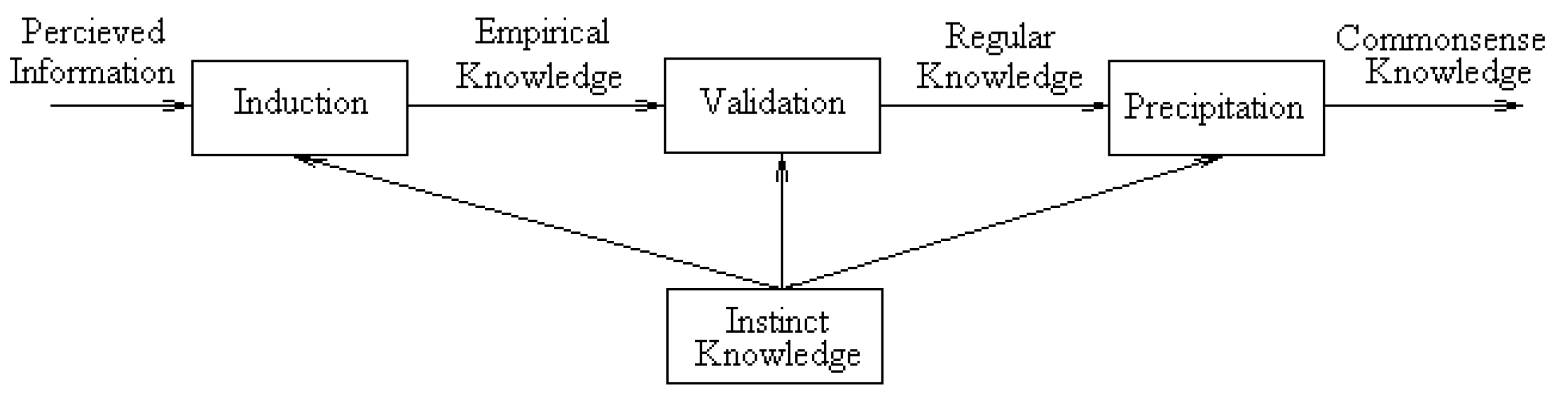

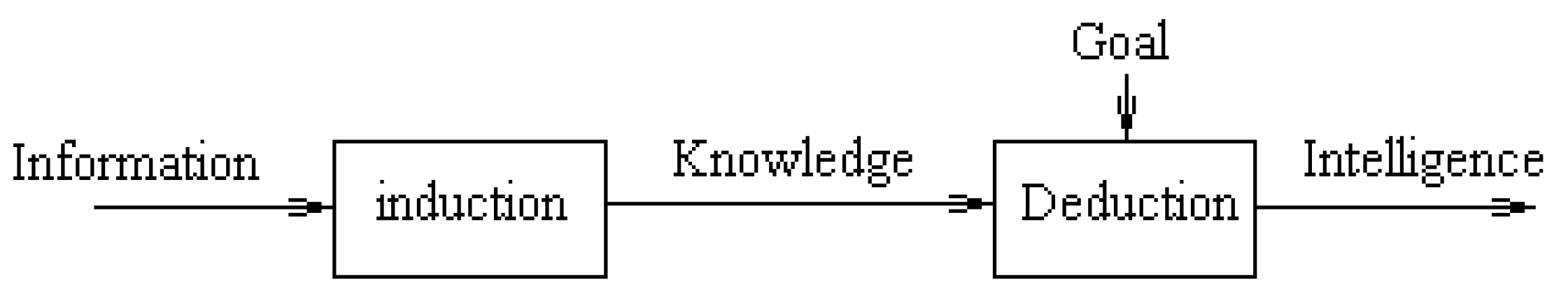

3. Fundamental Concepts Related to Semantic Information

3.1. Classic Concepts on Semantics and Information

- N. Wiener stated [15] that information is information, not matter or energy.

- C. Shannon considered [1] that information is what can be used to remove uncertainty.

- G. Bateson [16]: information is a difference that makes a difference.

- L. von Bertalanffy wrote [17] that information is a measure of a system’s complexity.

3.2. Concepts Related to Semantic Information in View of Ecosystem

(1) The object/ontological information defined in Definition 1, the “states-pattern presented by the object”, is neither matter nor energy and thus agrees with Wiener’s statement. Definition 1, however, is formulated more precisely than that statement.

(2) The perceived/epistemological information defined in Definition 2 is exactly what can be used to remove uncertainty about the “states-pattern”. The information treated in Shannon’s theory is statistical syntactic information, which is only one component of perceived information. Definition 2 is therefore more reasonable and more complete than Shannon’s understanding.

(3) As for Bateson’s statement, the most fundamental “difference” among objects is the “states-pattern” each object presents. Hence Definition 1 and Bateson’s statement are equivalent, although Definition 1 is stated more rigorously.

(4) Bertalanffy regarded information as the “complexity of a system”; in fact, the complexity of a system is just the complexity of the “states-pattern” it presents.

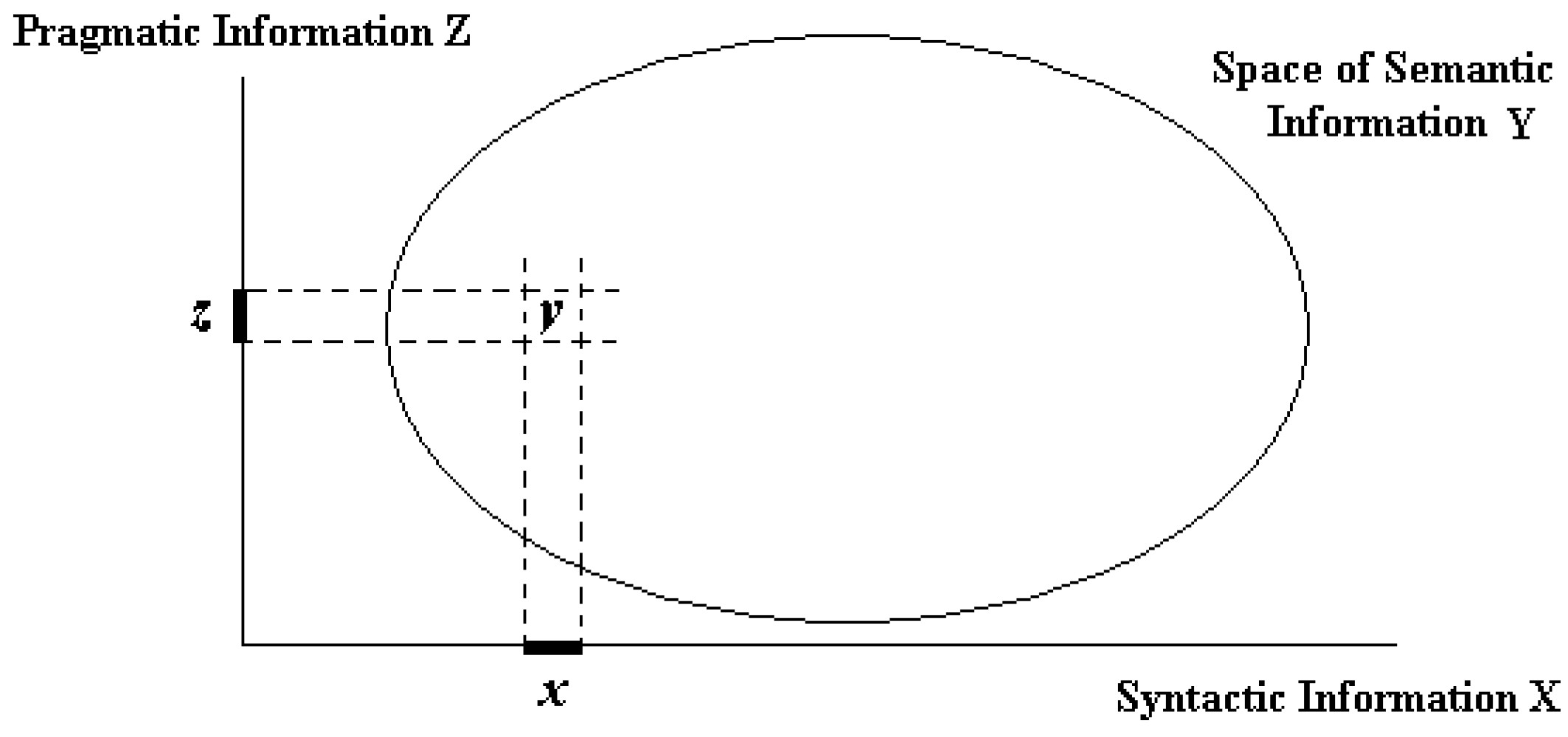

(1) Semantic information is the result of mapping the joint of syntactic and pragmatic information into the space of semantic information and then naming it.

(2) Semantic information is the legitimate representative of perceived/comprehensive information.

(3) Semantic information plays a very important role in research on knowledge and intelligence.

4. Nucleus of Semantic Information Theory

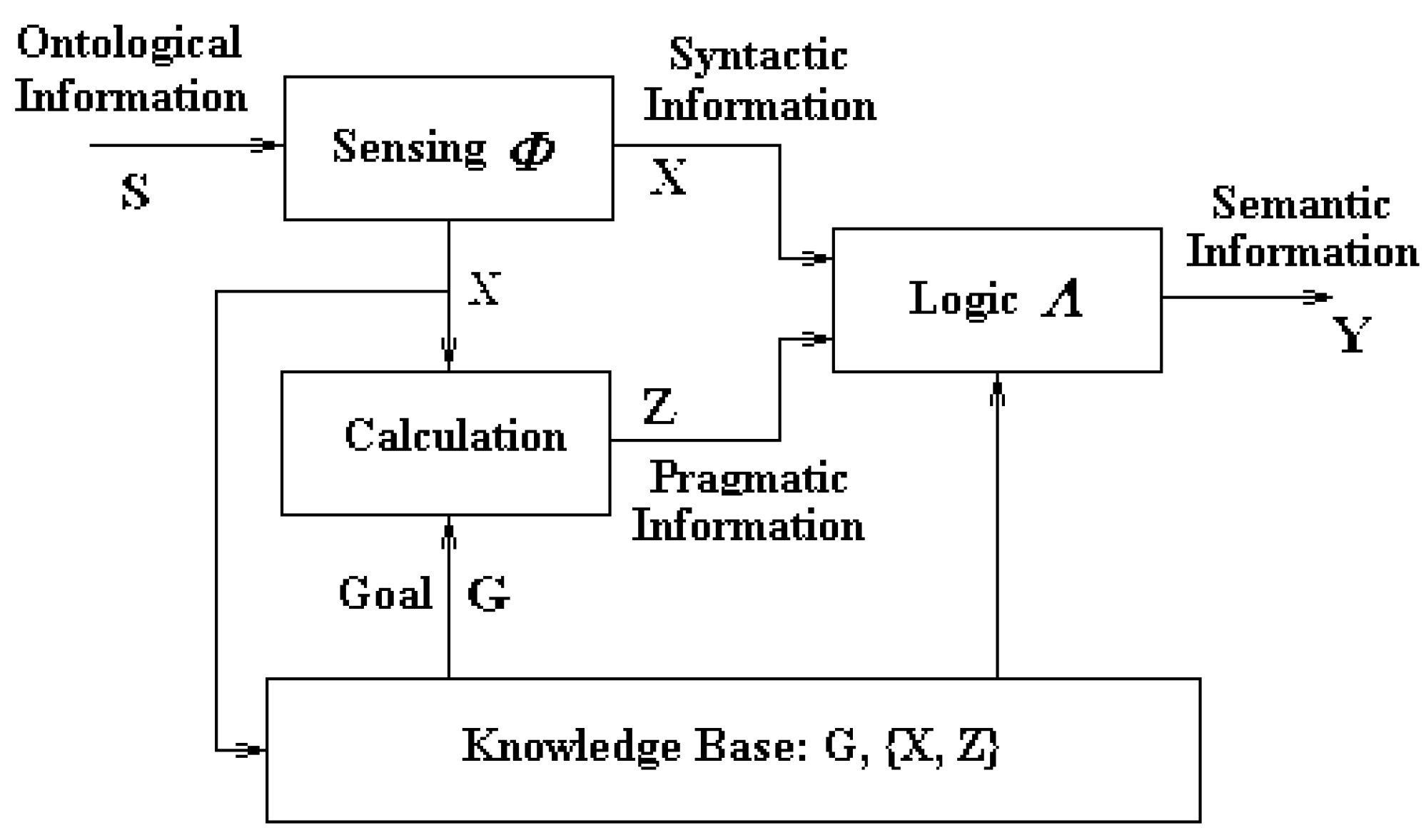

4.1. Semantic Information Genesis

Step 1: Generating X from S via the Sensing Function

Mathematically, this is a mapping function from S to X. In humans this function is realized by the sensing organs; in machines it can be implemented by sensor systems.

Step 2-1: Generating Z from X via the Retrieval Operation

Use X, the syntactic information just produced in step 1, as the key to search the set {Xn, Zn} in the knowledge base for an Xn that matches X. If such an Xn is found, the Zn paired with it in {Xn, Zn} is accepted as the pragmatic information Z.

In humans this operation is realized by the recall/memory function; in machines it can be implemented by an information retrieval system.
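The retrieval in step 2-1 can be sketched as a keyed lookup. This is a minimal sketch under stated assumptions: the knowledge base `KNOWLEDGE_BASE` and the function `retrieve_pragmatic` are hypothetical names, the stored pairs are invented for illustration, and "matching" is simplified to exact key equality, which the text does not specify.

```python
from typing import Optional

# Hypothetical knowledge base {Xn, Zn}: each previously met syntactic
# pattern Xn is stored with the pragmatic information Zn it carried.
KNOWLEDGE_BASE: dict[str, str] = {
    "apple": "nutritious",
    "smartphone": "information processor in pocket",
}


def retrieve_pragmatic(x: str, kb: dict[str, str]) -> Optional[str]:
    """Step 2-1: use X as the search key and return the Zn paired with
    the matching Xn, or None when no stored Xn matches X."""
    # Exact matching is a simplifying assumption; a real system might
    # use approximate or similarity-based matching instead.
    return kb.get(x)


print(retrieve_pragmatic("apple", KNOWLEDGE_BASE))   # nutritious
print(retrieve_pragmatic("durian", KNOWLEDGE_BASE))  # None
```

A `None` result corresponds to the "new object never seen before" case, which step 2-2 handles by calculation instead of retrieval.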

Step 2-2: Generating Z from X via the Calculation Function

If no Xn matching X can be found in {Xn, Zn}, the system is facing a new object it has never seen before. In this case, the pragmatic information can be obtained by calculating the correlation between X and G, the system’s goal:

$$Z \sim \mathrm{Cor}(X, G)$$

In humans this function is performed by utility evaluation; in machines it can be implemented by calculation.
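The text leaves Cor(X, G) unspecified. One possible reading, sketched below, assumes that X and G are encoded as numeric feature vectors of equal length and takes Cor to be the Pearson correlation coefficient; both the encoding and the choice of Pearson correlation are our assumptions, and `estimate_pragmatic` is a hypothetical helper name.

```python
from math import sqrt


def correlation(x: list[float], g: list[float]) -> float:
    """Pearson correlation coefficient between feature vector X and goal vector G."""
    if len(x) != len(g) or len(x) < 2:
        raise ValueError("X and G must have the same length (at least 2)")
    mx = sum(x) / len(x)
    mg = sum(g) / len(g)
    cov = sum((a - mx) * (b - mg) for a, b in zip(x, g))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sg = sqrt(sum((b - mg) ** 2 for b in g))
    if sx == 0 or sg == 0:
        raise ValueError("correlation is undefined for constant vectors")
    return cov / (sx * sg)


def estimate_pragmatic(x: list[float], g: list[float]) -> float:
    """Step 2-2 (sketch): Z ~ Cor(X, G). A value near +1 suggests X serves
    the goal G; a value near -1 suggests it works against G."""
    return correlation(x, g)


x_new = [0.8, 0.9, 0.2]  # hypothetical feature scores of a newly seen object
goal = [0.7, 1.0, 0.1]   # hypothetical goal profile of the system
print(round(estimate_pragmatic(x_new, goal), 3))
```

Any other utility measure relating X to G could replace Pearson correlation here; it was chosen only because it is a standard, bounded way to express how well X aligns with G.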

Step 3: Generating Y from X and Z via Mapping and Naming

Map (X, Z) into the space of semantic information and name the result:

$$Y = \lambda(X, Z)$$

In humans this function is performed by abstraction and naming; in machines it can be implemented by mapping and naming. For example:

- If {x = apple, z = nutritious}, then y = fruit.

- If {x = apple, z = useful for information processing}, then y = iPad computer.

- If {x = apple, z = information processor in pocket}, then y = iPhone.
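Step 3 leaves λ abstract. The sketch below assumes, as a hypothetical simplification, that λ can be approximated by a finite lookup table learned from experience, and encodes the apple examples above. It shows the point of the examples: the same syntactic input x yields different semantic names y depending on the pragmatic information z. `NAMING_TABLE` and `semantic` are illustrative names, not part of the theory.

```python
# Hypothetical naming table: λ approximated as a lookup from (x, z) to a name.
NAMING_TABLE: dict[tuple[str, str], str] = {
    ("apple", "nutritious"): "fruit",
    ("apple", "useful for information processing"): "iPad computer",
    ("apple", "information processor in pocket"): "iPhone",
}


def semantic(x: str, z: str) -> str:
    """Y = λ(X, Z): map the joint (X, Z) into the semantic space and name it."""
    try:
        return NAMING_TABLE[(x, z)]
    except KeyError:
        # A real system would need to abstract and coin a new name here.
        raise KeyError(f"no name learned yet for (x={x!r}, z={z!r})") from None


print(semantic("apple", "nutritious"))                       # fruit
print(semantic("apple", "information processor in pocket"))  # iPhone
```

A table cannot name unseen (x, z) pairs, so it only illustrates the input/output behaviour of λ, not the abstraction that produces new names.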

4.2. The Representation and Measurement of Semantic Information

4.2.1. Representation of Semantic Information

4.2.2. Measurement of Semantic Information

5. Concluding Remarks

Acknowledgments

Conflicts of Interest

Appendix A. Note on the Measurement of Semantic Information

References

- Shannon, C.E. A Mathematical Theory of Communication. Bell Syst. Tech. J. 1948, 27, 379–423, 623–656.

- Kolmogorov, A.N. Three Approaches to the Quantitative Definition of Information. Int. J. Comput. Math. 1968, 2, 157–168.

- Kolmogorov, A.N. Logical Basis for Information Theory and Probability Theory. IEEE Trans. Inf. Theory 1968, 14, 662–664.

- Chaitin, G.J. Algorithmic Information Theory; Cambridge University Press: Cambridge, UK, 1987.

- Carnap, R.; Bar-Hillel, Y. Semantic Information. Br. J. Philos. Sci. 1953, 4, 147–157.

- Brillouin, L. Science and Information Theory; Academic Press: New York, NY, USA, 1956.

- Bar-Hillel, Y. Language and Information; Addison-Wesley: Reading, MA, USA, 1964.

- Millikan, R.G. Varieties of Meaning; MIT Press: Cambridge, MA, USA, 2002.

- Stonier, J. Informational Content: A Problem of Definition. J. Philos. 1966, 63, 201–211.

- Gottinger, H.W. Qualitative Information and Comparative Informativeness. Kybernetik 1973, 13, 81.

- Floridi, L. The Philosophy of Information; Oxford University Press: Oxford, UK, 2011.

- De Saussure, F. Course in General Linguistics; Ryan, M., Rivkin, J., Eds.; Blackwell Publishers: Oxford, UK, 2001.

- Peirce, C.S. Collected Papers, Volume V: Pragmatism and Pragmaticism; Harvard University Press: Cambridge, MA, USA, 1934.

- Morris, C.W. Writings on the General Theory of Signs; Mouton: The Hague, The Netherlands, 1971.

- Wiener, N. Cybernetics; John Wiley and Sons: New York, NY, USA, 1948.

- Bateson, G. Steps to an Ecology of Mind; Jason Aronson Inc.: Lanham, MD, USA, 1972.

- Bertalanffy, L.V. General System Theory; George Braziller Inc.: New York, NY, USA, 1968.

- Zhong, Y.X. Principles of Information Science; Beijing University of Posts and Telecommunications Press: Beijing, China, 1988.

- Zhong, Y.X. Information Conversion: The Integrative Theory of Information, Knowledge, and Intelligence. Sci. Bull. 2013, 85, 1300–1306.

- Zhong, Y.X. Principles of Advanced Artificial Intelligence; Science Press: Beijing, China, 2014.

- Zadeh, L.A. Fuzzy Sets. Inf. Control 1965, 8, 338–353.


© 2017 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhong, Y. A Theory of Semantic Information. Proceedings 2017, 1, 129. https://doi.org/10.3390/IS4SI-2017-04000