Classification of Maize in Complex Smallholder Farming Systems Using UAV Imagery

Abstract

1. Introduction

2. Materials and Methods

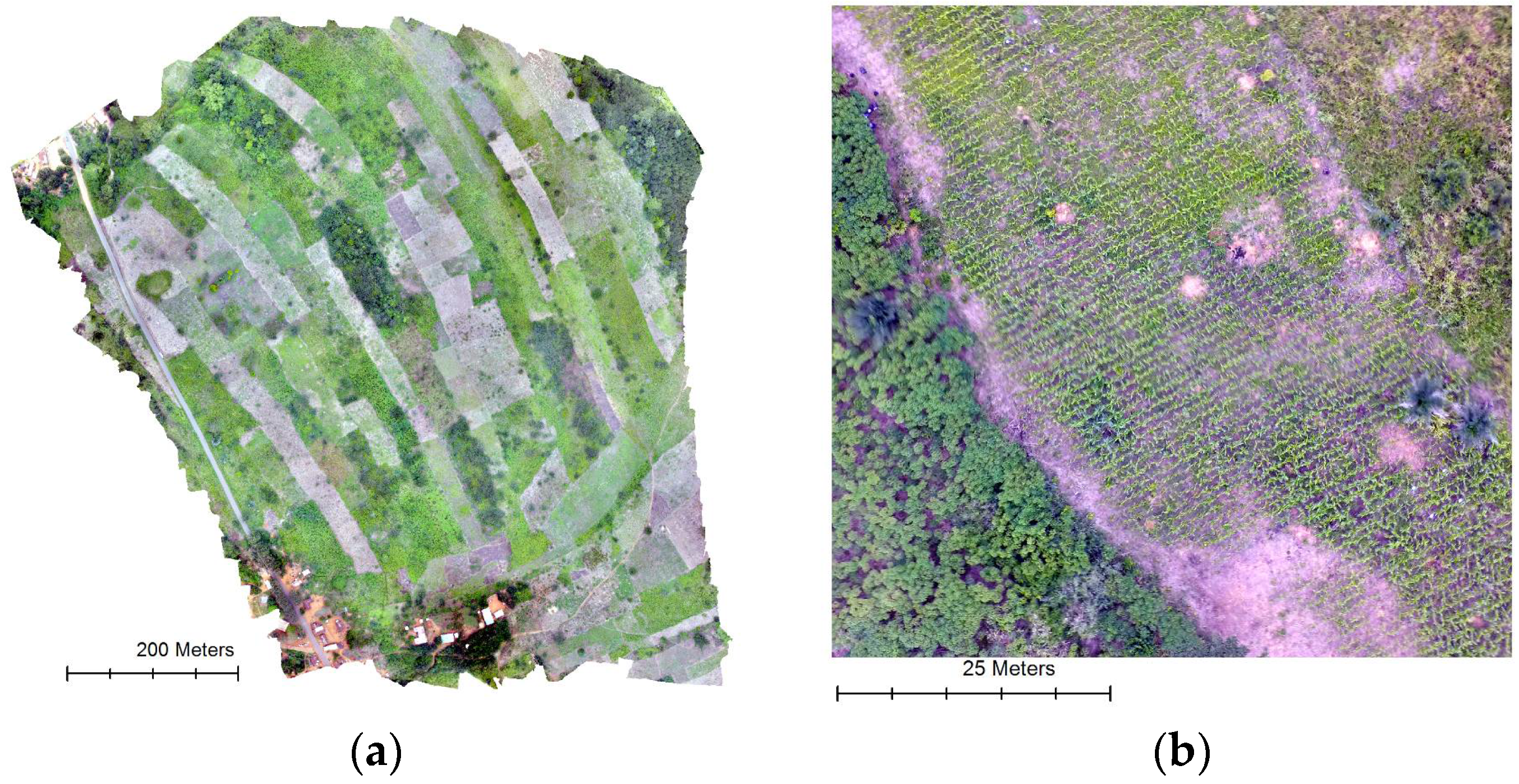

2.1. Study Area

2.2. Image Acquisition

2.3. Image Analysis

3. Results

3.1. Classification Accuracy

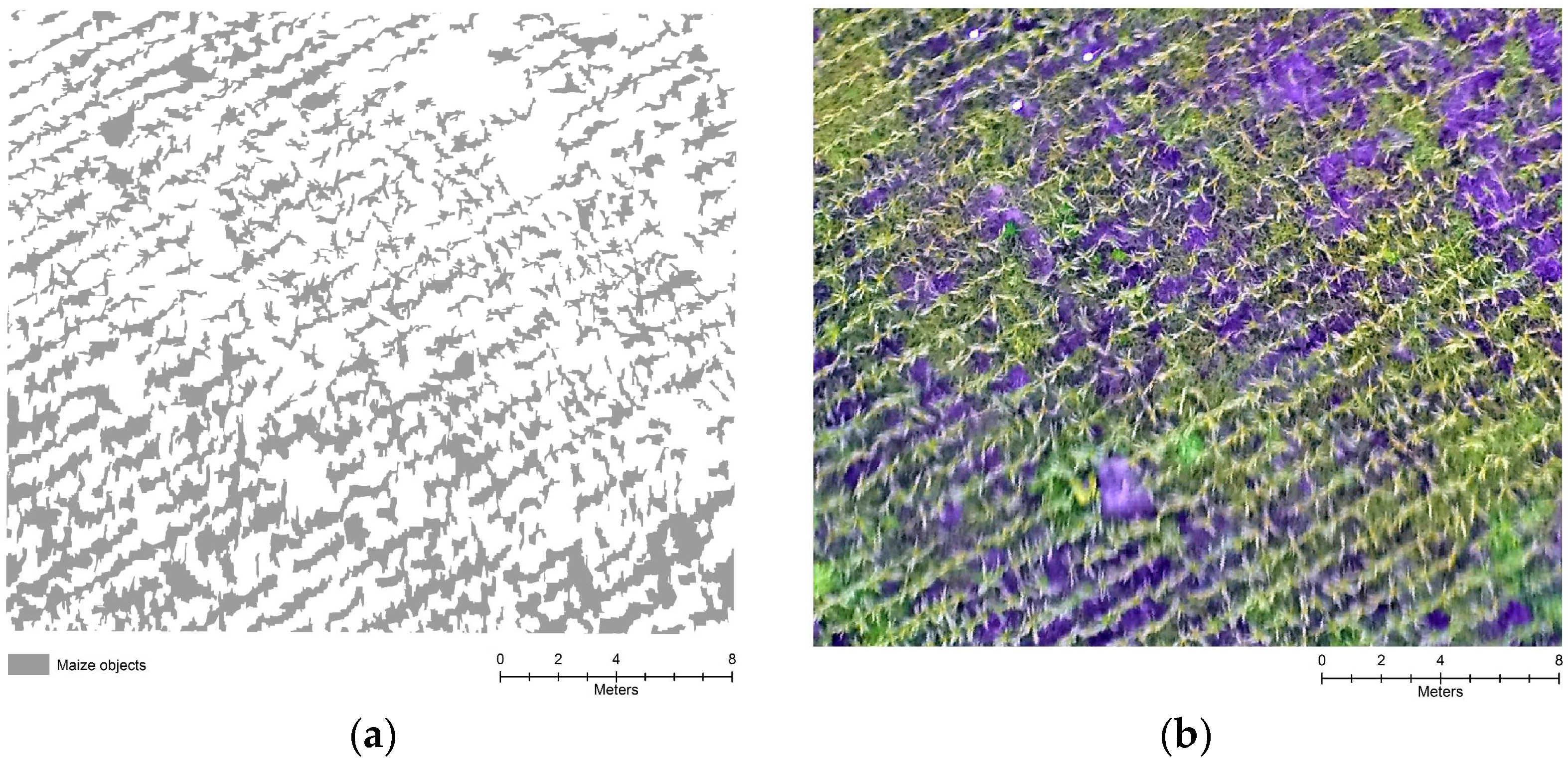

3.2. Maize Objects

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hall, O.; Dahlin, S.; Marstorp, H.; Archila Bustos, M.F.; Öborn, I.; Jirström, M. Classification of Maize in Complex Smallholder Farming Systems Using UAV Imagery. Drones 2018, 2, 22. https://doi.org/10.3390/drones2030022
