Greenness Indices from a Low-Cost UAV Imagery as Tools for Monitoring Post-Fire Forest Recovery

Abstract

1. Introduction

2. Materials and Methods

2.1. UAV Deployment and Field Sampling

2.2. Image Analysis

- The green chromatic coordinate (GCC) has also been used to detect vegetation and analyze plant phenology and dynamics [31,32]. Both ExGI and GCC correlate with measurements made with a SpectroSense narrow-band spectrometer [33], but GCC is far less sensitive to changes in scene illumination [32]. GCC is simply the chromatic coordinate of the green channel, expressed as a proportion of the sum of the three coordinates:

  $$GCC = \frac{G}{R + G + B}$$

- The green-red vegetation index (GRVI) was first used by Rouse et al. [34], who concluded that it could be used for several measures of crops and rangelands. Their conclusions have since been confirmed on several occasions [35,36,37,38]. GRVI responds to leaf senescence in deciduous forests in parallel with NDVI [37] and hence could be useful for discriminating senescent leaves from green needles. This index is given by:

  $$GRVI = \frac{G - R}{G + R}$$

- Lastly, the visible atmospherically resistant index (VARI) was proposed by Gitelson et al. [39]. It is an improvement of GRVI that reduces atmospheric effects. Although such effects are not expected to be severe for low-flying UAV platforms, they might be locally important at Mediterranean sites with large amounts of bare soil. In addition, VARI has been reported to correlate better than GRVI with vegetation fraction [39]. It is defined as:

  $$VARI = \frac{G - R}{G + R - B}$$
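The indices described above can be computed per pixel from the red (R), green (G) and blue (B) bands. The sketch below is illustrative only, not the authors' processing code. It assumes that R, G and B are non-negative digital numbers of a single orthomosaic pixel, and the function names are hypothetical. Because ExGI is not defined in this section, the chromatic-coordinate form 2g - r - b (the usual excess-green formulation attributed to Woebbecke et al. [31]) is also an assumption.

```python
"""Per-pixel greenness indices from RGB band values (illustrative sketch).

Assumes r, g, b are non-negative digital numbers of one pixel. The
formulas are the standard published definitions; the ExGI form is an
assumption because this section does not define it.
"""


def gcc(r: float, g: float, b: float) -> float:
    """Green chromatic coordinate: G / (R + G + B)."""
    total = r + g + b
    return g / total if total > 0 else 0.0  # black pixel: no colour information


def grvi(r: float, g: float, b: float) -> float:
    """Green-red vegetation index: (G - R) / (G + R). Blue is not used."""
    denom = g + r
    return (g - r) / denom if denom > 0 else 0.0


def vari(r: float, g: float, b: float) -> float:
    """Visible atmospherically resistant index: (G - R) / (G + R - B)."""
    denom = g + r - b
    # The denominator can be zero or negative for bluish pixels; only the
    # exact-zero case is guarded here, so extreme values remain possible.
    return (g - r) / denom if denom != 0 else 0.0


def exgi(r: float, g: float, b: float) -> float:
    """Excess green on chromatic coordinates, 2g - r - b (assumed form)."""
    total = r + g + b
    if total <= 0:
        return 0.0
    rn, gn, bn = r / total, g / total, b / total
    return 2 * gn - rn - bn
```

For a pixel with (R, G, B) = (60, 120, 40), `gcc` gives 120/220 (about 0.545), `grvi` gives 60/180 (about 0.333) and `vari` gives 60/140 (about 0.429). Because the VARI denominator G + R - B can approach zero, that index can take very large values, so any clipping is left to the caller.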

- Applying the greenness threshold to the index layers to remove all non-green pixels, which were set to 0. For ExGI, GRVI and VARI, we defined green pixels as those with values greater than 0 and reclassified values less than 0 as 0. For GCC, the threshold was 1/3, so all values equal to or less than 1/3 were set to 0.

- Calculating the zonal statistics of the greenness index and the filtered canopy height model for each 5 × 5 m (25 m²) cell within the study area. Zonal statistics produce six different measures of the index value for each cell: count, mean, standard deviation, median, maximum and minimum.

- Calculating the pooled DBH of all measured pines within the cells of this same grid.
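The thresholding and zonal-statistics steps can be sketched in plain Python on a toy raster. This is a hedged illustration rather than the study's GIS workflow: the helper names are hypothetical, the 5 m grid is assumed to share the raster origin, `count` is taken as the number of non-zero (green) pixels, and the population standard deviation is an assumed choice.

```python
import statistics
from collections import defaultdict

# Thresholds stated in the text: ExGI, GRVI and VARI keep values > 0,
# GCC keeps values > 1/3; every other pixel is set to 0.
GREENNESS_THRESHOLDS = {"ExGI": 0.0, "GRVI": 0.0, "VARI": 0.0, "GCC": 1 / 3}


def apply_greenness_threshold(layer, index_name):
    """Return a copy of a 2-D index layer with non-green pixels set to 0."""
    t = GREENNESS_THRESHOLDS[index_name]
    return [[v if v > t else 0.0 for v in row] for row in layer]


def zonal_stats(layer, pixel_size_m, cell_size_m=5.0):
    """Summarise a 2-D layer over square grid cells (hypothetical helper).

    Pixel (i, j) goes to cell (i * px // cell, j * px // cell), i.e. the
    grid is assumed to share the raster origin. Zeros left by the
    threshold step are kept, so they lower the mean and median.
    """
    cells = defaultdict(list)
    for i, row in enumerate(layer):
        for j, v in enumerate(row):
            key = (int(i * pixel_size_m // cell_size_m),
                   int(j * pixel_size_m // cell_size_m))
            cells[key].append(v)

    stats = {}
    for key, vals in cells.items():
        stats[key] = {
            "count": sum(1 for v in vals if v != 0),  # green (non-zero) pixels
            "mean": statistics.fmean(vals),
            "std": statistics.pstdev(vals),  # population SD (an assumption)
            "median": statistics.median(vals),
            "max": max(vals),
            "min": min(vals),
        }
    return stats


# Toy 4 x 4 GCC layer with 2.5 m pixels, i.e. four 5 x 5 m cells.
example_gcc = [
    [0.30, 0.40, 0.50, 0.20],
    [0.35, 0.45, 0.10, 0.60],
    [0.33, 0.34, 0.90, 0.95],
    [0.20, 0.25, 0.80, 0.70],
]
green = apply_greenness_threshold(example_gcc, "GCC")
cell_stats = zonal_stats(green, pixel_size_m=2.5)
```

In the toy layer the 0.33 pixel falls below 1/3 and is zeroed, so the lower-left cell keeps a single green pixel. The thresholds are also related: for any pixel with R + G + B > 0, 2G - R - B > 0 holds exactly when G / (R + G + B) > 1/3, so if ExGI is computed as 2G - R - B (or on chromatic coordinates), the ExGI > 0 and GCC > 1/3 rules keep the same pixels.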

2.3. Statistical Analysis

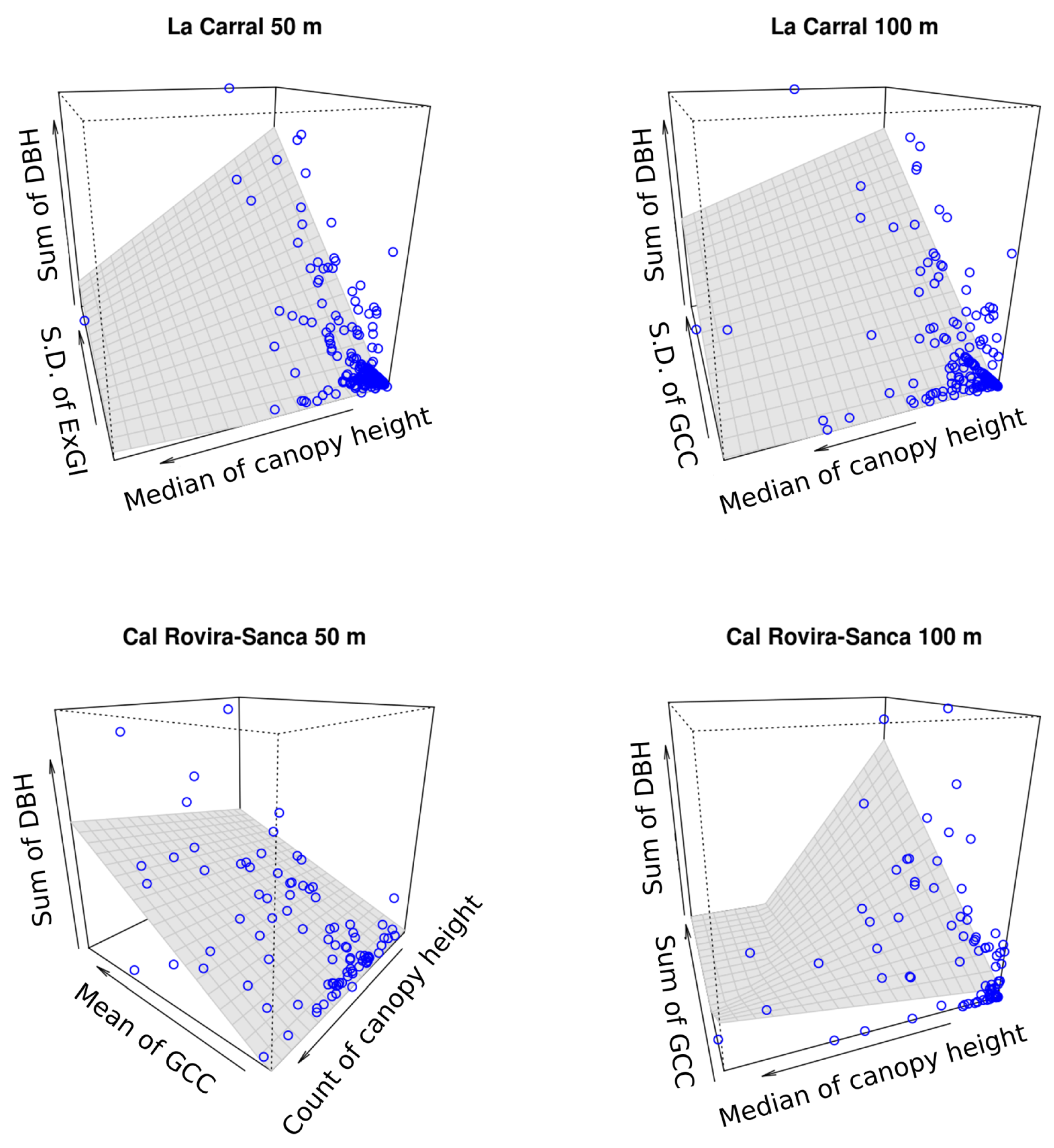

3. Results

4. Discussion

5. Conclusion

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Hardin, P.J.; Hardin, T.J. Small-scale remotely piloted vehicles in environmental research. Geogr. Compass 2010, 4, 1297–1311.

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

- Matese, A.; Toscano, P.; Di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of UAV, aircraft and satellite remote sensing platforms for precision viticulture. Remote Sens. 2015, 7, 2971–2990.

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR system with application to forest inventory. Remote Sens. 2012, 4, 1519–1543.

- Getzin, S.; Nuske, R.S.; Wiegand, K. Using unmanned aerial vehicles (UAV) to quantify spatial gap patterns in forests. Remote Sens. 2014, 6, 6988–7004.

- Salamí, E.; Barrado, C.; Pastor, E. UAV flight experiments applied to the remote sensing of vegetated areas. Remote Sens. 2014, 6, 11051–11081.

- Díaz-Varela, R.; de la Rosa, R.; León, L.; Zarco-Tejada, P.J. High-resolution airborne UAV imagery to assess olive tree crown parameters using 3D photo reconstruction: Application in breeding trials. Remote Sens. 2015, 7, 4213–4232.

- Puliti, S.; Ørka, H.O.; Gobakken, T.; Næsset, E. Inventory of small forest areas using an unmanned aerial system. Remote Sens. 2015, 7, 9632–9654.

- Guerra-Hernández, J.; González-Ferreiro, E.; Sarmento, A.; Silva, J.; Nunes, A.; Correia, A.C.; Fontes, L.; Tomé, M.; Díaz-Varela, R. Using high resolution UAV imagery to estimate tree variables in Pinus pinea plantation in Portugal. For. Syst. 2016, 25, 2171–9845.

- Karpina, M.; Jarząbek-Rychard, M.; Tymków, P.; Borkowski, A. UAV-based automatic tree growth measurement for biomass estimation. In Proceedings of the XXIII ISPRS Congress, Prague, Czech Republic, 12–19 July 2016; Volume XLI-G8.

- Baena, S.; Moat, J.; Whaley, O.; Boyd, D.S. Identifying species from the air: UAVs and the very high resolution challenge for plant conservation. PLoS ONE 2017, 12, e0188714.

- Thiel, C.; Schmullius, C. Comparison of UAV photograph-based and airborne lidar-based point clouds over forest from a forestry application perspective. Int. J. Remote Sens. 2016, 38, 2411–2426.

- Nebiker, S.; Annen, A.; Scherrer, M.; Oesch, D. A light-weight multispectral sensor for micro UAV-opportunities for very high resolution airborne remote sensing. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, XXXV, 1193–1200.

- Wallace, L.; Lucieer, A.; Malenovský, Z.; Turner, D.; Vopěnka, P. Assessment of forest structure using two UAV techniques: A comparison of airborne laser scanning and structure from motion (SfM) point clouds. Forests 2016, 7, 62.

- Giannetti, F.; Chirici, G.; Gobakken, T.; Næsset, E.; Travaglini, D.; Puliti, S. A new approach with DTM-independent metrics for forest growing stock prediction using UAV photogrammetric data. Remote Sens. Environ. 2018, 213, 195–205.

- Puliti, S.; Talbot, B.; Astrup, R. Tree-stump detection, segmentation, classification, and measurement using unmanned aerial vehicle (UAV) imagery. Forests 2018, 9, 102.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Chehata, N.; Orny, C.; Boukir, S.; Guyon, D. Object-based forest change detection using high resolution satellite images. In Proceedings of the Photogrammetric Image Analysis, Munich, Germany, 5–7 October 2011; pp. 49–54.

- Torres-Sánchez, J.; López-Granados, F.; Serrano, N.; Arquero, O.; Peña, J.M. High-throughput 3-D monitoring of agricultural-tree plantations with unmanned aerial vehicle (UAV) technology. PLoS ONE 2015, 10, e0130479.

- Padró, J.C.; Muñoz, F.J.; Planas, J.; Pons, X. Comparison of four UAV georeferencing methods for environmental monitoring purposes focusing on the combined use with airborne and satellite remote sensing platforms. Int. J. Appl. Earth Obs. Geoinf. 2019, 79, 130–140.

- Rodrigo, A.; Retana, J.; Picó, X. Direct regeneration is not the only response of Mediterranean forests to large fires. Ecology 2004, 85, 716–729.

- Institut Cartogràfic i Geològic de Catalunya. Mapa Geològic amb Llegenda Interactiva (Versió 2). 2014. Available online: http://betaportal.icgc.cat/wordpress/mapa-geologic-amb-llegenda-interactiva/ (accessed on 13 January 2017).

- Vautherin, J.; Rutishauser, S.; Schneider-Zapp, K.; Choi, H.F.; Chovancova, V.; Glass, A.; Strecha, C. Photogrammetric accuracy and modelling of rolling shutter cameras. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 3, 139–146.

- XnView MP 0.83 [GPL Software]. 2016. Available online: https://www.xnview.com/en/xnviewmp/ (accessed on 15 September 2016).

- AgiSoft PhotoScan Professional (Version 1.2.6) [Software]. 2016. Available online: http://www.agisoft.com/downloads/installer/ (accessed on 13 September 2016).

- Westoby, M.J.; Brasington, J.; Glasser, N.F.; Hambrey, M.J.; Reynolds, J.M. ‘Structure-from-Motion’ photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314.

- Agisoft PhotoScan User Manual. Professional Edition; Version 1.4; Agisoft LLC: Saint-Petersburg, Russia, 2017.

- McGaughey, R.J. Fusion/LDV: Software for Lidar Data Analysis and Visualization. October 2016 – Fusion Version 3.60; US Department of Agriculture, Forest Service, Pacific Northwest Research Station: Seattle, WA, USA, 2016.

- CloudCompare 2.7.0. [GPL software]. 2016. Available online: http://www.cloudcompare.org/ (accessed on 30 August 2016).

- Kraus, K.; Pfeifer, N. Determination of terrain models in wooded areas with airborne laser scanner data. ISPRS J. Photogramm. Remote Sens. 1998, 53, 193–203.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. Am. Soc. Agric. Eng. 1995, 38, 259–269.

- Sonnentag, O.; Hufkens, K.; Teshera-Sterne, C.; Young, A.M.; Friedl, M.; Braswell, B.H.; Milliman, T.; O’Keefe, J.; Richardson, A.D. Digital repeat photography for phenological research in forest ecosystems. Agric. For. Meteorol. 2012, 152, 159–177.

- Rasmussen, J.; Ntakos, G.; Nielsen, J.; Svensgaard, J.; Poulsen, R.N.; Christensen, S. Are vegetation indices derived from consumer-grade cameras mounted on UAVs sufficiently reliable for assessing experimental plots? Eur. J. Agron. 2016, 74, 75–92.

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. In Proceedings of the 3rd ERTS Symposium, NASA SP-351 I, Washington, DC, USA, 10–14 December 1973; pp. 309–317.

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Falkowski, M.J.; Gessler, P.E.; Morgan, P.; Hudak, A.T.; Smith, A.M.S. Characterizing and mapping forest fire fuels using ASTER imagery and gradient modeling. For. Ecol. Manag. 2005, 217, 129–146.

- Motohka, T.; Nasahara, K.N.; Oguma, H.; Tsuchida, S. Applicability of green-red vegetation index for remote sensing of vegetation phenology. Remote Sens. 2010, 2, 2369–2387.

- Viña, A.; Gitelson, A.A.; Nguy-Robertson, A.L.; Peng, Y. Comparison of different vegetation indices for the remote assessment of green leaf area index of crops. Remote Sens. Environ. 2011, 115, 3468–3478.

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

- Leduc, M.-B.; Knudby, A.J. Mapping wild leek through the forest canopy using a UAV. Remote Sens. 2018, 10, 70.

- Toomey, M.; Friedl, M.A.; Frolking, S.; Hufkens, K.; Klosterman, S.; Sonnentag, O.; Baldocchi, D.D.; Bernacchi, C.J.; Biraud, S.C.; Bohrer, G.; et al. Greenness indices from digital cameras predict the timing and seasonal dynamics of canopy-scale photosynthesis. Ecol. Appl. 2015, 25, 99–115.

- QGIS Development Team. QGIS Geographic Information System. Open Source Geospatial Foundation Project. 2016. Available online: http://qgis.osgeo.org (accessed on 5 August 2016).

- Ribbens, E.; Silander, J.A., Jr.; Pacala, S.W. Seedling recruitment in forests: Calibrating models to predict patterns of tree seedling dispersion. Ecology 1994, 75, 1794–1806.

- Parresol, B.R. Assessing tree and stand biomass: A review with examples and critical comparisons. For. Sci. 1999, 45, 573–593.

- Bolte, A.; Rahmann, R.; Kuhr, M.; Pogoda, P.; Murach, D.; Gadow, K. Relationships between tree dimension and coarse root biomass in mixed stands of European beech (Fagus sylvatica L.) and Norway spruce (Picea abies [L.] Karst.). Plant Soil 2004, 264, 1–11.

- Poorter, L.; Bongers, L.; Bongers, F. Architecture of 54 moist-forest tree species: Traits, trade-offs, and functional groups. Ecology 2006, 87, 1289–1301.

- Poorter, L.; Wright, S.J.; Paz, H.; Ackerly, D.D.; Condit, R.; Ibarra-Manríquez, G.; Harms, K.E.; Licona, J.C.; Martínez-Ramos, M.; Mazer, S.J.; et al. Are functional traits good predictors of demographic rates? Evidence from five neotropical forests. Ecology 2008, 89, 1908–1920.

- He, H.; Zhang, C.; Zhao, X.; Fousseni, F.; Wang, J.; Dai, H.; Yang, S.; Zuo, Q. Allometric biomass equations for 12 tree species in coniferous and broadleaved mixed forests, Northeastern China. PLoS ONE 2018, 13, e0186226.

- Trasobares, A.; Pukkala, T.; Miina, J. Growth and yield model for uneven-aged mixtures of Pinus sylvestris L. and Pinus nigra Arn. in Catalonia, north-east Spain. Ann. For. Sci. 2004, 61, 9–24.

- Porté, A.; Bosc, A.; Champion, I.; Loustau, D. Estimating the foliage area of Maritime pine (Pinus pinaster Aït.) branches and crowns with application to modelling the foliage area distribution in the crown. Ann. For. Sci. 2000, 57, 73–86.

- Lefsky, M.A.; Harding, D.; Cohen, W.B.; Parker, G.; Shugart, H.H. Surface Lidar remote sensing of basal area and biomass in deciduous forests of Eastern Maryland, USA. Remote Sens. Environ. 1999, 67, 83–98.

- Cade, B.S. Comparison of tree basal area and canopy cover in habitat models: Subalpine forest. J. Wildl. Manag. 1997, 61, 326–335.

- Mitchell, J.E.; Popovich, S.J. Effectiveness of basal area for estimating canopy cover of ponderosa pine. For. Ecol. Manag. 1997, 95, 45–51.

- Popescu, S.C. Estimating biomass of individual pine trees using airborne lidar. Biomass Bioenergy 2007, 31, 646–655.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2016; Available online: https://www.R-project.org/ (accessed on 5 December 2016).

- RStudio Team. RStudio: Integrated Development for R. RStudio, Inc., Boston, MA, USA. 2015. Available online: http://www.rstudio.com/ (accessed on 5 December 2016).

- Pearson, R.L.; Miller, L.D. Remote mapping of standing crop biomass for estimation of the productivity of the short-grass Prairie, Pawnee National Grassland, Colorado. In Proceedings of the 8th International Symposium on Remote Sensing of Environment, Ann Arbor, MI, USA, 2–6 October 1972; pp. 1357–1381.

- Baluja, J.; Diago, M.P.; Balda, P.; Zorer, R.; Meggio, F.; Morales, F.; Tardaguila, J. Assessment of vineyard water status variability by thermal and multispectral imagery using an unmanned aerial vehicle (UAV). Irrig. Sci. 2012, 30, 511–522.

- Bannari, A.; Morin, D.; Bonn, F.; Huete, A.R. A review of vegetation indices. Remote Sens. Rev. 1995, 13, 1–95.

- Geipel, J.; Link, J.; Claupein, W. Combined spectral and spatial modelling of corn yield based on aerial images and crop surface models acquired with an unmanned aircraft system. Remote Sens. 2014, 6, 10335–10355.

- Modzelewska, A.; Stereńczak, K.; Mierczyk, M.; Maciuk, S.; Balazy, R.; Zawila-Niedźwiecki, T. Sensitivity of vegetation indices in relation to parameters of Norway spruce stands. Folia Forestalia Pol. Ser. A For. 2017, 59, 85–98.

- Sieberth, T.; Wackrow, R.; Chandler, J.H. Motion blur disturbs – The influence of motion-blurred images in photogrammetry. Photogramm. Rec. 2014, 29, 434–453.

- Lelégard, L.; Delaygue, E.; Brédif, M.; Vallet, B. Detecting and correcting motion blur from images shot with channel-dependent exposure time. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2012, I-3, 341–346.

- Granzier, J.J.M.; Valsecchi, M. Variation in daylight as a contextual cue for estimating season, time of day, and weather conditions. J. Vis. 2014, 14, 1–23.

- Tagel, X. Study of Radiometric Variations in Unmanned Aerial Vehicle Remote Sensing Imagery for Vegetation Mapping. Master’s Thesis, Lund University, Lund, Sweden, 2017.

- Burkart, A.; Aasen, H.; Alonso, L.; Menz, G.; Bareth, G.; Rascher, U. Angular dependency of hyperspectral measurement over wheat characterized by a novel UAV based goniometer. Remote Sens. 2015, 7, 725–746.

- Mesas-Carrascosa, F.-J.; Torres-Sánchez, J.; Clavero-Rumbao, I.; García-Ferrer, A.; Peña, J.-M.; Borra-Serrano, I.; López-Granados, F. Assessing optimal flight parameters for generating accurate multispectral orthomosaics by UAV to support site-specific crop management. Remote Sens. 2015, 7, 12793–12814.

- Instituto Geográfico Nacional. PNOA—Características Generales. Available online: http://pnoa.ign.es/caracteristicas-tecnicas (accessed on 17 December 2018).

- Del Pozo, S.; Rodríguez-Gonzálvez, P.; Hernández-López, D.; Felipe-García, B. Vicarious radiometric calibration of a multispectral camera on board an unmanned aerial system. Remote Sens. 2014, 6, 1918–1937.

- Suomalainen, J.; Anders, N.; Iqbal, S.; Roerink, G.; Franke, J.; Wenting, P.; Hünniger, D.; Bartholomeus, H.; Becker, R.; Kooistra, L. A lightweight hyperspectral mapping system and photogrammetric processing chain for unmanned aerial vehicles. Remote Sens. 2014, 6, 11013–11030.

| Site | La Carral | La Carral | Cal Rovira-Sanca | Cal Rovira-Sanca |
|---|---|---|---|---|
| Date (DD/MM/YY) | 03/07/18 | 03/07/18 | 03/03/18 | 03/03/18 |
| Time (UTC) | 16:59 | 17:26 | 10:13 | 10:25 |
| Sun elevation angle (°) | 19.08 | 14.49 | 27.29 | 28.96 |
| Sun azimuth angle (°) | 243.95 | 249.07 | 130.07 | 132.86 |
| Centre of scene (UTM31N-ETRS89) | (378698, 4640314) | (378715, 4640324) | (375511, 4642336) | (375522, 4642349) |
| Number of images | 160 | 147 | 90 | 67 |
| Flight height (m) | 50 | 120 | 50 | 120 |
| Flight speed (m/s) | 4 | 4 | 4 | 4 |
| Area (ha) | 5.82 | 24.6 | 7.54 | 21.3 |
| Side overlap (%) | 55 | 65 | 48 | 62 |
| Forward overlap (%) | 74 | 89 | 57 | 82 |
| Effective overlap (images per pixel) | 3.40 | 7.94 | 2.88 | 4.80 |
| Pixel size (cm) | 1.46 | 4 | 1.59 | 3.96 |
| Reprojection error (pixels) | 8.31 | 7.95 | 1.78 | 1.76 |
| Mean shutter speed (s) | 1/288 | 1/312 | 1/457 | 1/525 |
| Motion blur (cm / pixels) | 1.39 / 0.95 | 1.28 / 0.32 | 0.88 / 0.55 | 0.76 / 0.16 |
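The centimetre values in the motion-blur row match forward motion during a single exposure: flight speed multiplied by the mean shutter time gives the blur on the ground, and dividing by the pixel size expresses it in pixels. A minimal sketch of that arithmetic, assuming blur comes only from forward motion (no vibration or attitude changes); `motion_blur` is a hypothetical helper:

```python
from typing import Tuple


def motion_blur(speed_m_s: float, shutter_s: float, pixel_cm: float) -> Tuple[float, float]:
    """Forward-motion blur during one exposure (hypothetical helper).

    Assumes blur = flight speed x exposure time, ignoring vibration and
    attitude changes. Returns (blur in cm, blur in pixels).
    """
    blur_cm = speed_m_s * shutter_s * 100.0  # metres -> centimetres
    return blur_cm, blur_cm / pixel_cm


# 50 m La Carral flight: 4 m/s, mean shutter 1/288 s, 1.46 cm pixels.
blur_cm, blur_px = motion_blur(4, 1 / 288, 1.46)
print(f"{blur_cm:.2f} cm, {blur_px:.2f} px")
```

This prints `1.39 cm, 0.95 px`, matching the 50 m La Carral column; the 120 m La Carral (1/312 s, 4 cm pixels) and 50 m Cal Rovira-Sanca (1/457 s, 1.59 cm pixels) columns are reproduced in the same way.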

| Measure | ExGI | GRVI | GCC | VARI | All Indices | CHM |
|---|---|---|---|---|---|---|
| Count of non-zero index | 0.154 (124.0) | 0.114 (118.4) | 0.147 (127.9) | 0.121 (119.8) | 0.134 (14.5) | 0.028 (50.0) |
| Max index value | 0.389 (27.2) | 0.164 (98.2) | 0.297 (37.7) | 0.051 (119.6) | 0.225 (65.9) | 0.014 (121.4) |
| Mean index value | 0.401 (12.7) | 0.195 (61.0) | 0.370 (50.5) | 0.039 (184.6) | 0.251 (66.9) | 0.015 (113.3) |
| Median index value | 0.227 (80.6) | 0.162 (50.6) | 0.182 (103.3) | 0.076 (118.4) | 0.162 (39.1) | 0.008 (125.0) |
| Std index values | 0.440 (37.3) | 0.152 (57.9) | 0.466 (32.2) | 0.005 (40.0) | 0.266 (84.5) | 0.018 (100.0) |
| Sum of index values | 0.256 (40.2) | 0.088 (78.4) | 0.155 (122.6) | 0.027 (122.2) | 0.132 (74.6) | 0.014 (114.3) |
| All measures | 0.311 (36.8) | 0.146 (26.4) | 0.270 (48.4) | 0.053 (76.8) | | 0.016 (41.1) |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Larrinaga, A.R.; Brotons, L. Greenness Indices from a Low-Cost UAV Imagery as Tools for Monitoring Post-Fire Forest Recovery. Drones 2019, 3, 6. https://doi.org/10.3390/drones3010006
