Easy Rocap: A Low-Cost and Easy-to-Use Motion Capture System for Drones

Abstract

1. Introduction

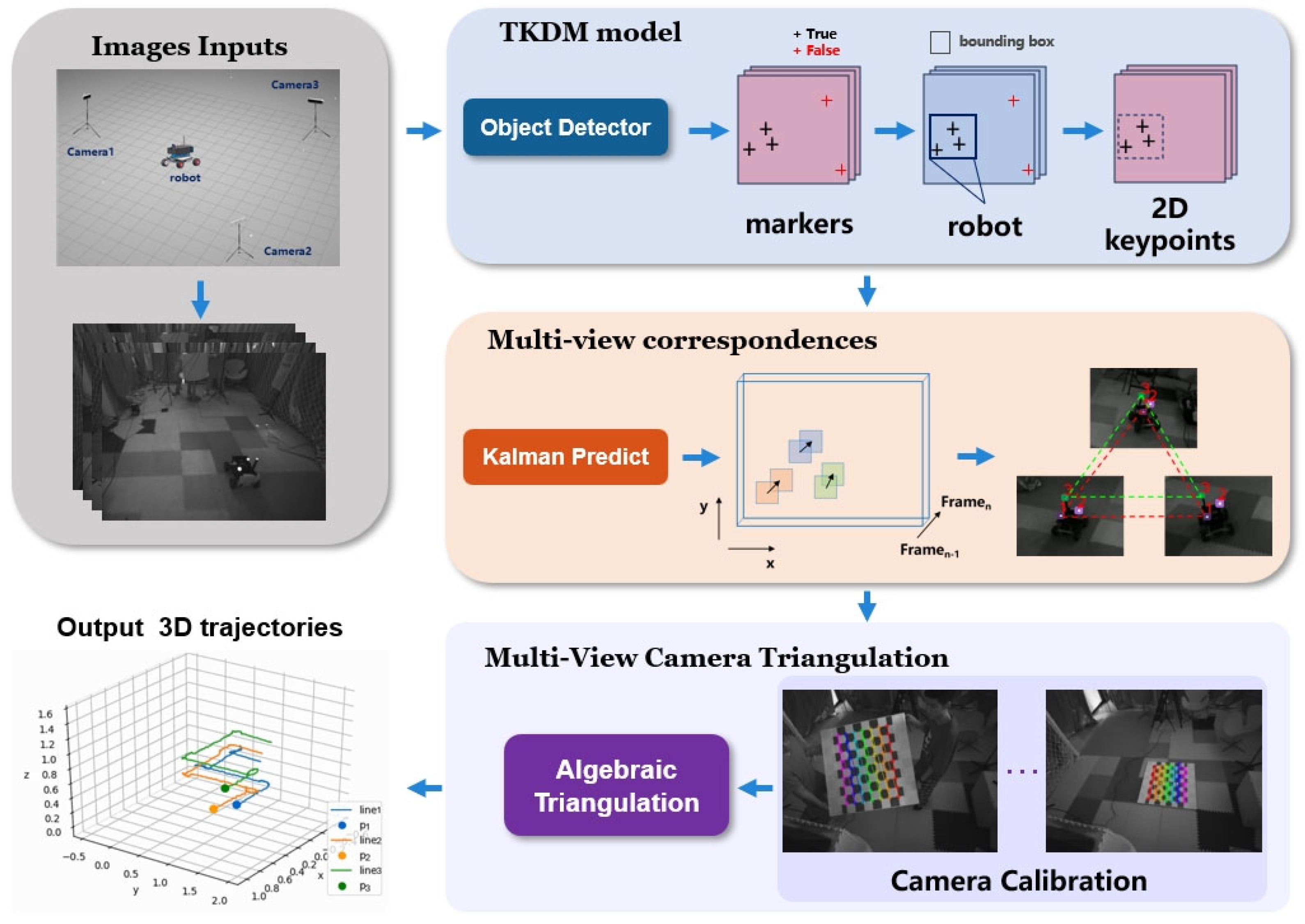

- (1) A multi-view correspondence method that combines object detection and multi-object tracking (MOT) is proposed. The dual-layer detector achieves robust object detection in complex environments, and the MOT method stably tracks markers under short-term occlusion. Multi-view correspondences use geometric constraints to correctly match corresponding image points.

- (2) A high-precision 3D robotic pose estimation system for complex dynamic scenes is open-sourced. When there is no occlusion in the simulation environment, the average positioning accuracy reaches 0.008 m, the average orientation accuracy reaches 0.65 degrees, and the solving speed reaches 30 frames per second (FPS).
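The geometric constraint mentioned in contribution (1) is often realized as an epipolar check between pairs of calibrated views. The following is a minimal sketch of that idea, not the Easy Rocap implementation: it assumes a known fundamental matrix `F` between two views, and the function names and the `max_dist` pixel threshold are hypothetical.

```python
import math

def epipolar_distance(F, x1, x2):
    """Pixel distance from x2 (view 2) to the epipolar line of x1 (view 1).

    F is a 3x3 fundamental matrix given as nested lists (assumed known
    from calibration); x1 and x2 are (u, v) pixel coordinates.
    """
    u, v = x1
    # Epipolar line l = F * [u, v, 1]^T, stored as (a, b, c) with a*x + b*y + c = 0.
    a, b, c = (row[0] * u + row[1] * v + row[2] for row in F)
    norm = math.hypot(a, b)
    if norm == 0.0:
        return math.inf  # degenerate line, treat as "no match"
    return abs(a * x2[0] + b * x2[1] + c) / norm

def match_by_epipolar(F, points1, points2, max_dist=3.0):
    """Greedily pair points across two views by smallest epipolar distance.

    max_dist is a hypothetical gating threshold in pixels.
    Returns a sorted list of (index_in_points1, index_in_points2).
    """
    candidates = []
    for i, p in enumerate(points1):
        for j, q in enumerate(points2):
            d = epipolar_distance(F, p, q)
            if d <= max_dist:
                candidates.append((d, i, j))
    candidates.sort()  # closest candidate pairs first
    used1, used2, matches = set(), set(), []
    for _, i, j in candidates:
        if i not in used1 and j not in used2:
            matches.append((i, j))
            used1.add(i)
            used2.add(j)
    return sorted(matches)
```

With only two views, several markers can lie near the same epipolar line, so a check like this is usually combined with additional views or with track identities to resolve ambiguities.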

2. Related Works

2.1. Motion Capture Methods

2.2. Multi-Object Tracking

2.3. Direct Pose Estimation

3. Methods

3.1. Two-Stage Keypoints Detection Model (TKDM)

| Algorithm 1 Filtering of Object Detection Results |
|---|
| Input: frame ; object detector ; detection score threshold |
| Output: Filtered marker detection boxes |
| 1. |
| 2. |
| 3. marker class boxes , robot class boxes |
| 4. for in do |
| 5. Check if and is contained by a robot box |
| 6. if Failed then |
| 7. delete |
| 8. end |
| 9. |
| 10. return: |
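The filtering idea in Algorithm 1 can be sketched as follows. This is a hedged illustration that starts from the detector output rather than from a frame: the `Detection` type, the class names `"marker"` and `"robot"`, and the default threshold are assumptions, and robot boxes are not score-filtered here because the extracted algorithm does not say whether they should be.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Detection:
    """Axis-aligned box (x1, y1) to (x2, y2) with a score and class label."""
    x1: float
    y1: float
    x2: float
    y2: float
    score: float
    label: str  # hypothetical class names: "marker" or "robot"

def contains(outer: Detection, inner: Detection) -> bool:
    """True if `inner` lies completely inside `outer`."""
    return (outer.x1 <= inner.x1 and outer.y1 <= inner.y1
            and outer.x2 >= inner.x2 and outer.y2 >= inner.y2)

def filter_markers(detections: List[Detection],
                   score_thresh: float = 0.5) -> List[Detection]:
    """Keep marker boxes that pass the score threshold and sit inside a robot box."""
    markers = [d for d in detections if d.label == "marker"]
    robots = [d for d in detections if d.label == "robot"]
    kept = []
    for m in markers:
        # A marker survives only if both checks succeed; otherwise it is dropped.
        if m.score >= score_thresh and any(contains(r, m) for r in robots):
            kept.append(m)
    return kept
```

Requiring containment in a robot box discards marker-like detections in the background, which is one plausible reading of why the detector is described as dual-layer.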

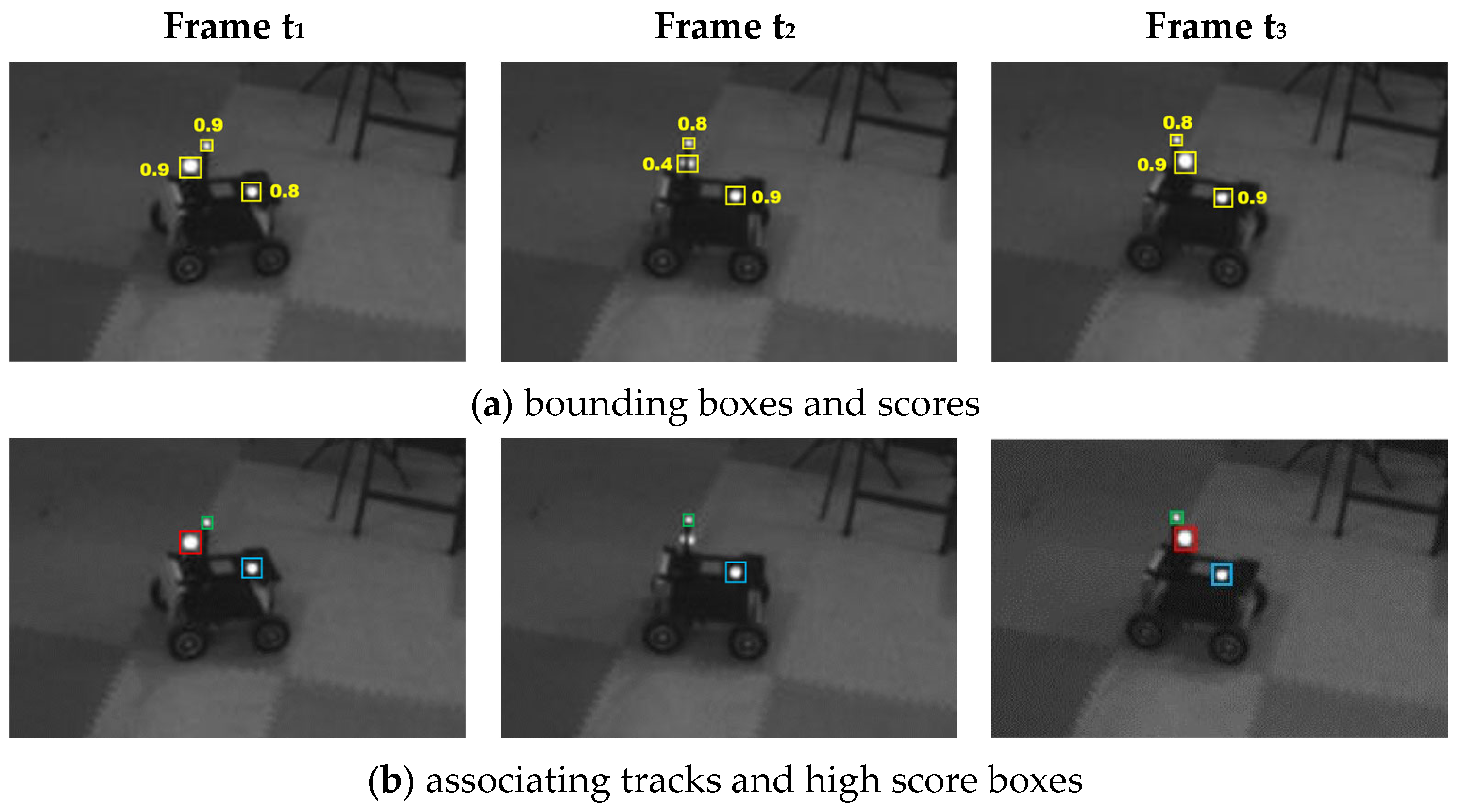

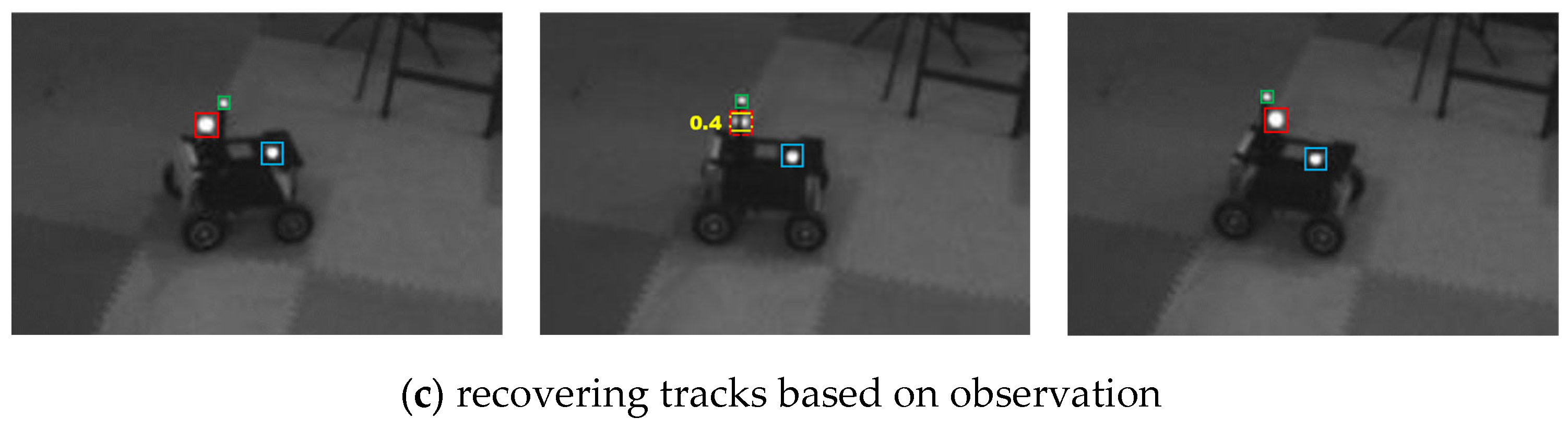

3.2. Multi-View Correspondences

| Algorithm 2 Trajectory Establishment |
|---|
| Input: Track ; Filtered detection boxes |
| 1. Associate and using Similarity |
| 2. remaining object boxes from |
| 3. remaining tracks from |
| 4. for in do |
| 5. Check if there is an available ID_traj ≤ num_marker |
| 6. if True then |
| 7. |
| 8. end |
| 9. else |
| 10. delete |
| 11. end |
| 12. return: |
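A rough sketch of the trajectory-establishment logic in Algorithm 2 is given below. It is an illustration under stated assumptions, not the authors' code: similarity is taken to be IoU, association is a greedy best-first match (a Hungarian assignment could be swapped in), track IDs are integers from 1 to `num_marker`, and the `iou_thresh` value is hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    if iw <= 0 or ih <= 0:
        return 0.0
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union

def establish_trajectories(tracks, boxes, num_marker, iou_thresh=0.3):
    """tracks: dict {track_id: last box}; boxes: filtered detection boxes.

    Returns (updated, lost): `updated` maps track IDs to their box in this
    frame, `lost` lists existing track IDs that found no detection.
    """
    # Step 1: associate tracks and detections, highest similarity first.
    pairs = sorted(((iou(tb, b), tid, j)
                    for tid, tb in tracks.items()
                    for j, b in enumerate(boxes)), reverse=True)
    updated, matched_boxes = {}, set()
    for score, tid, j in pairs:
        if score < iou_thresh:
            break  # all remaining pairs are even less similar
        if tid in updated or j in matched_boxes:
            continue
        updated[tid] = boxes[j]
        matched_boxes.add(j)
    # Steps 2-3: collect whatever was left unmatched.
    remaining_boxes = [j for j in range(len(boxes)) if j not in matched_boxes]
    lost = [tid for tid in tracks if tid not in updated]
    # Steps 4-11: start a new track only while an ID <= num_marker is free.
    # IDs of lost tracks stay reserved so they can be re-associated later.
    free_ids = [i for i in range(1, num_marker + 1) if i not in tracks]
    for j in remaining_boxes:
        if free_ids:
            updated[free_ids.pop(0)] = boxes[j]
        # otherwise the detection is discarded
    return updated, lost
```

Capping the number of track IDs at the known marker count prevents spurious detections from spawning extra trajectories once every marker already has a track.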

3.3. Multi-View Camera Triangulation

4. Experiments

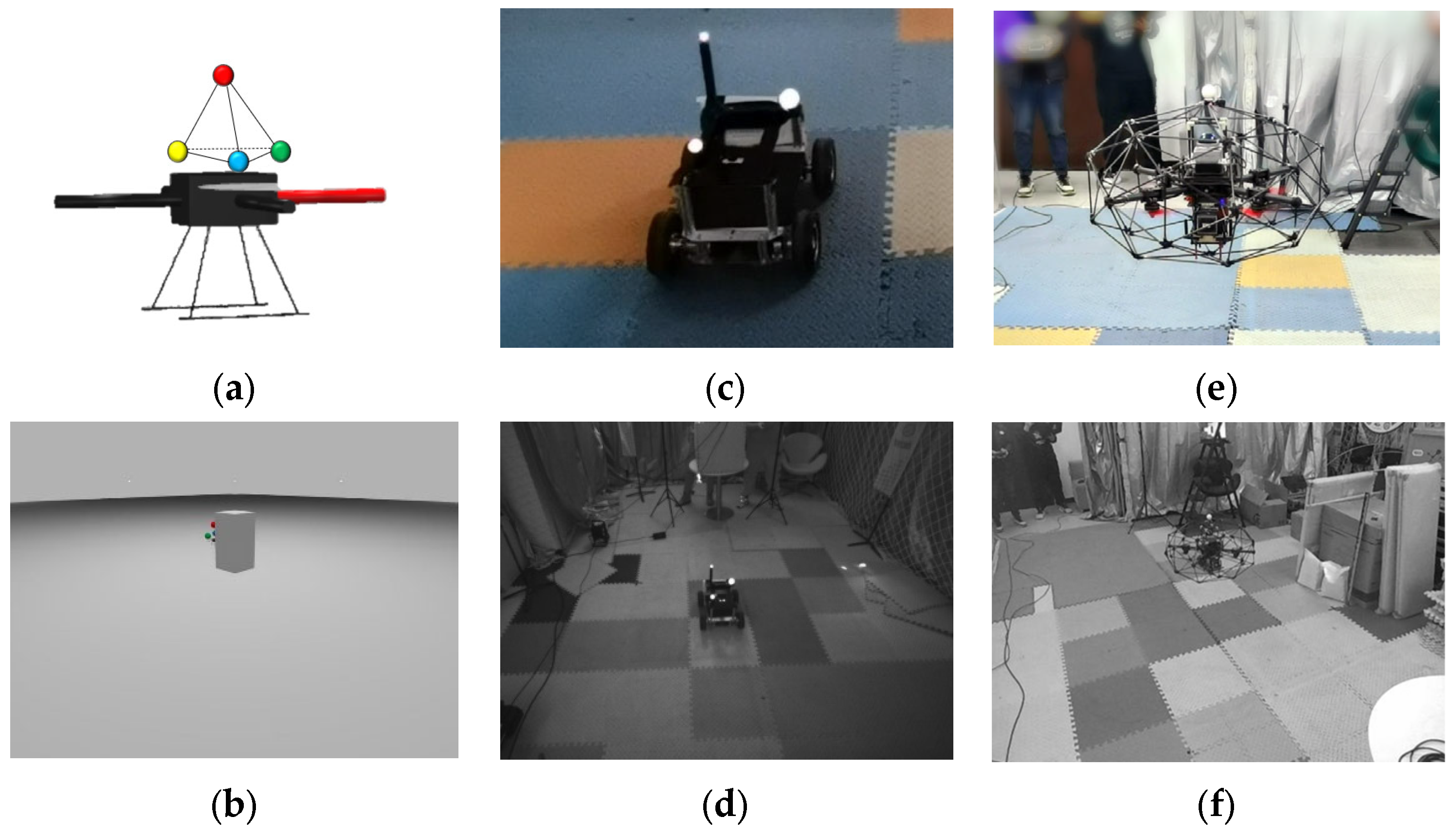

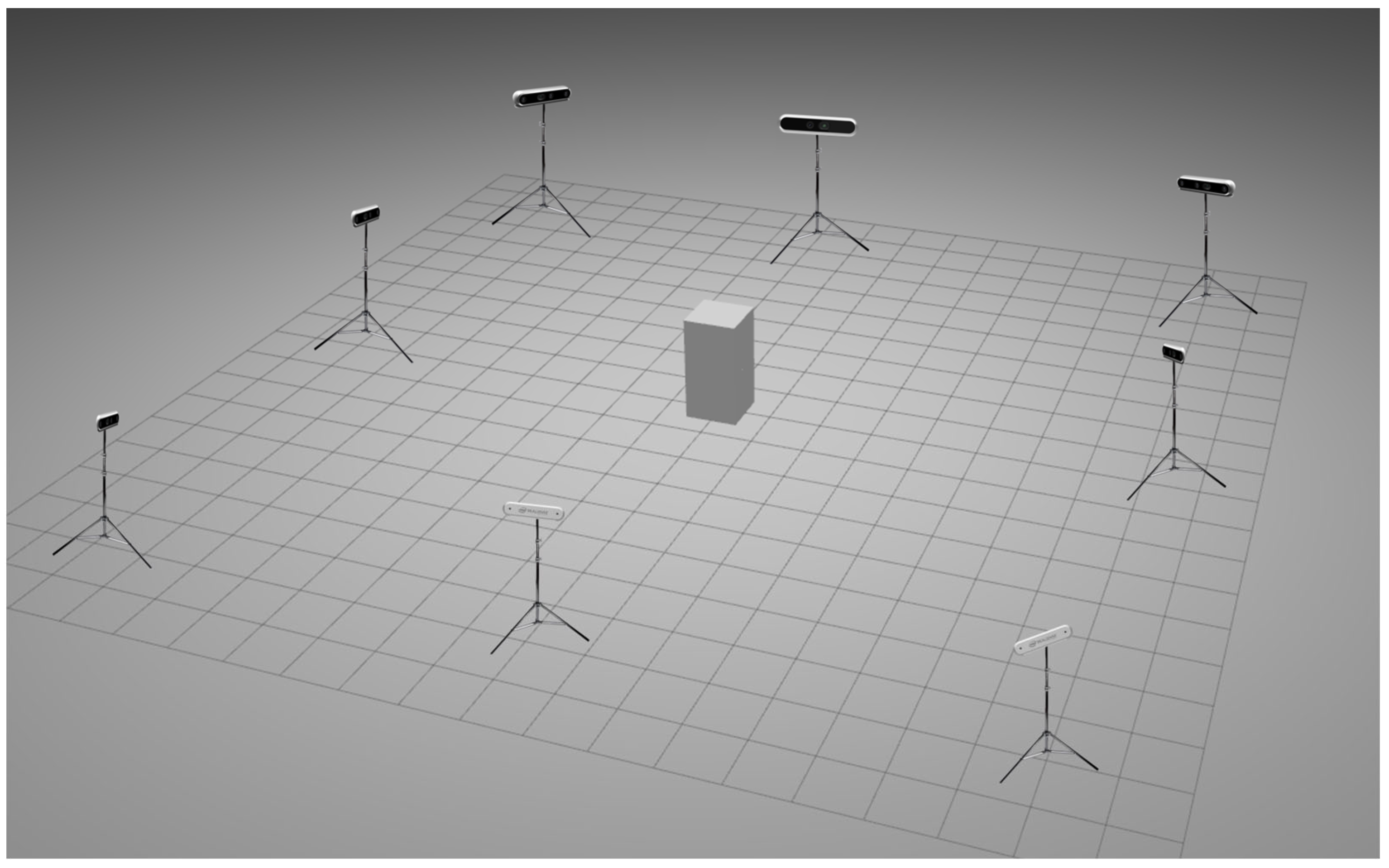

4.1. Experimental Settings

4.1.1. Dataset Description

4.1.2. Experimental Scenes

4.1.3. Evaluation Metrics

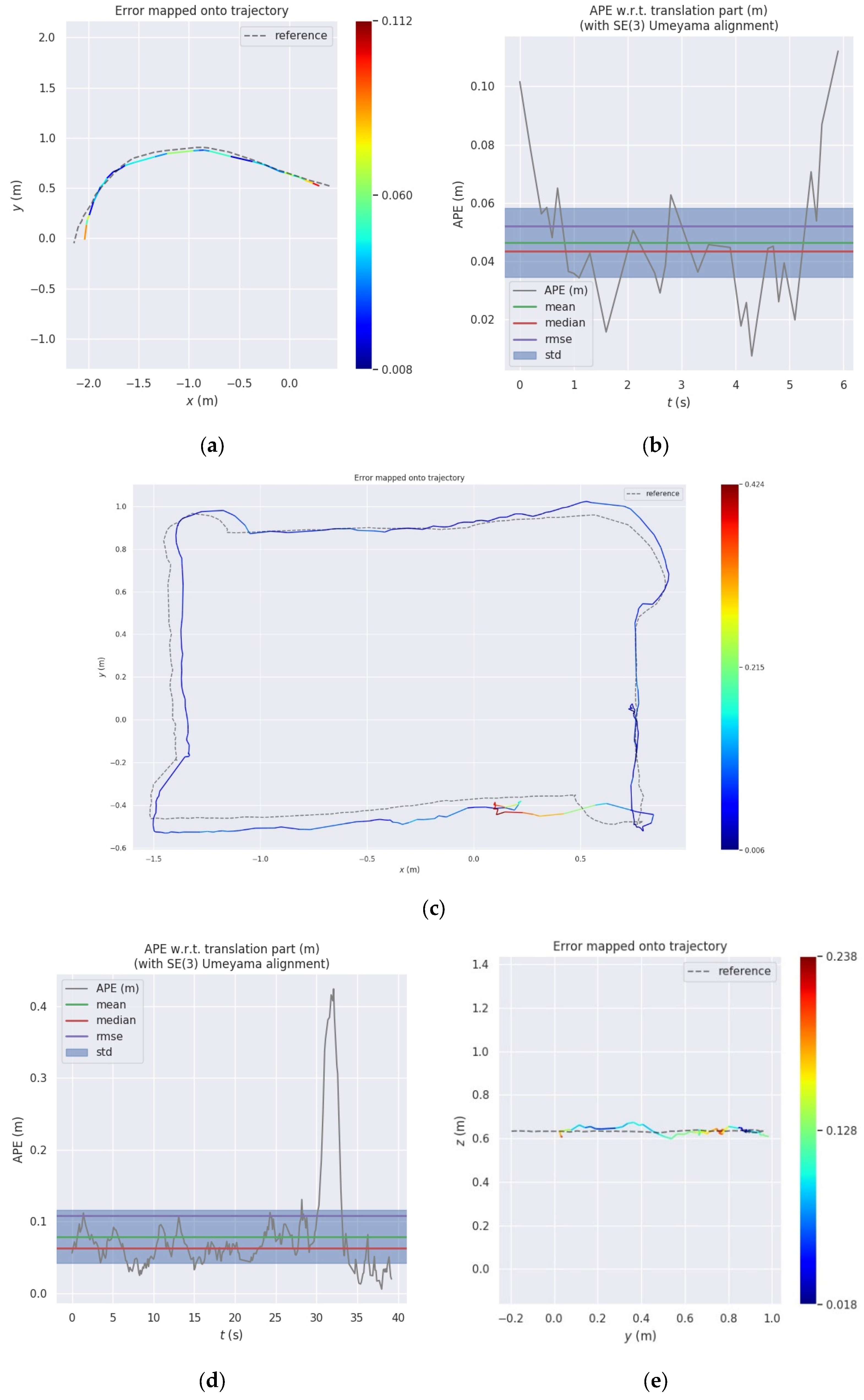

4.2. Experimental Results

4.3. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sequence | Platform | Scene | Motion Pattern | Data Length (s) | Camera Frequency (FPS) | Truth Trajectory Frequency (Hz) | LiDAR Frequency (Hz) |
|---|---|---|---|---|---|---|---|
| Seq01 | UAV | simulation | horizontal | 60.71 | 15 | 1000 | |
| Seq02 | UAV | simulation | helical | 44.81 | 15 | 1000 | |
| Seq03 | UAV | simulation | horizontal | 46.33 | 15 | 1000 | |
| Seq04 | UGV | real-world | curvilinear | 6.01 | 90 | 10 | |
| Seq05 | UGV | real-world | square | 42.80 | 90 | 10 | |
| Seq06 | UAV | real-world | curvilinear | 27.09 | 90 | 10 | |

| Sequence | Scene | Motion Pattern | Trajectory Length (m) | Duration (s) | RMSE (APE) (m) | Mean (APE) (m) |
|---|---|---|---|---|---|---|
| Seq01 | simulation | horizontal | 23.73 | 60.71 | 0.0080 | 0.0069 |
| Seq02 | simulation | helical | 12.79 | 43.91 | 0.0090 | 0.0079 |
| Seq03 | simulation | horizontal (occlusion) | 18.67 | 46.33 | 0.0150 | 0.0132 |
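The RMSE and mean of the absolute pose error (APE) reported above can be illustrated with a short sketch. It assumes that estimated and ground-truth positions are already expressed in the same coordinate frame and that each estimate is paired with the nearest ground-truth sample in time; the function name and the `max_dt` tolerance are hypothetical, and the exact evaluation procedure used for the table is not stated here.

```python
import bisect
import math

def ape_stats(est, truth, max_dt=0.01):
    """Translation APE statistics.

    est, truth: lists of (t, x, y, z); `truth` must be sorted by time.
    Each estimate is compared with the ground-truth sample closest in
    time, and skipped if that sample is more than max_dt seconds away.
    Returns (rmse, mean) in the units of the input positions.
    """
    times = [p[0] for p in truth]
    errors = []
    for t, x, y, z in est:
        k = bisect.bisect_left(times, t)
        # The nearest sample is either just before (k - 1) or at/after (k).
        best = min((c for c in (k - 1, k) if 0 <= c < len(truth)),
                   key=lambda c: abs(times[c] - t), default=None)
        if best is None or abs(times[best] - t) > max_dt:
            continue
        _, gx, gy, gz = truth[best]
        errors.append(math.dist((x, y, z), (gx, gy, gz)))
    if not errors:
        raise ValueError("no estimate could be paired with ground truth")
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    mean = sum(errors) / len(errors)
    return rmse, mean
```

Nearest-timestamp pairing is a reasonable fit when the ground truth is sampled much faster than the cameras, as in the simulated sequences; for sparser ground truth, interpolation between samples would be the more careful choice.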


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, H.; Chen, C.; He, Y.; Sun, S.; Li, L.; Xu, Y.; Yang, B. Easy Rocap: A Low-Cost and Easy-to-Use Motion Capture System for Drones. Drones 2024, 8, 137. https://doi.org/10.3390/drones8040137
