Components and Indicators of the Robot Programming Skill Assessment Based on Higher Order Thinking

Abstract

1. Introduction

2. Literature Review

2.1. Robot Programming

- (1) Identify the Problem: This refers to understanding the problem and determining the “Input”, “Process”, and “Output” components that must be completed in order to solve it.

- (2) Design a Solution: This refers to ordering the steps of the algorithm using flowcharts or pseudocode.

- (3) Code the Program: This refers to transforming the commands and procedure sequence from the conceptual design into a programming language.

- (4) Test the Program: This refers to validating the syntax of the computer code and interpreting the results against the goals of program execution. It also includes testing for hardware compatibility, covering the input and output sections.

- (5) Implement the Program: This refers to the outcomes of the program in use. This step should be followed by further enhancements.
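The five steps above can be illustrated with a minimal Python sketch. It is not taken from the article: the obstacle-avoidance task, the 20 cm threshold, and the names `SAFE_DISTANCE_CM`, `decide_motor_command`, and `run_tests` are all hypothetical, and the sensor readings are simulated rather than read from hardware.

```python
# Step 1 (identify): input = distance reading in cm, process = compare with a
# threshold, output = a motor command. The task and all names are hypothetical.
SAFE_DISTANCE_CM = 20.0  # assumed threshold, not taken from the article


def decide_motor_command(distance_cm: float) -> str:
    """Step 3 (code): turn the designed decision into a program."""
    # Step 2 (design), written as pseudocode:
    #   IF the reading is invalid  -> stop
    #   ELSE IF obstacle is close  -> turn
    #   ELSE                       -> forward
    if distance_cm < 0:
        return "stop"
    if distance_cm < SAFE_DISTANCE_CM:
        return "turn"
    return "forward"


def run_tests() -> bool:
    """Step 4 (test): compare the outputs with the expected behaviour."""
    cases = {-1.0: "stop", 5.0: "turn", 20.0: "forward", 80.0: "forward"}
    return all(decide_motor_command(d) == cmd for d, cmd in cases.items())


if __name__ == "__main__":
    # Step 5 (implement): run on simulated readings; a real robot would
    # supply these values from a sensor.
    for reading in (80.0, 35.5, 12.0, -1.0):
        print(reading, "->", decide_motor_command(reading))
    print("all tests passed:", run_tests())
```

Keeping the decision logic in one pure function is a design choice for the sketch: it lets the test step run without any robot attached.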

- (1) Component 1: The ability to solve problems step by step:

  - Describe the problem and the sequence of ways to solve it.

  - Draw the flowcharts or pseudocode to show the sequence of ways to solve the problems.

  - Change the sequence of steps if the results are not achieved.

  - Tackle the presented tasks by breaking them down into smaller tasks.

  - Capture the issues that can cause problems to repeat.

- (2) Component 2: The ability to create computer programs:

  - Create a program using a computer language from a blank page.

  - Create a program with a single-decision condition.

  - Create a program with the nested structure of decision conditions.

  - Create a variable to control the loop task programs.

  - Create a variable and input data that affect the output.

  - Build your own program from the beginning, until you achieve the objectives.

  - Create a function that can modify parameters.

- (3) Component 3: The ability to connect to the robot:

  - Connect the port between the computer and the microcontroller.

  - Create objects for using analog and/or digital signals.

  - Create a graphical user interface (GUI) to display the analog and/or digital inputs.

  - Create a graphical user interface (GUI) for the digital outputs.
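To make several of the Component 2 indicators concrete, the following Python sketch shows one possible program fragment for each of four indicators: a single-decision condition, a nested decision structure, a loop controlled by a variable, and a function whose behaviour is changed through parameters. The function names, thresholds, and the LED-blinking scenario are hypothetical and are not part of the assessment instrument.

```python
def clamp_speed(speed: int, limit: int = 100) -> int:
    """A function with parameters; `limit` changes how it behaves."""
    # Single-decision condition: one test, two possible outcomes.
    if speed > limit:
        return limit
    return speed


def classify_reading(value: int, low: int = 300, high: int = 700) -> str:
    """Nested decision conditions on a simulated analog reading."""
    if value >= low:
        # The inner decision is only reached when the outer one holds.
        if value >= high:
            return "high"
        return "medium"
    return "low"


def blink_schedule(times: int) -> list:
    """A loop whose repetition is controlled by the variable `count`."""
    states = []
    count = 0
    while count < times:
        states.append("on")
        states.append("off")
        count += 1  # updating the control variable ends the loop
    return states


if __name__ == "__main__":
    print(clamp_speed(150))          # input data affects the output
    print(classify_reading(500))
    print(blink_schedule(2))
```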

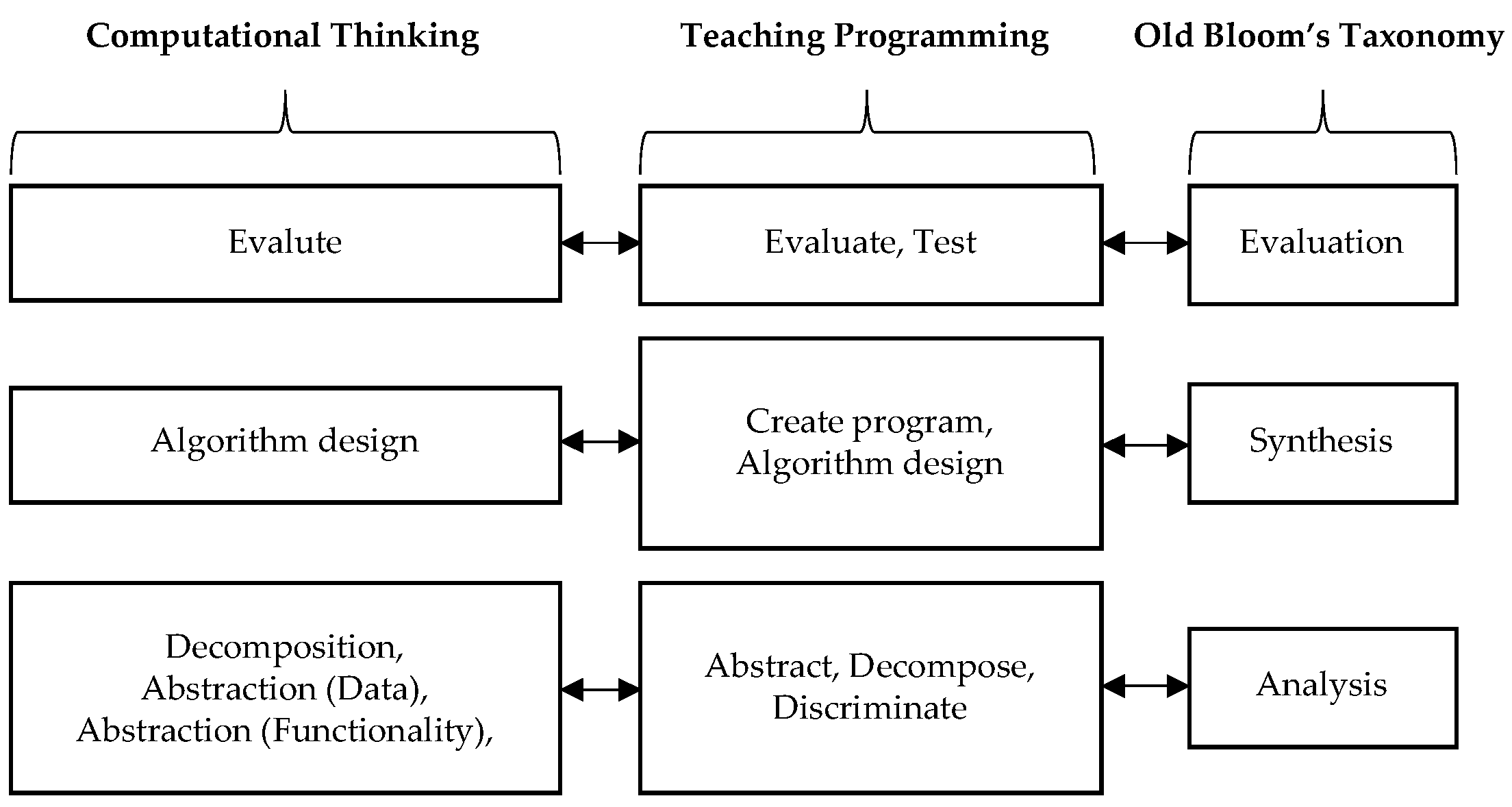

2.2. Higher Order Thinking

- (1) The knowledge dimensions:

  - Factual: The fundamental understanding of terminology, scientific terms, labels, lexicon, slang, symbols or representations, and specifics, such as a knowledge of events, individuals, and information sources.

  - Conceptual: Knowledge of a subject’s classifications and categories, concepts, theories, models, or frameworks.

  - Procedural: Knowing how to perform a skill, procedure, technique, or methodology.

  - Metacognitive: The method or approach of learning and thinking, being aware of one’s own cognition and being able to control, monitor, and regulate one’s own cognitive process.

- (2) The cognitive process dimensions:

  - Analyze: Break down a component and determine how the parts relate to one another and to an overall concept or purpose by differentiating, organizing, and attributing.

  - Evaluate: Make decisions utilizing criteria and standards by checking and critiquing.

  - Create: Integrate elements to create a coherent or functional whole; reorganize elements to create a new structure or pattern by generating, planning, and producing.

3. Objectives

- (1) To synthesize the components and indicators of the robot programming skill assessment based on higher order thinking.

- (2) To evaluate the validity and reliability of the robot programming skill assessment based on higher order thinking.

4. Methodology

4.1. The Details of the Participants in This Research

- (1) To test the validity of the components and indicators, presented as a questionnaire instrument on the robot programming skill based on higher order thinking, the researchers worked with seven experts from various fields, whose qualifications were as follows:

  - Two Ph.D. lecturers in Educational Evaluation.

  - Two Ph.D. lecturers in Computer Engineering.

  - One Ph.D. lecturer in Educational Technology.

  - One Ph.D. lecturer in Psychology.

  - One psychiatrist with at least 5 years of research experience in adolescent behavior.

- (2) To test the reliability of the components and indicators, the researchers used the robot programming assessment instrument with 50 volunteers in the July 2021 course of a robot programming skills training program at the Manufacturing Automation and Robotics Academy (MARA), Ministry of Industry, Thailand. Participants were recruited through a public announcement made by the Department of Skill Development via the MARA website [63] in June 2021. Within three weeks of the announcement, 200 people had signed up for the training course. The researchers then set a quota of 50 technician volunteers to use the assessment instrument. All the participants were industrial plant technicians who had no experience of programming a robot before enrolling in the training course.

4.2. The Details of the Research Instrument

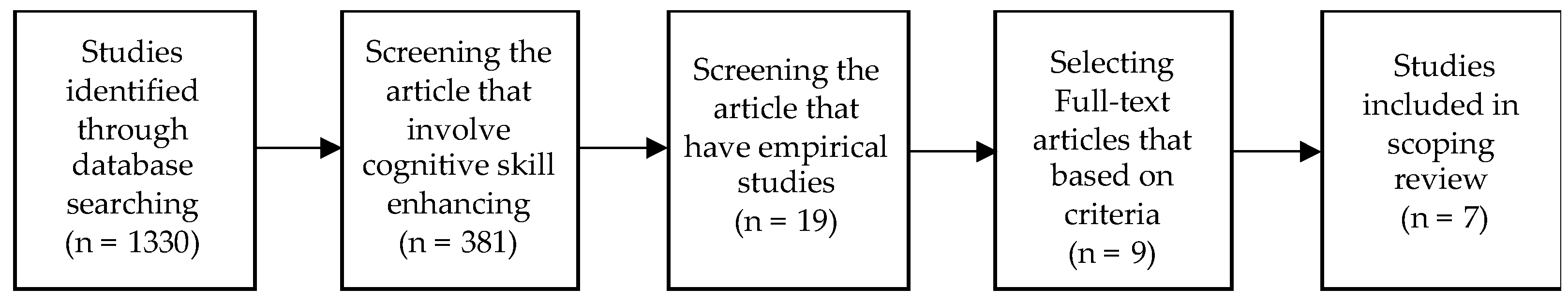

4.3. The Details of the Synthesis of the Components and Indicators

- (1) Inclusion criteria:

  - Published between 2013 and 2022.

  - The search terms appear in the title and abstract.

  - The publication reports experimental research.

  - The abstract content corresponds to the research question.

- (2) Exclusion criteria:

  - The complete article is not available.

  - Unrelated to the research because it is inconsistent with the research question.

  - Duplicate study (if found in multiple databases).

  - Insufficient information.

- (3)

- Data sources and search strategies:

4.4. The Details of the Evaluations of the Components and Indicators

- (1) An initial assessment of the content validity for all components and indicators was conducted by having seven experts perform the evaluation using a content validity index (CVI) test [64]. This process led to minor revisions of some key language, but the original content remained the same.

- (2) After the instrument was revised, the questionnaire was provided as a Google Forms link to the trainer who supervised the July 2021 robot programming skill training course of the Manufacturing Automation and Robotics Academy, Ministry of Industry, Thailand. Subsequently, the trainer provided the Google Forms link to the trainees, who formed the sample group, so that they could rate themselves after they finished the course.

- (3) The collected data were analyzed using Cronbach’s Alpha statistic [65] to examine the reliability of the components and indicators of the robot programming skill assessment based on higher order thinking.
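As an illustration of the content validity step, the sketch below computes an item-level CVI (the share of experts who rate an item as relevant) and a scale-level average. The article does not state the rating scale, so the common convention of a 4-point relevance scale, on which ratings of 3 or 4 count as relevant, is assumed here. The ratings and item names are hypothetical and are not the study's data.

```python
from statistics import mean

# Assumption: experts rate relevance on a 4-point scale and ratings of
# 3 or 4 count as "relevant". The article does not specify the scale.
RELEVANT_RATINGS = {3, 4}


def item_cvi(ratings):
    """Item-level CVI: proportion of experts rating the item as relevant."""
    if not ratings:
        raise ValueError("at least one expert rating is required")
    return sum(1 for r in ratings if r in RELEVANT_RATINGS) / len(ratings)


def scale_cvi_average(ratings_by_item):
    """Scale-level CVI (averaging approach): mean of the item-level CVIs."""
    return mean(item_cvi(r) for r in ratings_by_item.values())


if __name__ == "__main__":
    # Hypothetical ratings from seven experts for two items.
    hypothetical_ratings = {
        "indicator_a": [4, 4, 3, 4, 4, 3, 4],
        "indicator_b": [4, 3, 4, 2, 4, 4, 4],
    }
    for name, ratings in hypothetical_ratings.items():
        print(name, round(item_cvi(ratings), 3))
    print("scale average:", round(scale_cvi_average(hypothetical_ratings), 3))
```

Under this convention, an item reaches a CVI of 1.00 only when every expert rates it as relevant.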

5. Results

- (1) After conducting a literature study using the scoping review analysis, the researchers identified seven empirical studies that could be used for synthesizing the components and indicators of the robot programming skill assessment based on higher order thinking. The results are shown in Table 4 and Table 5.

- (2) The researchers developed the components and indicators of robot programming skills based on higher order thinking by combining programming procedures with the verbs that indicate cognitive skills analyzed from the scoping review. The results are shown in Table 6.

- (3) The validity test conducted by seven experts showed that all 16 items measuring the three components reached an acceptable validity based on the content validity index test (CVI = 1.00).

- (4) The reliability analysis used Cronbach’s Alpha statistic to examine the internal consistency of the components and indicators. Cronbach’s Alpha for all 16 indicators was 0.747. The values for the three components of the questionnaire were: (1) The ability to solve problems step by step (α = 0.827), (2) The ability to create computer programs (α = 0.722), and (3) The ability to connect to the robot (α = 0.778). Since every Cronbach’s Alpha value is greater than 0.7, we can conclude that the individual components and the overall set of indicators have acceptable reliability.
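For readers who want to see how the statistic is obtained, the sketch below implements the standard Cronbach's Alpha formula, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), where k is the number of items. The function name and the response data are hypothetical; the article's own data and software are not reproduced here.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's Alpha for a list of items.

    `item_scores[i][j]` is the score of respondent j on item i; every item
    must have a score from every respondent.
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("at least two items are required")
    if len({len(scores) for scores in item_scores}) != 1:
        raise ValueError("all items need the same number of respondents")
    # Total score of each respondent across all items.
    totals = [sum(per_respondent) for per_respondent in zip(*item_scores)]
    item_variance_sum = sum(variance(scores) for scores in item_scores)
    return (k / (k - 1)) * (1 - item_variance_sum / variance(totals))


if __name__ == "__main__":
    # Hypothetical self-ratings on a four-point scale: 3 items, 5 respondents.
    hypothetical_items = [
        [3, 4, 2, 4, 3],
        [3, 3, 2, 4, 3],
        [2, 4, 2, 3, 3],
    ]
    alpha = cronbach_alpha(hypothetical_items)
    # 0.7 is the acceptability threshold applied in the text above.
    print(round(alpha, 3), "acceptable" if alpha > 0.7 else "below threshold")
```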

6. Discussion

- (1)

- (2) The robot programming skill is a higher order thinking skill based on Bloom’s cognitive taxonomy that falls into three categories: problem solving, critical thinking, and the transfer of knowledge and skills [74]. The researchers provide additional details as follows:

  - Component 1 (The ability to solve problems step by step) is the main ability of the robot programming skill. It conforms to the meaning of the phrase “Problem-solving approach”, defined by the APA Dictionary of Psychology [75] as “The process whereby difficulties, obstacles, or stressful events are addressed using coping strategies.”

  - Component 2 (The ability to create computer programs) is a part of the problem-solving skill that conforms to Jonassen [76], who details that programming activities can be classified as one solution for the “Design Problem Solving” type, which focuses on analysis and planning. This also corresponds to Chandrasekaran [77], who determined that the key to problem solving is a step of critical thinking in which the problem is understood and the structure and sequence of work to fix it are defined.

  - Component 3 (The ability to connect to the robot) is a part of applying the knowledge about the robot modules that direct the robot to work by taking values from the inputs to generate the outputs. It conforms to Matsun et al. [78], who used Arduino Uno microcontroller programming as a tool for scientific learning. Their work confirms that this tool can promote the higher order thinking of students through learning activities such as hypothesizing about the relationship between input and output modules, testing the solution, observing the results, and improving the processes based on the results displayed by the system. This also corresponds to Avello-Martínez et al. [79], who mentioned that allowing students to experience the use of robotics in the classroom is another way to enhance their creative and computational thinking, which is based on the cognitive processes of higher order thinking skills.

- (3)

- (4) The scale for evaluating the robot programming skills was defined as a four-point scale consistent with Marzano and Kendall [80], who established the standardized measurement methods for the assessments of the cognitive domain.

7. Conclusions

8. Suggestion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Alimisis, D. Educational robotics: Open questions and new challenges. Themes Sci. Technol. Educ. 2013, 6, 63–71. Available online: http://earthlab.uoi.gr/theste/index.php/theste/article/view/119 (accessed on 3 February 2022).

- OECD. SKILLS FOR 2030. OECD Future of Education and Skills 2030 Concept Note. 2019. Available online: www.oecd.org/education/2030-project (accessed on 29 December 2021).

- Almerich, G.; Suárez-Rodríguez, J.; Díaz-García, I.; Cebrián-Cifuentes, S. 21st-century competences: The relation of ICT competences with higher-order thinking capacities and teamwork competences in university students. J. Comput. Assist. Learn. 2020, 36, 468–479.

- Conklin, W. Higher-Order Thinking Skills to Develop 21st Century Learners; Shell Education: Huntington Beach, CA, USA, 2012.

- Widiawati, L.; Joyoatmojo, S.; Sudiyanto, S. Higher order thinking skills as effect of problem-based learning in the 21st century learning. Int. J. Multicult. Multireligious Underst. 2018, 5, 96–105.

- Hafni, R.N.; Nurlaelah, E. 21st Century Learner: Be a Critical Thinking. In Proceedings of the Second of International Conference on Education and Regional Development 2017 (ICERD 2nd), Bandung, Indonesia, 20–21 November 2017; Volume 1.

- Taylor, A.T.; Berrueta, T.A.; Murphey, T.D. Active learning in robotics: A review of control principles. Mechatronics 2021, 77, 102576.

- Jean, A. A brief history of artificial intelligence. Medecine/Sciences 2020, 36, 1059–1067.

- Mouha, R.A. Deep Learning for Robotics. J. Data Anal. Inf. Process. 2021, 9, 63–76.

- Saukkoriipi, J.; Heikkilä, T.; Ahola, J.M.; Seppälä, T.; Isto, P. Programming and control for skill-based robots. Open Eng. 2020, 10, 368–376.

- Herrero, H.; Moughlbay, A.A.; Outón, J.L.; Sallé, D.; de Ipiña, K.L. Skill based robot programming: Assembly, vision and Workspace Monitoring skill interaction. Neurocomputing 2017, 255, 61–70.

- Cheah, C.-S. Factors Contributing to the Difficulties in Teaching and Learning of Computer Programming: A Literature Review. Contemp. Educ. Technol. 2020, 12, ep272.

- Durak, H.Y.; Yilmaz, F.G.K.; Yilmaz, R. Computational Thinking, Programming Self-Efficacy, Problem Solving and Experiences in the Programming Process Conducted with Robotic Activities. Contemp. Educ. Technol. 2019, 10, 173–197.

- Abadi, M.; Plotkin, G.D. A simple differentiable programming language. Proc. ACM Program. Lang. 2020, 4, 1–28.

- Lertyosbordin, C.; Maneewan, S.; Srikaew, D. Components and Indicators of Problem-solving Skills in Robot Programming Activities. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 132–140.

- Department of Computer Science and Statistics. Computer Programming. The University of Rhode Island. 2020. Available online: https://homepage.cs.uri.edu/faculty/wolfe/book/Readings/Reading13.htm (accessed on 3 February 2022).

- Amjo. Six Steps in the Programming Process. Dotnet Languages. 30 June 2018. Available online: https://www.dotnetlanguages.net/six-steps-in-the-programming-process/ (accessed on 3 February 2022).

- Jorge Valenzuela. Computer Programming in 4 Steps. ISTE. International Society for Technology in Education (ISTE). 20 March 2018. Available online: https://www.iste.org/explore/Computer-Science/Computer-programming-in-4-steps (accessed on 3 February 2022).

- School of Computer Science. The Programming Process. University of Birmingham. Available online: https://www.cs.bham.ac.uk/~rxb/java/intro/2programming.html (accessed on 3 February 2022).

- Wikibooks. The Computer Revolution/Programming/Five Steps of Programming—Wikibooks, Open Books for an Open World. Wikibooks. 2021. Available online: https://en.m.wikibooks.org/wiki/The_Computer_Revolution/Programming/Five_Steps_of_Programming (accessed on 3 February 2022).

- Sharma, P.; Singh, D. Comparative Study of Various SDLC Models on Different Parameters. Int. J. Eng. Res. 2015, 4, 188–191.

- Commons, M.L.; Crone-Todd, D.; Chen, S.J. Using SAFMEDS and direct instruction to teach the model of hierarchical complexity. Behav. Anal. Today 2014, 14, 31–45.

- Lysaker, P.H.; Buck, K.D.; Carcione, A.; Procacci, M.; Salvatore, G.; Nicolò, G.; Dimaggio, G. Addressing metacognitive capacity for self reflection in the psychotherapy for schizophrenia: A conceptual model of the key tasks and processes. Psychol. Psychother. Theory Res. Pract. 2010, 84, 58–69.

- Mahoney, M.J.; Kazdin, A.E.; Lesswing, M.J. Behavior modification: Delusion or deliverance? In Annual Review of Behavior Therapy: Theory & Practice; Franks, C.M., Wilson, G.T., Eds.; Brunner/Mazel: New York, NY, USA, 1974.

- Ardini, S.N. Teachers’ Perception, Knowledge and Behaviour of Higher Order Thinking Skills (HOTS). Eternal Engl. Teach. J. 2018, 8, 20–33.

- Bloom, B.S.; Engelhart Max, D.; Furst Edward, J.; Hill Walker, H.; Krathwohl, D.R. Taxonomy of Educational Objectives: The Classification of Educational Goals; Edwards Bros.: Ann Arbor, MI, USA, 1956.

- Anderson, L.W.; Krathwohl, D.R. A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives; Longman: New York, NY, USA, 2001.

- Miho, T.; Katja, A. Future Shocks and Shifts: Challenges for the Global Workforce and Skills Development. April 2017. Available online: https://www.oecd.org/education/2030-project/about/documents/Future-Shocks-and-Shifts-Challenges-for-the-Global-Workforce-and-Skills-Development.pdf (accessed on 29 December 2021).

- Zion Market Research. Robot Software Market—Global Industry Analysis. Zion Market Research. 21 November 2019. Available online: https://www.zionmarketresearch.com/report/robot-software-industry (accessed on 29 December 2021).

- Stacie, S. “In Memory: Seymour Papert,” MIT Media Lab. 20 January 2017. Available online: https://www.media.mit.edu/posts/in-memory-seymour-papert/ (accessed on 29 December 2021).

- Master, A.; Cheryan, S.; Moscatelli, A.; Meltzoff, A. Programming experience promotes higher STEM motivation among first-grade girls. J. Exp. Child Psychol. 2017, 160, 92–106.

- Mcdonald, C.V. STEM Education: A review of the contribution of the disciplines of science, technology, engineering, and mathematics. Sci. Educ. Int. 2016, 27, 530–569.

- Jeong, H.; Hmelo-Silver, C.E.; Jo, K. Ten years of Computer-Supported Collaborative Learning: A meta-analysis of CSCL in STEM education during 2005–2014. Educ. Res. Rev. 2019, 28, 100284.

- Yücelyiğit, S.; Toker, Z. A meta-analysis on STEM studies in early childhood education. Turk. J. Educ. 2021, 10, 23–36.

- Daintith, J.; Wright, E. Robotics; Oxford University Press: New York, NY, USA, 2008.

- Butterfield, A.; Ngondi, G.E.; Kerr, A. Programming; Oxford University Press: New York, NY, USA, 2016.

- Schumacher, J.; Welch, D.; Raymond, D. Teaching introductory programming, problem solving and information technology with robots at West Point. In Proceedings of the Frontiers in Education Conference, Reno, NV, USA, 10–13 October 2001; Volume 2, pp. F1B/2–F1B/7.

- Jawawi, D.N.A.; Mamat, R.; Ridzuan, F.; Khatibsyarbini, M.; Zaki, M.Z.M. Introducing Computer Programming to Secondary School Students Using Mobile Robots. In Proceedings of the 10th Asian Control Conference (ASCC2015), Kota Kinabalu, Malaysia, 31 May–3 June 2015; pp. 1–6.

- Sharma, M.K. A study of SDLC to develop well engineered software. Int. J. Adv. Res. Comput. Sci. 2017, 8, 520–523.

- Suryantara, I.G.N.; Andry, J.F. Development of Medical Record with Extreme Programming SDLC. IJNMT Int. J. New Media Technol. 2018, 5, 47–53.

- Pambudi, W.S.; Suheta, T. Implementation of Fuzzy-PD for Folding Machine Prototype Using LEGO EV3. TELKOMNIKA Telecommun. Comput. Electron. Control. 2018, 16, 1625–1632.

- Jung, H.-W. A study on basic software education applying a step-by-step blinded programming practice. J. Digit. Converg. 2019, 17, 25–33.

- Agamawi, Y.M.; Rao, A.V. CGPOPS: A C++ Software for Solving Multiple-Phase Optimal Control Problems Using Adaptive Gaussian Quadrature Collocation and Sparse Nonlinear Programming. ACM Trans. Math. Softw. (TOMS) 2020, 46, 1–38.

- Surfing Scratcher. Assessment Rubric for Coding. 2 September 2019. Available online: https://surfingscratcher.com/assessment-rubric-for-coding/ (accessed on 7 February 2022).

- Griffin, P. Assessment for Teaching; Cambridge University Press: Cambridge, UK, 2017; Available online: https://books.google.com/books/about/Assessment_for_Teaching.html?hl=th&id=4i42DwAAQBAJ (accessed on 7 February 2022).

- Schraw, G.J.; Robinson, D.H. Assessment of Higher Order Thinking Skills; Information Age Pub.: Charlotte, NC, USA, 2011.

- Paglia, F.L.; Francomano, M.M.; Riva, G.; Barbera, D.L. Educational robotics to develop executive functions visual spatial abilities, planning and problem solving. Annu. Rev. CyberTherapy Telemed. 2018, 16, 80–86.

- Lertyosbordin, C.; Maneewan, S.; Nittayathammakul, V. Development of training model on robot programming to enhance creative problem–solving and collaborative learning for mathematics–science program students. J. Thai Interdiscip. Res. 2018, 13, 61–66. Available online: https://ph02.tci-thaijo.org/index.php/jtir/article/view/126274/95463 (accessed on 7 April 2022).

- Hu, C.C.; Tseng, H.T.; Chen, M.H.; Alexis, G.P.I.; Chen, N.S. Comparing the effects of robots and IoT objects on STEM learning outcomes and computational thinking skills between programming-experienced learners and programming-novice learners. In Proceedings of the IEEE 20th International Conference on Advanced Learning Technologies, ICALT 2020, Tartu, Estonia, 6–9 July 2020; pp. 87–89.

- Kim, S.U. A Comparative Study on the Effects of Hands-on Robot and EPL Programming Activities on Creative Problem-Solving Ability in Children. In Proceedings of the ACM International Conference Proceeding Series, Singapore, Singapore, 15–18 May 2020; pp. 49–53.

- Çınar, M.; Tüzün, H. Comparison of object-oriented and robot programming activities: The effects of programming modality on student achievement, abstraction, problem solving, and motivation. J. Comput. Assist. Learn. 2021, 37, 370–386.

- Angeli, C. The effects of scaffolded programming scripts on pre-service teachers’ computational thinking: Developing algorithmic thinking through programming robots. Int. J. Child-Comput. Interact. 2022, 31, 100329.

- Sarı, U.; Pektaş, H.M.; Şen, Ö.F.; Çelik, H. Algorithmic thinking development through physical computing activities with Arduino in STEM education. Educ. Inf. Technol. 2022, 1–21.

- Resnick, L.B. Education and Learning to Think; National Academy Press: Washington, DC, USA, 1987.

- Lewis, A.; Smith, D. Defining higher order thinking. Theory Into Pract. 1993, 32, 131–137.

- King, F.; Goodson, L.; Rohani, F. Higher Order Thinking Skills • Definition • Teaching Strategies • Assessment; Educational Services Program: Tallahassee, FL, USA, 1998.

- Broadfoot, P.; Black, P. Redefining assessment? The first ten years of assessment in education. In Assessment in Education: Principles, Policy & Practice; Taylor & Francis: Oxfordshire, UK, 2004; Volume 11, pp. 7–26.

- Craddock, D.; Mathias, H. Assessment options in higher education. Assess. Eval. High. Educ. 2009, 34, 127–140.

- Corliss, S.; Linn, M. Assessing learning from inquiry science instruction. In Assessment of Higher Order Thinking Skills; Schraw, G., Robinson, D.H., Eds.; Information Age Pub.: Charlotte, NC, USA, 2011; pp. 219–243.

- Selby, C.C. Relationships: Computational thinking, pedagogy of programming, and Bloom’s Taxonomy. In Proceedings of the Workshop in Primary and Secondary Computing Education, London, UK, 9–11 November 2015; pp. 80–87.

- Leighton, J.P. A cognitive model for the assessment of higher order thinking in students. In Assessment of Higher Order Thinking Skills; Information Age Pub.: Charlotte, NC, USA, 2011; pp. 151–181.

- Likert, R. A Technique for the Measurement of Attitudes; Archives of Psychology; The Science Press: New York, NY, USA, 1932; Volume 22.

- MARA. Announcement for Special Projects No Training Costs with Snacks and Lunch. 24 July 2021. Available online: https://www.dsd.go.th/mara/Region/ShowACT/72089?region_id=23&cat_id=3 (accessed on 6 April 2022).

- Rodrigues, I.B.; Adachi, J.D.; Beattie, K.A.; MacDermid, J.C. Development and validation of a new tool to measure the facilitators, barriers, and preferences to exercise in people with osteoporosis. BMC Musculoskelet. Disord. 2017, 18, 1–9.

- Zach. How to Calculate Cronbach’s Alpha in R (With Examples). Statology. 28 March 2021. Available online: https://www.statology.org/cronbachs-alpha-in-r/ (accessed on 3 February 2022).

- Xu, Q.; Heller, K.; Hsu, L.; Aryal, B. Authentic assessment of students’ problem solving. AIP Conf. Proc. 2013, 1513, 434.

- Gao, X.; Grisham-Brown, J. The Use of Authentic Assessment to Report Accountability Data on Young Children’s Language, Literacy and Pre-math Competency. Int. Educ. Stud. 2011, 4, 41.

- Rovinelli, R.J.; Hambleton, R.K. On the Use of Content Specialists in the Assessment of Criterion-Referenced Test Item Validity. San Francisco. April 1976. Available online: https://eric.ed.gov/?q=Rovinelli&id=ED121845 (accessed on 10 March 2022).

- Müller, C.M.; de Vos, R.A.I.; Maurage, C.A.; Thal, D.R.; Tolnay, M.; Braak, H. Staging of Sporadic Parkinson Disease-Related α-Synuclein Pathology: Inter- and Intra-Rater Reliability. J. Neuropathol. Exp. Neurol. 2005, 64, 623–628.

- Stephanie Glen. Cronbach’s Alpha: Definition, Interpretation, SPSS. StatisticsHowTo. 2022. Available online: https://www.statisticshowto.com/probability-and-statistics/statistics-definitions/cronbachs-alpha-spss/ (accessed on 10 March 2022).

- Taber, K.S. The Use of Cronbach’s Alpha When Developing and Reporting Research Instruments in Science Education. Res. Sci. Educ. 2018, 48, 1273–1296.

- Burry-Stock, J.A.; Shaw, D.G.; Laurie, C.; Chissom, B.S. Rater Agreement Indexes for Performance Assessment. Educ. Psychol. Meas. 2016, 56, 251–262.

- Segal, D.; Chen, P.Y.; Gordon, D.A.; Kacir, C.D.; Gylys, J. Development and Evaluation of a Parenting Intervention Program: Integration of Scientific and Practical Approaches. Int. J. Hum.–Comput. Interact. 2010, 15, 453–467.

- Hadzhikoleva, S.; Hadzhikolev, E.; Kasakliev, N. Using peer assessment to enhance Higher Order thinking skills. TEM J. 2019, 8, 242–247.

- APA Dictionary of Psychology. Problem-Solving Approach. 2022. Available online: https://dictionary.apa.org/problem-solving-approach (accessed on 8 March 2022).

- Jonassen, D.H. Learning to Solve Problems: A Handbook for Designing Problem-Solving Learning Environments; Taylor & Francis: Abingdon, UK, 2010; Available online: https://www.routledge.com/Learning-to-Solve-Problems-A-Handbook-for-Designing-Problem-Solving-Learning/Jonassen/p/book/9780415871945 (accessed on 8 March 2022).

- Chandrasekaran, B. Design Problem Solving: A Task Analysis. AI Mag. 1990, 11, 59.

- Matsun; Boisandi; Sari, I.N.; Hadiati, S.; Saputri, D.F. The effect of physics learning using ardouno uno based media on higher-order thinking skills. J. Phys. Conf. Ser. 2021, 2104, 012014.

- Avello-Martínez, R.; Lavonem, J.; Zapata-Ros, M. Codificación y robótica educativa y su relación con el pensamiento computacional y creativo. Una revisión compresiva. Rev. De Educ. A Distancia (RED) 2020, 20, 1–21.

- Marzano, R.J.; Kendall, J.S. Designing & Assessing Educational Objectives; Corwin Press: Thousand Oaks, CA, USA, 2008.

- Eberly Center. Align Assessments, Objectives, Instructional Strategies. Carnegie Mellon University. 2022. Available online: https://www.cmu.edu/teaching/assessment/basics/alignment.html (accessed on 10 March 2022).

| Thinking Ordering | Old Cognitive Domain [26] | Revision Cognitive Domain [27] |
|---|---|---|
| Low | Knowledge | Remember |
| | Comprehension | Understand |
| | Application | Apply |
| High | Analysis | Analyze |
| | Synthesis | Evaluate |
| | Evaluation | Create |

| Level | Science Skills | Learning Activities/Assessment |
|---|---|---|
| Low | Demonstrating knowledge of scientific concepts, laws, theories, procedures and instruments | Recall |
| | | Define |
| | | Describe |
| | | List |
| | | Identify |
| High | Applying scientific knowledge and procedures to solve complex problems | Formulate questions |
| | | Hypothesize/predict |
| | | Design investigations |
| | | Use model |
| | | Compare/contrast/classify |
| | | Analyze |
| | | Find solutions |
| | | Interpret |
| | | Integrate/synthesize |
| | | Relate |
| | | Evaluate |

| Dimension | Analyze | Evaluate | Create |
|---|---|---|---|
| Factual | Select | Check | Generate |
| Conceptual | Relate | Determine | Assemble |
| Procedural | Differentiate | Conclude | Compose |
| Metacognitive | Deconstruct | Reflect | Actualize |

| Paper_ID | Year | Study Environment | Region | Gender |
|---|---|---|---|---|
| La Paglia et al. [47] | 2018 | Elementary school | Italy | Mixed |
| Lertyosbordin et al. [48] | 2018 | Middle school | Thailand | Mixed |
| Hu et al. [49] | 2020 | Higher Education | Taiwan | Not available |
| Kim [50] | 2020 | Elementary school | Republic of Korea | Mixed |
| Çınar and Tüzün [51] | 2021 | High school | Turkey | Mixed |
| Angeli [52] | 2022 | Higher Education | Cyprus | Mixed |
| Sari et al. [53] | 2022 | Higher Education | Turkey | Mixed |

| Paper_ID | Research Design | Sample Design | Sample Size | Manipulated Variable | Dependent Variable |
|---|---|---|---|---|---|
| La Paglia et al. [47] | Two-group pre-test & post-test | Random | 30 people (group 1: 15; group 2: 15) | Robot programming activities | Higher order thinking includes: forecasting, planning, and problem solving |
| Lertyosbordin et al. [48] | One-group pre-test & post-test | Random | 40 people | Robot programming activities | Creative problem-solving skills include: problem analysis, finding a solution, and robot testing |
| Hu et al. [49] | Two-group post-test | Purposive | 13 people (group 1: 6; group 2: 6) | Robots and IoT programming courses | Computational-thinking learning outcome |
| Kim [50] | Two-group pre-test & post-test | Purposive | 45 people (group 1: 22; group 2: 23) | Hands-on robot and EPL programming activities | Creative problem solving includes: understanding the problem, generating ideas, planning for action, and an evaluation |
| Çınar and Tüzün [51] | Two-group pre-test & post-test | Purposive | 81 people (group 1: 41; group 2: 40) | Object-oriented and robot programming activities | Achievement, abstraction, problem solving, and motivation |
| Angeli [52] | One-group pre-test & post-test | Purposive | 50 people | Robot programming activities | Computational thinking skills include: skills of sequencing, flow of control, and debugging |
| Sari et al. [53] | One-group pre-test & post-test | Purposive | 24 people | Arduino coding activities | Algorithmic-thinking skills include: understanding the problem, determining the solution strategies, and creating the algorithm |

| Components | Indicators | Evidence-Based References | | | | | | |
|---|---|---|---|---|---|---|---|---|
| | | [47] | [48] | [49] | [50] | [51] | [52] | [53] |
| 1. The ability to solve problems step by step | 1. Describe the problem and the sequence of ways to solve it. | ● | ● | ● | ● | ● | ● | ● |
| | 2. Draw the flowcharts or pseudocodes to show the sequence of ways to solve problems. | ● | ● | ● | ● | ● | ● | ● |
| | 3. Change the sequence of steps if the results are not achieved. | ● | ● | ● | ● | ● | ● | ● |
| | 4. Tackle the tasks presented by breaking them down into smaller tasks. | ● | ● | ● | ● | ● | ● | ● |
| | 5. Capture the issues that can cause problems to repeat. | ● | ● | ● | ● | ● | ● | ● |
| 2. The ability to create computer programs | 6. Create a program using a computer language from a blank page. | ◑ | ◕ | ◕ | ◕ | ● | ◕ | ◕ |
| | 7. Create a program with a single-decision condition. | ◕ | ◕ | ◕ | ◕ | ● | ◕ | ◕ |
| | 8. Create a program with the nested structure of decision conditions. | ◑ | ● | ● | ● | ◕ | ◑ | ◔ |
| | 9. Create a variable to control the loop task programs. | ◑ | ◕ | ◕ | ● | ◕ | ◔ | ◔ |
| | 10. Create a variable and input data that affect the output. | ◕ | ◕ | ◕ | ● | ● | ◕ | ◕ |
| | 11. Build your own program from the beginning, until you achieve the objectives. | ◑ | ◕ | ◕ | ● | ◕ | ◕ | ● |
| | 12. Create a function that can modify parameters. | ◕ | ◕ | ◕ | ● | ● | ◕ | ◕ |
| 3. The ability to connect to the robot | 13. Connect the port between the computer and the microcontroller. | ◕ | ◕ | ◕ | ● | ● | ◑ | ● |
| | 14. Create objects for using analog and/or digital signals. | ◑ | ◕ | ◕ | ● | ● | ◑ | ● |
| | 15. Create a graphical user interface (GUI) to display the analog and/or digital inputs. | ◑ | ◔ | ◔ | ◑ | ◑ | ◑ | ◔ |
| | 16. Create a graphical user interface (GUI) for the digital outputs. | ◑ | ◔ | ◔ | ◑ | ◑ | ◑ | ◔ |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lertyosbordin, C.; Maneewan, S.; Easter, M. Components and Indicators of the Robot Programming Skill Assessment Based on Higher Order Thinking. Appl. Syst. Innov. 2022, 5, 47. https://doi.org/10.3390/asi5030047
