A Grey Wolf Optimisation-Based Framework for Emotion Recognition on Electroencephalogram Data †

Abstract

1. Introduction

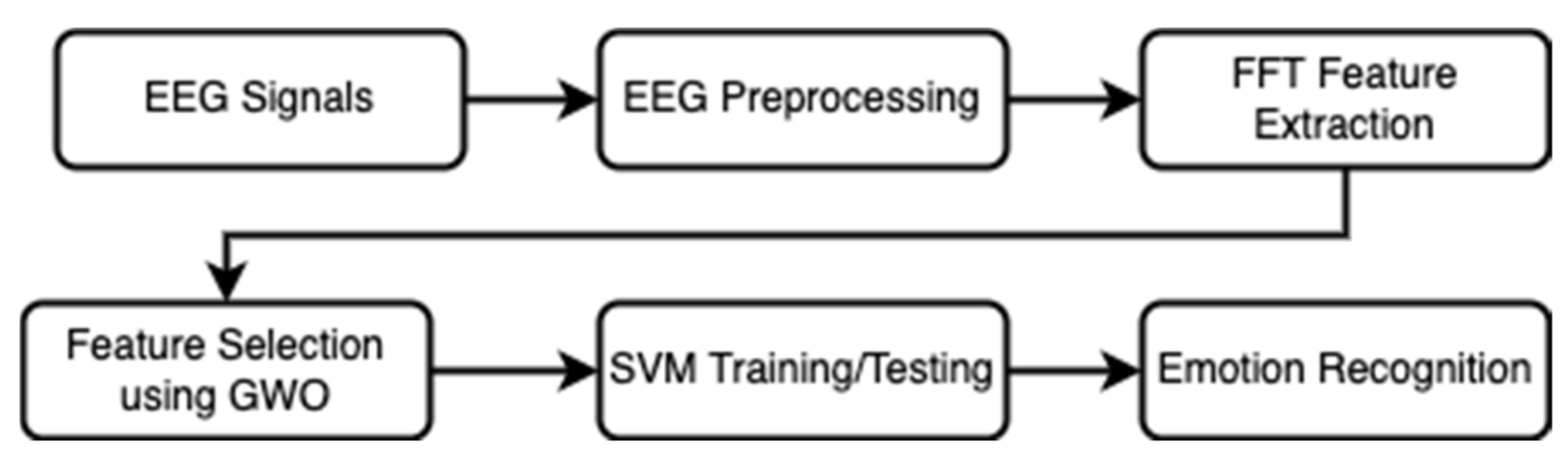

Contributions of This Paper

- We propose a framework for EEG-based emotion recognition that combines grey wolf optimisation (GWO) and support vector machine (SVM) methods.

- We review related work, comparing studies in terms of their highlights, datasets, and reported accuracy.

- We analyse the performance of the proposed framework using MATLAB.

2. Materials and Methods

3. Methodology

3.1. EEG Signal Acquisition

3.2. EEG Preprocessing

3.3. Features and Feature Selection

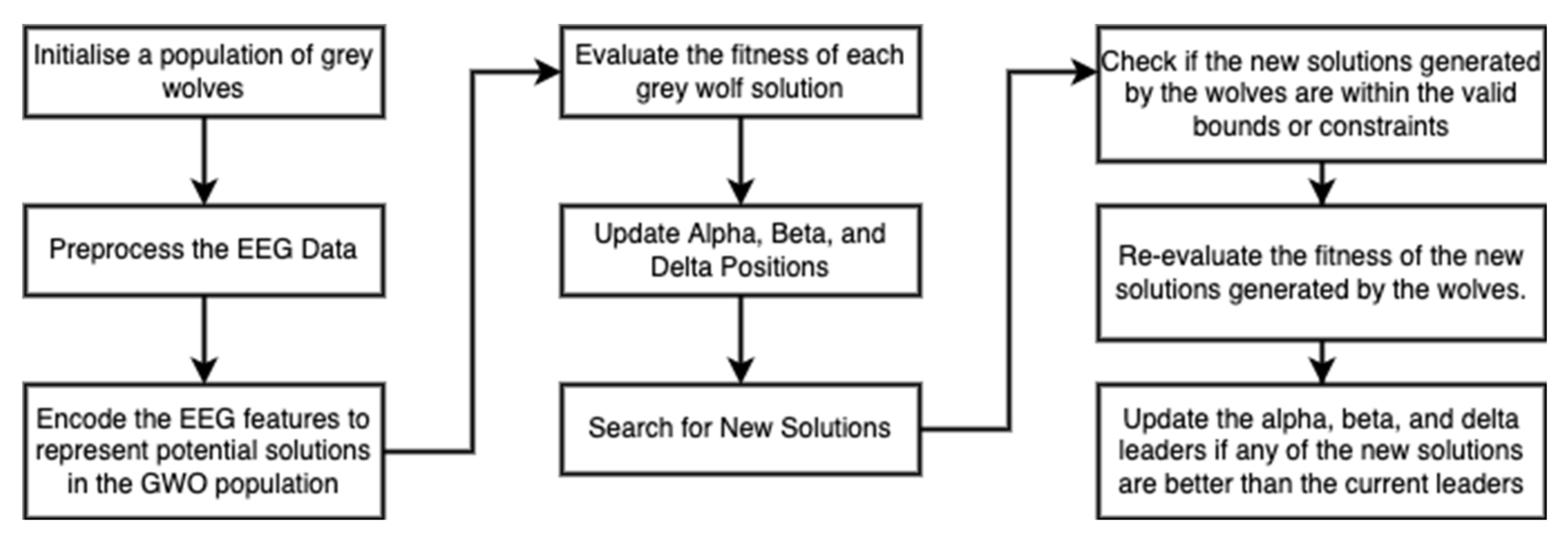

Grey Wolf Optimisation (GWO) Algorithm

- Set the population size, i.e., the number of grey wolves (search agents).

- Initialise the search agents’ positions within the lower and upper bounds of the search space, so that the wolves can begin searching for and encircling the prey. Set the maximum number of iterations and initialise the coefficients used in Equations (1)–(4).

- Evaluate the fitness of each candidate solution; the fitness value indicates how close a wolf is to the prey. The three fittest wolves, the alpha (α), beta (β), and delta (δ) wolves, guide the search, and the remaining wolves adjust their hunting behaviour according to Equations (6)–(8).

- Update the position of each search agent using Equations (8)–(11).

- Repeat the fitness-evaluation and position-update steps until the wolves close in on the prey, i.e., the best solution.

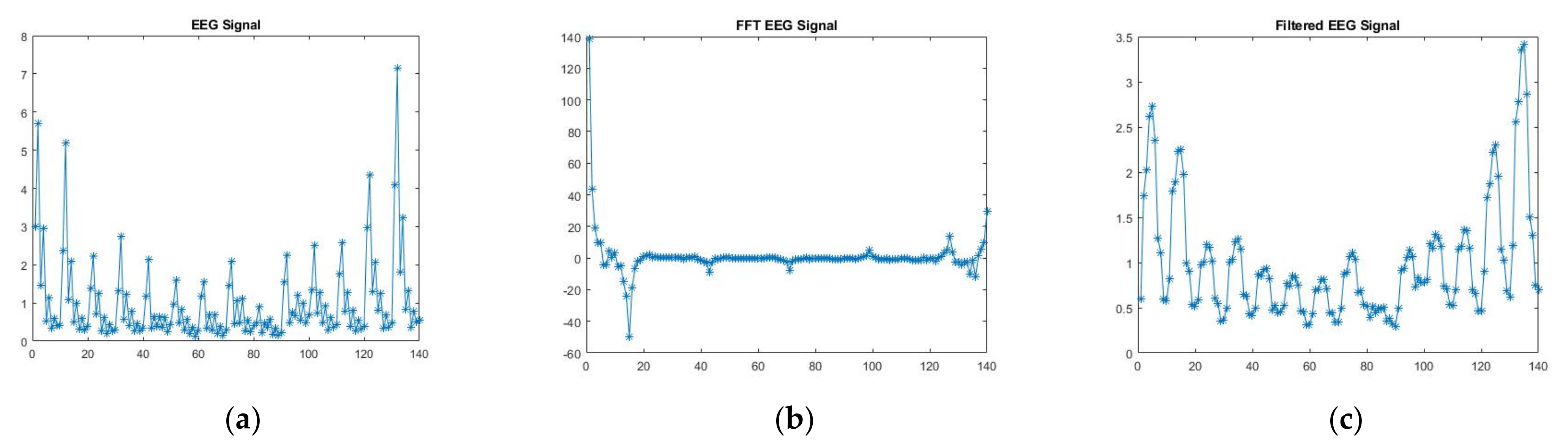

- The algorithm terminates once a predetermined maximum number of iterations has been completed.

The Input_EEG Signal, the FFT_EEG Signal, and the processed Clean_EEG Signal are depicted in Figure 3a–c.
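The GWO steps above can be illustrated with the following minimal Python/NumPy sketch of the standard GWO formulation (Mirjalili et al., 2014). It is not the authors' MATLAB implementation: it assumes a continuous minimisation problem with box bounds, and the function name `gwo`, its parameters (`n_agents`, `max_iter`, `seed`), and the sphere test function are hypothetical. The updates use the usual rules A = 2a·r1 − a, C = 2·r2, D = |C·X_leader − X|, with the new position taken as the mean of the three leader-guided moves; how these correspond to the paper's Equations (1)–(11) is an assumption.

```python
import numpy as np

def gwo(fitness, dim, lb, ub, n_agents=20, max_iter=100, seed=0):
    """Minimise `fitness` over the box [lb, ub]^dim (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    lb = np.broadcast_to(np.asarray(lb, dtype=float), (dim,))
    ub = np.broadcast_to(np.asarray(ub, dtype=float), (dim,))

    # Initialise the pack at random positions inside the search-space bounds
    X = lb + rng.random((n_agents, dim)) * (ub - lb)
    scores = np.array([fitness(x) for x in X])

    # Best-so-far alpha, beta and delta wolves (lowest fitness = closest to prey)
    top = np.argsort(scores)[:3]
    leaders, leader_scores = X[top].copy(), scores[top].copy()

    for t in range(max_iter):
        a = 2.0 - 2.0 * t / max_iter  # decreases linearly from 2 towards 0

        # Each wolf moves towards alpha, beta and delta; average the three moves
        X_new = np.zeros_like(X)
        for leader in leaders:
            r1 = rng.random(X.shape)
            r2 = rng.random(X.shape)
            A = 2.0 * a * r1 - a        # |A| > 1 explores, |A| < 1 attacks
            C = 2.0 * r2
            D = np.abs(C * leader - X)  # distance between wolf and this leader
            X_new += leader - A * D
        X = np.clip(X_new / 3.0, lb, ub)
        scores = np.array([fitness(x) for x in X])

        # Keep the three best positions seen so far as the new leaders
        pool_X = np.vstack([leaders, X])
        pool_s = np.concatenate([leader_scores, scores])
        keep = np.argsort(pool_s)[:3]
        leaders, leader_scores = pool_X[keep], pool_s[keep]

    return leaders[0], float(leader_scores[0])

# Usage: minimise the sphere function, whose optimum is 0 at the origin
best_x, best_f = gwo(lambda x: float(np.sum(x ** 2)), dim=5, lb=-10.0, ub=10.0)
print(best_x, best_f)
```

Tracking the leaders as the best positions seen so far means the returned solution never gets worse between iterations. For feature selection, as in the binary GWO variants in the literature table, the positions would typically be thresholded into a feature mask and the fitness would be, for example, a classifier's validation error; that wrapper is not shown here.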

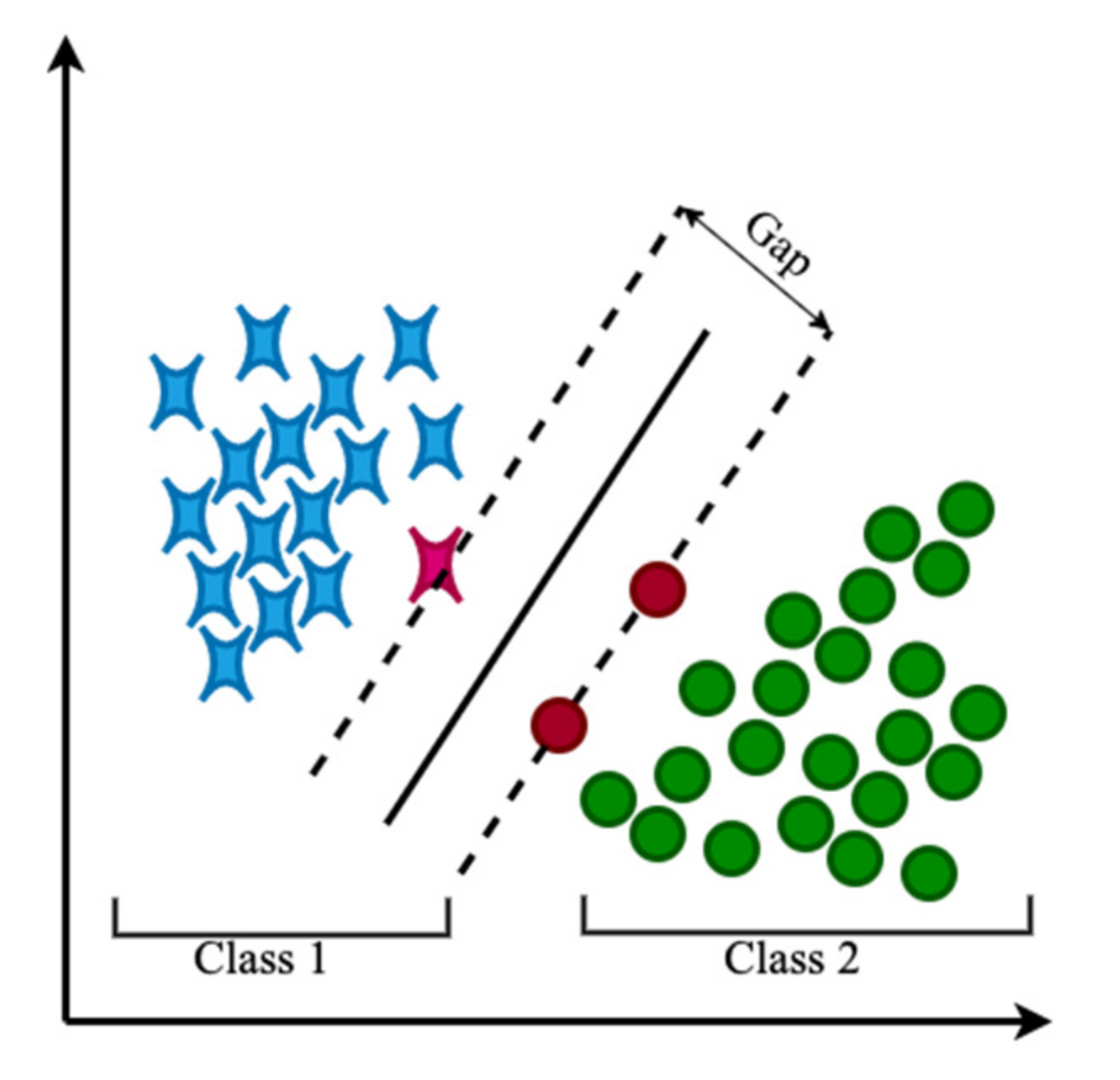

3.4. Classification

4. Results and Discussion

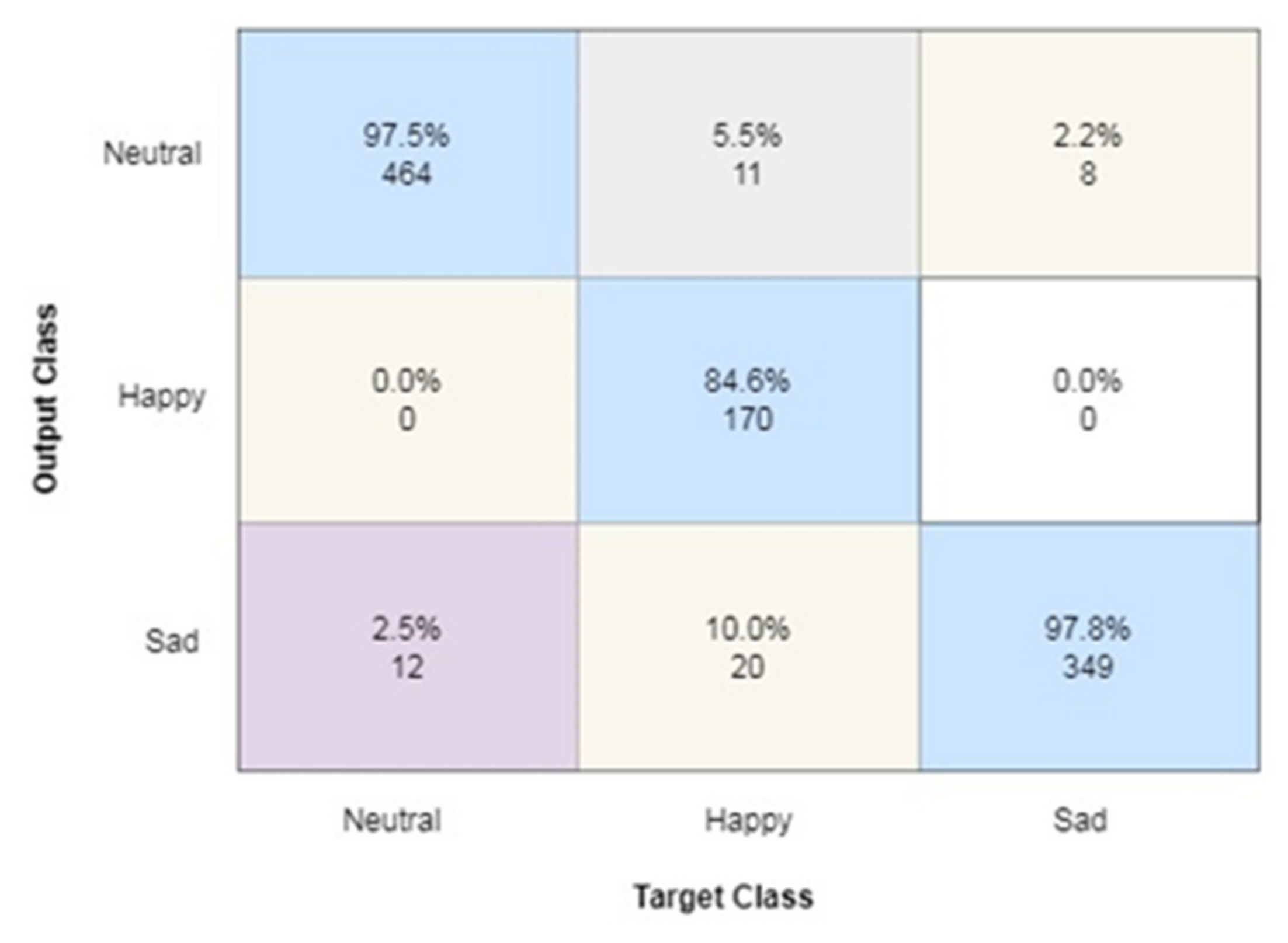

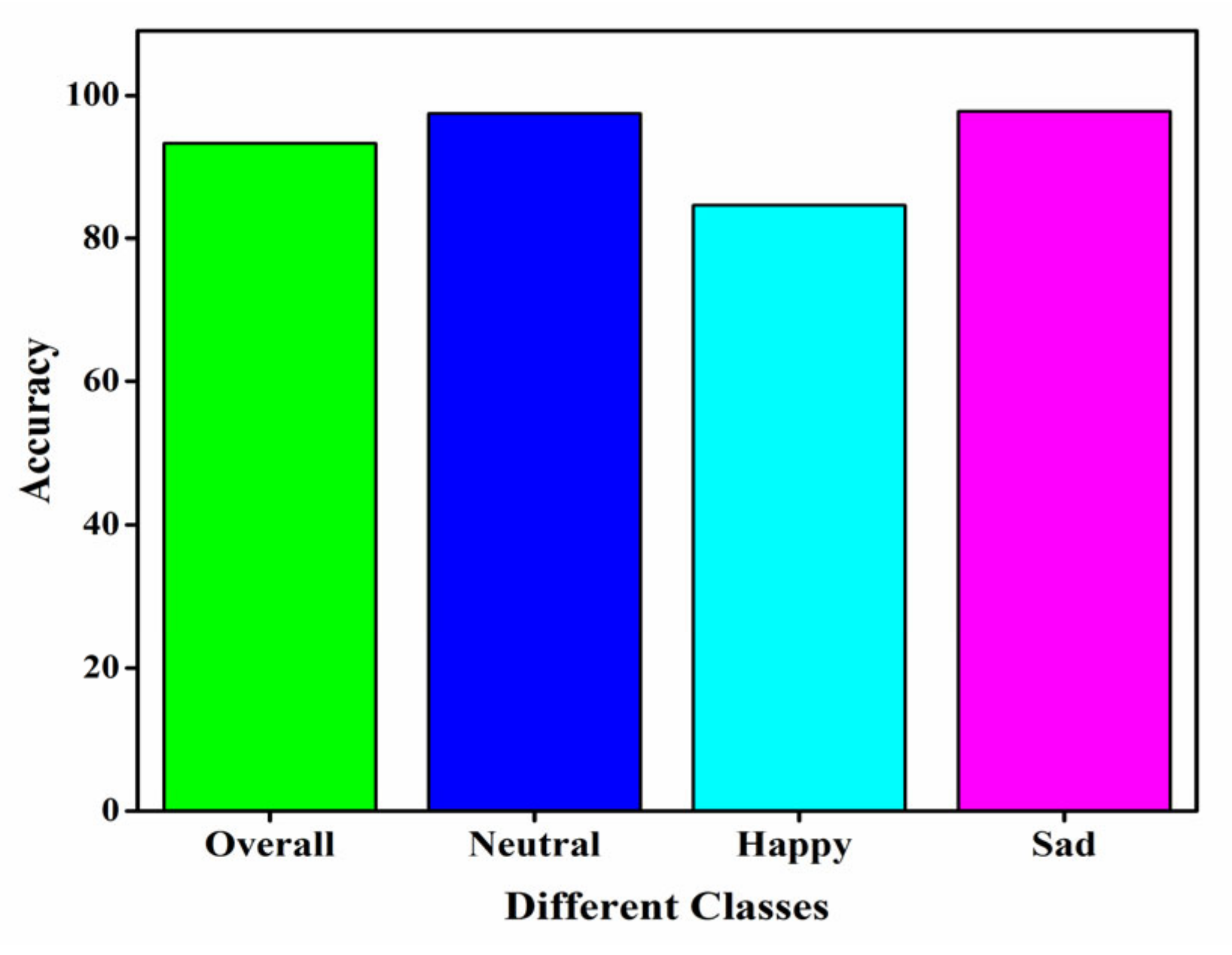

Performance Metrics

- Accuracy: the percentage of correct predictions out of the total number of predictions.

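- Confusion matrix: a confusion matrix, also known as an error matrix, is a table used in machine learning and statistics to summarise the performance of a classifier. Each row corresponds to an actual class and each column to a predicted class (some tools use the transposed convention), so correct classifications lie on the diagonal and misclassifications lie off it.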
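Both metrics can be computed together, as in the minimal sketch below: accuracy equals the sum of the confusion matrix's diagonal divided by the total number of predictions, times 100. The helper names, the emotion labels, and the six predictions are hypothetical, and the sketch assumes the rows = actual, columns = predicted convention.

```python
import numpy as np

def confusion_matrix(y_true, y_pred, labels):
    """Count predictions: row = actual class, column = predicted class."""
    index = {label: i for i, label in enumerate(labels)}
    cm = np.zeros((len(labels), len(labels)), dtype=int)
    for actual, predicted in zip(y_true, y_pred):
        cm[index[actual], index[predicted]] += 1
    return cm

def accuracy(cm):
    """Percentage of correct predictions (diagonal) among all predictions."""
    return 100.0 * np.trace(cm) / cm.sum()

# Hypothetical labels for six test trials
labels = ["happy", "sad", "neutral"]
y_true = ["happy", "sad", "neutral", "happy", "sad", "neutral"]
y_pred = ["happy", "sad", "happy", "happy", "neutral", "neutral"]

cm = confusion_matrix(y_true, y_pred, labels)
print(cm)
print(f"Accuracy: {accuracy(cm):.2f}%")  # → Accuracy: 66.67% (4 of 6 correct)
```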

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Jaswal, R.A.; Dhingra, S. EEG Signal Accuracy for Emotion Detection Using Multiple Classification Algorithm. Harbin Gongye Daxue Xuebao/J. Harbin Inst. Technol. 2022, 54, 1–12.

- Jaswal, R.A.; Dhingra, S.; Kumar, J.D. Designing Multimodal Cognitive Model of Emotion Recognition Using Voice and EEG Signal. In Recent Trends in Electronics and Communication; Springer: Singapore, 2022; pp. 581–592.

- Yildirim, E.; Kaya, Y.; Kiliç, F. A channel selection method for emotion recognition from EEG based on swarm-intelligence algorithms. IEEE Access 2021, 9, 109889–109902.

- Ghane, P.; Hossain, G.; Tovar, A. Robust understanding of EEG patterns in silent speech. In Proceedings of the 2015 National Aerospace and Electronics Conference (NAECON), Dayton, OH, USA, 15–19 June 2015; pp. 282–289.

- Cîmpanu, C.; Ferariu, L.; Ungureanu, F.; Dumitriu, T. Genetic feature selection for large EEG data with commutation between multiple classifiers. In Proceedings of the 2017 21st International Conference on System Theory, Control and Computing (ICSTCC), Sinaia, Romania, 19–21 October 2017; pp. 618–623.

- Houssein, E.H.; Hamad, A.; Hassanien, A.E.; Fahmy, A.A. Epileptic detection based on whale optimization enhanced support vector machine. J. Inf. Optim. Sci. 2019, 40, 699–723.

- Marzouk, H.F.; Qusay, O.M. Grey Wolf Optimization for Facial Emotion Recognition: Survey. J. Al-Qadisiyah Comput. Sci. Math. 2023, 15, 1–11.

- Alyasseri, Z.A.; Alomari, O.A.; Makhadmeh, S.N.; Mirjalili, S.; Al-Betar, M.A.; Abdullah, S.; Ali, N.S.; Papa, J.P.; Rodrigues, D.; Abasi, A.K. EEG channel selection for person identification using binary grey wolf optimizer. IEEE Access 2022, 10, 10500–10513.

- Shahin, I.; Alomari, O.A.; Nassif, A.B.; Afyouni, I.; Hashem, I.A.; Elnagar, A. An efficient feature selection method for Arabic and English speech emotion recognition using Grey Wolf Optimizer. Appl. Acoust. 2023, 205, 109279.

- Algarni, M.; Saeed, F.; Al-Hadhrami, T.; Ghabban, F.; Al-Sarem, M. Deep learning-based approach for emotion recognition using electroencephalography (EEG) signals using Bi-directional long short-term memory (Bi-LSTM). Sensors 2022, 22, 2976.

- Li, S.; Lyu, X.; Zhao, L.; Chen, Z.; Gong, A.; Fu, Y. Identification of emotion using electroencephalogram by tunable Q-factor wavelet transform and binary gray wolf optimization. Front. Comput. Neurosci. 2021, 15, 732763.

- Jasim, S.S.; Abdul Hassan, A.K.; Turner, S. Driver Drowsiness Detection Using Gray Wolf Optimizer Based on Voice Recognition. Aro-Sci. J. Koya Univ. 2022, 10, 142–151.

- Ghosh, R.; Sinha, N.; Biswas, S.K.; Phadikar, S. A modified grey wolf optimization based feature selection method from EEG for silent speech classification. J. Inf. Optim. Sci. 2019, 40, 1639–1652.

- Yin, Y.; Zheng, X.; Hu, B.; Zhang, Y.; Cui, X. EEG emotion recognition using fusion model of graph convolutional neural networks and LSTM. Appl. Soft Comput. 2021, 100, 106954.

- Padhmashree, V.; Bhattacharyya, A. Human emotion recognition based on time–frequency analysis of multivariate EEG signal. Knowl. Based Syst. 2022, 238, 107867.

- Ozdemir, M.A.; Degirmenci, M.; Izci, E.; Akan, A. EEG-based emotion recognition with deep convolutional neural networks. Biomed. Eng./Biomed. Tech. 2021, 66, 43–57.

- Koelstra, S.; Muhl, C.; Soleymani, M.; Lee, J.S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A database for emotion analysis using physiological signals. IEEE Trans. Affect. Comput. 2011, 3, 18–31.

| Author | Year | Highlights | Dataset | Accuracy (%) |
|---|---|---|---|---|
| Yildirim et al. [7] | 2021 | Extracted 736 features based on spectral power and phase-locking values and used swarm intelligence (SI) algorithms to select the most important ones for emotion identification. | EEG | 56.27–60.29 |
| Alyasseri et al. [8] | 2022 | Proposed BGWO-SVM for EEG channel selection in person identification, using 23 sensors and 5 auto-regressive coefficients. | EEG motor imagery dataset | 94.13 |
| Shahin et al. [9] | 2023 | Proposed GWO-KNN, an intelligent feature selection method combining the bio-inspired grey wolf optimiser (GWO) with the K-nearest neighbour (KNN) classifier; used it to classify emotions and compared it with other methods. | RAVDESS | 95 |
| Algarni et al. [10] | 2022 | Proposed a deep learning approach based on bidirectional long short-term memory (Bi-LSTM) to improve the accuracy of EEG-based emotion recognition. | DEAP | 99.45 (valence), 96.87 (arousal), 99.68 (liking) |
| Li et al. [11] | 2021 | Proposed combining binary grey wolf optimisation (BGWO) with an SVM for EEG-based emotion recognition. | DEAP | 90.48 |
| Jasim et al. [12] | 2022 | Proposed GWOANN, a GWO-based approach to voice recognition using mel-frequency cepstral coefficients (MFCCs) and linear predictive coefficients (LPCs). | Voice dataset | 86.96 (MFCCs), 90.05 (LPCs) |
| Ghosh et al. [13] | 2019 | Applied a modified GWO-based feature selection method to EEG data for silent speech classification, categorising five imagined vowels (a, e, i, o, u). | EEG | 65 |
| Yin et al. [14] | 2021 | Proposed a novel deep learning method for detecting human emotions that fuses graph convolutional neural networks and LSTM. | DEAP | 90.45 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jaswal, R.A.; Dhingra, S. A Grey Wolf Optimisation-Based Framework for Emotion Recognition on Electroencephalogram Data. Eng. Proc. 2023, 59, 214. https://doi.org/10.3390/engproc2023059214
