- Article

Integrating Deep Learning Nodes into an Augmented Decision Tree for Automated Medical Coding

Spoorthi Bhat, Veda Sahaja Bandi, Joshua Carberry, + 1 author

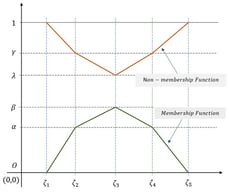

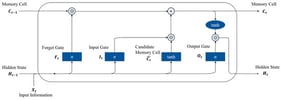

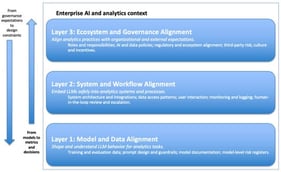

Accurate assignment of International Classification of Diseases (ICD) codes is essential for healthcare analytics, billing, and clinical research. However, manual coding remains time-consuming and error-prone due to the scale and complexity of the ICD taxonomy. While hierarchical deep learning approaches have improved automated coding, their deployment across large taxonomies raises scalability and efficiency concerns. To address these limitations, we introduce the Augmented Decision Tree (ADT) framework, which integrates deep learning with symbolic rule-based logic for automated medical coding. ADT employs an automated lexical screening mechanism to dynamically select the most appropriate modeling strategy for each decision node, thereby minimizing manual configuration. Nodes with high keyword distinctiveness are handled by symbolic rules, while semantically ambiguous nodes are assigned to deep contextual models fine-tuned from PubMedBERT. This selective design eliminates the need to train a deep learning model at every node, significantly reducing computational cost. A case study demonstrates that this hybrid and adaptive ADT approach supports scalable and efficient ICD coding. Experimental results show that ADT outperforms a pure decision tree baseline and achieves accuracy comparable to that of a full deep learning-based decision tree, while requiring substantially less training time and computational resources.
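The lexical screening step described above can be illustrated with a minimal sketch. The abstract does not specify how keyword distinctiveness is measured, so the scoring rule here (for each child code, the best single keyword's in-class document frequency minus its highest document frequency in any sibling, with the minimum taken across children), the function names `distinctiveness` and `choose_strategy`, and the 0.8 threshold are all assumptions made for illustration. They are not the authors' method. The deep model branch is only named, not trained.

```python
import re
from collections import Counter


def tokenize(text):
    """Return the set of lowercase alphabetic tokens in a note."""
    return set(re.findall(r"[a-z]+", text.lower()))


def distinctiveness(samples_by_child):
    """Hypothetical node-level score in [0, 1].

    samples_by_child maps each child code of a decision node to a
    non-empty list of example note texts. For every child we look for
    the single keyword that best separates it from its siblings, then
    return the weakest such separation across all children.
    """
    doc_freq = {}
    for child, texts in samples_by_child.items():
        counts = Counter()
        for text in texts:
            counts.update(tokenize(text))
        # Fraction of this child's notes that contain each token.
        doc_freq[child] = {tok: n / len(texts) for tok, n in counts.items()}

    scores = []
    for child, freqs in doc_freq.items():
        siblings = [f for c, f in doc_freq.items() if c != child]
        best = 0.0
        for tok, p_in in freqs.items():
            p_out = max((s.get(tok, 0.0) for s in siblings), default=0.0)
            best = max(best, p_in - p_out)
        scores.append(best)
    return min(scores)


def choose_strategy(samples_by_child, threshold=0.8):
    """Route a node to symbolic rules or to a deep contextual model.

    The threshold value is an assumption, not taken from the article.
    """
    if distinctiveness(samples_by_child) >= threshold:
        return "rules"
    return "deep_model"  # e.g. a classifier fine-tuned from PubMedBERT


clear_node = {
    "fracture": ["fracture of left femur", "closed fracture of wrist"],
    "burn": ["second degree burn of hand", "burn of forearm"],
}
ambiguous_node = {
    "type1": ["diabetes with insulin dependence since childhood",
              "diabetes managed with insulin"],
    "type2": ["diabetes managed with insulin and metformin",
              "diabetes with diet control"],
}

print(choose_strategy(clear_node))
print(choose_strategy(ambiguous_node))
```

In this toy data, each child of the first node has a keyword that appears in all of its notes and in none of its sibling's, so the node is routed to rules. The second node has no such keyword and falls through to the deep model, which is the only case that would incur training cost.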

12 February 2026

![Generating FGDPs from a doctor’s notes (adapted from [25]).](https://mdpi-res.com/cdn-cgi/image/w=470,h=317/https://mdpi-res.com/analytics/analytics-05-00011/article_deploy/html/images/analytics-05-00011-g001-550.jpg)