1. Introduction

Supply chains encompass manufacturing procurement, material handling, transportation, and distribution [1]. Demand propagates upstream as an information flow, and supply responds in the form of a commodity flow. However, supply cannot immediately match demand because of the physical limitations involved in this process. For example, factories can produce only a certain number of items, trucks cannot exceed speed limits, and the capacity and number of container ships are constrained. Lead times might also be several weeks or even months in global supply chains. In this regard, inventory management is essential, since inventory acts as a buffer against uncertainty and ensures business continuity across supply chains [2].

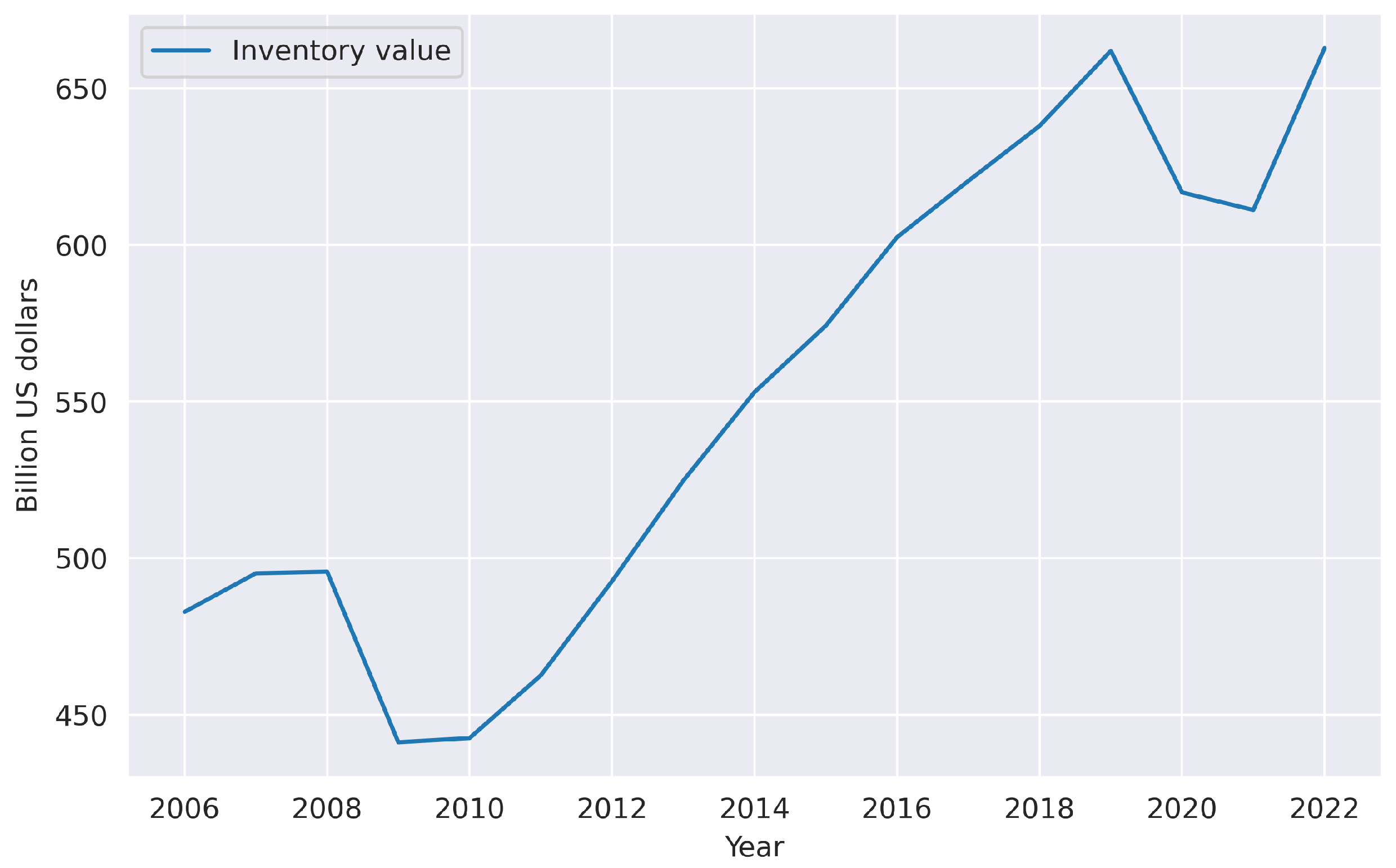

The importance of managing inventory is also illustrated by the US Department of Commerce, which regularly keeps track of inventory value held in different parts of the supply chain. In April 2022, USD 2.27 trillion was held in inventory across all types of business [3]. The retail industry alone accounted for USD 662 billion, approximately 17% of which was held by e-commerce [4]. As Figure 1 shows, these values recovered from the pandemic shocks and are on the rise.

Humanity has engaged in inventory management, at least in some primitive form, since it started gathering and stockpiling the planet’s resources. However, the rigorous approach of prioritizing items for management attention can be dated back to Joseph Moses Juran [5]. Since a typical inventory system can contain an immense number of items with unique characteristics such as price, demand, and physical properties, analyzing them individually would exceed any manager’s capacity for effort and attention. That is why retailers frequently use stock-keeping-unit (SKU) segmentation to guide themselves in planning, buying, and allocating products [6]. The common purposes for SKU segmentation include:

Selecting an appropriate inventory control policy [7,8].

Understanding the demand patterns [9].

Optimal allocation under the limited storage capacity [10].

Selecting an appropriate supply-chain strategy [9].

Evaluating the environmental impacts of inventory throughout its life cycle [11].

Every customer sees SKUs on a daily basis as barcodes in grocery stores or as items on e-commerce platforms. A typical retailer carries 10,000 SKUs [6]. For giants such as Walmart, the number exceeds 75 million [12]. However, the e-commerce industry typically operates with an even more extensive catalog of items. A genuinely gargantuan case is the Amazon marketplace, which is home to more than 350 million SKUs [13]. Considering the extension of the supply chain directly into handheld devices through the adoption of digital technologies, and consumers’ impatience, the future scale of the e-commerce industry can be expected to skyrocket.

Besides the volume, SKU data can also be distinguished by high dimensionality, which means that SKUs can be segmented on the basis of various attributes. The most traditional way is the ABC classification method, which segments SKUs on the basis of sales volume. The underlying theory of this segmentation method is the 20–80 Pareto principle. The principle suggests that 20% of SKUs generate approximately 80% of total revenue. Therefore, this 20% should constitute the category that deserves the most attention from management. ABC segmentation uses only one dimension to segment SKUs, which, on the one hand, facilitates implementation [14]. On the other hand, SKUs within a segment can have substantially different characteristics. For example, two SKUs may contribute similarly to revenue, but one may have a high unit price and low demand, and the other a low unit price and high demand. It would be wrong to manage these SKUs under the same strategy. The physical properties of the SKU are another example. ABC segmentation does not consider perishability, which is essential for grocery retail. The weight and height of the loaded pallet can be crucial if the business operates under constrained storage space, which is the case for retail chains in dense urban areas [10].
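To make the single-dimension nature of ABC classification concrete, the following is a minimal pandas sketch. The column names (`sku`, `revenue`) and the cumulative-share cut-offs of 80% and 95% are assumptions chosen for the example, not values taken from this paper.

```python
import pandas as pd


def abc_classify(df, value_col="revenue", a_cut=0.80, b_cut=0.95):
    """Label each SKU A, B, or C by its cumulative share of total revenue.

    The cut-offs are illustrative assumptions and would be tuned in practice.
    """
    ranked = df.sort_values(value_col, ascending=False).copy()
    share = ranked[value_col] / ranked[value_col].sum()
    # Cumulative share *before* each SKU is added, so the SKU that crosses
    # a threshold still lands in the higher class.
    ranked["cum_share_before"] = share.cumsum() - share
    ranked["abc"] = "C"
    ranked.loc[ranked["cum_share_before"] < b_cut, "abc"] = "B"
    ranked.loc[ranked["cum_share_before"] < a_cut, "abc"] = "A"
    return ranked


# Hypothetical toy data: five SKUs with very uneven revenue.
skus = pd.DataFrame({
    "sku": ["s1", "s2", "s3", "s4", "s5"],
    "revenue": [600.0, 250.0, 90.0, 40.0, 20.0],
})
result = abc_classify(skus)
```

Note that the only input is revenue: two SKUs with equal revenue receive the same label regardless of unit price, demand, or physical properties, which is the limitation discussed above.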

Given the data volumes and the multitude of potentially important dimensions to consider, it becomes computationally impossible to individually manage each SKU. In this regard, the prominent question arises: “How to aggregate SKUs so that the resulting management policies are close enough to the case where each SKU is considered individually?” Our paper aims to answer this long-standing question by taking advantage of state-of-the-art big-data technologies and automated machine-learning (AutoML) approaches [15].

The remainder of this paper is organized as follows. Section 2 presents the related work and highlights the research gap. Section 3 introduces the methodology at a high level and provides the rationale behind the choice of the individual components. Section 4 demonstrates how the proposed framework functions on the basis of a real-world dataset. Section 5 highlights the potential advantages of the proposed framework, and discusses several use cases along with managerial implications. Lastly, Section 6 summarizes the paper and suggests promising directions for future research.

2. Related Work and Novelty

Considering the size and dimensionality of SKU data, and the market pressure on businesses to make critical decisions quickly, it is frequently not computationally feasible to treat every item individually. An alternative would be to define groups while taking into account all product features that notably influence the operational, tactical, or strategic problem of interest. These features can vary depending on the problem of interest, and go beyond the revenue and demand attributes used in classical ABC analysis. Clustering in particular and unsupervised machine learning in general are the most natural approaches to creating groups of SKUs that share similar characteristics [16].

Early attempts to group SKUs using cluster analysis go back to Ernst and Cohen, who proposed the operations-related groups method. The method is similar to factor analysis and allows for considering a full range of operationally significant item attributes. It was initially designed to assist an automobile manufacturer with developing inventory control policies [7]. Shortly after that, Srinivasan and Moon introduced a hierarchical-clustering-based methodology to facilitate inventory control policies in supply chains [17]. Hierarchical clustering remains a popular technique. For instance, a recent study proposed a hierarchical segmentation for spare-part inventory management [18]. Even though such an approach is simple to implement and interpret, hierarchical clustering has several fundamental shortcomings. First, the method does not scale well because of its computational complexity compared to more efficient algorithms such as K-means. Second, hierarchical clustering is biased towards large clusters and tends to break them. Third, in hierarchical clustering, the order of the data impacts the final results. Lastly, the method is sensitive to noise and outliers [19].

Currently, K-means is the most popular clustering technique [20]. The algorithm is appealing in many aspects, including computational and memory efficiency, and interpretability in low-dimensional cases. The notable applications to SKU segmentation include Canneta et al., who combined K-means with self-organizing maps to cluster products across multiple dimensions [21]. Wu et al. applied K-means along with correlation analysis to identify primary indicators to predict demand patterns [22]. Egas and Masel used K-means clustering to reduce the time required to derive the inventory-control parameters in a large-scale inventory system. The proposed method outperformed a demand-based assignment strategy [23]. Ozturk et al. used K-means to obtain SKU clusters. Then, an artificial neural network was applied to forecast demand for each individual cluster [24].

It is also essential to highlight the applications of fuzzy C-means to SKU segmentation. Fuzzy C-means is a form of clustering in which a cluster is represented by a fuzzy set. Therefore, each data point can belong to more than one cluster. Notable applications include multicriteria ABC analysis using fuzzy C-means clustering [25]. A recent study by Kucukdeniz and Sonmez proposed an integrated order-picking strategy based on SKU segmentation through fuzzy C-means. In this study, fuzzy clustering was applied to consider the order frequency of SKUs and their weights while designing the warehouse layout [26]. Fuzzy C-means is an interesting alternative to K-means. However, the fact that each SKU can belong to more than one cluster is a disadvantage in the business context. Since businesses must quickly and confidently make critical yes/no decisions, the fuzziness of SKU segments does not provide any helpful flexibility for decision making at scale, and introduces unnecessary layers of complexity. Additionally, the fuzzy C-means method has the same drawbacks as those of classical K-means. The drawbacks include the a priori specification of the number of clusters, poor performance on datasets that contain clusters with unequal sizes or densities, and sensitivity to noise and outliers [27].

In order to overcome the aforementioned shortcomings, preprocessing and dimensionality reduction techniques such as PCA are applied to SKU data prior to clustering. For example, one study applied a constrained clustering method reinforced with principal component analysis. According to that research, the proposed method can provide significant compactness among item clusters [28]. Another study took advantage of PCA and K-means to segment SKUs for recommendation purposes [29]. In recent work, Bandyopadhyay et al. used PCA prior to K-means to achieve the effective segmentation of both customers and SKUs in the context of the fashion e-commerce industry [29].

Table 1 lists the aforementioned clustering-based approaches to SKU segmentation and groups them in accordance with the utilized method. Even though the combination of K-means and PCA helps in overcoming some critical problems such as sensitivity to noise and multicollinearity, it does not address the parametrization problem, namely, the a priori specification of the number of clusters. Moreover, parametrization becomes even more sophisticated because the potential user must choose the number of principal components for PCA. The problem of parametrization and model finetuning can be extremely tedious and time-consuming in real-world applications. That is why our work attempts to close the research gap by proposing a framework that leverages AutoML for the automated cluster analysis of SKUs.

3. Materials and Methods

Summarizing the related work, we represent the data pipeline in the following flexible form: data storage and retrieval, preprocessing and standardization, dimensionality reduction (PCA), and clustering (K-means). Figure 2 shows an example of such a pipeline visualized using Orange, a Pythonic data mining toolbox [30]. The pipeline is flexible and capable of segmenting SKUs in the unsupervised learning setting, which is crucial since the class values corresponding to an a priori partition of the SKUs are not known [31].

The popularity of K-means can be justified by the fact that more sophisticated clustering techniques such as mean shift [32] and density-based spatial clustering of applications with noise (DBSCAN) [33] do not allow one to explicitly set the number of clusters (k in K-means). The finetuning of these algorithms instead requires the nontrivial selection of the “window size” for mean shift and the “density reachability” for DBSCAN. Additionally, the algorithms do not perform well if SKU data contain convex clusters with significant differences in densities.

The incorporation of PCA, on the other hand, can improve the clustering results for two main reasons. First, SKU features can share mutual information and correlate with each other. Second, it is natural for SKU data to contain some portion of noise due to measurement or recording errors. On that basis, PCA can be helpful as a tool for decorrelation and noise reduction [8].

3.1. Data Storage

The underlying data layout, including file format and schema, is an essential aspect in obtaining high computational performance and storage efficiency. The proposed framework for automated cluster analysis works on top of the Apache Parquet file format. Apache Parquet is an efficient, structured, compressed, binary file format that stores data in a column-oriented way instead of by row [34]. As a result, the values in each column are physically stored in contiguous memory locations, which provides the following crucial benefits:

Columnwise compression is space-efficient because compression techniques specific to a data type can be applied at the column level.

Query processing is more efficient because the values of each column are stored together: the query engine can fetch only the column values it needs, skipping the columns that are not required for a particular query.

Different encoding techniques can be applied to different columns, drastically reducing storage requirements without sacrificing query performance [35].

As a result, Parquet provides convenient and space-efficient data compression and encoding schemes, with enhanced performance when operating on complex multidimensional and multitype data.
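The column-pruning benefit can be illustrated with a short pandas sketch. The helper name `load_sku_features`, the file name, and the column names are hypothetical; `pandas.read_parquet` itself needs a Parquet engine such as pyarrow or fastparquet to be installed.

```python
import pandas as pd


def load_sku_features(path, feature_columns):
    """Read only the requested columns from a Parquet file.

    Because Parquet is column-oriented, the reader can skip every column
    that is not listed instead of scanning whole rows.
    """
    return pd.read_parquet(path, columns=list(feature_columns))


# Hypothetical usage; the file and column names are placeholders.
# features = load_sku_features("skus.parquet", ["unit_price", "pallet_weight"])
```

Restricting the read to the numeric features used for clustering keeps memory usage proportional to the selected columns rather than to the full SKU record.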

3.2. Feature Scaling

Feature scaling, also known as data normalization, is an essential step in data preprocessing. Machine-learning techniques such as K-means use distances between data points to determine their similarity, which renders them especially sensitive to feature scaling. The primary role of feature scaling in ML pipelines is to standardize the independent variables so that they are in a fixed range. If feature scaling is not performed, K-means weighs variables with greater absolute values more heavily, severely undermining the clustering outcome [36].

The proposed framework for automated cluster analysis uses min–max scaling, the most straightforward method, which scales features so that they are in the range of [0, 1]. The min–max scaling is performed according to the following formula:

$$x' = \frac{x - \min(x)}{\max(x) - \min(x)},$$

where $x$ is an original value and $x'$ is the normalized one.
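As a worked illustration, min–max scaling subtracts each feature's minimum and divides by its range. The NumPy sketch below applies this column by column; the handling of constant columns is an assumption added for robustness, and scikit-learn's `MinMaxScaler` offers equivalent functionality.

```python
import numpy as np


def min_max_scale(X):
    """Scale each column of X to [0, 1] via (x - min) / (max - min)."""
    X = np.asarray(X, dtype=float)
    col_min = X.min(axis=0)
    col_range = X.max(axis=0) - col_min
    # Assumption: a constant column carries no information, so it is
    # mapped to 0 instead of dividing by zero.
    col_range[col_range == 0] = 1.0
    return (X - col_min) / col_range


# Two hypothetical features on very different scales.
X = np.array([[10.0, 200.0], [20.0, 400.0], [30.0, 800.0]])
X_scaled = min_max_scale(X)
# First column becomes 0, 0.5, 1; second becomes 0, 1/3, 1.
```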

3.3. Clustering

K-means is the most straightforward clustering method and, as the related work section revealed, is widely adopted for SKU segmentation purposes. K-means splits $n$ observations (SKUs in the context of this study) into $k$ clusters. Formally, given a set of SKUs $(x_1, x_2, \dots, x_n)$, where each SKU is a feature vector, K-means segments the $n$ SKUs into $k$ sets $S = \{S_1, S_2, \dots, S_k\}$ minimizing the within-cluster sum of squares, also known as inertia:

$$\arg\min_{S} \sum_{i=1}^{k} \sum_{x \in S_i} \lVert x - \mu_i \rVert^2,$$

where $\mu_i$ is the mean of the points in $S_i$.
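For concreteness, inertia is the sum of squared Euclidean distances between each observation and the centroid of the cluster it is assigned to. The following NumPy sketch computes it for a toy assignment; the function name and the data are illustrative assumptions.

```python
import numpy as np


def inertia(X, labels, centroids):
    """Within-cluster sum of squared Euclidean distances."""
    X = np.asarray(X, dtype=float)
    centroids = np.asarray(centroids, dtype=float)
    diffs = X - centroids[labels]  # each point minus its own centroid
    return float(np.sum(diffs ** 2))


# Four toy points forming two clusters.
X = np.array([[0.0, 0.0], [0.0, 2.0], [10.0, 0.0], [10.0, 4.0]])
labels = np.array([0, 0, 1, 1])
centroids = np.array([X[labels == j].mean(axis=0) for j in range(2)])
print(inertia(X, labels, centroids))  # 1 + 1 + 4 + 4 = 10.0
```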

The proposed framework for automated cluster analysis incorporates minibatch K-means, a variant of K-means clustering that operates with so-called minibatches to decrease computational time while still attempting to minimize inertia. Minibatches are randomly and iteratively sampled subsets of the input data. This approach significantly reduces the computational budget required to converge to a nearly optimal solution [37]. Algorithm 1 demonstrates the key principle behind minibatch K-means. The algorithm iterates between two crucial steps. In the first step, $b$ observations are sampled randomly to form a minibatch, and each observation in the minibatch is assigned to its nearest centroid. In the second step, the centroids are updated. In contrast to the standard K-means algorithm, the centroid updates are conducted per sample. These two steps are repeated until convergence or until a predetermined stopping criterion is met.

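| Algorithm 1 Minibatch K-means [37]. |
| --- |
| 1: Given: $k$, minibatch size $b$, iterations $t$, data set $X$ |
| 2: Initialize each $c \in C$ with an $x$ picked randomly from $X$ |
| 3: $v \leftarrow 0$ |
| 4: for $i = 1$ to $t$ do |
| 5: $M \leftarrow b$ examples picked randomly from $X$ |
| 6: for $x \in M$ do |
| 7: $d[x] \leftarrow f(C, x)$ ▹ Store the center nearest to $x$ |
| 8: end for |
| 9: for $x \in M$ do |
| 10: $c \leftarrow d[x]$ ▹ Get cached center for this $x$ |
| 11: $v[c] \leftarrow v[c] + 1$ ▹ Update per-center counts |
| 12: $\eta \leftarrow 1 / v[c]$ ▹ Get per-center learning rate |
| 13: $c \leftarrow (1 - \eta)c + \eta x$ ▹ Take gradient step |
| 14: end for |
| 15: end for |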
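The two-step iteration of minibatch K-means (cache the nearest center for each sampled point, then move each center towards its points with a per-center learning rate) can be sketched in NumPy as follows. This is an illustrative reading of the procedure in [37], not the scikit-learn implementation used by the framework; sampling the minibatch with replacement and the `seed` parameter are assumptions.

```python
import numpy as np


def minibatch_kmeans(X, k, b, t, seed=0):
    """Minibatch K-means with per-center learning rates.

    X: (n, m) data, k: number of clusters, b: minibatch size, t: iterations.
    """
    rng = np.random.default_rng(seed)
    X = np.asarray(X, dtype=float)
    # Initialize each center with a randomly picked observation.
    centers = X[rng.choice(len(X), size=k, replace=False)].copy()
    counts = np.zeros(k)
    for _ in range(t):
        # Assumption: the minibatch is drawn with replacement.
        batch = X[rng.integers(0, len(X), size=b)]
        # Step 1: cache the nearest center for every point in the batch.
        dists = ((batch[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        nearest = dists.argmin(axis=1)
        # Step 2: per-sample centroid updates.
        for x, c in zip(batch, nearest):
            counts[c] += 1                # update per-center count
            eta = 1.0 / counts[c]         # per-center learning rate
            centers[c] = (1.0 - eta) * centers[c] + eta * x  # gradient step
    return centers


def assign(X, centers):
    """Assign every observation to its nearest center."""
    X = np.asarray(X, dtype=float)
    d = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return d.argmin(axis=1)
```

Because the learning rate of a center shrinks as 1 over the number of points it has absorbed, each center is the running mean of its initial observation and the points assigned to it so far, which is why the updates stabilize without a separate step-size schedule.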

3.4. Dimensionality Reduction

Observations originating from natural phenomena or industrial processes frequently contain a large number of variables. The multitude of SKU features that can potentially impact operational and strategic decisions is a notable example. Therefore, data analysis pipelines can benefit from dimensionality reduction in one form or another. In general, dimensionality reduction can be defined as the transformation of data into a low-dimensional representation, so that the obtained representation retains meaningful properties and patterns of the original data [38]. Principal component analysis (PCA) is the most widely adopted and theoretically grounded approach to dimensionality reduction. The proposed framework for automated cluster analysis incorporates PCA in order to decompose a multidimensional dataset of SKUs into a set of orthogonal components, such that the set of the obtained orthogonal components has a lower dimension, but is still capable of explaining a maximal amount of variance [39].

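Computation for PCA starts with considering the dataset of $n$ SKUs with $m$ features. The SKU dataset can be represented as the following matrix:

$$X = \begin{pmatrix} x_1 \\ x_2 \\ \vdots \\ x_n \end{pmatrix},$$

where $x_i$ is the $i$th row vector of length $m$. Thus, $X$ is an $n \times m$ matrix whose columns represent features of a given SKU.

The sample means $\bar{x}_j$ and standard deviations $s_j$ can be defined with respect to the columns: $\bar{x}_j = \frac{1}{n}\sum_{i=1}^{n} x_{ij}$ and $s_j = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}\left(x_{ij} - \bar{x}_j\right)^2}$. After that, the matrix can be standardized as follows:

$$Z = (z_{ij}), \quad z_{ij} = \frac{x_{ij} - \bar{x}_j}{s_j},$$

where $Z$ stands for an $n \times m$ matrix.

Covariance matrix $C$ can be defined as $C = \frac{1}{n-1} Z^{\top} Z$. So, we can finally perform a standard diagonalization as follows:

$$C = P^{\top} \Lambda P, \quad \Lambda = \operatorname{diag}(\lambda_1, \lambda_2, \dots, \lambda_m),$$

where eigenvalues $\lambda_i$ are arranged in descending order: $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_m$. Transformation matrix $P$ is the orthogonal eigenvector matrix. The rows of $P$ are the principal components of $Z$, and the value of $\lambda_i$ represents the variance associated with the $i$th principal component [39].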
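The computation described in this subsection (standardize the columns, form the covariance matrix, diagonalize it, and sort the eigenvalues in descending order) can be sketched with NumPy. The function name is hypothetical, the use of the sample (n − 1) normalization is an assumption, and constant features are assumed to be absent. Note that scikit-learn's `PCA`, which the framework uses, centers the data but does not rescale it to unit variance.

```python
import numpy as np


def pca_eig(X, p):
    """Project X onto its first p principal components.

    Returns (scores, components, eigenvalues); the rows of `components`
    are the principal axes, sorted by decreasing variance.
    """
    X = np.asarray(X, dtype=float)
    n = X.shape[0]
    # Standardize columns with the sample standard deviation (ddof=1).
    Z = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    C = (Z.T @ Z) / (n - 1)                # covariance of standardized data
    eigvals, eigvecs = np.linalg.eigh(C)   # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]      # re-sort to descending order
    eigvals = eigvals[order]
    components = eigvecs[:, order].T       # rows are eigenvectors
    scores = Z @ components[:p].T          # low-dimensional representation
    return scores, components, eigvals
```

The variance of each column of `scores` equals the corresponding eigenvalue, so the ratio of the first p eigenvalues to their total indicates how much variance the reduced representation retains.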

3.5. Validation

Since the ground truth is not known, clustering results must be validated on the basis of an internal evaluation metric. Generally, inertia gives some notion of how internally coherent clusters are. However, the metric suffers from several disadvantages. For example, it assumes that clusters are convex and isotropic, which is not always the case with SKUs. Additionally, inertia is not a normalized metric, and Euclidean distances tend to inflate in high-dimensional spaces. Fortunately, the latter can be partially alleviated by PCA [40].

Unsupervised learning in general, and clustering in particular, address a problem that is biobjective by nature. On the one hand, data instances must be partitioned such that points in the same cluster are similar to each other (a property known as cohesion). On the other hand, clusters themselves must be different from each other (a property known as separation). The Silhouette coefficient is explicitly designed to evaluate clustering results taking into account both cohesion and separation. The Silhouette coefficient is calculated for each sample and is composed of two components ($a$ and $b$):

$$s = \frac{b - a}{\max(a, b)},$$

where

$a$ is the mean distance between a sample and all other points within the same cluster (measure of cohesion).

$b$ is the mean distance between a sample and all other points in the next nearest cluster (measure of separation) [41].
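As a worked example, the per-sample Silhouette coefficient, conventionally defined as (b − a) / max(a, b), can be computed directly with NumPy. The function name is hypothetical, and assigning a score of 0 to singleton clusters is a common convention assumed here; the sketch expects at least two clusters.

```python
import numpy as np


def silhouette_samples_np(X, labels):
    """Per-sample Silhouette coefficient using Euclidean distances."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    dist = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2))
    clusters = np.unique(labels)
    scores = np.zeros(len(X))
    for i in range(len(X)):
        same = labels == labels[i]
        n_same = same.sum()
        if n_same == 1:
            continue  # convention: singleton clusters score 0
        # Cohesion: mean distance to the other members of the own cluster
        # (the zero self-distance is excluded through the divisor).
        a = dist[i, same].sum() / (n_same - 1)
        # Separation: mean distance to the nearest other cluster.
        b = min(dist[i, labels == c].mean() for c in clusters if c != labels[i])
        denom = max(a, b)
        scores[i] = 0.0 if denom == 0 else (b - a) / denom
    return scores


# Two tight, well-separated one-dimensional clusters.
X = np.array([[0.0], [1.0], [10.0], [11.0]])
labels = np.array([0, 0, 1, 1])
s = silhouette_samples_np(X, labels)
# For the first point: a = 1, b = (10 + 11) / 2 = 10.5, s = 9.5 / 10.5.
```

Averaging the per-sample values gives the overall Silhouette score, which approaches 1 when clusters are both compact and well separated.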

3.6. Parameter Optimization

Parameter optimization is a common problem in SKU segmentation, and a distinct issue from dimensionality reduction and from solving the clustering problem itself. In the context of unsupervised-learning-based SKU segmentation, parameter optimization is the process of determining the optimal number of clusters in a data set ($k$ in K-means) and the optimal number of principal components ($p$ in PCA) [42]. If appropriate values of $k$ and $p$ are not apparent from prior knowledge of the SKU properties, they must be determined from the data. That is where the elements of AutoML come into play. Grid search, or a parameter sweep, is the traditional way of performing parameter optimization [43]. The method performs an exhaustive search through a specified subset of the parameter space, guided by the Silhouette score.

The proposed framework for automated cluster analysis is built on top of a pipeline that has two hyperparameters to be finetuned, namely, the number of clusters $k \in K$ and the number of principal components $p \in P$. The two sets of hyperparameter values $K$ and $P$ can be combined into a set of ordered pairs $(k, p)$, also known as the Cartesian product $K \times P = \{(k, p) \mid k \in K,\ p \in P\}$. Grid search then trains the pipeline with each pair $(k, p) \in K \times P$ and outputs the settings that achieved the highest Silhouette score.

Grid search suffers from the curse of dimensionality, but since the parameter settings that it evaluates are independent of each other, the parallelization process is straightforward, allowing one to leverage specialized hardware and cloud solutions [44].
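A minimal sketch of such a grid search, built from scikit-learn components, is given below. It is an illustration under stated assumptions rather than the framework's actual code: the function name, the candidate value lists, the `seed` and `n_init` settings, and the choice to compute the Silhouette score in the PCA-reduced space are all assumptions.

```python
from itertools import product

import numpy as np
from sklearn.cluster import MiniBatchKMeans
from sklearn.decomposition import PCA
from sklearn.metrics import silhouette_score
from sklearn.preprocessing import MinMaxScaler


def grid_search_segmentation(X, k_values, p_values, seed=0):
    """Evaluate every (k, p) pair and rank the results by Silhouette score."""
    X_scaled = MinMaxScaler().fit_transform(X)
    results = []
    # Cartesian product of candidate cluster counts and component counts.
    for k, p in product(k_values, p_values):
        reduced = PCA(n_components=p, random_state=seed).fit_transform(X_scaled)
        labels = MiniBatchKMeans(
            n_clusters=k, random_state=seed, n_init=3
        ).fit_predict(reduced)
        score = float(silhouette_score(reduced, labels))
        results.append({"k": k, "p": p, "silhouette": score})
    # Best-scoring configuration first; the full ranking is kept so that
    # alternative solutions remain available to the end user.
    return sorted(results, key=lambda r: r["silhouette"], reverse=True)


# Hypothetical usage with an (n_samples, n_features) array `X`:
# ranked = grid_search_segmentation(X, k_values=range(2, 8), p_values=range(1, 4))
# best = ranked[0]
```

Since each (k, p) evaluation is independent, the loop body could be dispatched to separate workers, which is the parallelization property noted above.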

Implementation

The proposed framework for automated cluster analysis was implemented entirely in Python. The reading of the data from the Apache Parquet file format and all the manipulations with the data structures were performed using Pandas [45], which is optimized for performance, with critical code paths written in low-level programming languages such as Cython and C [46].

The unsupervised learning components, including K-means and PCA, were implemented using scikit-learn, an open-source ML library for Python [47]. The scikit-learn implementation of K-means uses multiple processor cores, benefiting from multiplatform shared-memory multiprocessing execution in Cython. Additionally, data are processed in chunks of 256 samples in parallel, which results in a low memory footprint [48]. The scikit-learn implementation of PCA was based on [49]. The data visualization was performed using Seaborn, a Python data visualization library [50].

5. Discussion

This section discusses our research contributions and the technological advantages of the proposed implementation. It also discusses several use cases and provides managerial implications.

5.1. Research Contributions

The idea of prioritizing items for management attention dates back to the 1950s [5], and the first attempt to cluster SKUs dates back to the 1990s [7]. As time progresses, new clustering techniques are applied to this longstanding problem. Nowadays, the most common techniques include hierarchical clustering, K-means, fuzzy C-means, and K-means combined with PCA.

Even though hierarchical clustering is simple to implement and interpret at the managerial level, it does not scale well because of the computational complexity. The algorithm is also biased towards large clusters and tends to break them even if it leads to suboptimal segmentation. The method is also sensitive to noise and outliers [19].

K-means is appealing in many aspects, including computational and memory efficiency, and interpretability in low-dimensional cases [20]. However, the method requires the number of clusters to be specified in advance. In business applications, the a priori specification of the number of clusters is a severe drawback because it requires an additional effort to parametrize and finetune the model. Additionally, K-means performs poorly on datasets that contain clusters with unequal sizes or densities, and is also sensitive to both noise and outliers [20].

Fuzzy C-means is an alternative to K-means that treats a cluster as a fuzzy set, allowing SKUs to belong to more than one cluster. This approach may have theoretical importance. However, the fact that each SKU can belong to more than one cluster is a clear disadvantage in the business context. Since supply chains operate under pressure from both supply and demand, businesses have to make critical decisions quickly and confidently. Therefore, the fuzziness of SKU segments does not provide any helpful flexibility for decision making at scale, and introduces unnecessary layers of complexity [27].

In order to overcome the mentioned shortcomings, preprocessing and dimensionality reduction techniques such as PCA are applied to SKU data prior to clustering. The incorporation of PCA can improve the clustering results through decorrelation and noise reduction [38]. However, even though the combination of K-means and PCA helps to overcome some critical problems, it further worsens the parametrization and finetuning problem because the potential user must choose the number of principal components and the number of clusters. Our work closes this research gap by proposing to automate parametrization and finetuning. We propose an AutoML-based framework for SKU segmentation that leverages grid search along with the computationally efficient and parallelism-friendly implementations of clustering and dimensionality reduction algorithms. The following subsections shed light on the technological advantages of the proposed solution and managerial implications.

5.2. Technological Contributions

The proposed framework for automated cluster analysis can work with SKU features beyond those used by the classical ABC approach. The framework operates in an unsupervised learning setting, which is crucial since class values corresponding to an a priori partition of the SKUs are not known. The optimal number of clusters in a dataset and the optimal number of principal components are determined through the grid search in a fully automated fashion using the Silhouette score as a performance estimation metric.

In a real-world setting, decision making could be less straightforward and incorporate unquantifiable metrics. In this regard, the critical advantage of the framework is that the grid search examines every possible solution within the search space and ranks them in accordance with the Silhouette score. Therefore, the end user can select a solution that is aligned with some potentially unquantifiable managerial insights, but is not necessarily best according to the performance estimation criteria. Additionally, the framework is equipped with data-visualization capabilities, which give end users the ability to diagnose the segmentation validity, choose among alternative solutions, and effectively communicate results to the critical stakeholders.

The proposed framework is strongly associated with the so-called “no free lunch” theorem. According to the theorem, no algorithm can be superior to every competing one across the domain of all possible problems [52]. In this regard, the question of whether the proposed framework is inferior or superior to a potential alternative is meaningless, since its performance depends on the data generation mechanism and the distribution of feature values. Nevertheless, the following properties should be highlighted as the indisputable practical advantages of the proposed approach:

Automatism. Such labor-intensive and time-consuming steps as parameter optimization and finetuning are fully automated and do not require the participation of data scientists or other human experts.

Parallelism. The proposed framework for automated cluster analysis incorporates minibatch K-means, a computationally cheap variant of K-means clustering. The implementation of minibatch K-means uses multiple processor cores benefiting from multiplatform shared-memory multiprocessing execution in Cython. Additionally, data are processed in chunks of 256 samples in parallel. The parallelization process for the grid search is straightforward as well, which allows for one to leverage the specialized hardware and cloud solutions.

Big-data-friendliness. The proposed framework operates on top of the Apache Parquet file format, an efficient, structured, compressed, partition-friendly, and column-oriented data format. The reading of the data from the Apache Parquet file format and all the manipulations with the data structures were performed using Pandas, which was optimized for performance.

Interpretability. Data-visualization capabilities allow for a potential user to diagnose the segmentation validity, spot outliers, gain critical insights regarding the data structure, and effectively communicate results to the critical stakeholders.

Universality. The optimal number of clusters in a dataset and the optimal number of principal components are usually not apparent from prior knowledge of the SKU properties. Since the proposed framework performs an exhaustive search through a specified set of parameters, it may be applied to any SKU dataset with numeric features or even extended to similar domains, for example, customer or supplier segmentation. The latter could be postulated as a promising direction for future research.

5.3. Managerial Implications

Treating thousands of units individually is overwhelming for businesses of all sizes, and beyond anyone’s managerial effort and attention. Additionally, it may be simply computationally unfeasible to invoke a sophisticated forecasting technique or inventory policy at the granular SKU level. Segmentation implies focus, and allows for one to define a manageable number of SKU groups to facilitate strategy, and enhance various operational planning and material handling activities.

A classical example involves segmenting SKUs by margin and volume, which allows a manager to focus on high-volume and high-margin products [6]. For instance, a very-high-cost, high-margin product line with volatile demand would benefit from a flexible and responsive supply chain. For this line, a company can afford to spend more on transportation to meet demand and keep a high fill rate. On the other hand, low-cost everyday items with stable demand can take advantage of a slow and economical supply chain to minimize costs and increase margins. Additionally, segmenting raw materials may help in applying a better sourcing strategy, and segmentation based on demand patterns and predictability may help in estimating supply-chain resilience and managing risks accordingly. The numerical experiment demonstrated in our manuscript is an example of SKU segmentation based on the physical properties of goods at the pallet level. Such segmentation is aimed at deriving SKU clusters that can be treated under a common material handling policy.

Other common use cases include selecting an appropriate inventory control policy [7], categorizing SKUs by demand patterns [9], optimal allocation under limited storage capacity [10], selecting an appropriate supply-chain strategy [9], and evaluating the environmental impacts of inventory throughout its life cycle [11].

Real-world supply chains and operations are very dynamic and subject to change. The company’s product portfolio may change, and it may not know what the margins and demand patterns will be, or some SKUs are more challenging to handle due to their physical properties and require longer lead times. In these cases, cluster analysis must be conducted once again with the updated data that potentially contains new features. That is where the true power of our solution comes into play. Since the optimal number of clusters in a dataset and the optimal number of principal components are usually not apparent from the SKU properties, they must be finetuned. However, parametrization and finetuning are labor-intensive and time-consuming steps that involve the participation of highly qualified experts like data scientists or machine-learning engineers. The proposed framework for automated cluster analysis fully automates finetuning. Therefore, the precious time of the qualified labor force can be reallocated to other corporate tasks. Additionally, the computational cheapness and parallelism-friendliness of the proposed framework entail scalability, which is crucial as a business grows. Besides that, our solution is supplemented with data-visualization capabilities that allow for a potential user to diagnose the segmentation validity, spot outliers, gain critical insights regarding the data structure, and effectively communicate results to critical stakeholders.

6. Conclusions

A retailer may carry thousands or even millions of SKUs. Besides the volume, SKU data can be high-dimensional. Given the data volumes and the multitude of potentially important dimensions to consider, it could be computationally challenging to manage each SKU individually. In order to address this problem, a framework for automated cluster analysis was proposed. The framework incorporates minibatch K-means clustering, principal component analysis, and grid search for parameter tuning. It operates on top of the Apache Parquet file format, an efficient, structured, compressed, column-oriented data format; therefore, it is expected to be efficient for real-world SKU segmentation problems of high dimensionality.

The proposed framework was tested on the basis of a real-world dataset that contained SKU data at the pallet level. Since the framework is equipped with data-visualization tools, the potential user can diagnose the segmentation validity. For example, using the pairwise view of the features after clustering, the user can conclude that the produced SKU clusters are homogeneous enough to be treated under common inventory management or material handling policy. The data visualization tools allow for one to spot outliers, gain critical insights regarding the data structure, and effectively communicate results to critical stakeholders.

It is also essential to highlight a few limitations of our solution. First, K-means clustering, which constitutes the core of the proposed framework, segments data exclusively on the basis of Euclidean distance and is thereby not applicable to categorical data. Therefore, the incorporation of variations of K-means capable of working with non-numerical data can be postulated as a useful extension of our solution and a promising direction for future research. K-modes [53] and K-prototypes [54] are preliminary candidates for this extension. Second, PCA is restricted to a linear map, which may lead to a substantial loss of variance (and information) when the dimensionality is reduced drastically. Autoassociative neural networks, also known as autoencoders, have the potential to overcome this issue through their ability to stack numerous nonlinear transformations to reduce input into a low-dimensional latent space [55]. Therefore, exploring autoencoders may be considered the second promising direction for future research. Since the proposed framework performs an exhaustive search through a specified set of parameters, it may be applied to similar domains, for example, customer or supplier segmentation, which is the third promising extension and direction for future research.