Abstract

Artificial intelligence (AI) agents are widely used in the retail and distribution industry. This study examined whether the gender of AI agents, together with the brand concept, influences trust in and grounding with AI agents within virtual brand spaces. A 2 × 2 between-subjects experiment manipulated brand concept (functional vs. experiential) and AI agent gender (male vs. female); the dependent variables were AI agent trust and grounding. In virtual brand spaces centered on a functional concept, male AI agents generated higher levels of trust than female AI agents, whereas in spaces centered on an experiential concept, female AI agents induced higher levels of grounding than male AI agents. Furthermore, the association between customers’ identification with AI agents and their recommendation of the actual brand is mediated by trust and grounding. These findings suggest that users who strongly identify with AI agents are more inclined to recommend the brand’s products. By identifying conditions that help establish and sustain meaningful relationships between humans and AI, this study contributes to research on human–computer interaction.

1. Introduction

At CES 2024, hosted by the Consumer Technology Association, the French cosmetics company L’Oréal introduced a generative AI called “Beauty Genius”, which provides personalized skincare recommendations based on photos of the user’s skin. Walmart, the world’s largest retailer, offers a generative AI chatbot that searches for and recommends products for specific purposes.

Artificial intelligence (AI) has substantial potential to enhance numerous business functions. These include automating operational processes, extracting marketing insights from data, engaging with customers and employees, and formulating strategic plans involving segmentation, analytics, and beyond [1]. Many retailers and service providers have adopted generative AI to discern their customers’ preferences and needs. In the retail and distribution sector, interest in AI-driven data analysis to improve productivity and efficiency is growing, largely because of the enormous volume of data generated daily [2]. As a result, diverse AI-based platforms have been developed and deployed.

AI agents, including chatbots, are widely used technologies in the retail and distribution industry, particularly in e-commerce [3]. Their proliferation has been propelled by advances in computer technology. This research defines AI agents as digital entities with anthropomorphic appearances that are controlled by humans or software and are capable of interaction. Virtual brand spaces represent one of the fastest-growing domains of AI commercialization. In these spaces, AI agents facilitate targeted advertising by linking with consumer databases and promptly responding to user queries about products and services. This automated social presence may supplement or replace human service staff, especially for routine inquiries.

Anthropomorphism has often been employed by AI agent designers to enhance animacy. These agents, whether endowed with human-like behaviors or embodied in visual or physical interfaces [4], impact judgments of likeability and animacy. Studies affirm the effects of anthropomorphism on AI agents, with human-like appearances fostering a stronger sense of social presence and enabling richer human–computer interactions (HCIs) than purely functional forms [5]. Consequently, the significance of human–AI interactions is increasingly emphasized in today’s marketplace, driving the development of social AI that adheres to behavioral norms in human interactions [6].

To enhance realism, AI agents are often designed with additional human elements, and previous HCI research has shown that individuals are influenced by gender stereotypes when interacting with computers. When a robot’s gender was manipulated through its appearance, male robots were perceived as more agentic and better suited to tasks requiring mathematical ability. Female robots, by contrast, were perceived as more communal and better suited to tasks requiring verbal ability [7].

Brand strategies should embrace emerging technological trends, such as virtual stores, to craft personalized and captivating customer experiences. From a strategic brand management perspective, brand concepts can be classified as functional or experiential. This distinction suggests that consumer responses may vary depending on how well the gender of an AI agent aligns with the brand concept.

This study provides an overview of theoretical and practical knowledge concerning AI agents in marketing by investigating the impact of AI agent identification on brand recommendations within the virtual brand space.

This study examines the impact of AI agent gender and brand concepts on the trust and grounding of AI agents within virtual brand spaces and provides valuable insights into the effectiveness of AI agents in shaping virtual experiences. In the dynamic landscape of advancing AI technology, this study proposes strategies for the thoughtful utilization of AI technology.

2. Literature Review and Hypotheses

2.1. AI Agents and the Gender of Agents

AI encompasses “programs, algorithms, systems, and machines” [8] that emulate elements of human intelligence and behavior [9,10]. Using various technologies like machine learning, natural language processing, deep learning, big data analysis, and physical robots [1,11,12], AI has seen substantial development and become integral in consumers’ daily lives.

AI agents, defined as “computer-generated graphically displayed entities that represent either imaginary characters or real humans controlled by AI” [13], exist in diverse forms, including animated pictures, interactive 3D avatars in virtual environments [14], and human-like animated customer service agents resembling real sales representatives [15]. These agents simulate human-like interactions by comprehending user queries and executing specific tasks akin to real-person interactions.

Advancements in AI have enabled AI agents to learn and improve from each customer interaction, thereby enhancing their intelligence. They offer consumers an easily accessible means of interaction, assisting customers in online transactions by providing additional information, personalized advice, recommendations [15,16,17], and technical support. Strategically used, these agents enable companies to engage with customers on a personal level and provide continuous support, striving for a seamless, time-efficient, and cost-effective online experience [18,19].

Research has delved into how variations in AI agent morphology influence user evaluations and interactions with technology [20]. AI agents, functioning as social bots, trigger social responses from users despite their awareness of the nonhuman nature of computers [21]. Visual cues that mirror human characteristics tend to prompt users to treat chat agents as human and engage with them socially [22].

Among the design elements used to enhance realism, agents can incorporate “human” characteristics and features. Gender, which has been studied extensively among these characteristics, significantly affects agent effectiveness [23]. Gender stereotypes shape human–AI agent interactions much as they shape interactions between humans [7]. For instance, Nass et al. (1997) found that people who hear a male voice from a computer tend to attribute dominance to the machine’s personality, which is not the case for a female voice. Male voices therefore tend to reinforce trust between humans and computers. Furthermore, a study evaluating robots with gender-associated appearances found that male-like robots received positive assessments for adventurous and strength-demanding tasks. Female-like robots, by contrast, were evaluated favorably for delicate and meticulous tasks [7]. Previous studies have also shown that participants respond stereotypically to computers with different gender-associated voices. In particular, masculine voices are perceived as more authoritative, and dominant traits are more readily associated with men [24].

Gender stereotypes, which affect judgments of competence and warmth, often lead to men being perceived as more competent and women as warmer [25,26]. These biases influence evaluations across various scenarios [27,28,29].

2.2. Brand Concept

Within brand management, functional brands emphasize their functional performance. Previous research has defined functional value as a product’s ability to fulfill its intended functions in a consumer’s everyday life [30]. Functional needs, motivating consumers to seek products that address consumption-related problems [31,32], are met by products demonstrating functional performance. Therefore, a functional brand is designed to meet externally generated consumption needs [33]. Brands, as suggested by Park et al. [34], can be managed to alleviate uncertainty in consumer lives, offering control and efficacy, thus closely associating functional brands with product performance. Visual representations within brands remind or communicate functional benefits to customers [31].

Functional brands aim to effectively convey and reinforce their commitment to aiding customers, thereby strengthening brand–customer relationships [30,35]. Customer satisfaction with functional brands is pivotal in determining customer commitment, aligning with the core concept of brand management. According to the information-processing paradigm, consumer behavior leans toward objective and logical problem-solving [36,37]. Hence, customer confidence in a preferred functional brand is likely higher when the utilitarian value of the product category is substantial. Furthermore, Chaudhuri and Holbrook [37] identified a significant negative correlation between emotional response and a brand’s functional value.

Experiential brand strategies differ from functional ones. Holbrook and Hirschman [38] defined experiential needs as desires for products that provide sensory pleasure. Brands emphasizing experiential concepts highlight the brand’s impact on sensory satisfaction and spotlight the experiential and fantasy aspects of consumption through various elements of the marketing mix. Research on experiential needs often focuses on visual cues in purchase decisions. However, traditional marketing research recognizes that the other human senses also contribute to aesthetic experiences [31]. A full appreciation of an aesthetic experience results from combining these sensory inputs.

2.3. AI Agent Trust and Grounding

Research on interactive AI agents has largely focused on their ability to enhance customer satisfaction with a website or product, credibility, and patronage intentions [39,40]. In HCI, social-response theory posits that individuals respond socially to technology endowed with human-like features [41]. Studies suggest that greater anthropomorphism in an agent is positively correlated with perceived credibility and competence [42]. However, even if an AI agent is realistic, a lack of anthropomorphism may hinder users’ willingness to engage or communicate because the agent is not perceived as having social potential [43]. Users tend to apply social rules to technology that exhibits human-like traits, even though they consciously acknowledge that they are interacting with a machine [40]. The degree of social presence embodied in avatars on company websites significantly affects trust in website information and its emotional appeal, thereby influencing purchase intentions.

Trust research in HCI parallels discussions of interpersonal communication. It asks whether conversational agents designed with properties known to enhance trust in human relationships are more trusted by users. This approach is rooted in the Computers As Social Actors paradigm and holds that the social norms guiding human interaction also apply to HCI, because users unconsciously treat computers as independent social entities [41]. On this view, trust in conversational agents resembles trust in humans, and agents possessing trustworthy traits foster user trust. Various studies have defined trust similarly, emphasizing positive expectations about reliability, dependability, and confidence [44,45]. Trust in technology centers on expectations of reliable performance, predictability, and dependability. However, it remains debated whether the factors that foster trust in human relationships apply equally to human–agent interactions.

Nonetheless, divergent views suggest that users approach machine interactions differently and that the principles governing trust in interpersonal relations may not apply directly to human–machine trust [46,47]. For instance, Clark et al. [46] indicated that users approach conversations with computers in a more utilitarian way. Because they perceive agents as tools, users distinguish between social and transactional roles. Consequently, users view conversations with agents primarily as goal-oriented transactions. In their trust judgments about machines, they prioritize aspects such as performance and security and question the need to establish social interactions or relationships with machines.

Continual interaction with AI agents matters because it generates data that can improve system efficiency [48]. This symbiotic relationship fosters a shared cognitive understanding that benefits both users and the system. Establishing this mutual understanding, termed “grounding”, is pivotal in such interactions. While human communication achieves grounding naturally, achieving collaborative grounding with AI systems is challenging [49]. Grounding denotes mutual understanding in conversation. It involves explicit verbal and nonverbal acknowledgments that signal comprehension of earlier parts of the conversation [50]. Grounding therefore does not introduce new content into the conversation. Instead, it clearly signals that the listener has not only received but also understood the speaker’s prior contributions to the ongoing dialog. True linguistic grounding remains a challenge for machines, even though they can exhibit nonlinguistic grounding signals.

AI agents can be viewed as “assistants” or “companions.” In the “assistant” perspective, AI technology is a useful aid to humans, assisting in task completion, such as tracking Uber ride arrivals or aiding disabled individuals [39]. These conversations lean toward task-oriented, formal exchanges focused on specific functional goals. In contrast, the “companion” perspective focuses on emotional support, where agents are seen as trustworthy companions engaging users in typical everyday conversations, akin to human interactions [51]. Sophisticated natural language processing capabilities allow AI avatars to mimic human-like behaviors, enabling users to interact as they would with another human [52].

This study proposes that the effects of two perceived traits associated with AI agent gender, competence and warmth, on AI agent trust and grounding will vary by brand concept (functional vs. experiential). Functional brands emphasize practical purposes, whereas experiential brands focus on sensory experiences [38]. For functional concepts, the AI agent’s competence (representing the brand’s competence) becomes crucial for purchase decisions [53]. In contrast, experiential brands are evaluated on the basis of consumers’ sensory experiences and affective responses. The warmth exhibited by the AI agent therefore matters more for emotional decisions and brand sales [54]. Consequently, the following hypothesis is proposed:

H1:

For functional brands, a male AI agent will have higher trust and grounding, whereas for experiential brands, a female AI agent will have higher trust and grounding.

Integrating AI agents into customer service signifies a transformative evolution, offering precise recommendations and enhancing company–consumer relationships [55]. These AI-powered systems facilitate interaction and blur the distinction between human service assistants and conversational chatbots. Perceptions of AI-empowered agents are shaped by their anthropomorphic design cues during interaction and significantly influenced by their pre-encounter introduction and framing [16].

Van Looy et al. [56] defined AI agent identification as users’ emotional attachment to the AI agent, categorized into three subcategories: perceived similarity, embodied presence, and wishful identification. Studies indicate that male and younger users are more likely to identify with their avatars, especially if the avatars are idealized [57,58,59]. Several studies have explored the relationship between identification with virtual characters and various outcomes. For instance, Kim et al. [60] demonstrated that identification with virtual characters can enhance player self-efficacy and trust within their virtual communities. Additionally, Yee et al. [61] found that online virtual world visitors who perceive a smaller psychological gap between themselves and virtual avatars express greater satisfaction with avatars and spend more time online. Moreover, identification positively influences trust in virtual avatars [62,63].

Antecedents of trust can vary depending on individual differences (e.g., age or gender) and contextual factors. For instance, research shows that engaging in small talk with an embodied conversational agent can be effective in settings such as customer service, healthcare, or casual information exchange, whereas it might not be as effective in more serious settings like financial transactions or military training [64]. Certain demographic factors or personal traits can moderate the impact of these features [44].

Grounding establishes a connection between conversational turns, confirming active listening and fostering closeness and mutual understanding [65]. Beyond mere confirmation, grounding plays a role in relationship development by acknowledging contributions and establishing shared knowledge [66,67]. Understanding, a cornerstone of human relationships, resolves conflicts and nurtures stronger emotional connections through shared thoughts and feelings [65]. Such attributions significantly influence customers’ perceptions of salespersons, thereby affecting trust formation and inclinations toward future interactions. Recognizing and establishing shared knowledge during conversations is fundamental to relationship development [67]. When consumers identify with AI agents, they tend to trust them more. This trust leads to sustained grounding, in which the consumer not only comprehends but also acknowledges the guidance provided by the agent. As a result, consumers may come to prefer or recommend the brand advocated by the AI agent. Accordingly, the following hypothesis is proposed:

H2:

The effect of AI agent identification on brand recommendation is mediated by AI agent trust and grounding.

3. Materials and Methods

3.1. Data Collection and Sample

To test the proposed hypotheses, a 2 × 2 between-subjects experiment was conducted with two independent variables: brand concept (functional brand vs. experiential brand) and AI agent gender (male vs. female). The dependent variables were AI agent trust and grounding, and brand attitude served as a control variable. Data were collected from individuals who had used the XR platform within the last month.

The final sample consisted of 187 respondents (49.7% men and 50.3% women) aged 25–40 years. Participants were informed of the survey’s purpose and of their right to withdraw at any point. They were also assured of data confidentiality in accordance with the Korean Statistical Law, with a commitment to destroy all personal data after one year. Each participant was randomly assigned to one of the four experimental conditions.
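The paper reports only that participants were randomly assigned to the four cells. It does not describe the procedure. As an illustration, the Python sketch below assumes a shuffle-and-deal scheme that keeps cell sizes within one participant of each other. The function name `assign_conditions` and the seed value are hypothetical.

```python
import random
from itertools import product

# The four cells of the 2 x 2 design: (brand concept, AI agent gender).
CONDITIONS = list(product(["functional", "experiential"], ["male", "female"]))


def assign_conditions(participant_ids, seed=None):
    """Hypothetical helper (not from the study): shuffle participants, then
    deal them round-robin into the four cells so cell sizes differ by at most one."""
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: CONDITIONS[i % len(CONDITIONS)] for i, pid in enumerate(ids)}


assignment = assign_conditions(range(187), seed=2024)
```

With 187 participants, three cells receive 47 people and one receives 46. Simple (unblocked) randomization would instead allow larger imbalances.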

3.2. Stimulus Development and Measures

Avatar communication can take various forms, such as text (speech-to-text avatars), gestures, and facial expressions. Perceptions of a conversational agent may be shaped both by anthropomorphic design cues during the conversation and by how the agent is introduced beforehand. To develop the AI agents for the experiment, the researchers approached two university students specializing in statistical data and business administration, respectively. A field expert provided feedback to maintain objectivity and ensure the validity of the data collected. To enhance the agents’ social presence, each was given a name: the male AI agent was named Ethan and the female AI agent Anna. Store guidance phrases were then formulated for each AI agent within the virtual brand store. Anna’s phrase read: “Welcome to the (brand) Store. I’m Anna, an AI agent here to assist you. Feel free to ask me anything about (brand), and I’ll respond promptly.”

The researcher conducted two pre-tests to select brands suitable for the virtual brand space. In the first pre-test, Hyundai Grandeur (sold as the Azera in the US) was chosen as the functional brand (n = 15; M functional benefit = 4.98 vs. M experiential benefit = 4.06; p < 0.001). In the second pre-test, BMW MINI was selected as the experiential brand (n = 23; M functional benefit = 4.11 vs. M experiential benefit = 4.81; p < 0.001). Next, a male and a female AI agent were created using Zepeto, a virtual space platform, and pretested. Ethan served as the male AI agent (n = 14; M male = 4.85 vs. M female = 2.09; p < 0.001), and Anna served as the female AI agent (n = 18; M male = 3.11 vs. M female = 5.71; p < 0.001). All items were rated on a 7-point scale ranging from 1 (strongly disagree) to 7 (strongly agree) (Table 1).
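Each pre-test compares two ratings (functional vs. experiential benefit) given by the same respondents, but the paper does not name the test used. A paired-samples t test is one plausible choice. The Python sketch below computes that statistic under this assumption. The helper name `paired_t` is hypothetical, and the ratings are invented for illustration rather than taken from the pre-test data.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(first, second):
    """Hypothetical helper: paired-samples t statistic and degrees of freedom
    for two ratings given by the same respondents."""
    if len(first) != len(second):
        raise ValueError("each respondent needs both ratings")
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    # t = mean difference / standard error of the mean difference
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Invented 7-point ratings from five respondents (illustration only).
functional = [5, 6, 5, 7, 6]
experiential = [4, 4, 5, 5, 4]
t_stat, df = paired_t(functional, experiential)
```

A p value would then come from the t distribution with `df` degrees of freedom. For example, SciPy's `scipy.stats.ttest_rel` returns both the statistic and the p value.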

Table 1.

Scale.

3.3. Procedure

Participants were introduced to the AI agents within the virtual brand space and asked to evaluate them. Participants in the male AI agent group were instructed to create an AI agent portraying a male figure, whereas those in the female AI agent group were instructed to create one resembling a female figure. Before the experiment, participants received information about the study’s purpose. Each participant then completed a questionnaire measuring brand attitude, the control variable.

After completing the control-variable questionnaire, participants spent 5 min in the virtual brand space. They then responded to manipulation check items regarding AI agent gender and brand concept. They also rated AI agent trust, grounding, AI agent identification, and brand recommendation. The entire procedure lasted approximately 15 min.

4. Results

4.1. Manipulation Checks

Before the experimental results were analyzed, a manipulation check was performed to confirm that the stimuli had been manipulated as intended, and the results confirmed that they had. Participants in the Grandeur group rated functional benefits (M = 4.98) higher than experiential benefits (M = 4.08; F = 17.238, p < 0.001). Participants in the MINI group rated experiential benefits (M = 4.81) higher than functional benefits (M = 4.06; F = 47.859, p < 0.001).

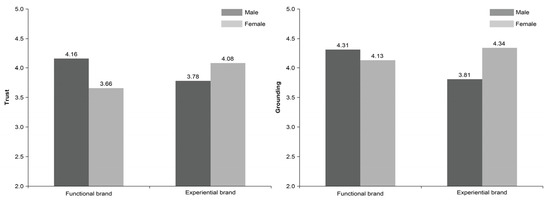

4.2. Analysis of Trust and Grounding

A two-way analysis of variance (ANOVA), which tests for differences among group means, was conducted. For AI agent trust, the 2 × 2 between-subjects analysis revealed that neither the main effect of brand concept (F = 1.745, p > 0.1) nor that of AI agent gender (F = 1.859, p > 0.1) was statistically significant. However, the two-way interaction between brand concept and AI agent gender was significant (F = 7.623, p < 0.01). Specifically, when the virtual brand space had a functional concept, participants reported higher trust in the male AI agent (M = 4.16) than in the female AI agent (M = 3.66; F = 5.685, p < 0.05). When the virtual brand space had an experiential concept, trust did not differ significantly by AI agent gender (male AI agent = 3.78 vs. female AI agent = 4.08; F = 3.236, p = 0.06).
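The sketch below shows how the main-effect and interaction F ratios of a two-way between-subjects ANOVA are formed, computed in Python for a balanced 2 × 2 design. It assumes equal cell sizes, which cannot hold exactly for N = 187. With unequal cells, statistical software typically uses Type III sums of squares, for example `anova_lm(..., typ=3)` in statsmodels. The function name and the toy scores are hypothetical. The scores were chosen so that only the interaction is nonzero.

```python
from statistics import mean


def two_way_anova_balanced(cells):
    """Hypothetical helper: two-way between-subjects ANOVA for a balanced design.

    `cells` maps (brand_concept, agent_gender) -> list of scores, with the same
    number of scores in every cell. Factor A is the first key element (brand
    concept) and factor B the second (AI agent gender).
    """
    a_levels = sorted({a for a, _ in cells})
    b_levels = sorted({b for _, b in cells})
    n = len(next(iter(cells.values())))
    if any(len(scores) != n for scores in cells.values()):
        raise ValueError("this sketch assumes equal cell sizes")

    cell_mean = {key: mean(scores) for key, scores in cells.items()}
    grand = mean(cell_mean.values())
    a_mean = {a: mean(cell_mean[(a, b)] for b in b_levels) for a in a_levels}
    b_mean = {b: mean(cell_mean[(a, b)] for a in a_levels) for b in b_levels}

    # Sums of squares for main effects, interaction, and within-cell error.
    ss_a = n * len(b_levels) * sum((a_mean[a] - grand) ** 2 for a in a_levels)
    ss_b = n * len(a_levels) * sum((b_mean[b] - grand) ** 2 for b in b_levels)
    ss_ab = n * sum((cell_mean[(a, b)] - a_mean[a] - b_mean[b] + grand) ** 2
                    for a in a_levels for b in b_levels)
    ss_error = sum((y - cell_mean[key]) ** 2
                   for key, scores in cells.items() for y in scores)

    df_a, df_b = len(a_levels) - 1, len(b_levels) - 1
    df_ab = df_a * df_b
    df_error = n * len(cells) - len(cells)
    ms_error = ss_error / df_error
    return {
        "F_a": (ss_a / df_a) / ms_error,
        "F_b": (ss_b / df_b) / ms_error,
        "F_ab": (ss_ab / df_ab) / ms_error,
        "df_error": df_error,
    }


# Hypothetical 7-point ratings, three per cell (illustration only).
example = {
    ("functional", "male"): [5, 6, 7],
    ("functional", "female"): [3, 4, 5],
    ("experiential", "male"): [3, 4, 5],
    ("experiential", "female"): [5, 6, 7],
}
result = two_way_anova_balanced(example)
```

In this crossover pattern, the row and column means are all equal, so both main-effect F ratios are zero, while the interaction F ratio is 12 with 1 and 8 degrees of freedom.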

Next, for grounding, the 2 × 2 between-subjects analysis revealed that neither the main effect of brand concept (F = 0.728, p > 0.1) nor that of AI agent gender (F = 1.039, p > 0.1) was statistically significant. However, the two-way interaction between them was significant (F = 4.426, p < 0.05). Specifically, when the virtual brand space had a functional concept, grounding did not differ by AI agent gender (male AI agent = 4.31 vs. female AI agent = 4.13; F = 0.641, p > 0.1). When the virtual brand space had an experiential concept, participants reported higher grounding with the female AI agent (M = 4.34) than with the male AI agent (M = 3.81; F = 4.476, p < 0.05). These interaction patterns therefore partially support H1 (Table 2; Figure 1).
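The follow-up comparisons above test the effect of AI agent gender separately within each brand concept. The paper does not state how these simple-effect F values were computed. A common approach, assumed in this Python sketch, reuses the error term pooled over all four cells of the 2 × 2 design. The helper name and example scores are hypothetical.

```python
from statistics import mean


def simple_effect_f(cells, level):
    """Hypothetical helper: F ratio (df = 1) for the effect of AI agent gender
    at one brand-concept level, using the error mean square pooled over every
    cell. `cells` maps (brand_concept, agent_gender) -> list of scores.
    Returns (F, error df)."""
    # Pooled within-cell sum of squares and its degrees of freedom.
    ss_error = 0.0
    for scores in cells.values():
        m = mean(scores)
        ss_error += sum((y - m) ** 2 for y in scores)
    df_error = sum(len(s) for s in cells.values()) - len(cells)
    ms_error = ss_error / df_error

    # The two gender cells at the requested brand-concept level.
    s1, s2 = [s for (a, _b), s in cells.items() if a == level]
    n1, n2 = len(s1), len(s2)
    # Between-group sum of squares for two groups (valid for unequal n).
    ss_effect = n1 * n2 / (n1 + n2) * (mean(s1) - mean(s2)) ** 2
    return ss_effect / ms_error, df_error


# Hypothetical ratings (illustration only).
example = {
    ("functional", "male"): [5, 6, 7],
    ("functional", "female"): [3, 4, 5],
    ("experiential", "male"): [4, 5, 6],
    ("experiential", "female"): [4, 5, 6],
}
f_functional, df_error = simple_effect_f(example, "functional")
f_experiential, _ = simple_effect_f(example, "experiential")
```

Pooling the error term across all cells gives each simple-effect test the full error degrees of freedom. The alternative is running a separate one-way ANOVA within each brand concept.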

Table 2.

Results of 2 × 2 between-subjects analysis.

Figure 1.

Interaction effects.

4.3. Mediation Effect

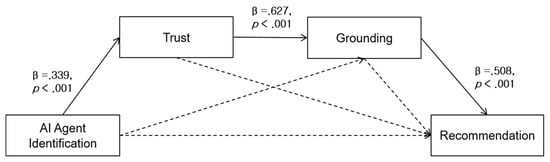

A mediation analysis was performed to test Hypothesis 2, which describes the mechanism proposed in this study. For this, Model 6 of the PROCESS macro, a serial mediation model, was used (Table 3). AI agent identification was entered as the independent variable, AI agent trust and grounding as the mediating variables, and brand recommendation as the dependent variable. The results showed significant mediating effects of trust and grounding, supporting Hypothesis 2 (Figure 2).
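PROCESS Model 6 estimates a serial mediation model. Following the order argued in Section 2.3, identification (X) is assumed to affect trust (M1), which affects grounding (M2), which in turn affects recommendation (Y). The Python sketch below illustrates the underlying logic under these assumptions; it is not the PROCESS implementation itself. Three OLS regressions give the path coefficients, and each indirect effect is a product of paths. Percentile bootstrap intervals that exclude zero indicate mediation. The function names, the number of bootstrap resamples, and the simulated data are hypothetical. The paper does not report its bootstrap settings.

```python
import random


def ols(y, predictors):
    """Least-squares coefficients [intercept, b1, b2, ...] via the normal
    equations, solved by Gauss-Jordan elimination with partial pivoting."""
    rows = [[1.0] + [col[i] for col in predictors] for i in range(len(y))]
    k = len(rows[0])
    # Augmented matrix (X'X | X'y).
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
           + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


def serial_indirect(x, m1, m2, y):
    """Hypothetical helper: indirect effects in X -> M1 -> M2 -> Y."""
    a1 = ols(m1, [x])[1]                        # X -> M1
    _, a2, d21 = ols(m2, [x, m1])               # X -> M2, M1 -> M2
    _, _c_prime, b1, b2 = ols(y, [x, m1, m2])   # direct X -> Y, M1 -> Y, M2 -> Y
    return {"x_m1_y": a1 * b1, "x_m2_y": a2 * b2, "x_m1_m2_y": a1 * d21 * b2}


def bootstrap_ci(x, m1, m2, y, n_boot=1000, level=0.95, seed=1):
    """Hypothetical helper: percentile bootstrap interval for each indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    draws = {"x_m1_y": [], "x_m2_y": [], "x_m1_m2_y": []}
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        est = serial_indirect([x[i] for i in idx], [m1[i] for i in idx],
                              [m2[i] for i in idx], [y[i] for i in idx])
        for name, value in est.items():
            draws[name].append(value)
    tail = (1 - level) / 2
    cis = {}
    for name, values in draws.items():
        values.sort()
        cis[name] = (values[round(tail * (n_boot - 1))],
                     values[round((1 - tail) * (n_boot - 1))])
    return cis


# Simulated data for illustration only (not the study's data).
sim = random.Random(42)
x = [sim.gauss(4, 1) for _ in range(200)]                              # identification
m1 = [0.8 * xi + sim.gauss(0, 1) for xi in x]                          # trust
m2 = [0.6 * t + 0.2 * xi + sim.gauss(0, 1) for xi, t in zip(x, m1)]    # grounding
y = [0.5 * t + 0.7 * g + sim.gauss(0, 1) for t, g in zip(m1, m2)]      # recommendation
print(serial_indirect(x, m1, m2, y))
print(bootstrap_ci(x, m1, m2, y, n_boot=500))
```

The key quantity for a serial model is `x_m1_m2_y` (a1 × d21 × b2), the path running through both mediators in sequence. The other two indirect effects pass through only one mediator each.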

Table 3.

Results of Model 6 of the PROCESS Macro.

Figure 2.

Double mediation effects.

5. Conclusions

The popularity of AI agents is fueled by two macroenvironmental factors. First, advancements in computer and digital technologies have enabled the development of more complex avatars. These often appear in 3D forms with seemingly distinctive personalities, appearances, and behavioral patterns, and they are generally more appealing than earlier, simpler versions [72,73]. Second, the increasing use of AI agents reflects the growing importance of online service experiences, such as education, gaming, banking, and shopping [73,74]. Online customers frequently express frustration when they cannot quickly and easily find relevant information on a website, and AI agents can address this problem effectively and efficiently.

Online stores, relying solely on graphical user interfaces, do not enable retailers to persuade potential customers to buy products or provide customers with the opportunity to ask questions and learn more about products as they would with a human salesperson. Communication is crucial in attracting, serving, and retaining customers. One of the most beneficial ways to engage customers anywhere, anytime, and provide them with easy and natural interaction is to use a conversational user interface.

This study examines the impact of the gender of AI agents on trust and grounding in the virtual brand space. The primary objective was to investigate whether the gender of AI agents influences trust and grounding, focusing on their symbiotic relations. The study reveals the following key findings: in virtual brand spaces with a functional concept, male AI agents were found to elicit higher levels of trust. However, in virtual brand spaces with an experiential concept, female AI agents elicited higher levels of grounding. Additionally, this research indicates that the relationship between customers’ identification with AI agents and recommendations for actual brand purchases is mediated by trust and grounding. These findings support the notion that users strongly identifying with AI agents are more likely to recommend brand products after engaging in conversation within the virtual brand space.

The analysis reveals two significant academic implications. First, the study identifies the pivotal role of the brand concept in establishing continuous grounding between consumers and AI agents. Contextually, grounding signifies an ongoing state of communication facilitated through linguistic and nonlinguistic means. Given the nonhuman nature of AI agents, the expectation of sustained grounding from a consumer’s perspective poses challenges. As such, from a corporate standpoint, the collection of consumer grounding data is imperative for enhancing the quality of AI agents and delivering personalized services. This research indicates that for effective grounding, the alignment between brand concepts and the gender of the AI agent must be carefully considered.

Second, this research identifies the influence of the AI agent’s gender in augmenting the brand experience within virtual spaces. With the progression of virtual space platform technology, the prevalence of companies establishing brand stores in virtual spaces is on the rise. Offering consumers more than a mere store visit is crucial to providing diverse experiences. This study underscores the significance of emphasized brand images and AI agent gender in exploring the brand and validates their roles in the overall virtual brand experience.

In contrast to the physical world, the virtual brand space enables a brand experience that transcends time and space. Furthermore, this study establishes the role of conversation as a mediator between AI agent identification and brand recommendation. These insights contribute to understanding how the gender of AI agent representation can shape the customer–AI agent relationship in virtual spaces, outlining implications for marketers and designers.

The study results will provide practical insights for marketers and shed light on the evolving nature of consumer–AI agent interactions in virtual environments. Subsequent research can build upon these findings to explore additional factors influencing conversation in the virtual brand space and develop more targeted marketing strategies.

Although this study provides valuable insights, it is important to acknowledge certain limitations. First, automobiles comprised the chosen product category for the experiment. Given that automobiles are classified as high-involvement products, prior consumer knowledge may have a substantial impact on outcomes. Despite analyzing attitudes toward the experimental brand as a control variable, the study did not account for prior knowledge specifically related to the automobile product category. Therefore, future research focusing on low-involvement product categories would contribute significantly to a more comprehensive understanding.

Second, this study did not consider the degree of the AI agent’s anthropomorphism. The literature suggests that AI agents can be anthropomorphized through animated forms that closely resemble real individuals and that a higher degree of anthropomorphism is associated with increased grounding. Consequently, future research examining variations in anthropomorphism, from animated to realistic representations, would offer meaningful insights into its impact on consumer responses.

AI agents are attracting attention among virtual space platform users because of their potential to become the next-generation search service, replacing human assistants [75,76]. Next-generation AI agents, exemplified by virtual humans, are advancing intelligent technology to a new stage of development [77,78]. This technology is expected to profoundly affect how society produces, lives, and communicates, and it may fundamentally reshape society and humanity [79].

To maintain a competitive advantage, marketers must understand how best to integrate AI into their businesses and how to foster greater consumer acceptance of it. With the advent of AI-generated marketing, it is critical to understand whether, and how, consumers accept AI-generated content and information.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The dataset that supports the findings of this study is available from the corresponding author on request.

Conflicts of Interest

The author declares no conflicts of interest.

References

1. Davenport, T.; Guha, A.; Grewal, D.; Bressgott, T. How artificial intelligence will change the future of marketing. J. Acad. Mark. Sci. 2020, 48, 24–42.

2. Gesing, B.; Peterson, S.J.; Michelsen, D. Artificial intelligence in logistics; DHL Customer Solutions & Innovation: Troisdorf, Germany, 2018; Volume 3.

3. Brandtzaeg, P.B.; Følstad, A. Chatbots: Changing user needs and motivations. Interactions 2018, 25, 38–43.

4. Cassell, J.; Sullivan, J.; Prevost, S.; Churchill, E. Embodied Conversational Agents; MIT Press: Cambridge, MA, USA, 2000.

5. Kwak, S.S. The impact of the robot appearance types on social interaction with a robot and service evaluation of a robot. Arch. Res. 2014, 27, 81–93.

6. Bartneck, C.; Forlizzi, J. A design-centred framework for social human-robot interaction. In RO-MAN, Proceedings of the 13th IEEE International Workshop on Robot and Human Interactive Communication (IEEE Catalog No. 04TH8759), Okayama, Japan, 20–22 September 2004; IEEE: Piscataway, NJ, USA, 2004; Volume 2004, pp. 591–594.

7. Eyssel, F.; Hegel, F. (S)he’s got the look: Gender stereotyping of robots. J. Appl. Soc. Psychol. 2012, 42, 2213–2230.

8. Shankar, V. How artificial intelligence (AI) is reshaping retailing. J. Retail. 2018, 94, vi–xi.

9. Huang, M.H.; Rust, R.T. Artificial intelligence in service. J. Serv. Res. 2018, 21, 155–172.

10. Syam, N.; Sharma, A. Waiting for a sales renaissance in the fourth Industrial Revolution: Machine learning and artificial intelligence in sales research and practice. Ind. Mark. Manag. 2018, 69, 135–146.

11. Mariani, M. Big data and analytics in tourism and hospitality: A perspective article. Tour. Rev. 2020, 75, 299–303.

12. Mariani, M.M.; Baggio, R.; Fuchs, M.; Höepken, W. Business intelligence and big data in hospitality and tourism: A systematic literature review. Int. J. Contemp. Hosp. Manag. 2018, 30, 3514–3554.

13. Choi, Y.K.; Miracle, G.E.; Biocca, F. The effects of anthropomorphic agents on advertising effectiveness and the mediating role of presence. J. Interact. Advert. 2001, 2, 19–32.

14. Jin, S.A.A.; Lee, K.M. The influence of regulatory fit and interactivity on brand satisfaction and trust in e-health marketing inside 3D virtual worlds (second life). Cyberpsychol. Behav. Soc. Netw. 2010, 13, 673–680.

15. Verhagen, T.; Van Nes, J.; Feldberg, F.; Van Dolen, W. Virtual customer service agents: Using social presence and personalization to shape online service encounters. J. Comput. Mediat. Commun. 2014, 19, 529–545.

16. Araujo, T. Living up to the chatbot hype: The influence of anthropomorphic design cues and communicative agency framing on conversational agent and company perceptions. Comput. Hum. Behav. 2018, 85, 183–189.

17. De Cicco, R.; Silva, S.C.; Alparone, F.R. Millennials’ attitude toward chatbots: An experimental study in a social relationship perspective. Int. J. Retail. Distrib. Manag. 2020, 48, 1213–1233.

18. Adam, M.; Wessel, M.; Benlian, A. AI-based chatbots in customer service and their effects on user compliance. Electron. Mark. 2021, 31, 427–445.

19. Prentice, C.; Weaven, S.; Wong, I.A. Linking AI quality performance and customer engagement: The moderating effect of AI preference. Int. J. Hosp. Manag. 2020, 90, 102629.

20. Fong, T.; Nourbakhsh, I.; Dautenhahn, K. A survey of socially interactive robots. Robot. Auton. Syst. 2003, 42, 143–166.

21. Zhao, S. Toward a taxonomy of copresence. Presence Teleoperators Virtual Environ. 2003, 12, 445–455.

22. Go, E.; Sundar, S.S. Humanizing chatbots: The effects of visual, identity and conversational cues on humanness perceptions. Comput. Hum. Behav. 2019, 97, 304–316.

23. Nass, C.; Yen, C. The Man Who Lied to His Laptop: What Machines Teach Us about Human Relationships; Current: New York, NY, USA, 2010.

24. Nass, C.; Moon, Y.; Green, N. Are machines gender neutral? Gender-stereotypic responses to computers with voices. J. Appl. Soc. Psychol. 1997, 27, 864–876.

25. Broverman, I.K.; Vogel, S.R.; Broverman, D.M.; Clarkson, F.E.; Rosenkrantz, P.S. Sex-role stereotypes: A current appraisal. J. Soc. Issues 1972, 28, 59–78.

26. Fiske, S.T.; Cuddy, A.J.; Glick, P. Universal dimensions of social cognition: Warmth and competence. Trends Cogn. Sci. 2007, 11, 77–83.

27. Ashton-James, C.E.; Tybur, J.M.; Grießer, V.; Costa, D. Stereotypes about surgeon warmth and competence: The role of surgeon gender. PLoS ONE 2019, 14, e0211890.

28. Heilman, M.E. Gender stereotypes and workplace bias. Res. Organ. Behav. 2012, 32, 113–135.

29. Huddy, L.; Terkildsen, N. Gender stereotypes and the perception of male and female candidates. Am. J. Pol. Sci. 1993, 37, 119–147.

30. Park, C.W.; Eisingerich, A.B.; Pol, G.; Park, J.W. The role of brand logos in firm performance. J. Bus. Res. 2013, 66, 180–187.

31. Jeon, J.E. The impact of brand concept on brand equity. Asia Pac. J. Innov. Entrep. 2017, 11, 233–245.

32. Park, C.W.; Jaworski, B.J.; MacInnis, D.J. Strategic brand concept-image management. J. Mark. 1986, 50, 135–145.

33. Brakus, J.J.; Schmitt, B.H.; Zarantonello, L. Brand experience: What is it? How is it measured? Does it affect loyalty? J. Mark. 2009, 73, 52–68.

34. Park, C.W.; MacInnis, D.J.; Priester, J.; Eisingerich, A.B.; Iacobucci, D. Brand attachment and brand attitude strength: Conceptual and empirical differentiation of two critical brand equity drivers. J. Mark. 2010, 74, 1–17.

35. Morgan, R.M.; Hunt, S.D. The commitment-trust theory of relationship marketing. J. Mark. 1994, 58, 20–38.

36. Moorman, C.; Zaltman, G.; Deshpande, R. Relationships between providers and users of market research: The dynamics of trust within and between organizations. J. Mark. Res. 1992, 29, 314–328.

37. Chaudhuri, A.; Holbrook, M.B. The chain of effects from brand trust and brand affect to brand performance: The role of brand loyalty. J. Mark. 2001, 65, 81–93.

38. Holbrook, M.B.; Hirschman, E.C. The experiential aspects of consumption: Consumer fantasies, feelings, and fun. J. Consum. Res. 1982, 9, 132.

39. Chattaraman, V.; Kwon, W.S.; Gilbert, J.E.; Ross, K. Should AI-based, conversational digital assistants employ social- or task-oriented interaction style? A task-competency and reciprocity perspective for older adults. Comput. Hum. Behav. 2019, 90, 315–330.

40. Holzwarth, M.; Janiszewski, C.; Neumann, M.M. The influence of avatars on online consumer shopping behavior. J. Mark. 2006, 70, 19–36.

41. Nass, C.; Moon, Y. Machines and mindlessness: Social responses to computers. J. Soc. Issues 2000, 56, 81–103.

42. Westerman, D.; Tamborini, R.; Bowman, N.D. The effects of static avatars on impression formation across different contexts on social networking sites. Comput. Hum. Behav. 2015, 53, 111–117.

43. Nowak, K.L.; Fox, J. Avatars and computer-mediated communication: A review of the definitions, uses, and effects of digital representations. Rev. Commun. Res. 2018, 6, 30–53.

44. Bickmore, T.W.; Picard, R.W. Establishing and maintaining long-term human-computer relationships. ACM Trans. Comput. Hum. Interact. 2005, 12, 293–327.

45. Tseng, S.; Fogg, B.J. Credibility and computing technology. Commun. ACM 1999, 42, 39–44.

46. Clark, L.; Pantidi, N.; Cooney, O.; Doyle, P.; Garaialde, D.; Edwards, J.; Spillane, B.; Gilmartin, E.; Murad, C.; Munteanu, C.; et al. What makes a good conversation? Challenges in designing truly conversational agents. In Proceedings of the Conference on Human Factors in Computing Systems, Glasgow, UK, 4–9 May 2019; p. 3300705.

47. Madhavan, P.; Wiegmann, D.A. Similarities and differences between human–human and human–automation trust: An integrative review. Theor. Issues Ergon. Sci. 2007, 8, 277–301.

48. Horvitz, E. Principles of mixed-initiative user interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Pittsburgh, PA, USA, 15–20 May 1999; pp. 159–166.

49. Kontogiorgos, D.; Pereira, A.; Gustafson, J. Grounding behaviours with conversational interfaces: Effects of embodiment and failures. J. Multimodal User Interfaces 2021, 15, 239–254.

50. Richardson, D.C.; Dale, R. Looking to understand: The coupling between speakers’ and listeners’ eye movements and its relationship to discourse comprehension. Cogn. Sci. 2005, 29, 1045–1060.

51. Sundar, S.S.; Jung, E.H.; Waddell, T.F.; Kim, K.J. Cheery companions or serious assistants? Role and demeanor congruity as predictors of robot attraction and use intentions among senior citizens. Int. J. Hum. Comput. Stud. 2017, 97, 88–97.

52. Guzman, A.L. Voices in and of the machine: Source orientation toward mobile virtual assistants. Comput. Hum. Behav. 2019, 90, 343–350.

53. Parulekar, A.A.; Raheja, P. Managing celebrities as brands: Impact of endorsements on celebrity image. In Creating Images and the Psychology of Marketing Communication; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2006; pp. 161–169.

54. Bennett, A.M.; Hill, R.P. The universality of warmth and competence: A response to brands as intentional agents. J. Consum. Psychol. 2012, 22, 199–204.

55. Lee, S.; Choi, J. Enhancing user experience with conversational agent for movie recommendation: Effects of self-disclosure and reciprocity. Int. J. Hum. Comput. Stud. 2017, 103, 95–105.

56. Van Looy, J.; Courtois, C.; De Vocht, M.; De Marez, L. Player identification in online games: Validation of a scale for measuring identification in MMOGs. Media Psychol. 2012, 15, 197–221.

57. Bailey, R.; Wise, K.; Bolls, P. How avatar customizability affects children’s arousal and subjective presence during junk food–sponsored online video games. Cyberpsychol. Behav. 2009, 12, 277–283.

58. Mohd Tuah, N.; Wanick, V.; Ranchhod, A.; Wills, G.B. Exploring avatar roles for motivational effects in gameful environments. EAI Endorsed Trans. Creat. Technol. 2017, 17, 1–11.

59. Szolin, K.; Kuss, D.J.; Nuyens, F.M.; Griffiths, M.D. ‘I am the character, the character is me’: A thematic analysis of the user-avatar relationship in videogames. Comput. Hum. Behav. 2023, 143, 107694.

60. Kim, C.; Lee, S.G.; Kang, M. I became an attractive person in the virtual world: Users’ identification with virtual communities and avatars. Comput. Hum. Behav. 2012, 28, 1663–1669.

61. Yee, N.; Bailenson, J.N.; Ducheneaut, N. The Proteus effect: Implications of transformed digital self-representation on online and offline behavior. Commun. Res. 2009, 36, 285–312.

62. Jeon, J.E. The effect of ideal avatar on virtual brand experience in XR platform. J. Distrib. Sci. 2023, 21, 109–121.

63. Park, B.W.; Lee, K.C. Exploring the value of purchasing online game items. Comput. Hum. Behav. 2011, 27, 2178–2185.

64. Bickmore, T.; Cassell, J. Social dialogue with embodied conversational agents. Adv. Nat. Multimodal Dial. Syst. 2005, 30, 23–54.

65. Weger, H.; Castle Bell, G.C.; Minei, E.M.; Robinson, M.C. The relative effectiveness of active listening in initial interactions. Int. J. List. 2014, 28, 13–31.

66. Clark, H.H.; Brennan, S.E. Grounding in communication. In Perspectives on Socially Shared Cognition; Resnick, L.B., Levine, J.M., Teasley, S.D., Eds.; American Psychological Association: Washington, DC, USA, 1991; pp. 222–233.

67. Reis, H.T.; Lemay, E.P., Jr.; Finkenauer, C. Toward understanding: The importance of feeling understood in relationships. Soc. Pers. Psychol. Compass 2017, 11, e12308.

68. Bergner, A.S.; Hildebrand, C.; Häubl, G. Machine talk: How verbal embodiment in conversational AI shapes consumer–brand relationships. J. Consum. Res. 2023, 50, 742–764.

69. Schultze, U. Performing embodied identity in virtual worlds. Eur. J. Inf. Syst. 2014, 23, 84–95.

70. Barnes, S.J.; Mattsson, J. Exploring the fit of real brands in the second-life virtual world. J. Mark. Manag. 2011, 27, 934–958.

71. Papagiannidis, S.; Pantano, E.; See-To, E.W.K.; Bourlakis, M. Modelling the determinants of a simulated experience in a virtual retail store and users’ product purchasing intentions. J. Mark. Manag. 2013, 29, 1462–1492.

72. Ahn, S.J.; Fox, J.; Bailenson, J.N. Avatars. In Leadership in Science and Technology: A Reference Handbook; Bainbridge, W.S., Ed.; SAGE Publications: Thousand Oaks, CA, USA, 2012; pp. 695–702.

73. Garnier, M.; Poncin, I. The avatar in marketing: Synthesis, integrative framework and perspectives. Rech. Appl. Mark. 2013, 28, 85–115.

74. Kim, S.; Chen, R.P.; Zhang, K. Anthropomorphized helpers undermine autonomy and enjoyment in computer games. J. Consum. Res. 2016, 43, 282–302.

75. Singh, H.; Singh, A. ChatGPT: Systematic review, applications, and agenda for multidisciplinary research. J. Chin. Econ. Bus. Stud. 2023, 21, 193–212.

76. Woods, H.S. Asking more of Siri and Alexa: Feminine persona in service of surveillance capitalism. Crit. Stud. Media Commun. 2018, 35, 334–349.

77. McDonnell, M.; Baxter, D. Chatbots and gender stereotyping. Interact. Comput. 2019, 31, 116–121.

78. Costa, P.; Ribas, L. AI becomes her: Discussing gender and artificial intelligence. Technoetic Arts J. Specul. Res. 2019, 17, 171–193.

79. Hill-Yardin, E.L.; Hutchinson, M.R.; Laycock, R.; Spencer, S.J. A Chat(GPT) about the future of scientific publishing. Brain Behav. Immun. 2023, 110, 152–154.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).