Abstract

Artificial intelligence (AI) technologies are changing how humans and machines interact, and smart interactions, which connect humans, machines, content, and artificial intelligent in-home voice assistants (AVAs), have become a prominent topic in AVA research. Based on the privacy calculus theory (PCT), the authors conducted an online questionnaire-based survey to investigate the influential mechanisms of smart interactions on stickiness intention (SI), demonstrated the positive and negative effects of smart interactions on benefits and risks, and verified the moderating role of susceptibility to normative influence (SNI). The results show that smart interactions positively impact SI via utilitarian benefit and hedonic benefit; humanness has a U-shaped effect on privacy risk; personalization, connectivity, and linkage positively impact privacy risk; multimodal control negatively impacts privacy risk; and SNI positively moderates the effects of smart interactions on stickiness intention. The study enriches and expands the literature on smart interactions in the context of AIoT and offers practical implications for AVA service providers and developers to design or optimize smart interactions for AI interactive services. By examining the double-edged sword effects of personalization and humanness, our findings offer novel insights into the privacy calculus in smart interactions.

1. Introduction

The applications of artificial intelligence (AI) technologies and the usage of smart voice entities have opened a cutting-edge research field for scholars and practitioners [1,2,3]. AI is the extent of intelligence provided through digital interfaces or algorithm capacity to replicate human behaviors [4,5]. Voice-based interaction devices (e.g., Amazon Alexa and Alibaba TmallGenie) have entered millions of households, and smart interactions that integrate voice interaction are regarded as a novel interaction paradigm among AI, users, content, processes, and machines in the artificial intelligence of things (AIoT).

Artificial intelligent in-home voice assistants (AVAs) often comprise a digital camera, sensitive microphones, a touch screen, and interfaces that enable customers to conduct various tasks employing interactive voice control, gesture control, and eye control [1]. AVAs are becoming part of the everyday lives of users [6] who work with AI-based machines or devices, such as air conditioners, refrigerators, fully automatic washing machines, and smart rice cookers. In the AVA context, smart interaction refers to the extent to which AVAs, users, machines, and content act on each other, communication media, and information, and the extent to which such impacts are synchronized in human–AI–machine interactions [7]. Through these interactions, such AVAs empower consumers to control in-home devices, conduct video chats, hear classical music, order takeout foods, and listen to audio novels. AVAs have become a novel communication channel and can connect with brands and users [1], which has piqued the interest of academics and service providers [1].

Unlike conventional devices or applications (e.g., chatbots), AVAs expand their functions beyond enhancing performance and efficiency at work to aiding individual tasks in daily life by interacting with users in real time [8,9]. They can greatly increase the efficiency or performance of consumers by conducting various tasks and interacting with users, machines, and content [7]. Furthermore, AVAs resemble personal assistants to users, which enhances consumers’ utilitarian and hedonic benefits but increases privacy risks [10]. During the interactions, AVAs must collect users’ private information to optimize their algorithms and provide better service, but users frequently see this as a threat to privacy [1]. The personalization–privacy dilemma (PPD) examines the trade-offs between personalization and risks (i.e., privacy risk) in many research disciplines [11,12,13,14], including the adoption of novel technologies, social network sites, and social media. Existing studies on voice assistants have demonstrated the effects of technology qualities, such as perceived usability and perceived utility [15]. Prior works have also identified essential factors in determining the usage of AVAs, such as enablers, obstacles [6,16], and customer engagement [17]. However, studies on how various subdimensions of smart interactions with AVAs influence stickiness intention are scarce, and some scholars have called for more research to unfold the effects of smart interactions between users, content, machines, and AIs [17]. Additionally, users are vulnerable to the normative influence (NI) of their relatives, friends, and other important persons in the context of smart homes, yet few studies have paid attention to its effects on the stickiness intention of users. We therefore ask three questions: (1) how do smart interactions with AVAs enhance stickiness intention via users’ benefits and risks? (2) how does AVAs’ humanness (as a game-changer) influence stickiness intention by transforming benefits and risks? and (3) how does susceptibility to normative influence (SNI) moderate the relationships between smart interactions and stickiness intention?

To address the three questions, we established a model and hypotheses to investigate how smart interactions with AVAs enhance stickiness intention by extending the privacy calculus theory (PCT). First, we echo existing studies on newly developed, interactive, and personalized technologies (e.g., AI) in interactive marketing [8,10,15,16,18] to investigate the subdimensions of smart interactions, namely AI–human interaction (such as personalization, responsiveness, multimodal control, and humanness), AI–content interaction (connectivity), AI–machine interaction (linkage), and human–human interaction (communication), which offers an all-round picture of smart interactions with AIs. Second, we use PCT to investigate the effects of smart interactions on benefits and risks, providing a comprehensive picture of how smart interactions affect stickiness intention toward AIs. Third, we find that humanness has a U-shaped effect on privacy risk, enriching the literature on PCT. We examine the “double-edged sword effects” of personalization and humanness and test their negative and positive effects, which extends the findings of Lavado-Nalvaiz et al. [1] and Uysal et al. [18]. Finally, we highlight the moderating role of SNI in the effects of smart interactions on the stickiness intention of users, enriching the literature on SNI in the AIoT context [17,19].

The following sections review the literature, develop the hypotheses, explain the research method and data collection process, present the data analysis and results, discuss the implications, and describe the limitations.

2. Theoretical Background

2.1. Artificial Intelligent In-Home Voice Assistant (AVA)

AVAs are systems, methods, and programs that display intelligence and use voice recognition and synthesis to promote natural user–machine interactions [20]. They are changing and evolving traditional human–computer interactions [17,21]. Distinct from traditional AI applications and based on large language modeling technologies (LLMT), AVAs adopt cutting-edge natural language processing (NLP) and machine learning abilities to conduct natural interactions with users and machines together in real time in the AIoT context [17]. Traditional AI applications can only mechanically interact with humans or machines through automation, and users cannot control and use machines via AI based on multimodal inputs (such as human voices, text, touches, and gestures). The technological characteristics of AVAs also differ from those of traditional computer technologies, including chatbots, websites, mobile apps, and social media [17]. By embedding and employing distinct and diverse interactive features, AVAs are capable of handling such multimodal inputs to conduct natural communications with users and machines. As a result, user–AI interaction can feel like communicating with a real person. Past studies on voice assistants focused on the antecedents of user acceptance [6,8,16,21], attitude [22], fairness perception of the evaluation [23], brand loyalty [24], consumer attachment to artificial intelligence agents [25], satisfaction [26,27], behavioral intention [26], consumer engagement [17,28], continuance usage intention [1,9,29], privacy information disclosure or protection [4,29,30], and purchase intention [21,26,29], but few studies focused on smart interactions among AVAs, users, machines, and content [31]. Thus, previous works explicating human–machine interaction with technologies could not interpret how smart interactions impact stickiness intention. Therefore, drawing on PCT, we aim to uncover how smart interactions with AVAs impact stickiness intention via benefits or privacy risk.

2.2. Smart Interactions in the AIoT Context

Existing research indicates that interaction (or interactivity) has been defined from four distinct vantage points: as a characteristic of technology, as a process of information exchange, as an experience of using technologies, and as a mix of the first three [32,33].

First, previous studies have categorized interactions into three kinds: human–machine, human–human, and human–content [34]. Users perceive human–machine interaction by employing AVAs’ functions, using voice control and gesture control to seek and access personal information, conducting voice shopping, and playing music and videos. Human–content interaction, in turn, involves users reading and experiencing content posted by others on a knowledge-sharing platform (e.g., the Zhihu app in China).

Second, interaction (interactivity) is a multidimensional construct [34] that has been divided into many subdimensions in different contexts. Even though scholars have referred to the aspects of interaction differently, the four characteristics most frequently recognized in prior research are personalization, control, responsiveness, and communication (connectivity or connectedness) [31,32].

However, as technologies advance and new mediums evolve, interactive ways, content, abilities, and processes vary from one medium to another, suggesting that the concept and dimensions of interaction are still changing [34]. AVAs significantly differ from traditional technologies, platforms, or devices (e.g., computers, apps, and systems) regarding usage scenarios. Therefore, smart interaction differs from traditional interaction in terms of medium, interfaces, types, ways, command forms, input tools, and goals (see Table 1, Figure 1 and Figure 2).

Table 1.

Smart interaction vs. traditional interactivity.

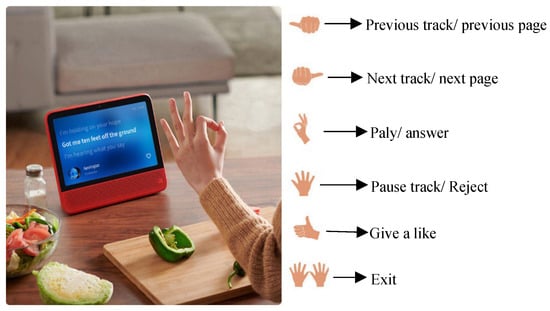

Figure 1.

An example of implementing multimodal control using gestures.

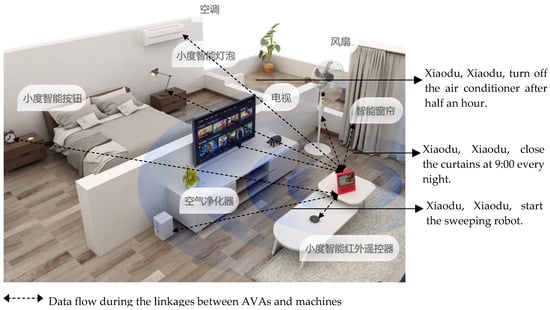

Figure 2.

Examples of implementing linkage by using AVAs.

Following Jeong & Shin [11], we argue that smart interactions consist of AI–human interaction, AI–machine interaction, AI–content interaction, and human–human interaction [7]. (1) For the interaction medium, distinct from traditional interactivity, users can experience human–AI interactions by interacting with AVAs, in which AI can conduct self-learning to learn consumer needs or requirements continuously and whose algorithms can adapt to user needs to serve them better [35]. This enhances the personalization of these interactions. (2) For the user interface, general information technologies enable users to adopt a CLI or GUI to conduct traditional interactivity, but AI enables users to conduct smart interactions using NUI and ACI. (3) For input tools, users can input command lines by using keyboards and mice or input graphical representations by employing a touch screen in the context of traditional interactivity, but in the AVA context, individuals can employ multimodal inputs (e.g., voices and gestures; see Figure 1) to conduct smart interactions by using the cameras, microphones, sensors, and touch screens of AVAs, which serves users better and makes interaction more accessible.

In sum, the notion of interaction is changing as technology develops, and AIs are changing the nature of interaction. As a human-centered AI system, AVAs can interact and connect with users, machines, and content to provide personalized service or information for users based on active self-learning and multimodal inputs [31], as shown in Figure 2.

Therefore, based on the human-in-the-loop (HITL) approach [36], we define smart interaction as the extent to which AVAs, users, machines, and content act on each other, communication media, and information, which enables users to change the forms or content of an AI-mediated environment and to perform various interactive tasks conveniently and quickly via multiple inputs (e.g., voices, gestures, haptics, eyes), and the extent to which such impacts are synchronized in human–AI–machine interactions [7]. For example, linkage refers to the degree to which machines are interconnected with AVAs to perform various tasks for users [7], as shown in Figure 2.

2.3. Computers as Social Actors (CASA)

CASA has become an important paradigm for studying individuals’ social responses to computers [37] and is employed as the theoretical foundation to illustrate the cognition and social responses of humans to machines or computers in the HCI context [38]. The CASA paradigm states that when technologies (e.g., computers) display humanness cues through humanization techniques, users tend to feel that computers are closer to humans and perceive them as social actors [39]. According to the media equation theory (MET), individuals unconsciously adopt the same rules employed in human–human connection and view computers as peers in social interactions. Because computers can employ natural languages to interact with users, who feel the interactions resemble those with real humans [37], computers (e.g., AVAs) are often regarded as social entities. Users expect AVAs to be empathic and to have good social responses like humans and will treat them as social actors. Therefore, the CASA paradigm is employed to explain and underpin our understanding of the user communication process in smart interactions with AVAs in our study.

2.4. Privacy Calculus Theory

According to the privacy calculus theory (PCT), individuals decide whether to disclose personal information by weighing the expected rewards against the dangers [40]. PCT is often used to explain the attitudes, beliefs, intentions, and behaviors of IT users when the usage of IT involves the cost of privacy risks [41]. According to PCT, behavioral intention is influenced not only by projected value but also by privacy risk [42,43]. Users are regarded as rational because they make their decisions on the basis of a cost/benefit trade-off and pursue utility maximization [41].

When disclosing their personal information or data, users report distinct levels of privacy risk [44]. AVAs have distinct advantages in boosting service efficiency but also pose more privacy risks [1,45]. Using AVAs could trigger users’ privacy calculus, requiring them to perform trade-offs between benefits and risks while sharing their personal data [1,46]. Thus, PCT is one of the most appropriate theories for investigating how smart interactions with AVAs affect stickiness intention via benefits or privacy risk.
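As a hedged illustration of this trade-off, the privacy calculus can be written as a linear relation between stickiness intention and the two benefits and the risk. The symbols below (SI, UB, HB, PR, and the coefficients) are notation assumed here for exposition only; they are not the estimated model, and the negative sign on privacy risk is simply what PCT would lead one to expect.

```latex
% Illustrative notation only (an assumption for exposition):
%   SI = stickiness intention, UB = utilitarian benefit,
%   HB = hedonic benefit,      PR = privacy risk.
\begin{equation*}
  \mathrm{SI} \;=\; \beta_0 \;+\; \beta_1\,\mathrm{UB} \;+\; \beta_2\,\mathrm{HB} \;-\; \beta_3\,\mathrm{PR} \;+\; \varepsilon,
  \qquad \beta_1,\ \beta_2,\ \beta_3 > 0 .
\end{equation*}
```

Under this sketch, a smart interaction feature that raises both a benefit and privacy risk (for example, personalization) has a net effect on SI that depends on the relative sizes of the coefficients, which is the sense in which such features are "double-edged".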

2.5. The Personalization–Privacy Paradox (PPP) Framework

Personalization can help users complete activities faster and live better lives by providing effective, efficient, and reliable information and services during the AI–human contact process [5,47]. However, users regard privacy as a vital concern, and privacy risk is the degree to which users perceive a prospective loss caused by the disclosure of personal information [48]. This tension is referred to as the personalization–privacy paradox [49]. When interacting with AVAs, personalization could raise consumers’ anxieties and privacy risks about their private data being recorded, retained, and shared [50].

3. Research Framework and Hypotheses

3.1. The Effects of Smart Interactions on Utilitarian Benefit

3.1.1. The Effects of AI–Human Interaction on Utilitarian Benefit

Humanness refers to the extent to which an object exhibits humanlike attributes, including mind, emotion, behaviors, and a sense of humor [51]. Söderlund & Oikarinen [52] also defined humanness as the extent to which an object possesses human-like features. Some existing studies regarded humanness as a social dimension in human–machine interaction [53]. Existing studies have shown that anthropomorphism influences the effort expectancy of users toward AI devices [54], and that users humanize AVAs as private assistants or secretaries to finish their daily tasks at home, including inquiring about traffic and weather conditions or setting alarms and reminders [9]. Mishra et al. [9] also found that anthropomorphism can enhance utilitarian attitudes. Aw et al. [10] further pointed out that human-like attributes and technology features affect smart-shopping perception. Thus,

H1a:

Humanness positively impacts utilitarian benefit.

User control refers to the degree to which users perceive their capacity to voluntarily determine where to go, with whom to communicate, what features to use, and how to use the content of websites [55]. Existing research has revealed that user control is critical to human–machine interaction [56]. Yoo et al. [56] similarly found that user control can enhance utilitarian value. Users can employ AVAs to finish their tasks more quickly by using hands-free voice control, eye control, or gesture control, thereby gaining utilitarian benefit. Therefore,

H1b:

Multimodal control positively impacts utilitarian benefit.

Personalization is the technique of tailoring web content to meet the individual demands of users and optimize transaction opportunities [57]. Personalization positively impacts the perceived usefulness of the site [58]. Companies can employ personalization strategies to provide consumers with tailored information that is consistent with their needs, preferences, or behavioral habits, which can improve users’ efficiencies in gathering and processing information and enhance the perceived utilitarian value and usefulness of products or services during shopping [59]. When interacting with AVAs, users can acquire personalized, useful, or utilitarian information to meet their requirements [21]. Additionally, AVAs allow users to customize their personalized and useful baby training plans according to the kids’ ages, bringing convenience and positive impacts to family education. Furthermore, the Baidu maps assistants via AVAs have assistive functions in which users can customize their shortest traffic routes during navigation [17]. Thus, we provide the hypothesis:

H1c:

Personalization positively affects utilitarian benefit.

Responsiveness is the degree to which websites participate in and reply swiftly to user requests and the degree to which users may obtain rapid and effective responses from customer service [60,61]. Yuan et al. [62] reported that responsiveness enhances utilitarian value. We anticipate that AVAs or smart speakers will be able to respond quickly and effectively to a user’s query, instruction, or request, increasing users’ perceived usefulness of AVAs. For example, AVAs can give immediate and accurate responses to users’ questions, which causes users to feel that AVAs and their in-time responses are valuable and helpful for them. Therefore,

H1d:

Responsiveness positively impacts utilitarian benefit.

3.1.2. The Effect of AI–Content Interaction on Utilitarian Benefit

Connectivity refers to the extent to which AVAs can connect with the content from multiple websites or apps to provide various audio-visual resources for users [7]. Huang & Rust [31] noted that machines, humans, and objects are all interconnected and found that data flows are shared ubiquitously, facilitating learning [7]. Ubiquitous connection is regarded as the belief that a person is always and everywhere connected to others [63]. Instant connectivity positively impacts perceived usefulness in understanding and employing a given technology [64]. The connectivity may involve machine-to-machine connection, machine-to-human connection, and human-to-physical environment connection [65,66,67]. Following the logic, AVAs can connect with the content from massive websites or apps to obtain rich audio and video resources and further facilitate individuals to perform various tasks [7], enhancing users’ perceived practical benefit for AVAs. Thus,

H1e:

Connectivity positively impacts utilitarian benefit.

3.1.3. The Effect of AI–Machine Interaction on Utilitarian Benefit

Linkage refers to the degree to which machines are interconnected with AVAs to perform various tasks for users [7]. In addition, the data flow shared ubiquitously facilitates the connection between AVAs and machines. First, when Amazon’s Alexa connects with an air-conditioner, the air-conditioner can take a user’s commands and change the air temperature and humidity within his or her house. Second, AVAs can connect with the machines or sensors that users possess and control them to finish daily tasks. For example, constant connectivity allows users to obtain reports on various aspects of the physical environment (e.g., room temperature), giving them access to a large amount of data and turning customers into well-informed users. Thus,

H1f:

Linkage positively impacts utilitarian benefit.

3.1.4. The Effect of Human–Human Interaction on Utilitarian Benefit

Communication refers to the degree to which users perceive that the technology promotes two-way communication between users [55,68]. Communication is an AI-mediated human–human interaction in which users can communicate with each other via AVAs to strengthen their relationships. Existing works have also revealed that communication favors personal perceptions [68]. Effective communication over technologies enhances users’ satisfaction [68]. First, AVAs enable users to conduct voice-based communication with other users, see each other via live video, and monitor their parents or children at home remotely in case of accidental personal injury or theft. Second, users can communicate with their friends, colleagues, or teachers and obtain useful information (e.g., traffic flow information and commodity information) via AVAs, making it easier for users to take part in remote work or learning activities. Thus, we propose:

H1g:

Communication positively affects utilitarian benefit.

3.2. The Effects of Smart Interactions on Hedonic Benefit

3.2.1. The Effects of AI–Human Interaction on Hedonic Benefit

Humanness explains why users label AVAs as people and seek affective support from them as friends or companions [9]. Existing studies show that humanness is a critical element in human–AVA interactions [8,56]. AVAs could master various skills (e.g., telling jokes and dancing) and conduct lively and entertaining conversations with users, which may elicit enjoyment analogous to that of chatting with humans and enhance users’ hedonic perception [62]. Humans can perceive a sense of social connection, belonging, and intimacy by humanizing non-human objects. These social ties also make people happier and healthier [69]. According to Mishra et al. [9], anthropomorphism will improve users’ hedonic attitudes. Yuan et al. [62] also found that anthropomorphism positively impacts hedonic benefit. We therefore expect that humanness will enhance users’ hedonic benefit and posit:

H2a:

Humanness positively influences hedonic benefit.

Previous studies on interactivity have shown that providing user control to individuals is essential for their physical or psychological well-being [33]. Lunardo & Mbengue [70] also claimed that control is crucial for users to enjoy their experience. Kim et al. [71] suggested that perceived control positively affects game enjoyment. Horning [72] pointed out that perceived control positively affects news enjoyment. In a smart home, users can send voice messages (e.g., “Tmall genie, please play some soft music”) or employ hand gestures to AVAs and request AVAs to play classical or light music and control them as they wish, which helps users to relax and gives them enjoyable and immersive experiences. Therefore, we propose:

H2b:

Multimodal control positively affects hedonic benefit.

Personalized recommendation systems automatically track, collect, and use consumers’ personal information authorized by users to deliver tailored advertisements according to user profiles [73]. When consumers receive personalized information that they perceive as pleasant and likable, they will believe that the information fits their requirements, and their level of enjoyment will increase [74]. Kim & Han [74] suggested that personalization positively affects the perceived entertainment of advertising. Wang et al. [75] noted that tourist recommendation systems can recommend personalized and relevant points of interest to enhance enjoyable trip experiences when user profiles are utilized correctly and effectively. Following the logic, we argue:

H2c:

Personalization positively impacts hedonic benefit.

Responsiveness involves the extent to which mediums participate and respond promptly to users’ requests [61]. According to Cyr et al. [76], responsiveness can boost enjoyment. Horning [72] pointed out that the perceived responsiveness of second-screen users positively affects news enjoyment. In the smart home context, AVAs can respond promptly to users’ voice requests (e.g., “Tmall genie, please play some light music”), facilitating users to enjoy wonderful music. Therefore, we propose:

H2d:

Responsiveness positively affects hedonic benefit.

3.2.2. The Effect of AI–Content Interaction on Hedonic Benefit

Huang & Rust [31] argued that users can connect with objects (e.g., content). Users gain happiness and pleasure from continuous connection with internet material via mobile SNS use, and ubiquitous connectivity improves enjoyment [67]. Smart products can be authorized to connect and interact with each other without direct interaction from users [65], which offers enjoyable and fun programs or other services for users. Firstly, AVAs could connect with entertainment content from various websites (e.g., TV series and movies on the Tencent video website) and can receive voice commands to stream wonderful and relaxing music for tired users after a day’s work. Secondly, AVAs can connect with rich game resources to enable users to play enjoyable games. Thirdly, AVAs can connect with rich video resources from online audio-visual entertainment platforms (e.g., Bilibili, a video-sharing app), offering enjoyable and memorable online experiences for users. As a result, we anticipate that the connection between AVAs and content will enhance user hedonic benefits. Taken together, we predict:

H2e:

Connectivity positively impacts hedonic benefit.

3.2.3. The Effect of AI–Machine Interaction on Hedonic Benefit

Linkage is the degree to which machines are all interconnected with AVAs to carry out various duties for users, and ubiquitous data flow enables the interconnection of AVAs and machines. First, AVAs can connect with other in-home entertainment devices (e.g., smart televisions, projectors) by using image projection technology based on a high-definition multimedia interface (HDMI) to provide large-screen movie-watching and entertainment for users, which will serve them better and heighten their pleasure. Second, AVAs can connect with smart robots to play games with children. Third, as smart home central consoles, AVAs can connect with many household appliances (e.g., smart curtains) by voice control and gesture control to finish various fun tasks, helping users enjoy their leisure time. Thus, we predict that the linkage between AVAs and machines will improve hedonic benefits. Therefore,

H2f:

Linkage positively affects hedonic benefit.

3.2.4. The Effect of Human–Human Interaction on Hedonic Benefit

Communication is characterized as the degree to which the technologies improve two-way communication among users or between users and AVAs [32,55]. Effective communication will improve users’ satisfaction with the technology [68]. First, users can share enjoyable moments of their lives with other family members (e.g., children and parents) by using video calls, VR, and AR via AVAs. Second, during voice interaction, users can chat with their friends through AVAs about entertainment news, glamorous clothing, and movie information, which provides a pleasurable experience. For instance, users can invite their companions to watch and listen to online concerts after work via AVAs and share a great time with partners, which helps users relax and clear their minds. Therefore,

H2g:

Communication positively affects hedonic benefit.

3.3. The Effects of Smart Interactions on Privacy Risk

Uncanny valley theory (UVT) suggests that humanness may elicit users’ emotional and cognitive responses [1]. As the humanness of AVAs increases, AVAs become more likable and more empathetic, enhancing users’ affective and cognitive reactions to them [77]. AVAs are perceived as human-like, but they cannot perfectly mimic persons, so UVT predicts a U-shaped effect [1]. Humanlike features are regarded as positive and reduce privacy risk (PR) up to a point; beyond that point, they are perceived as unsettling and disturbing, eliciting fear and distrust [77]. More humanness might cause users more worries about private information [78]. Uysal et al. [18] also noted that AVAs’ humanness could increase data privacy concerns. When AVAs have too many humanlike characteristics, they look more like humans, for example by displaying a mind and will of their own. This causes users to feel that they will lose control over these devices, boosting the perceived risk of misuse of their personal information or data. Additionally, low-level humanness of AVAs reduces costs because the higher trust and social presence it generates weaken PR, whereas highly humanlike voices may confuse users about whether the AVA is human, which may enhance distrust in AVAs. Thus,

H3a:

Humanness has a U-shaped effect on privacy risk.
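A U-shaped relationship of this kind is commonly probed by adding a squared term to a regression and checking that its coefficient is positive and that the turning point lies inside the observed range. The sketch below illustrates that idea only; it is not the estimation procedure used in this study, the data are synthetic, and the function names (`fit_quadratic`, `u_shape_check`) and the 1 to 7 scale are assumptions made for the example.

```python
def solve_linear_system(a, b):
    """Solve a*x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_quadratic(h, pr):
    """Least-squares fit of pr = b0 + b1*h + b2*h**2 via the normal equations."""
    s = [sum(v ** k for v in h) for k in range(5)]               # sums of h^k
    t = [sum(y * v ** k for v, y in zip(h, pr)) for k in range(3)]
    a = [[s[i + j] for j in range(3)] for i in range(3)]
    return solve_linear_system(a, t)


def u_shape_check(h, pr):
    """Report the quadratic coefficient and whether the minimum is in range."""
    b0, b1, b2 = fit_quadratic(h, pr)
    if b2 == 0:
        return {"b1": b1, "b2": b2, "turning_point": None, "u_shaped": False}
    turning_point = -b1 / (2 * b2)
    inside = min(h) < turning_point < max(h)
    return {"b1": b1, "b2": b2, "turning_point": turning_point,
            "u_shaped": b2 > 0 and inside}


# Synthetic, noise-free data (hypothetical 1-7 humanness scale):
# privacy risk falls until humanness = 4, then rises again.
humanness = [1 + 0.5 * i for i in range(13)]
privacy_risk = [5 - 1.6 * v + 0.2 * v * v for v in humanness]

result = u_shape_check(humanness, privacy_risk)
print(result)
```

With real survey data the coefficients would carry sampling error, so a positive squared term alone is weak evidence; one would also want the slope to be significantly negative at the low end of the scale and significantly positive at the high end.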

When users have control over how personal information is disclosed and used, their context-specific concerns about privacy violations by certain external agents can be reduced [79]. To lessen or stop the negative effects of potential privacy invasions, users rely on user control, which can reduce their uncertainty when shopping online [43]. When interacting with AVAs with screens, users can employ the privacy control settings to control or mitigate privacy risk. Firstly, AVAs with screens may allow users to control private voice recordings, and users can view, hear, and delete them at any time. Secondly, users can turn off the camera or microphone of some AVAs with a button press that disconnects the camera or microphone and temporarily stops the use of AVAs. Most AVAs include a built-in shutter, allowing users to cover their cameras to easily protect their private information. Therefore,

H3b:

Multimodal control negatively impacts privacy risk.

Personalization would elicit perceived danger of information exposure [13], and can elicit privacy risks [12,80]. Personalization needs more personal information disclosure that is beyond users’ control, which would significantly cause users’ worries about personal data privacy [1,46]. Users may believe they will lose control of their personal data and AVAs, increasing their privacy risk. High-level customization might not be enough to make up for the privacy invasion, which may enhance the privacy risk associated with employing AVAs. Therefore, the higher the personalization is, the more privacy risk users perceive. Hence:

H3c:

Personalization positively impacts privacy risk.

Connectivity indicates that users can access rich content from third-party websites anytime and anywhere at home [67]. AVAs can offer users suitable content based on their voice requests, but they also collect users’ personal information and may expose it to others, since users may be requested to provide personal information (e.g., authorizing access to address books). This elicits users’ concerns about leaking private information that they are reluctant to provide to strangers and fear may be misused [81]. For example, when users buy takeaway food via AVAs by using location-based services, they are requested to provide their home addresses to third-party takeaway websites, which elicits worries about the misuse of their private information. Therefore, we propose:

H3d:

Connectivity positively impacts privacy risk.

Linkage indicates that AVAs need to connect with machines supplied by third-party service providers to offer better services for users, which may lead to the leakage of users’ private information. For instance, to provide remote care for children and the elderly at home, AVAs need to link with smart cameras so that users who are at work can monitor the video images in real time through a browser. These cameras, provided by third-party companies, detect falls of the elderly at home by using AI-based deep learning image technologies and help users to call for first aid. This may leak users’ private video information during the use of AVAs and then cause users to worry about scammers’ misuse of the information. Therefore, we propose:

H3e:

Linkage positively impacts privacy risk.

3.4. The Effects of Privacy Calculus

Stickiness intention (SI) refers to users’ willingness to use AVAs more frequently or for longer periods in the future [82]. Utilitarian benefit focuses on functional, instrumental, and cognitive benefits, but hedonic benefit reflects the affective benefits of users, including fun and enjoyment [83]. McLean & Osei-Frimpong [19] revealed that utilitarian benefits positively impact the usage of in-home voice assistants. AVAs can offer useful news, real-time stock status updates, accurate weather forecasts, oncoming road condition statuses, schedule reminders, and other information to users via voice commands. Hedonic benefits will promote using in-home voice assistants [19]. Gursoy et al. [54] reported that hedonic motivations positively affect the perceived performance expectancy of AI. Hedonic and utilitarian attitudes favorably impact the adoption of AVAs [9]. Canziani & MacSween [20] also found that hedonic perceptions can enhance the intention to voice order by using smart home devices. AVAs can offer users valuable information or a joyful experience, encouraging them to keep using AVAs. Therefore, we assume:

H4a,b:

Utilitarian benefit (a) and hedonic benefit (b) positively impact stickiness intention.

Chellappa & Sin [42] argued that privacy concerns negatively affect the likelihood of using personalization services. Following this logic, we predict that AVAs with screens embedded into smart speakers can record users’ voiceprint information, enable monitoring and video recording, and may even send private information to third-party platforms and individuals when they wrongly recognize users’ voice commands, which will reduce users’ stickiness intention. Therefore, we propose:

H5:

Privacy risk negatively affects stickiness intention.

3.5. The Moderating Effect of Susceptibility to Normative Influence

Susceptibility to normative influence (SNI) refers to the tendency or the need that individuals have to obtain identification with others or improve their image to significant people or the intention to conform to others’ expectations on shopping decisions [84]. SNI also indicates the degree to which customers “identify with a group to enhance their self-image and ego,” and high-SNI users tend to seek products with social benefits to obtain or maintain in-group acceptance [85]. SNI significantly affects consumers’ adoption behaviors and efforts to obtain social acceptance and results in social approval [86]. Users with high SNI will perceive an intense sense of acquiring and using products to comply with significant others’ expectations, and users with high-level SNI have a higher level of buying intention than those with low-level SNI [87].

Given that AVAs are often used at home by users together with other family members or friends, normative influence will change their usage behaviors in smart interactions. Users value not only individual needs but also family or social needs, so impulsive shopping will increase when users consider the desire to purchase or use for other family members or the need to interact or communicate with friends. Accordingly, we predict that smart interactions have stronger effects on stickiness intention for high-SNI users than for low-SNI users. Therefore,

H6a–g: SNI moderates the effect of smart interactions (humanness (a), multimodal control (b), personalization (c), responsiveness (d), connectivity (e), linkage (f), and communication (g)) on stickiness intention, that is, the higher the users’ SNI, the stronger the positive impact of smart interactions on SI.

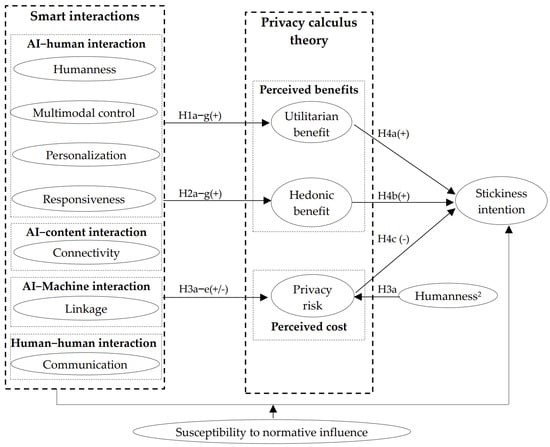

To sum up, we construct our model (see Figure 3).

Figure 3.

Research model.

4. Research Method

Based on PCT and the personalization–privacy paradox (PPP), the authors used a survey to examine the impacts of smart interactions on stickiness intention via benefits and privacy risk, and to test the moderating role of SNI.

4.1. Measurement Scales

The authors operationalized the constructs using items modified from past studies (see Table 2) and measured the variables using 7-point Likert scales. We measured personalization by four items from Li & Yeh [88] and Xue et al. [58]; responsiveness by four items from Wu & Wu [60]; multimodal control by six items from Gao et al. [89] and Wu & Hsiao [90]; linkage (LK) and connectivity (CN) each by two items from Xue et al. [7]; communication by four items from Lee [91] and Xu et al. [92]; and humanness (HU) by two items from Fernandes & Oliveira [16]. We revised the items of SNI from Bearden et al. [93] and Mangleburg et al. [94].

Table 2.

Measurement items of partial constructs in this study.

4.2. Sample and Data Collection

We collected the data via www.wjx.com (accessed on 1 May 2022) (a social media app in China) over six weeks starting in May 2022. The data were gathered from 450 consumers in China. After deleting 21 invalid samples that contained missing values or failed the screening questions, 387 valid responses were retained from 408 completed surveys. We employed IBM SPSS v.26.0 to store, analyze, and handle the demographic information of this study. In this study, 90.18% of the respondents had used AVAs for at least three months and could therefore offer insight into the factors of smart interactions. The final sample was 50.65% male, and most respondents were between 26 and 40 years of age (77.52%). Most of the respondents had a bachelor’s degree (63.57%) and used AVAs; 51.68% of the respondents reported a monthly income higher than 7000 RMB. Table 3 presents the demographic breakdown of the respondents.

Table 3.

Demographics information (n = 387).

5. Data Analysis and Results

We employed IBM SPSS v.26.0 and SmartPLS v.3.2.8 to analyze the data using PLS-SEM. We chose PLS-SEM because it is robust to non-normal data and is suitable for developing theory and maximizing the explained variance in our study. The method has been widely employed in previous studies [8,36,49] and is suitable for prediction-based models that focus on identifying critical predictors or driver constructs.

5.1. Common-Method Variance Bias Test (CMV)

We used two methods to control CMV. First, Harman’s single-factor test was conducted, and we found that the first factor explained 31.982% of the variance in the data, below the cut-off value of 40% [99], which indicated the absence of CMV. Second, we employed an unmeasured latent method factor approach to check common method bias (CMB) [100]. The average variance explained by the substantive constructs (0.676) is much larger than that explained by the common method factor (0.003), and the ratio between them is 228:1, indicating that CMB was not a threat in this study.
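As an illustration of the first check, the sketch below computes the share of total variance captured by the first unrotated principal component of the item correlation matrix, which is one common way to run Harman’s single-factor test. It is a minimal sketch and not the SPSS procedure used in this study: the function name `first_factor_share`, the simulated 387 × 12 item matrix, and the three-factor structure are all hypothetical stand-ins for the real survey items.

```python
import numpy as np

def first_factor_share(items: np.ndarray) -> float:
    """Share of total variance captured by the first unrotated principal component."""
    corr = np.corrcoef(items, rowvar=False)   # item-by-item correlation matrix
    eigvals = np.linalg.eigvalsh(corr)        # eigenvalues in ascending order
    return float(eigvals[-1] / eigvals.sum())

# Hypothetical data: 387 respondents, 12 items driven by three independent factors.
rng = np.random.default_rng(0)
factors = rng.normal(size=(387, 3))
loadings = np.kron(np.eye(3), np.ones((1, 4))) * 0.8   # shape (3, 12)
items = factors @ loadings + rng.normal(scale=0.6, size=(387, 12))

share = first_factor_share(items)
print(f"first-factor share = {share:.3f}; below the 0.40 cut-off: {share < 0.40}")
```

A share below the cut-off is read as no single factor dominating the covariance among the items; the 40% threshold is the one stated in the text.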

5.2. Measurement Model Analysis

This study examined the reliability and convergent validity of all the constructs. First, Cronbach’s alpha coefficients ranged from 0.775 to 0.941 (see Table 4), all exceeding the threshold value of 0.7 [101]. Second, the composite reliability (CR) values ranged from 0.843 to 0.955, exceeding 0.7 (see Table 4). Third, all the values of the average variance extracted (AVE) were higher than 0.5 (see Table 4), indicating good convergent validity.
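The three statistics above follow standard formulas, which can be sketched as below. This is a hedged illustration rather than the SmartPLS output: the function names are ours, and the loadings `[0.80, 0.85, 0.90]` are hypothetical values chosen only to show the arithmetic. CR and AVE are computed from standardized loadings, assuming each item's error variance equals one minus its squared loading.

```python
import numpy as np

def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha from a respondents-by-items score matrix."""
    k = items.shape[1]
    sum_item_var = items.var(axis=0, ddof=1).sum()
    total_var = items.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1 - sum_item_var / total_var))

def composite_reliability(loadings) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    lam = np.asarray(loadings, dtype=float)
    num = lam.sum() ** 2
    return float(num / (num + (1 - lam ** 2).sum()))

def average_variance_extracted(loadings) -> float:
    """AVE = mean of the squared standardized loadings."""
    lam = np.asarray(loadings, dtype=float)
    return float((lam ** 2).mean())

# Hypothetical standardized loadings for one three-item construct.
example_loadings = [0.80, 0.85, 0.90]
cr = composite_reliability(example_loadings)
ave = average_variance_extracted(example_loadings)
print(f"CR = {cr:.3f} (> 0.7), AVE = {ave:.3f} (> 0.5)")
```

With these illustrative loadings both values clear the thresholds cited in the text (0.7 for CR, 0.5 for AVE).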

Table 4.

Discriminant validity of measures used in measurement and correlation matrix.

Discriminant validity was examined by employing two methods. First, the square root of the AVE of every construct was higher than its correlations with the other constructs [101], indicating good discriminant validity (see Table 4). Second, we examined the heterotrait-monotrait (HTMT) ratio, and the HTMT values for all pairs of constructs were smaller than the threshold value of 0.85, suggesting good discriminant validity.
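To make the second check concrete, the sketch below computes an HTMT ratio for one pair of constructs as the mean between-construct item correlation divided by the geometric mean of the two average within-construct item correlations. It is an assumption-laden illustration, not the SmartPLS routine: the function `htmt`, the simulated item matrices, and the latent correlation of 0.5 are hypothetical, and each construct is assumed to have at least two items.

```python
import numpy as np

def htmt(items_a: np.ndarray, items_b: np.ndarray) -> float:
    """HTMT ratio for two constructs, each given as a respondents-by-items matrix."""
    ka, kb = items_a.shape[1], items_b.shape[1]
    corr = np.abs(np.corrcoef(np.hstack([items_a, items_b]), rowvar=False))
    hetero = corr[:ka, ka:].mean()                              # between-construct
    mono_a = corr[:ka, :ka][np.triu_indices(ka, k=1)].mean()    # within construct A
    mono_b = corr[ka:, ka:][np.triu_indices(kb, k=1)].mean()    # within construct B
    return float(hetero / np.sqrt(mono_a * mono_b))

# Hypothetical data: two latent constructs correlated at 0.5, three items each.
rng = np.random.default_rng(1)
n = 387
z1, z2 = rng.normal(size=n), rng.normal(size=n)
f_a = z1
f_b = 0.5 * z1 + np.sqrt(0.75) * z2
items_a = 0.8 * f_a[:, None] + rng.normal(scale=0.6, size=(n, 3))
items_b = 0.8 * f_b[:, None] + rng.normal(scale=0.6, size=(n, 3))

ratio = htmt(items_a, items_b)
print(f"HTMT = {ratio:.3f}; below 0.85: {ratio < 0.85}")
```

In this simulated case the ratio should land near the latent correlation built into the data, well under the 0.85 threshold used in the text.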

5.3. Structural Model Analysis

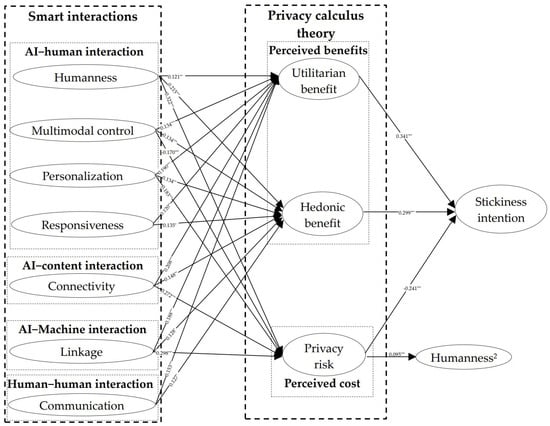

To test the hypotheses, we estimated the structural model using SmartPLS 3.2.8 and summarized the path coefficients in Table 5. The results provided support for the hypotheses in this study (see Table 5 and Figure 4) and suggested that smart interactions affect stickiness intention via utilitarian benefit, hedonic benefit, and privacy risk.

Table 5.

The results of path analysis.

Figure 4.

Structural model result. Notes: * = p < 0.05, ** = p < 0.01, *** = p < 0.001.

First, the dimensions of smart interactions positively affect utilitarian benefit (H1a: HU→UN, β = 0.121, p < 0.01; H1b: MC→UN, β = 0.134, p < 0.001; H1c: PE→UN, β = 0.190, p < 0.001; H1d: RE→UN, β = 0.120, p < 0.01; H1e: CN→UN, β = 0.208, p < 0.001; H1f: LK→UN, β = 0.188, p < 0.001; and H1g: CO→UN, β = 0.153, p < 0.001; see Figure 4), providing support for H1a–g.

Second, the dimensions of smart interactions positively affect hedonic benefit (H2a: HU→HE, β = 0.215, p < 0.001; H2b: MC→HE, β = 0.134, p < 0.001; H2c: PE→HE, β = 0.134, p < 0.01; H2d: RE→HE, β = 0.135, p < 0.05; H2e: CN→HE, β = 0.148, p < 0.01; H2f: LK→HE, β = 0.128, p < 0.05; and H2g: CO→HE, β = 0.127, p < 0.05), providing support for H2a–g.

Third, humanness has a nonlinear (U-shaped) effect on privacy risk (H3a: HU²→PR, β = 0.095, p < 0.001), providing support for H3a; multimodal control negatively impacts privacy risk (H3b: MC→PR, β = −0.170, p < 0.001), supporting H3b; personalization positively affects privacy risk (H3c: PE→PR, β = 0.183, p < 0.001), supporting H3c; connectivity positively affects privacy risk (H3d: CN→PR, β = 0.272, p < 0.001), supporting H3d; and linkage positively affects privacy risk (H3e: LK→PR, β = 0.298, p < 0.001), supporting H3e.

Fourth, utilitarian benefit (H4a: UN→SI, β = 0.341, p < 0.05) and hedonic benefit (H4b: HE→SI, β = 0.299, p < 0.001) have positive effects on stickiness intention, whereas privacy risk negatively impacts stickiness intention (H5: PR→SI, β = −0.241, p < 0.001), supporting H4a, H4b, and H5.
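The logic behind the U-shape test for H3a (a positive coefficient on the squared humanness term) can be sketched with an ordinary quadratic regression. This is a simplified stand-in for the PLS-SEM estimation reported above, not a reproduction of it: the data are simulated, and the generating coefficients (5.0, −1.2, 0.15) and the 1 to 7 humanness scale are hypothetical choices made only for illustration.

```python
import numpy as np

# Hypothetical data: humanness (HU) on a 1-7 scale and a privacy-risk (PR) score
# generated from a known U-shaped relationship plus noise.
rng = np.random.default_rng(42)
n = 387
hu = rng.uniform(1, 7, size=n)
pr = 5.0 - 1.2 * hu + 0.15 * hu ** 2 + rng.normal(scale=0.3, size=n)

# Fit PR = b0 + b1*HU + b2*HU^2; np.polyfit returns the highest power first.
b2, b1, b0 = np.polyfit(hu, pr, deg=2)

# A U-shape needs b2 > 0 and a turning point inside the observed HU range.
turning_point = -b1 / (2 * b2)
is_u_shaped = bool(b2 > 0 and hu.min() < turning_point < hu.max())
print(f"b2 = {b2:.3f}, turning point = {turning_point:.2f}, U-shaped: {is_u_shaped}")
```

Checking that the turning point lies within the observed range matters because a positive squared term alone is also compatible with a curve that only rises or only falls over the data.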

5.4. The Moderating Roles of Susceptibility to Normative Influence

The regression analyses showed that, compared with low-SNI users, HU (H6a: β = 0.091, p < 0.001; see Table 6), MC (H6b: β = 0.155, p < 0.001), PE (H6c: β = 0.181, p < 0.001), RE (H6d: β = 0.132, p < 0.001), CN (H6e: β = 0.132, p < 0.001), LK (H6f: β = 0.132, p < 0.001), and CO (H6g: β = 0.132, p < 0.001) have a stronger positive impact on SI for high-SNI users, supporting H6a–g.
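A moderation test of this kind is typically specified as a regression with an interaction term, followed by simple slopes at low and high levels of the moderator. The sketch below illustrates that specification under stated assumptions; it is not the authors' exact procedure. The helper names `fit_moderation` and `simple_slopes`, the simulated variables, and the generating coefficients are hypothetical.

```python
import numpy as np

def fit_moderation(x: np.ndarray, m: np.ndarray, y: np.ndarray) -> np.ndarray:
    """OLS of y on mean-centered x, m, and x*m; returns [intercept, b_x, b_m, b_xm]."""
    xc, mc = x - x.mean(), m - m.mean()
    design = np.column_stack([np.ones_like(xc), xc, mc, xc * mc])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef

def simple_slopes(coef: np.ndarray, m: np.ndarray) -> tuple[float, float]:
    """Slope of x on y at one SD below and one SD above the moderator mean."""
    sd = m.std(ddof=1)
    return float(coef[1] - coef[3] * sd), float(coef[1] + coef[3] * sd)

# Hypothetical data: a smart-interaction dimension (x), SNI (m), and SI (y).
rng = np.random.default_rng(7)
n = 387
x, sni = rng.normal(size=n), rng.normal(size=n)
si = 0.3 * x + 0.2 * sni + 0.13 * x * sni + rng.normal(scale=0.5, size=n)

coef = fit_moderation(x, sni, si)
slope_low, slope_high = simple_slopes(coef, sni)
print(f"interaction = {coef[3]:.3f}, low-SNI slope = {slope_low:.3f}, "
      f"high-SNI slope = {slope_high:.3f}")
```

A positive interaction coefficient corresponds to a steeper slope for high-SNI users than for low-SNI users, which is the pattern H6a–g predicts.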

Table 6.

Results of testing the moderating role of SNI.

6. Discussion and Conclusions

6.1. Major Findings

Based on PCT, this study explored how smart interactions (namely personalization (PE), responsiveness (RE), multimodal control (MC), connectivity (CN), linkage (LK), humanness (HU), and communication (CO)) affect stickiness intention via perceived benefits and privacy risk. First, the empirical results showed that smart interactions (HU, MC, PE, RE, CN, LK, and CO) positively impact utilitarian benefit (UN), which extends the finding of Huang & Rust [31] that AI can personalize service by performing logical, analytical, and rule-based learning, and that of Hsu et al. [102] that PE features positively impact UN. Second, the results demonstrated that smart interactions (PE, RE, MC, CN, LK, CO, and HU) positively impact hedonic benefit (HE). Third, humanness has a U-shaped effect on privacy risk; multimodal control can reduce users’ privacy risk; personalization positively impacts privacy risk; connectivity positively impacts privacy risk; and linkage positively impacts privacy risk. Fourth, utilitarian and hedonic benefits positively affect stickiness intention, but privacy risk negatively impacts stickiness intention. Finally, SNI positively moderates the effect of smart interactions (i.e., HU, MC, PE, RE, CN, LK, and CO) on stickiness intention.

6.2. Theoretical Implications

This study aimed to unravel how smart interactions (e.g., HU, MC, PE, RE, CN, LK, and CO) with or via AVAs influence the stickiness intention of users based on PCT and the Personalization–Privacy Paradox (PPP) framework.

First, from a theoretical lens, this study contributes to the literature on smart interactions, as it is one of the first empirical studies to construct and extend the subdimensions of smart interactions in the AIoT context, which extends the theory on interactivity (interaction) [91,103,104,105]. This study further added three subdimensions (namely, MC, LK, and HU) and constructed smart interactions in the AIoT context. This is because the traditional definition and constructs of interactivity were established in the traditional 2G network context [89], in which interactions were conducted with mice and keyboards, whereas in the human–AI interaction (HAI) and 4G (or 5G) network context, users can interact with AI through voice, gestures, haptics, eye movements, and so on.

Additionally, this study contributes to the literature on AVAs by uncovering the interactive features (e.g., MC, CN, and LK) of AVAs that are distinct from those of traditional and similar AI applications, which extends the findings of [12,32,73,85,103]. For instance, we find that MC, CN, and LK can empower users to connect and interact with AVAs and machines simultaneously, which extends the scope and object of interactions [1] in the AIoT context. By contrast, Lavado-Nalvaiz et al. [1] only explored the effects of personalization and humanization on continued usage. Existing studies on similar AI applications overlook that the ways, content, and mediums of interactions between AI and users (machines) have changed as technologies have advanced. Such a study is therefore essential, and the dimensions of smart interactions need to be reconstructed in the AIoT context [1,8,30].

Second, this work adds to the body of literature on smart interactions by verifying the effects of the subdimensions of smart interactions on UN, HE, and PR and extending the literature on PCT. Previous studies on interactivity in the HAI context focused on the factors impacting purchase intention [104,106] and engagement [17,107], but little is known about which specific subdimension of smart interaction is most efficient in stimulating UN, HE, and PR in the AIoT context. This study is one of the first theoretical and empirical works that explicates how the different subdimensions of smart interactions among AVAs and users (machines) drive UN, HE, and PR.

Third, this study contributes to the literature on stickiness intention by establishing a theoretically grounded and empirically validated framework that explains SI towards AVAs by jointly considering benefits (i.e., UN and HE) and risk (i.e., PR) [33] based on PCT. Prior works on the antecedents of SI in the HCI context have focused on user needs or motivations [19] and perceived value [62,108] rather than the antecedents of these factors; this study constructed a benefit–risk model to explain SI in the AIoT context.

Fourth, by examining the double-edged sword effects of personalization, our findings offer novel insights into the privacy calculus by offering new evidence on how smart interactions affect SI in the AIoT context. In the existing works on privacy calculus, current debates focus on two aspects: (1) existing studies shed more light on whether benefits and privacy risk contribute equally to the privacy calculus of users; and (2) previous works have highlighted the importance of exploring the boundary conditions of the calculus. In the AIoT context, users will obtain more personalized benefits by disclosing private information, initiating the risk–benefit trade-off, and eliciting the privacy calculus, which extends the findings of Hayes et al. [14] and Lavado-Nalvaiz et al. [1]. For example, Kang et al. [109] argued that users feel threats to their privacy only if personalization surpasses a certain threshold, but Lavado-Nalvaiz et al. [1] reported that personalization positively affects privacy risk. This is because previous studies have overlooked that low- to middle-level personalization (as a territorial behavior) may have a negative effect on privacy risk by enhancing psychological ownership towards AVAs, which users regard as their own personal assistants.

Fifth, extending the findings of Lavado-Nalvaiz et al. [1] and Uysal et al. [18], we further report that (1) humanness has a U-shaped effect on privacy risk, verifying the findings of Lavado-Nalvaiz et al. [1]; (2) personalization positively impacts privacy risk, which also verifies the findings of Lavado-Nalvaiz et al. [1]; (3) connectivity and linkage have positive effects on privacy risk, whereas multimodal control has a negative effect on privacy risk [1]; and (4) humanness has double-edged sword effects, which extends the literature on humanness [1,19,55,56,110].

Sixth, we verify the positive moderating role of SNI and extend the findings of Hsieh & Lee [110], which adds to the literature on SNI [85,86,111]. These findings offer some insights into the positive effects of SNI in the relationships between smart interactions and the stickiness intention of users [86].

Therefore, we investigate the different effects of benefits and privacy risks elicited by smart interactions on stickiness intention to extend the concept of PCT to incorporate the contextual stimuli (i.e., smart interactions in our study), which extends the findings of Jain et al. [8], Lavado-Nalvaiz et al. [1], and McLean & Osei-Frimpong [19] on the personalization–privacy paradox framework and SI [82,98].

6.3. Managerial Implications

First, service providers or managers of AVAs should provide smart interaction services for users to enhance users’ benefits by offering users personalized services, real-time responses, and many audio-visual resources, which will produce more utility and more enjoyment.

Second, developers or practitioners of AVAs should adopt AI interactive technologies to optimize smart interaction functions and enhance users’ benefits: (1) developers can optimize the algorithms of their AI devices to respond to users’ requests faster and more accurately, which will enhance users’ perceived utilitarian and hedonic benefits; (2) designers can offer more ways or mediums of multimodal control (e.g., gesture control, voice control, haptic control, and eye control) to improve the efficiency of finishing personal tasks and let users enjoy more audio-visual resources (e.g., pop music, movies, and TV dramas); (3) based on artificial intelligence-generated content (AIGC) and embedded social caretaking, designers can incorporate natural human voices in AVAs and offer more emotionally customized everyday conversations with users; (4) practitioners can connect AVAs with many applications or websites (e.g., Taobao app, Zhihu app, Iqiyi app) and offer all kinds of audio-visual content or resources for users; (5) developers should empower AVAs with more natural human voices and emotions and enable AVAs to have more humanness, personality, and more authentic responses to users’ requests to facilitate two-way communication with users.

Finally, for high-SNI users, developers of AVAs can develop more personalized features for their family members or friends of different ages to meet their expectations.

6.4. Limitations and Future Research

This study has four limitations, which provide directions for future study. First, to ensure internal validity, we used a self-reported survey to investigate the influencing mechanism of smart interactions on stickiness intention via benefits and privacy risk. Future research should use field experiments, machine learning, and secondary data to replicate this examination and to check external validity. Second, similar to the methods of McLean et al. [17] and Lavado-Nalvaiz et al. [1], our study was carried out in just one country by employing quantitative methods and using a professional market research company. Therefore, future studies should replicate the examinations and test divergent results owing to cultural effects to explicate how smart interactions impact product attachment by using data from multiple sources. Third, this study conducted one survey to examine the effects of smart interactions on benefits and risks. Future works could use machine learning or deep learning to collect multimodal and unstructured data to examine differences in the personalization–privacy paradox (PPP), extending the privacy calculus theory [46]. Fourth, we have focused on the positive and adverse impacts of smart interactions; further investigations that explore the negative effects caused by smart interactions, including user disengagement, negative engagement, and value co-destruction, could achieve a deeper understanding of the dark-side effects of smart interactions.

Author Contributions

Conceptualization, J.H. and J.X.; methodology, J.H. and J.X.; software, J.H. and J.X.; validation, J.X. and X.L.; formal analysis, X.L.; investigation, J.X. and X.L.; resources, J.X.; data curation, J.X. and X.L.; writing—original draft preparation, J.H. and J.X.; writing—review and editing, J.X.; visualization, J.X. and X.L.; supervision, J.X.; project administration, J.X.; funding acquisition, J.X. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (72102238, 72372110, 72172100, 72302168 and 71832015), the China Postdoctoral Science Foundation (2021M702319), and the Fundamental Research Funds for the Central Universities of China (2024ZY-SX06).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki. The study involving human participants was approved by the Ethics Committee.

Informed Consent Statement

Informed consent was obtained from all participants involved in this study. All participants were informed about this study, and participation was on a fully voluntary basis. Participants were assured of the confidentiality and anonymity of the information associated with this survey.

Data Availability Statement

The authors will share the data upon request.

Acknowledgments

Thanks to Wenjie Li from School of Business, Sun Yat-sen University and Sidan Tan from Business School, Sichuan University in China for their assistance in conducting the study.

Conflicts of Interest

Author Jinyi He was employed by the company HSBC Insurance Brokerage Co. Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Lavado-Nalvaiz, N.; Lucia-Palacios, L.; Pérez-López, R. The role of the humanisation of smart home speakers in the personalisation–privacy paradox. Electron. Commer. Res. Appl. 2022, 53, 101146. [Google Scholar] [CrossRef]

- Wang, C.L. Editorial–what is an interactive marketing perspective and what are emerging research areas? J. Res. Interact. Mark. 2024, 18, 161–165. [Google Scholar] [CrossRef]

- Zimmermann, R.; Mora, D.; Cirqueira, D.; Helfert, M.; Bezbradica, M.; Werth, D.; Weitzl, W.J.; Riedl, R.; Auinger, A. Enhancing brick-and-mortar store shopping experience with an augmented reality shopping assistant application using personalized recommendations and explainable artificial intelligence. J. Res. Interact. Mark. 2023, 17, 273–298. [Google Scholar] [CrossRef]

- Cao, G.; Wang, P. Revealing or concealing: Privacy information disclosure in intelligent voice assistant usage- a configurational approach. Ind. Manag. Data Syst. 2022, 122, 1215–1245. [Google Scholar] [CrossRef]

- Poushneh, A. Humanizing voice assistant: The impact of voice assistant personality on consumers’ attitudes and behaviors. J. Retail. Consum. Serv. 2021, 58, 102283. [Google Scholar] [CrossRef]

- Vimalkumar, M.; Sharma, S.K.; Singh, J.B.; Dwivedi, Y.K. ‘Okay google, what about my privacy?’: User’s privacy perceptions and acceptance of voice based digital assistants. Comput. Hum. Behav. 2021, 120, 106763. [Google Scholar] [CrossRef]

- Xue, J.; Niu, Y.; Liang, X.; Yin, S. Unraveling the effects of voice assistant interactions on digital engagement: The moderating role of adult playfulness. Int. J. Hum. Comput. Interact. 2024, 40, 4934–4955. [Google Scholar] [CrossRef]

- Jain, S.; Basu, S.; Dwivedi, Y.K.; Kaur, S. Interactive voice assistants-does brand credibility assuage privacy risks? J. Bus. Res. 2022, 139, 701–717. [Google Scholar] [CrossRef]

- Mishra, A.; Shukla, A.; Sharma, S.K. Psychological determinants of users’ adoption and word-of-mouth recommendations of smart voice assistants. Int. J. Inf. Manag. 2021, 67, 102413. [Google Scholar] [CrossRef]

- Aw, E.C.-X.; Tan, G.W.-H.; Cham, T.-H.; Raman, R.; Ooi, K.-B. Alexa, what’s on my shopping list? Transforming customer experience with digital voice assistants. Technol. Forecast. Soc. Change 2022, 180, 121711. [Google Scholar] [CrossRef]

- Jeong, M.; Shin, H.H. Tourists’ experiences with smart tourism technology at smart destinations and their behavior intentions. J. Travel Res. 2019, 59, 1464–1477. [Google Scholar] [CrossRef]

- Sutanto, J.; Palme, E.; Tan, C.H.; Phang, C.W. Addressing the personalization–privacy paradox: An empirical assessment from a field experiment on smartphone users. Mis. Q. 2013, 37, 1141–1164. [Google Scholar] [CrossRef]

- Xu, H.; Luo, X.R.; Carroll, J.M.; Rosson, M.B. The personalization privacy paradox: An exploratory study of decision making process for location-aware marketing. Decis. Support Syst. 2011, 51, 42–52. [Google Scholar] [CrossRef]

- Hayes, J.L.; Brinson, N.H.; Bott, G.J.; Moeller, C.M. The influence of consumer–brand relationship on the personalized advertising privacy calculus in social media. J. Interact. Mark. 2021, 55, 16–30. [Google Scholar] [CrossRef]

- Buteau, E.; Lee, J. Hey alexa, why do we use voice assistants? The driving factors of voice assistant technology use. Commun. Res. Rep. 2021, 38, 336–345. [Google Scholar] [CrossRef]

- Fernandes, T.; Oliveira, E. Understanding consumers’ acceptance of automated technologies in service encounters: Drivers of digital voice assistants adoption. J. Bus. Res. 2021, 122, 180–191. [Google Scholar] [CrossRef]

- McLean, G.; Osei-Frimpong, K.; Barhorst, J. Alexa, do voice assistants influence consumer brand engagement?-examining the role of ai powered voice assistants in influencing consumer brand engagement. J. Bus. Res. 2021, 124, 312–328. [Google Scholar] [CrossRef]

- Uysal, E.; Alavi, S.; Bezençon, V. Trojan horse or useful helper? A relationship perspective on artificial intelligence assistants with humanlike features. J. Acad. Mark. Sci. 2022, 50, 1153–1175. [Google Scholar] [CrossRef]

- McLean, G.; Osei-Frimpong, K. Hey Alexa … examine the variables influencing the use of artificial intelligent in-home voice assistants. Comput. Hum. Behav. 2019, 99, 28–37. [Google Scholar] [CrossRef]

- Canziani, B.; MacSween, S. Consumer acceptance of voice-activated smart home devices for product information seeking and online ordering. Comput. Hum. Behav. 2021, 119, 106714. [Google Scholar] [CrossRef]

- Gao, Y.; Liu, H. Artificial intelligence-enabled personalization in interactive marketing: A customer journey perspective. J. Res. Interact. Mark. 2023, 17, 663–680. [Google Scholar] [CrossRef]

- Li, X.; Sung, Y. Anthropomorphism brings us closer: The mediating role of psychological distance in user–ai assistant interactions. Comput. Hum. Behav. 2021, 118, 106680. [Google Scholar] [CrossRef]

- Lopez, A.; Garza, R. Consumer bias against evaluations received by artificial intelligence: The mediation effect of lack of transparency anxiety. J. Res. Interact. Mark. 2023, 17, 831–847. [Google Scholar] [CrossRef]

- Huh, J.; Kim, H.-Y.; Lee, G. “Oh, happy day!” examining the role of ai-powered voice assistants as a positive technology in the formation of brand loyalty. J. Res. Interact. Mark. 2023, 17, 794–812. [Google Scholar] [CrossRef]

- Yim, A.; Cui, A.P.; Walsh, M. The role of cuteness on consumer attachment to artificial intelligence agents. J. Res. Interact. Mark. 2024, 18, 127–141. [Google Scholar] [CrossRef]

- Yam, K.C.; Bigman, Y.E.; Tang, P.M.; Ilies, R.; Cremer, D.D.; Soh, H. Robots at work: People prefer—and forgive—service robots with perceived feelings. J. Appl. Psychol. 2020, 106, 1557–1572. [Google Scholar] [CrossRef]

- Jin, E.; Eastin, M.S. Birds of a feather flock together: Matched personality effects of product recommendation chatbots and users. J. Res. Interact. Mark. 2023, 17, 416–433. [Google Scholar] [CrossRef]

- Chen, Y.H.; Keng, C.-J.; Chen, Y.-L. How interaction experience enhances customer engagement in smart speaker devices? The moderation of gendered voice and product smartness. J. Res. Interact. Mark. 2022, 16, 403–419. [Google Scholar] [CrossRef]

- Zhu, Y.; Lu, Y.; Gupta, S.; Wang, J.; Hu, P. Promoting smart wearable devices in the health-ai market: The role of health consciousness and privacy protection. J. Res. Interact. Mark. 2023, 17, 257–272. [Google Scholar] [CrossRef]

- Lin, M.Y.-C.; Do, B.-R.; Nguyen, T.T.; Cheng, J.M.-S. Effects of personal innovativeness and perceived value of disclosure on privacy concerns in proximity marketing: Self-control as a moderator. J. Res. Interact. Mark. 2022, 16, 310–327. [Google Scholar] [CrossRef]

- Huang, M.-H.; Rust, R.T. Engaged to a robot? The role of ai in service. J. Serv. Res. 2020, 24, 30–41. [Google Scholar] [CrossRef]

- Mcmillan, S.J.; Hwang, J.S. Measures of perceived interactivity: An exploration of the role of direction of communication, user control, and time in shaping perceptions of interactivity. J. Advert. 2002, 31, 29–42. [Google Scholar] [CrossRef]

- Shi, X.; Evans, R.; Shan, W. Solver engagement in online crowdsourcing communities: The roles of perceived interactivity, relationship quality and psychological ownership. Technol. Forecast. Soc. Change 2022, 175, 121389. [Google Scholar] [CrossRef]

- Park, M.; Yoo, J. Effects of perceived interactivity of augmented reality on consumer responses: A mental imagery perspective. J. Retail. Consum. Serv. 2020, 52, 101912. [Google Scholar] [CrossRef]

- Kaplan, A.; Haenlein, M. Siri, Siri, in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Bus. Horiz. 2019, 62, 15–25.

- Zanzotto, F.M. Human-in-the-loop artificial intelligence. J. Artif. Intell. Res. 2019, 64, 243–252.

- Munnukka, J.; Talvitie-Lamberg, K.; Maity, D. Anthropomorphism and social presence in human–virtual service assistant interactions: The role of dialog length and attitudes. Comput. Hum. Behav. 2022, 135, 107343.

- Song, M.; Xing, X.; Duan, Y.; Cohen, J.; Mou, J. Will artificial intelligence replace human customer service? The impact of communication quality and privacy risks on adoption intention. J. Retail. Consum. Serv. 2022, 66, 102900.

- Araujo, T. Living up to the chatbot hype: The influence of anthropomorphic design cues and communicative agency framing on conversational agent and company perceptions. Comput. Hum. Behav. 2018, 85, 183–189.

- Culnan, M.J.; Armstrong, P.K. Information privacy concerns, procedural fairness, and impersonal trust: An empirical investigation. Organ. Sci. 1999, 10, 104–115.

- Keith, M.J.; Thompson, S.C.; Hale, J.; Lowry, P.B.; Greer, C. Information disclosure on mobile devices: Re-examining privacy calculus with actual user behavior. Int. J. Hum. Comput. Stud. 2013, 71, 1163–1173.

- Chellappa, R.K.; Sin, R.G. Personalization versus privacy: An empirical examination of the online consumer’s dilemma. Inf. Technol. Manag. 2005, 6, 181–202.

- Li, H.; Luo, X.; Zhang, J.; Xu, H. Resolving the privacy paradox: Toward a cognitive appraisal and emotion approach to online privacy behaviors. Inf. Manag. 2017, 54, 1012–1022.

- Zhu, M.; Wu, C.; Huang, S.; Zheng, K.; Young, S.D.; Yan, X.; Yuan, Q. Privacy paradox in mHealth applications: An integrated elaboration likelihood model incorporating privacy calculus and privacy fatigue. Telemat. Inform. 2021, 61, 101601.

- Gonçalves, R.B.; de Figueiredo, J.C.B. Effects of perceived risks and benefits in the formation of the consumption privacy paradox: A study of the use of wearables in people practicing physical activities. Electron. Mark. 2022, 32, 1485–1499.

- Ameen, N.; Hosany, S.; Paul, J. The personalisation-privacy paradox: Consumer interaction with smart technologies and shopping mall loyalty. Comput. Hum. Behav. 2022, 126, 106976.

- Kim, D.; Park, K.; Park, Y.; Ahn, J.-H. Willingness to provide personal information: Perspective of privacy calculus in IoT services. Comput. Hum. Behav. 2019, 92, 273–281.

- Zhu, H.; Ou, C.X.J.; van den Heuvel, W.J.A.M.; Liu, H. Privacy calculus and its utility for personalization services in e-commerce: An analysis of consumer decision-making. Inf. Manag. 2017, 54, 427–437.

- Norberg, P.A.; Horne, D.R.; Horne, D.A. The privacy paradox: Personal information disclosure intentions versus behaviors. J. Consum. Aff. 2007, 41, 100–126.

- Cloarec, J. The personalization–privacy paradox in the attention economy. Technol. Forecast. Soc. Change 2020, 161, 120299.

- Kim, H.-Y.; McGill, A.L. Minions for the rich? Financial status changes how consumers see products with anthropomorphic features. J. Consum. Res. 2018, 45, 429–450.

- Söderlund, M.; Oikarinen, E.-L. Service encounters with virtual agents: An examination of perceived humanness as a source of customer satisfaction. Eur. J. Mark. 2021, 55, 94–121.

- Hu, P.; Lu, Y.; Gong, Y. Dual humanness and trust in conversational AI: A person-centered approach. Comput. Hum. Behav. 2021, 119, 106727.

- Gursoy, D.; Chi, O.H.; Lu, L.; Nunkoo, R. Consumers acceptance of artificially intelligent (AI) device use in service delivery. Int. J. Inf. Manag. 2019, 49, 157–169.

- Liu, Y. Developing a scale to measure the interactivity of websites. J. Advert. Res. 2003, 43, 207–216.

- Yoo, W.-S.; Lee, Y.; Park, J. The role of interactivity in e-tailing: Creating value and increasing satisfaction. J. Retail. Consum. Serv. 2010, 17, 89–96.

- Korper, S.; Ellis, J. Thinking ahead in e-commerce. Exec. Excell. 2001, 18, 19.

- Xue, J.; Liang, X.; Xie, T.; Wang, H. See now, act now: How to interact with customers to enhance social commerce engagement? Inf. Manag. 2020, 57, 103324.

- Childers, T.L.; Carr, C.L.; Peck, J.; Carson, S. Hedonic and utilitarian motivations for online retail shopping behavior. J. Retail. 2001, 77, 511–535.

- Wu, G. Conceptualizing and measuring the perceived interactivity of websites. J. Curr. Issues Res. Advert. 2006, 28, 87–104.

- Ahn, T.; Ryu, S.; Han, I. The impact of the online and offline features on the user acceptance of internet shopping malls. Electron. Commer. Res. Appl. 2005, 3, 405–420.

- Yuan, C.; Zhang, C.; Wang, S. Social anxiety as a moderator in consumer willingness to accept AI assistants based on utilitarian and hedonic values. J. Retail. Consum. Serv. 2022, 65, 102878.

- Choi, S.B.; Lim, M.S. Effects of social and technology overload on psychological well-being in young South Korean adults: The mediatory role of social network service addiction. Comput. Hum. Behav. 2016, 61, 245–254.

- Hubert, M.; Blut, M.; Brock, C.; Backhaus, C.; Eberhardt, T. Acceptance of smartphone-based mobile shopping: Mobile benefits, customer characteristics, perceived risks and the impact of application context. Psychol. Mark. 2017, 34, 175–194.

- Verhoef, P.C.; Stephen, A.T.; Kannan, P.K.; Luo, X.; Abhishek, V.; Andrews, M.; Bart, Y.; Datta, H.; Fong, N.; Hoffman, D.L. Consumer connectivity in a complex, technology-enabled, and mobile-oriented world with smart products. J. Interact. Mark. 2017, 40, 1–8.

- Russo, M.; Ollier-Malaterre, A.; Morandin, G. Breaking out from constant connectivity: Agentic regulation of smartphone use. Comput. Hum. Behav. 2019, 98, 11–19.

- Choi, S. The flipside of ubiquitous connectivity enabled by smartphone-based social networking service: Social presence and privacy concern. Comput. Hum. Behav. 2016, 65, 325–333.

- Fan, L.; Liu, X.; Wang, B.; Wang, L. Interactivity, engagement, and technology dependence: Understanding users’ technology utilisation behaviour. Behav. Inf. Technol. 2017, 36, 113–124.

- Epley, N. A mind like mine: The exceptionally ordinary underpinnings of anthropomorphism. J. Assoc. Consum. Res. 2018, 3, 591–598.

- Lunardo, R.; Mbengue, A. Perceived control and shopping behavior: The moderating role of the level of utilitarian motivational orientation. J. Retail. Consum. Serv. 2009, 16, 434–441.

- Kim, K.; Schmierbach, M.G.; Bellur, S.; Chung, M.-Y.; Fraustino, J.D.; Dardis, F.; Ahern, L. Is it a sense of autonomy, control, or attachment? Exploring the effects of in-game customization on game enjoyment. Comput. Hum. Behav. 2015, 48, 695–705.

- Horning, M.A. Interacting with news: Exploring the effects of modality and perceived responsiveness and control on news source credibility and enjoyment among second screen viewers. Comput. Hum. Behav. 2017, 73, 273–283.

- Sundar, S.S.; Marathe, S.S. Personalization versus customization: The importance of agency, privacy, and power usage. Hum. Commun. Res. 2010, 36, 298–322.

- Kim, Y.J.; Han, J.Y. Why smartphone advertising attracts customers: A model of web advertising, flow, and personalization. Comput. Hum. Behav. 2014, 33, 256–269.

- Wang, T.; Duong, T.D.; Chen, C.C. Intention to disclose personal information via mobile applications: A privacy calculus perspective. Int. J. Inf. Manag. 2016, 36, 531–542.

- Cyr, D.; Head, M.; Ivanov, A. Perceived interactivity leading to e-loyalty: Development of a model for cognitive–affective user responses. Int. J. Hum.-Comput. Stud. 2009, 67, 850–869.

- Mathur, M.B.; Reichling, D.B.; Lunardini, F.; Geminiani, A.; Antonietti, A.; Ruijten, P.A.; Levitan, C.A.; Nave, G.; Manfredi, D.; Bessette-Symons, B. Uncanny but not confusing: Multisite study of perceptual category confusion in the uncanny valley. Comput. Hum. Behav. 2020, 103, 21–30.

- Ford, M.; Palmer, W. Alexa, are you listening to me? An analysis of Alexa voice service network traffic. Pers. Ubiquitous Comput. 2019, 23, 67–79.

- Xu, H.; Teo, H.H.; Tan, B.C.Y.; Agarwal, R. Effects of individual self-protection, industry self-regulation, and government regulation on privacy concerns: A study of location-based services. Inf. Syst. Res. 2012, 23, 1342–1363.

- Ozturk, A.B.; Nusair, K.; Okumus, F.; Singh, D. Understanding mobile hotel booking loyalty: An integration of privacy calculus theory and trust-risk framework. Inf. Syst. Front. 2017, 19, 753–767.

- Gao, W.; Liu, Z.; Guo, Q.; Li, X. The dark side of ubiquitous connectivity in smartphone-based SNS: An integrated model from information perspective. Comput. Hum. Behav. 2018, 84, 185–193.

- Li, Y.; Li, X.; Cai, J. How attachment affects user stickiness on live streaming platforms: A socio-technical approach perspective. J. Retail. Consum. Serv. 2021, 60, 102478.

- Liu, F.; Zhao, X.; Chau, P.; Tang, Q. Roles of perceived value and individual differences in the acceptance of mobile coupon applications: Evidence from China. Internet Res. 2015, 25, 471–495.

- Kropp, F.; Lavack, A.M.; Holden, S.J.S. Smokers and beer drinkers: An examination of values and consumer susceptibility to interpersonal influence. J. Consum. Mark. 1999, 16, 536–557.

- Batra, R.; Homer, P.M.; Kahle, L.R. Values, susceptibility to normative influence, and attribute importance weights: A nomological analysis. J. Consum. Psychol. 2001, 11, 115–128.

- Wooten, D.B.; Reed, A., II. Playing it safe: Susceptibility to normative influence and protective self-presentation. J. Consum. Res. 2004, 31, 551–556.

- Bandyopadhyay, N. The role of self-esteem, negative affect and normative influence in impulse buying: A study from India. Mark. Intell. Plan. 2016, 34, 523–539.

- Li, Y.M.; Yeh, Y.S. Increasing trust in mobile commerce through design aesthetics. Comput. Hum. Behav. 2010, 26, 673–684.

- Gao, Q.; Rau, P.L.P.; Salvendy, G. Perception of interactivity: Affects of four key variables in mobile advertising. Int. J. Hum. Comput. Interact. 2009, 25, 479–505.

- Wu, I.L.; Hsiao, W.H. Involvement, content and interactivity drivers for consumer loyalty in mobile advertising: The mediating role of advertising value. Int. J. Mob. Commun. 2017, 15, 577–603.

- Lee, T. The impact of perceptions of interactivity on customer trust and transaction intentions in mobile commerce. J. Electron. Commer. Res. 2005, 6, 165–180.

- Xu, X.; Yao, Z.; Sun, Q. Social media environments effect on perceived interactivity. Online Inf. Rev. 2019, 43, 239–255.

- Bearden, W.O.; Netemeyer, R.G.; Teel, J.E. Further validation of the consumer susceptibility to interpersonal influence scale. Adv. Consum. Res. 1990, 17, 770–776.

- Mangleburg, T.F.; Doney, P.M.; Bristol, T. Shopping with friends and teens’ susceptibility to peer influence. J. Retail. 2004, 80, 101–116.

- Lee, S.Y.; Choi, J. Enhancing user experience with conversational agent for movie recommendation: Effects of self-disclosure and reciprocity. Int. J. Hum. Comput. Stud. 2017, 103, 95–105.

- Yang, B.; Kim, Y.; Yoo, C. The integrated mobile advertising model: The effects of technology- and emotion-based evaluations. J. Bus. Res. 2013, 66, 1345–1352.

- Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. Extrinsic and intrinsic motivation to use computers in the workplace. J. Appl. Soc. Psychol. 1992, 22, 1111–1132.

- Lee, C.-H.; Chiang, H.-S.; Hsiao, K.-L. What drives stickiness in location-based AR games? An examination of flow and satisfaction. Telemat. Inform. 2018, 35, 1958–1970.

- Harman, H.H. Modern Factor Analysis; University of Chicago Press: Chicago, IL, USA, 1976.

- Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.-Y.; Podsakoff, N.P. Common method biases in behavioral research: A critical review of the literature and recommended remedies. J. Appl. Psychol. 2003, 88, 879–903.

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

- Hsu, S.H.-Y.; Tsou, H.-T.; Chen, J.-S. “Yes, we do. Why not use augmented reality?” Customer responses to experiential presentations of AR-based applications. J. Retail. Consum. Serv. 2021, 62, 102649.

- Hamilton, K.A.; Lee, S.Y.; Chung, U.C.; Liu, W.; Duff, B.R.L. Putting the “me” in endorsement: Understanding and conceptualizing dimensions of self-endorsement using intelligent personal assistants. New Media Soc. 2021, 23, 1506–1526.