Abstract

Correlations related to quantum entanglement have convinced many physicists that there must be some at-a-distance connection between separated events, at the quantum level. In the late 1940s, however, O. Costa de Beauregard proposed that such correlations can be explained without action at a distance, so long as the influence takes a zigzag path, via the intersecting past lightcones of the events in question. Costa de Beauregard’s proposal is related to what has come to be called the retrocausal loophole in Bell’s Theorem, but—like that loophole—it receives little attention, and remains poorly understood. Here we propose a new way to explain and motivate the idea. We exploit some simple symmetries to show how Costa de Beauregard’s zigzag needs to work, to explain the correlations at the core of Bell’s Theorem. As a bonus, the explanation shows how entanglement might be a much simpler matter than the orthodox view assumes—not a puzzling feature of quantum reality itself, but an entirely unpuzzling feature of our knowledge of reality, once zigzags are in play.

1. Strange Connections

One of the most puzzling things about quantum mechanics (QM) is entanglement—the strange connection between quantum systems that allows each to know something about what’s happening to the other, no matter how far apart they may be. Erwin Schrödinger, who invented the term, said that entanglement was not just one but “rather the characteristic trait of quantum mechanics, the one that enforces its entire departure from classical lines of thought” [1].

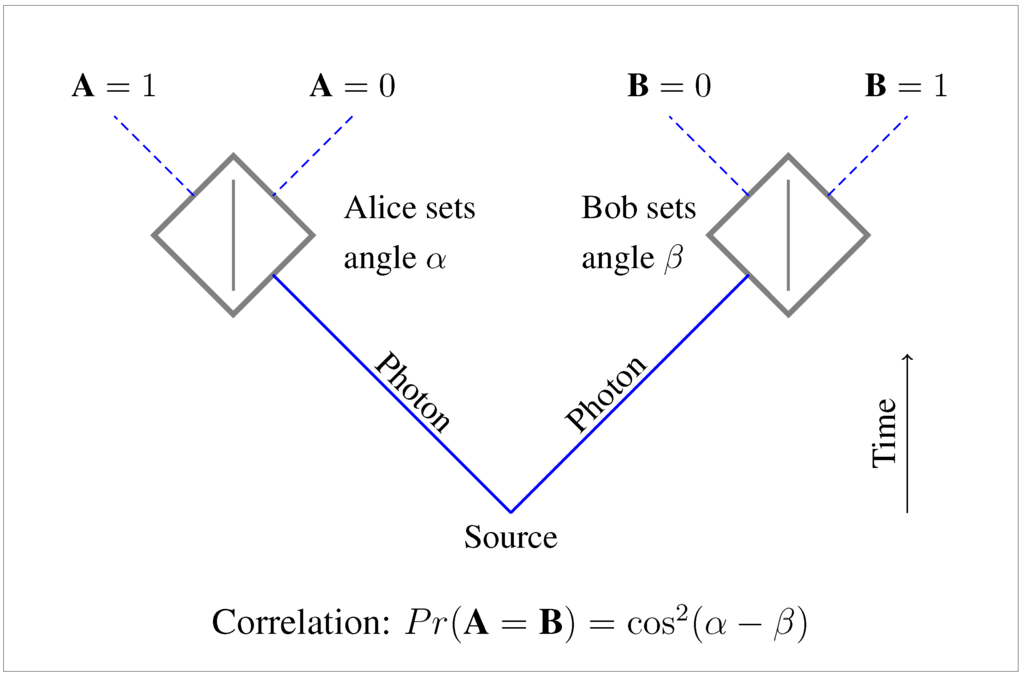

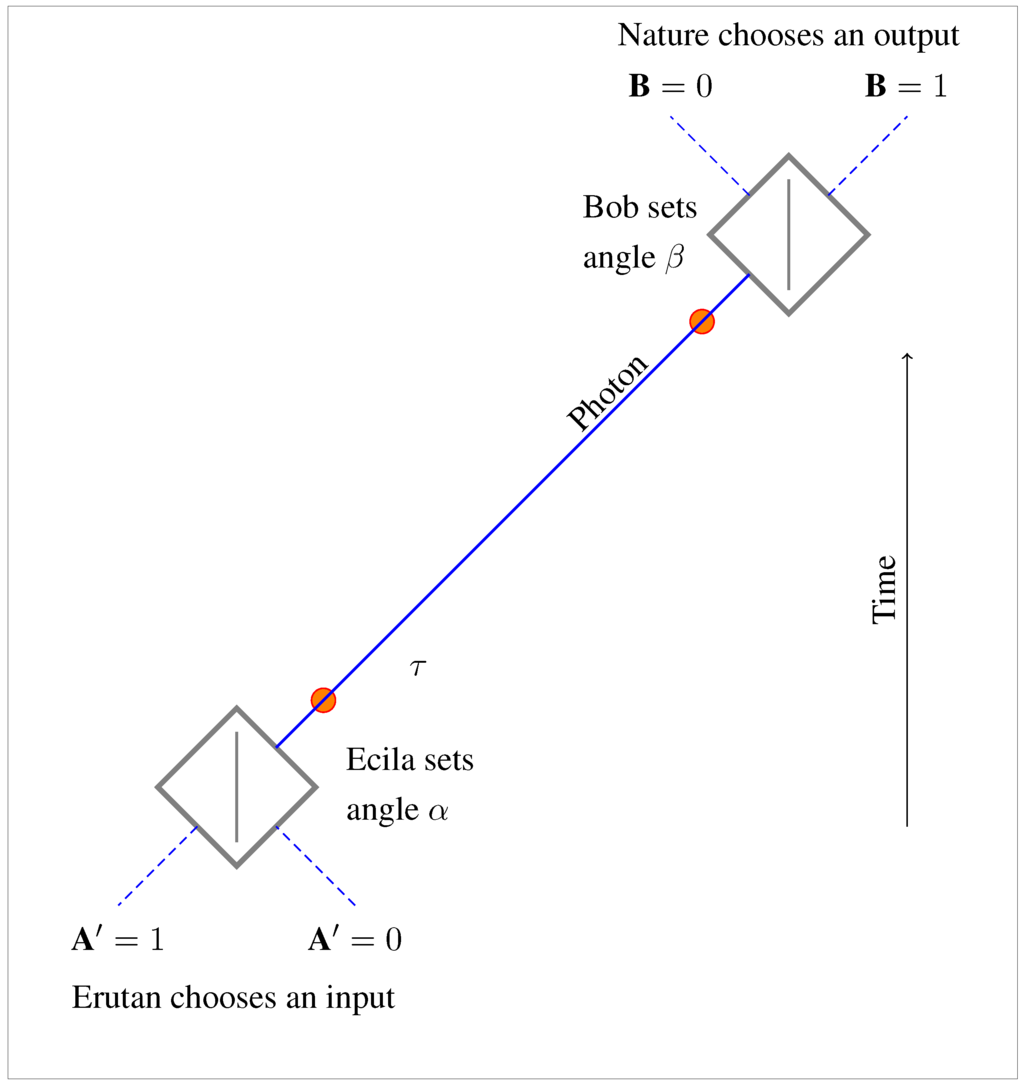

Schrödinger was discussing so-called EPR experiments, invented by Einstein, Podolsky and Rosen (EPR) in 1935. A typical case is shown in Figure 1. Two particles (photons, in this version) are created together at a source, and sent in different directions to experimenters Alice and Bob, who each have a choice of several measurements they can perform on their particle. And although each outcome is unpredictable on its own, when they choose matching measurements, the particles turn out to be perfectly correlated: the two outcomes match 100% of the time. EPR used these correlations to argue that the particles must carry “hidden instructions”, telling the particles how to behave for each measurement that Alice and Bob might choose to perform. They concluded that standard QM was incomplete, because it didn’t describe these hidden instructions [2].

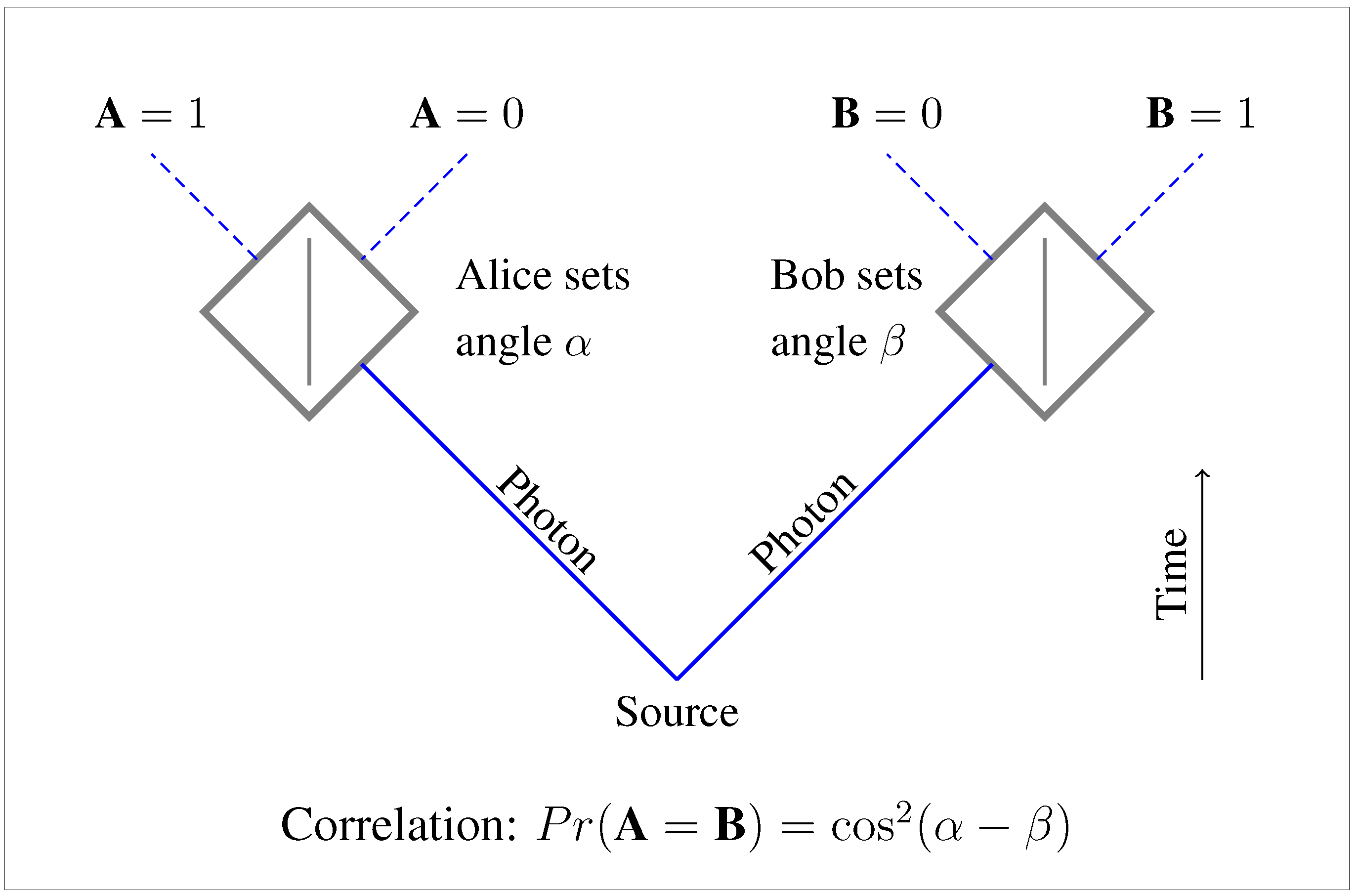

Figure 1.

EPR experiment with photons.

If EPR had been right about hidden instructions, quantum correlations would be no more spooky than similarities between identical twins who share the same genetic “instructions”. But in 1964 John Bell proved that the quantum case is different. Bell’s Theorem shows that any hidden instructions would themselves have to rely on action at a distance, to be consistent with the predictions of QM. (Many experiments have since confirmed these predictions.)
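Here is a minimal Python sketch that makes Bell's point concrete. It uses the CHSH form of Bell's inequality, a later refinement of Bell's original argument that we swap in because it is the easiest to compute. As an illustrative assumption not fixed by the text, it takes the source to emit the polarization-entangled state (|HH⟩ + |VV⟩)/√2, for which QM predicts the correlation E(α, β) = cos 2(α − β). Any set of pre-agreed "hidden instructions" keeps the CHSH combination S at or below 2, whereas the quantum prediction reaches 2√2.

```python
import itertools
import math


def quantum_correlation(a: float, b: float) -> float:
    """E(a, b) = P(same) - P(different) for the assumed state (|HH> + |VV>)/sqrt(2)."""
    return math.cos(2 * (a - b))


def chsh(corr, a: float, a2: float, b: float, b2: float) -> float:
    """The CHSH combination S for two settings per side."""
    return corr(a, b) + corr(a, b2) + corr(a2, b) - corr(a2, b2)


def best_local_chsh() -> int:
    """Largest |S| achievable with deterministic 'hidden instructions'.

    Each pair carries a predetermined outcome (+1 or -1) for each of Alice's
    two settings and each of Bob's two settings. Random mixtures of such
    instructions cannot do better, because S is linear in the mixture weights.
    """
    best = 0
    for A1, A2, B1, B2 in itertools.product((+1, -1), repeat=4):
        best = max(best, abs(A1 * B1 + A1 * B2 + A2 * B1 - A2 * B2))
    return best


local_bound = best_local_chsh()
qm_value = chsh(quantum_correlation, 0.0, math.pi / 4, math.pi / 8, -math.pi / 8)
print(local_bound)          # 2
print(round(qm_value, 4))   # 2.8284, i.e. 2*sqrt(2)
```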

Entanglement is this counterintuitive connection between Alice’s particle and Bob’s, somehow able to guarantee certain correlations, no matter how far apart Alice and Bob might be. They could be separated by lightyears, or have the mass of a planet between them, but entanglement doesn’t care. Whether this is explained by a framework in which separated objects aren’t truly separated (e.g., as in orthodox QM, where wave functions live in a highly connected configuration space) or by allowing instantaneous communication at the level of hidden parameters, this distant connection is termed “nonlocality”. And it is now widely believed to be essential to the quantum world.

Nonlocality imperils more than just our sensibilities about action at a distance. As David Albert and Rivka Galchen put it, in a recent piece in Scientific American [3]: “Quantum mechanics has upended many an intuition, but none deeper than [locality]. And this particular upending carries with it a threat, as yet unresolved, to special relativity—a foundation stone of our 21st-century physics.”

2. The Parisian Zigzag

Back in 1935, thirty years before Bell’s Theorem, it still seemed “obvious” that there could be no action at a distance. As Schrödinger put it at that time, “measurements on separated systems cannot directly influence each other—that would be magic” [4]. The EPR argument for “hidden instructions” assumed that Alice’s choice of measurement cannot influence Bob’s particle, and vice versa.

But a decade later, in post-war Paris, a young French graduate student, Olivier Costa de Beauregard, spotted an interesting loophole in EPR’s reasoning. He realized that Alice’s choices could affect Bob’s particle indirectly—so without action at a distance—if the effect followed a zigzag path, via the past. Alice’s choice could affect her particle “retrocausally”, so to speak, right back to the common source, in turn correlating Bob’s particle with Alice’s choice (and vice versa) [5].

Unfortunately for Costa de Beauregard, his thesis advisor was Louis de Broglie, one of the giants of early quantum theory (and a prince, to boot!). For several years, de Broglie forbade his student to publish his strange idea—relenting only when Feynman published a famous paper describing positrons as electrons zigzagging backwards in time. Despite the Feynman factor, however, Costa de Beauregard’s proposal made no impact among the Copenhagen-minded physicists of the day. (Most of them thought that Bohr had already dealt with the EPR argument.)

Ironically, one of the few anti-Copenhagen heretics in those days was the young John Bell, whose conviction that EPR were making an important point was to lead him to his own famous reason for thinking that Einstein must nevertheless be wrong. As Bell himself put it, many years later:

For me it is so reasonable to assume that the photons in those experiments carry with them programs [i.e., “hidden instructions”], which have been correlated in advance, telling them how to behave. This is so rational that I think that when Einstein saw that, and the others refused to see it, he was the rational man. The other people, although history has justified them, were burying their heads in the sand … So for me, it is a pity that Einstein’s idea doesn’t work. The reasonable thing just doesn’t work.— J. S. Bell [6]

In the decades after Bell’s Theorem, a few writers noticed that Costa de Beauregard’s loophole also applied to Bell’s reasoning (see, e.g., [7,8,9,10]). As Bell himself makes clear, his result requires the assumption that Alice and Bob’s measurement choices don’t affect the prior state of the particles. If we allow such retrocausality—if Alice’s and Bob’s choices affect their particles’ common past—then Bell’s argument for action at a distance is undermined.

This loophole receives little attention, and remains poorly understood. Our purpose here is to throw some light on the idea, by proposing a new way to explain and motivate it. We exploit some simple symmetries to show how Costa de Beauregard’s zigzag would need to work, to explain the correlations at the core of Bell’s Theorem. As a bonus, the explanation shows how entanglement might be a much simpler matter than the orthodox view assumes—less a puzzling feature of quantum reality itself, than an entirely unpuzzling feature of our knowledge of reality, once the zigzags are in play. It also shows how one of the intuitive objections to retrocausality—that it would lead to time-travel-like paradoxes—can be avoided very easily.

An important note about terminology, before we begin. The central idea of Costa de Beauregard’s proposal is that Alice’s choices may affect what happens on Bob’s side of the experiment, without action at a distance, so long as the effect goes via the past. Does this mean that the zigzag avoids nonlocality? Yes in one sense, but no in another, for the term “locality” is ambiguous, once the zigzag option is in play. If “local” means that Alice’s choices can’t affect Bob’s simultaneous measurement at all, then the zigzag model is not local. But if it means that every distant influence must be explained by some contiguous chain of intermediate events in space-time (with no faster-than-light individual links), then zigzags are local.

Normally, these two meanings of “locality” would be thought to coincide, but they come apart in zigzag models. To avoid confusion, we shall avoid the term altogether, from now on. But we note that it is the second sense of nonlocality—fundamental faster-than-light processes—that is the target both of old objections to action at a distance and of new objections based on relativity. A great advantage of the Parisian zigzag, if it works, is that it avoids nonlocality in this sense.

3. Alice Through the Looking Glass

3.1. Polarizing Cubes

Let’s begin with some more details about the kind of experiment depicted in Figure 1. The devices that Alice and Bob control are intended to be polarizing cubes. Classically, such cubes separate the polarization components of the incoming light. Each cube can be set at an arbitrary angle, and any incoming light whose polarization matches the chosen angle will pass straight through. But any incoming light with a polarization perpendicular to this chosen angle will reflect off the line drawn in the center of the cube, and change direction.

Generally, then, in the classical case, the cube will split one incoming beam into two outgoing beams. The exceptions are the cases in which the incoming beam is already polarized along (or perpendicular to) the setting angle of the cube. In those cases, 100% of the outgoing light lies in a single beam.
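The classical behavior just described follows Malus's law, which the text does not state explicitly: a beam polarized at angle σ meeting a cube set at θ sends a fraction cos²(σ − θ) of its intensity straight through and sin²(σ − θ) into the reflected beam. Here is a minimal sketch, assuming an ideal lossless cube and angles in radians. The function name `split_beam` is ours.

```python
import math


def split_beam(intensity: float, polarization: float, setting: float) -> tuple[float, float]:
    """Ideal lossless polarizing cube (an idealization assumed here).

    Returns (transmitted, reflected) intensities: the component along
    `setting` passes straight through, the perpendicular one is reflected.
    """
    d = polarization - setting
    return intensity * math.cos(d) ** 2, intensity * math.sin(d) ** 2


# A generic beam is split in two...
print(split_beam(1.0, math.pi / 6, 0.0))   # roughly (0.75, 0.25)
# ...but a beam already along (or perpendicular to) the setting stays whole.
print(split_beam(1.0, 0.3, 0.3))            # (1.0, 0.0)
```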

For future reference, we note that such a cube can also be used in reverse, to “splice” two suitably polarized (and phase-locked) beams into a single beam. Splicing is simply the time-reverse of splitting, and its possibility follows from the fact that classical electromagnetism is time-symmetric.
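In amplitude terms, splitting resolves the field into components along and perpendicular to the cube's setting, and splicing recombines them. Because that change of basis is a rotation, splicing is exactly its inverse. This minimal sketch assumes real (phase-locked, linearly polarized) two-component field amplitudes, and the helper names are hypothetical.

```python
import math


def _basis(setting: float):
    """Unit vectors along and perpendicular to the cube's setting angle."""
    along = (math.cos(setting), math.sin(setting))
    perp = (-math.sin(setting), math.cos(setting))
    return along, perp


def split(field, setting):
    """Resolve a real field (Ex, Ey) into transmitted and reflected amplitudes."""
    along, perp = _basis(setting)
    return (field[0] * along[0] + field[1] * along[1],
            field[0] * perp[0] + field[1] * perp[1])


def splice(transmitted, reflected, setting):
    """Time-reverse of split: merge two phase-locked beams into one."""
    along, perp = _basis(setting)
    return (transmitted * along[0] + reflected * perp[0],
            transmitted * along[1] + reflected * perp[1])


field = (math.cos(0.7), math.sin(0.7))   # unit beam polarized at 0.7 rad
t_amp, r_amp = split(field, 0.2)          # two output beams
print(splice(t_amp, r_amp, 0.2))          # recovers the original field
```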

However, this “splitting” of classical electromagnetic waves does not extend to the low-energy limit introduced in quantum theory. In this limit, we encounter particle-like packets called “photons”. When the experiment of Figure 1 is conducted with a single pair of photons, each of these photons is found entirely on one path or the other, at the relevant wing of the experiment. (The probability of finding a photon on each path may still be said to “split”, thus matching classical predictions in the many-photon limit, but our concern will be in making sense of these experiments at the level of single photons.) This difference between the quantum and classical cases is crucial to entanglement and the case for nonlocality. Bell’s Theorem, for example, turns entirely on the correlations between these “discrete” single-photon outcomes, on the two sides of experiments such as that of Figure 1. There are no such discrete phenomena in the classical case.
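The contrast can be simulated. The sketch below assumes the standard quantum rule, not stated in the text, that a single photon polarized at σ passes straight through a cube set at θ with probability cos²(σ − θ). Each simulated photon lands entirely on one path, yet the frequencies over many photons approach the classical split. The name `detect_photon` is hypothetical.

```python
import math
import random


def detect_photon(polarization: float, setting: float, rng: random.Random) -> int:
    """Return 0 (straight through) or 1 (reflected) for a single photon.

    Assumes the standard quantum rule P(straight through) = cos^2(polarization - setting).
    """
    p_pass = math.cos(polarization - setting) ** 2
    return 0 if rng.random() < p_pass else 1


rng = random.Random(1)                       # fixed seed for reproducibility
outcomes = [detect_photon(math.pi / 6, 0.0, rng) for _ in range(100_000)]
print(sorted(set(outcomes)))                 # [0, 1]: each photon takes one whole path
print(outcomes.count(0) / len(outcomes))     # close to the classical fraction 0.75
```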

For fully entangled photons, as in Figure 1, the strange correlations between Alice’s and Bob’s outcomes are masked by a curious feature: each individual outcome appears to be completely random. No matter what setting Alice chooses, she always finds a 50% chance of measuring her photon on each of her two possible outputs (A). The same goes for Bob’s outcome B. It’s only when they compare notes, after the fact, that the strange correlations become apparent.
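This short sketch shows how perfect correlations can coexist with completely random individual outcomes. As an illustration not fixed by the text, it assumes the source emits (|HH⟩ + |VV⟩)/√2, for which QM gives matching outcomes with total probability cos²(α − β). Summing over Bob's outcome leaves Alice with 50/50 for every pair of settings, while equal settings give perfect agreement.

```python
import math


def joint_probability(A: int, B: int, alpha: float, beta: float) -> float:
    """P(A, B) for the assumed state (|HH> + |VV>)/sqrt(2).

    Outcomes: 0 = straight through, 1 = reflected. Angles in radians.
    """
    d = alpha - beta
    return 0.5 * (math.cos(d) ** 2 if A == B else math.sin(d) ** 2)


def alice_marginal(A: int, alpha: float, beta: float) -> float:
    """What Alice sees on her own: sum over Bob's unseen outcome."""
    return sum(joint_probability(A, B, alpha, beta) for B in (0, 1))


for alpha in (0.0, 0.4, 1.1):
    print(alpha, alice_marginal(0, alpha, beta=0.3))   # always 0.5 (up to rounding)

# Matching settings: the outcomes never disagree.
print(joint_probability(0, 1, 0.4, 0.4) + joint_probability(1, 0, 0.4, 0.4))  # 0.0
```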

3.2. Into the Mirror

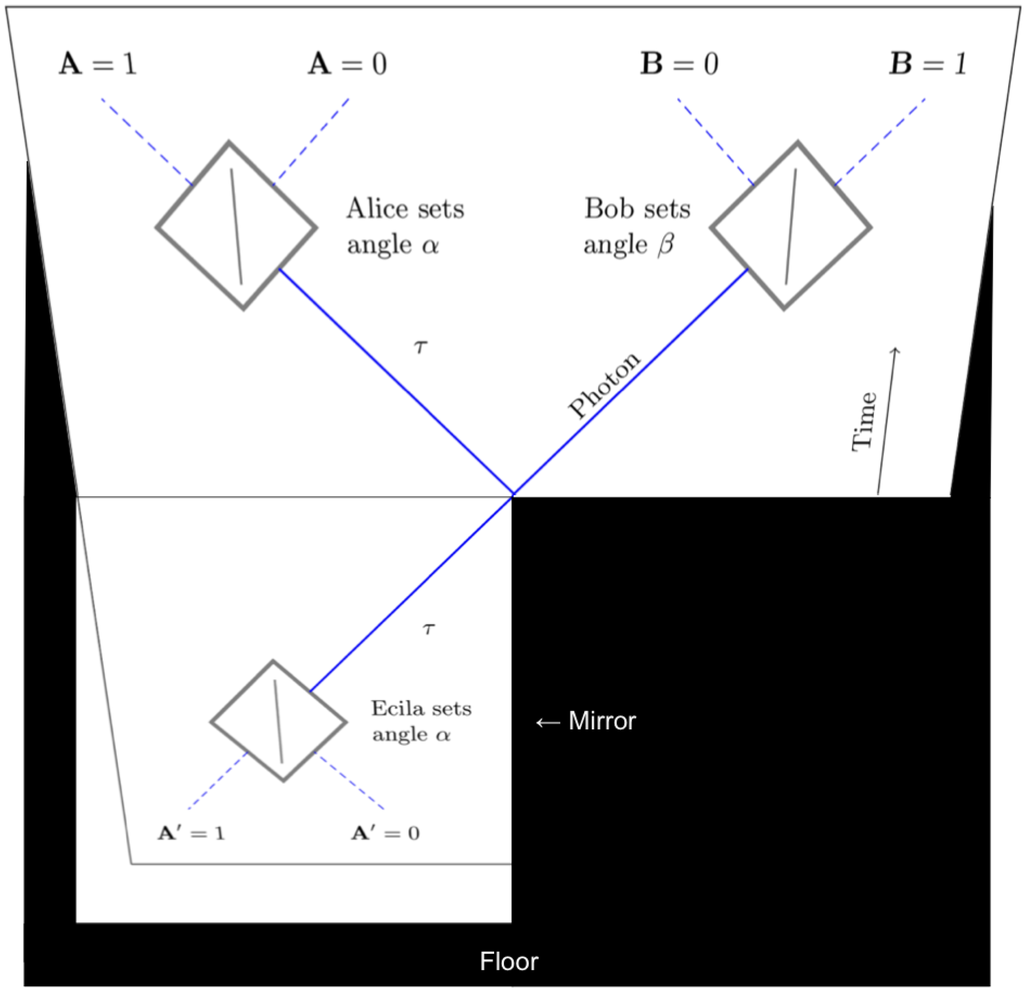

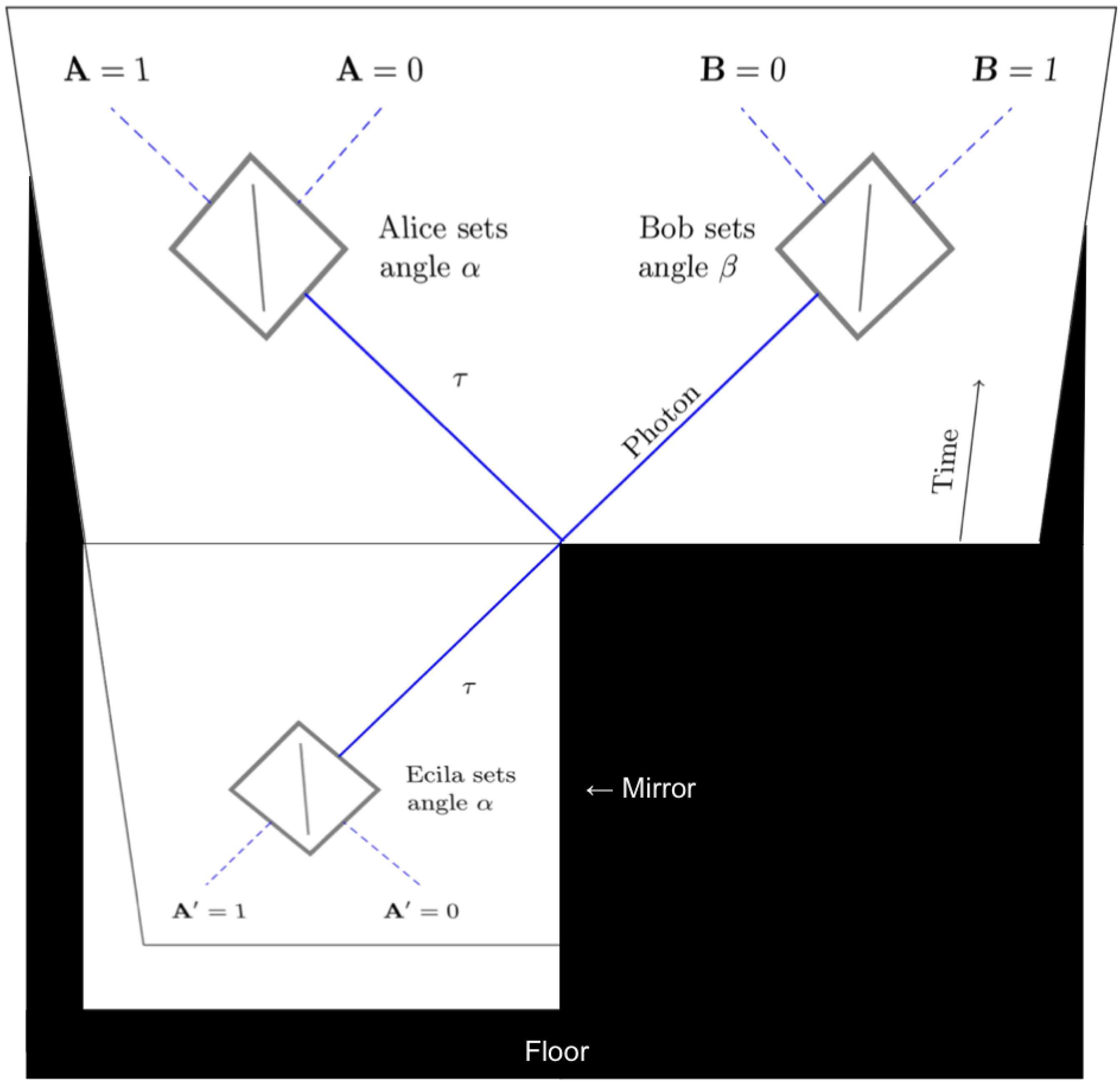

With these preliminaries in place, let’s now stand Figure 1 on top of a mirror, as shown in Figure 2. In the mirror we can see the reflection of Alice and her half of the experiment. (There is no mirror under Bob’s half.) Now focus on this reflection of Alice and her half of the experiment, and combine it in your mind’s eye with Bob’s half of the original figure. This combination (reflected Alice, plus Bob) looks exactly like the spacetime diagram of a different experiment—one in which a single photon passes from Alice to Bob, going through two polarizing cubes. (To make this trick work, we have to be careful to place the far righthand corner of the mirror at the point on Figure 1 where the entangled particles are created.)

Figure 2.

Alice through the looking glass.

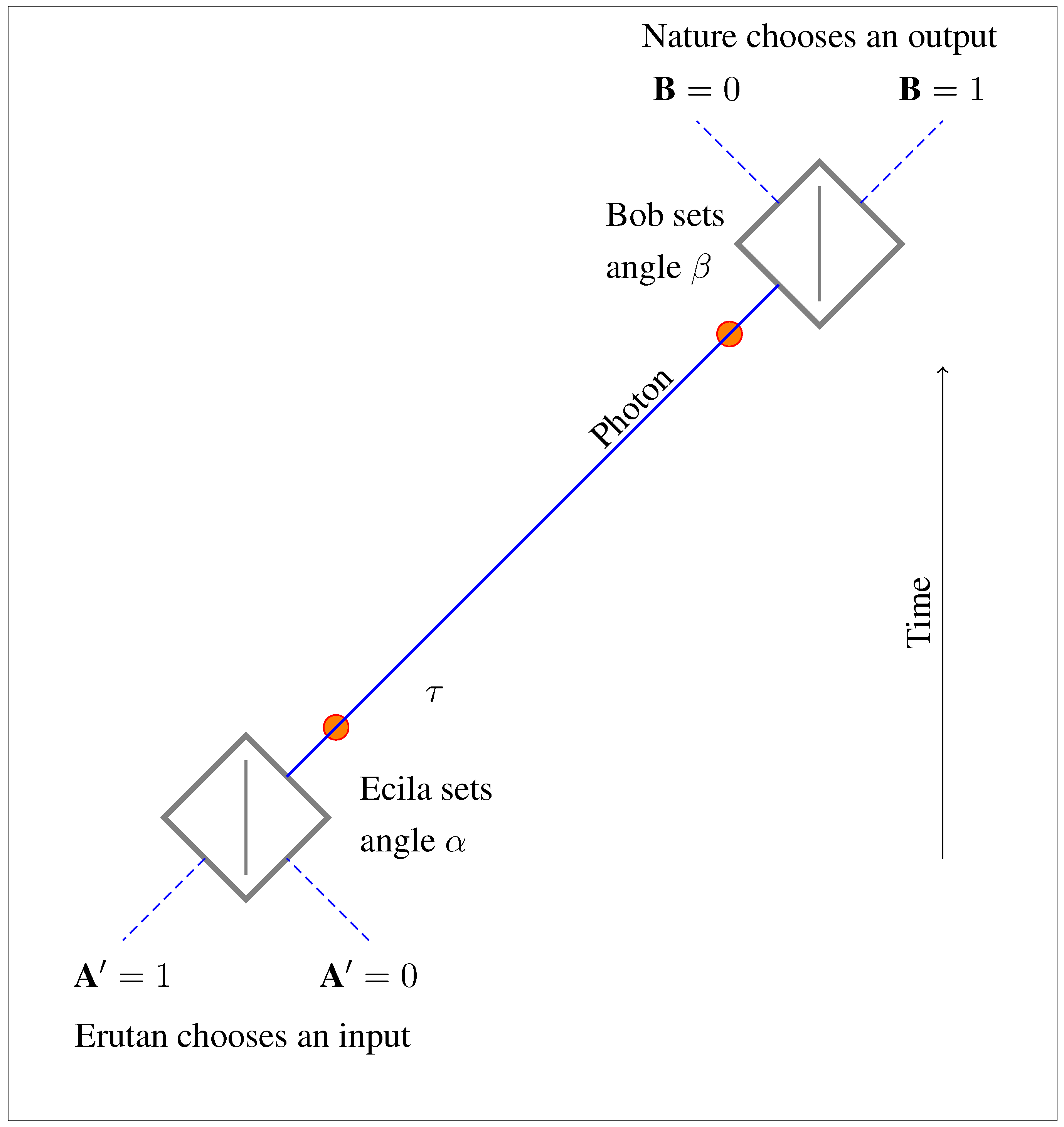

Figure 3.

The one-photon experiment.

To avoid confusion we’ve reproduced this new one-photon experiment as Figure 3. Reflection in the mirror corresponds to time-reversal, so we have named Alice’s time-reversed counterpart “Ecila”. (Ignore the orange dots for the moment).

3.3. The One-Photon Experiment

This new experiment (Figure 3) is not some impossible permutation of the original—it’s a perfectly valid experiment, which we can actually carry out. But it is unusual in one respect, and this oddity will play a crucial role in the use we want to make of the experiment. Normally, if we were performing a two-polarizer experiment of this kind, it would be natural to take advantage of our ability to control the input channel at Ecila’s end of the experiment. We (or Ecila herself) could simply choose to supply photons on one channel or the other.

For Ecila’s end of Figure 3 to be a proper mirror image of Alice’s end of Figure 1, we need to do something different. We need to ensure that photons are secretly supplied at random on one channel or the other, to mirror the unpredictable random outputs from Alice’s cube in Figure 1. We call this random source “Erutan”, since it mirrors the action of Nature in choosing Alice’s outputs in Figure 1. (In Figure 1, we are stipulating that Alice is measuring which output path A the photon ends up on. To mirror this behavior in Figure 3, it must also be a determinate matter that Ecila’s input photon arrives on one input path or the other.)

Intriguingly, the correlations that one sees between Ecila’s inputs and Bob’s outputs in real-life versions of Figure 3 are exactly the same as those between Alice’s outputs and Bob’s outputs in Figure 1—and they depend on the choice of α and β in exactly the same way.

In the case of Figure 3, however, the explanation of these correlations is thought to be straightforward. The photon polarization τ is determined both by Erutan’s randomly-chosen input at Ecila’s end of the experiment and by Ecila’s choice of the measurement setting α. (More on the details of this below.) In turn, τ makes a difference to the outcome at Bob’s end of the experiment, in combination with Bob’s setting β.

Intuitively, the polarization τ connects events at one end to events at the other, without action at a (temporal) distance, or any mysterious entanglement. We simply have a single enduring property of the photon, τ, that “bridges the temporal gap”, and ensures in an entirely non-mysterious fashion that the output channel of the photon at Bob’s end of the experiment is related to its input at Ecila’s end of the experiment, in a way that depends on the settings chosen by Ecila and Bob.
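The τ-based explanation can be written out directly. In this minimal sketch, Erutan picks Ecila's input channel with probability 1/2 each. Channel 0 (lower left) leaves Ecila's cube with τ = α, channel 1 (lower right) leaves with τ = α + π/2, and Bob's output then depends only on τ and β via Malus's law. The channel labels and function names are our assumptions. For comparison, the sketch also computes the quantum prediction for Figure 1, assuming the state (|HH⟩ + |VV⟩)/√2, and checks that the two joint distributions agree over a grid of settings.

```python
import itertools
import math


def figure3_joint(inp: int, B: int, alpha: float, beta: float) -> float:
    """P(input, B) in Figure 3, with tau carrying all the influence."""
    tau = alpha + inp * math.pi / 2          # set by Erutan's input and Ecila's alpha
    p_pass = math.cos(beta - tau) ** 2       # Bob's output 0 = straight through
    return 0.5 * (p_pass if B == 0 else 1 - p_pass)


def figure1_joint(A: int, B: int, alpha: float, beta: float) -> float:
    """Quantum prediction for Figure 1, assuming the state (|HH> + |VV>)/sqrt(2)."""
    d = alpha - beta
    return 0.5 * (math.cos(d) ** 2 if A == B else math.sin(d) ** 2)


angles = [k * math.pi / 12 for k in range(12)]
worst = max(
    abs(figure3_joint(x, B, a, b) - figure1_joint(x, B, a, b))
    for x, B in itertools.product((0, 1), repeat=2)
    for a, b in itertools.product(angles, repeat=2)
)
print(worst < 1e-12)   # True: same correlations, same dependence on the settings
```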

3.4. Backing out of the Mirror

We want to use this simple, uncontroversial explanation from Figure 3 to put some flesh on the bones of Costa de Beauregard’s zigzag, in Figure 1. More precisely, we want to reach into the mirror in Figure 2, pull out the τ-based explanation, and apply it to the original EPR experiment in Figure 1. We are exploiting the fact that, in effect, the mirror is already showing us exactly what we need for a zigzag explanation of the correlations in Figure 1. We simply need to assign a property τ to the photon in Figure 1, before it reaches Alice’s cube (matching the property of the photon in Figure 3, after it leaves Ecila’s cube).

If we allow this property τ to be a constant throughout the zigzag path from Alice to Bob in Figure 1 (just as it is a constant from Ecila to Bob in Figure 3), then it plays exactly the same role in “propagating influence” to Bob in one experiment as in the other. That is, it makes exactly the same contribution to showing how a difference in Alice’s choice of the setting α makes a difference to the photon in the region of Bob’s cube, as it does to showing how a difference in Ecila’s choice of the setting α makes a difference to the photon in the region of Bob’s cube. (For philosophers we might say: The relevant counterfactuals are exactly the same!) So we have an explanation—or, for cautious folk, an “explanation”!—of the correlations in Figure 1, just as we do in Figure 3.
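Transplanted back into Figure 1, the same structure gives a simple simulation of the zigzag. This is a hypothetical sketch of the single-fixed-τ model, not a full hidden-variable theory. Nature picks Alice's output A at random, taking the 50/50 Born-rule marginal as given rather than deriving it. τ is then fixed by α and A and held constant along the whole zigzag, back to the source and out to Bob, and Bob's outcome depends only on τ and β. The order of statements in the code is bookkeeping, not a claim about temporal or causal order.

```python
import math
import random


def zigzag_run(alpha: float, beta: float, rng: random.Random):
    """One run of Figure 1 under the (hypothetical) single-fixed-tau zigzag.

    A: Alice's output, chosen by Nature 50/50.
    tau: fixed by alpha and A, constant from Alice's cube back to the source
         and out to Bob's cube.
    B: Bob's output, depending only on tau and his setting beta.
    """
    A = rng.randrange(2)
    tau = alpha + A * math.pi / 2
    B = 0 if rng.random() < math.cos(beta - tau) ** 2 else 1
    return A, tau, B


rng = random.Random(7)
alpha, beta = 0.0, math.pi / 8
runs = [zigzag_run(alpha, beta, rng) for _ in range(200_000)]
p_same = sum(A == B for A, _, B in runs) / len(runs)
print(p_same, math.cos(alpha - beta) ** 2)   # both close to 0.854
```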

So we now have a picture of an EPR-style experiment that shows us how the world needs to behave, to explain the Alice–Bob correlations via Costa de Beauregard’s “retrocausal” proposal. Want to know what the retrocausality needs to look like? Just think about what ordinary causality looks like in Figure 3, according to the standard quantum picture, in the special case in which the input channel is random. The control that the retrocausal proposal needs to give to Alice is exactly the control that looks like the standard “forward causal” story, when reflected in the mirror. We want to see Ecila controlling τ after it leaves her cube (that’s the standard story), so we need to show Alice controlling τ before it reaches her cube—and that’s the retrocausality, in the zigzag proposal.

3.5. Too Good to Be True?

At this point, readers may feel that our use of the mirror involves some sort of sleight of hand, and that there are obvious difficulties for the zigzag proposal. We can’t anticipate all such concerns, but we want to respond to three objections:

1. If Alice can control τ over on Bob’s side of the experiment, why can’t she send a signal to him? It is well-known that QM does not allow signaling in the kind of experiment depicted in Figure 1, and we might therefore suspect that this zigzag connection would be incompatible with standard QM. (Typical causal channels can be used to signal, after all.)

2. Why is Alice allowed to influence τ, when Bob seems to do no such thing? (Discrimination against experimenters on the right!)

3. Isn’t the zigzag model just another version of the discredited “superdeterminist” proposal?

We’ll come back to (2) in due course, and show how the zigzag proposal can give Bob an influence, too; and we’ll come back to (3), say what it means, and show why the objection is mistaken. But first let’s explain why Alice can’t signal to Bob, even if she has retrocausal control of τ. To do this, we need to go back into the mirror.

4. Causation without Signaling

4.1. Can Ecila Signal?

We are interested in whether Alice could signal to Bob in Figure 1, if she had retrocausal control of τ. To answer this question, let us first ask the corresponding question about Ecila. Is it possible for Ecila to signal to Bob in Figure 3?

If Ecila could control the path chosen by the input photon, the answer would certainly be “Yes”. Fixing the path for a series of runs of the experiment would give Ecila complete control over the polarization τ, and Ecila could then vary τ to encode a message—she simply needs to send enough photons with the same polarization, for each bit of her message, to enable Bob to measure the polarization.

Suppose, for example, that Ecila’s photons come reliably from the lower left channel. To ensure that some photons sent to Bob have polarization τ, Ecila sets α = τ. Bob experiments with various settings β, and discovers that the bias between his outputs is greatest when β = τ or β = τ + π/2, and disappears altogether when β = τ ± π/4. This tells him that τ = β or τ = β + π/2 (and the direction of the bias between outputs will distinguish these possibilities).
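Bob's search can be sketched numerically. Assuming every photon arrives with the same polarization τ (Ecila's input fixed to the lower left, and α = τ), Malus's law gives Bob an expected bias P(0) − P(1) = cos 2(β − τ). This has magnitude 1 at β = τ and at β = τ + π/2, with opposite signs, and vanishes at β = τ ± π/4. The sketch computes ideal expected biases rather than finite photon counts, and the function names and 1-degree grid are our choices.

```python
import math


def bob_bias(beta: float, tau: float) -> float:
    """Expected P(output 0) - P(output 1) for photons all polarized at tau."""
    return math.cos(beta - tau) ** 2 - math.sin(beta - tau) ** 2   # = cos 2(beta - tau)


def find_tau(tau: float, steps: int = 180) -> float:
    """Scan Bob's setting over [0, pi) and return the setting with the most
    positive bias. The sign of the bias separates beta = tau (all photons pass)
    from beta = tau + pi/2 (all reflected)."""
    grid = [k * math.pi / steps for k in range(steps)]
    return max(grid, key=lambda beta: bob_bias(beta, tau))


tau = 0.7                                              # Ecila sets alpha = tau = 0.7 rad
print(round(find_tau(tau), 3))                         # 0.698, the grid point nearest 0.7
print(abs(bob_bias(tau + math.pi / 4, tau)) < 1e-12)   # True: no bias at tau + pi/4
```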

Signaling remains possible even if Ecila doesn’t know which channel her photons are arriving from, so long as they are all arriving from the same channel. In fact, it is enough that there is a reliable bias, so that one channel reliably has higher probability than the other. Bob can still detect that Ecila’s setting is either α or α + π/2, by looking for the setting of his polarizer that produces maximum bias in his outputs. And this information is enough to carry a signal. (For example, “α = 0 or α = π/2” could mean “0”, while “α = π/4 or α = 3π/4” means “1”.)

However, when Ecila’s input photon is supplied by a hidden randomizer, with no bias between the two input channels, this kind of signaling becomes impossible. (As we have specified above, the randomizer Erutan acts as a mirror image of Nature.) If Ecila chooses setting α in this case, each photon sent to Bob has equal probability of having polarization α (if the input came from the lower left), or α + π/2 (if the input came from the lower right). Even if Bob happens to set β = α, he cannot tell that he has done so, because his outputs display no bias, thanks to the random and hidden input at Ecila’s end of the experiment.
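The role of the input bias can be made explicit. If the lower-left channel (τ = α) has probability p and the lower-right channel (τ = α + π/2) has probability 1 − p, Bob's expected bias is p·cos 2(β − α) − (1 − p)·cos 2(β − α) = (2p − 1)·cos 2(β − α). It shrinks with the input bias but survives for any p ≠ 1/2, and it vanishes for every β when p = 1/2. Here is a minimal sketch with hypothetical names:

```python
import math


def bob_bias_mixed(beta: float, alpha: float, p_lower_left: float) -> float:
    """Bob's expected P(0) - P(1) when Ecila's input is lower left (tau = alpha)
    with probability p_lower_left and lower right (tau = alpha + pi/2) otherwise."""
    bias_left = math.cos(2 * (beta - alpha))
    bias_right = math.cos(2 * (beta - alpha - math.pi / 2))   # equals -bias_left
    return p_lower_left * bias_left + (1 - p_lower_left) * bias_right


alpha = 0.3
grid = [k * math.pi / 180 for k in range(180)]
for p in (1.0, 0.7, 0.5):
    strongest = max(abs(bob_bias_mixed(b, alpha, p)) for b in grid)
    print(p, round(strongest, 6))   # roughly 1, 0.4 and 0 respectively
```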

With the randomizer in place, then, Ecila’s choice of the angle α does not give her enough control to send a signal to Bob. It might be thought that she has lost control of the polarization τ altogether, but this is not so. She retains enough control to restrict the photon to just two possible polarizations (α or α + π/2). Intuitively, then, Ecila has a causal influence on the polarization τ, without being able to use that influence to signal to the future—and the fact that she still has causal influence continues to play a crucial role in the intuitive explanation of the correlations that obtain between her inputs and Bob’s outputs.
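The point can be checked in the interventionist spirit discussed in Section 6: intervene on α and see what changes. In this minimal sketch (hypothetical function names, unbiased Erutan assumed), setting α shifts the pair of possible values of τ, so α makes a difference to τ. Yet Bob's marginal probability of output 0 stays at 1/2 whatever α is, so nothing Ecila does shows up in Bob's statistics alone.

```python
import math


def tau_distribution(alpha: float):
    """Possible polarizations after Ecila's cube, with unbiased random input:
    alpha or alpha + pi/2 (mod pi), each with probability 1/2."""
    return [(alpha % math.pi, 0.5), ((alpha + math.pi / 2) % math.pi, 0.5)]


def bob_p0(alpha: float, beta: float) -> float:
    """Bob's marginal probability of output 0, averaged over tau (Malus's law)."""
    return sum(p * math.cos(beta - tau) ** 2 for tau, p in tau_distribution(alpha))


for alpha in (0.0, math.pi / 8):
    taus = sorted(round(t, 3) for t, _ in tau_distribution(alpha))
    print(alpha, taus, bob_p0(alpha, beta=0.5))
# The possible taus change with alpha ([0.0, 1.571] vs [0.393, 1.963]),
# but bob_p0 is 0.5 (up to rounding) in both cases.
```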

4.2. No Signaling, with Mirrors

Now that we know why Ecila can’t signal to Bob, despite having some control of τ, we can see why the same is true of Alice. The control of τ that the zigzag model gives to Alice is exactly the control of τ that Ecila retains in Figure 3. As in that case, it is control, but it doesn’t permit signaling. So there is no conflict on this score between the zigzag model and the prohibition of signaling in Figure 1 in orthodox QM.

Note that since Ecila’s inability to signal is linked to the fact that Erutan supplies symmetric inputs, an analogous claim must be true of Alice. In the particular two-photon experiment to which the mirror symmetry applies, the prohibition of signaling in orthodox QM must be linked to the fact that the Born Rule guarantees that Alice’s outputs are similarly symmetric. This explicit causation-without-signaling is comparable to orthodox QM’s description of “quantum steering” in these same cases.

4.3. Generalized No-Signaling?

This explanation shows why Alice cannot signal in this particular two-photon experiment, despite the retrocausal control provided by the zigzag model. Can the explanation be extended to other possible entangled states? If the zigzag model itself is to be extended to other entanglement experiments, we should hope that the no-signaling condition will also generalize. The general issue has recently been raised by Wood and Spekkens [11], who note that causation-without-signaling is logically possible in such causal models, but typically requires what they term “fine-tuning”.

In the above example, as we noted, this so-called fine-tuning turns out to be naturally enforced by a symmetry between the outputs (in the case of Alice) or the inputs (in the case of Ecila). The same symmetry has been shown to explain no-signaling for every maximally entangled state [12], not merely the one considered here. An interesting open question is whether other natural symmetries (time-symmetry, Lorentz-symmetry, etc.) are available to supply the necessary “fine-tuning” for partially entangled states. Resolution of this question must await a hidden variable model rich enough to encode all such states, although one promising framework can be found in [13]. (For further discussion about how retrocausal models interface with the Wood-Spekkens fine-tuning argument, see [12,14].)

5. What about Bob?

As it stands, the zigzag explanation of the Alice–Bob correlations in Figure 1 shows an absurd spatial asymmetry: Alice has retrocausal control, but Bob does not. If the proposal is to have any claim to be taken seriously, this asymmetry will have to go.

In principle, there are three ways this might be done. One approach would be to double up the properties of the photon, so that there is a property controlled by Alice from the left, and a different property controlled by Bob from the right. In effect, this is the approach taken by the Two State Vector approach to QM, defended by Aharonov, Vaidman and others [15]. This can explain the Alice–Bob correlations—it provides two consistent explanations, in fact, depending on which end we start from. (Another version of this approach proposes an analogy with the Wheeler–Feynman absorber theory of radiation, thinking of the property controlled by Bob from the right by comparison with the advanced potential in the Wheeler–Feynman picture. See [7,8,9] for details.)

A second approach would be to make do with a single τ that need not be constant. If τ is controlled on the left by Alice and on the right by Bob, it must be allowed to change value in between, from a value compatible with α to one compatible with β, to avoid the inconsistencies that would otherwise arise when Alice and Bob choose incompatible settings (i.e., when any single, fixed τ is incompatible with either α or β). Remarkably, there is a simple rule that recovers the correct correlations between Alice and Bob for models of this sort [16].

Finally, one might take the approach that the polarization τ is not a “real” parameter, but just a summary of the knowledge we have about the system. This would correspond to what is now often called an “epistemic” interpretation of τ. Such an approach is not directly relevant to our present concerns (namely, showing how Costa de Beauregard’s zigzag supports an epistemic understanding of entanglement, even if τ itself is not interpreted in an epistemic fashion). However, we note that there are at least two reasons for thinking that any plausible epistemic interpretation of τ is also likely to be retrocausal, at the level of its underlying ontology. One is the requirement of time-symmetry; on this topic Pusey [17] has extended an argument of Price [18] to the epistemic case. The second is that retrocausality provides one of the few loopholes in the strongest argument against the epistemic view [19].

Setting the epistemic interpretation of τ to one side, we note that a bonus of either of the two previous approaches is that they remove a puzzling time-asymmetry in Figure 3. This is not the asymmetry-of-signaling that was removed by Erutan, as discussed in the previous section; with Erutan present, neither Ecila nor Bob can signal to each other. (Without Erutan, Ecila knows the input channel before she chooses her setting α, but Bob has no such access to B before he chooses his setting β.) But even with Erutan, a further time-asymmetry remains if there’s only one fixed value of τ. The standard assumption is that Ecila still has control over τ in this case (up to an additive factor of π/2), while Bob does not.

For either of the time-symmetric approaches described in this section, this puzzling asymmetry disappears. In these cases, whatever control Ecila has over the photon’s polarization at her end of the experiment, Bob has the same control over its polarization at his end. In other words, by restoring the spatial symmetry of the zigzag in Figure 1, we automatically restore the temporal symmetry of Figure 3. This reveals that the single-fixed-τ model involves a new and apparently fundamental time-asymmetry, and it is an advantage of the zigzag models that they remove it. This issue is new to the quantum case, being a product of the discretization in the single-photon limit. In the classical case, an initial randomizer mirroring Nature will deprive Ecila of any determinate control over the output polarization of a classical light beam at her end of such an experiment, restoring the symmetry between Ecila and Bob. (For further discussion of this point, see [18].)

6. Isn’t This Just “Superdeterminism”?

Some readers may feel that the proposal offered here is merely another version of a familiar but unpopular proposal known as “superdeterminism”. (We are grateful to a referee at this point, and borrow her/his formulation of this challenge in this paragraph and the next.) What is superdeterminism? We can explain it by contrast to our own proposal, referring to Figure 1. On the retrocausal view, Alice’s choice of angle α influences a photon property τ on the segment of the photon’s worldline between Alice’s measurement and the point in the past where the entangled photon pair has been created. The referee expresses this idea as the claim that the “photon property τ … somehow travels back in time (whatever that means).” We agree that the notion of travelling back (or forwards) in time is problematic, and therefore avoid it. (That’s why, when we spoke of influence “propagating” in Section 3.4, we put the word in scare-quotes. Instead we speak of properties of world-lines during temporal intervals. What is distinctive about retrocausal models, in this framework, is that they allow such properties to be influenced by choices made by experimenters at both ends of the interval.)

The superdeterminist proposes that this correlation between α and τ can be interpreted in a different way. At the time when the photon pair was created, the random variable τ was determined, and then sent to Alice (and Bob). This element of reality forced Alice to choose a specific setting α (or, in more detail, forced everything that happened close to Alice to result in a specific combination of the outcome A and α), so that Alice was not really free to choose her setting. In other words, the choice of setting was determined in the past, in such a way as to conspire to make everything look like a Bell violation but satisfy no-signaling.

Superdeterminism is usually rejected for two reasons. First, it is felt to require either some highly implausible conspiracy in the initial conditions of the Universe, or some new realm of hidden variables with remarkable powers to control the behavior not only of human experimenters but also of the various mechanical substitutes that might be used for choosing measurement settings, apparently at random (e.g., the Swiss national lottery machine, as Bell once proposed). Second, it is felt to conflict with “core assumptions necessary to undertake scientific experiments” [20]. As Maudlin says, “all scientific interpretations of our observations presuppose that our choices of settings have not been manipulated in such a way” [21]. This objection may also be traced to Bell, who says this, for example:

A respectable class of theories, including contemporary quantum theory as it is practiced, have “free” “external” variables in addition to those internal to and conditioned by the theory. These variables … provide a point of leverage for “free willed experimenters”, if reference to such hypothetical metaphysical entities is permitted. I am inclined to pay particular attention to theories of this kind, which seem to me most simply related to our everyday way of looking at the world.— J. S. Bell [22]

We agree with both of these objections to superdeterminism. By explaining how the retrocausal proposal avoids them, we can explain how it differs from superdeterminism.

Taking the second objection first, the retrocausal proposal accepts the standard presupposition of all experimental science, namely that experimenters such as Alice and Bob are free to choose measurement settings. Moreover, it accepts a standard operational definition of causality—a definition long assumed in science, and refined and formalized in philosophy over the past three decades—in which the notion of free agency plays a central role. According to this so-called “interventionist” account of causality, a variable X is a cause of a variable Y if and only if a free intervention on X makes a difference to Y. (See [23,24,25,26] for further details, and [10,27,28] for discussions of the application of this approach to the direction of causality and the possibility of retrocausality.) A point stressed in this literature (see particularly, e.g., [24,25]) is that an adequate operational definition of causation cannot be purely observational, if observation is understood in a passive sense. In science, as in ordinary life, the notion of causation depends on the fact that we intervene as well as observe. This approach to causality thus gives a central and indispensable role to what Bell referred to in the passage above as “free external variables”, and vindicates his view that this notion is central to “our everyday way of looking at the world”, as well as in science.

If we combine this standard interventionist definition of causation with the assumption that measurement settings are freely controlled (the very assumption that superdeterminism is rightly criticized for neglecting), it follows immediately that the direction of causation in our models is the one claimed by the retrocausal reading. Alice and Bob choose the measurement settings in the normal way, and these settings in turn make a difference to the prior values of τ.
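The interventionist criterion can be made concrete with a toy model. The sketch below is ours, not the authors’ formalism, and it assumes for illustration only the “unfair-to-Bob” zigzag, in which the hidden polarization τ (in degrees) is fixed by Alice’s freely chosen setting a plus a fair coin for which of her two output channels occurs, while Bob’s setting b plays no role. The hypothetical helpers `tau_distribution` and `makes_difference` compute the exact distribution of τ under interventions on a and b, and test whether it changes. Nothing in the test refers to temporal order, so even if τ lies in the past of a, the criterion counts a as a cause of τ.

```python
# Hypothetical toy model, not the authors' formalism: in the "unfair-to-Bob"
# zigzag, the hidden polarization tau (degrees, mod 180) is fixed by Alice's
# freely chosen setting a plus a fair coin for which output channel occurs.
def tau_distribution(a, b):
    """Exact distribution of tau when a and b are set by free intervention."""
    dist = {}
    for channel in (0, 1):  # each channel occurs with probability 1/2
        tau = (a + 90 * channel) % 180
        dist[tau] = dist.get(tau, 0.0) + 0.5
    # b is deliberately unused: Bob's setting has no influence on tau here.
    return dist


def makes_difference(dist1, dist2):
    """Interventionist test: does changing the intervention change tau's distribution?"""
    return dist1 != dist2


alice_is_cause = makes_difference(tau_distribution(0, 0), tau_distribution(45, 0))
bob_is_cause = makes_difference(tau_distribution(0, 0), tau_distribution(0, 45))
print(alice_is_cause, bob_is_cause)  # True False
```

Intervening on a shifts τ from {0°, 90°} to {45°, 135°}, while intervening on b leaves it untouched. That is the asymmetry the retrocausal reading claims, delivered by a criterion that never consults the time order of the variables.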

Some writers object that retrocausation cannot be “real” causation, claiming that it is a matter of definition, or perhaps presupposed by relativistic spacetime, that true causation only works past-to-future. But this objection need not detain us here. What has happened is that two criteria for causation—the interventionist criterion, and the time-direction criterion—have turned out to conflict, in the kind of models here proposed. It is then a terminological choice which criterion we take to be the more important. The possibility of such a conflict was recognised long ago by the philosopher Michael Dummett [29]. Dummett himself opts for the second criterion, and proposes “quasi-causation” for the case involving control of something in the past. In Dummett’s terminology, these models for QM involve retro-quasi-causation, not retro-causation—but they retain all the advantages here described, under this new name. (See [10,27] for further discussion of this point.)

The retrocausal proposal also differs from superdeterminism on the points at issue in the first objection. The retrocausal model introduces no strange new hidden variables to control measurement settings. To the extent that it proposes new hidden variables, they are internal to the model (and, at least in the version referred to in these paragraphs, of a familiar kind—e.g., a new τ, controlled from the future). It may induce correlations in the initial conditions of the Universe (or subsystems of it), but if so, these will not be conspiratorial—on the contrary, they will be explicable within the model in just the way that a standard forward-causal model explains correlations in the future. (We discuss the distinction between superdeterminism and retrocausality further in [30].)

7. Entanglement without Spooks

Finally, back to what we promised at the beginning: an account of how the Parisian zigzag offers a less spooky explanation of entanglement. Once again, we will start with Figure 3, and then use the mirror to apply the lessons of that case to Figure 1. Please pay attention to the orange dots in Figure 3, which we earlier asked you to ignore. Now imagine that you know that a particular photon is participating in the experiment in Figure 3, but that you don’t know the setting or the input/output channel at either end.

Consider the photon at the upper orange dot, for example, and the various possibilities for what Bob’s setting and outcome might be, immediately in its future. Imagine that we are interested in the probability we should assign to each outcome, conditional on the various possible settings. What we know at this point is something rather bland: whatever the setting, the probability of each outcome is 50%.

This is a “subjective” or “evidential” probability. If we had more evidence (in particular, if we knew the setting and input channel at Ecila’s end of the experiment), we would in general assign a different probability to each of Bob’s outcomes, for each choice of his setting. For example, if we learn Ecila’s input channel and that her setting is α, then we should now say that the probability of the corresponding outcome, assuming Bob chooses setting α, is 100%. Nevertheless, the bland 50% probabilities are the correct probabilities for the knowledge state we assumed here: ignorance of the setting and input at Ecila’s end of the experiment.

Exactly the same is true in reverse at the lower orange dot. There, too, the probability of each input, for each assumption about Ecila’s setting, is 50% if we don’t know Bob’s setting and outcome. And there, too, the probability changes if we get additional evidence, namely if we learn the setting and outcome at Bob’s end of the experiment.

So in Figure 3 we have a perfectly time-symmetric story about how getting information about one end of the experiment affects what probabilities we are correct to assign at the other end of the experiment, in the kind of knowledge-state we assumed. These evidence-based probabilities are time-symmetric in this way, even if we are assuming that the underlying reality is not symmetric—even if we think, as in the standard picture, that there is a real property τ influenced by Ecila but not by Bob.
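The time-symmetric evidential probabilities just described can be checked with a few lines of arithmetic. This is a minimal sketch under assumptions of our own, since Figure 3 is not reproduced here: we label Ecila’s input channels and Bob’s output channels 0 and 1, take Ecila’s input i and setting α to fix the polarization τ = α + 90°·i, and apply Malus’s law at Bob’s polarizer (setting β). With a 50% prior on the unknown input, the hypothetical functions `p_bob` and `p_ecila` give the forward and backward probabilities, with and without evidence from the other end.

```python
import math

# Assumed reconstruction of Figure 3 (ours): channels are labelled 0 and 1,
# angles are in degrees, Ecila's input i and setting alpha fix the photon's
# polarization tau = alpha + 90*i, and Bob's polarizer (setting beta) obeys
# Malus's law, so P(outcome j | input i) = cos^2(alpha - beta) if j == i,
# and sin^2(alpha - beta) otherwise.
def p_outcome_given_input(j, i, alpha, beta):
    c2 = math.cos(math.radians(alpha - beta)) ** 2
    return c2 if i == j else 1.0 - c2


def joint(i, j, alpha, beta):
    """Joint probability of input i and outcome j, given ignorance (prior 1/2) of the input."""
    return 0.5 * p_outcome_given_input(j, i, alpha, beta)


def p_bob(j, alpha, beta, i=None):
    """Evidential probability of Bob's outcome j, optionally given Ecila's input i."""
    if i is None:
        return sum(joint(k, j, alpha, beta) for k in (0, 1))
    return p_outcome_given_input(j, i, alpha, beta)


def p_ecila(i, alpha, beta, j=None):
    """Evidential probability of Ecila's input i, optionally given Bob's outcome j (Bayes)."""
    if j is None:
        return sum(joint(i, k, alpha, beta) for k in (0, 1))
    return joint(i, j, alpha, beta) / p_bob(j, alpha, beta)


# Upper orange dot: 50% for every pair of settings, so ignorance of alpha changes nothing.
print([round(p_bob(0, a, b), 6) for a, b in [(0, 0), (0, 30), (20, 75)]])  # [0.5, 0.5, 0.5]
# With Ecila's input known and matching settings, Bob's outcome is certain.
print(p_bob(0, 0, 0, i=0))  # 1.0
# Lower orange dot: 50% on the input, sharpened by Bob's setting and outcome.
print(round(p_ecila(0, 0, 30), 6), round(p_ecila(0, 0, 30, j=0), 6))  # 0.5 0.75
```

Both marginals come out at 50% whatever the settings, and both sharpen once the other end is known. The update works in either time direction, even though in this model τ is set by Ecila alone.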

Using the mirror, we can now transfer this understanding to Figure 1. In this case, the situation in which we are ignorant of the setting and outcome at both ends looks perfectly normal, for an evident reason: they all lie in the future! But the above analysis goes through in the very same way. Our best description of each side of the experiment predicts bland 50% probabilities, for each assumption about the choice of setting, until we learn what happens on the other side. At that point, we have new evidence, and can update our probabilities on the opposite side.

The significance of this account is that these probabilities correspond exactly to the information carried by entanglement. In the standard view, the “entangled state” that carries this information is thought to be a real property, one that depends in a mysterious way on what happens on the other side of the experiment: it changes when a measurement is made on the opposite side. But the mirror shows us that this interpretation is not compulsory. We can understand these probabilities in terms of changing evidence, just as we did in Figure 3.
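The claimed correspondence can be checked numerically under stated assumptions. The sketch below, which is ours, assumes the photon pair of Figure 1 is in the polarization-entangled state (|HH⟩ + |VV⟩)/√2 (chosen because it gives matching outcomes for matching settings), labels outcome 0 as transmitted and 1 as orthogonal, and measures angles in degrees. It compares the Born-rule joint probabilities with a Figure-3-style model in which an unknown input channel (prior 1/2) fixes τ and Malus’s law governs the outcome at the other end. The names `qm_joint` and `mirror_joint` are hypothetical; only NumPy is assumed.

```python
import itertools

import numpy as np

# Assumption (ours): the photon pair in Figure 1 is in the polarization-entangled
# state (|HH> + |VV>)/sqrt(2), which gives matching outcomes for matching settings.
PSI = np.array([1.0, 0.0, 0.0, 1.0]) / np.sqrt(2)


def basis(theta_deg):
    """Outcome 0 (transmitted) and outcome 1 (orthogonal) polarization vectors."""
    t = np.radians(theta_deg)
    return [np.array([np.cos(t), np.sin(t)]), np.array([-np.sin(t), np.cos(t)])]


def qm_joint(i, j, a, b):
    """Born-rule probability of Alice's outcome i (setting a) and Bob's j (setting b)."""
    # All amplitudes are real here, so a plain dot product suffices.
    amp = np.kron(basis(a)[i], basis(b)[j]) @ PSI
    return float(amp ** 2)


def mirror_joint(i, j, a, b):
    """Figure-3-style model: unknown input i (prior 1/2) fixes tau; Malus's law at the far end."""
    c2 = np.cos(np.radians(a - b)) ** 2
    return 0.5 * (c2 if i == j else 1.0 - c2)


settings = [0, 22.5, 45, 60, 90]
agree = all(
    np.isclose(qm_joint(i, j, a, b), mirror_joint(i, j, a, b))
    for a, b in itertools.product(settings, repeat=2)
    for i, j in itertools.product((0, 1), repeat=2)
)
print(agree)  # True

# Bland 50% for Bob before any news from Alice, then the evidential update.
a, b = 0, 30
p_bob = sum(qm_joint(i, 0, a, b) for i in (0, 1))
p_bob_given_alice0 = qm_joint(0, 0, a, b) / sum(qm_joint(0, k, a, b) for k in (0, 1))
print(round(p_bob, 6), round(p_bob_given_alice0, 6))  # 0.5 0.75
```

Agreement for every tested pair of settings reflects the general identity: ½cos²(a − b) for matching outcomes and ½sin²(a − b) otherwise. The last lines show the bland 50% marginal for Bob and its update to cos²(30°) = 75% once Alice’s result is known.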

Why is this understanding of entanglement so much harder to see in Figure 1 than in Figure 3? Because in Figure 3 we think we understand why these evidential probabilities work the way they do—the standard model, treating τ as a real property, offers an explanation of the correlations on which these probabilities are based. In Figure 1, there doesn’t seem to be any explanation on offer, except the one that thinks of entanglement in terms of a real property, mysteriously affected by choices made elsewhere. But once the zigzag model is on the table (even in the left-to-right, unfair-to-Bob version), it does the explanatory job. So it frees us to think of entanglement in this easy, state-of-information fashion, just as we do in Figure 3.

The project of trying to make sense of entanglement started with EPR, 80 years ago. At the end of their paper, EPR note that while they take themselves to have shown that the standard quantum state “does not provide a complete description of the physical reality,” they have “left open the question of whether or not such a description exists.” Nevertheless, they say, “we believe … that such a theory is possible.”

Costa de Beauregard himself saw his zigzag proposal as an objection to the EPR argument. It showed how there might be spacelike influence without action at a distance, thus undermining EPR’s main reason for assuming that a measurement choice at one location could not affect an “element of reality” at another location. In another sense, however, it amounts to a vindication of EPR’s conclusion. If accepted, it shows not only that EPR were right in thinking that the standard description is incomplete (because it leaves out the zigzag mechanism), but also that they were right in thinking that a more complete theory is indeed possible.

Our contribution here has been to show how easy it is to motivate Costa de Beauregard’s zigzag, via the symmetries underlying our use of the mirror in Figure 2. We don’t take ourselves to have offered conclusive arguments for the zigzag approach, of course, but we do urge that it deserves serious attention. For the moment, the prevailing view of entanglement (that it involves the mysterious connections between real properties that Schrödinger derided as “magic” in 1935) seems to rest on a considerable failure of imagination. The Parisian zigzag offers an elegant alternative.

Acknowledgments

We are grateful to two referees for helpful comments. Huw Price also acknowledges the support of the Templeton World Charity Foundation (TWCF) research grant Information at the Quantum Physics/Statistical Mechanics Nexus.

Author Contributions

Huw Price and Ken Wharton jointly wrote the paper. Both authors have read and approved the final manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Schrödinger, E. Discussion of probability relations between separated systems. Math. Proc. Camb. Philos. Soc. 1935, 31, 555–563.

- Einstein, A.; Podolsky, B.; Rosen, N. Can Quantum-Mechanical Description of Physical Reality Be Considered Complete? Phys. Rev. 1935, 47, 777–780.

- Albert, D.Z.; Galchen, R. A quantum threat to special relativity. Sci. Am. 2009, 300, 32–39.

- Trimmer, J.D. The Present Situation in Quantum Mechanics: A Translation of Schrödinger’s “Cat Paradox” Paper. Proc. Am. Philos. Soc. 1980, 124, 323–338.

- Costa de Beauregard, O. Mécanique quantique. C. R. Math. Acad. Sci. 1953, 236, 1632–1634. (In French)

- Bernstein, J. Quantum Profiles; Princeton University Press: Princeton, NJ, USA, 1991; p. 84.

- Cramer, J.G. Generalized Absorber Theory and the Einstein-Podolsky-Rosen Paradox. Phys. Rev. D 1980, 22, 362–376.

- Pegg, D.T. Objective reality, causality and the aspect experiment. Phys. Lett. A 1980, 78, 233–234.

- Pegg, D.T. Time-symmetric electrodynamics and the Kocher-Commins experiment. Eur. J. Phys. 1982, 3, 44–49.

- Price, H. Time’s Arrow and Archimedes’ Point; Oxford University Press: Oxford, UK, 1996.

- Wood, C.J.; Spekkens, R.W. The lesson of causal discovery algorithms for quantum correlations: Causal explanations of Bell-inequality violations require fine-tuning. New J. Phys. 2015, 17, 033002.

- Almada, D.; Ch’ng, K.; Kintner, S.; Morrison, B.; Wharton, K.B. Are Retrocausal Accounts of Entanglement Unnaturally Fine-Tuned? 2015; arXiv:1510.03706.

- Wharton, K.B.; Koch, D. Unit quaternions and the Bloch sphere. J. Phys. A 2015, 48, 235302.

- Evans, P.W. Quantum Causal Models, Faithfulness and Retrocausality. 2015; arXiv:1506.08925.

- Aharonov, Y.; Vaidman, L. The Two-State Vector Formalism: An Updated Review. In Time in Quantum Mechanics; Springer: Heidelberg/Berlin, Germany, 2008; pp. 399–447.

- Wharton, K. Quantum States as Ordinary Information. Information 2014, 5, 190–208.

- Pusey, M. Time-symmetric ontologies for quantum theory. Presented at Free Will and Retrocausality in the Quantum World, Trinity College, Cambridge, UK, 3 July 2014. Available online: http://bit.ly/Pusey2014 (accessed on 9 November 2015).

- Price, H. Does time-symmetry imply retrocausality? How the quantum world says “maybe”. Stud. Hist. Philos. Sci. B Stud. Hist. Philos. Mod. Phys. 2012, 43, 75–83.

- Pusey, M.F.; Barrett, J.; Rudolph, T. On the reality of the quantum state. Nat. Phys. 2012, 8, 475–478.

- Wiseman, H. From Einstein’s theorem to Bell’s theorem: A history of quantum non-locality. Contemp. Phys. 2006, 47, 79–88.

- Maudlin, T. What Bell Did. J. Phys. A 2014, 47, 424010.

- Bell, J.S. Speakable and Unspeakable in Quantum Mechanics, 2nd ed.; Cambridge University Press: Cambridge, UK, 2004; p. 101.

- Woodward, J. Making Things Happen: A Theory of Causal Explanation; Oxford University Press: Oxford, UK, 2003.

- Pearl, J. Causality: Models, Reasoning, and Inference; Cambridge University Press: Cambridge, UK, 2000.

- Menzies, P.; Price, H. Causation as a secondary quality. Br. J. Philos. Sci. 1993, 44, 187–203.

- Price, H. Agency and probabilistic causality. Br. J. Philos. Sci. 1991, 42, 157–176.

- Price, H.; Weslake, B. The time-asymmetry of causation. In The Oxford Handbook of Causation; Beebee, H., Hitchcock, C., Menzies, P., Eds.; Oxford University Press: Oxford, UK, 2010; p. 414.

- Price, H. Toy models for retrocausality. Stud. Hist. Philos. Sci. B Stud. Hist. Philos. Mod. Phys. 2008, 39, 752–761.

- Dummett, M. Can an Effect Precede Its Cause? Aristot. Soc. Proc. Supp. Vols. 1954, 28, 27–62.

- Price, H.; Wharton, K. A Live Alternative to Quantum Spooks. 2015; arXiv:1510.06712.

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).